## What changes were proposed in this pull request?

Remove spark.memory.useLegacyMode and StaticMemoryManager. Update tests that used the StaticMemoryManager to equivalent use of UnifiedMemoryManager.

## How was this patch tested?

Existing tests, with modifications to make them work with a different memory manager.

Closes #23457 from srowen/SPARK-26539.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

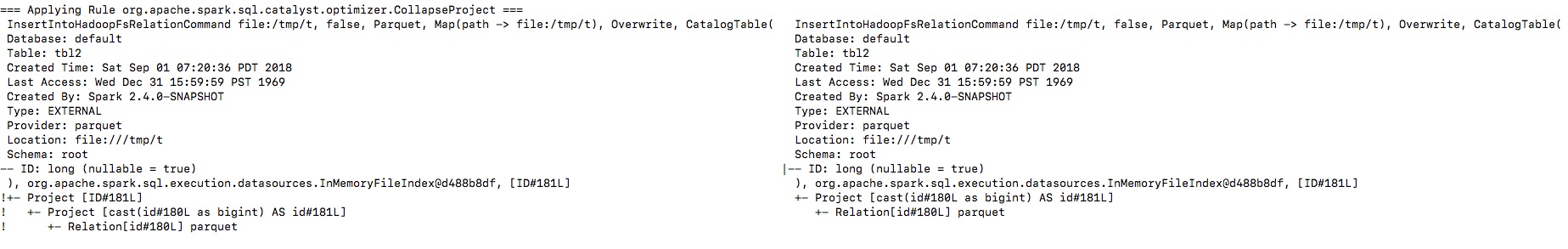

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/18576

The newly added rule `UpdateNullabilityInAttributeReferences` does the same thing as `FixNullability`, so we only need to keep one of them.

This PR removes `UpdateNullabilityInAttributeReferences` and uses `FixNullability` in its place. It also renames `FixNullability` to `UpdateAttributeNullability`.

## How was this patch tested?

existing tests

Closes #23390 from cloud-fan/nullable.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

Added a cache for `java.time.format.DateTimeFormatter` instances, with keys consisting of pattern and locale. This should avoid parsing timestamp/date patterns each time a new instance of `TimestampFormatter`/`DateFormatter` is created.
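The caching idea can be sketched roughly as follows. This is a minimal illustration, not necessarily how the PR implements it: `FormatterCache` and its `get` method are hypothetical names, and a plain `ConcurrentHashMap` is assumed as the backing store.

```scala
import java.time.format.DateTimeFormatter
import java.util.Locale
import java.util.concurrent.ConcurrentHashMap
import java.util.function.{Function => JFunction}

// Hypothetical helper for illustration; not Spark's actual class.
object FormatterCache {
  private val cache = new ConcurrentHashMap[(String, Locale), DateTimeFormatter]()

  // Builds the formatter from a (pattern, locale) key; this is the costly pattern parsing.
  private val build = new JFunction[(String, Locale), DateTimeFormatter] {
    override def apply(key: (String, Locale)): DateTimeFormatter =
      DateTimeFormatter.ofPattern(key._1, key._2)
  }

  // Returns the cached formatter for (pattern, locale), creating it on first use.
  def get(pattern: String, locale: Locale): DateTimeFormatter =
    cache.computeIfAbsent((pattern, locale), build)
}
```

Repeated calls with the same pattern and locale return the same instance, so the pattern is parsed only once per key.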

## How was this patch tested?

By existing test suites `TimestampFormatterSuite`/`DateFormatterSuite` and `JsonFunctionsSuite`/`JsonSuite`.

Closes #23462 from MaxGekk/time-formatter-caching.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Allow multiple spark.sql.extensions to be specified in the configuration.

## How was this patch tested?

New tests are added.

Closes #23398 from jamisonbennett/SPARK-26493.

Authored-by: Jamison Bennett <jamison.bennett@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This is to fix a bug in https://github.com/apache/spark/pull/23036, which would lead to an exception in case of two consecutive hints.

## How was this patch tested?

Added a new test.

Closes #23501 from maryannxue/query-hint-followup.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/23043 , we introduced a behavior change: Spark users are not able to distinguish 0.0 and -0.0 anymore.

This PR proposes an alternative fix to the original bug, to retain the difference between 0.0 and -0.0 inside Spark.

The idea is that we can rewrite the window partition key, join key and grouping key during the logical phase to normalize the special floating-point numbers. Thus only operators that care about special floating-point numbers need to pay the perf overhead, and end users can still distinguish -0.0.
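As a rough sketch of what normalizing a key value could mean: `FloatKeyNormalizer` below is a hypothetical helper, not the rule added by this PR, and treating NaN as one of the "special" values is an assumption on top of what is stated above.

```scala
// Hypothetical helper for illustration; not the optimizer rule from this PR.
object FloatKeyNormalizer {
  // Negative zero, built from its bit pattern (only the sign bit is set).
  val negativeZero: Double = java.lang.Double.longBitsToDouble(Long.MinValue)

  // Maps -0.0 to 0.0 and (as an assumption) any NaN to the canonical NaN.
  // All other values pass through unchanged.
  def normalize(d: Double): Double =
    if (d.isNaN) Double.NaN
    else if (d == 0.0d) 0.0d // -0.0 == 0.0 holds under IEEE 754 comparison
    else d
}
```

Applying such a function only to join, grouping and window partition keys leaves ordinary column values untouched, which is why -0.0 stays visible to end users.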

## How was this patch tested?

existing test

Closes #23388 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Refactor ExternalAppendOnlyUnsafeRowArrayBenchmark to use main method.

## How was this patch tested?

Manually tested and regenerated results.

Please note that the `spark.memory.debugFill` setting has a huge impact on this benchmark. Since it is set to true by default when running the benchmark from SBT, we need to disable it:

```
SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt ";project sql;set javaOptions in Test += \"-Dspark.memory.debugFill=false\";test:runMain org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark"
```

Closes #22617 from peter-toth/SPARK-25484.

Lead-authored-by: Peter Toth <peter.toth@gmail.com>

Co-authored-by: Peter Toth <ptoth@hortonworks.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

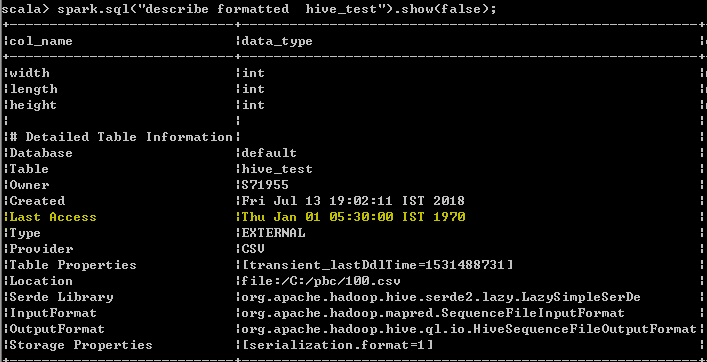

## What changes were proposed in this pull request?

Currently a Spark table maintains the Hive catalog storage format so that Hive clients can read it. In `HiveSerDe.scala`, Spark uses a mapping from its data sources to HiveSerde. The mapping is outdated and needs to be updated with the latest canonical names of the Parquet and ORC FileFormat.

Otherwise the following queries will result in a wrong Serde value in the Hive table (default value `org.apache.hadoop.mapred.SequenceFileInputFormat`), and Hive clients will fail to read the output table:

```
df.write.format("org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat").saveAsTable(..)
```

```
df.write.format("org.apache.spark.sql.execution.datasources.orc.OrcFileFormat").saveAsTable(..)
```

This minor PR fixes the mapping.

## How was this patch tested?

Unit test.

Closes #23491 from gengliangwang/fixHiveSerdeMap.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Currently there is code scattered in a bunch of places to do different things related to HTTP security, such as access control, setting security-related headers, and filtering out bad content. This makes it really easy to miss these things when writing new UI code.

This change creates a new filter that does all of those things, and makes sure that all servlet handlers that are attached to the UI get the new filter and any user-defined filters consistently. The extent of the actual features should be the same as before.

The new filter is added at the end of the filter chain, because authentication is done by custom filters and thus needs to happen first. This means that custom filters see unfiltered HTTP requests - which is actually the current behavior anyway.

As a side-effect of some of the code refactoring, handlers added after the initial set also get wrapped with a GzipHandler, which didn't happen before.

Tested with added unit tests and in a history server with SPNEGO auth configured.

Closes #23302 from vanzin/SPARK-24522.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Fixes leap year calculations for date operators (year/month/dayOfYear) where the Julian calendar is used (before 1582-10-04). In the Julian calendar every year that is a multiple of 4 is a leap year (there is no extra exception for years that are multiples of 100).
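The difference between the two leap year rules can be illustrated with a small sketch. `LeapYear` and its method names are made up for this example and are not taken from the patch.

```scala
// Illustrative helper; names are hypothetical and not part of the patch.
object LeapYear {
  // Julian rule: every year divisible by 4 is a leap year.
  def isJulianLeap(year: Int): Boolean = year % 4 == 0

  // Gregorian rule: century years are leap years only when divisible by 400.
  def isGregorianLeap(year: Int): Boolean =
    (year % 4 == 0 && year % 100 != 0) || year % 400 == 0
}
```

Years such as 1100 and 1500 are leap years under the Julian rule but not under the Gregorian one, which is the kind of corner case the fix targets.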

## How was this patch tested?

With a unit test ("SPARK-26002: correct day of year calculations for Julian calendar years") which focuses on these corner cases.

Manually:

```
scala> sql("select year('1500-01-01')").show()
+------------------------------+
|year(CAST(1500-01-01 AS DATE))|
+------------------------------+
|                          1500|
+------------------------------+

scala> sql("select dayOfYear('1100-01-01')").show()
+-----------------------------------+
|dayofyear(CAST(1100-01-01 AS DATE))|
+-----------------------------------+
|                                  1|
+-----------------------------------+
```

Closes #23000 from attilapiros/julianOffByDays.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

For Scala UDF, when checking input nullability, we will skip inputs with type `Any`, and only check the inputs that provide nullability info.

We should do the same for checking input types.

## How was this patch tested?

new tests

Closes #23275 from cloud-fan/udf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The PR https://github.com/apache/spark/pull/23446 happened to introduce a behaviour change: empty dataframes can no longer be read from underscore files. Whether to allow or disallow this case looks controversial, so to be more conservative this PR issues a warning instead of throwing an exception.

**Before**

```scala
scala> spark.read.schema("a int").parquet("_tmp*").show()
org.apache.spark.sql.AnalysisException: All paths were ignored:
file:/.../_tmp
file:/.../_tmp1;
  at org.apache.spark.sql.execution.datasources.DataSource.checkAndGlobPathIfNecessary(DataSource.scala:570)
  at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:360)
  at org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:231)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:219)
  at org.apache.spark.sql.DataFrameReader.parquet(DataFrameReader.scala:651)
  at org.apache.spark.sql.DataFrameReader.parquet(DataFrameReader.scala:635)
  ... 49 elided

scala> spark.read.text("_tmp*").show()
org.apache.spark.sql.AnalysisException: All paths were ignored:
file:/.../_tmp
file:/.../_tmp1;
  at org.apache.spark.sql.execution.datasources.DataSource.checkAndGlobPathIfNecessary(DataSource.scala:570)
  at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:360)
  at org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:231)
  at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:219)
  at org.apache.spark.sql.DataFrameReader.text(DataFrameReader.scala:723)
  at org.apache.spark.sql.DataFrameReader.text(DataFrameReader.scala:695)
  ... 49 elided
```

**After**

```scala
scala> spark.read.schema("a int").parquet("_tmp*").show()
19/01/07 15:14:43 WARN DataSource: All paths were ignored:
file:/.../_tmp
file:/.../_tmp1
+---+
|  a|
+---+
+---+

scala> spark.read.text("_tmp*").show()
19/01/07 15:14:51 WARN DataSource: All paths were ignored:
file:/.../_tmp
file:/.../_tmp1
+-----+
|value|
+-----+
+-----+
```

## How was this patch tested?

Manually tested as above.

Closes #23481 from HyukjinKwon/SPARK-26339.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.test` and `spark.testing` configs use `ConfigEntry` and puts them in the config package.

## How was this patch tested?

existing UTs

Closes #23413 from mgaido91/SPARK-26491.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

The existing query hint implementation relies on a logical plan node `ResolvedHint` to store query hints in logical plans, and on `Statistics` in physical plans. Since `ResolvedHint` is not really a logical operator and can break the pattern matching for existing and future optimization rules, it is an issue for the Optimizer, just as the old `AnalysisBarrier` was for the Analyzer.

Given the fact that all our query hints are either 1) a join hint, i.e., broadcast hint; or 2) a re-partition hint, which is indeed an operator, we only need to add a hint field on the Join plan and that will be a good enough solution for the current hint usage.

This PR lets the `Join` node have a hint for its left sub-tree and another hint for its right sub-tree, where each hint is a merged result of all the effective hints specified in the corresponding sub-tree. The "effectiveness" of a hint, i.e., whether that hint should be propagated to the `Join` node, is currently consistent with the hint propagation rules originally implemented in the `Statistics` approach. Note that the `ResolvedHint` node still has to live through the analysis stage because of the `Dataset` interface, but it will be eliminated, with its hint moved to the `Join` node, in the "pre-optimization" stage.

This PR also introduces a change in how hints work with join reordering. Before this PR, hints would stop join reordering. For example, in `a.join(b).join(c).hint("broadcast").join(d)`, the broadcast hint would stop d from participating in the cost-based join reordering while still allowing reordering from under the hint node. After this PR, though, the broadcast hint will not interfere with join reordering at all. After reordering, if a relation associated with a hint stays unchanged or equivalent to the original relation, the hint is retained; otherwise it is discarded. For example, suppose the original plan is `a.join(b).hint("broadcast").join(c).hint("broadcast").join(d)`, so the join order is "a JOIN b JOIN c JOIN d". If after reordering the join order becomes "a JOIN b JOIN (c JOIN d)", the plan will be like `a.join(b).hint("broadcast").join(c.join(d))`; but if after reordering the join order becomes "a JOIN c JOIN b JOIN d", the plan will be like `a.join(c).join(b).hint("broadcast").join(d)`.

## How was this patch tested?

Added new tests.

Closes #23036 from maryannxue/query-hint.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

When a wrong URL is passed to JDBC, an IllegalArgumentException is now thrown instead of an NPE.

### How was this patch tested?

Added a test case to the existing tests in JDBCSuite.

Closes #23464 from ayudovin/fixing-npe.

Authored-by: ayudovin <a.yudovin6695@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

`StreamingReadSupport` is designed to be a `package` interface. Mockito seems to complain about this during `Maven` testing, although it does not fail in `sbt` or IntelliJ. For mock-testing purposes, this PR makes it a `public` interface and adds explicit comments, as in `public interface ReadSupport`.

```
EpochCoordinatorSuite:
*** RUN ABORTED ***
java.lang.IllegalAccessError: tried to
access class org.apache.spark.sql.sources.v2.reader.streaming.StreamingReadSupport
from class org.apache.spark.sql.sources.v2.reader.streaming.ContinuousReadSupport$MockitoMock$58628338
  at org.apache.spark.sql.sources.v2.reader.streaming.ContinuousReadSupport$MockitoMock$58628338.<clinit>(Unknown Source)
  at sun.reflect.GeneratedSerializationConstructorAccessor632.newInstance(Unknown Source)
  at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
  at org.objenesis.instantiator.sun.SunReflectionFactoryInstantiator.newInstance(SunReflectionFactoryInstantiator.java:48)
  at org.objenesis.ObjenesisBase.newInstance(ObjenesisBase.java:73)
  at org.mockito.internal.creation.instance.ObjenesisInstantiator.newInstance(ObjenesisInstantiator.java:19)
  at org.mockito.internal.creation.bytebuddy.SubclassByteBuddyMockMaker.createMock(SubclassByteBuddyMockMaker.java:47)
  at org.mockito.internal.creation.bytebuddy.ByteBuddyMockMaker.createMock(ByteBuddyMockMaker.java:25)
  at org.mockito.internal.util.MockUtil.createMock(MockUtil.java:35)
  at org.mockito.internal.MockitoCore.mock(MockitoCore.java:69)
```

## How was this patch tested?

Passes Jenkins with the Maven build.

Closes #23463 from dongjoon-hyun/SPARK-26536-2.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

The `toHiveString()` and `toHiveStructString` methods were removed from `HiveUtils` because they have already been implemented in `HiveResult`. One related test was moved to `HiveResultSuite`.

## How was this patch tested?

By tests from `hive-thriftserver`.

Closes #23466 from MaxGekk/dedup-hive-result-string.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

My pull request #23288 was resolved and merged to master, but it later turned out that my change breaks another regression test. Because we cannot reopen the pull request, I created a new pull request here.

Commit 92934b4 is the only change made after pull request #23288.

`CheckFileExist` was avoided at 239cfa4 following the discussion in #23288 (comment).

But that change turned out to be wrong, because we should not check when the argument `checkFileExist` is false.

Test 27e42c1de5/sql/core/src/test/scala/org/apache/spark/sql/SQLQuerySuite.scala (L2555) failed when we avoided `checkFileExist`, but now succeeds after commit 92934b4.

## How was this patch tested?

Both of the tests below passed.

```
testOnly org.apache.spark.sql.execution.datasources.csv.CSVSuite
testOnly org.apache.spark.sql.SQLQuerySuite
```

Closes #23446 from KeiichiHirobe/SPARK-26339.

Authored-by: Hirobe Keiichi <keiichi_hirobe@forcia.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This addresses SPARK-26548. In Spark 2.4.0, the CacheManager holds a write lock while computing the executedPlan for a cached logicalPlan. In some cases with very large query plans this can be an expensive operation, taking minutes to run, and the entire cache is blocked during this time. This PR changes that so the write lock is only obtained after the executedPlan is generated, which reduces the time the lock is held to just the time needed to update the shared data structure.
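The locking pattern described here can be sketched as follows. `PlanCache` is a hypothetical stand-in rather than the real `CacheManager`, and the `compute` function stands for the expensive plan generation.

```scala
import java.util.concurrent.locks.ReentrantReadWriteLock
import scala.collection.mutable

// Hypothetical stand-in for a plan cache; not Spark's CacheManager.
class PlanCache[K, V](compute: K => V) {
  private val lock = new ReentrantReadWriteLock()
  private val entries = mutable.Map.empty[K, V]

  def put(key: K): V = {
    // The expensive computation runs before the write lock is taken,
    // so readers and other writers are not blocked while it runs.
    val value = compute(key)
    lock.writeLock().lock()
    try {
      // Only the update of the shared map happens under the lock.
      // An entry stored by a concurrent caller is kept as-is.
      entries.getOrElseUpdate(key, value)
    } finally {
      lock.writeLock().unlock()
    }
  }

  def get(key: K): Option[V] = {
    lock.readLock().lock()
    try entries.get(key) finally lock.readLock().unlock()
  }
}
```

The trade-off in this sketch is that two callers may compute the same value concurrently; only one result is stored, but the lock is never held during the slow step.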

gatorsmile and cloud-fan - You have committed patches in this area before. This is a small incremental change.

## How was this patch tested?

Has been tested on a live system where the blocking was causing major issues and it is working well.

CacheManager has no explicit unit test but is used in many places internally as part of the SharedState.

Closes #23469 from DaveDeCaprio/optimizer-unblocked.

Lead-authored-by: Dave DeCaprio <daved@alum.mit.edu>

Co-authored-by: David DeCaprio <daved@alum.mit.edu>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The truth table comment in EqualNullSafe incorrectly marked FALSE results as UNKNOWN.
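For reference, the semantics behind that truth table can be sketched with `Option` standing in for SQL's nullable values. `NullSafeEq` and its method names are made up for this illustration and are not Spark code.

```scala
// Illustrative model of SQL equality; not Spark's EqualNullSafe implementation.
object NullSafeEq {
  // Regular `=`: a null operand (None) makes the result unknown (None).
  def sqlEq[A](l: Option[A], r: Option[A]): Option[Boolean] =
    for (a <- l; b <- r) yield a == b

  // Null-safe `<=>`: the result is never unknown.
  // Two nulls compare as true, exactly one null compares as false.
  def nullSafeEq[A](l: Option[A], r: Option[A]): Boolean = l == r
}
```

In this model the combinations with exactly one null are the ones that yield FALSE rather than UNKNOWN under null-safe equality.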

## How was this patch tested?

N/A

Closes #23461 from rednaxelafx/fix-typo.

Authored-by: Kris Mok <kris.mok@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Added a new JSON option `inferTimestamp` (`true` by default) to control inference of `TimestampType` from string values.

## How was this patch tested?

Add new UT to `JsonInferSchemaSuite`.

Closes #23455 from MaxGekk/json-infer-time-followup.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

While backporting the patch to 2.4/2.3, I realized that the patch introduces unneeded imports (probably leftovers from intermediate changes). This PR removes the useless import.

## How was this patch tested?

NA

Closes #23451 from mgaido91/SPARK-26078_FOLLOWUP.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR upgrades Mockito from 1.10.19 to 2.23.4. The following changes are required.

- Replace `org.mockito.Matchers` with `org.mockito.ArgumentMatchers`

- Replace `anyObject` with `any`

- Replace `getArgumentAt` with `getArgument` and add type annotation.

- Use the `isNull` matcher when the method is invoked with `null`.

```scala
saslHandler.channelInactive(null);
- verify(handler).channelInactive(any(TransportClient.class));
+ verify(handler).channelInactive(isNull());
```

- Make and use a `doReturn` wrapper to avoid [SI-4775](https://issues.scala-lang.org/browse/SI-4775)

```scala
private def doReturn(value: Any) = org.mockito.Mockito.doReturn(value, Seq.empty: _*)
```

## How was this patch tested?

Passes Jenkins with the existing tests.

Closes #23452 from dongjoon-hyun/SPARK-26536.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Increase test memory to avoid OOM in TimSort-related tests.

## How was this patch tested?

Existing tests.

Closes #23425 from srowen/SPARK-26306.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The test case in SPARK-10316 is meant to make sure a non-deterministic `Filter` won't be pushed through `Project`.

But in the current code base this test case no longer serves this purpose.

Changing `LogicalRDD` to `HadoopFsRelation` fixes this issue.

## How was this patch tested?

The modified test passes.

Closes #23440 from LinhongLiu/fix-test.

Authored-by: Liu,Linhong <liulinhong@baidu.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

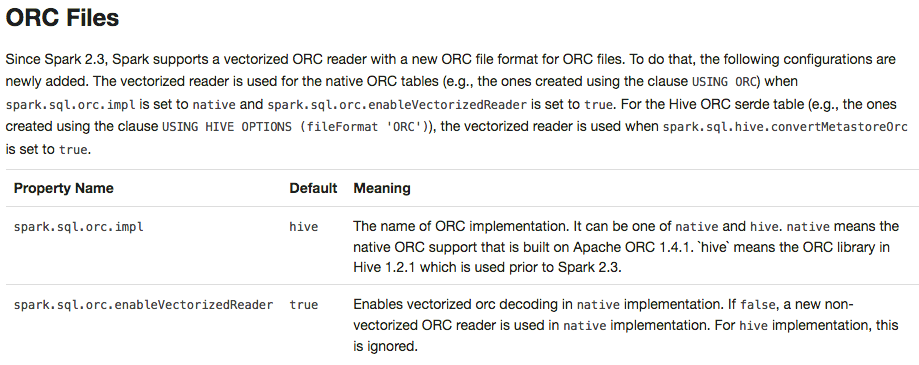

## What changes were proposed in this pull request?

Currently OrcColumnarBatchReader returns all the partition column values in the batch read.

In data source V2, we can improve it by returning the required partition column values only.

This PR is part of https://github.com/apache/spark/pull/23383 . As cloud-fan suggested, create a new PR to make review easier.

Also, this PR doesn't improve `OrcFileFormat`, since in the method `buildReaderWithPartitionValues` the `requiredSchema` filters out all the partition columns, so we can't know which partition columns are required.

## How was this patch tested?

Unit test

Closes #23387 from gengliangwang/refactorOrcColumnarBatch.

Lead-authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Co-authored-by: Gengliang Wang <ltnwgl@gmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`testExactCaseQueryPruning` and `testMixedCaseQueryPruning` don't need to set the `PARQUET_VECTORIZED_READER_ENABLED` config, because `withMixedCaseData` already runs against both the Spark vectorized reader and the Parquet-mr reader.

## How was this patch tested?

Existing test.

Closes #23427 from viirya/fix-parquet-schema-pruning-test.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

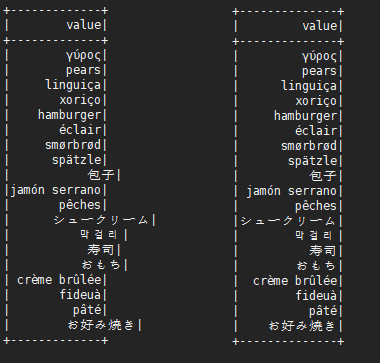

## What changes were proposed in this pull request?

In the PR, I propose to move `hiveResultString()` out of `QueryExecution` and put it into a separate object.

Closes #23409 from MaxGekk/hive-result-string.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

This PR fixes `pivot(Column)` so that it can accept `collection.mutable.WrappedArray`.

Note that we return `collection.mutable.WrappedArray` from `ArrayType`, and `Literal.apply` doesn't support this.

We can unwrap the array and use it for type dispatch.

```scala
val df = Seq(
  (2, Seq.empty[String]),
  (2, Seq("a", "x")),
  (3, Seq.empty[String]),
  (3, Seq("a", "x"))).toDF("x", "s")
df.groupBy("x").pivot("s").count().show()
```

Before:

```
Unsupported literal type class scala.collection.mutable.WrappedArray$ofRef WrappedArray()
java.lang.RuntimeException: Unsupported literal type class scala.collection.mutable.WrappedArray$ofRef WrappedArray()
  at org.apache.spark.sql.catalyst.expressions.Literal$.apply(literals.scala:80)
  at org.apache.spark.sql.RelationalGroupedDataset.$anonfun$pivot$2(RelationalGroupedDataset.scala:427)
  at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237)
  at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36)
  at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)
  at scala.collection.mutable.WrappedArray.foreach(WrappedArray.scala:39)
  at scala.collection.TraversableLike.map(TraversableLike.scala:237)
  at scala.collection.TraversableLike.map$(TraversableLike.scala:230)
  at scala.collection.AbstractTraversable.map(Traversable.scala:108)
  at org.apache.spark.sql.RelationalGroupedDataset.pivot(RelationalGroupedDataset.scala:425)
  at org.apache.spark.sql.RelationalGroupedDataset.pivot(RelationalGroupedDataset.scala:406)
  at org.apache.spark.sql.RelationalGroupedDataset.pivot(RelationalGroupedDataset.scala:317)
  at org.apache.spark.sql.DataFramePivotSuite.$anonfun$new$1(DataFramePivotSuite.scala:341)
  at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)
```

After:

```
+---+---+------+
|  x| []|[a, x]|
+---+---+------+
|  3|  1|     1|
|  2|  1|     1|
+---+---+------+
```

## How was this patch tested?

Manually tested, and unit tests were added.

Closes #23349 from HyukjinKwon/SPARK-26403.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`DataSourceScanExec` overrides the "wrong" `treeString` method, the one without `append`. In the PR, I propose to make the `treeString` methods **final** to prevent such mistakes in the future, and to remove the `treeString` and `verboseString` overrides since they both use `simpleString` with redaction.

## How was this patch tested?

It was tested by `DataSourceScanExecRedactionSuite`

Closes #23431 from MaxGekk/datasource-scan-exec-followup.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

In `org.apache.spark.sql.execution.metric.SQLMetricsSuite`, there's a test case named "WholeStageCodegen metrics". However, it is executed with whole-stage codegen disabled. This PR fixes this by enabling whole-stage codegen for this test case.

## How was this patch tested?

Tested locally using existing test cases.

Closes #23224 from seancxmao/codegen-metrics.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR addresses warning messages in Java files reported at [lgtm.com](https://lgtm.com).

[lgtm.com](https://lgtm.com) provides automated code review of Java/Python/JavaScript files for OSS projects. [Here](https://lgtm.com/projects/g/apache/spark/alerts/?mode=list&severity=warning) are warning messages regarding Apache Spark project.

This PR addresses the following warnings:

- Result of multiplication cast to wider type

- Implicit narrowing conversion in compound assignment

- Boxed variable is never null

- Useless null check

NOTE: `Potential input resource leak` looks like a false positive for now.
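To illustrate the first warning in the list, "Result of multiplication cast to wider type", here is a small sketch. The PR itself touches Java files; the example is written in Scala only for consistency with the other snippets, and `WideningExample` is a made-up name. The same 32-bit arithmetic applies on the JVM in both languages.

```scala
// Illustrative only; not code from the patch.
object WideningExample {
  // Int * Int is evaluated in 32 bits and can overflow before it is widened to Long.
  def squareNarrow(x: Int): Long = x * x

  // Widening one operand first makes the multiplication happen in 64 bits.
  def squareWide(x: Int): Long = x.toLong * x
}
```

For an input such as `1 << 20` the narrow version overflows, while the wide version returns the mathematically expected product.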

## How was this patch tested?

Existing UTs

Closes #23420 from kiszk/SPARK-26508.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The default timestamp pattern defined in `JSONOptions` doesn't allow saving/loading timestamps with time zone offsets of seconds precision. Because of that, the round trip test failed for timestamps before 1582. In the PR, I propose to extend the zone offset section from `XXX` to `XXXXX`, which should allow saving/loading zone offsets like `-07:52:48`.
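The effect of the two offset patterns can be seen directly with `java.time`. This is a standalone sketch: `OffsetPatternDemo` and the chosen date are arbitrary, and it does not go through `JSONOptions`.

```scala
import java.time.{OffsetDateTime, ZoneOffset}
import java.time.format.DateTimeFormatter

// Standalone illustration; does not use Spark's JSONOptions.
object OffsetPatternDemo {
  // An offset with seconds precision, like the one mentioned above.
  val offset: ZoneOffset = ZoneOffset.ofHoursMinutesSeconds(-7, -52, -48)
  val ts: OffsetDateTime = OffsetDateTime.of(1500, 1, 1, 0, 0, 0, 0, offset)

  // `XXX` prints hours and minutes only, so the seconds are lost.
  val hoursMinutes: String = ts.format(DateTimeFormatter.ofPattern("XXX"))

  // `XXXXX` prints hours, minutes and, when present, seconds.
  val withSeconds: String = ts.format(DateTimeFormatter.ofPattern("XXXXX"))
}
```

Here `hoursMinutes` is `-07:52` and `withSeconds` is `-07:52:48`, which is why a round trip through `XXX` cannot restore such an offset.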

## How was this patch tested?

It was tested by `JsonHadoopFsRelationSuite` and `TimestampFormatterSuite`.

Closes #23417 from MaxGekk/hadoopfsrelationtest-new-formatter.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When we operate as below:

```
0: jdbc:hive2://xxx/> create function funnel_analysis as 'com.xxx.hive.extend.udf.UapFunnelAnalysis';
```

```
0: jdbc:hive2://xxx/> select funnel_analysis(1,",",1,'');
Error: org.apache.spark.sql.AnalysisException: Undefined function: 'funnel_analysis'. This function is neither a registered temporary function nor a permanent function registered in the database 'xxx'.; line 1 pos 7 (state=,code=0)
```

```
0: jdbc:hive2://xxx/> describe function funnel_analysis;
+-----------------------------------------------------------+--+
| function_desc |
+-----------------------------------------------------------+--+
| Function: xxx.funnel_analysis |
| Class: com.xxx.hive.extend.udf.UapFunnelAnalysis |
| Usage: N/A. |
+-----------------------------------------------------------+--+
```

We can see that `describe function` returns the right information, but when we actually use this function we get an undefined function exception.

This is really misleading; the real cause is below:

```
No handler for Hive UDF 'com.xxx.xxx.hive.extend.udf.UapFunnelAnalysis': java.lang.IllegalStateException: Should not be called directly;
  at org.apache.hadoop.hive.ql.udf.generic.GenericUDTF.initialize(GenericUDTF.java:72)
  at org.apache.spark.sql.hive.HiveGenericUDTF.outputInspector$lzycompute(hiveUDFs.scala:204)
  at org.apache.spark.sql.hive.HiveGenericUDTF.outputInspector(hiveUDFs.scala:204)
  at org.apache.spark.sql.hive.HiveGenericUDTF.elementSchema$lzycompute(hiveUDFs.scala:212)
  at org.apache.spark.sql.hive.HiveGenericUDTF.elementSchema(hiveUDFs.scala:212)
```

This patch prints the actual failure for quick debugging.

## How was this patch tested?

UT

Closes #21790 from caneGuy/zhoukang/print-warning1.

Authored-by: zhoukang <zhoukang199191@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

…Type

## What changes were proposed in this pull request?

Modified JdbcUtils.makeGetter to handle ByteType.

## How was this patch tested?

Added a new test to JDBCSuite that maps `TINYINT` to `ByteType`.

Closes #23400 from twdsilva/tiny_int_support.

Authored-by: Thomas D'Silva <tdsilva@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The current `SelectedField` extractor is somewhat complicated and it seems to be handling cases that should be handled automatically:

- `GetArrayItem(child: GetStructFieldObject())`

- `GetArrayStructFields(child: GetArrayStructFields())`

- `GetMap(value: GetStructFieldObject())`

This PR removes those cases and simplifies the extractor by passing down the data type instead of a field.

## How was this patch tested?

Existing tests.

Closes #23397 from hvanhovell/SPARK-26495.

Authored-by: Herman van Hovell <hvanhovell@databricks.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

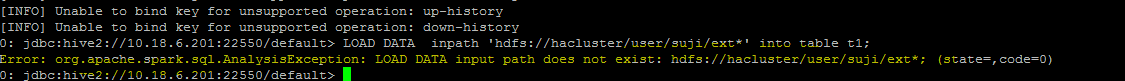

## What changes were proposed in this pull request?

As described in SPARK-26339, the behavior of spark.read is very confusing when reading files whose names start with an underscore. This PR fixes that by throwing an exception with the message "Path does not exist".

## How was this patch tested?

Manual tests.

Both of the calls below throw an exception with the message "Path does not exist".

```
spark.read.csv("/home/forcia/work/spark/_test.csv")
spark.read.schema("test STRING, number INT").csv("/home/forcia/work/spark/_test.csv")
```

Closes #23288 from KeiichiHirobe/SPARK-26339.

Authored-by: Hirobe Keiichi <keiichi_hirobe@forcia.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Proposed a new class `StringConcat` for converting a sequence of strings to a single string with one memory allocation in the `toString` method. `StringConcat` replaces `StringBuilderWriter` in the methods that dump Spark plans and generated code to strings.

All `Writer` arguments are replaced by `String => Unit` in methods related to Spark plans stringification.
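A minimal sketch of the idea follows. `SimpleStringConcat` is a simplified, hypothetical version written for illustration, and the real `StringConcat` may differ in detail.

```scala
import scala.collection.mutable.ArrayBuffer

// Simplified illustration; the real StringConcat may differ in detail.
class SimpleStringConcat {
  private val parts = new ArrayBuffer[String]
  private var length = 0

  // Remembers the piece and tracks the total size; nothing is copied yet.
  def append(s: String): Unit = {
    if (s != null) {
      parts += s
      length += s.length
    }
  }

  // Sizes the builder to the exact final length up front, then copies each piece once.
  override def toString: String = {
    val sb = new java.lang.StringBuilder(length)
    parts.foreach(p => sb.append(p))
    sb.toString
  }
}
```

Because `append` has the shape `String => Unit`, an instance can be handed to any method that takes such a function, for example `val out: String => Unit = concat.append`.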

## How was this patch tested?

It was tested by existing suites `QueryExecutionSuite`, `DebuggingSuite` as well as new tests for `StringConcat` in `StringUtilsSuite`.

Closes #23406 from MaxGekk/rope-plan.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

#20560/[SPARK-23375](https://issues.apache.org/jira/browse/SPARK-23375) introduced an optimizer rule to eliminate redundant Sort. For a test case named "Sort metrics" in `SQLMetricsSuite`, because range is already sorted, sort is removed by the `RemoveRedundantSorts`, which makes this test case meaningless.

This PR modifies the query for testing Sort metrics and checks Sort exists in the plan.

## How was this patch tested?

Modify the existing test case.

Closes #23258 from seancxmao/sort-metrics.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Similar to https://github.com/apache/spark/pull/21446. It looks like a random string is not quite safe as a directory name.

```scala

scala> val prefix = Random.nextString(10); val dir = new File("/tmp", "del_" + prefix + "-" + UUID.randomUUID.toString); dir.mkdirs()

prefix: String = 窽텘⒘駖ⵚ駢⡞Ρ닋

dir: java.io.File = /tmp/del_窽텘⒘駖ⵚ駢⡞Ρ닋-a3f99855-c429-47a0-a108-47bca6905745

res40: Boolean = false // nope, didn't like this one

```

## How was this patch tested?

A unit test was added, and the change was also tested manually.

Closes #23405 from HyukjinKwon/SPARK-26496.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR fixes the codegen bug introduced by #23358.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.11/158/

```

Line 44, Column 93: A method named "apply" is not declared in any enclosing class

nor any supertype, nor through a static import

```

## How was this patch tested?

Manual. `DateExpressionsSuite` should pass with Scala 2.11.

Closes #23394 from dongjoon-hyun/SPARK-26424.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Currently, the `AttributeReference.withMetadata` method has return type `Attribute`, while the other `with*` methods in `AttributeReference` return `AttributeReference`, as mentioned in [spark-25892](https://issues.apache.org/jira/browse/SPARK-25892?jql=project%20%3D%20SPARK%20AND%20component%20in%20(ML%2C%20PySpark%2C%20SQL)).

This PR will change `AttributeReference.withMetadata` method's return type from `Attribute` to `AttributeReference`.

## How was this patch tested?

Run all `sql/test`, `catalyst/test` and `org.apache.spark.sql.execution.streaming.*`

Closes #22918 from kevinyu98/spark-25892.

Authored-by: Kevin Yu <qyu@us.ibm.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In the PR, I propose to add a `maxFields` parameter to all functions involved in creating the textual representation of Spark plans, such as `simpleString` and `verboseString`. The new parameter limits the number of fields converted to truncated strings. Any elements beyond the limit are dropped and replaced by a `"... N more fields"` placeholder. The threshold is raised to `Int.MaxValue` for `toFile()`.
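
The truncation behavior can be sketched in Python as below. The `truncated_string` helper is hypothetical and only models the description above; the exact number of fields Spark keeps before emitting the placeholder may differ.

```python
def truncated_string(fields, max_fields, sep=", "):
    """Join at most max_fields items and summarize the rest in a placeholder."""
    if len(fields) <= max_fields:
        return sep.join(fields)
    kept = fields[:max_fields]
    dropped = len(fields) - len(kept)
    return sep.join(kept + [f"... {dropped} more fields"])


columns = ["a", "b", "c", "d"]
print(truncated_string(columns, 2))          # a, b, ... 2 more fields
print(truncated_string(columns, 2**31 - 1))  # a, b, c, d (no truncation)
```

Using a very large limit, as in the second call, corresponds to the `Int.MaxValue` threshold mentioned for `toFile()`.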

## How was this patch tested?

Added a test to `QueryExecutionSuite` which checks how `maxFields` affects the number of truncated fields in `LocalRelation`.

Closes #23159 from MaxGekk/to-file-max-fields.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

Spark SQL doesn't support creating a partitioned table using Hive CTAS in SQL syntax. However, it is supported through the DataFrameWriter API.

```scala

val df = Seq(("a", 1)).toDF("part", "id")

df.write.format("hive").partitionBy("part").saveAsTable("t")

```

Hive supports this syntax in newer versions (see https://issues.apache.org/jira/browse/HIVE-20241):

```

CREATE TABLE t PARTITIONED BY (part) AS SELECT 1 as id, "a" as part

```

This patch adds this support to SQL syntax.

## How was this patch tested?

Added tests.

Closes #23376 from viirya/hive-ctas-partitioned-table.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to switch `DateFormatClass`, `ToUnixTimestamp`, `FromUnixTime` and `UnixTime` to the java.time API for parsing/formatting dates and timestamps. The API has already been implemented by the `Timestamp`/`DateFormatter` classes. One benefit is that those classes support parsing timestamps with microsecond precision. The old behaviour can be switched on via the SQL config `spark.sql.legacy.timeParser.enabled` (`false` by default).

## How was this patch tested?

It was tested by existing test suites - `DateFunctionsSuite`, `DateExpressionsSuite`, `JsonSuite`, `CsvSuite`, `SQLQueryTestSuite` as well as PySpark tests.

Closes #23358 from MaxGekk/new-time-cast.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fix the `ExpressionInfo` assertion error that occurs when running unit tests on the Windows operating system.

## How was this patch tested?

unit tests

Closes #23363 from yanlin-Lynn/unit-test-windows.

Authored-by: wangyanlin01 <wangyanlin01@baidu.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Variation of https://github.com/apache/spark/pull/20500

I cheated by not referencing fields or columns at all as this exception propagates in contexts where both would be applicable.

## How was this patch tested?

Existing tests

Closes #23373 from srowen/SPARK-14023.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

The PRs #23150 and #23196 switched the JSON and CSV datasources to a new formatter for dates/timestamps, which is based on `DateTimeFormatter`. In this PR, I replaced `SimpleDateFormat` with `DateTimeFormatter` to reflect the changes.

Closes #23374 from MaxGekk/java-time-docs.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`GetStructField` expressions with different optional names should be semantically equal. We will use this as a building block to compare the nested fields used in the plans to be optimized by the Catalyst optimizer.

This PR also fixes the bug below, in which accessing nested fields with different cases in case-insensitive mode results in an `AnalysisException`.

```

sql("create table t (s struct<i: Int>) using json")

sql("select s.I from t group by s.i")

```

which is currently failing

```

org.apache.spark.sql.AnalysisException: expression 'default.t.`s`' is neither present in the group by, nor is it an aggregate function

```

as cloud-fan pointed out.
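
The idea that the optional name should not affect semantic equality can be sketched as follows. This is a minimal model in Python rather than Catalyst code; the `GetStructField` dataclass, its string-valued `child`, and the `semantic_equals` method are simplified stand-ins assumed for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GetStructField:
    child: str                  # stand-in for the child expression
    ordinal: int                # position of the field inside the struct
    name: Optional[str] = None  # optional, display-only name

    def semantic_equals(self, other):
        # The optional name is ignored: the same child and ordinal
        # always refer to the same nested field.
        return (self.child, self.ordinal) == (other.child, other.ordinal)


upper = GetStructField("s", 0, "I")
lower = GetStructField("s", 0, "i")
unnamed = GetStructField("s", 0)

print(upper == lower)                  # False: plain equality sees the name
print(upper.semantic_equals(lower))    # True
print(upper.semantic_equals(unnamed))  # True
```

With a comparison like this, `s.I` in the select list and `s.i` in the group-by clause are recognized as the same field.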

## How was this patch tested?

New tests are added.

Closes #23353 from dbtsai/nestedEqual.

Lead-authored-by: DB Tsai <d_tsai@apple.com>

Co-authored-by: DB Tsai <dbtsai@dbtsai.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This patch adds an explanation of why `spark.sql.shuffle.partitions` stays unchanged in Structured Streaming, which a couple of users have already wondered about; some of them even thought it was a bug.

This patch should help other end users learn about this behavior before they run into it themselves and wonder about it.

## How was this patch tested?

No testing is needed because this is a simple addition to the guide doc, made with a markdown editor.

Closes #22238 from HeartSaVioR/SPARK-25245.

Lead-authored-by: Jungtaek Lim <kabhwan@gmail.com>

Co-authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Use of the `ProcessingTime` class was deprecated in favor of `Trigger.ProcessingTime` in Spark 2.2, and [SPARK-21464](https://issues.apache.org/jira/browse/SPARK-21464) minimized its use in 2.2.1. Recently, its use has grown again in test suites. This PR aims to clean up the newly introduced deprecation warnings for Spark 3.0.

## How was this patch tested?

Pass the Jenkins with existing tests and manually check the warnings.

Closes #23367 from dongjoon-hyun/SPARK-26428.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

A followup of https://github.com/apache/spark/pull/23178, to keep binary compatibility by using an abstract class.

## How was this patch tested?

Manual test. I created a simple app with Spark 2.4

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.udf

object TryUDF {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("test").master("local[*]").getOrCreate()
    import spark.implicits._
    val f1 = udf((i: Int) => i + 1)
    println(f1.deterministic)
    spark.range(10).select(f1.asNonNullable().apply($"id")).show()
    spark.stop()
  }
}
```

When I run it with current master, it fails with

```

java.lang.IncompatibleClassChangeError: Found interface org.apache.spark.sql.expressions.UserDefinedFunction, but class was expected

```

When I run it with this PR, it works.

Closes #23351 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently, even if I explicitly disable Hive support in a SparkR session as below:

```r
sparkSession <- sparkR.session("local[4]", "SparkR", Sys.getenv("SPARK_HOME"),
                               enableHiveSupport = FALSE)
```

the following error is produced when the Hadoop version is not supported by our Hive fork:

```

java.lang.reflect.InvocationTargetException

...

Caused by: java.lang.IllegalArgumentException: Unrecognized Hadoop major version number: 3.1.1.3.1.0.0-78

at org.apache.hadoop.hive.shims.ShimLoader.getMajorVersion(ShimLoader.java:174)

at org.apache.hadoop.hive.shims.ShimLoader.loadShims(ShimLoader.java:139)

at org.apache.hadoop.hive.shims.ShimLoader.getHadoopShims(ShimLoader.java:100)

at org.apache.hadoop.hive.conf.HiveConf$ConfVars.<clinit>(HiveConf.java:368)

... 43 more

Error in handleErrors(returnStatus, conn) :

java.lang.ExceptionInInitializerError

at org.apache.hadoop.hive.conf.HiveConf.<clinit>(HiveConf.java:105)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at org.apache.spark.util.Utils$.classForName(Utils.scala:193)

at org.apache.spark.sql.SparkSession$.hiveClassesArePresent(SparkSession.scala:1116)

at org.apache.spark.sql.api.r.SQLUtils$.getOrCreateSparkSession(SQLUtils.scala:52)

at org.apache.spark.sql.api.r.SQLUtils.getOrCreateSparkSession(SQLUtils.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

```

The root cause is that `SparkSession.hiveClassesArePresent` checks whether the class is loadable in order to determine whether it is on the classpath, but `org.apache.hadoop.hive.conf.HiveConf` checks the Hadoop version in static initialization logic, which is executed right away. This throws an `IllegalArgumentException` that is not caught:

36edbac1c8/sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala (L1113-L1121)

So, currently, if users have a Spark build with Hive built in and a Hadoop version that our fork does not support (namely 3+), there is no way to use SparkR even though it could work.

This PR just proposes to change the order of the boolean comparisons so that we don't execute `SparkSession.hiveClassesArePresent` when:

1. `enableHiveSupport` is explicitly disabled

2. `spark.sql.catalogImplementation` is `in-memory`

so that we **only** check `SparkSession.hiveClassesArePresent` when Hive support is explicitly enabled by short circuiting.
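
The short-circuiting can be sketched in Python as below. The function names `hive_classes_are_present` and `use_hive` are hypothetical stand-ins for the Scala logic, and the `ValueError` merely imitates the failure raised for an unsupported Hadoop version.

```python
calls = []


def hive_classes_are_present():
    # Stand-in for the class-loading check; it records that it was invoked
    # and fails the way an unsupported Hadoop version would.
    calls.append("checked")
    raise ValueError("Unrecognized Hadoop major version number")


def use_hive(enable_hive_support, catalog_implementation):
    # Cheap flag checks come first; `and` stops at the first False operand,
    # so the class check only runs when Hive is actually requested.
    return (enable_hive_support
            and catalog_implementation == "hive"
            and hive_classes_are_present())


print(use_hive(False, "hive"))      # False
print(use_hive(True, "in-memory"))  # False
print(calls)                        # []
```

Because the failing check is the last operand, neither call above reaches it, which is the behavior the reordering aims for.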

## How was this patch tested?

It's difficult to write a test since we don't run tests against Hadoop 3 yet. See https://github.com/apache/spark/pull/21588. Manually tested.

Closes #23356 from HyukjinKwon/SPARK-26422.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`HadoopFileWholeTextReader` and `HadoopFileLinesReader` are eventually called in `FileSourceScanExec`.

Locality is in fact already implemented in `FileScanRDD`, so implementing it in `HadoopFileWholeTextReader` and `HadoopFileLinesReader` as well would be useless.

So I think these `TODO`s can be removed.

## How was this patch tested?

N/A

Closes #23339 from 10110346/noneededtodo.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`SQLConf` is supposed to be serializable. However, currently it is not serializable in `WithTestConf`. `WithTestConf` uses the method `overrideConfs` in a closure, while the classes that implement it (`TestHiveSessionStateBuilder` and `TestSQLSessionStateBuilder`) are not serializable.

This PR uses a local variable to fix it.

## How was this patch tested?

Add unit test.

Closes #23352 from gengliangwang/serializableSQLConf.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Currently, when we infer the schema for Scala/Java decimals, we return the `SYSTEM_DEFAULT` implementation as the data type, i.e. the decimal type with precision 38 and scale 18. But this is not right: we know nothing about the right precision and scale, and these values may not be enough to store the data. This problem arises in particular with UDFs, where we cast all inputs of type `DecimalType` to `DecimalType(38, 18)`: if this is not enough, null is passed as input to the UDF.

The PR defines custom handling for casting to the expected data types for ScalaUDF: the decimal precision and scale are picked from the input, so no cast to a different and possibly wrong precision and scale happens.
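
Why a fixed `(38, 18)` target can lose values is easy to see with a small model. The sketch below uses Python's `decimal` module; `cast_decimal` is a hypothetical helper, and its rounding and overflow rules are assumptions that only approximate Spark's cast semantics.

```python
from decimal import Decimal, ROUND_HALF_UP, localcontext


def cast_decimal(value, precision, scale):
    """Return value rescaled to `scale`, or None if it needs more than `precision` digits."""
    with localcontext() as ctx:
        ctx.prec = 100  # enough headroom so that quantize itself never fails here
        rounded = value.quantize(Decimal(1).scaleb(-scale), rounding=ROUND_HALF_UP)
    if len(rounded.as_tuple().digits) > precision:
        return None  # models the null handed to the UDF
    return rounded


big = Decimal("1" + "0" * 25)        # 26 integer digits
print(cast_decimal(big, 38, 18))     # None: only 20 integer digits fit
print(cast_decimal(big, 38, 0))      # fits when the scale comes from the input
```

Taking the precision and scale from the input, as the PR describes, avoids the first outcome for values that the original type could represent.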

## How was this patch tested?

added UTs

Closes #23308 from mgaido91/SPARK-26308.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Deprecate Row.merge

## How was this patch tested?

N/A

Closes #23271 from KyleLi1985/master.

Authored-by: 李亮 <liang.li.work@outlook.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In Spark 2.3.0 and earlier versions, the Hive CTAS command uses the data source to write data into the table when the table is convertible. This behavior is controlled by configs such as HiveUtils.CONVERT_METASTORE_ORC and HiveUtils.CONVERT_METASTORE_PARQUET.

In 2.3.1, we dropped this optimization by mistake in the PR [SPARK-22977](https://github.com/apache/spark/pull/20521/files#r217254430). Since then, the Hive CTAS command has only used Hive SerDe to write data.

This patch adds the optimization back to the Hive CTAS command. It adds OptimizedCreateHiveTableAsSelectCommand, which uses the data source to write data.

## How was this patch tested?

Added test.

Closes #22514 from viirya/SPARK-25271-2.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

For better test coverage, this PR proposes to use the 4 mixed config sets of `WHOLESTAGE_CODEGEN_ENABLED` and `CODEGEN_FACTORY_MODE` when running `SQLQueryTestSuite`:

1. WHOLESTAGE_CODEGEN_ENABLED=true, CODEGEN_FACTORY_MODE=CODEGEN_ONLY

2. WHOLESTAGE_CODEGEN_ENABLED=false, CODEGEN_FACTORY_MODE=CODEGEN_ONLY

3. WHOLESTAGE_CODEGEN_ENABLED=true, CODEGEN_FACTORY_MODE=NO_CODEGEN

4. WHOLESTAGE_CODEGEN_ENABLED=false, CODEGEN_FACTORY_MODE=NO_CODEGEN
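
The four combinations are simply the cross product of the two settings. A small Python sketch (the dictionary layout is an assumption for illustration, not how the Scala suite stores its configs):

```python
from itertools import product

wholestage_values = ["true", "false"]
factory_modes = ["CODEGEN_ONLY", "NO_CODEGEN"]

# product() varies its last argument fastest, so the factory mode is listed
# first to reproduce the ordering of the numbered list above.
config_sets = [
    {"WHOLESTAGE_CODEGEN_ENABLED": wholestage, "CODEGEN_FACTORY_MODE": mode}
    for mode, wholestage in product(factory_modes, wholestage_values)
]

for number, configs in enumerate(config_sets, start=1):
    print(number, configs)
```

Each query in the suite would then be run once per entry in `config_sets`.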

This pr also moved some existing tests into `ExplainSuite` because explain output results are different between codegen and interpreter modes.

## How was this patch tested?

Existing tests.

Closes #23213 from maropu/InterpreterModeTest.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a small clean up.

By design, Catalyst rules should be orthogonal: each rule should have its own responsibility. However, the `ColumnPruning` rule not only does column pruning, but also removes no-op projects and windows.

This PR updates the `RemoveRedundantProject` rule to remove no-op windows as well, and cleans up the `ColumnPruning` rule to only do column pruning.

## How was this patch tested?

existing tests

Closes #23343 from cloud-fan/column-pruning.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In `ReplaceExceptWithFilter` we do not properly consider the case in which the condition returns NULL. In that case, since negating NULL still returns NULL, the assumption that negating the condition returns all the rows which didn't satisfy it does not hold: rows for which the condition evaluates to NULL may not be returned. This happens when the constraints inferred by `InferFiltersFromConstraints` are not enough, as happens with `OR` conditions.

The rule also had problems with non-deterministic conditions: in such a scenario, this rule would change the probability of the output.

The PR fixes these problems by:

- returning False for the condition when it is Null (in this way we do return all the rows which didn't satisfy it);

- avoiding any transformation when the condition is non-deterministic.
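
The NULL issue can be reproduced with a small model of SQL's three-valued logic. The sketch below is plain Python, with `None` standing in for NULL; `sql_not`, `sql_filter`, and the sample condition `r > 1` are assumptions chosen for illustration.

```python
def sql_not(value):
    # NOT NULL is still NULL in three-valued logic.
    return None if value is None else (not value)


def sql_filter(rows, predicate):
    # A filter keeps a row only when the predicate is exactly TRUE.
    return [r for r in rows if predicate(r) is True]


def cond(r):
    # A comparison with NULL yields NULL.
    return None if r is None else r > 1


rows = [0, 1, 2, 3, None]  # distinct rows of the left side

# Reference semantics: left EXCEPT (left WHERE cond).
matching = sql_filter(rows, cond)
expected = [r for r in rows if r not in matching]

# Naive rewrite: left WHERE NOT cond. The NULL row is lost.
naive = sql_filter(rows, lambda r: sql_not(cond(r)))

# Fixed rewrite: treat a NULL condition as FALSE before negating.
fixed = sql_filter(rows, lambda r: sql_not(False if cond(r) is None else cond(r)))

print(expected)  # [0, 1, None]
print(naive)     # [0, 1]
print(fixed)     # [0, 1, None]
```

Only the fixed rewrite agrees with the reference semantics on the row whose condition is NULL.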

## How was this patch tested?

added UTs

Closes #23315 from mgaido91/SPARK-26366.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Currently, SQL configs are not propagated to executors during schema inference in the CSV datasource. For example, changing `spark.sql.legacy.timeParser.enabled` has no effect on inferring timestamp types. In the PR, I propose to fix the issue by wrapping the schema inference action in `SQLExecution.withSQLConfPropagated`.

## How was this patch tested?

Added logging to `TimestampFormatter`:

```patch

-object TimestampFormatter {

+object TimestampFormatter extends Logging {

def apply(format: String, timeZone: TimeZone, locale: Locale): TimestampFormatter = {

if (SQLConf.get.legacyTimeParserEnabled) {

+ logError("LegacyFallbackTimestampFormatter is being used")

new LegacyFallbackTimestampFormatter(format, timeZone, locale)

} else {

+ logError("Iso8601TimestampFormatter is being used")

new Iso8601TimestampFormatter(format, timeZone, locale)

}

}

```

and run the command in `spark-shell`:

```shell

$ ./bin/spark-shell --conf spark.sql.legacy.timeParser.enabled=true

```

```scala

scala> Seq("2010|10|10").toDF.repartition(1).write.mode("overwrite").text("/tmp/foo")

scala> spark.read.option("inferSchema", "true").option("header", "false").option("timestampFormat", "yyyy|MM|dd").csv("/tmp/foo").printSchema()

18/12/18 10:47:27 ERROR TimestampFormatter: LegacyFallbackTimestampFormatter is being used

root

|-- _c0: timestamp (nullable = true)

```

Closes #23345 from MaxGekk/csv-schema-infer-propagate-configs.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR proposes to use `foreach` instead of misusing `map` (for `Unit`). The misuse could potentially cause some weird errors, and it is not good practice anyway. See also SPARK-16694.

## How was this patch tested?

N/A

Closes #23341 from HyukjinKwon/followup-SPARK-26081.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The `JsonInferSchema` class is extended to support `TimestampType` inferring from string fields in JSON input:

- If the `prefersDecimal` option is set to `true`, it tries to infer decimal type from the string field.

- If decimal type inference fails or `prefersDecimal` is disabled, `JsonInferSchema` tries to infer `TimestampType`.

- If timestamp type inference fails, `StringType` is returned as the inferred type.
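
The fallback order above can be sketched as follows. This is a simplified Python model, not `JsonInferSchema` itself: `infer_string_type`, the returned type names as strings, and the default `timestamp_format` are assumptions for illustration.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation


def infer_string_type(value, prefers_decimal=False,
                      timestamp_format="%Y-%m-%dT%H:%M:%S"):
    """Try decimal (if requested), then timestamp, then fall back to string."""
    if prefers_decimal:
        try:
            Decimal(value)
            return "DecimalType"
        except InvalidOperation:
            pass  # not a decimal, keep going
    try:
        datetime.strptime(value, timestamp_format)
        return "TimestampType"
    except ValueError:
        return "StringType"


print(infer_string_type("1.5", prefers_decimal=True))  # DecimalType
print(infer_string_type("2018-12-17T10:11:12"))        # TimestampType
print(infer_string_type("hello", prefers_decimal=True))  # StringType
```

Each step runs only if the previous one failed, so `StringType` remains the last resort.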

## How was this patch tested?

Added a new test suite, `JsonInferSchemaSuite`, to check inference of date and timestamp types from JSON using `JsonInferSchema` directly. A few tests were added to `JsonSuite` to check type merging and roundtrips. The changes were also tested by `JsonSuite`, `JsonExpressionsSuite` and `JsonFunctionsSuite`.

Closes #23201 from MaxGekk/json-infer-time.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR implements a new feature - window aggregation Pandas UDF for bounded window.

#### Doc:

https://docs.google.com/document/d/14EjeY5z4-NC27-SmIP9CsMPCANeTcvxN44a7SIJtZPc/edit#heading=h.c87w44wcj3wj

#### Example:

```

from pyspark.sql.functions import pandas_udf, PandasUDFType

from pyspark.sql.window import Window

df = spark.range(0, 10, 2).toDF('v')

w1 = Window.partitionBy().orderBy('v').rangeBetween(-2, 4)

w2 = Window.partitionBy().orderBy('v').rowsBetween(-2, 2)

@pandas_udf('double', PandasUDFType.GROUPED_AGG)
def avg(v):
    return v.mean()

df.withColumn('v_mean', avg(df['v']).over(w1)).show()

# +---+------+

# | v|v_mean|

# +---+------+

# | 0| 1.0|

# | 2| 2.0|

# | 4| 4.0|

# | 6| 6.0|

# | 8| 7.0|

# +---+------+

df.withColumn('v_mean', avg(df['v']).over(w2)).show()

# +---+------+

# | v|v_mean|

# +---+------+

# | 0| 2.0|

# | 2| 3.0|

# | 4| 4.0|

# | 6| 5.0|

# | 8| 6.0|

# +---+------+

```

#### High level changes:

This PR modifies the existing WindowInPandasExec physical node to deal with unbounded (growing, shrinking and sliding) windows.

* `WindowInPandasExec` now shares the same base class as `WindowExec` and shares utility functions with it. See `WindowExecBase`.

* `WindowFunctionFrame` now has two new functions, `currentLowerBound` and `currentUpperBound`, which return the lower and upper window bounds for the current output row. It is also modified to allow `AggregateProcessor` == null. A null aggregate processor is used for `WindowInPandasExec`, where we don't have an aggregator and only use the lower and upper bound functions from `WindowFunctionFrame`.

* The biggest change is in `WindowInPandasExec`, which is modified to take `currentLowerBound` and `currentUpperBound` and write those values together with the input data to the Python process for rolling window aggregation. See `WindowInPandasExec` for more details.
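
How per-row bounds drive a rolling aggregation can be sketched in plain Python for the row-based window `w2` from the example. The `row_frame_bounds` helper is hypothetical, and the half-open `[lower, upper)` convention is an assumption rather than a statement about `WindowFunctionFrame`.

```python
def row_frame_bounds(n_rows, start, end):
    """For each row, return the [lower, upper) indices of a rowsBetween(start, end) frame."""
    bounds = []
    for i in range(n_rows):
        lower = max(0, i + start)        # clamp at the partition start
        upper = min(n_rows, i + end + 1)  # clamp at the partition end
        bounds.append((lower, upper))
    return bounds


values = [0, 2, 4, 6, 8]
bounds = row_frame_bounds(len(values), -2, 2)
means = [sum(values[lo:hi]) / (hi - lo) for lo, hi in bounds]

print(bounds)  # [(0, 3), (0, 4), (0, 5), (1, 5), (2, 5)]
print(means)   # [2.0, 3.0, 4.0, 5.0, 6.0]
```

The means match the `v_mean` column shown for `w2` above; sending the bounds alongside the data lets the Python side slice each frame without recomputing it.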

#### Discussion

In benchmarking, I found numpy variant of the rolling window UDF is much faster than the pandas version:

Spark SQL window function: 20s

Pandas variant: ~80s

Numpy variant: 10s

Numpy variant with numba: 4s

Allowing numpy variant of the vectorized UDFs is something I want to discuss because of the performance improvement, but doesn't have to be in this PR.

## How was this patch tested?

New tests

Closes #22305 from icexelloss/SPARK-24561-bounded-window-udf.

Authored-by: Li Jin <ice.xelloss@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

SinkProgress should report properties similar to SourceProgress as long as they are available for the given Sink. The count of written rows is a metric available for all Sinks. Since the relevant progress information concerns committed rows, the ideal object to carry this info is WriterCommitMessage. For brevity, the implementation focuses only on Sinks with API V2 and on Micro Batch mode. An implementation for Continuous mode will be provided at a later date.

### Before

```

{"description":"org.apache.spark.sql.kafka010.KafkaSourceProvider3c0bd317"}

```

### After

```

{"description":"org.apache.spark.sql.kafka010.KafkaSourceProvider3c0bd317","numOutputRows":5000}

```

### This PR is related to:

- https://issues.apache.org/jira/browse/SPARK-24647

- https://issues.apache.org/jira/browse/SPARK-21313

## How was this patch tested?

Existing and new unit tests.

Closes #21919 from vackosar/feature/SPARK-24933-numOutputRows.

Lead-authored-by: Vaclav Kosar <admin@vaclavkosar.com>

Co-authored-by: Kosar, Vaclav: Functions Transformation <Vaclav.Kosar@barclayscapital.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

1. rename `FormatterUtils` to `DateTimeFormatterHelper`, and move it to a separate file

2. move `DateFormatter` and its implementation to a separate file

3. mark some methods as private

4. add `override` to some methods

## How was this patch tested?

existing tests

Closes #23329 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR is a follow-up of the PR https://github.com/apache/spark/pull/17899. It adds the rule `TransposeWindow` to the optimizer batch.

## How was this patch tested?

The existing tests.

Closes #23222 from gatorsmile/followupSPARK-20636.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

- The original comment about `updateDriverMetrics` is not right.

- Refactor the code to ensure `selectedPartitions` has been set before sending the driver-side metrics.

- Restore the original name, which is more general and extendable.

## How was this patch tested?

The existing tests.

Closes #23328 from gatorsmile/followupSpark-26142.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The optimizer rule `org.apache.spark.sql.catalyst.optimizer.ReorderJoin` performs join reordering on inner joins. This was introduced from SPARK-12032 (https://github.com/apache/spark/pull/10073) in 2015-12.

After it had reordered the joins, though, it didn't check whether or not the output attribute order is still the same as before. Thus, it's possible to have a mismatch between the reordered output attributes order vs the schema that a DataFrame thinks it has.

The same problem exists in the CBO version of join reordering (`CostBasedJoinReorder`) too.

This can be demonstrated with the example:

```scala

spark.sql("create table table_a (x int, y int) using parquet")

spark.sql("create table table_b (i int, j int) using parquet")

spark.sql("create table table_c (a int, b int) using parquet")

val df = spark.sql("""

with df1 as (select * from table_a cross join table_b)

select * from df1 join table_c on a = x and b = i

""")

```

here's what the DataFrame thinks:

```

scala> df.printSchema

root

|-- x: integer (nullable = true)

|-- y: integer (nullable = true)

|-- i: integer (nullable = true)

|-- j: integer (nullable = true)

|-- a: integer (nullable = true)

|-- b: integer (nullable = true)

```

here's what the optimized plan thinks, after join reordering:

```

scala> df.queryExecution.optimizedPlan.output.foreach(a => println(s"|-- ${a.name}: ${a.dataType.typeName}"))

|-- x: integer

|-- y: integer

|-- a: integer

|-- b: integer

|-- i: integer

|-- j: integer

```

If we exclude the `ReorderJoin` rule (using Spark 2.4's optimizer rule exclusion feature), it's back to normal:

```

scala> spark.conf.set("spark.sql.optimizer.excludedRules", "org.apache.spark.sql.catalyst.optimizer.ReorderJoin")

scala> val df = spark.sql("with df1 as (select * from table_a cross join table_b) select * from df1 join table_c on a = x and b = i")

df: org.apache.spark.sql.DataFrame = [x: int, y: int ... 4 more fields]

scala> df.queryExecution.optimizedPlan.output.foreach(a => println(s"|-- ${a.name}: ${a.dataType.typeName}"))

|-- x: integer

|-- y: integer

|-- i: integer

|-- j: integer

|-- a: integer

|-- b: integer

```

Note that this output attribute ordering problem leads to data corruption, and can manifest itself in various symptoms:

* Silent data corruption: if the reordered columns happen to have matching or sufficiently compatible types (e.g. all fixed-length primitive types are considered "sufficiently compatible" in an `UnsafeRow`), the resulting data is wrong but might not trigger any alarms immediately; or

* Weird Java-level exceptions like `java.lang.NegativeArraySizeException`, or even SIGSEGVs.
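
The mismatch shown above can be detected and repaired by comparing the output attribute order before and after reordering. The Python sketch below is only an illustration of that check: `restore_output_order` is a hypothetical helper, and restoring the order with a projection is an assumption about one plausible guard, not a description of the patch.

```python
def restore_output_order(original_output, reordered_output):
    """Return indices that project reordered columns back into the original order."""
    if original_output == reordered_output:
        return None  # order preserved, nothing to do
    position = {name: i for i, name in enumerate(reordered_output)}
    return [position[name] for name in original_output]


original = ["x", "y", "i", "j", "a", "b"]   # what the DataFrame expects
reordered = ["x", "y", "a", "b", "i", "j"]  # what the optimized plan produces

projection = restore_output_order(original, reordered)
row = (1, 2, 5, 6, 3, 4)                    # values laid out in the reordered order
fixed_row = tuple(row[k] for k in projection)

print(projection)  # [0, 1, 4, 5, 2, 3]
print(fixed_row)   # (1, 2, 3, 4, 5, 6)
```

Without such a step, a consumer reading `row` by the original schema would see the values of `a` and `b` under the names `i` and `j`.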

## How was this patch tested?

Added new unit test in `JoinReorderSuite` and new end-to-end test in `JoinSuite`.

Also made `JoinReorderSuite` and `StarJoinReorderSuite` assert more strongly on maintaining output attribute order.

Closes #23303 from rednaxelafx/fix-join-reorder.

Authored-by: Kris Mok <rednaxelafx@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The `CSVInferSchema` class is extended to support inferring of `DateType` from CSV input. The attempt to infer `DateType` is performed after inferring `TimestampType`.

## How was this patch tested?

Added a new test for inferring date types from CSV. It was also tested by existing suites such as `CSVInferSchemaSuite`, `CsvExpressionsSuite`, `CsvFunctionsSuite` and `CsvSuite`.

Closes #23202 from MaxGekk/csv-date-inferring.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

CSV parsing accidentally uses the previous good value for a bad input field. See example in Jira.

This PR ensures that the associated column is set to null when an input field cannot be converted.
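
A stale value can survive when a row buffer is reused across records. The Python sketch below models that situation; `convert_row`, the reused `row` list, and the `int` converters are assumptions for illustration and do not mirror the actual parser code.

```python
def convert_row(tokens, converters, row):
    """Fill the reused `row` buffer; failed conversions become None."""
    for i, (token, convert) in enumerate(zip(tokens, converters)):
        try:
            row[i] = convert(token)
        except ValueError:
            # Without this assignment the slot would keep the value
            # left over from the previously parsed record.
            row[i] = None
    return row


row = [None, None]        # buffer reused across records
converters = [int, int]

first = list(convert_row(["1", "2"], converters, row))
second = list(convert_row(["3", "oops"], converters, row))

print(first)   # [1, 2]
print(second)  # [3, None]
```

If the `except` branch did nothing, `second` would come out as `[3, 2]`, silently repeating the previous record's value.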

## How was this patch tested?

Added new test.

Ran all SQL unit tests (testOnly org.apache.spark.sql.*).

Ran pyspark tests for pyspark-sql

Closes #23323 from bersprockets/csv-bad-field.

Authored-by: Bruce Robbins <bersprockets@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When a self-join results from an IN subquery, the join condition may be invalid, producing trivially true predicates and returning wrong results.

The PR deduplicates the subquery output in order to avoid the issue.

## How was this patch tested?

added UT

Closes #23057 from mgaido91/SPARK-26078.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to switch to the **java.time API** for parsing timestamps and dates from JSON inputs with microsecond precision. The SQL config `spark.sql.legacy.timeParser.enabled` allows switching back to the previous behavior, which uses `java.text.SimpleDateFormat`/`FastDateFormat` for parsing/generating timestamps/dates.

## How was this patch tested?

It was tested by `JsonExpressionsSuite`, `JsonFunctionsSuite` and `JsonSuite`.

Closes #23196 from MaxGekk/json-time-parser.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Multiple SparkContexts are discouraged, and Spark has been warning about them for the last 4 years; see SPARK-4180. They could cause arbitrary and mysterious error cases; see SPARK-2243.

Honestly, I didn't even know Spark still allowed it; it looks like it was never officially supported, see SPARK-2243.

I believe now is a good time to remove this configuration.

## How was this patch tested?

Each doc was manually checked and manually tested:

```
$ ./bin/spark-shell --conf=spark.driver.allowMultipleContexts=true
...
scala> new SparkContext()
org.apache.spark.SparkException: Only one SparkContext should be running in this JVM (see SPARK-2243).The currently running SparkContext was created at:
org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:939)
...
org.apache.spark.SparkContext$.$anonfun$assertNoOtherContextIsRunning$2(SparkContext.scala:2435)
at scala.Option.foreach(Option.scala:274)
at org.apache.spark.SparkContext$.assertNoOtherContextIsRunning(SparkContext.scala:2432)
at org.apache.spark.SparkContext$.markPartiallyConstructed(SparkContext.scala:2509)
at org.apache.spark.SparkContext.<init>(SparkContext.scala:80)
at org.apache.spark.SparkContext.<init>(SparkContext.scala:112)
... 49 elided
```

Closes #23311 from HyukjinKwon/SPARK-26362.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When using ordinals to access a linked list, each access costs O(n).
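As a rough illustration of that cost (a sketch with made-up names, not the code touched by this PR), compare indexing into a Scala `List` inside a loop with iterating over it once:

```scala
// Hypothetical example; ListAccessSketch is not part of Spark.
object ListAccessSketch {
  // xs(i) walks the list from its head on every call, so each access is O(n)
  // and the whole loop is O(n^2). xs.length is itself O(n) on a List.
  def sumByIndex(xs: List[Int]): Long = {
    var total = 0L
    var i = 0
    while (i < xs.length) {
      total += xs(i)
      i += 1
    }
    total
  }

  // foreach visits each cell exactly once, so the whole traversal is O(n).
  def sumByIteration(xs: List[Int]): Long = {
    var total = 0L
    xs.foreach(x => total += x)
    total
  }
}
```

Both functions return the same sum; only the traversal cost differs.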

## How was this patch tested?

Existing tests.

Closes #23280 from CarolinePeng/update_Two.

Authored-by: CarolinPeng <00244106@zte.intra>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

When using a higher-order function whose lambda variable has the same name as an existing column, in `Filter` or in anything else that uses `Analyzer.resolveExpressionBottomUp` during resolution, e.g.:

```scala
val df = Seq(
  (Seq(1, 9, 8, 7), 1, 2),
  (Seq(5, 9, 7), 2, 2),
  (Seq.empty, 3, 2),
  (null, 4, 2)
).toDF("i", "x", "d")

checkAnswer(df.filter("exists(i, x -> x % d == 0)"),
  Seq(Row(Seq(1, 9, 8, 7), 1, 2)))
checkAnswer(df.select("x").filter("exists(i, x -> x % d == 0)"),
  Seq(Row(1)))
```

the following exception happens:

```
java.lang.ClassCastException: org.apache.spark.sql.catalyst.expressions.BoundReference cannot be cast to org.apache.spark.sql.catalyst.expressions.NamedExpression
at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237)
at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)
at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)
at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)
at scala.collection.TraversableLike.map(TraversableLike.scala:237)
at scala.collection.TraversableLike.map$(TraversableLike.scala:230)
at scala.collection.AbstractTraversable.map(Traversable.scala:108)
at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.$anonfun$functionsForEval$1(higherOrderFunctions.scala:147)
at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:237)
at scala.collection.immutable.List.foreach(List.scala:392)
at scala.collection.TraversableLike.map(TraversableLike.scala:237)
at scala.collection.TraversableLike.map$(TraversableLike.scala:230)
at scala.collection.immutable.List.map(List.scala:298)
at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.functionsForEval(higherOrderFunctions.scala:145)
at org.apache.spark.sql.catalyst.expressions.HigherOrderFunction.functionsForEval$(higherOrderFunctions.scala:145)
at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionsForEval$lzycompute(higherOrderFunctions.scala:369)
at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionsForEval(higherOrderFunctions.scala:369)
at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.functionForEval(higherOrderFunctions.scala:176)
at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.functionForEval$(higherOrderFunctions.scala:176)
at org.apache.spark.sql.catalyst.expressions.ArrayExists.functionForEval(higherOrderFunctions.scala:369)
at org.apache.spark.sql.catalyst.expressions.ArrayExists.nullSafeEval(higherOrderFunctions.scala:387)
at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.eval(higherOrderFunctions.scala:190)
at org.apache.spark.sql.catalyst.expressions.SimpleHigherOrderFunction.eval$(higherOrderFunctions.scala:185)
at org.apache.spark.sql.catalyst.expressions.ArrayExists.eval(higherOrderFunctions.scala:369)
at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificPredicate.eval(Unknown Source)
at org.apache.spark.sql.execution.FilterExec.$anonfun$doExecute$3(basicPhysicalOperators.scala:216)
at org.apache.spark.sql.execution.FilterExec.$anonfun$doExecute$3$adapted(basicPhysicalOperators.scala:215)
...
```

because the `UnresolvedAttribute`s in `LambdaFunction` are unexpectedly resolved by the rule.

This PR uses a placeholder `UnresolvedNamedLambdaVariable` to prevent the unexpected resolution.
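The idea can be sketched on a toy expression tree. Everything below is a simplified, hypothetical model (the case classes and function names are not Catalyst's): names bound by a lambda are swapped for a distinct placeholder node before column resolution runs, so a bottom-up resolver that only looks at unresolved attributes cannot capture them.

```scala
// Toy model only: hypothetical stand-ins, not Catalyst's real classes.
sealed trait Expr
case class UnresolvedAttr(name: String) extends Expr        // may become a column
case class LambdaVarPlaceholder(name: String) extends Expr  // bound by a lambda
case class ColumnRef(name: String) extends Expr             // resolved column
case class Mod(left: Expr, right: Expr) extends Expr
case class Lambda(args: Seq[String], body: Expr) extends Expr

object LambdaResolutionSketch {
  // Bottom-up column resolution: only UnresolvedAttr nodes are candidates.
  def resolveColumns(e: Expr, columns: Set[String]): Expr = e match {
    case UnresolvedAttr(n) if columns.contains(n) => ColumnRef(n)
    case Mod(l, r) => Mod(resolveColumns(l, columns), resolveColumns(r, columns))
    case Lambda(args, body) => Lambda(args, resolveColumns(body, columns))
    case other => other
  }

  // Replace lambda-bound names with the placeholder before resolving columns.
  def markLambdaVars(e: Expr, bound: Set[String] = Set.empty): Expr = e match {
    case UnresolvedAttr(n) if bound.contains(n) => LambdaVarPlaceholder(n)
    case Mod(l, r) => Mod(markLambdaVars(l, bound), markLambdaVars(r, bound))
    case Lambda(args, body) => Lambda(args, markLambdaVars(body, bound ++ args))
    case other => other
  }

  val columns = Set("i", "x", "d")

  // Models the lambda `x -> x % d`, where `x` also happens to be a column name.
  val lambda = Lambda(Seq("x"), Mod(UnresolvedAttr("x"), UnresolvedAttr("d")))

  // Without the placeholder, the lambda variable `x` is captured as column `x`.
  val naive = resolveColumns(lambda, columns)

  // With the placeholder, only the outer reference `d` is resolved to a column.
  val guarded = resolveColumns(markLambdaVars(lambda), columns)
}
```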

## How was this patch tested?

Added a test and modified some tests.

Closes #23320 from ueshin/issues/SPARK-26370/hof_resolution.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

I was looking at the code and it was a bit difficult to see the life cycle of InMemoryFileIndex passed into getOrInferFileFormatSchema, because in one case it is passed in, and in another it is created inside getOrInferFileFormatSchema. It'd be easier to understand the life cycle if we move the creation of it out.

## How was this patch tested?

This is a simple code move and should be covered by existing tests.

Closes #23317 from rxin/SPARK-26368.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

SPARK-26155 reports a performance issue on TPC-DS. I think it is better to add a benchmark for `LongToUnsafeRowMap`, which is the root cause of the performance regression.

The benchmark makes it easier to show the performance difference between different metric implementations in `LongToUnsafeRowMap`.

## How was this patch tested?

Manually ran the added benchmark.

Closes #23284 from viirya/SPARK-26337.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Added query status updates to ContinuousExecution.

## How was this patch tested?

Existing unit tests + added ContinuousQueryStatusAndProgressSuite.

Closes #23095 from gaborgsomogyi/SPARK-23886.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

As discussed in https://github.com/apache/spark/pull/23208/files#r239684490 , we should put `newScanBuilder` in read-related mix-in traits like `SupportsBatchRead`, to support write-only tables.

In the `Append` operator, we should skip schema validation if it is not necessary. In the future we would introduce a capability API, so that a data source can tell Spark that it doesn't want to do validation.
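The effect of moving `newScanBuilder` into a read mix-in can be sketched with simplified traits. The shapes below are hypothetical (the real data source v2 interfaces differ in detail, and `SupportsBatchWrite`, `ConsoleSink`, and `TableCapabilities` are names assumed here for illustration): once reading is a separate mix-in, a write-only table does not have to provide a scan builder at all.

```scala
// Simplified, hypothetical shapes; not the actual data source v2 API.
trait ScanBuilder
trait WriteBuilder

trait Table { def name: String }

// Reading is opt-in: only tables mixing this in expose newScanBuilder.
trait SupportsBatchRead extends Table { def newScanBuilder(): ScanBuilder }

// Assumed write-side counterpart, for illustration only.
trait SupportsBatchWrite extends Table { def newWriteBuilder(): WriteBuilder }

// A write-only sink: no scan builder needs to be implemented.
class ConsoleSink extends SupportsBatchWrite {
  def name: String = "console"
  def newWriteBuilder(): WriteBuilder = new WriteBuilder {}
}

object TableCapabilities {
  // A planner could check for the mix-in before attempting to scan.
  def canRead(t: Table): Boolean = t.isInstanceOf[SupportsBatchRead]
  def canWrite(t: Table): Boolean = t.isInstanceOf[SupportsBatchWrite]
}
```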

## How was this patch tested?

existing tests.

Closes #23266 from cloud-fan/ds-read.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Some comment issues were left over when the `ConvertToLocalRelation` rule was added (see #22205/[SPARK-25212](https://issues.apache.org/jira/browse/SPARK-25212)). This PR fixes those comment issues.

## How was this patch tested?

N/A

Closes #23273 from seancxmao/ConvertToLocalRelation-doc.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Clean up some tests to make sure expressions are resolved during the tests.

## How was this patch tested?

test-only PR

Closes #23297 from cloud-fan/test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Some documentation of `Distribution/Partitioning` is stale and misleading. This PR fixes it:

1. `Distribution` never has an intra-partition requirement.

2. `OrderedDistribution` does not require tuples that share the same value to be colocated in the same partition.

3. `RangePartitioning` can provide a weaker guarantee for a prefix of its `ordering` expressions.
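Points 1 and 2 can be illustrated with a small standalone check. This is a sketch under simplifying assumptions (partitions modeled as `Seq[Seq[Int]]`, helper names invented here), not Spark's implementation: an ordered layout only constrains adjacent partitions relative to each other, so equal values may straddle a partition boundary and rows inside a partition need not be sorted.

```scala
// Hypothetical model: each inner Seq is one partition of integer keys.
object DistributionSketch {
  // Ordered across partitions: every value in a partition is <= every value
  // in the next non-empty partition. Nothing is required within a partition.
  def satisfiesOrdered(parts: Seq[Seq[Int]]): Boolean = {
    val nonEmpty = parts.filter(_.nonEmpty)
    nonEmpty.zip(nonEmpty.drop(1)).forall { case (a, b) => a.max <= b.min }
  }

  // Clustered: each distinct value lives in at most one partition.
  def satisfiesClustered(parts: Seq[Seq[Int]]): Boolean = {
    val owners = parts.zipWithIndex.flatMap { case (p, i) => p.distinct.map(_ -> i) }
    owners.groupBy(_._1).values.forall(_.size == 1)
  }

  // The value 2 appears in the first two partitions, and the first partition
  // is not sorted internally.
  val parts: Seq[Seq[Int]] = Seq(Seq(2, 1, 2), Seq(2, 3), Seq(5))
}
```

For `parts`, the ordered check passes while the clustered check fails, which is the distinction the corrected comments describe.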

## How was this patch tested?

comment-only PR.

Closes #23249 from cloud-fan/doc.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Follow-up PR for #23207, including the following changes:

- Rename `SQLShuffleMetricsReporter` to `SQLShuffleReadMetricsReporter` to make it match the write-side naming.

- Change the display text on the read side for naming consistency.

- Rename function in `ShuffleWriteProcessor`.

- Delete `private[spark]` in execution package.

## How was this patch tested?

Existing tests.

Closes #23286 from xuanyuanking/SPARK-26193-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

If `checkForContinuous` is called (and `checkForStreaming` is called inside `checkForContinuous`), the `checkForStreaming` method is called twice in `createQuery`. This is unnecessary, and since `checkForStreaming` has a lot of statements, it's better to remove one of the calls.

## How was this patch tested?

Existing unit tests in `StreamingQueryManagerSuite` and `ContinuousAggregationSuite`

Closes #23251 from 10110346/isUnsupportedOperationCheckEnabled.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In the PR, I propose to return partial results from the JSON datasource and JSON functions in the PERMISSIVE mode if some of the JSON fields are parsed and converted to the desired types successfully. The changes are made only for `StructType`. Whole bad JSON records are placed into the corrupt column specified by the `columnNameOfCorruptRecord` option or SQL config.

Partial results are not returned for malformed JSON input.
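The intended behavior can be modeled without Spark. The sketch below is a toy (the `schema`, `convert`, and object name are assumptions, and the input is already split into raw field strings rather than parsed from JSON): each field is converted independently, fields that fail become empty, and the whole original record is kept in a separate corrupt-record slot whenever any field failed.

```scala
import scala.util.Try

// Toy model of partial results; not the JSON datasource implementation.
object PartialResultSketch {
  // Hypothetical schema: field name -> converter from raw text to a typed value.
  val schema: Seq[(String, String => Any)] = Seq(
    "a" -> ((s: String) => s.toInt),
    "b" -> ((s: String) => s.toDouble)
  )

  // Returns the converted fields (None where missing or not convertible)
  // together with the corrupt-record value (the raw record if any field failed).
  def convert(raw: String, fields: Map[String, String]): (Seq[Option[Any]], Option[String]) = {
    val attempts = schema.map { case (name, conv) =>
      fields.get(name).map(v => Try(conv(v)))
    }
    val values = attempts.map(_.flatMap(_.toOption))
    val hasBadField = attempts.exists(_.exists(_.isFailure))
    (values, if (hasBadField) Some(raw) else None)
  }
}
```

For a record whose field `a` is not an integer but whose field `b` is a valid double, `convert` keeps `b`, leaves `a` empty, and returns the raw record in the corrupt slot.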

## How was this patch tested?

Added a new UT that checks the conversion of JSON strings with one invalid field and one valid field at the end of the string.

Closes #23253 from MaxGekk/json-bad-record.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?