This is not a perfect solution. It is designed to solve the problem while keeping complexity to a minimum.

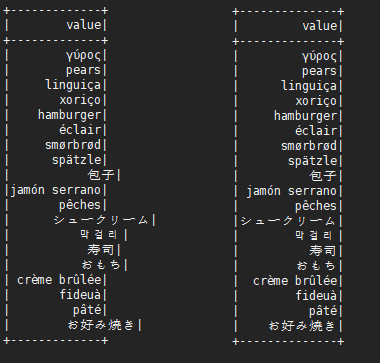

It works for English, Chinese, Japanese, Korean, and so on.

```scala

before:

+---+---------------------------+-------------+

|id |中国 |s2 |

+---+---------------------------+-------------+

|1 |ab |[a] |

|2 |null |[中国, abc] |

|3 |ab1 |[hello world]|

|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |

|5 |中国(你好)a |[“中(国), 312] |

|6 |中国山(东)服务区 |[“中(国)] |

|7 |中国山东服务区 |[中(国)] |

|8 | |[中国] |

+---+---------------------------+-------------+

after:

+---+-----------------------------------+----------------+

|id |中国 |s2 |

+---+-----------------------------------+----------------+

|1 |ab |[a] |

|2 |null |[中国, abc] |

|3 |ab1 |[hello world] |

|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |

|5 |中国(你好)a |[“中(国), 312]|

|6 |中国山(东)服务区 |[“中(国)] |

|7 |中国山东服务区 |[中(国)] |

|8 | |[中国] |

+---+-----------------------------------+----------------+

```

## What changes were proposed in this pull request?

When the data contains wide characters such as Chinese or Japanese characters, the `show` method has an alignment problem.

This PR tries to fix this problem.
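The misalignment arises when every character is counted as one column even though wide characters usually occupy two columns in a monospaced terminal. A minimal sketch of width-aware padding follows. It assumes a hand-picked set of code-point ranges treated as double width; `DisplayWidth`, `isWide`, `displayWidth`, and `padRight` are hypothetical names used for illustration, and the ranges are not claimed to match the ones used in this patch.

```scala
object DisplayWidth {
  // Assumption: code points in these ranges render two columns wide.
  // This is an illustrative subset, not an exhaustive or official list.
  private def isWide(c: Char): Boolean = {
    val cp = c.toInt
    (cp >= 0x1100 && cp <= 0x115F) || // Hangul Jamo
    (cp >= 0x2E80 && cp <= 0xA4CF) || // CJK radicals, kana, CJK ideographs, Yi
    (cp >= 0xAC00 && cp <= 0xD7A3) || // Hangul syllables
    (cp >= 0xF900 && cp <= 0xFAFF) || // CJK compatibility ideographs
    (cp >= 0xFF00 && cp <= 0xFF60) || // fullwidth forms
    (cp >= 0xFFE0 && cp <= 0xFFE6)    // fullwidth signs
  }

  // Number of terminal columns the string is expected to occupy.
  def displayWidth(s: String): Int =
    s.foldLeft(0)((width, c) => width + (if (isWide(c)) 2 else 1))

  // Pads with spaces based on display width rather than on String.length.
  def padRight(s: String, width: Int): String =
    s + " " * math.max(0, width - displayWidth(s))
}
```

With this sketch, a two-character Chinese string padded to width 6 receives two spaces instead of four, so the column border lines up with ASCII rows.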

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22048 from xuejianbest/master.

Authored-by: xuejianbest <384329882@qq.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

SPARK-10399 introduced a performance regression on the hash computation for UTF8String.

The regression can be evaluated with the code attached in the JIRA. That code runs in about 120 us per method on my laptop (MacBook Pro 2.5 GHz Intel Core i7, RAM 16 GB 1600 MHz DDR3) while the code from branch 2.3 takes on the same machine about 45 us for me. After the PR, the code takes about 45 us on the master branch too.

## How was this patch tested?

running the perf test from the JIRA

Closes #22338 from mgaido91/SPARK-25317.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In this PR, I propose to extend `to_json` to support any type as the element type of input arrays. This allows converting arrays of primitive types and arrays of arrays. For example:

```

select to_json(array('1','2','3'))

> ["1","2","3"]

select to_json(array(array(1,2,3),array(4)))

> [[1,2,3],[4]]

```

## How was this patch tested?

Added a couple of SQL tests for arrays of primitive types and arrays of arrays. I also added a round trip test `from_json` -> `to_json`.

Closes #22226 from MaxGekk/to_json-array.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The `HiveExternalCatalogVersionsSuite` Scala-2.12 test has been failing due to a class path issue. It is marked as `ABORTED` because it fails at `beforeAll` during the data population stage.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/

```

org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite *** ABORTED ***

Exception encountered when invoking run on a nested suite - spark-submit returned with exit code 1.

```

The root cause of the failure is that `runSparkSubmit` mixes 2.4.0-SNAPSHOT classes and old Spark (2.1.3/2.2.2/2.3.1) together during `spark-submit`. This PR aims to provide a `non-test` execution mode for `runSparkSubmit` by removing the following:

- SPARK_TESTING

- SPARK_SQL_TESTING

- SPARK_PREPEND_CLASSES

- SPARK_DIST_CLASSPATH

Previously, in the class path, the new Spark classes were behind the old Spark classes, so the new ones were never seen. However, Spark 2.4.0 reveals this bug due to the recent data source class changes.
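The idea of a non-test execution mode can be sketched as follows, assuming the four names listed above are environment variables inherited by the child process. `NonTestSubmit` and `nonTestProcess` are hypothetical names; this is not the actual `runSparkSubmit` code.

```scala
object NonTestSubmit {
  // The four names listed above, assumed to be environment variables.
  val testOnlyVars: Seq[String] = Seq(
    "SPARK_TESTING",
    "SPARK_SQL_TESTING",
    "SPARK_PREPEND_CLASSES",
    "SPARK_DIST_CLASSPATH")

  // Hypothetical helper: prepares (but does not start) a child process whose
  // inherited environment no longer contains the test-only variables.
  def nonTestProcess(command: Seq[String]): ProcessBuilder = {
    val builder = new ProcessBuilder(command: _*)
    val env = builder.environment()
    testOnlyVars.foreach(name => env.remove(name))
    builder
  }
}
```

Stripping the variables from the child environment, rather than from the parent JVM, keeps the rest of the test suite unaffected.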

## How was this patch tested?

Manual test. After merging, it will be tested via Jenkins.

```scala

$ dev/change-scala-version.sh 2.12

$ build/mvn -DskipTests -Phive -Pscala-2.12 clean package

$ build/mvn -Phive -Pscala-2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite test

...

HiveExternalCatalogVersionsSuite:

- backward compatibility

...

Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

Closes #22340 from dongjoon-hyun/SPARK-25337.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

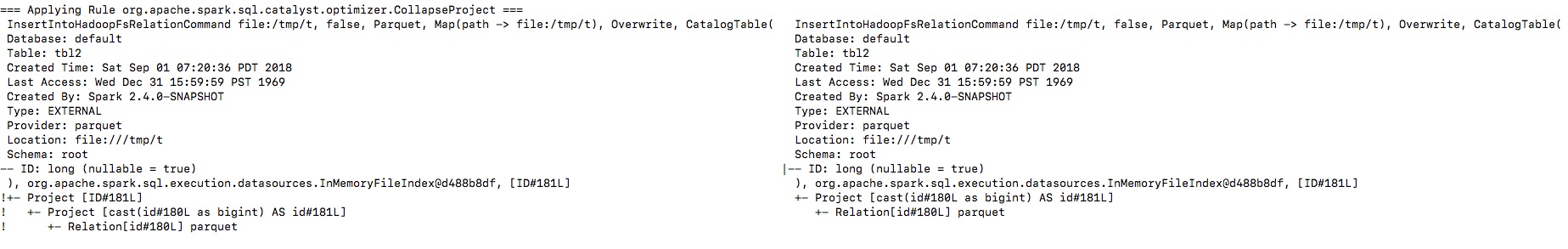

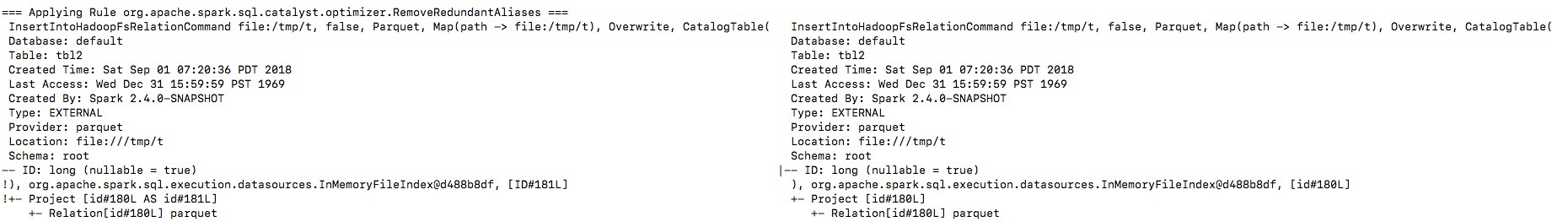

## What changes were proposed in this pull request?

Consider the following example:

```

val location = "/tmp/t"

val df = spark.range(10).toDF("id")

df.write.format("parquet").saveAsTable("tbl")

spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")

spark.sql(s"CREATE TABLE tbl2(ID long) USING parquet location '$location'")

spark.sql("INSERT OVERWRITE TABLE tbl2 SELECT ID FROM view1")

println(spark.read.parquet(location).schema)

spark.table("tbl2").show()

```

The output column name in schema will be `id` instead of `ID`, thus the last query shows nothing from `tbl2`.

By enabling the debug message we can see that the output naming is changed from `ID` to `id`, and then the `outputColumns` in `InsertIntoHadoopFsRelationCommand` is changed in `RemoveRedundantAliases`.

**To guarantee correctness**, we should change the output columns from `Seq[Attribute]` to `Seq[String]` so that the optimizer cannot replace their names.

I will fix project elimination related rules in https://github.com/apache/spark/pull/22311 after this one.

## How was this patch tested?

Unit test.

Closes #22320 from gengliangwang/fixOutputSchema.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Upgrade chill to 0.9.3, Kryo to 4.0.2, to get bug fixes and improvements.

The resolved tickets include:

- SPARK-25258 Upgrade kryo package to version 4.0.2

- SPARK-23131 Kryo raises StackOverflow during serializing GLR model

- SPARK-25176 Kryo fails to serialize a parametrised type hierarchy

More details:

https://github.com/twitter/chill/releases/tag/v0.9.3cc3910d501

## How was this patch tested?

Existing tests.

Closes #22179 from wangyum/SPARK-23131.

Lead-authored-by: Yuming Wang <yumwang@ebay.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Zinc is 23.5MB (tgz).

```

$ curl -LO https://downloads.lightbend.com/zinc/0.3.15/zinc-0.3.15.tgz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 23.5M 100 23.5M 0 0 35.4M 0 --:--:-- --:--:-- --:--:-- 35.3M

```

Currently, Spark downloads Zinc once. However, this still happens too many times in build systems. This PR aims to skip the Zinc download when the system already has it.

```

$ build/mvn clean

exec: curl --progress-bar -L https://downloads.lightbend.com/zinc/0.3.15/zinc-0.3.15.tgz

######################################################################## 100.0%

```

This will save considerable resources (CPU, network, disk), at least on Mac and Docker-based build systems.

## How was this patch tested?

Pass the Jenkins.

Closes #22333 from dongjoon-hyun/SPARK-25335.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

An alternative fix for https://github.com/apache/spark/pull/21698

When Spark reruns tasks for an RDD, there are 3 different behaviors:

1. determinate. Always returns the same result in the same order when rerun.

2. unordered. Returns the same data set in random order when rerun.

3. indeterminate. Returns a different result when rerun.

Normally Spark doesn't need to care about this. Spark runs stages one by one, and when a task fails, it just reruns it. Although the rerun task may return a different result, users will not be surprised.

However, Spark may rerun a finished stage when seeing fetch failures. When this happens, Spark needs to rerun all the tasks of all the succeeding stages if the RDD output is indeterminate, because the input of the succeeding stages has been changed.

If the RDD output is determinate, we only need to rerun the failed tasks of the succeeding stages, because the input doesn't change.

If the RDD output is unordered, it's the same as determinate, because the shuffle partitioner is always deterministic (the round-robin partitioner is not a shuffle partitioner that extends `org.apache.spark.Partitioner`), so the reducers will still get the same input data set.

This PR fixed the failure handling for `repartition`, to avoid correctness issues.

For `repartition`, Spark applies a stateful map function to generate a round-robin id, which is order sensitive and makes the RDD's output indeterminate. When a stage that contains `repartition` reruns, we must also rerun all the tasks of all the succeeding stages.
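The order sensitivity can be illustrated without Spark. The sketch below is a simplified model, not Spark's implementation: `roundRobin` assigns records to partitions by position, while `hashPartition` assigns them by a function of the record itself. `RoundRobinDemo` and both helpers are hypothetical names.

```scala
object RoundRobinDemo {
  // Position-based assignment: the partition depends on where a record
  // appears in the input, so reordering the input changes the output.
  def roundRobin[T](records: Seq[T], numPartitions: Int): Map[Int, Seq[T]] =
    records.zipWithIndex
      .groupBy { case (_, index) => index % numPartitions }
      .map { case (partition, pairs) => partition -> pairs.map(_._1) }

  // Value-based assignment: the partition depends only on the record,
  // so each partition receives the same set regardless of input order.
  def hashPartition[T](records: Seq[T], numPartitions: Int): Map[Int, Set[T]] =
    records
      .groupBy(r => java.lang.Math.floorMod(r.hashCode, numPartitions))
      .map { case (partition, rs) => partition -> rs.toSet }
}
```

Feeding `Seq("a", "b", "c", "d")` and then the reordered `Seq("b", "a", "c", "d")` into `roundRobin` with two partitions puts `a` and `c` in partition 0 the first time and `b` and `c` the second time. `hashPartition` produces identical sets for both inputs, which is why an unordered input is harmless for a deterministic partitioner but not for round-robin.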

**future improvement:**

1. Currently we can't roll back and rerun a shuffle map stage, so we just fail. We should fix it later. https://issues.apache.org/jira/browse/SPARK-25341

2. Currently we can't roll back and rerun a result stage, so we just fail. We should fix it later. https://issues.apache.org/jira/browse/SPARK-25342

3. We should provide a public API to allow users to tag the random level of the RDD's computing function.

## How is this pull request tested?

a new test case

Closes #22112 from cloud-fan/repartition.

Lead-authored-by: Wenchen Fan <wenchen@databricks.com>

Co-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

Running a large Spark job with speculation turned on was causing executor heartbeats to time out on the driver end after some time, and eventually, after hitting the max number of executor failures, the job would fail.

## What changes were proposed in this pull request?

The main reason for the heartbeat timeouts was that the heartbeat-receiver-event-loop-thread was blocked waiting on the `TaskSchedulerImpl` object, which was being held by one of the dispatcher-event-loop threads executing the method `dequeueSpeculativeTasks()` in `TaskSetManager.scala`. On further analysis of the heartbeat receiver method `executorHeartbeatReceived()` in the `TaskSchedulerImpl` class, we found that instead of waiting to acquire the lock on the `TaskSchedulerImpl` object, we can remove that lock and make the operations on the global variables inside the code block atomic. The block of code in that method only uses one global HashMap, `taskIdToTaskSetManager`. By making that map a `ConcurrentHashMap`, we ensure atomicity of operations and speed up the heartbeat receiver thread.
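A minimal sketch of the lock-free lookup pattern described above. It is a simplified stand-in, not the `TaskSchedulerImpl` code: `HeartbeatLookup`, `TaskSetManagerStub`, `register`, and `managerFor` are hypothetical names, and only the map name is taken from the description.

```scala
import java.util.concurrent.ConcurrentHashMap

object HeartbeatLookup {
  // Hypothetical stand-in for a task set manager; only a name is kept.
  final case class TaskSetManagerStub(name: String)

  // Individual get/put calls on a ConcurrentHashMap are thread-safe,
  // so readers do not need to hold a scheduler-wide lock.
  val taskIdToTaskSetManager = new ConcurrentHashMap[Long, TaskSetManagerStub]()

  def register(taskId: Long, manager: TaskSetManagerStub): Unit =
    taskIdToTaskSetManager.put(taskId, manager)

  // No synchronized block here: the heartbeat path only reads the map.
  // ConcurrentHashMap.get returns null for a missing key, hence Option(...).
  def managerFor(taskId: Long): Option[TaskSetManagerStub] =
    Option(taskIdToTaskSetManager.get(taskId))
}
```

This pattern is only safe when each access is a single map operation, which matches the statement that the code block uses just this one map. Compound check-then-act sequences would still need extra coordination.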

## How was this patch tested?

Screenshots of the thread dump have been attached below:

**heartbeat-receiver-event-loop-thread:**

<img width="1409" alt="screen shot 2018-08-24 at 9 19 57 am" src="https://user-images.githubusercontent.com/22228190/44593413-e25df780-a788-11e8-9520-176a18401a59.png">

**dispatcher-event-loop-thread:**

<img width="1409" alt="screen shot 2018-08-24 at 9 21 56 am" src="https://user-images.githubusercontent.com/22228190/44593484-13d6c300-a789-11e8-8d88-34b1d51d4541.png">

Closes #22221 from pgandhi999/SPARK-25231.

Authored-by: pgandhi <pgandhi@oath.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

Implement an image schema datasource.

This image datasource supports:

- partition discovery (loading partitioned images)

- dropImageFailures (the same behavior as `ImageSchema.readImage`)

- path wildcard matching (the same behavior as `ImageSchema.readImage`)

- loading recursively from a directory (different from `ImageSchema.readImage`; use a path such as `/path/to/dir/**`)

This datasource does **NOT** support:

- specifying `numPartitions` (it will be determined by the datasource automatically)

- sampling (you can use `df.sample` later, but the sampling operator won't be pushed down to the datasource)

## How was this patch tested?

Unit tests.

## Benchmark

I benchmarked and compared the time cost of the old `ImageSchema.read` API and my image datasource.

**cluster**: 4 nodes, each with 64GB memory, 8 cores CPU

**test dataset**: Flickr8k_Dataset (about 8091 images)

**time cost**:

- My image datasource time (automatically generate 258 partitions): 38.04s

- `ImageSchema.read` time (set 16 partitions): 68.4s

- `ImageSchema.read` time (set 258 partitions): 90.6s

**time cost when doubling the number of images (cloning Flickr8k_Dataset and loading twice as many images)**:

- My image datasource time (automatically generate 515 partitions): 95.4s

- `ImageSchema.read` (set 32 partitions): 109s

- `ImageSchema.read` (set 515 partitions): 105s

So we can see that my image datasource implementation (this PR) brings some performance improvement compared with the old `ImageSchema.read` API.

Closes #22328 from WeichenXu123/image_datasource.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up of #22313 and aims to ignore the micro benchmark test, which takes over 2 minutes in Jenkins.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-sbt-hadoop-2.6/4939/consoleFull

## How was this patch tested?

The test case should be ignored in Jenkins.

```

[info] FilterPushdownBenchmark:

...

[info] - Pushdown benchmark with many filters !!! IGNORED !!!

```

Closes #22336 from dongjoon-hyun/SPARK-25306-2.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

The problem occurs because the stage object is removed from liveStages in AppStatusListener onStageCompletion. Because of this, any onTaskEnd event received after the onStageCompletion event does not update stage metrics.

The fix is to retain stage objects in liveStages until all tasks are complete.
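The retention rule can be sketched with a small stand-alone tracker. This is a simplified model under stated assumptions, not the `AppStatusListener` code: `LiveStageTracker`, `LiveStage`, `Tracker`, and the handler names are hypothetical, and a stage is dropped only when it is both completed and has no running tasks.

```scala
import scala.collection.mutable

object LiveStageTracker {
  // Hypothetical per-stage state: running task count plus a completion flag.
  final class LiveStage {
    var activeTasks: Int = 0
    var completed: Boolean = false
  }

  final class Tracker {
    private val liveStages = mutable.Map.empty[Int, LiveStage]

    def onTaskStart(stageId: Int): Unit = {
      val stage = liveStages.getOrElseUpdate(stageId, new LiveStage)
      stage.activeTasks += 1
    }

    // Completion alone no longer removes the stage; it only marks it.
    def onStageCompleted(stageId: Int): Unit =
      liveStages.get(stageId).foreach { stage =>
        stage.completed = true
        removeIfDone(stageId, stage)
      }

    // A late task end still finds the stage and can update its state.
    def onTaskEnd(stageId: Int): Unit =
      liveStages.get(stageId).foreach { stage =>
        stage.activeTasks -= 1
        removeIfDone(stageId, stage)
      }

    def isLive(stageId: Int): Boolean = liveStages.contains(stageId)

    private def removeIfDone(stageId: Int, stage: LiveStage): Unit =
      if (stage.completed && stage.activeTasks == 0) liveStages.remove(stageId)
  }
}
```

In this model, a stage with two started tasks stays live after one task end and the stage completion event, and is removed only when the second task end arrives.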

1. Fixed the reproducible example posted in the JIRA

2. Added unit test

Closes #22209 from ankuriitg/ankurgupta/SPARK-24415.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Add a new metric to measure the executor's process (JVM) CPU time.

## How was this patch tested?

Manually tested on a Spark cluster (see SPARK-25228 for an example screenshot).

Closes #22218 from LucaCanali/AddExecutrCPUTimeMetric.

Authored-by: LucaCanali <luca.canali@cern.ch>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/22259 .

Scala case class has a wide surface: apply, unapply, accessors, copy, etc.

In https://github.com/apache/spark/pull/22259 , we change the type of `UserDefinedFunction.inputTypes` from `Option[Seq[DataType]]` to `Option[Seq[Schema]]`. This breaks backward compatibility.

This PR changes the type back and uses a `var` to keep the new nullable info.

## How was this patch tested?

N/A

Closes #22319 from cloud-fan/revert.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Revert SPARK-24863 (#21819) and SPARK-24748 (#21721) as per discussion in #21721. We will revisit them when the data source v2 APIs are out.

## How was this patch tested?

Jenkins

Closes #22334 from zsxwing/revert-SPARK-24863-SPARK-24748.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The configuration parameter "spark.shuffle.service.enabled" is already defined in `package.scala` and is used in many places, so we can replace those usages with `SHUFFLE_SERVICE_ENABLED`.

This PR also unifies the configuration parameter "spark.shuffle.service.port" in the same way.

## How was this patch tested?

N/A

Closes #22306 from 10110346/unifiedserviceenable.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In both ORC data sources, the `createFilter` function has exponential time complexity due to its skewed filter tree generation. This PR aims to improve it by using a new `buildTree` function.
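The difference between a skewed and a balanced conjunction tree can be sketched with a toy filter type. The code below is an illustration under assumptions, not the ORC data source code: `FilterTree`, `Leaf`, `And`, `skewed`, `balanced`, and `depth` are hypothetical names, and the skew is assumed to come from a left-deep reduction over the filter list.

```scala
object FilterTree {
  sealed trait Filter
  final case class Leaf(name: String) extends Filter
  final case class And(left: Filter, right: Filter) extends Filter

  // Left-deep reduction: And(And(And(f1, f2), f3), f4), depth grows linearly.
  def skewed(filters: Seq[Filter]): Option[Filter] =
    filters.reduceOption((l, r) => And(l, r))

  // Split the list in half and combine recursively: depth grows logarithmically.
  def balanced(filters: Seq[Filter]): Option[Filter] = filters match {
    case Seq() => None
    case Seq(single) => Some(single)
    case _ =>
      val (left, right) = filters.splitAt(filters.length / 2)
      for (l <- balanced(left); r <- balanced(right)) yield And(l, r)
  }

  // Number of nodes on the longest root-to-leaf path.
  def depth(filter: Filter): Int = filter match {
    case Leaf(_) => 1
    case And(l, r) => 1 + math.max(depth(l), depth(r))
  }
}
```

For 30 leaf filters, the left-deep tree in this sketch has depth 30 while the balanced one has depth 6, so any processing whose cost compounds with nesting depth benefits from the balanced shape.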

**REPRODUCE**

```scala

// Create and read 1 row table with 1000 columns

sql("set spark.sql.orc.filterPushdown=true")

val selectExpr = (1 to 1000).map(i => s"id c$i")

spark.range(1).selectExpr(selectExpr: _*).write.mode("overwrite").orc("/tmp/orc")

print(s"With 0 filters, ")

spark.time(spark.read.orc("/tmp/orc").count)

// Increase the number of filters

(20 to 30).foreach { width =>

val whereExpr = (1 to width).map(i => s"c$i is not null").mkString(" and ")

print(s"With $width filters, ")

spark.time(spark.read.orc("/tmp/orc").where(whereExpr).count)

}

```

**RESULT**

```scala

With 0 filters, Time taken: 653 ms

With 20 filters, Time taken: 962 ms

With 21 filters, Time taken: 1282 ms

With 22 filters, Time taken: 1982 ms

With 23 filters, Time taken: 3855 ms

With 24 filters, Time taken: 6719 ms

With 25 filters, Time taken: 12669 ms

With 26 filters, Time taken: 25032 ms

With 27 filters, Time taken: 49585 ms

With 28 filters, Time taken: 98980 ms // over 1 min 38 seconds

With 29 filters, Time taken: 198368 ms // over 3 mins

With 30 filters, Time taken: 393744 ms // over 6 mins

```

**AFTER THIS PR**

```scala

With 0 filters, Time taken: 774 ms

With 20 filters, Time taken: 601 ms

With 21 filters, Time taken: 399 ms

With 22 filters, Time taken: 679 ms

With 23 filters, Time taken: 363 ms

With 24 filters, Time taken: 342 ms

With 25 filters, Time taken: 336 ms

With 26 filters, Time taken: 352 ms

With 27 filters, Time taken: 322 ms

With 28 filters, Time taken: 302 ms

With 29 filters, Time taken: 307 ms

With 30 filters, Time taken: 301 ms

```

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22313 from dongjoon-hyun/SPARK-25306.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

I made one pass over the barrier APIs added in Spark 2.4 and updated some scopes and docs. I will update the Python docs once the Scala doc has been reviewed.

One major issue is that `BarrierTaskContext` implements `TaskContextImpl`, which exposes some public methods. Internally there were also several direct references to `TaskContextImpl` methods instead of `TaskContext`. This PR moves some methods from `TaskContextImpl` to `TaskContext`, keeping them package private, and uses delegate methods to avoid inheriting `TaskContextImpl` and exposing unnecessary APIs.

TODOs:

- [x] scala doc

- [x] python doc (#22261).

Closes #22240 from mengxr/SPARK-25248.

Authored-by: Xiangrui Meng <meng@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

Previously, in `TakeOrderedAndProjectSuite`, the SparkSession was not recycled when the test suite finished.

## How was this patch tested?

N/A

Closes #22330 from jiangxb1987/SPARK-19355.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR integrates handling of `UnsafeArrayData` and `GenericArrayData` into one. The current `CodeGenerator.createUnsafeArray` handles only allocation of `UnsafeArrayData`.

This PR introduces a new method `createArrayData` that returns a code to allocate `UnsafeArrayData` or `GenericArrayData` and to assign a value into the allocated array.

This PR also reduces the size of generated code by calling a runtime helper.

This PR replaces `createUnsafeArray` with `createArrayData`. This PR also refactors `ArraySetLike` so that it can be used for `ArrayDistinct`, too.

This PR also refactors `ArrayDistinct` to use `ArrayBuilder`.

## How was this patch tested?

Existing tests

Closes #21912 from kiszk/SPARK-24962.

Lead-authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Co-authored-by: Takuya UESHIN <ueshin@happy-camper.st>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR fixes a problem where the `ArraysOverlap` function throws a `CompileException` with a non-nullable array type.

The following is the stack trace of the original problem:

```

Code generation of arrays_overlap([1,2,3], [4,5,3]) failed:

java.util.concurrent.ExecutionException: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 56, Column 11: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 56, Column 11: Expression "isNull_0" is not an rvalue

java.util.concurrent.ExecutionException: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 56, Column 11: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 56, Column 11: Expression "isNull_0" is not an rvalue

at com.google.common.util.concurrent.AbstractFuture$Sync.getValue(AbstractFuture.java:306)

at com.google.common.util.concurrent.AbstractFuture$Sync.get(AbstractFuture.java:293)

at com.google.common.util.concurrent.AbstractFuture.get(AbstractFuture.java:116)

at com.google.common.util.concurrent.Uninterruptibles.getUninterruptibly(Uninterruptibles.java:135)

at com.google.common.cache.LocalCache$Segment.getAndRecordStats(LocalCache.java:2410)

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2380)

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2342)

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2257)

at com.google.common.cache.LocalCache.get(LocalCache.java:4000)

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4004)

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:4874)

at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$.compile(CodeGenerator.scala:1305)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateMutableProjection$.create(GenerateMutableProjection.scala:143)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateMutableProjection$.create(GenerateMutableProjection.scala:48)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateMutableProjection$.create(GenerateMutableProjection.scala:32)

at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator.generate(CodeGenerator.scala:1260)

```

## How was this patch tested?

Added test in `CollectionExpressionSuite`.

Closes #22317 from kiszk/SPARK-25310.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Invoking the ArrayContains function with a non-nullable array type throws the following error in the code generation phase. Below is the error snippet.

```SQL

Code generation of array_contains([1,2,3], 1) failed:

java.util.concurrent.ExecutionException: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 40, Column 11: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 40, Column 11: Expression "isNull_0" is not an rvalue

java.util.concurrent.ExecutionException: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 40, Column 11: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 40, Column 11: Expression "isNull_0" is not an rvalue

at com.google.common.util.concurrent.AbstractFuture$Sync.getValue(AbstractFuture.java:306)

at com.google.common.util.concurrent.AbstractFuture$Sync.get(AbstractFuture.java:293)

at com.google.common.util.concurrent.AbstractFuture.get(AbstractFuture.java:116)

at com.google.common.util.concurrent.Uninterruptibles.getUninterruptibly(Uninterruptibles.java:135)

at com.google.common.cache.LocalCache$Segment.getAndRecordStats(LocalCache.java:2410)

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2380)

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2342)

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2257)

at com.google.common.cache.LocalCache.get(LocalCache.java:4000)

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4004)

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:4874)

at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$.compile(CodeGenerator.scala:1305)

```

## How was this patch tested?

Added test in CollectionExpressionSuite.

Closes #22315 from dilipbiswal/SPARK-25308.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Improve the build for Scala 2.12. The current sbt build fails on the subproject `repl`:

```

[info] Compiling 6 Scala sources to /Users/rendong/wdi/spark/repl/target/scala-2.12/classes...

[error] /Users/rendong/wdi/spark/repl/scala-2.11/src/main/scala/org/apache/spark/repl/SparkILoopInterpreter.scala:80: overriding lazy value importableSymbolsWithRenames in class ImportHandler of type List[(this.intp.global.Symbol, this.intp.global.Name)];

[error] lazy value importableSymbolsWithRenames needs `override' modifier

[error] lazy val importableSymbolsWithRenames: List[(Symbol, Name)] = {

[error] ^

[warn] /Users/rendong/wdi/spark/repl/src/main/scala/org/apache/spark/repl/SparkILoop.scala:53: variable addedClasspath in class ILoop is deprecated (since 2.11.0): use reset, replay or require to update class path

[warn] if (addedClasspath != "") {

[warn] ^

[warn] /Users/rendong/wdi/spark/repl/src/main/scala/org/apache/spark/repl/SparkILoop.scala:54: variable addedClasspath in class ILoop is deprecated (since 2.11.0): use reset, replay or require to update class path

[warn] settings.classpath append addedClasspath

[warn] ^

[warn] two warnings found

[error] one error found

[error] (repl/compile:compileIncremental) Compilation failed

[error] Total time: 93 s, completed 2018-9-3 10:07:26

```

## How was this patch tested?

```

./dev/change-scala-version.sh 2.12

## For Maven

./build/mvn -Pscala-2.12 [mvn commands]

## For SBT

sbt -Dscala.version=2.12.6

```

Closes #22310 from sadhen/SPARK-25298.

Authored-by: Darcy Shen <sadhen@zoho.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The "date function on DataFrame" test fails consistently on my laptop. In this PR I fix it by changing the way we compare the two timestamp values. With this change I am able to run the tests cleanly.

## How was this patch tested?

Fixed the failing test.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #22274 from dilipbiswal/r-sql-test-fix2.

## What changes were proposed in this pull request?

Remove test-2.10.jar and add test-2.12.jar.

## How was this patch tested?

```

$ sbt -Dscala-2.12

> ++ 2.12.6

> project hive

> testOnly *HiveSparkSubmitSuite -- -z "8489"

```

Closes #22308 from sadhen/SPARK-8489-FOLLOWUP.

Authored-by: Darcy Shen <sadhen@zoho.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Add the R version of array_intersect/array_except/array_union/shuffle

## How was this patch tested?

Add test in test_sparkSQL.R

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #22291 from huaxingao/spark-25007.

## What changes were proposed in this pull request?

The PR adds the lift measure to Association rules.
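For reference, the lift of a rule X => Y is conventionally defined as confidence(X => Y) divided by support(Y). A minimal sketch of that arithmetic from raw counts follows. `LiftMeasure` and its parameter names are hypothetical and are not the names used in the MLlib API.

```scala
object LiftMeasure {
  // confidence(X => Y) = freq(X and Y together) / freq(X)
  def confidence(freqUnion: Long, freqAntecedent: Long): Double =
    freqUnion.toDouble / freqAntecedent

  // lift(X => Y) = confidence(X => Y) / support(Y),
  // where support(Y) = freq(Y) / total number of transactions.
  def lift(
      freqUnion: Long,
      freqAntecedent: Long,
      freqConsequent: Long,
      numTransactions: Long): Double =
    confidence(freqUnion, freqAntecedent) / (freqConsequent.toDouble / numTransactions)
}
```

For example, with 100 transactions where X appears in 20, Y in 25, and both together in 10, the confidence is 0.5, the support of Y is 0.25, and the lift is 2.0. A lift above 1 indicates that X and Y co-occur more often than they would if independent.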

## How was this patch tested?

existing and modified UTs

Closes #22236 from mgaido91/SPARK-10697.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, when FDR is used for `ChiSqSelector` and no feature is selected, an exception is thrown because the max operation fails.

The PR fixes the problem by handling this case and returning an empty array in that case, as sklearn (which was the reference for the initial implementation of FDR) does.
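The empty-selection case can be sketched as below, assuming FDR selection follows the Benjamini-Hochberg procedure over per-feature p-values. `FdrSelection` and `selectByFdr` are hypothetical names; this is a stand-alone illustration, not the `ChiSqSelector` code.

```scala
object FdrSelection {
  // Returns the indices of the selected features, or an empty array
  // when no p-value passes its threshold.
  def selectByFdr(pValues: Array[Double], fdr: Double): Array[Int] = {
    val numFeatures = pValues.length
    // Pair each p-value with its feature index and sort by p-value.
    val sorted = pValues.zipWithIndex.sortBy { case (p, _) => p }
    // 0-based ranks whose p-value is within fdr * (rank + 1) / numFeatures.
    val passingRanks = sorted.zipWithIndex.collect {
      case ((p, _), rank) if p <= fdr * (rank + 1) / numFeatures => rank
    }
    if (passingRanks.isEmpty) {
      // Calling max on an empty collection would throw, so return early.
      Array.empty[Int]
    } else {
      sorted.take(passingRanks.max + 1).map { case (_, featureIndex) => featureIndex }
    }
  }
}
```

With p-values `0.9, 0.8, 0.7` and an FDR of 0.05, nothing passes and the result is empty instead of an exception.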

## How was this patch tested?

added UT

Closes #22303 from mgaido91/SPARK-25289.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

`BytesToBytesMapOnHeapSuite`.`randomizedStressTest` caused `OutOfMemoryError` on several test runs. Seems better to reduce memory usage in this test.

## How was this patch tested?

Unit tests.

Closes #22297 from viirya/SPARK-25290.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR addresses one of the TODOs in `GenerateUnsafeProjection`, "if the nullability of field is correct, we can use it to save null check", to simplify generated code.

When `nullable=false` in `DataType`, `GenerateUnsafeProjection` omits the null-check code from the generated Java code.

## How was this patch tested?

Added new test cases into `GenerateUnsafeProjectionSuite`

Closes #20637 from kiszk/SPARK-23466.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Fixes the issue brought up in https://github.com/GoogleCloudPlatform/spark-on-k8s-operator/issues/273 where the arguments were being comma-delimited, which was incorrect with respect to the PythonRunner and RRunner.

## How was this patch tested?

Modified unit test to test this change.

Author: Ilan Filonenko <if56@cornell.edu>

Closes #22257 from ifilonenko/SPARK-25264.

## What changes were proposed in this pull request?

I propose to remove the `parmap` method that accepts an execution context as a parameter. The method should be removed to eliminate deadlocks that can occur if `parmap` is called recursively on thread pools restricted by size.

Closes #22292 from MaxGekk/remove-overloaded-parmap.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Move the output verification of Explain test cases to a new suite ExplainSuite.

## How was this patch tested?

N/A

Closes #22300 from gatorsmile/test3200.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

As described in [SPARK-25261](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-25261), the unit of spark.executor.memory and spark.driver.memory is parsed as bytes in some cases if no unit is specified, while in https://spark.apache.org/docs/latest/configuration.html#application-properties they are described as MiB, which may lead to some misunderstandings.

## How was this patch tested?

N/A

Closes #22252 from ivoson/branch-correct-configuration.

Lead-authored-by: huangtengfei02 <huangtengfei02@baidu.com>

Co-authored-by: Huang Tengfei <tengfei.h@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, filter pushdown will not work if the Parquet schema and the Hive metastore schema are in different letter cases, even when spark.sql.caseSensitive is false.

For example:

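```scala
// Small Parquet row groups (8 MiB), so row-group filtering has an observable effect.
spark.sparkContext.hadoopConfiguration.setInt("parquet.block.size", 8 * 1024 * 1024)
// The Parquet files are written with a lowercase column name, "id".
spark.range(1, 40 * 1024 * 1024, 1, 1).sortWithinPartitions("id").write.parquet("/tmp/t")
// The table schema declares the same column in uppercase, "ID".
sql("CREATE TABLE t (ID LONG) USING parquet LOCATION '/tmp/t'")
sql("select * from t where id < 100L").write.csv("/tmp/id")
```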

Although Spark generates the filter "ID < 100L", it is not actually pushed down into Parquet, so Spark still does a full table scan when reading.

This PR provides case-insensitive field resolution to make it work.
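As a rough illustration of what case-insensitive field resolution can look like, here is a small sketch. It is not the code from this PR: the `resolve` helper, its parameters, and the choice to treat ambiguous matches as unresolved are all assumptions made for the example.

```scala
import java.util.Locale

object CaseInsensitiveResolution {
  // Returns the physical (file-level) field name that matches `requested`,
  // or None when there is no match or the match is ambiguous.
  def resolve(physicalFields: Seq[String], requested: String, caseSensitive: Boolean): Option[String] =
    if (caseSensitive) {
      physicalFields.find(_ == requested)
    } else {
      val key = requested.toLowerCase(Locale.ROOT)
      physicalFields.filter(_.toLowerCase(Locale.ROOT) == key) match {
        case Seq(single) => Some(single) // exactly one candidate: safe to use
        case _           => None         // zero or several candidates: do not guess
      }
    }
}
```

In this sketch a filter on `ID` resolves to the file's `id` column only in case-insensitive mode, and a file containing both `id` and `ID` resolves to nothing rather than picking one arbitrarily.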

Before: "ID < 100L" fails to be pushed down:

<img width="273" alt="screen shot 2018-08-23 at 10 08 26 pm" src="https://user-images.githubusercontent.com/2989575/44530558-40ef8b00-a721-11e8-8abc-7f97671590d3.png">

After: "ID < 100L" is pushed down successfully:

<img width="267" alt="screen shot 2018-08-23 at 10 08 40 pm" src="https://user-images.githubusercontent.com/2989575/44530567-44831200-a721-11e8-8634-e9f664b33d39.png">

## How was this patch tested?

Added UTs.

Closes #22197 from yucai/SPARK-25207.

Authored-by: yucai <yyu1@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Switch `org.apache.hive.service.server.HiveServer2` to register its shutdown callback with Spark's `ShutdownHookManager`, rather than directly with the Java Runtime callback.

This avoids race conditions in shutdown where the filesystem is shut down before the flush/write/rename of the event log has completed, particularly on object stores where the write and rename can be slow.
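The idea of routing callbacks through one ordered manager, instead of registering each one separately with the JVM, can be sketched as follows. `OrderedShutdownHooks` is a hypothetical class written for this illustration; it is not Spark's `ShutdownHookManager` and makes no claim about that class's API or priorities.

```scala
import scala.collection.mutable

final class OrderedShutdownHooks {
  private val hooks = mutable.ArrayBuffer.empty[(Int, () => Unit)]

  // Higher priority runs earlier.
  def add(priority: Int)(body: () => Unit): Unit = synchronized {
    hooks += ((priority, body))
  }

  // Runs every registered hook sequentially, in descending priority order.
  def runAll(): Unit = {
    val ordered = synchronized(hooks.sortBy { case (priority, _) => -priority }.toList)
    ordered.foreach { case (_, body) => body() }
  }

  // Installs a single JVM hook, so the callbacks never race each other.
  def install(): Unit =
    Runtime.getRuntime.addShutdownHook(new Thread(new Runnable {
      def run(): Unit = runAll()
    }))
}
```

Hooks registered directly with `Runtime.addShutdownHook` are started in an unspecified order and may run concurrently, so a single hook that runs callbacks in a defined order is one way to make sure a log flush finishes before the filesystem is closed.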

## How was this patch tested?

There's no explicit unit test for this, which is consistent with every other shutdown hook in the codebase.

* There's an implicit test when the scalatest process is halted.

* More manual/integration testing is needed.

HADOOP-15679 has added the ability to explicitly execute the Hadoop shutdown hook sequence which Spark uses; that could be stabilized for testing if desired, after which all the Spark hooks could be tested. Until then: external system tests only.

Author: Steve Loughran <stevel@hortonworks.com>

Closes #22186 from steveloughran/BUG/SPARK-25183-shutdown.

## What changes were proposed in this pull request?

### For `SPARK-5775 read array from partitioned_parquet_with_key_and_complextypes`:

Scala 2.12:

```
scala> (1 to 10).toString
res4: String = Range 1 to 10
```

Scala 2.11:

```
scala> (1 to 10).toString
res2: String = Range(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
```

And

```
def prepareAnswer(answer: Seq[Row], isSorted: Boolean): Seq[Row] = {
  val converted: Seq[Row] = answer.map(prepareRow)
  if (!isSorted) converted.sortBy(_.toString()) else converted
}
```

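Sorting with `sortBy(_.toString)` is not a good idea, because the resulting order depends on a string representation that differs between Scala versions.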
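A small sketch of why a string-based sort key is fragile: the order follows the characters of the rendering, not the values. The integers below are arbitrary illustration data, unrelated to the test suite.

```scala
object SortByToString {
  val rows: Seq[Int] = Seq(2, 10, 9)

  // Lexicographic order of the renderings: "10" < "2" < "9".
  val byString: Seq[Int] = rows.sortBy(_.toString)

  // Order of the values themselves.
  val byValue: Seq[Int] = rows.sorted
}
```

Because the order follows the rendering, any change in how a value prints (such as the `Range` output shown above) can reorder rows that contain it.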

### Other failures are caused by

```
Array(Int.box(1)).toSeq == Array(Double.box(1.0)).toSeq
```

It is false in Scala 2.12.2+ and true in 2.11.x, 2.12.0, and 2.12.1.
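For context, boxed numbers can be compared in two different ways, and the two disagree for an `Integer` and a `Double` holding the same numeric value. The sketch below only demonstrates those two notions of equality; that the sequence comparison switched from one to the other between Scala releases is an assumption, not something this PR states.

```scala
object BoxedEquality {
  val boxedInt: java.lang.Integer = Int.box(1)
  val boxedDouble: java.lang.Double = Double.box(1.0)

  // Java's Integer.equals only returns true for another Integer with the same value.
  val javaEquals: Boolean = boxedInt.equals(boxedDouble)

  // Scala's == on values typed as Any compares boxed numbers by numeric value.
  val scalaEquals: Boolean = (1: Any) == (1.0: Any)
}
```

A test that expects `Seq(1)` to equal `Seq(1.0)` therefore passes or fails depending on which comparison the collection uses internally.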

## How was this patch tested?

This is a patch on a specific unit test.

Closes #22264 from sadhen/SPARK25256.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Add an up-front check that `JIRA_USERNAME` and `JIRA_PASSWORD` have been set. If they haven't, ask the user whether they want to continue. This prevents the JIRA state update from failing at the very end of the process because the user forgot to set these environment variables.

## How was this patch tested?

I ran the script with environment vars set, and unset, to verify it works as specified.

Closes #22294 from erikerlandson/spark-25287.

Authored-by: Erik Erlandson <eerlands@redhat.com>

Signed-off-by: Erik Erlandson <eerlands@redhat.com>

## What changes were proposed in this pull request?

Add a PAM configuration to the k8s Dockerfile so that running `su` requires authentication into the wheel group.

## How was this patch tested?

Verified against CI that the PAM config succeeds and causes no regressions.

Closes #22285 from erikerlandson/spark-25275.

Authored-by: Erik Erlandson <eerlands@redhat.com>

Signed-off-by: Erik Erlandson <eerlands@redhat.com>

## What changes were proposed in this pull request?

Error messages from https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/183/

```
[INFO] --- scala-maven-plugin:3.2.2:compile (scala-compile-first) spark-repl_2.12 ---
[INFO] Using zinc server for incremental compilation
[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.
[info] Compiling 6 Scala sources to /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/repl/target/scala-2.12/classes...
[error] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/repl/scala-2.11/src/main/scala/org/apache/spark/repl/SparkILoopInterpreter.scala:80: overriding lazy value importableSymbolsWithRenames in class ImportHandler of type List[(this.intp.global.Symbol, this.intp.global.Name)];
[error] lazy value importableSymbolsWithRenames needs `override' modifier
[error] lazy val importableSymbolsWithRenames: List[(Symbol, Name)] = {
[error] ^
[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/repl/src/main/scala/org/apache/spark/repl/SparkILoop.scala:53: variable addedClasspath in class ILoop is deprecated (since 2.11.0): use reset, replay or require to update class path
[warn] if (addedClasspath != "") {
[warn] ^
[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/repl/src/main/scala/org/apache/spark/repl/SparkILoop.scala:54: variable addedClasspath in class ILoop is deprecated (since 2.11.0): use reset, replay or require to update class path
[warn] settings.classpath append addedClasspath
[warn] ^
[warn] two warnings found
[error] one error found
[error] Compile failed at Aug 29, 2018 5:28:22 PM [0.679s]
```

Re-add the profile for `scala-2.12`. Using `-Pscala-2.12` overrides `extra.source.dir` and `extra.testsource.dir` with two non-existent directories.

## How was this patch tested?

First, make sure it compiles.

```
dev/change-scala-version.sh 2.12
mvn -Pscala-2.12 -DskipTests compile install
```

Then, make a distribution to try the repl:

`./dev/make-distribution.sh --name custom-spark --tgz -Phadoop-2.7 -Phive -Pyarn -Pscala-2.12`

```
18/08/30 16:04:50 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Spark context Web UI available at http://172.16.131.140:4040
Spark context available as 'sc' (master = local[*], app id = local-1535616298812).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.0-SNAPSHOT
      /_/

Using Scala version 2.12.6 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_112)
Type in expressions to have them evaluated.
Type :help for more information.

scala> spark.sql("select percentile(key, 1) from values (1, 1),(2, 1) T(key, value)").show
+-------------------------------------+
|percentile(key, CAST(1 AS DOUBLE), 1)|
+-------------------------------------+
|                                  2.0|
+-------------------------------------+
```

Closes #22280 from sadhen/SPARK_24785_FOLLOWUP.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

`JavaColumnExpressionSuite.java` was added and `org.apache.spark.sql.ColumnExpressionSuite#test("isInCollection: Java Collection")` was removed.

It provides native Java tests for the method `org.apache.spark.sql.Column#isInCollection`.

Closes #22253 from aai95/isInCollectionJavaTest.

Authored-by: aai95 <aai95@yandex.ru>

Signed-off-by: DB Tsai <d_tsai@apple.com>

After SPARK-18371, it is guaranteed that there is at least one message per partition per batch when using the direct Kafka API and new messages exist in the topics. This change gives the user the option of setting that minimum instead of relying on the hard-coded limit of 1.
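The effect of a configurable minimum can be sketched as a simple clamp. The helper below is hypothetical: its name, its parameters, and the rounding are assumptions for illustration, not the code or configuration key introduced by this change.

```scala
object MinRate {
  // Hypothetical helper: number of messages one partition may read in one batch.
  // A rate-based limit that rounds down to 0 is raised to the configured minimum.
  def messagesPerPartition(secsPerBatch: Double, ratePerSec: Double, minPerPartition: Long): Long =
    math.max((secsPerBatch * ratePerSec).toLong, minPerPartition)
}
```

With a 0.1-second batch and a rate of 3 messages per second, the rate-based limit rounds down to 0, so the minimum decides how far the partition progresses; at higher rates the minimum has no effect.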

The related unit test is updated and some internal tests verified that the topic partitions with new messages will be progressed by the specified minimum.

Author: Reza Safi <rezasafi@cloudera.com>

Closes #22223 from rezasafi/streaminglag.

## What changes were proposed in this pull request?

This PR is a follow-up to #21087, based on [a discussion thread](https://github.com/apache/spark/pull/21087#discussion_r211080067). Since #21087 changed the condition of an `if` statement, the message in an exception is no longer consistent with the current behavior.

This PR updates the exception message.

## How was this patch tested?

Existing UTs

Closes #22269 from kiszk/SPARK-23997-followup.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

R tests require `testthat` v1.0.2. This PR describes how to install that version in the section http://spark.apache.org/docs/latest/building-spark.html#running-r-tests.

Closes #22272 from MaxGekk/r-testthat-doc.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Since https://github.com/apache/spark/pull/21696, Spark uses the Parquet schema instead of the Hive metastore schema to do pushdown.

That change avoids returning wrong records when the Hive metastore schema and the Parquet schema use different letter cases. This PR adds a test case for it.

More details:

https://issues.apache.org/jira/browse/SPARK-25206

## How was this patch tested?

unit tests

Closes #22267 from wangyum/SPARK-24716-TESTS.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fix the following Scala 2.12 build problems, which were introduced by #21320 and #11744:

```
$ sbt
> ++2.12.6
> project sql
> compile
...
[error] [warn] spark/sql/core/src/main/scala/org/apache/spark/sql/execution/ProjectionOverSchema.scala:41: match may not be exhaustive.
[error] It would fail on the following inputs: (_, ArrayType(_, _)), (_, _)
[error] [warn] getProjection(a.child).map(p => (p, p.dataType)).map {
[error] [warn]
[error] [warn] spark/sql/core/src/main/scala/org/apache/spark/sql/execution/ProjectionOverSchema.scala:52: match may not be exhaustive.
[error] It would fail on the following input: (_, _)
[error] [warn] getProjection(child).map(p => (p, p.dataType)).map {
[error] [warn]
...
```

And

```
$ sbt
> ++2.12.6
> project hive
> testOnly *ParquetMetastoreSuite
...
[error] /Users/rendong/wdi/spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala:22: object tools is not a member of package scala
[error] import scala.tools.nsc.Properties
[error] ^
[error] /Users/rendong/wdi/spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala:146: not found: value Properties
[error] val version = Properties.versionNumberString match {
[error] ^
[error] two errors found
...
```
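For readers unfamiliar with the "match may not be exhaustive" warning in the first listing, the pattern and one common way to silence it look roughly like this. The types below (`Ty`, `ArrayTy`, `StructTy`) are hypothetical stand-ins defined for the example, not Spark's `DataType` classes, and the fallback case is only one possible fix, not necessarily the one this PR applies.

```scala
object ExhaustiveMatch {
  sealed trait Ty
  case object IntTy extends Ty
  final case class ArrayTy(element: Ty) extends Ty
  final case class StructTy(fields: List[String]) extends Ty

  // Matching on a tuple that contains a sealed type lets the compiler check coverage.
  def fieldNames(pair: (String, Ty)): Option[List[String]] = pair match {
    case (_, StructTy(fields))          => Some(fields)
    case (_, ArrayTy(StructTy(fields))) => Some(fields)
    // Without a fallback, inputs such as (_, ArrayTy(IntTy)) are not covered
    // and the compiler can report "match may not be exhaustive".
    case _ => None
  }
}
```

When warnings are treated as errors, as the `[error] [warn]` prefix in the listing suggests, an uncovered input like this is enough to fail the build.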

## How was this patch tested?

Existing tests.

Closes #22260 from sadhen/fix_exhaustive_match.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

HyukjinKwon

## What changes were proposed in this pull request?

Add `from pyspark.util import _exception_message` to `python/pyspark/java_gateway.py`.

## How was this patch tested?

[flake8](http://flake8.pycqa.org) testing of https://github.com/apache/spark on Python 3.7.0

`$ flake8 . --count --select=E901,E999,F821,F822,F823 --show-source --statistics`

```
./python/pyspark/java_gateway.py:172:20: F821 undefined name '_exception_message'
emsg = _exception_message(e)
^
1 F821 undefined name '_exception_message'
1
```

Closes #22265 from cclauss/patch-2.

Authored-by: cclauss <cclauss@bluewin.ch>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>