Fetch-to-mem is guaranteed to fail if the message is bigger than 2 GB,

so we might as well use fetch-to-disk in that case. The message includes

some metadata in addition to the block data itself (in particular

UploadBlock has a lot of metadata), so we leave a little room.

Author: Imran Rashid <irashid@cloudera.com>

Closes#21474 from squito/SPARK-24297.

## What changes were proposed in this pull request?

In Spark "local" scheme means resources are already on the driver/executor nodes, this pr ignore the files with "local" scheme in `SparkContext.addFile` for fixing potential bug.

## How was this patch tested?

Existing tests.

Author: Yuanjian Li <xyliyuanjian@gmail.com>

Closes#21533 from xuanyuanking/SPARK-24195.

(1) Netty's ByteBuf cannot support data > 2gb. So to transfer data from a

ChunkedByteBuffer over the network, we use a custom version of

FileRegion which is backed by the ChunkedByteBuffer.

(2) On the receiving end, we need to expose all the data in a

FileSegmentManagedBuffer as a ChunkedByteBuffer. We do that by memory

mapping the entire file in chunks.

Added unit tests. Ran the randomized test a couple of hundred times on my laptop. Tests cover the equivalent of SPARK-24107 for the ChunkedByteBufferFileRegion. Also tested on a cluster with remote cache reads >2gb (in memory and on disk).

Author: Imran Rashid <irashid@cloudera.com>

Closes#21440 from squito/chunked_bb_file_region.

**Description**

As described in [SPARK-24755](https://issues.apache.org/jira/browse/SPARK-24755), when speculation is enabled, there is scenario that executor loss can cause task to not be resubmitted.

This patch changes the variable killedByOtherAttempt to keeps track of the taskId of tasks that are killed by other attempt. By doing this, we can still prevent resubmitting task killed by other attempt while resubmit successful attempt when executor lost.

**How was this patch tested?**

A UT is added based on the UT written by xuanyuanking with modification to simulate the scenario described in SPARK-24755.

Author: Hieu Huynh <“Hieu.huynh@oath.com”>

Closes#21729 from hthuynh2/SPARK_24755.

## What changes were proposed in this pull request?

When speculation is enabled,

TaskSetManager#markPartitionCompleted should write successful task duration to MedianHeap,

not just increase tasksSuccessful.

Otherwise when TaskSetManager#checkSpeculatableTasks,tasksSuccessful non-zero, but MedianHeap is empty.

Then throw an exception successfulTaskDurations.median java.util.NoSuchElementException: MedianHeap is empty.

Finally led to stopping SparkContext.

## How was this patch tested?

TaskSetManagerSuite.scala

unit test:[SPARK-24677] MedianHeap should not be empty when speculation is enabled

Author: sychen <sychen@ctrip.com>

Closes#21656 from cxzl25/fix_MedianHeap_empty.

## What changes were proposed in this pull request?

While looking through the codebase, it appeared that the scala code for RDD.cartesian does not have any tests for correctness. This adds a couple basic tests to verify cartesian yields correct values. While the implementation for RDD.cartesian is pretty simple, it always helps to have a few tests!

## How was this patch tested?

The new test cases pass, and the scala style tests from running dev/run-tests all pass.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Nihar Sheth <niharrsheth@gmail.com>

Closes#21765 from NiharS/cartesianTests.

It is corrected as per the configuration.

## What changes were proposed in this pull request?

IdleTimeout info used to print in the logs is taken based on the cacheBlock. If it is cacheBlock then cachedExecutorIdleTimeoutS is considered else executorIdleTimeoutS

## How was this patch tested?

Manual Test

spark-sql> cache table sample;

2018-05-15 14:44:02 INFO DAGScheduler:54 - Submitting 3 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[8] at processCmd at CliDriver.java:376) (first 15 tasks are for partitions Vector(0, 1, 2))

2018-05-15 14:44:02 INFO YarnScheduler:54 - Adding task set 0.0 with 3 tasks

2018-05-15 14:44:03 INFO ExecutorAllocationManager:54 - Requesting 1 new executor because tasks are backlogged (new desired total will be 1)

...

...

2018-05-15 14:46:10 INFO YarnClientSchedulerBackend:54 - Actual list of executor(s) to be killed is 1

2018-05-15 14:46:10 INFO **ExecutorAllocationManager:54 - Removing executor 1 because it has been idle for 120 seconds (new desired total will be 0)**

2018-05-15 14:46:11 INFO YarnSchedulerBackend$YarnDriverEndpoint:54 - Disabling executor 1.

2018-05-15 14:46:11 INFO DAGScheduler:54 - Executor lost: 1 (epoch 1)

Author: sandeep-katta <sandeep.katta2007@gmail.com>

Closes#21565 from sandeep-katta/loginfoBug.

## What changes were proposed in this pull request?

In the PR, I propose to output an warning if the `addFile()` or `addJar()` methods are callled more than once for the same path. Currently, overwriting of already added files is not supported. New comments and warning are reflected the existing behaviour.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes#21771 from MaxGekk/warning-on-adding-file.

## What changes were proposed in this pull request?

The `WholeTextFileInputFormat` determines the `maxSplitSize` for the file/s being read using the `wholeTextFiles` method. While this works well for large files, for smaller files where the maxSplitSize is smaller than the defaults being used with configs like hive-site.xml or explicitly passed in the form of `mapreduce.input.fileinputformat.split.minsize.per.node` or `mapreduce.input.fileinputformat.split.minsize.per.rack` , it just throws up an exception.

```java

java.io.IOException: Minimum split size pernode 123456 cannot be larger than maximum split size 9962

at org.apache.hadoop.mapreduce.lib.input.CombineFileInputFormat.getSplits(CombineFileInputFormat.java:200)

at org.apache.spark.rdd.WholeTextFileRDD.getPartitions(WholeTextFileRDD.scala:50)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2096)

at org.apache.spark.rdd.RDD.count(RDD.scala:1158)

... 48 elided

`

This change checks the maxSplitSize against the minSplitSizePerNode and minSplitSizePerRack and set them if `maxSplitSize < minSplitSizePerNode/Rack`

## How was this patch tested?

Test manually setting the conf while launching the job and added unit test.

Author: Dhruve Ashar <dhruveashar@gmail.com>

Closes#21601 from dhruve/bug/SPARK-24610.

## What changes were proposed in this pull request?

This PR enables a Java bytecode check tool [spotbugs](https://spotbugs.github.io/) to avoid possible integer overflow at multiplication. When an violation is detected, the build process is stopped.

Due to the tool limitation, some other checks will be enabled. In this PR, [these patterns](http://spotbugs-in-kengo-toda.readthedocs.io/en/lqc-list-detectors/detectors.html#findpuzzlers) in `FindPuzzlers` can be detected.

This check is enabled at `compile` phase. Thus, `mvn compile` or `mvn package` launches this check.

## How was this patch tested?

Existing UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#21542 from kiszk/SPARK-24529.

## What changes were proposed in this pull request?

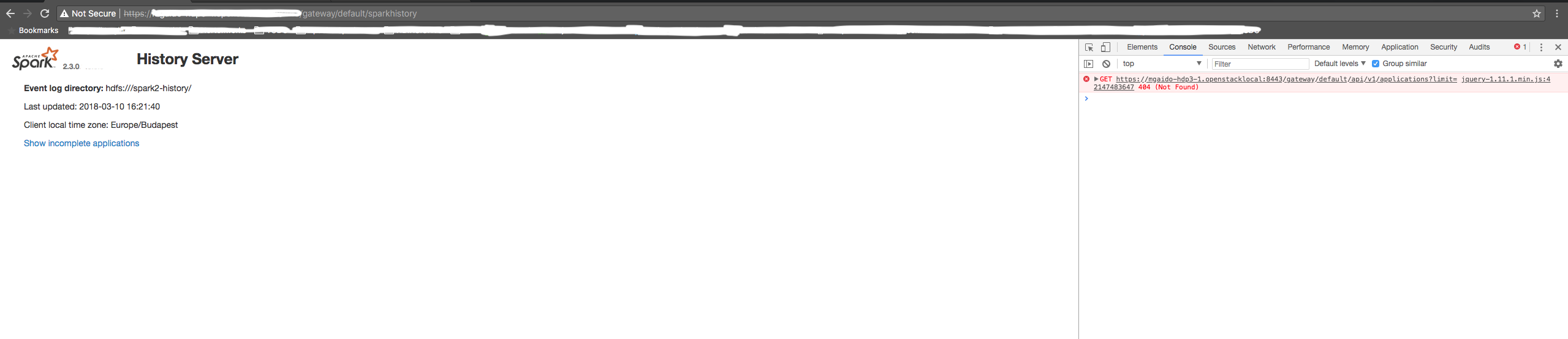

Added check for 404, to avoid json parsing on not found response and to avoid returning malformed or bad request when it was a not found http response.

Not sure if I need to add an additional check on non json response [if(connection.getHeaderField("Content-Type").contains("text/html")) then exception] as non-json is a subset of malformed json and covered in flow.

## How was this patch tested?

./dev/run-tests

Author: Rekha Joshi <rekhajoshm@gmail.com>

Closes#21684 from rekhajoshm/SPARK-24470.

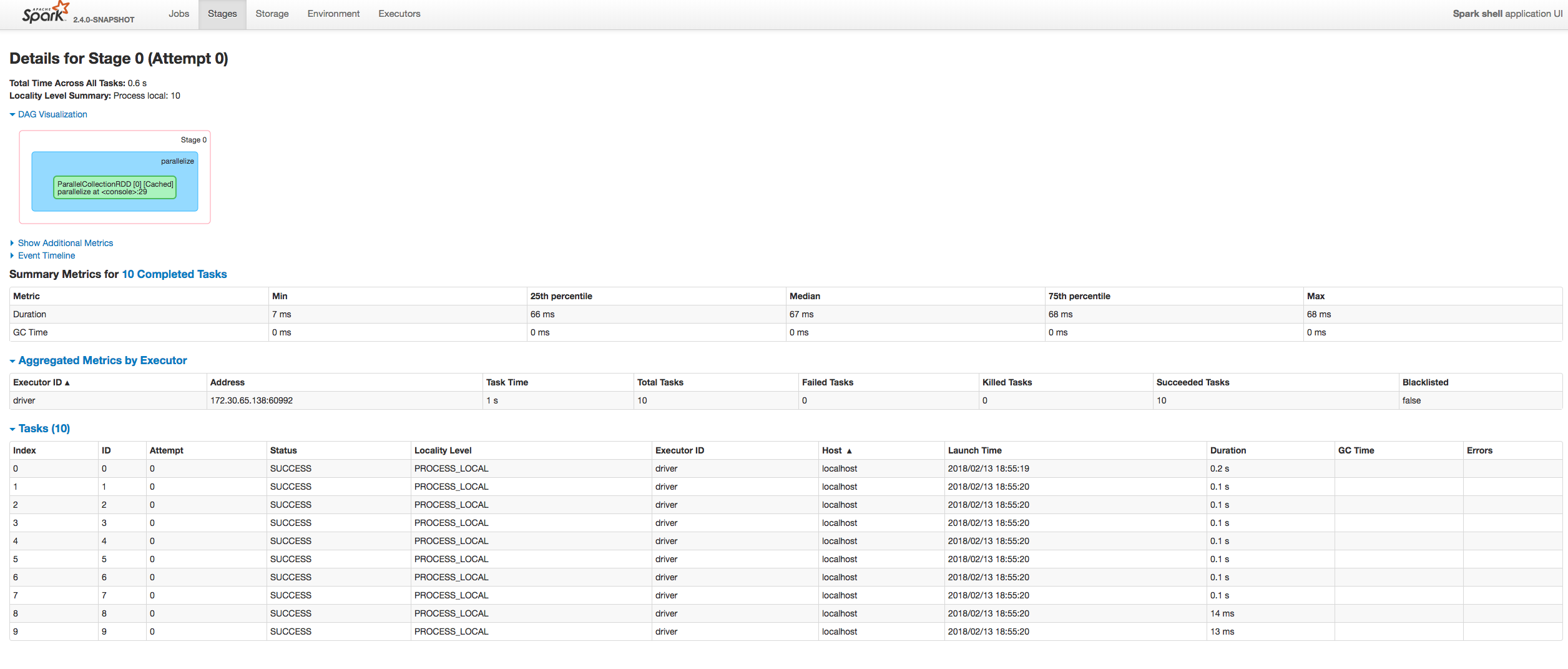

## What changes were proposed in this pull request?

Currently, `BlockRDD.getPreferredLocations` only get hosts info of blocks, which results in subsequent schedule level is not better than 'NODE_LOCAL'. We can just make a small changes, the schedule level can be improved to 'PROCESS_LOCAL'

## How was this patch tested?

manual test

Author: sharkdtu <sharkdtu@tencent.com>

Closes#21658 from sharkdtu/master.

## What changes were proposed in this pull request?

In the case of getting tokens via customized `ServiceCredentialProvider`, it is required that `ServiceCredentialProvider` be available in local spark-submit process classpath. In this case, all the configured remote sources should be forced to download to local.

For the ease of using this configuration, here propose to add wildcard '*' support to `spark.yarn.dist.forceDownloadSchemes`, also clarify the usage of this configuration.

## How was this patch tested?

New UT added.

Author: jerryshao <sshao@hortonworks.com>

Closes#21633 from jerryshao/SPARK-21917-followup.

This adds an option to event logging to include the long form of the callsite instead of the short form.

Author: Michael Mior <mmior@uwaterloo.ca>

Closes#21433 from michaelmior/long-callsite.

## What changes were proposed in this pull request?

Upgrade ASM to 6.1 to support JDK9+

## How was this patch tested?

Existing tests.

Author: DB Tsai <d_tsai@apple.com>

Closes#21459 from dbtsai/asm.

## What changes were proposed in this pull request?

Make SparkSubmit pass in the main class even if `SparkLauncher.NO_RESOURCE` is the primary resource.

## How was this patch tested?

New integration test written to capture this case.

Author: mcheah <mcheah@palantir.com>

Closes#21660 from mccheah/fix-k8s-no-resource.

This PR use spark.network.timeout in place of spark.storage.blockManagerSlaveTimeoutMs when it is not configured, as configuration doc said

manual test

Author: xueyu <278006819@qq.com>

Closes#21575 from xueyumusic/slaveTimeOutConfig.

## What changes were proposed in this pull request?

Add a new test suite to test RecordBinaryComparator.

## How was this patch tested?

New test suite.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#21570 from jiangxb1987/rbc-test.

## What changes were proposed in this pull request?

Updated URL/href links to include a '/' before '?id' to make links consistent and avoid http 302 redirect errors within UI port 4040 tabs.

## How was this patch tested?

Built a runnable distribution and executed jobs. Validated that http 302 redirects are no longer encountered when clicking on links within UI port 4040 tabs.

Author: Steven Kallman <SJKallmangmail.com>

Author: Kallman, Steven <Steven.Kallman@CapitalOne.com>

Closes#21600 from SJKallman/{Spark-24553}{WEB-UI}-redirect-href-fixes.

This passes the unique task attempt id instead of attempt number to v2 data sources because attempt number is reused when stages are retried. When attempt numbers are reused, sources that track data by partition id and attempt number may incorrectly clean up data because the same attempt number can be both committed and aborted.

For v1 / Hadoop writes, generate a unique ID based on available attempt numbers to avoid a similar problem.

Closes#21558

Author: Marcelo Vanzin <vanzin@cloudera.com>

Author: Ryan Blue <blue@apache.org>

Closes#21606 from vanzin/SPARK-24552.2.

## What changes were proposed in this pull request?

This pr modified JDBC datasource code to verify and normalize a partition column based on the JDBC resolved schema before building `JDBCRelation`.

Closes#20370

## How was this patch tested?

Added tests in `JDBCSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#21379 from maropu/SPARK-24327.

## What changes were proposed in this pull request?

In our distribution, because we don't do such fine-grained access control of config file, also configuration file is world readable shared between different components, so password may leak to different users.

Hadoop credential provider API support storing password in a secure way, in which Spark could read it in a secure way, so here propose to add support of using credential provider API to get password.

## How was this patch tested?

Adding tests and verified locally.

Author: jerryshao <sshao@hortonworks.com>

Closes#21548 from jerryshao/SPARK-24518.

**Problem**

MapStatus uses hardcoded value of 2000 partitions to determine if it should use highly compressed map status. We should make it configurable to allow users to more easily tune their jobs with respect to this without having for them to modify their code to change the number of partitions. Note we can leave this as an internal/undocumented config for now until we have more advise for the users on how to set this config.

Some of my reasoning:

The config gives you a way to easily change something without the user having to change code, redeploy jar, and then run again. You can simply change the config and rerun. It also allows for easier experimentation. Changing the # of partitions has other side affects, whether good or bad is situation dependent. It can be worse are you could be increasing # of output files when you don't want to be, affects the # of tasks needs and thus executors to run in parallel, etc.

There have been various talks about this number at spark summits where people have told customers to increase it to be 2001 partitions. Note if you just do a search for spark 2000 partitions you will fine various things all talking about this number. This shows that people are modifying their code to take this into account so it seems to me having this configurable would be better.

Once we have more advice for users we could expose this and document information on it.

**What changes were proposed in this pull request?**

I make the hardcoded value mentioned above to be configurable under the name _SHUFFLE_MIN_NUM_PARTS_TO_HIGHLY_COMPRESS_, which has default value to be 2000. Users can set it to the value they want by setting the property name _spark.shuffle.minNumPartitionsToHighlyCompress_

**How was this patch tested?**

I wrote a unit test to make sure that the default value is 2000, and _IllegalArgumentException_ will be thrown if user set it to a non-positive value. The unit test also checks that highly compressed map status is correctly used when the number of partition is greater than _SHUFFLE_MIN_NUM_PARTS_TO_HIGHLY_COMPRESS_.

Author: Hieu Huynh <“Hieu.huynh@oath.com”>

Closes#21527 from hthuynh2/spark_branch_1.

When an output stage is retried, it's possible that tasks from the previous

attempt are still running. In that case, there would be a new task for the

same partition in the new attempt, and the coordinator would allow both

tasks to commit their output since it did not keep track of stage attempts.

The change adds more information to the stage state tracked by the coordinator,

so that only one task is allowed to commit the output in the above case.

The stage state in the coordinator is also maintained across stage retries,

so that a stray speculative task from a previous stage attempt is not allowed

to commit.

This also removes some code added in SPARK-18113 that allowed for duplicate

commit requests; with the RPC code used in Spark 2, that situation cannot

happen, so there is no need to handle it.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#21577 from vanzin/SPARK-24552.

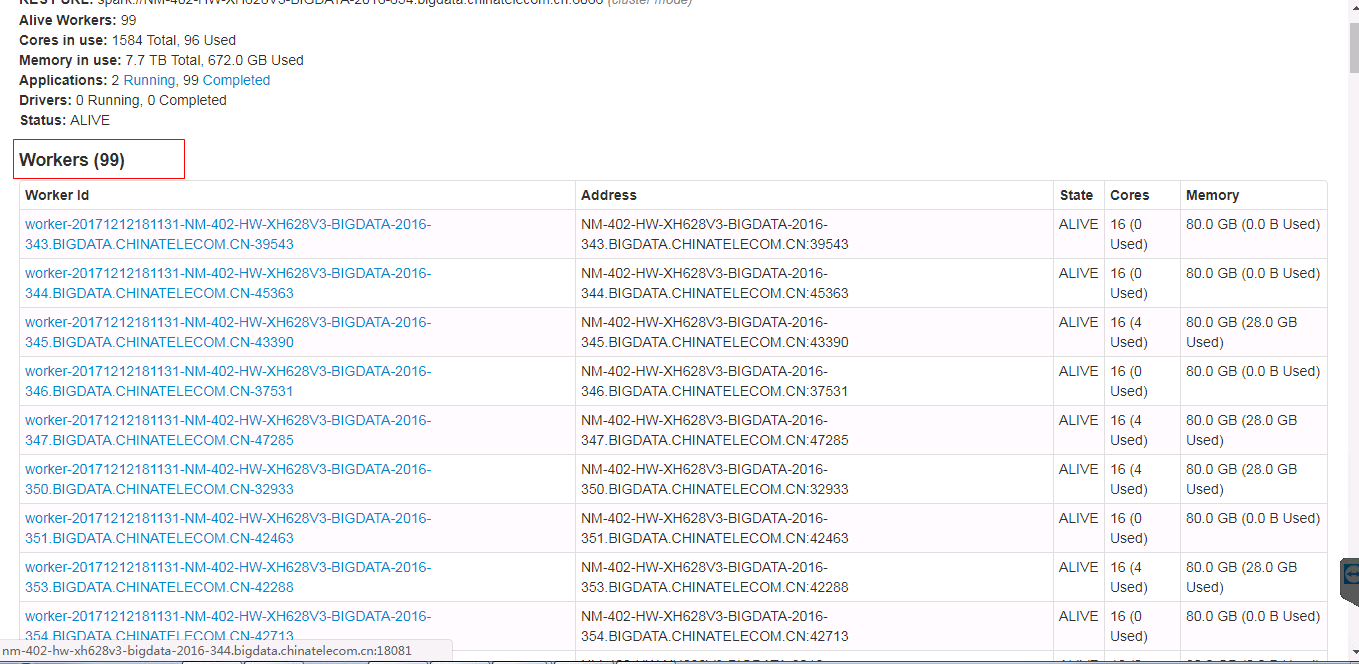

## What changes were proposed in this pull request?

This change extends YARN resource allocation handling with blacklisting functionality.

This handles cases when node is messed up or misconfigured such that a container won't launch on it. Before this change backlisting only focused on task execution but this change introduces YarnAllocatorBlacklistTracker which tracks allocation failures per host (when enabled via "spark.yarn.blacklist.executor.launch.blacklisting.enabled").

## How was this patch tested?

### With unit tests

Including a new suite: YarnAllocatorBlacklistTrackerSuite.

#### Manually

It was tested on a cluster by deleting the Spark jars on one of the node.

#### Behaviour before these changes

Starting Spark as:

```

spark2-shell --master yarn --deploy-mode client --num-executors 4 --conf spark.executor.memory=4g --conf "spark.yarn.max.executor.failures=6"

```

Log is:

```

18/04/12 06:49:36 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 11, (reason: Max number of executor failures (6) reached)

18/04/12 06:49:39 INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with FAILED (diag message: Max number of executor failures (6) reached)

18/04/12 06:49:39 INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

18/04/12 06:49:39 INFO yarn.ApplicationMaster: Deleting staging directory hdfs://apiros-1.gce.test.com:8020/user/systest/.sparkStaging/application_1523459048274_0016

18/04/12 06:49:39 INFO util.ShutdownHookManager: Shutdown hook called

```

#### Behaviour after these changes

Starting Spark as:

```

spark2-shell --master yarn --deploy-mode client --num-executors 4 --conf spark.executor.memory=4g --conf "spark.yarn.max.executor.failures=6" --conf "spark.yarn.blacklist.executor.launch.blacklisting.enabled=true"

```

And the log is:

```

18/04/13 05:37:43 INFO yarn.YarnAllocator: Will request 1 executor container(s), each with 1 core(s) and 4505 MB memory (including 409 MB of overhead)

18/04/13 05:37:43 INFO yarn.YarnAllocator: Submitted 1 unlocalized container requests.

18/04/13 05:37:43 INFO yarn.YarnAllocator: Launching container container_1523459048274_0025_01_000008 on host apiros-4.gce.test.com for executor with ID 6

18/04/13 05:37:43 INFO yarn.YarnAllocator: Received 1 containers from YARN, launching executors on 1 of them.

18/04/13 05:37:43 INFO yarn.YarnAllocator: Completed container container_1523459048274_0025_01_000007 on host: apiros-4.gce.test.com (state: COMPLETE, exit status: 1)

18/04/13 05:37:43 INFO yarn.YarnAllocatorBlacklistTracker: blacklisting host as YARN allocation failed: apiros-4.gce.test.com

18/04/13 05:37:43 INFO yarn.YarnAllocatorBlacklistTracker: adding nodes to YARN application master's blacklist: List(apiros-4.gce.test.com)

18/04/13 05:37:43 WARN yarn.YarnAllocator: Container marked as failed: container_1523459048274_0025_01_000007 on host: apiros-4.gce.test.com. Exit status: 1. Diagnostics: Exception from container-launch.

Container id: container_1523459048274_0025_01_000007

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:604)

at org.apache.hadoop.util.Shell.run(Shell.java:507)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:789)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:213)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

```

Where the most important part is:

```

18/04/13 05:37:43 INFO yarn.YarnAllocatorBlacklistTracker: blacklisting host as YARN allocation failed: apiros-4.gce.test.com

18/04/13 05:37:43 INFO yarn.YarnAllocatorBlacklistTracker: adding nodes to YARN application master's blacklist: List(apiros-4.gce.test.com)

```

And execution was continued (no shutdown called).

### Testing the backlisting of the whole cluster

Starting Spark with YARN blacklisting enabled then removing a the Spark core jar one by one from all the cluster nodes. Then executing a simple spark job which fails checking the yarn log the expected exit status is contained:

```

18/06/15 01:07:10 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 11, (reason: Due to executor failures all available nodes are blacklisted)

18/06/15 01:07:13 INFO util.ShutdownHookManager: Shutdown hook called

```

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Closes#21068 from attilapiros/SPARK-16630.

## What changes were proposed in this pull request?

When a `union` is invoked on several RDDs of which one is an empty RDD, the result of the operation is a `UnionRDD`. This causes an unneeded extra-shuffle when all the other RDDs have the same partitioning.

The PR ignores incoming empty RDDs in the union method.

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21333 from mgaido91/SPARK-23778.

## What changes were proposed in this pull request?

This PR fixes possible overflow in int add or multiply. In particular, their overflows in multiply are detected by [Spotbugs](https://spotbugs.github.io/)

The following assignments may cause overflow in right hand side. As a result, the result may be negative.

```

long = int * int

long = int + int

```

To avoid this problem, this PR performs cast from int to long in right hand side.

## How was this patch tested?

Existing UTs.

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#21481 from kiszk/SPARK-24452.

`WebUI` defines `addStaticHandler` that web UIs don't use (and simply introduce duplication). Let's clean them up and remove duplications.

Local build and waiting for Jenkins

Author: Jacek Laskowski <jacek@japila.pl>

Closes#21510 from jaceklaskowski/SPARK-24490-Use-WebUI.addStaticHandler.

## What changes were proposed in this pull request?

Previously, the scheduler backend was maintaining state in many places, not only for reading state but also writing to it. For example, state had to be managed in both the watch and in the executor allocator runnable. Furthermore, one had to keep track of multiple hash tables.

We can do better here by:

1. Consolidating the places where we manage state. Here, we take inspiration from traditional Kubernetes controllers. These controllers tend to follow a level-triggered mechanism. This means that the controller will continuously monitor the API server via watches and polling, and on periodic passes, the controller will reconcile the current state of the cluster with the desired state. We implement this by introducing the concept of a pod snapshot, which is a given state of the executors in the Kubernetes cluster. We operate periodically on snapshots. To prevent overloading the API server with polling requests to get the state of the cluster (particularly for executor allocation where we want to be checking frequently to get executors to launch without unbearably bad latency), we use watches to populate snapshots by applying observed events to a previous snapshot to get a new snapshot. Whenever we do poll the cluster, the polled state replaces any existing snapshot - this ensures eventual consistency and mirroring of the cluster, as is desired in a level triggered architecture.

2. Storing less specialized in-memory state in general. Previously we were creating hash tables to represent the state of executors. Instead, it's easier to represent state solely by the snapshots.

## How was this patch tested?

Integration tests should test there's no regressions end to end. Unit tests to be updated, in particular focusing on different orderings of events, particularly accounting for when events come in unexpected ordering.

Author: mcheah <mcheah@palantir.com>

Closes#21366 from mccheah/event-queue-driven-scheduling.

## What changes were proposed in this pull request?

We don't require specific ordering of the input data, the sort action is not necessary and misleading.

## How was this patch tested?

Existing test suite.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#21536 from jiangxb1987/sorterSuite.

## What changes were proposed in this pull request?

This patch measures and logs elapsed time for each operation which communicate with file system (mostly remote HDFS in production) in HDFSBackedStateStoreProvider to help investigating any latency issue.

## How was this patch tested?

Manually tested.

Author: Jungtaek Lim <kabhwan@gmail.com>

Closes#21506 from HeartSaVioR/SPARK-24485.

## What changes were proposed in this pull request?

This PR enables using a grouped aggregate pandas UDFs as window functions. The semantics is the same as using SQL aggregation function as window functions.

```

>>> from pyspark.sql.functions import pandas_udf, PandasUDFType

>>> from pyspark.sql import Window

>>> df = spark.createDataFrame(

... [(1, 1.0), (1, 2.0), (2, 3.0), (2, 5.0), (2, 10.0)],

... ("id", "v"))

>>> pandas_udf("double", PandasUDFType.GROUPED_AGG)

... def mean_udf(v):

... return v.mean()

>>> w = Window.partitionBy('id')

>>> df.withColumn('mean_v', mean_udf(df['v']).over(w)).show()

+---+----+------+

| id| v|mean_v|

+---+----+------+

| 1| 1.0| 1.5|

| 1| 2.0| 1.5|

| 2| 3.0| 6.0|

| 2| 5.0| 6.0|

| 2|10.0| 6.0|

+---+----+------+

```

The scope of this PR is somewhat limited in terms of:

(1) Only supports unbounded window, which acts essentially as group by.

(2) Only supports aggregation functions, not "transform" like window functions (n -> n mapping)

Both of these are left as future work. Especially, (1) needs careful thinking w.r.t. how to pass rolling window data to python efficiently. (2) is a bit easier but does require more changes therefore I think it's better to leave it as a separate PR.

## How was this patch tested?

WindowPandasUDFTests

Author: Li Jin <ice.xelloss@gmail.com>

Closes#21082 from icexelloss/SPARK-22239-window-udf.

## What changes were proposed in this pull request?

Currently, `spark.ui.filters` are not applied to the handlers added after binding the server. This means that every page which is added after starting the UI will not have the filters configured on it. This can allow unauthorized access to the pages.

The PR adds the filters also to the handlers added after the UI starts.

## How was this patch tested?

manual tests (without the patch, starting the thriftserver with `--conf spark.ui.filters=org.apache.hadoop.security.authentication.server.AuthenticationFilter --conf spark.org.apache.hadoop.security.authentication.server.AuthenticationFilter.params="type=simple"` you can access `http://localhost:4040/sqlserver`; with the patch, 401 is the response as for the other pages).

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21523 from mgaido91/SPARK-24506.

## What changes were proposed in this pull request?

When user create a aggregator object in scala and pass the aggregator to Spark Dataset's agg() method, Spark's will initialize TypedAggregateExpression with the nodeName field as aggregator.getClass.getSimpleName. However, getSimpleName is not safe in scala environment, depending on how user creates the aggregator object. For example, if the aggregator class full qualified name is "com.my.company.MyUtils$myAgg$2$", the getSimpleName will throw java.lang.InternalError "Malformed class name". This has been reported in scalatest https://github.com/scalatest/scalatest/pull/1044 and discussed in many scala upstream jiras such as SI-8110, SI-5425.

To fix this issue, we follow the solution in https://github.com/scalatest/scalatest/pull/1044 to add safer version of getSimpleName as a util method, and TypedAggregateExpression will invoke this util method rather than getClass.getSimpleName.

## How was this patch tested?

added unit test

Author: Fangshi Li <fli@linkedin.com>

Closes#21276 from fangshil/SPARK-24216.

## What changes were proposed in this pull request?

When HadoopMapRedCommitProtocol is used (e.g., when using saveAsTextFile() or

saveAsHadoopFile() with RDDs), it's not easy to determine which output committer

class was used, so this PR simply logs the class that was used, similarly to what

is done in SQLHadoopMapReduceCommitProtocol.

## How was this patch tested?

Built Spark then manually inspected logging when calling saveAsTextFile():

```scala

scala> sc.setLogLevel("INFO")

scala> sc.textFile("README.md").saveAsTextFile("/tmp/out")

...

18/05/29 10:06:20 INFO HadoopMapRedCommitProtocol: Using output committer class org.apache.hadoop.mapred.FileOutputCommitter

```

Author: Jonathan Kelly <jonathak@amazon.com>

Closes#21452 from ejono/master.

## What changes were proposed in this pull request?

Introducing Python Bindings for PySpark.

- [x] Running PySpark Jobs

- [x] Increased Default Memory Overhead value

- [ ] Dependency Management for virtualenv/conda

## How was this patch tested?

This patch was tested with

- [x] Unit Tests

- [x] Integration tests with [this addition](https://github.com/apache-spark-on-k8s/spark-integration/pull/46)

```

KubernetesSuite:

- Run SparkPi with no resources

- Run SparkPi with a very long application name.

- Run SparkPi with a master URL without a scheme.

- Run SparkPi with an argument.

- Run SparkPi with custom labels, annotations, and environment variables.

- Run SparkPi with a test secret mounted into the driver and executor pods

- Run extraJVMOptions check on driver

- Run SparkRemoteFileTest using a remote data file

- Run PySpark on simple pi.py example

- Run PySpark with Python2 to test a pyfiles example

- Run PySpark with Python3 to test a pyfiles example

Run completed in 4 minutes, 28 seconds.

Total number of tests run: 11

Suites: completed 2, aborted 0

Tests: succeeded 11, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

Author: Ilan Filonenko <if56@cornell.edu>

Author: Ilan Filonenko <ifilondz@gmail.com>

Closes#21092 from ifilonenko/master.

change runTasks to submitTasks in the TaskSchedulerImpl.scala 's comment

Author: xueyu <xueyu@yidian-inc.com>

Author: Xue Yu <278006819@qq.com>

Closes#21485 from xueyumusic/fixtypo1.

## What changes were proposed in this pull request?

Currently we only clean up the local directories on application removed. However, when executors die and restart repeatedly, many temp files are left untouched in the local directories, which is undesired behavior and could cause disk space used up gradually.

We can detect executor death in the Worker, and clean up the non-shuffle files (files not ended with ".index" or ".data") in the local directories, we should not touch the shuffle files since they are expected to be used by the external shuffle service.

Scope of this PR is limited to only implement the cleanup logic on a Standalone cluster, we defer to experts familiar with other cluster managers(YARN/Mesos/K8s) to determine whether it's worth to add similar support.

## How was this patch tested?

Add new test suite to cover.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#21390 from jiangxb1987/cleanupNonshuffleFiles.

This PR adds a debugging log for YARN-specific credential providers which is loaded by service loader mechanism.

It took me a while to debug if it's actually loaded or not. I had to explicitly set the deprecated configuration and check if that's actually being loaded.

The change scope is manually tested. Logs are like:

```

Using the following builtin delegation token providers: hadoopfs, hive, hbase.

Using the following YARN-specific credential providers: yarn-test.

```

Author: hyukjinkwon <gurwls223@apache.org>

Closes#21466 from HyukjinKwon/minor-log.

Change-Id: I18e2fb8eeb3289b148f24c47bb3130a560a881cf

## What changes were proposed in this pull request?

This adds a new API `TaskContext.getLocalProperty(key)` to the Python TaskContext. It mirrors the Java TaskContext API of returning a string value if the key exists, or None if the key does not exist.

## How was this patch tested?

New test added.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes#21437 from tdas/SPARK-24397.

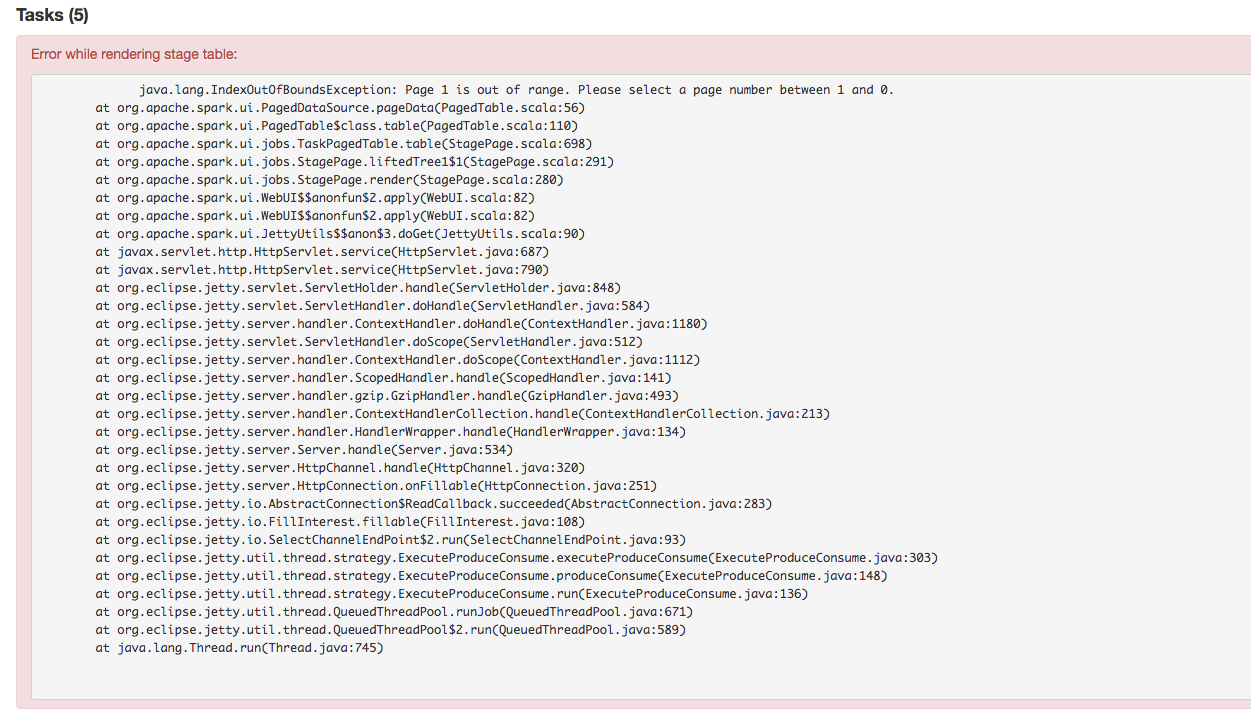

This change takes into account all non-pending tasks when calculating

the number of tasks to be shown. This also means that when the stage

is pending, the task table (or, in fact, most of the data in the stage

page) will not be rendered.

I also fixed the label when the known number of tasks is larger than

the recorded number of tasks (it was inverted).

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#21457 from vanzin/SPARK-24414.

As discussed separately, this avoids the possibility of XSS on certain request param keys.

CC vanzin

Author: Sean Owen <srowen@gmail.com>

Closes#21464 from srowen/XSS2.

## What changes were proposed in this pull request?

Improve the exception messages when retrieving Spark conf values to include the key name when the value is invalid.

## How was this patch tested?

Unit tests for all get* operations in SparkConf that require a specific value format

Author: William Sheu <william.sheu@databricks.com>

Closes#21454 from PenguinToast/SPARK-24337-spark-config-errors.

## What changes were proposed in this pull request?

In client side before context initialization specifically, .py file doesn't work in client side before context initialization when the application is a Python file. See below:

```

$ cat /home/spark/tmp.py

def testtest():

return 1

```

This works:

```

$ cat app.py

import pyspark

pyspark.sql.SparkSession.builder.getOrCreate()

import tmp

print("************************%s" % tmp.testtest())

$ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py

...

************************1

```

but this doesn't:

```

$ cat app.py

import pyspark

import tmp

pyspark.sql.SparkSession.builder.getOrCreate()

print("************************%s" % tmp.testtest())

$ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py

Traceback (most recent call last):

File "/home/spark/spark/app.py", line 2, in <module>

import tmp

ImportError: No module named tmp

```

### How did it happen?

In client mode specifically, the paths are being added into PythonRunner as are:

628c7b5179/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala (L430)628c7b5179/core/src/main/scala/org/apache/spark/deploy/PythonRunner.scala (L49-L88)

The problem here is, .py file shouldn't be added as are since `PYTHONPATH` expects a directory or an archive like zip or egg.

### How does this PR fix?

We shouldn't simply just add its parent directory because other files in the parent directory could also be added into the `PYTHONPATH` in client mode before context initialization.

Therefore, we copy .py files into a temp directory for .py files and add it to `PYTHONPATH`.

## How was this patch tested?

Unit tests are added and manually tested in both standalond and yarn client modes with submit.

Author: hyukjinkwon <gurwls223@apache.org>

Closes#21426 from HyukjinKwon/SPARK-24384.

## What changes were proposed in this pull request?

For some Spark applications, though they're a java program, they require not only jar dependencies, but also python dependencies. One example is Livy remote SparkContext application, this application is actually an embedded REPL for Scala/Python/R, it will not only load in jar dependencies, but also python and R deps, so we should specify not only "--jars", but also "--py-files".

Currently for a Spark application, --py-files can only be worked for a pyspark application, so it will not be worked in the above case. So here propose to remove such restriction.

Also we tested that "spark.submit.pyFiles" only supports quite limited scenario (client mode with local deps), so here also expand the usage of "spark.submit.pyFiles" to be alternative of --py-files.

## How was this patch tested?

UT added.

Author: jerryshao <sshao@hortonworks.com>

Closes#21420 from jerryshao/SPARK-24377.

## What changes were proposed in this pull request?

SPARK-17874 introduced a new configuration to set the port where SSL services bind to. We missed to update the scaladoc and the `toString` method, though. The PR adds it in the missing places

## How was this patch tested?

checked the `toString` output in the logs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21429 from mgaido91/minor_ssl.

## What changes were proposed in this pull request?

Cleanup unused vals in `DAGScheduler.handleTaskCompletion` to reduce the code complexity slightly.

## How was this patch tested?

Existing test cases.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#21406 from jiangxb1987/handleTaskCompletion.

## What changes were proposed in this pull request?

When OutOfMemoryError thrown from BroadcastExchangeExec, scala.concurrent.Future will hit scala bug – https://github.com/scala/bug/issues/9554, and hang until future timeout:

We could wrap the OOM inside SparkException to resolve this issue.

## How was this patch tested?

Manually tested.

Author: jinxing <jinxing6042@126.com>

Closes#21342 from jinxing64/SPARK-24294.

## What changes were proposed in this pull request?

This change changes spark behavior to use the correct environment variable set by Mesos in the container on startup.

Author: Jake Charland <jakec@uber.com>

Closes#18894 from jakecharland/MesosSandbox.

## What changes were proposed in this pull request?

The ultimate goal is for listeners to onTaskEnd to receive metrics when a task is killed intentionally, since the data is currently just thrown away. This is already done for ExceptionFailure, so this just copies the same approach.

## How was this patch tested?

Updated existing tests.

This is a rework of https://github.com/apache/spark/pull/17422, all credits should go to noodle-fb

Author: Xianjin YE <advancedxy@gmail.com>

Author: Charles Lewis <noodle@fb.com>

Closes#21165 from advancedxy/SPARK-20087.

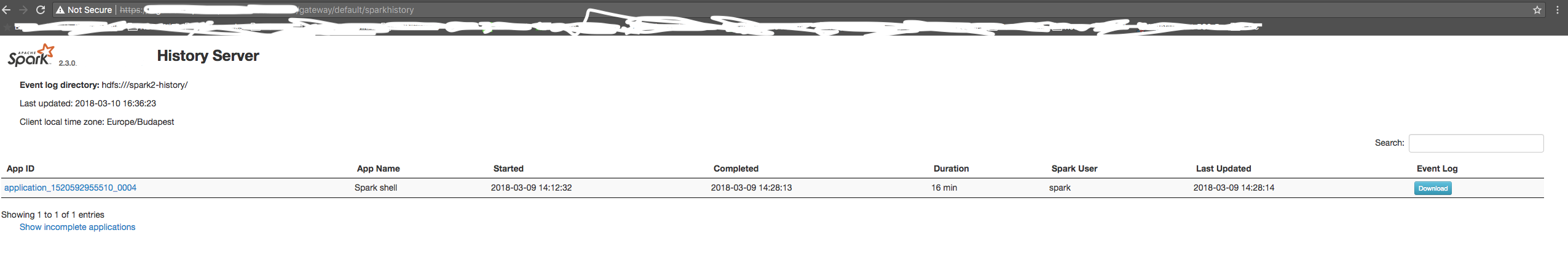

## What changes were proposed in this pull request?

The PR retrieves the proxyBase automatically from the header `X-Forwarded-Context` (if available). This is the header used by Knox to inform the proxied service about the base path.

This provides 0-configuration support for Knox gateway (instead of having to properly set `spark.ui.proxyBase`) and it allows to access directly SHS when it is proxied by Knox. In the previous scenario, indeed, after setting `spark.ui.proxyBase`, direct access to SHS was not working fine (due to bad link generated).

## How was this patch tested?

added UT + manual tests

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21268 from mgaido91/SPARK-24209.

EventListeners can interrupt the event queue thread. In particular,

when the EventLoggingListener writes to hdfs, hdfs can interrupt the

thread. When there is an interrupt, the queue should be removed and stop

accepting any more events. Before this change, the queue would continue

to take more events (till it was full), and then would not stop when the

application was complete because the PoisonPill couldn't be added.

Added a unit test which failed before this change.

Author: Imran Rashid <irashid@cloudera.com>

Closes#21356 from squito/SPARK-24309.

re-submit https://github.com/apache/spark/pull/21299 which broke build.

A few new commits are added to fix the SQLConf problem in `JsonSchemaInference.infer`, and prevent us to access `SQLConf` in DAGScheduler event loop thread.

## What changes were proposed in this pull request?

Previously in #20136 we decided to forbid tasks to access `SQLConf`, because it doesn't work and always give you the default conf value. In #21190 we fixed the check and all the places that violate it.

Currently the pattern of accessing configs at the executor side is: read the configs at the driver side, then access the variables holding the config values in the RDD closure, so that they will be serialized to the executor side. Something like

```

val someConf = conf.getXXX

child.execute().mapPartitions {

if (someConf == ...) ...

...

}

```

However, this pattern is hard to apply if the config needs to be propagated via a long call stack. An example is `DataType.sameType`, and see how many changes were made in #21190 .

When it comes to code generation, it's even worse. I tried it locally and we need to change a ton of files to propagate configs to code generators.

This PR proposes to allow tasks to access `SQLConf`. The idea is, we can save all the SQL configs to job properties when an SQL execution is triggered. At executor side we rebuild the `SQLConf` from job properties.

## How was this patch tested?

a new test suite

Author: Wenchen Fan <wenchen@databricks.com>

Closes#21376 from cloud-fan/config.

## What changes were proposed in this pull request?

- Removes the check for the keytab when we are running in mesos cluster mode.

- Keeps the check for client mode since in cluster mode we eventually launch the driver within the cluster in client mode. In the latter case we want to have the check done when the container starts, the keytab should be checked if it exists within the container's local filesystem.

## How was this patch tested?

This was manually tested by running spark submit in mesos cluster mode.

Author: Stavros <st.kontopoulos@gmail.com>

Closes#20967 from skonto/fix_mesos_keytab_susbmit.

## What changes were proposed in this pull request?

Previously in #20136 we decided to forbid tasks to access `SQLConf`, because it doesn't work and always give you the default conf value. In #21190 we fixed the check and all the places that violate it.

Currently the pattern of accessing configs at the executor side is: read the configs at the driver side, then access the variables holding the config values in the RDD closure, so that they will be serialized to the executor side. Something like

```

val someConf = conf.getXXX

child.execute().mapPartitions {

if (someConf == ...) ...

...

}

```

However, this pattern is hard to apply if the config needs to be propagated via a long call stack. An example is `DataType.sameType`, and see how many changes were made in #21190 .

When it comes to code generation, it's even worse. I tried it locally and we need to change a ton of files to propagate configs to code generators.

This PR proposes to allow tasks to access `SQLConf`. The idea is, we can save all the SQL configs to job properties when an SQL execution is triggered. At executor side we rebuild the `SQLConf` from job properties.

## How was this patch tested?

a new test suite

Author: Wenchen Fan <wenchen@databricks.com>

Closes#21299 from cloud-fan/config.

The old code was relying on a core configuration and extended its

default value to include things that redact desired things in the

app's environment. Instead, add a SQL-specific option for which

options to redact, and apply both the core and SQL-specific rules

when redacting the options in the save command.

This is a little sub-optimal since it adds another config, but it

retains the current default behavior.

While there I also fixed a typo and a couple of minor config API

usage issues in the related redaction option that SQL already had.

Tested with existing unit tests, plus checking the env page on

a shell UI.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#21158 from vanzin/SPARK-23850.

## What changes were proposed in this pull request?

In HadoopMapReduceCommitProtocol and FileFormatWriter, there are unnecessary settings in hadoop configuration.

Also clean up some code in SQL module.

## How was this patch tested?

Unit test

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes#21329 from gengliangwang/codeCleanWrite.

## What changes were proposed in this pull request?

According to the discussion in https://github.com/apache/spark/pull/21175 , this PR proposes 2 improvements:

1. add comments to explain why we call `limit` to write out `ByteBuffer` with slices.

2. remove the `try ... finally`

## How was this patch tested?

existing tests

Author: Wenchen Fan <wenchen@databricks.com>

Closes#21327 from cloud-fan/minor.

## What changes were proposed in this pull request?

There's a period of time when an accumulator has been garbage collected, but hasn't been removed from AccumulatorContext.originals by ContextCleaner. When an update is received for such accumulator it will throw an exception and kill the whole job. This can happen when a stage completes, but there're still running tasks from other attempts, speculation etc. Since AccumulatorContext.get() returns an option we can just return None in such case.

## How was this patch tested?

Unit test.

Author: Artem Rudoy <artem.rudoy@gmail.com>

Closes#21114 from artemrd/SPARK-22371.

## What changes were proposed in this pull request?

```

~/spark-2.3.0-bin-hadoop2.7$ bin/spark-sql --num-executors 0 --conf spark.dynamicAllocation.enabled=true

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=1024m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=1024m; support was removed in 8.0

Error: Number of executors must be a positive number

Run with --help for usage help or --verbose for debug output

```

Actually, we could start up with min executor number with 0 before if dynamically

## How was this patch tested?

ut added

Author: Kent Yao <yaooqinn@hotmail.com>

Closes#21290 from yaooqinn/SPARK-24241.

## What changes were proposed in this pull request?

When multiple clients attempt to resolve artifacts via the `--packages` parameter, they could run into race condition when they each attempt to modify the dummy `org.apache.spark-spark-submit-parent-default.xml` file created in the default ivy cache dir.

This PR changes the behavior to encode UUID in the dummy module descriptor so each client will operate on a different resolution file in the ivy cache dir. In addition, this patch changes the behavior of when and which resolution files are cleaned to prevent accumulation of resolution files in the default ivy cache dir.

Since this PR is a successor of #18801, close#18801. Many codes were ported from #18801. **Many efforts were put here. I think this PR should credit to Victsm .**

## How was this patch tested?

added UT into `SparkSubmitUtilsSuite`

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#21251 from kiszk/SPARK-10878.

## What changes were proposed in this pull request?

Sometimes "SparkListenerSuite.local metrics" test fails because the average of executorDeserializeTime is too short. As squito suggested to avoid these situations in one of the task a reference introduced to an object implementing a custom Externalizable.readExternal which sleeps 1ms before returning.

## How was this patch tested?

With unit tests (and checking the effect of this change to the average with a much larger sleep time).

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Author: Attila Zsolt Piros <2017933+attilapiros@users.noreply.github.com>

Closes#21280 from attilapiros/SPARK-19181.

## What changes were proposed in this pull request?

Drastically improves performance and won't cause Spark applications to fail because they write too much data to the Docker image's specific file system. The file system's directories that back emptydir volumes are generally larger and more performant.

## How was this patch tested?

Has been in use via the prototype version of Kubernetes support, but lost in the transition to here.

Author: mcheah <mcheah@palantir.com>

Closes#21238 from mccheah/mount-local-dirs.

## What changes were proposed in this pull request?

In method *CoarseGrainedSchedulerBackend.killExecutors()*, `numPendingExecutors` should add

`executorsToKill.size` rather than `knownExecutors.size` if we do not adjust target number of executors.

## How was this patch tested?

N/A

Author: wuyi <ngone_5451@163.com>

Closes#21209 from Ngone51/SPARK-24141.

It was missing the jax-rs annotation.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#21245 from vanzin/SPARK-24188.

Change-Id: Ib338e34b363d7c729cc92202df020dc51033b719

## What changes were proposed in this pull request?

SPARK-24160 is causing a compilation failure (after SPARK-24143 was merged). This fixes the issue.

## How was this patch tested?

building successfully

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21256 from mgaido91/SPARK-24160_FOLLOWUP.

## What changes were proposed in this pull request?

This patch modifies `ShuffleBlockFetcherIterator` so that the receipt of zero-size blocks is treated as an error. This is done as a preventative measure to guard against a potential source of data loss bugs.

In the shuffle layer, we guarantee that zero-size blocks will never be requested (a block containing zero records is always 0 bytes in size and is marked as empty such that it will never be legitimately requested by executors). However, the existing code does not fully take advantage of this invariant in the shuffle-read path: the existing code did not explicitly check whether blocks are non-zero-size.

Additionally, our decompression and deserialization streams treat zero-size inputs as empty streams rather than errors (EOF might actually be treated as "end-of-stream" in certain layers (longstanding behavior dating to earliest versions of Spark) and decompressors like Snappy may be tolerant to zero-size inputs).

As a result, if some other bug causes legitimate buffers to be replaced with zero-sized buffers (due to corruption on either the send or receive sides) then this would translate into silent data loss rather than an explicit fail-fast error.

This patch addresses this problem by adding a `buf.size != 0` check. See code comments for pointers to tests which guarantee the invariants relied on here.

## How was this patch tested?

Existing tests (which required modifications, since some were creating empty buffers in mocks). I also added a test to make sure we fail on zero-size blocks.

To test that the zero-size blocks are indeed a potential corruption source, I manually ran a workload in `spark-shell` with a modified build which replaces all buffers with zero-size buffers in the receive path.

Author: Josh Rosen <joshrosen@databricks.com>

Closes#21219 from JoshRosen/SPARK-24160.

## What changes were proposed in this pull request?

In current code(`MapOutputTracker.convertMapStatuses`), mapstatus are converted to (blockId, size) pair for all blocks – no matter the block is empty or not, which result in OOM when there are lots of consecutive empty blocks, especially when adaptive execution is enabled.

(blockId, size) pair is only used in `ShuffleBlockFetcherIterator` to control shuffle-read and only non-empty block request is sent. Can we just filter out the empty blocks in MapOutputTracker.convertMapStatuses and save memory?

## How was this patch tested?

not added yet.

Author: jinxing <jinxing6042@126.com>

Closes#21212 from jinxing64/SPARK-24143.

…path and set permissions properly

## What changes were proposed in this pull request?

Spark history server should create spark.history.store.path and set permissions properly. Note createdDirectories doesn't do anything if the directories are already created. This does not stomp on the permissions if the user had manually created the directory before the history server could.

## How was this patch tested?

Manually tested in a 100 node cluster. Ensured directories created with proper permissions. Ensured restarted worked apps/temp directories worked as apps were read.

Author: Thomas Graves <tgraves@thirteenroutine.corp.gq1.yahoo.com>

Closes#21234 from tgravescs/SPARK-24124.

## What changes were proposed in this pull request?

It's possible that Accumulators of Spark 1.x may no longer work with Spark 2.x. This is because `LegacyAccumulatorWrapper.isZero` may return wrong answer if `AccumulableParam` doesn't define equals/hashCode.

This PR fixes this by using reference equality check in `LegacyAccumulatorWrapper.isZero`.

## How was this patch tested?

a new test

Author: Wenchen Fan <wenchen@databricks.com>

Closes#21229 from cloud-fan/accumulator.

Fetch failure lead to multiple tasksets which are active for a given

stage. While there is only one "active" version of the taskset, the

earlier attempts can still have running tasks, which can complete

successfully. So a task completion needs to update every taskset

so that it knows the partition is completed. That way the final active

taskset does not try to submit another task for the same partition,

and so that it knows when it is completed and when it should be

marked as a "zombie".

Added a regression test.

Author: Imran Rashid <irashid@cloudera.com>

Closes#21131 from squito/SPARK-23433.

JIRA Issue: https://issues.apache.org/jira/browse/SPARK-24107?jql=text%20~%20%22ChunkedByteBuffer%22

ChunkedByteBuffer.writeFully method has not reset the limit value. When

chunks larger than bufferWriteChunkSize, such as 80 * 1024 * 1024 larger than

config.BUFFER_WRITE_CHUNK_SIZE(64 * 1024 * 1024),only while once, will lost 16 * 1024 * 1024 byte

Author: WangJinhai02 <jinhai.wang02@ele.me>

Closes#21175 from manbuyun/bugfix-ChunkedByteBuffer.

## What changes were proposed in this pull request?

Added support to specify the 'spark.executor.extraJavaOptions' value in terms of the `{{APP_ID}}` and/or `{{EXECUTOR_ID}}`, `{{APP_ID}}` will be replaced by Application Id and `{{EXECUTOR_ID}}` will be replaced by Executor Id while starting the executor.

## How was this patch tested?

I have verified this by checking the executor process command and gc logs. I verified the same in different deployment modes(Standalone, YARN, Mesos) client and cluster modes.

Author: Devaraj K <devaraj@apache.org>

Closes#21088 from devaraj-kavali/SPARK-24003.

## What changes were proposed in this pull request?

For the details of the exception please see [SPARK-24062](https://issues.apache.org/jira/browse/SPARK-24062).

The issue is:

Spark on Yarn stores SASL secret in current UGI's credentials, this credentials will be distributed to AM and executors, so that executors and drive share the same secret to communicate. But STS/Hive library code will refresh the current UGI by UGI's loginFromKeytab() after Spark application is started, this will create a new UGI in the current driver's context with empty tokens and secret keys, so secret key is lost in the current context's UGI, that's why Spark driver throws secret key not found exception.

In Spark 2.2 code, Spark also stores this secret key in SecurityManager's class variable, so even UGI is refreshed, the secret is still existed in the object, so STS with SASL can still be worked in Spark 2.2. But in Spark 2.3, we always search key from current UGI, which makes it fail to work in Spark 2.3.

To fix this issue, there're two possible solutions:

1. Fix in STS/Hive library, when a new UGI is refreshed, copy the secret key from original UGI to the new one. The difficulty is that some codes to refresh the UGI is existed in Hive library, which makes us hard to change the code.

2. Roll back the logics in SecurityManager to match Spark 2.2, so that this issue can be fixed.

2nd solution seems a simple one. So I will propose a PR with 2nd solution.

## How was this patch tested?

Verified in local cluster.

CC vanzin tgravescs please help to review. Thanks!

Author: jerryshao <sshao@hortonworks.com>

Closes#21138 from jerryshao/SPARK-24062.

## What changes were proposed in this pull request?

By default, the dynamic allocation will request enough executors to maximize the

parallelism according to the number of tasks to process. While this minimizes the

latency of the job, with small tasks this setting can waste a lot of resources due to

executor allocation overhead, as some executor might not even do any work.

This setting allows to set a ratio that will be used to reduce the number of

target executors w.r.t. full parallelism.

The number of executors computed with this setting is still fenced by

`spark.dynamicAllocation.maxExecutors` and `spark.dynamicAllocation.minExecutors`

## How was this patch tested?

Units tests and runs on various actual workloads on a Yarn Cluster

Author: Julien Cuquemelle <j.cuquemelle@criteo.com>

Closes#19881 from jcuquemelle/AddTaskPerExecutorSlot.

TaskSetManager.hasAttemptOnHost had a misleading comment. The comment

said that it only checked for running tasks, but really it checked for

any tasks that might have run in the past as well. This updates to line

up with the implementation.

Author: wuyi <ngone_5451@163.com>

Closes#20998 from Ngone51/SPARK-23888.

This allows sockets to be bound even if there are sockets

from a previous application that are still pending closure. It

avoids bind issues when, for example, re-starting the SHS.

Don't enable the option on Windows though. The following page

explains some odd behavior that this option can have there:

https://msdn.microsoft.com/en-us/library/windows/desktop/ms740621%28v=vs.85%29.aspx

I intentionally ignored server sockets that always bind to

ephemeral ports, since those don't benefit from this option.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#21110 from vanzin/SPARK-24029.

## What changes were proposed in this pull request?

SparkContextSuite.test("Cancelling stages/jobs with custom reasons.") could stay in an infinite loop because of the problem found and fixed in [SPARK-23775](https://issues.apache.org/jira/browse/SPARK-23775).

This PR solves this mentioned flakyness by removing shared variable usages when cancel happens in a loop and using wait and CountDownLatch for synhronization.

## How was this patch tested?

Existing unit test.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes#21105 from gaborgsomogyi/SPARK-24022.

## What changes were proposed in this pull request?

There‘s a miswrite in BlacklistTracker's updateBlacklistForFetchFailure:

```

val blacklistedExecsOnNode =

nodeToBlacklistedExecs.getOrElseUpdate(exec, HashSet[String]())

blacklistedExecsOnNode += exec

```

where first **exec** should be **host**.

## How was this patch tested?

adjust existed test.

Author: wuyi <ngone_5451@163.com>

Closes#21104 from Ngone51/SPARK-24021.

## What changes were proposed in this pull request?

SparkContext submitted a map stage from `submitMapStage` to `DAGScheduler`,

`markMapStageJobAsFinished` is called only in (https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L933 and https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L1314);

But think about below scenario:

1. stage0 and stage1 are all `ShuffleMapStage` and stage1 depends on stage0;

2. We submit stage1 by `submitMapStage`;

3. When stage 1 running, `FetchFailed` happened, stage0 and stage1 got resubmitted as stage0_1 and stage1_1;

4. When stage0_1 running, speculated tasks in old stage1 come as succeeded, but stage1 is not inside `runningStages`. So even though all splits(including the speculated tasks) in stage1 succeeded, job listener in stage1 will not be called;

5. stage0_1 finished, stage1_1 starts running. When `submitMissingTasks`, there is no missing tasks. But in current code, job listener is not triggered.

We should call the job listener for map stage in `5`.

## How was this patch tested?

Not added yet.

Author: jinxing <jinxing6042@126.com>

Closes#21019 from jinxing64/SPARK-23948.

## What changes were proposed in this pull request?

In current code, it will scanning all partition paths when spark.sql.hive.verifyPartitionPath=true.

e.g. table like below:

```

CREATE TABLE `test`(

`id` int,

`age` int,

`name` string)

PARTITIONED BY (

`A` string,

`B` string)

load data local inpath '/tmp/data0' into table test partition(A='00', B='00')

load data local inpath '/tmp/data1' into table test partition(A='01', B='01')

load data local inpath '/tmp/data2' into table test partition(A='10', B='10')

load data local inpath '/tmp/data3' into table test partition(A='11', B='11')

```

If I query with SQL – "select * from test where A='00' and B='01' ", current code will scan all partition paths including '/data/A=00/B=00', '/data/A=00/B=00', '/data/A=01/B=01', '/data/A=10/B=10', '/data/A=11/B=11'. It costs much time and memory cost.

This pr proposes to avoid iterating all partition paths. Add a config `spark.files.ignoreMissingFiles` and ignore the `file not found` when `getPartitions/compute`(for hive table scan). This is much like the logic brought by

`spark.sql.files.ignoreMissingFiles`(which is for datasource scan).

## How was this patch tested?

UT

Author: jinxing <jinxing6042@126.com>

Closes#19868 from jinxing64/SPARK-22676.

## What changes were proposed in this pull request?

Update

```scala

SparkEnv.get.conf.getLong("spark.shuffle.spill.numElementsForceSpillThreshold", Long.MaxValue)

```

to

```scala

SparkEnv.get.conf.get(SHUFFLE_SPILL_NUM_ELEMENTS_FORCE_SPILL_THRESHOLD)

```

because of `SHUFFLE_SPILL_NUM_ELEMENTS_FORCE_SPILL_THRESHOLD`'s default value is `Integer.MAX_VALUE`:

c99fc9ad9b/core/src/main/scala/org/apache/spark/internal/config/package.scala (L503-L511)

## How was this patch tested?

N/A

Author: Yuming Wang <yumwang@ebay.com>

Closes#21077 from wangyum/SPARK-21033.

## What changes were proposed in this pull request?

Spark introduced new writer mode to overwrite only related partitions in SPARK-20236. While we are using this feature in our production cluster, we found a bug when writing multi-level partitions on HDFS.

A simple test case to reproduce this issue:

val df = Seq(("1","2","3")).toDF("col1", "col2","col3")

df.write.partitionBy("col1","col2").mode("overwrite").save("/my/hdfs/location")

If HDFS location "/my/hdfs/location" does not exist, there will be no output.

This seems to be caused by the job commit change in SPARK-20236 in HadoopMapReduceCommitProtocol.

In the commit job process, the output has been written into staging dir /my/hdfs/location/.spark-staging.xxx/col1=1/col2=2, and then the code calls fs.rename to rename /my/hdfs/location/.spark-staging.xxx/col1=1/col2=2 to /my/hdfs/location/col1=1/col2=2. However, in our case the operation will fail on HDFS because /my/hdfs/location/col1=1 does not exists. HDFS rename can not create directory for more than one level.

This does not happen in the new unit test added with SPARK-20236 which uses local file system.

We are proposing a fix. When cleaning current partition dir /my/hdfs/location/col1=1/col2=2 before the rename op, if the delete op fails (because /my/hdfs/location/col1=1/col2=2 may not exist), we call mkdirs op to create the parent dir /my/hdfs/location/col1=1 (if the parent dir does not exist) so the following rename op can succeed.

Reference: in official HDFS document(https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/filesystem/filesystem.html), the rename command has precondition "dest must be root, or have a parent that exists"

## How was this patch tested?

We have tested this patch on our production cluster and it fixed the problem

Author: Fangshi Li <fli@linkedin.com>

Closes#20931 from fangshil/master.

## What changes were proposed in this pull request?

Get the count of dropped events for output in log message.

## How was this patch tested?

The fix is pretty trivial, but `./dev/run-tests` were run and were successful.

Please review http://spark.apache.org/contributing.html before opening a pull request.

vanzin cloud-fan

The contribution is my original work and I license the work to the project under the project’s open source license.

Author: Patrick Pisciuneri <Patrick.Pisciuneri@target.com>

Closes#20977 from phpisciuneri/fix-log-warning.

The current in-process launcher implementation just calls the SparkSubmit

object, which, in case of errors, will more often than not exit the JVM.

This is not desirable since this launcher is meant to be used inside other

applications, and that would kill the application.

The change turns SparkSubmit into a class, and abstracts aways some of

the functionality used to print error messages and abort the submission

process. The default implementation uses the logging system for messages,

and throws exceptions for errors. As part of that I also moved some code

that doesn't really belong in SparkSubmit to a better location.

The command line invocation of spark-submit now uses a special implementation

of the SparkSubmit class that overrides those behaviors to do what is expected

from the command line version (print to the terminal, exit the JVM, etc).

A lot of the changes are to replace calls to methods such as "printErrorAndExit"

with the new API.

As part of adding tests for this, I had to fix some small things in the

launcher option parser so that things like "--version" can work when

used in the launcher library.

There is still code that prints directly to the terminal, like all the

Ivy-related code in SparkSubmitUtils, and other areas where some re-factoring

would help, like the CommandLineUtils class, but I chose to leave those

alone to keep this change more focused.

Aside from existing and added unit tests, I ran command line tools with

a bunch of different arguments to make sure messages and errors behave

like before.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#20925 from vanzin/SPARK-22941.

This change introduces two optimizations to help speed up generation

of listing data when parsing events logs.

The first one allows the parser to be stopped when enough data to

create the listing entry has been read. This is currently the start

event plus environment info, to capture UI ACLs. If the end event is

needed, the code will skip to the end of the log to try to find that

information, instead of parsing the whole log file.

Unfortunately this works better with uncompressed logs. Skipping bytes

on compressed logs only saves the work of parsing lines and some events,

so not a lot of gains are observed.

The second optimization deals with in-progress logs. It works in two

ways: first, it completely avoids parsing the rest of the log for

these apps when enough listing data is read. This, unlike the above,

also speeds things up for compressed logs, since only the very beginning

of the log has to be read.

On top of that, the code that decides whether to re-parse logs to get

updated listing data will ignore in-progress applications until they've

completed.

Both optimizations can be disabled but are enabled by default.

I tested this on some fake event logs to see the effect. I created

500 logs of about 60M each (so ~30G uncompressed; each log was 1.7M

when compressed with zstd). Below, C = completed, IP = in-progress,

the size means the amount of data re-parsed at the end of logs

when necessary.

```

none/C none/IP zstd/C zstd/IP

On / 16k 2s 2s 22s 2s

On / 1m 3s 2s 24s 2s

Off 1.1m 1.1m 26s 24s

```

This was with 4 threads on a single local SSD. As expected from the

previous explanations, there are considerable gains for in-progress

logs, and for uncompressed logs, but not so much when looking at the

full compressed log.

As a side note, I removed the custom code to get the scan time by

creating a file on HDFS; since file mod times are not used to detect

changed logs anymore, local time is enough for the current use of

the SHS.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#20952 from vanzin/SPARK-6951.

SPARK-19276 ensured that FetchFailures do not get swallowed by other

layers of exception handling, but it also meant that a killed task could

look like a fetch failure. This is particularly a problem with

speculative execution, where we expect to kill tasks as they are reading

shuffle data. The fix is to ensure that we always check for killed

tasks first.

Added a new unit test which fails before the fix, ran it 1k times to

check for flakiness. Full suite of tests on jenkins.

Author: Imran Rashid <irashid@cloudera.com>

Closes#20987 from squito/SPARK-23816.

## What changes were proposed in this pull request?

The test case JobCancellationSuite."interruptible iterator of shuffle reader" has been flaky because `KillTask` event is handled asynchronously, so it can happen that the semaphore is released but the task is still running.

Actually we only have to check if the total number of processed elements is less than the input elements number, so we know the task get cancelled.

## How was this patch tested?

The new test case still fails without the purposed patch, and succeeded in current master.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#20993 from jiangxb1987/JobCancellationSuite.

## What changes were proposed in this pull request?

This PR avoids possible overflow at an operation `long = (long)(int * int)`. The multiplication of large positive integer values may set one to MSB. This leads to a negative value in long while we expected a positive value (e.g. `0111_0000_0000_0000 * 0000_0000_0000_0010`).

This PR performs long cast before the multiplication to avoid this situation.

## How was this patch tested?

Existing UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#21002 from kiszk/SPARK-23893.

## What changes were proposed in this pull request?

This PR allows us to use one of several types of `MemoryBlock`, such as byte array, int array, long array, or `java.nio.DirectByteBuffer`. To use `java.nio.DirectByteBuffer` allows to have off heap memory which is automatically deallocated by JVM. `MemoryBlock` class has primitive accessors like `Platform.getInt()`, `Platform.putint()`, or `Platform.copyMemory()`.

This PR uses `MemoryBlock` for `OffHeapColumnVector`, `UTF8String`, and other places. This PR can improve performance of operations involving memory accesses (e.g. `UTF8String.trim`) by 1.8x.

For now, this PR does not use `MemoryBlock` for `BufferHolder` based on cloud-fan's [suggestion](https://github.com/apache/spark/pull/11494#issuecomment-309694290).

Since this PR is a successor of #11494, close#11494. Many codes were ported from #11494. Many efforts were put here. **I think this PR should credit to yzotov.**

This PR can achieve **1.1-1.4x performance improvements** for operations in `UTF8String` or `Murmur3_x86_32`. Other operations are almost comparable performances.

Without this PR

```

OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Hash byte arrays with length 268435487: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

Murmur3_x86_32 526 / 536 0.0 131399881.5 1.0X

UTF8String benchmark: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

hashCode 525 / 552 1022.6 1.0 1.0X

substring 414 / 423 1298.0 0.8 1.3X

```

With this PR

```

OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Hash byte arrays with length 268435487: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

Murmur3_x86_32 474 / 488 0.0 118552232.0 1.0X

UTF8String benchmark: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

hashCode 476 / 480 1127.3 0.9 1.0X

substring 287 / 291 1869.9 0.5 1.7X

```

Benchmark program

```

test("benchmark Murmur3_x86_32") {

val length = 8192 * 32768 + 31

val seed = 42L

val iters = 1 << 2

val random = new Random(seed)

val arrays = Array.fill[MemoryBlock](numArrays) {

val bytes = new Array[Byte](length)

random.nextBytes(bytes)

new ByteArrayMemoryBlock(bytes, Platform.BYTE_ARRAY_OFFSET, length)

}

val benchmark = new Benchmark("Hash byte arrays with length " + length,

iters * numArrays, minNumIters = 20)

benchmark.addCase("HiveHasher") { _: Int =>

var sum = 0L

for (_ <- 0L until iters) {

sum += HiveHasher.hashUnsafeBytesBlock(

arrays(i), Platform.BYTE_ARRAY_OFFSET, length)

}

}

benchmark.run()

}

test("benchmark UTF8String") {

val N = 512 * 1024 * 1024

val iters = 2

val benchmark = new Benchmark("UTF8String benchmark", N, minNumIters = 20)

val str0 = new java.io.StringWriter() { { for (i <- 0 until N) { write(" ") } } }.toString

val s0 = UTF8String.fromString(str0)

benchmark.addCase("hashCode") { _: Int =>

var h: Int = 0

for (_ <- 0L until iters) { h += s0.hashCode }

}

benchmark.addCase("substring") { _: Int =>

var s: UTF8String = null

for (_ <- 0L until iters) { s = s0.substring(N / 2 - 5, N / 2 + 5) }

}

benchmark.run()

}

```

I run [this benchmark program](https://gist.github.com/kiszk/94f75b506c93a663bbbc372ffe8f05de) using [the commit](ee5a79861c). I got the following results:

```

OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Memory access benchmarks: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

ByteArrayMemoryBlock get/putInt() 220 / 221 609.3 1.6 1.0X

Platform get/putInt(byte[]) 220 / 236 610.9 1.6 1.0X

Platform get/putInt(Object) 492 / 494 272.8 3.7 0.4X

OnHeapMemoryBlock get/putLong() 322 / 323 416.5 2.4 0.7X

long[] 221 / 221 608.0 1.6 1.0X

Platform get/putLong(long[]) 321 / 321 418.7 2.4 0.7X

Platform get/putLong(Object) 561 / 563 239.2 4.2 0.4X

```

I also run [this benchmark program](https://gist.github.com/kiszk/5fdb4e03733a5d110421177e289d1fb5) for comparing performance of `Platform.copyMemory()`.

```

OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Platform copyMemory: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

Object to Object 1961 / 1967 8.6 116.9 1.0X

System.arraycopy Object to Object 1917 / 1921 8.8 114.3 1.0X

byte array to byte array 1961 / 1968 8.6 116.9 1.0X

System.arraycopy byte array to byte array 1909 / 1937 8.8 113.8 1.0X

int array to int array 1921 / 1990 8.7 114.5 1.0X