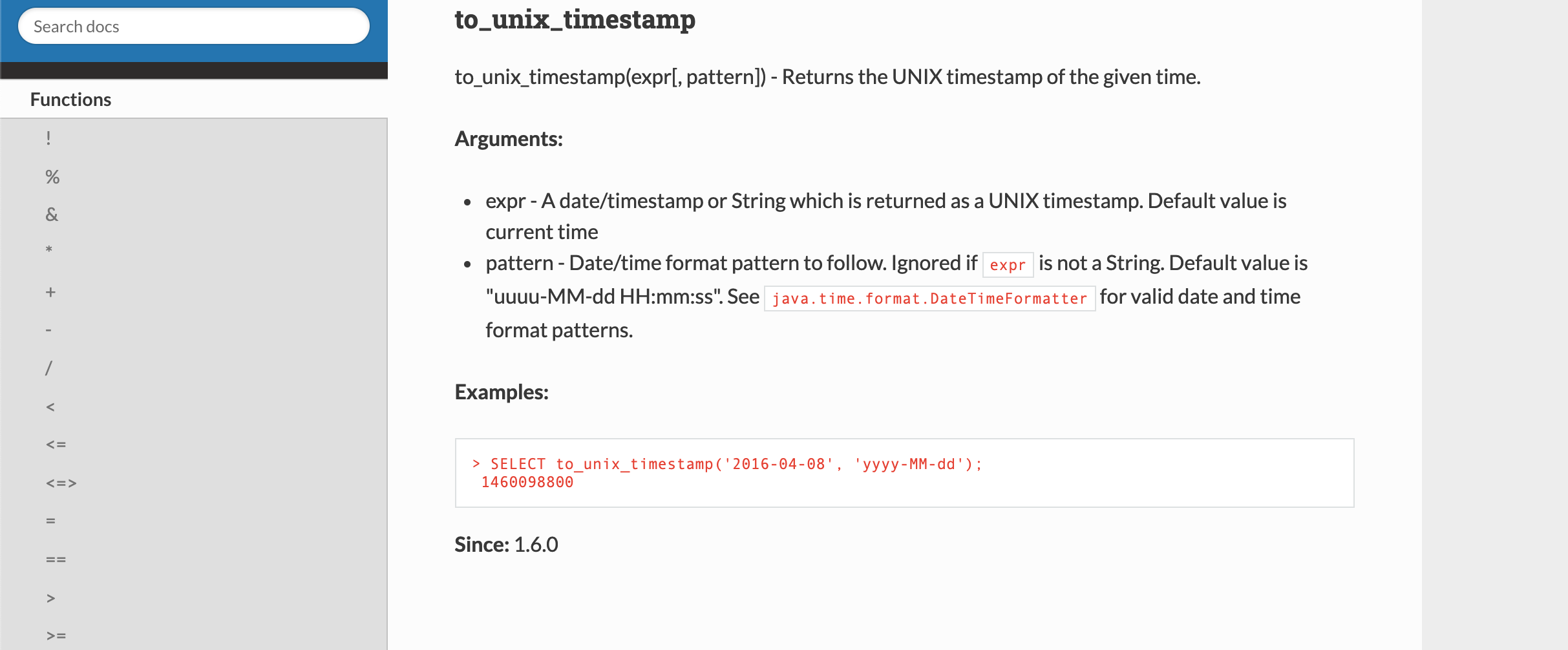

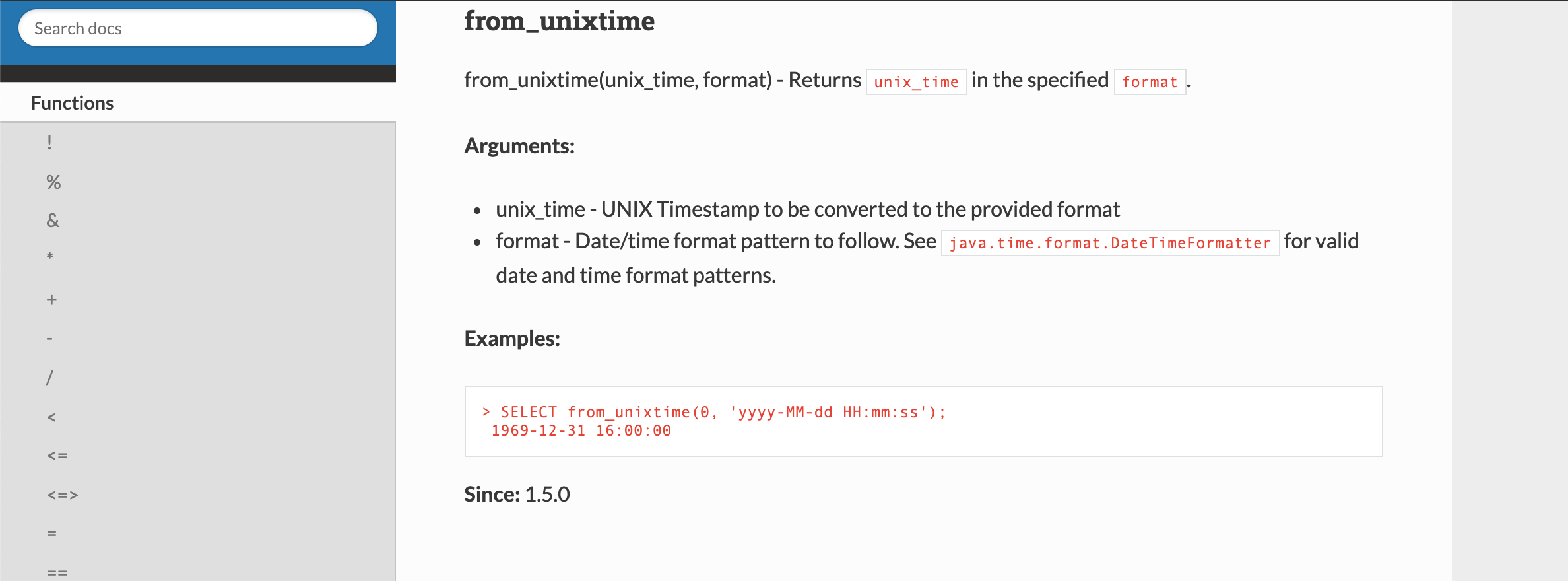

### What changes were proposed in this pull request?

Link to the appropriate Java class with the list of supported date/time patterns.

### Why are the changes needed?

To avoid confusion on the end user's side, as seen in questions like [this one](https://stackoverflow.com/questions/54496878/date-format-conversion-is-adding-1-year-to-the-border-dates) on StackOverflow.

### Does this PR introduce any user-facing change?

Yes, the docs are updated.

### How was this patch tested?

`date_format`:

`to_unix_timestamp`:

`unix_timestamp`:

`from_unixtime`:

`to_date`:

`to_timestamp`:

Closes #26864 from johnhany97/SPARK-30236.

Authored-by: John Ayad <johnhany97@gmail.com>
Signed-off-by: Dongjoon Hyun <dhyun@apple.com>
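The linked question concerns dates near a year boundary. One well-known way a date pattern can "add a year" is mixing up the pattern letters `y` (year-of-era) and `Y` (week-based year). The sketch below is plain Java outside Spark and assumes `java.time.format.DateTimeFormatter` is the relevant pattern class (the PR text does not name it). Under `Locale.US`, weeks start on Sunday and week 1 is the week containing January 1, so 2018-12-31 already falls in week-based year 2019.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

public class WeekYearPitfall {
    // 'y' is year-of-era; 'Y' is week-based year, whose week definition comes from the locale.
    static String format(LocalDate date, String pattern) {
        return DateTimeFormatter.ofPattern(pattern, Locale.US).format(date);
    }

    public static void main(String[] args) {
        LocalDate newYearsEve = LocalDate.of(2018, 12, 31);
        System.out.println(format(newYearsEve, "yyyy-MM-dd")); // 2018-12-31
        System.out.println(format(newYearsEve, "YYYY-MM-dd")); // 2019-12-31
    }
}
```

For calendar dates, `yyyy` is almost always the intended pattern; `YYYY` only makes sense together with week-of-year fields.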

| Name |
|---|
| catalyst |
| core |
| hive |
| hive-thriftserver |
| create-docs.sh |
| gen-sql-markdown.py |
| mkdocs.yml |
| README.md |

# Spark SQL

This module provides support for executing relational queries expressed in either SQL or the DataFrame/Dataset API.

Spark SQL is broken up into four subprojects:

- Catalyst (sql/catalyst) - An implementation-agnostic framework for manipulating trees of relational operators and expressions.

- Execution (sql/core) - A query planner / execution engine for translating Catalyst's logical query plans into Spark RDDs. This component also includes a new public interface, SQLContext, that allows users to execute SQL or LINQ statements against existing RDDs and Parquet files.

- Hive Support (sql/hive) - Includes extensions that allow users to write queries using a subset of HiveQL and access data from a Hive Metastore using Hive SerDes. There are also wrappers that allow users to run queries that include Hive UDFs, UDAFs, and UDTFs.

- HiveServer and CLI support (sql/hive-thriftserver) - Includes support for the SQL CLI (bin/spark-sql) and a HiveServer2-compatible server for JDBC/ODBC clients.
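Catalyst is described above as a framework for manipulating trees of relational operators and expressions. The toy sketch below illustrates that idea in plain Java; the `Expr`, `Lit`, `Attr` and `Add` types are hypothetical and are not Catalyst's API (Catalyst is written in Scala, and its `TreeNode` class exposes methods such as `transformUp` that take a partial function as the rewrite rule). It builds an immutable expression tree and applies a constant-folding rule bottom-up, rebuilding each node from its already-rewritten children.

```java
import java.util.function.UnaryOperator;

public class ToyTreeRewrite {
    // Hypothetical, minimal expression tree (requires Java 17 for sealed interfaces).
    sealed interface Expr permits Lit, Attr, Add {}
    record Lit(int value) implements Expr {}
    record Attr(String name) implements Expr {}
    record Add(Expr left, Expr right) implements Expr {}

    // Bottom-up (post-order) transform: rewrite the children first,
    // then apply the rule to the rebuilt parent node.
    static Expr transformUp(Expr e, UnaryOperator<Expr> rule) {
        Expr rebuilt = (e instanceof Add a)
                ? new Add(transformUp(a.left(), rule), transformUp(a.right(), rule))
                : e;
        return rule.apply(rebuilt);
    }

    // Constant folding: Add(Lit, Lit) becomes a single Lit; any other node is returned unchanged.
    static Expr foldConstants(Expr e) {
        if (e instanceof Add a && a.left() instanceof Lit l && a.right() instanceof Lit r) {
            return new Lit(l.value() + r.value());
        }
        return e;
    }

    public static void main(String[] args) {
        // Represents x + (1 + 2); folding should leave x + 3.
        Expr tree = new Add(new Attr("x"), new Add(new Lit(1), new Lit(2)));
        System.out.println(transformUp(tree, ToyTreeRewrite::foldConstants));
    }
}
```

Keeping nodes immutable means a rule never mutates the input tree; it returns a new tree, which makes it safe to apply many independent rules in sequence.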

Running `./sql/create-docs.sh` generates SQL documentation for built-in functions under `sql/site`.