### What changes were proposed in this pull request?

Refactor `ScriptTransformation` to remove the `input` parameter and use `child.output` instead.

### Why are the changes needed?

refactor code

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #32228 from AngersZhuuuu/SPARK-34035.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR implements the decorrelation technique in the paper "Unnesting Arbitrary Queries" by T. Neumann and A. Kemper (http://www.btw-2015.de/res/proceedings/Hauptband/Wiss/Neumann-Unnesting_Arbitrary_Querie.pdf). It currently supports Filter, Project, Aggregate, Join, and any UnaryNode that passes CheckAnalysis.

This feature can be controlled by the config `spark.sql.optimizer.decorrelateInnerQuery.enabled` (default: true).

A few notes:

1. This PR does not relax any constraints in CheckAnalysis for correlated subqueries, even though some cases can be supported by this new framework, such as aggregate with correlated non-equality predicates. This PR focuses on adding the new framework and making sure all existing cases can be supported. Constraints can be relaxed gradually in the future via separate PRs.

2. The new framework is only enabled for correlated scalar subqueries, as the first step. EXISTS/IN subqueries can be supported in the future.

### Why are the changes needed?

Currently, Spark has limited support for correlated subqueries. It only allows `Filter` to reference outer query columns and does not support non-equality predicates when the subquery is aggregated. This new framework will allow more operators to host outer column references and support correlated non-equality predicates and more types of operators in correlated subqueries.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit and SQL query tests and new optimizer plan tests.

Closes #32072 from allisonwang-db/spark-34974-decorrelation.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

- Share a static ImmutableBitSet for `treePatternBits` in all object instances of AttributeReference.

- Share three static ImmutableBitSets for `treePatternBits` in three kinds of Literals.

- Add an ImmutableBitSet as a subclass of BitSet.

### Why are the changes needed?

Reduce the additional memory usage caused by `treePatternBits`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32157 from sigmod/leaf.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

This PR updated the `foundNonEqualCorrelatedPred` logic for correlated subqueries in `CheckAnalysis` to only allow correlated equality predicates that guarantee one-to-one mapping between inner and outer attributes, instead of all equality predicates.

### Why are the changes needed?

To fix correctness bugs. Before this fix, Spark could return wrong results for certain correlated subqueries that pass CheckAnalysis:

Example 1:

```sql
create or replace view t1(c) as values ('a'), ('b');
create or replace view t2(c) as values ('ab'), ('abc'), ('bc');
select c, (select count(*) from t2 where t1.c = substring(t2.c, 1, 1)) from t1;
```

Correct results: [(a, 2), (b, 1)]

Spark results:

```
+---+-----------------+
|c  |scalarsubquery(c)|
+---+-----------------+
|a  |1                |
|a  |1                |
|b  |1                |
+---+-----------------+
```

Example 2:

```sql

create or replace view t1(a, b) as values (0, 6), (1, 5), (2, 4), (3, 3);

create or replace view t2(c) as values (6);

select c, (select count(*) from t1 where a + b = c) from t2;

```

Correct results: [(6, 4)]

Spark results:

```
+---+-----------------+
|c  |scalarsubquery(c)|
+---+-----------------+
|6  |1                |
|6  |1                |
|6  |1                |
|6  |1                |
+---+-----------------+
```
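
Both examples can be reproduced with a small plain-Python model. This is only a sketch, not Spark code: `correct_counts` evaluates the subquery once per outer row, while `buggy_counts` assumes a rewrite that first aggregates the inner table by its raw columns and then joins the groups on the correlated predicate. Both function names are hypothetical, and the assumed rewrite is just one way to arrive at the wrong rows shown above.

```python
from collections import Counter


def correct_counts(outer_rows, inner_rows, predicate):
    """Reference semantics: evaluate count(*) once per outer row."""
    return [(o, sum(1 for i in inner_rows if predicate(o, i))) for o in outer_rows]


def buggy_counts(outer_rows, inner_rows, predicate):
    """Assumed faulty rewrite: group the inner rows by their raw value first,
    then join every group to the outer rows on the correlated predicate."""
    groups = Counter(inner_rows)  # count(*) ... GROUP BY <inner columns>
    return [(o, n) for o in outer_rows for i, n in groups.items() if predicate(o, i)]


# Example 1: t1.c = substring(t2.c, 1, 1)
ex1 = (["a", "b"], ["ab", "abc", "bc"], lambda o, i: o == i[:1])
# Example 2: a + b = c
ex2 = ([6], [(0, 6), (1, 5), (2, 4), (3, 3)], lambda o, i: i[0] + i[1] == o)

print(correct_counts(*ex1))  # one row per outer row
print(buggy_counts(*ex1))    # one row per matching inner group
print(correct_counts(*ex2))
print(buggy_counts(*ex2))
```

The predicate maps several inner values to the same outer value, so grouping by the inner column no longer yields one group per outer row. That is the one-to-one mapping the updated check requires.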

### Does this PR introduce _any_ user-facing change?

Yes. Users will not be able to run queries that contain unsupported correlated equality predicates.

### How was this patch tested?

Added unit tests.

Closes #32179 from allisonwang-db/spark-35080-subquery-bug.

Lead-authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR fixes a couple of things in TypeCoercion rules:

- Only run the propagate types step if the children of a node have output attributes with changed dataTypes and/or nullability. This is implemented as custom tree transformation. The TypeCoercion rules now only implement a partial function.

- Combine multiple type coercion rules into a single rule. Multiple rules are applied in single tree traversal.

- Reduce calls to conf.get in DecimalPrecision. This now happens once per tree traversal, instead of once per matched expression.

- Reduce the use of withNewChildren.

This brings down the number of CPU cycles spent in analysis by ~28% (benchmark: 10 iterations of all TPC-DS queries on SF10).

## How was this patch tested?

Existing tests.

Closes #32208 from sigmod/coercion.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

Remove Antlr 4.7 workaround.

### Why are the changes needed?

The fix https://github.com/antlr/antlr4/commit/ac9f7530 has landed upstream, so the workaround can be removed to simplify the code.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #32238 from pan3793/antlr-minor.

Authored-by: Cheng Pan <379377944@qq.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Similarly to the test from the PR https://github.com/apache/spark/pull/31799, add tests:

1. Months -> Period -> Months

2. Period -> Months -> Period

3. Duration -> micros -> Duration
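
The round trips listed above can be sketched in plain Python. This is an illustration only, not the Spark test code: a `(years, months)` tuple stands in for a period value, `datetime.timedelta` stands in for a duration, and all four helper names are hypothetical.

```python
from datetime import timedelta

MONTHS_PER_YEAR = 12
ONE_MICRO = timedelta(microseconds=1)


def months_to_period(months):
    """Split a month count into (years, months), a stand-in for a period value."""
    return divmod(months, MONTHS_PER_YEAR)


def period_to_months(period):
    """Collapse a (years, months) pair back into a total month count."""
    years, months = period
    return years * MONTHS_PER_YEAR + months


def micros_to_duration(micros):
    """Build a duration from a microsecond count."""
    return timedelta(microseconds=micros)


def duration_to_micros(duration):
    """Exact integer division by one microsecond, so no float rounding occurs."""
    return duration // ONE_MICRO


# Months -> Period -> Months
for months in (-13, -1, 0, 1, 11, 12, 25):
    assert period_to_months(months_to_period(months)) == months

# Period -> Months -> Period (for periods already in normalized form)
assert months_to_period(period_to_months((2, 1))) == (2, 1)

# Duration -> micros -> Duration
duration = timedelta(days=1, microseconds=1)
assert micros_to_duration(duration_to_micros(duration)) == duration
```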

### Why are the changes needed?

Add round trip tests for period <-> month and duration <-> micros

### Does this PR introduce _any_ user-facing change?

'No'. Just test cases.

### How was this patch tested?

Jenkins test

Closes #32234 from beliefer/SPARK-34715.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Parse the year-month interval literals like `INTERVAL '1-1' YEAR TO MONTH` to values of `YearMonthIntervalType`, and day-time interval literals to `DayTimeIntervalType` values. Currently, Spark SQL supports:

- DAY TO HOUR

- DAY TO MINUTE

- DAY TO SECOND

- HOUR TO MINUTE

- HOUR TO SECOND

- MINUTE TO SECOND

All such interval literals are converted to `DayTimeIntervalType`, and `YEAR TO MONTH` to `YearMonthIntervalType`, losing information about the `from` and `to` units.

**Note**: The new behavior is controlled by the SQL config `spark.sql.legacy.interval.enabled`, which is `false` by default. When the config is set to `true`, interval literals are parsed to `CalendarIntervalType` values.
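
As a rough illustration of what parsing such literals involves, the sketch below converts the string part of a `YEAR TO MONTH` literal to a month count and a `DAY TO SECOND` literal to a microsecond count. It is not Spark's parser: the function names and regular expressions are hypothetical, the integer representations are an assumption of this sketch, and range validation of the individual fields is omitted.

```python
import re

MONTHS_PER_YEAR = 12
MICROS_PER_SECOND = 1_000_000

YEAR_TO_MONTH = re.compile(r"^([+-]?)(\d+)-(\d+)$")
DAY_TO_SECOND = re.compile(
    r"^([+-]?)(\d+) (\d{1,2}):(\d{1,2}):(\d{1,2})(?:\.(\d{1,9}))?$"
)


def parse_year_to_month(text):
    """Parse 'y-m' into a total number of months."""
    match = YEAR_TO_MONTH.match(text.strip())
    if match is None:
        raise ValueError(f"not a year-month interval: {text!r}")
    sign, years, months = match.groups()
    total = int(years) * MONTHS_PER_YEAR + int(months)
    return -total if sign == "-" else total


def parse_day_to_second(text):
    """Parse 'd hh:mm:ss[.fraction]' into a total number of microseconds."""
    match = DAY_TO_SECOND.match(text.strip())
    if match is None:
        raise ValueError(f"not a day-time interval: {text!r}")
    sign, days, hours, minutes, seconds, fraction = match.groups()
    whole_seconds = ((int(days) * 24 + int(hours)) * 60 + int(minutes)) * 60 + int(seconds)
    # Pad or cut the fraction to exactly six digits (microsecond precision).
    micros_fraction = int(((fraction or "") + "000000")[:6])
    total = whole_seconds * MICROS_PER_SECOND + micros_fraction
    return -total if sign == "-" else total


print(parse_year_to_month("1-1"))             # 13 months
print(parse_day_to_second("1 01:02:03.123"))  # 90123123000 microseconds
```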

Closes #32176

### Why are the changes needed?

To conform to the ANSI SQL standard, which assumes conversion of interval literals to year-month or day-time intervals, but not to a mixed interval type like Catalyst's `CalendarIntervalType`.

### Does this PR introduce _any_ user-facing change?

Yes.

Before:

```sql

spark-sql> SELECT INTERVAL '1 01:02:03.123' DAY TO SECOND;

1 days 1 hours 2 minutes 3.123 seconds

spark-sql> SELECT typeof(INTERVAL '1 01:02:03.123' DAY TO SECOND);

interval

```

After:

```sql

spark-sql> SELECT INTERVAL '1 01:02:03.123' DAY TO SECOND;

1 01:02:03.123000000

spark-sql> SELECT typeof(INTERVAL '1 01:02:03.123' DAY TO SECOND);

day-time interval

```

### How was this patch tested?

1. By running the affected test suites:

```

$ ./build/sbt "test:testOnly *.ExpressionParserSuite"

$ SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "sql/testOnly *SQLQueryTestSuite -- -z interval.sql"

$ SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "sql/testOnly *SQLQueryTestSuite -- -z create_view.sql"

$ SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "sql/testOnly *SQLQueryTestSuite -- -z date.sql"

$ SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "sql/testOnly *SQLQueryTestSuite -- -z timestamp.sql"

```

2. PostgreSQL tests are executed with `spark.sql.legacy.interval.enabled` set to `true` to keep compatibility with PostgreSQL output:

```sql

> SELECT interval '999' second;

0 years 0 mons 0 days 0 hours 16 mins 39.00 secs

```

Closes #32209 from MaxGekk/parse-ansi-interval-literals.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Extend the `Average` expression to support `DayTimeIntervalType` and `YearMonthIntervalType` added by #31614.

Note: the expressions can throw the overflow exception independently from the SQL config `spark.sql.ansi.enabled`. In this way, the modified expressions always behave in the ANSI mode for the intervals.
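
The overflow behavior described in the note can be sketched as follows. This is a simplified model, not the `Average` implementation: it assumes day-time intervals are held as 64-bit microsecond counts, the helper names are hypothetical, and the half-up rounding of the result is an assumption chosen for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

INT64_MIN, INT64_MAX = -2**63, 2**63 - 1


def checked_add(a, b):
    """Add two interval values, failing loudly instead of wrapping around."""
    total = a + b
    if not INT64_MIN <= total <= INT64_MAX:
        raise OverflowError("interval sum out of 64-bit range")
    return total


def interval_sum(values):
    """Sum interval values with an overflow check on every step."""
    total = 0
    for value in values:
        total = checked_add(total, value)
    return total


def interval_average(values):
    """Average of microsecond counts; None for empty input."""
    if not values:
        return None
    mean = Decimal(interval_sum(values)) / Decimal(len(values))
    return int(mean.quantize(Decimal(1), rounding=ROUND_HALF_UP))


print(interval_average([2_000_000, 4_000_000]))  # 3000000
```

The check is unconditional in this sketch, mirroring the note that the expressions raise on overflow regardless of `spark.sql.ansi.enabled`.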

### Why are the changes needed?

Extend `org.apache.spark.sql.catalyst.expressions.aggregate.Average` to support `DayTimeIntervalType` and `YearMonthIntervalType`.

### Does this PR introduce _any_ user-facing change?

'No'.

Should not since new types have not been released yet.

### How was this patch tested?

Jenkins test

Closes #32229 from beliefer/SPARK-34837.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR makes the input buffer configurable (as an internal configuration). This is mainly to work around the regression in uniVocity/univocity-parsers#449.

This is particularly useful for SQL workloads that would otherwise require rewriting the `CREATE TABLE` statement with options.

### Why are the changes needed?

To work around uniVocity/univocity-parsers#449.

### Does this PR introduce _any_ user-facing change?

No, it's only an internal option.

### How was this patch tested?

Manually tested by modifying the unit test added in https://github.com/apache/spark/pull/31858 as below:

```diff

diff --git a/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala b/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala

index fd25a79619d..705f38dbfbd 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala

+++ b/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala

@@ -2456,6 +2456,7 @@ abstract class CSVSuite

test("SPARK-34768: counting a long record with ignoreTrailingWhiteSpace set to true") {

val bufSize = 128

val line = "X" * (bufSize - 1) + "| |"

+ spark.conf.set("spark.sql.csv.parser.inputBufferSize", 128)

withTempPath { path =>

Seq(line).toDF.write.text(path.getAbsolutePath)

assert(spark.read.format("csv")

```

Closes #32231 from HyukjinKwon/SPARK-35045-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Now that `AnalysisOnlyCommand` is introduced in #32032, `CacheTable` and `UncacheTable` can extend `AnalysisOnlyCommand` to simplify the code base. For example, the logic to handle these commands such that the tables are only analyzed is scattered across different places.

### Why are the changes needed?

To simplify the code base to handle these two commands.

### Does this PR introduce _any_ user-facing change?

No, just internal refactoring.

### How was this patch tested?

The existing tests (e.g., `CachedTableSuite`) cover the changes in this PR. For example, if I make `CacheTable`/`UncacheTable` extend `LeafCommand`, there are a few failures in `CachedTableSuite`.

Closes #32220 from imback82/cache_cmd_analysis_only.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR:

- Adds a new expression `GroupingExprRef` that can be used in aggregate expressions of `Aggregate` nodes to refer to grouping expressions by index. These expressions capture the data type and nullability of the referred grouping expression.

- Adds a new rule `EnforceGroupingReferencesInAggregates` that inserts the references in the beginning of the optimization phase.

- Adds a new rule `UpdateGroupingExprRefNullability` to update nullability of `GroupingExprRef` expressions as nullability of referred grouping expression can change during optimization.
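
The idea behind the index references can be sketched with a toy expression tree. This is not Catalyst code: expressions are modeled as nested tuples, and `enforce_grouping_refs` and `simplify_not_isnull` are hypothetical stand-ins for the new rule and for a boolean simplification rule.

```python
def enforce_grouping_refs(expr, grouping_exprs):
    """Replace every subtree equal to a grouping expression by an index reference."""
    if expr in grouping_exprs:
        return ("groupingexprref", grouping_exprs.index(expr))
    if isinstance(expr, tuple):
        return (expr[0],) + tuple(
            enforce_grouping_refs(child, grouping_exprs) for child in expr[1:]
        )
    return expr


def simplify_not_isnull(expr):
    """Toy boolean simplification: NOT isnull(x) becomes isnotnull(x)."""
    if isinstance(expr, tuple):
        expr = (expr[0],) + tuple(simplify_not_isnull(child) for child in expr[1:])
        if expr[0] == "not" and isinstance(expr[1], tuple) and expr[1][0] == "isnull":
            return ("isnotnull", expr[1][1])
    return expr


grouping = [("isnull", "id")]
aggregate_expr = ("not", ("isnull", "id"))

# Without references, the simplification removes the grouping expression.
without_refs = simplify_not_isnull(aggregate_expr)
# With references inserted first, the simplification has nothing to match.
with_refs = simplify_not_isnull(enforce_grouping_refs(aggregate_expr, grouping))

print(without_refs)  # ('isnotnull', 'id')
print(with_refs)     # ('not', ('groupingexprref', 0))
```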

### Why are the changes needed?

If aggregate expressions (without aggregate functions) in an `Aggregate` node are complex, the `Optimizer` can optimize grouping expressions out of them, which makes the aggregate expressions invalid.

Here is a simple example:

```

SELECT not(t.id IS NULL) , count(*)

FROM t

GROUP BY t.id IS NULL

```

In this case the `BooleanSimplification` rule does this:

```

=== Applying Rule org.apache.spark.sql.catalyst.optimizer.BooleanSimplification ===

!Aggregate [isnull(id#222)], [NOT isnull(id#222) AS (NOT (id IS NULL))#226, count(1) AS c#224L] Aggregate [isnull(id#222)], [isnotnull(id#222) AS (NOT (id IS NULL))#226, count(1) AS c#224L]

+- Project [value#219 AS id#222] +- Project [value#219 AS id#222]

+- LocalRelation [value#219] +- LocalRelation [value#219]

```

where `NOT isnull(id#222)` is optimized to `isnotnull(id#222)` and so it no longer refers to any grouping expression.

Before this PR:

```

== Optimized Logical Plan ==

Aggregate [isnull(id#222)], [isnotnull(id#222) AS (NOT (id IS NULL))#234, count(1) AS c#232L]

+- Project [value#219 AS id#222]

+- LocalRelation [value#219]

```

and running the query throws an error:

```

Couldn't find id#222 in [isnull(id#222)#230,count(1)#226L]

java.lang.IllegalStateException: Couldn't find id#222 in [isnull(id#222)#230,count(1)#226L]

```

After this PR:

```

== Optimized Logical Plan ==

Aggregate [isnull(id#222)], [NOT groupingexprref(0) AS (NOT (id IS NULL))#234, count(1) AS c#232L]

+- Project [value#219 AS id#222]

+- LocalRelation [value#219]

```

and the query works.

### Does this PR introduce _any_ user-facing change?

Yes, the query works.

### How was this patch tested?

Added new UT.

Closes #31913 from peter-toth/SPARK-34581-keep-grouping-expressions.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

It seems that we missed classifying one `SparkOutOfMemoryError` in `HashedRelation`. Add the error classification for it. In addition, clean up two error definitions of `HashJoin` as they are not used.

### Why are the changes needed?

Better error classification.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32211 from c21/error-message.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Add checks for `YearMonthIntervalType` and `DayTimeIntervalType` to `MutableProjectionSuite`.

### Why are the changes needed?

To improve test coverage and have the same checks as for `CalendarIntervalType`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the modified test suite:

```

$ build/sbt "test:testOnly *MutableProjectionSuite"

```

Closes #32225 from MaxGekk/test-ansi-intervals-in-MutableProjectionSuite.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Extend the `Sum` expression to support `DayTimeIntervalType` and `YearMonthIntervalType` added by #31614.

Note: the expressions can throw the overflow exception independently from the SQL config `spark.sql.ansi.enabled`. In this way, the modified expressions always behave in the ANSI mode for the intervals.

### Why are the changes needed?

Extend `org.apache.spark.sql.catalyst.expressions.aggregate.Sum` to support `DayTimeIntervalType` and `YearMonthIntervalType`.

### Does this PR introduce _any_ user-facing change?

'No'.

Should not since new types have not been released yet.

### How was this patch tested?

Jenkins test

Closes #32107 from beliefer/SPARK-34716.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: beliefer <beliefer@163.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

In the PR, I propose to add additional checks for ANSI interval types `YearMonthIntervalType` and `DayTimeIntervalType` to `LiteralExpressionSuite`.

Also, I replaced some long literal values by `CalendarInterval` to check `CalendarIntervalType` that the tests were supposed to check.

### Why are the changes needed?

To improve test coverage and have the same checks for ANSI types as for `CalendarIntervalType`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the modified test suite:

```

$ build/sbt "test:testOnly *LiteralExpressionSuite"

```

Closes #32213 from MaxGekk/interval-literal-tests.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

The precision of `java.time.Duration` is nanosecond, but when it is used as `DayTimeIntervalType` in Spark, it is microsecond.

At present, `RandomDataGenerator` generates `DayTimeIntervalType` data with nanosecond precision. Converting such a value to a long and back to `DayTimeIntervalType` loses precision, which causes tests to fail. For example: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137390/testReport/org.apache.spark.sql.hive.execution/HashAggregationQueryWithControlledFallbackSuite/udaf_with_all_data_types/
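
The precision loss can be shown with plain integers. The sketch below is not the `RandomDataGenerator` code: it assumes non-negative nanosecond counts, and the helper names are hypothetical.

```python
NANOS_PER_MICRO = 1_000


def to_micros(nanos):
    """Convert nanoseconds to whole microseconds, dropping the sub-microsecond part."""
    return nanos // NANOS_PER_MICRO


def to_nanos(micros):
    """Convert microseconds back to nanoseconds."""
    return micros * NANOS_PER_MICRO


def truncate_to_micros(nanos):
    """Generator-side fix: keep only the precision that survives the round trip."""
    return to_nanos(to_micros(nanos))


raw = 1_234_567  # a nanosecond-precision value
assert to_nanos(to_micros(raw)) != raw  # round trip loses the last three digits

fixed = truncate_to_micros(raw)
assert to_nanos(to_micros(fixed)) == fixed  # round trip is now lossless
```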

### Why are the changes needed?

Improve `RandomDataGenerator` so that the generated data fits the precision of `DayTimeIntervalType` in Spark.

### Does this PR introduce _any_ user-facing change?

'No'. Just change the test class.

### How was this patch tested?

Jenkins test.

Closes #32212 from beliefer/SPARK-35116.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR fixes an issue where the indentation of output JSON records in a single split file is broken for every record except the first one in the split when the `pretty` option is `true`.

```

// Run in the Spark Shell.

// Set spark.sql.leafNodeDefaultParallelism to 1 for the current master.

// Or set spark.default.parallelism for the previous releases.

spark.conf.set("spark.sql.leafNodeDefaultParallelism", 1)

val df = Seq("a", "b", "c").toDF

df.write.option("pretty", "true").json("/path/to/output")

# Run in a Shell

$ cat /path/to/output/*.json

{

"value" : "a"

}

{

"value" : "b"

}

{

"value" : "c"

}

```

### Why are the changes needed?

The output is not pretty even though the `pretty` option is `true`.

### Does this PR introduce _any_ user-facing change?

I think "No". Indentation style is changed but JSON format is not changed.

### How was this patch tested?

New test.

Closes #32203 from sarutak/fix-ugly-indentation.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Handle `YearMonthIntervalType` and `DayTimeIntervalType` in the `sql()` and `toString()` method of `Literal`, and format the ANSI interval in the ANSI style.

### Why are the changes needed?

To improve readability and UX with Spark SQL. For example, a test output before the changes:

```

-- !query

select timestamp'2011-11-11 11:11:11' - interval '2' day

-- !query schema

struct<TIMESTAMP '2011-11-11 11:11:11' - 172800000000:timestamp>

-- !query output

2011-11-09 11:11:11

```

### Does this PR introduce _any_ user-facing change?

Should not since the new intervals haven't been released yet.

### How was this patch tested?

By running new tests:

```

$ ./build/sbt "test:testOnly *LiteralExpressionSuite"

```

Closes #32196 from MaxGekk/literal-ansi-interval-sql.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

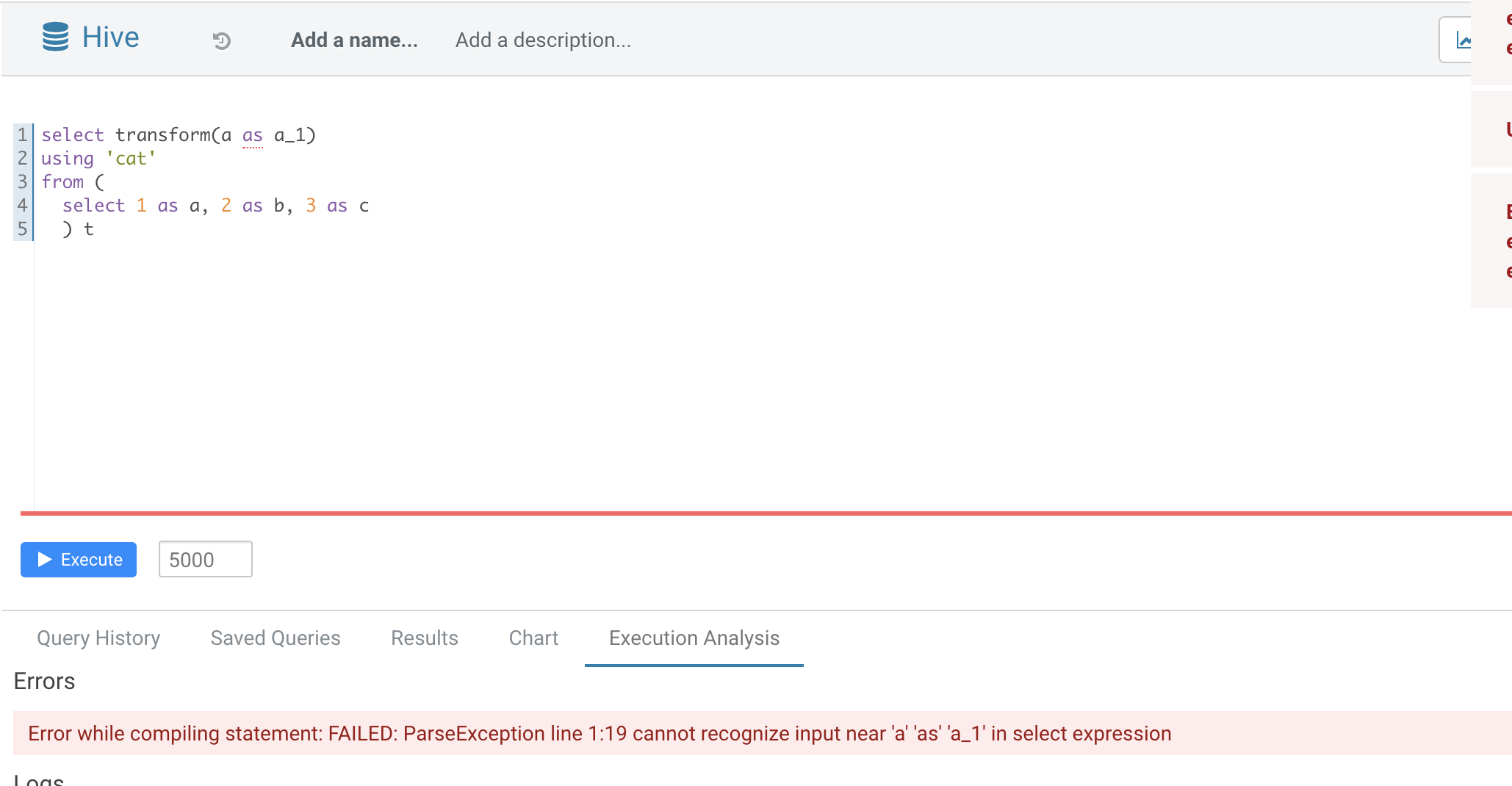

### What changes were proposed in this pull request?

Normal function parameters should not support aliases, and Hive does not support them either.

In this PR, we forbid the use of aliases in `TRANSFORM`'s inputs.

### Why are the changes needed?

Fix bug

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #32165 from AngersZhuuuu/SPARK-35070.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Support `date +/- day-time interval`. In the PR, I propose to update the binary arithmetic rules, and cast an input date to a timestamp at the session time zone, and then add a day-time interval to it.
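
The described semantics can be sketched with Python's `datetime` module. This is only an illustration of "cast the date to a timestamp at the session time zone, then add the interval", not the Catalyst rule. The function name and the `session_tz` parameter are hypothetical, and UTC is used as a placeholder session time zone.

```python
from datetime import date, datetime, time, timedelta, timezone


def date_plus_day_time_interval(day, interval, session_tz=timezone.utc):
    """Cast the date to midnight in the session time zone, then add the interval."""
    start_of_day = datetime.combine(day, time.min, tzinfo=session_tz)
    return start_of_day + interval


result = date_plus_day_time_interval(
    date(2021, 4, 14),
    timedelta(days=1, hours=18, minutes=15, seconds=30),
)
print(result.strftime("%Y-%m-%d %H:%M:%S"))  # 2021-04-15 18:15:30
```

The result keeps the time-of-day part of the interval, so it is a timestamp rather than a date.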

### Why are the changes needed?

1. To conform to the ANSI SQL standard, which requires supporting such operations over dates and intervals:

<img width="811" alt="Screenshot 2021-03-12 at 11 36 14" src="https://user-images.githubusercontent.com/1580697/111081674-865d4900-8515-11eb-86c8-3538ecaf4804.png">

2. To fix the regression compared to the recent Spark release 3.1 with default settings.

Before the changes:

```sql

spark-sql> select date'now' + (timestamp'now' - timestamp'yesterday');

Error in query: cannot resolve 'DATE '2021-04-14' + subtracttimestamps(TIMESTAMP '2021-04-14 18:14:56.497', TIMESTAMP '2021-04-13 00:00:00')' due to data type mismatch: argument 1 requires timestamp type, however, 'DATE '2021-04-14'' is of date type.; line 1 pos 7;

'Project [unresolvedalias(cast(2021-04-14 + subtracttimestamps(2021-04-14 18:14:56.497, 2021-04-13 00:00:00, false, Some(Europe/Moscow)) as date), None)]

+- OneRowRelation

```

Spark 3.1:

```sql

spark-sql> select date'now' + (timestamp'now' - timestamp'yesterday');

2021-04-15

```

Hive:

```sql

0: jdbc:hive2://localhost:10000/default> select date'2021-04-14' + (timestamp'2020-04-14 18:15:30' - timestamp'2020-04-13 00:00:00');

+------------------------+

| _c0 |

+------------------------+

| 2021-04-15 18:15:30.0 |

+------------------------+

```

### Does this PR introduce _any_ user-facing change?

Should not since new intervals have not been released yet.

After the changes:

```sql

spark-sql> select date'now' + (timestamp'now' - timestamp'yesterday');

2021-04-15 18:13:16.555

```

### How was this patch tested?

By running new tests:

```

$ build/sbt "test:testOnly *ColumnExpressionSuite"

```

Closes #32170 from MaxGekk/date-add-day-time-interval.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

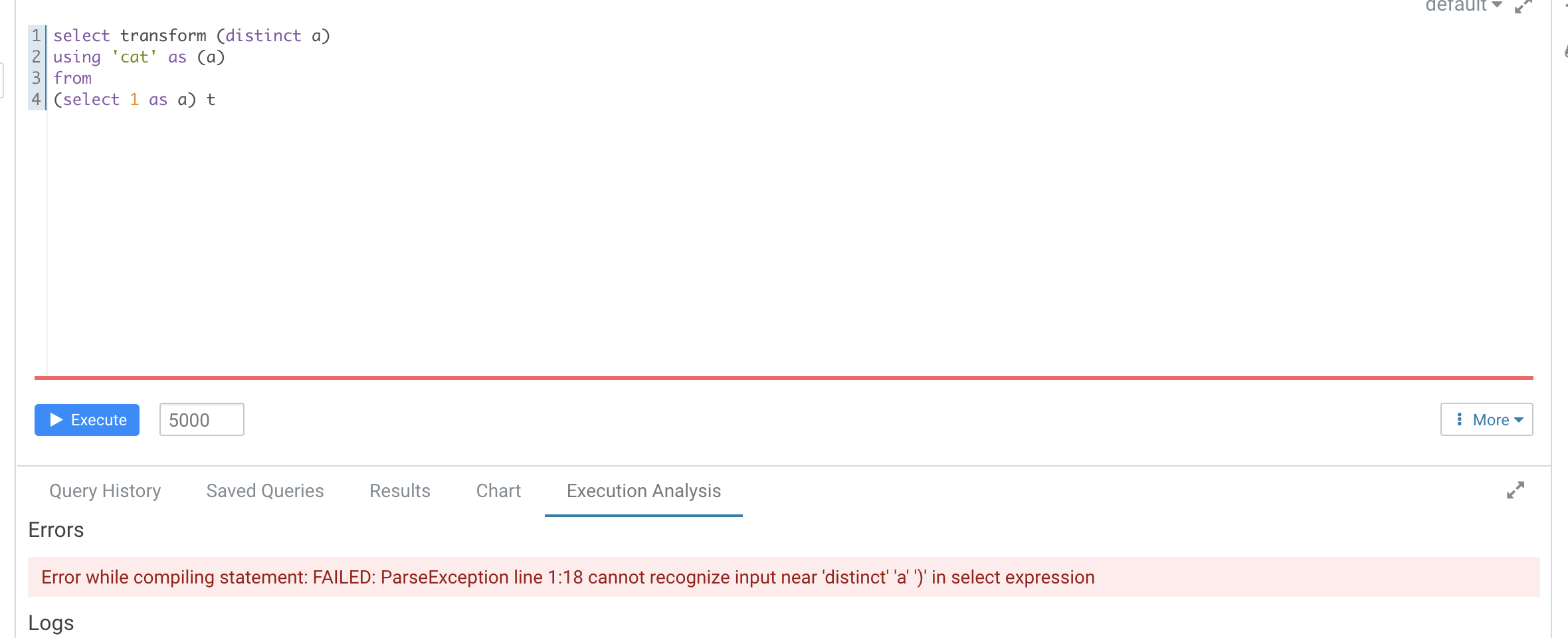

### What changes were proposed in this pull request?

According to https://github.com/apache/spark/pull/29087#discussion_r612267050, add a UT to `transform.sql`.

It seems that `distinct` is not recognized as a reserved word here:

```

-- !query

explain extended SELECT TRANSFORM(distinct b, a, c)

USING 'cat' AS (a, b, c)

FROM script_trans

WHERE a <= 4

-- !query schema

struct<plan:string>

-- !query output

== Parsed Logical Plan ==

'ScriptTransformation [*], cat, [a#x, b#x, c#x], ScriptInputOutputSchema(List(),List(),None,None,List(),List(),None,None,false)

+- 'Project ['distinct AS b#x, 'a, 'c]

+- 'Filter ('a <= 4)

+- 'UnresolvedRelation [script_trans], [], false

== Analyzed Logical Plan ==

org.apache.spark.sql.AnalysisException: cannot resolve 'distinct' given input columns: [script_trans.a, script_trans.b, script_trans.c]; line 1 pos 34;

'ScriptTransformation [*], cat, [a#x, b#x, c#x], ScriptInputOutputSchema(List(),List(),None,None,List(),List(),None,None,false)

+- 'Project ['distinct AS b#x, a#x, c#x]

+- Filter (a#x <= 4)

+- SubqueryAlias script_trans

+- View (`script_trans`, [a#x,b#x,c#x])

+- Project [cast(a#x as int) AS a#x, cast(b#x as int) AS b#x, cast(c#x as int) AS c#x]

+- Project [a#x, b#x, c#x]

+- SubqueryAlias script_trans

+- LocalRelation [a#x, b#x, c#x]

```

Hive's error

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT.

Closes #32149 from AngersZhuuuu/SPARK-28227-new-followup.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to introduce the `AnalysisOnlyCommand` trait such that a command that extends this trait can have its children only analyzed, but not optimized. There is a corresponding analysis rule `HandleAnalysisOnlyCommand` that marks the command as analyzed after all other analysis rules are run.

This can be useful if a logical plan has children that need to be analyzed but not optimized, e.g., `CREATE VIEW` or `CACHE TABLE AS`. This also addresses the issue found in #31933.

This PR also updates `CreateViewCommand`, `CacheTableAsSelect`, and `AlterViewAsCommand` to use the new trait / rule such that their children are only analyzed.

### Why are the changes needed?

To address the issue where the plan is unnecessarily re-analyzed in `CreateViewCommand`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests should cover the changes.

Closes #32032 from imback82/skip_transform.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Adds the duplicated common columns as hidden columns to the Projection used to rewrite NATURAL/USING JOINs.

### Why are the changes needed?

Allows users to resolve either side of the NATURAL/USING JOIN's common keys.

Previously, the user could only resolve the following columns:

| Join type | Left key columns | Right key columns |
| --- | --- | --- |
| Inner | Yes | No |
| Left | Yes | No |
| Right | No | Yes |
| Outer | No | No |

### Does this PR introduce _any_ user-facing change?

Yes. The user can now symmetrically resolve the common columns from a NATURAL/USING JOIN.

### How was this patch tested?

SQL-side tests. The behavior matches PostgreSQL and MySQL.

Closes #31666 from karenfeng/spark-34527.

Authored-by: Karen Feng <karen.feng@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes an issue where `LIST FILES/JARS/ARCHIVES path1 path2 ...` cannot list all paths if at least one path is quoted.

For example:

```

ADD FILE /tmp/test1;

ADD FILE /tmp/test2;

LIST FILES /tmp/test1 /tmp/test2;

file:/tmp/test1

file:/tmp/test2

LIST FILES /tmp/test1 "/tmp/test2";

file:/tmp/test2

```

In this example, the second `LIST FILES` doesn't show `file:/tmp/test1`.

To resolve this issue, I modified the syntax rule to be able to handle this case.

I also changed `SparkSQLParser` to be able to handle paths that contain whitespace.
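
The intended splitting behavior can be illustrated with Python's standard `shlex` module. This is only a model of the expected result, not how `SparkSQLParser` or the grammar is implemented, and `split_paths` is a hypothetical helper.

```python
import shlex


def split_paths(arguments):
    """Split a command's argument string into paths.

    Quoted segments are kept together and their quotes are removed, so quoted
    and unquoted paths can be mixed and a quoted path may contain spaces.
    """
    return shlex.split(arguments)


print(split_paths('/tmp/test1 "/tmp/test2"'))
print(split_paths("'/tmp/my dir/a.txt' /tmp/b"))
```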

### Why are the changes needed?

This is a bug.

I also plan to extend `ADD FILE/JAR/ARCHIVE` to take multiple paths, like Hive, and the syntax rule change is necessary for that.

### Does this PR introduce _any_ user-facing change?

Yes. Users can pass quoted paths when using `ADD FILE/JAR/ARCHIVE`.

### How was this patch tested?

New test.

Closes #32074 from sarutak/fix-list-files-bug.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

This PR groups exception messages in `/core/src/main/scala/org/apache/spark/sql/execution`.

### Why are the changes needed?

It will largely help with the standardization of error messages and their maintenance.

### Does this PR introduce _any_ user-facing change?

No. Error messages remain unchanged.

### How was this patch tested?

No new tests - pass all original tests to make sure it doesn't break any existing behavior.

Closes #31920 from beliefer/SPARK-33604.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Populate table catalog and identifier from `DataStreamWriter` to `WriteToMicroBatchDataSource` so that we can invalidate cache for tables that are updated by a streaming write.

This is somewhat related to [SPARK-27484](https://issues.apache.org/jira/browse/SPARK-27484) and [SPARK-34183](https://issues.apache.org/jira/browse/SPARK-34183) (#31700), as ideally we may want to replace `WriteToMicroBatchDataSource` and `WriteToDataSourceV2` with logical write nodes and feed them to the analyzer. That will potentially change the code path involved in this PR.

### Why are the changes needed?

Currently `WriteToDataSourceV2` doesn't have cache invalidation logic, and therefore, when the target table for a micro batch streaming job is cached, the cache entry won't be removed when the table is updated.

### Does this PR introduce _any_ user-facing change?

Yes. Now, when a DSv2 table that supports streaming writes is updated by a streaming job, its cache will also be invalidated.

### How was this patch tested?

Added a new UT.

Closes #32039 from sunchao/streaming-cache.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Remove duplicate code in `TreeNode.treePatternBits`

### Why are the changes needed?

Code cleanup. It makes maintenance easier.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #32143 from gengliangwang/getBits.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

This PR makes the input buffer configurable (as an internal option). This is mainly to work around uniVocity/univocity-parsers#449.

### Why are the changes needed?

To work around uniVocity/univocity-parsers#449.

### Does this PR introduce _any_ user-facing change?

No, it's only an internal option.

### How was this patch tested?

Manually tested by modifying the unit test added in https://github.com/apache/spark/pull/31858 as below:

```diff

diff --git a/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala b/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala

index fd25a79619d..b58f0bd3661 100644

--- a/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala

+++ b/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVSuite.scala

@@ -2460,6 +2460,7 @@ abstract class CSVSuite

Seq(line).toDF.write.text(path.getAbsolutePath)

assert(spark.read.format("csv")

.option("delimiter", "|")

+ .option("inputBufferSize", "128")

.option("ignoreTrailingWhiteSpace", "true").load(path.getAbsolutePath).count() == 1)

}

}

```

Closes #32145 from HyukjinKwon/SPARK-35045.

Lead-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Fix PhysicalAggregation to not transform a foldable expression.

### Why are the changes needed?

Transforming a foldable expression can break certain queries, as the added unit test shows.

### Does this PR introduce _any_ user-facing change?

Yes, it fixes undesirable errors caused by a returned TypeCheckFailure from places like RegExpReplace.checkInputDataTypes.

Closes #32113 from sigmod/foldable.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR contains:

- AnalysisHelper changes to allow the resolve function family to stop earlier without traversing the entire tree;

- Example changes in a few rules to support such pruning, e.g., ResolveRandomSeed, ResolveWindowFrame, ResolveWindowOrder, and ResolveNaturalAndUsingJoin.

### Why are the changes needed?

It's a framework-level change for reducing the query compilation time.

In particular, if we update existing analysis rules' call sites as per the examples in this PR, the analysis time can be reduced as described in the [doc](https://docs.google.com/document/d/1SEUhkbo8X-0cYAJFYFDQhxUnKJBz4lLn3u4xR2qfWqk).

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

It is tested by existing tests.

Closes #32135 from sigmod/resolver.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

This PR allows non-aggregated correlated scalar subqueries if the maximum number of output rows is less than 2. Correlated scalar subqueries need to be aggregated because they are going to be decorrelated and rewritten as LEFT OUTER joins. If the correlated scalar subquery produces more than one output row, the rewrite will yield wrong results.

But this constraint can be relaxed when the maximum number of output rows of the subquery plan is less than or equal to 1.
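To illustrate why more than one output row breaks the rewrite, here is a minimal Python sketch. It is not Spark code: the helper `left_outer_join` and the sample rows are made up for illustration, and it only mimics how a LEFT OUTER join emits one output row per match.

```python
def left_outer_join(outer_keys, sub_rows):
    """Join each outer key with the subquery rows that share the key.

    An outer key without a match gets None; every match yields one output row.
    """
    result = []
    for key in outer_keys:
        matches = [value for (k, value) in sub_rows if k == key]
        if not matches:
            result.append((key, None))
        else:
            result.extend((key, value) for value in matches)
    return result


outer = [1, 2]

# At most one subquery row per outer row: one output row per outer row.
single = left_outer_join(outer, [(1, "x")])

# Two subquery rows for key 1: the outer row is duplicated, which a scalar
# subquery must never cause.
multi = left_outer_join(outer, [(1, "x"), (1, "y")])

print(single)  # [(1, 'x'), (2, None)]
print(multi)   # [(1, 'x'), (1, 'y'), (2, None)]
```

When the subquery is known to return at most one row, the `multi` case cannot occur, so the aggregation requirement is not needed to keep the join safe.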

### Why are the changes needed?

To relax a constraint in CheckAnalysis for the correlated scalar subquery.

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

Unit tests

Closes #32111 from allisonwang-db/spark-28379-aggregated.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Support cardinality estimation for the Union, Sort, and Range operators.

1. **Union**: the number of output rows is the sum of the row counts of all children of the union. The min and max of each output column are the min and max of that column across the children.

Example:

Table 1

| a | b |
| --- | --- |
| 1 | 6 |
| 2 | 3 |

Table 2

| a | b |
| --- | --- |
| 1 | 3 |
| 4 | 1 |

The stats for `table1 union table2` would be: number of rows = 4, columnStats = (a: {min: 1, max: 4}, b: {min: 1, max: 6}).

2. **Sort**: row and column stats are the same as those of its child.

3. **Range**: the number of output rows and the distinct count equal the number of elements; min and max are calculated from the `start`, `end`, and `step` parameters.
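The Union and Range rules can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the Spark implementation: the `Stats` and `ColumnStat` classes are hypothetical, columns are matched by name for simplicity, and the Range output column is assumed to be called `id`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnStat:
    min_value: int
    max_value: int


@dataclass(frozen=True)
class Stats:
    row_count: int
    columns: dict  # column name -> ColumnStat


def union_stats(children):
    """Sum the row counts and widen each column's min/max across all children."""
    row_count = sum(child.row_count for child in children)
    columns = {}
    for name in children[0].columns:
        stats = [child.columns[name] for child in children]
        columns[name] = ColumnStat(
            min(s.min_value for s in stats),
            max(s.max_value for s in stats),
        )
    return Stats(row_count, columns)


def range_stats(start, end, step):
    """Row count and min/max of start, start + step, ... (end exclusive)."""
    values = range(start, end, step)
    if len(values) == 0:
        return Stats(0, {})
    first, last = values[0], values[-1]
    return Stats(len(values), {"id": ColumnStat(min(first, last), max(first, last))})


# The two tables from the example above.
table1 = Stats(2, {"a": ColumnStat(1, 2), "b": ColumnStat(3, 6)})
table2 = Stats(2, {"a": ColumnStat(1, 4), "b": ColumnStat(1, 3)})
combined = union_stats([table1, table2])
print(combined.row_count)     # 4
print(combined.columns["a"])  # ColumnStat(min_value=1, max_value=4)
print(combined.columns["b"])  # ColumnStat(min_value=1, max_value=6)
```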

### Why are the changes needed?

The change will enhance the feature https://issues.apache.org/jira/browse/SPARK-16026 and will help in other stats based optimizations.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

New unit tests added.

Closes #30334 from ayushi-agarwal/SPARK-33411.

Lead-authored-by: ayushi agarwal <ayaga@microsoft.com>

Co-authored-by: ayushi-agarwal <36420535+ayushi-agarwal@users.noreply.github.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

1. Extend SQL syntax rules to support a sign before the interval strings of ANSI year-month and day-time intervals.

2. Recognize `-` in `AstBuilder` and negate parsed intervals.

### Why are the changes needed?

To conform to the SQL standard which allows a sign before the string interval, see `"5.3 <literal>"`:

```
<interval literal> ::=
  INTERVAL [ <sign> ] <interval string> <interval qualifier>

<interval string> ::=
  <quote> <unquoted interval string> <quote>

<unquoted interval string> ::=
  [ <sign> ] { <year-month literal> | <day-time literal> }

<sign> ::=
    <plus sign>
  | <minus sign>
```
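As a rough illustration of the grammar, the Python sketch below parses a simplified year-month interval literal and applies both the optional sign before the interval string and the optional sign inside it. It is not the `AstBuilder` logic: the regular expression and the function `parse_year_month_interval` are assumptions made for this example.

```python
import re

# Simplified pattern: INTERVAL [sign] '[sign]years-months' YEAR TO MONTH
_PATTERN = re.compile(
    r"^INTERVAL\s*(?P<outer>[+-])?\s*'(?P<inner>[+-])?(?P<years>\d+)-(?P<months>\d+)'"
    r"\s+YEAR\s+TO\s+MONTH$",
    re.IGNORECASE,
)


def parse_year_month_interval(text):
    """Return the interval as a signed number of months."""
    match = _PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"Cannot parse interval literal: {text!r}")
    months = int(match["years"]) * 12 + int(match["months"])
    # Sign inside the quoted interval string.
    if match["inner"] == "-":
        months = -months
    # A minus sign before the interval string negates the parsed interval.
    if match["outer"] == "-":
        months = -months
    return months


print(parse_year_month_interval("INTERVAL '1-2' YEAR TO MONTH"))    # 14
print(parse_year_month_interval("INTERVAL -'1-2' YEAR TO MONTH"))   # -14
print(parse_year_month_interval("INTERVAL -'-1-2' YEAR TO MONTH"))  # 14
```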

### Does this PR introduce _any_ user-facing change?

It should not, because it only extends the supported interval syntax.

### How was this patch tested?

By running new tests in `interval.sql`:

```

$ build/sbt "sql/testOnly *SQLQueryTestSuite -- -z interval.sql"

```

Closes #32134 from MaxGekk/negative-parsed-intervals.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Disallow group by aliases under ANSI mode.

### Why are the changes needed?

As per the ANSI SQL standard section 7.12 <group by clause>:

>Each `grouping column reference` shall unambiguously reference a column of the table resulting from the `from clause`. A column referenced in a `group by clause` is a grouping column.

By forbidding it, we can avoid ambiguous SQL queries like:

```

SELECT col + 1 as col FROM t GROUP BY col

```

### Does this PR introduce _any_ user-facing change?

Yes, group by aliases are not allowed under ANSI mode.

### How was this patch tested?

Unit tests

Closes #32129 from gengliangwang/disallowGroupByAlias.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Spark SQL can't support script transform SQL with aggregationClause/windowClause/LateralView, so such Hive SQL can't be migrated directly to Spark SQL.

In this PR, we treat the query part of a script transform statement (everything except the transform part) as a separate query block and resolve it as ScriptTransformation's child, passing an `UnresolvedStar` as ScriptTransformation's input. Then, in the analyzer, we pass the child's output as ScriptTransformation's input. This lets us support all kinds of normal SELECT queries combined with script transformation.

For example, transform with aggregation:

```

SELECT TRANSFORM ( d2, max(d1) as max_d1, sum(d3))

USING 'cat' AS (a,b,c)

FROM script_trans

WHERE d1 <= 100

GROUP BY d2

HAVING max_d1 > 0

```

When we build the AST, we treat it as

```

SELECT TRANSFORM (*)

USING 'cat' AS (a,b,c)

FROM (

SELECT d2, max(d1) as max_d1, sum(d3)

FROM script_trans

WHERE d1 <= 100

GROUP BY d2

HAVING max_d1 > 0

) tmp

```

Then the Analyzer's `ResolveReferences` rule resolves `*` (`UnresolvedStar`), so the SQL behaves like

```

SELECT TRANSFORM ( d2, max(d1) as max_d1, sum(d3))

USING 'cat' AS (a,b,c)

FROM script_trans

WHERE d1 <= 100

GROUP BY d2

HAVING max_d1 > 0

```

For UTs, this PR adds many different SQL queries to check that all such kinds of SQL are supported and that each kind of expression works well, such as alias, case when, binary computation, etc.

### Why are the changes needed?

Support transform with aggregateClause/windowClause/LateralView etc., making SQL migration smoother.

### Does this PR introduce _any_ user-facing change?

Users can write transform with aggregateClause/windowClause/LateralView.

### How was this patch tested?

Added UT

Closes #29087 from AngersZhuuuu/SPARK-28227-NEW.

Lead-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Support GROUP BY with separate columns combined with CUBE/ROLLUP.

PostgreSQL supports:

```

select a, b, c, count(1) from t group by a, b, cube (a, b, c);

select a, b, c, count(1) from t group by a, b, rollup(a, b, c);

select a, b, c, count(1) from t group by cube(a, b), rollup (a, b, c);

select a, b, c, count(1) from t group by a, b, grouping sets((a, b), (a), ());

```

This PR does two things:

1. Support partial grouping analytics such as `group by a, cube(a, b)`

2. Support mixed grouping analytics such as `group by cube(a, b), rollup(b,c)`

*Partial Groupings*

Partial groupings means there are both `group_expression` and `CUBE|ROLLUP|GROUPING SETS` in the GROUP BY clause. For example:

`GROUP BY warehouse, CUBE(product, location)` is equivalent to

`GROUP BY GROUPING SETS((warehouse, product, location), (warehouse, product), (warehouse, location), (warehouse))`.

`GROUP BY warehouse, ROLLUP(product, location)` is equivalent to

`GROUP BY GROUPING SETS((warehouse, product, location), (warehouse, product), (warehouse))`.

`GROUP BY warehouse, GROUPING SETS((product, location), (product), ())` is equivalent to

`GROUP BY GROUPING SETS((warehouse, product, location), (warehouse, product), (warehouse))`.

*Concatenated Groupings*

Concatenated groupings offer a concise way to generate useful combinations of groupings. Groupings specified with concatenated groupings yield the cross-product of groupings from each grouping set. The cross-product operation enables even a small number of concatenated groupings to generate a large number of final groups. The concatenated groupings are specified simply by listing multiple `GROUPING SETS`, `CUBE`, and `ROLLUP`, and separating them with commas. For example:

`GROUP BY GROUPING SETS((warehouse), (product)), GROUPING SETS((location), (size))` is equivalent to

`GROUP BY GROUPING SETS((warehouse, location), (warehouse, size), (product, location), (product, size))`.

`GROUP BY CUBE((warehouse), (product)), ROLLUP((location), (size))` is equivalent to

`GROUP BY GROUPING SETS((warehouse, product), (warehouse), (product), ()), GROUPING SETS((location, size), (location), ())`,

which in turn is equivalent to

`GROUP BY GROUPING SETS((warehouse, product, location, size), (warehouse, product, location), (warehouse, product), (warehouse, location, size), (warehouse, location), (warehouse), (product, location, size), (product, location), (product), (location, size), (location), ())`.

`GROUP BY order, CUBE((warehouse), (product)), ROLLUP((location), (size))` is equivalent to

`GROUP BY order, GROUPING SETS((warehouse, product), (warehouse), (product), ()), GROUPING SETS((location, size), (location), ())`,

which in turn is equivalent to

`GROUP BY GROUPING SETS((order, warehouse, product, location, size), (order, warehouse, product, location), (order, warehouse, product), (order, warehouse, location, size), (order, warehouse, location), (order, warehouse), (order, product, location, size), (order, product, location), (order, product), (order, location, size), (order, location), (order))`.
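The expansions above follow mechanically from three rules: `CUBE` yields all subsets, `ROLLUP` yields all prefixes, and comma-separated groupings are combined by cross-product. The Python sketch below reproduces them. The helper names `cube`, `rollup`, and `concat_groupings` are made up for this illustration, and the sketch does not de-duplicate columns that appear in more than one grouping.

```python
from itertools import combinations, product


def cube(*columns):
    """All subsets of the columns, largest first, keeping column order."""
    return [
        subset
        for size in range(len(columns), -1, -1)
        for subset in combinations(columns, size)
    ]


def rollup(*columns):
    """All prefixes of the columns, from the full list down to the empty set."""
    return [columns[:size] for size in range(len(columns), -1, -1)]


def concat_groupings(*groupings):
    """Cross-product of several lists of grouping sets.

    A plain group expression such as `warehouse` is passed as [("warehouse",)].
    """
    result = []
    for combo in product(*groupings):
        result.append(tuple(col for grouping_set in combo for col in grouping_set))
    return result


# GROUP BY warehouse, CUBE(product, location)
partial = concat_groupings([("warehouse",)], cube("product", "location"))
for grouping_set in partial:
    print(grouping_set)

# GROUP BY CUBE(warehouse, product), ROLLUP(location, size)
mixed = concat_groupings(cube("warehouse", "product"), rollup("location", "size"))
print(len(mixed))  # 12
```

The cross-product is why a short GROUP BY clause can produce many grouping sets: a cube over 2 columns (4 sets) combined with a rollup over 2 columns (3 sets) already gives 12.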

### Why are the changes needed?

Support more flexible grouping analytics

### Does this PR introduce _any_ user-facing change?

Users can use SQL like

```

select a, b, c, agg_expr() from table group by a, cube(b, c)

```

### How was this patch tested?

Added UT

Closes #30144 from AngersZhuuuu/SPARK-33229.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR contains:

- TreeNode, QueryPlan, AnalysisHelper changes to allow the transform function family to stop earlier without traversing the entire tree;

- Example changes in a few rules to support such pruning, e.g., ReorderJoin and OptimizeIn.
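The pruning idea can be sketched in Python as follows. This is a simplified model, not the Catalyst code: the `Node` class, its `pattern_bits` attribute (a plain Python set rather than a bit set), and `transform_with_pruning` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    pattern: str
    children: list = field(default_factory=list)

    def __post_init__(self):
        # Each node caches the set of patterns found anywhere in its subtree.
        self.pattern_bits = {self.pattern}
        for child in self.children:
            self.pattern_bits |= child.pattern_bits


def transform_with_pruning(node, wanted, rule, visited):
    """Apply `rule` top-down, skipping subtrees that cannot contain `wanted`."""
    if wanted not in node.pattern_bits:
        return node  # Nothing to rewrite below this node: stop early.
    visited.append(node.pattern)
    new_node = rule(node)
    new_children = [
        transform_with_pruning(child, wanted, rule, visited)
        for child in new_node.children
    ]
    return Node(new_node.pattern, new_children)


def optimize_in(node):
    """Toy rule: rewrite an IN node into an INSET node."""
    return Node("INSET", node.children) if node.pattern == "IN" else node


plan = Node("PROJECT", [
    Node("FILTER", [Node("IN")]),
    Node("JOIN", [Node("SCAN"), Node("SCAN")]),
])

visited = []
result = transform_with_pruning(plan, "IN", optimize_in, visited)
print(visited)  # ['PROJECT', 'FILTER', 'IN']
```

The JOIN subtree is never visited because its cached pattern set does not contain `IN`, and the original JOIN instance is reused in the result.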

Here is a [design doc](https://docs.google.com/document/d/1SEUhkbo8X-0cYAJFYFDQhxUnKJBz4lLn3u4xR2qfWqk) that elaborates the ideas and benchmark numbers.

### Why are the changes needed?

It's a framework-level change for reducing the query compilation time.

In particular, if we update existing rules and transform call sites as per the examples in this PR, the analysis time and query optimization time can be reduced as described in this [doc](https://docs.google.com/document/d/1SEUhkbo8X-0cYAJFYFDQhxUnKJBz4lLn3u4xR2qfWqk).

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

It is tested by existing tests.

Closes #32060 from sigmod/bits.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Previously, when a GROUP BY ordinal referred to a select-list position that holds an aggregate function, the error was shown as:

```
-- !query
select a, b, sum(b) from data group by 3
-- !query schema
struct<>
-- !query output
org.apache.spark.sql.AnalysisException
aggregate functions are not allowed in GROUP BY, but found sum(data.b)
```

This is not clear enough, so this PR refactors the error message.

### Why are the changes needed?

refactor error message

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UT

Closes #32089 from AngersZhuuuu/SPARK-34986.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

1. Extend `IntervalUtils` methods: `toYearMonthIntervalString` and `toDayTimeIntervalString` to support formatting of year-month/day-time intervals in Hive style. The methods get a new parameter `style`, which can have two values: `HIVE_STYLE` and `ANSI_STYLE`.

2. Invoke `toYearMonthIntervalString` and `toDayTimeIntervalString` from the `Cast` expression with the `style` parameter set to `ANSI_STYLE`.

3. Invoke `toYearMonthIntervalString` and `toDayTimeIntervalString` from `HiveResult` with `style` set to `HIVE_STYLE`.
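A minimal Python sketch of the two day-time formats follows. It is not the `IntervalUtils` code: the function name and the handling of negative values and trailing zeros are assumptions, and only the two sample strings in the next section come from this description.

```python
MICROS_PER_SECOND = 1_000_000
MICROS_PER_MINUTE = 60 * MICROS_PER_SECOND
MICROS_PER_HOUR = 60 * MICROS_PER_MINUTE
MICROS_PER_DAY = 24 * MICROS_PER_HOUR


def to_day_time_interval_string(micros, style):
    """Format a day-time interval given in microseconds.

    style is "ANSI_STYLE" or "HIVE_STYLE" (names taken from the description).
    """
    sign = "-" if micros < 0 else ""
    rest = abs(micros)
    days, rest = divmod(rest, MICROS_PER_DAY)
    hours, rest = divmod(rest, MICROS_PER_HOUR)
    minutes, rest = divmod(rest, MICROS_PER_MINUTE)
    seconds, fraction = divmod(rest, MICROS_PER_SECOND)
    head = f"{sign}{days} {hours:02d}:{minutes:02d}:{seconds:02d}"
    if style == "HIVE_STYLE":
        # Hive prints the fraction with nanosecond precision (9 digits).
        return f"{head}.{fraction * 1000:09d}"
    if style == "ANSI_STYLE":
        # Assumption: drop trailing zeros of the fraction, and the dot if empty.
        frac = f"{fraction:06d}".rstrip("0")
        body = f"{head}.{frac}" if frac else head
        return f"INTERVAL '{body}' DAY TO SECOND"
    raise ValueError(f"Unknown style: {style}")


# 1 day, 1 hour, 2 minutes, 3 seconds and 1 microsecond.
micros = MICROS_PER_DAY + MICROS_PER_HOUR + 2 * MICROS_PER_MINUTE + 3 * MICROS_PER_SECOND + 1
print(to_day_time_interval_string(micros, "ANSI_STYLE"))
print(to_day_time_interval_string(micros, "HIVE_STYLE"))
```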

### Why are the changes needed?

The `spark-sql` shell formats its output in Hive style by using `HiveResult.hiveResultString()`. The changes are needed to match Hive behavior. For instance,

Hive:

```sql

0: jdbc:hive2://localhost:10000/default> select timestamp'2021-01-01 01:02:03.000001' - date'2020-12-31';

+-----------------------+

| _c0 |

+-----------------------+

| 1 01:02:03.000001000 |

+-----------------------+

```

Spark before the changes:

```sql

spark-sql> select timestamp'2021-01-01 01:02:03.000001' - date'2020-12-31';

INTERVAL '1 01:02:03.000001' DAY TO SECOND

```

Also this should unblock #32099 which enables *.sql tests in `SQLQueryTestSuite`.

### Does this PR introduce _any_ user-facing change?

Yes. After the changes:

```sql

spark-sql> select timestamp'2021-01-01 01:02:03.000001' - date'2020-12-31';

1 01:02:03.000001000

```

### How was this patch tested?

1. Added new tests to `IntervalUtilsSuite`:

```

$ build/sbt "test:testOnly *IntervalUtilsSuite"

```

2. Modified existing tests in `HiveResultSuite`:

```

$ build/sbt -Phive-2.3 -Phive-thriftserver "testOnly *HiveResultSuite"

```

3. By running cast tests:

```

$ build/sbt "testOnly *CastSuite*"

```

Closes #32120 from MaxGekk/ansi-intervals-hive-thrift-server.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This patch proposes a fix of nested column pruning for extracting case-insensitive struct field from array of struct.

### Why are the changes needed?

Under case-insensitive mode, nested column pruning rule cannot correctly push down extractor of a struct field of an array of struct, e.g.,

```scala

val query = spark.table("contacts").select("friends.First", "friends.MiDDle")

```

Error stack:

```

[info] java.lang.IllegalArgumentException: Field "First" does not exist.

[info] Available fields:

[info] at org.apache.spark.sql.types.StructType$$anonfun$apply$1.apply(StructType.scala:274)

[info] at org.apache.spark.sql.types.StructType$$anonfun$apply$1.apply(StructType.scala:274)

[info] at scala.collection.MapLike$class.getOrElse(MapLike.scala:128)

[info] at scala.collection.AbstractMap.getOrElse(Map.scala:59)

[info] at org.apache.spark.sql.types.StructType.apply(StructType.scala:273)

[info] at org.apache.spark.sql.execution.ProjectionOverSchema$$anonfun$getProjection$3.apply(ProjectionOverSchema.scala:44)

[info] at org.apache.spark.sql.execution.ProjectionOverSchema$$anonfun$getProjection$3.apply(ProjectionOverSchema.scala:41)

```

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test

Closes #32059 from viirya/fix-array-nested-pruning.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

One of the main performance bottlenecks in query compilation is overly-generic tree transformation methods, namely `mapChildren` and `withNewChildren` (defined in `TreeNode`). These methods have an overly-generic implementation to iterate over the children and rely on reflection to create new instances. We have observed that, especially for queries with large query plans, a significant amount of CPU cycles are wasted in these methods. In this PR we make these methods more efficient, by delegating the iteration and instantiation to concrete node types. The benchmarks show that we can expect significant performance improvement in total query compilation time in queries with large query plans (from 30-80%) and about 20% on average.

#### Problem detail

The `mapChildren` method in `TreeNode` is overly generic and costly. To be more specific, this method:

- iterates over all the fields of a node using Scala’s product iterator. While the iteration is not reflection-based, thanks to the Scala compiler generating code for `Product`, we create many anonymous functions and visit many nested structures (recursive calls).

The anonymous functions (presumably compiled to Java anonymous inner classes) also show up quite high on the list in the object allocation profiles, so we are putting unnecessary pressure on GC here.

- does a lot of comparisons. Basically for each element returned from the product iterator, we check if it is a child (contained in the list of children) and then transform it. We can avoid that by just iterating over children, but in the current implementation, we need to gather all the fields (only transform the children) so that we can instantiate the object using the reflection.

- creates objects using reflection, by delegating to the `makeCopy` method, which is several orders of magnitude slower than using the constructor.

#### Solution

The proposed solution in this PR is rather straightforward: we rewrite the `mapChildren` method using the `children` and `withNewChildren` methods. The default `withNewChildren` method suffers from the same problems as `mapChildren` and we need to make it more efficient by specializing it in concrete classes. Similar to how each concrete query plan node already defines its children, it should also define how they can be constructed given a new list of children. Actually, the implementation is quite simple in most cases and is a one-liner thanks to the copy method present in Scala case classes. Note that we cannot abstract over the copy method, it’s generated by the compiler for case classes if no other type higher in the hierarchy defines it. For most concrete nodes, the implementation of `withNewChildren` looks like this:

```

override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = copy(children = newChildren)

```

The current `withNewChildren` method has two properties that we should preserve:

- It returns the same instance if the provided children are the same as its children, i.e., it preserves referential equality.

- It copies tags and maintains the origin links when a new copy is created.

These properties are hard to enforce in the concrete node type implementation. Therefore, we propose a template method `withNewChildrenInternal` that should be rewritten by the concrete classes and let the `withNewChildren` method take care of referential equality and copying:

```
override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = {
  if (childrenFastEquals(children, newChildren)) {
    this
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildrenInternal(newChildren)
      res.copyTagsFrom(this)
      res
    }
  }
}
```

With the refactoring done in a previous PR (https://github.com/apache/spark/pull/31932) most tree node types fall in one of the categories of `Leaf`, `Unary`, `Binary` or `Ternary`. These traits have a more efficient implementation for `mapChildren` and define a more specialized version of `withNewChildrenInternal` that avoids creating unnecessary lists. For example, the `mapChildren` method in `UnaryLike` is defined as follows:

```
override final def mapChildren(f: T => T): T = {
  val newChild = f(child)
  if (newChild fastEquals child) {
    this.asInstanceOf[T]
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildInternal(newChild)
      res.copyTagsFrom(this.asInstanceOf[T])
      res
    }
  }
}
```

#### Results

With this PR, we have observed significant performance improvements in query compilation time, more specifically in the analysis and optimization phases. The table below shows the TPC-DS queries that had more than 25% speedup in compilation times. Biggest speedups are observed in queries with large query plans.

| Query | Speedup |
| ------------- | ------------- |
| q4 | 29% |
| q9 | 81% |
| q14a | 31% |
| q14b | 28% |
| q22 | 33% |
| q33 | 29% |
| q34 | 25% |
| q39 | 27% |
| q41 | 27% |
| q44 | 26% |
| q47 | 28% |
| q48 | 76% |
| q49 | 46% |
| q56 | 26% |
| q58 | 43% |
| q59 | 46% |
| q60 | 50% |
| q65 | 59% |
| q66 | 46% |
| q67 | 52% |
| q69 | 31% |
| q70 | 30% |
| q96 | 26% |
| q98 | 32% |

#### Binary incompatibility

Changing the `withNewChildren` in `TreeNode` breaks the binary compatibility of the code compiled against older versions of Spark because now it is expected that concrete `TreeNode` subclasses all implement the `withNewChildrenInternal` method. This is a problem, for example, when users write custom expressions. This change is the right choice, since it forces all expressions newly added to Catalyst to implement it in an efficient manner and will prevent future regressions.

Please note that we have not completely removed the old implementation; we renamed it to `legacyWithNewChildren`. This method will be removed in the future and for now helps the transition. There are expressions such as `UpdateFields` that have a complex way of defining children. Writing `withNewChildren` for them requires refactoring the expression. For now, these expressions use the old, slow method. A future PR will address these expressions.

### Does this PR introduce _any_ user-facing change?

This PR does not introduce user-facing changes but may break binary compatibility of the code compiled against older versions. See the binary compatibility section.

### How was this patch tested?

This PR is mainly a refactoring and passes existing tests.

Closes #32030 from dbaliafroozeh/ImprovedMapChildren.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

Implement toString() and sql() methods for TRY_CAST

### Why are the changes needed?

The new expression should have a different name from `CAST` in SQL/String representation.

### Does this PR introduce _any_ user-facing change?

Yes, in the result of `explain()`, users can see try_cast if the new expression is used.

### How was this patch tested?

Unit tests.

Closes #32098 from gengliangwang/tryCastString.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Change UpdateAction and InsertAction of MergeIntoTable to explicitly represent star.

### Why are the changes needed?

Currently, UpdateAction and InsertAction in the MergeIntoTable implicitly represent `update set *` and `insert *` with empty assignments. That means there is no way to differentiate between the representations of "update all columns" and "update no columns". For SQL MERGE queries, this inability does not matter because the SQL MERGE grammar that generated the MergeIntoTable plan does not allow "update no columns". However, other ways of generating the MergeIntoTable plan may not have that limitation, and may want to allow specifying "update no columns". For example, in the Delta Lake project we provide a type-safe Scala API for Merge, where it is perfectly valid to produce a Merge query with an update clause but no update assignments. Currently, we cannot use MergeIntoTable to represent this plan, thus complicating the generation, and resolution of merge query from scala API.

Side note: this also fixes another bug. When a merge plan had a star and no other expressions with unresolved attributes (e.g. all non-optional predicates are `literal(true)`), resolution was skipped and the star was not expanded. A test is added for that.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit tests

Closes #32067 from tdas/SPARK-34962-2.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, ordinals can't be used in CUBE/ROLLUP/GROUPING SETS.

This PR makes CUBE/ROLLUP/GROUPING SETS support GROUP BY ordinals.

### Why are the changes needed?

Make CUBE/ROLLUP/GROUPING SETS support GROUP BY ordinal.

PostgreSQL and Teradata support this use case.

### Does this PR introduce _any_ user-facing change?

Users can use ordinals in CUBE/ROLLUP/GROUPING SETS, such as

```

-- can use ordinal in CUBE

select a, b, count(1) from data group by cube(1, 2);

-- mixed cases: can use ordinal in CUBE

select a, b, count(1) from data group by cube(1, b);

-- can use ordinal with cube

select a, b, count(1) from data group by 1, 2 with cube;

-- can use ordinal in ROLLUP

select a, b, count(1) from data group by rollup(1, 2);

-- mixed cases: can use ordinal in ROLLUP

select a, b, count(1) from data group by rollup(1, b);

-- can use ordinal with rollup

select a, b, count(1) from data group by 1, 2 with rollup;

-- can use ordinal in GROUPING SETS

select a, b, count(1) from data group by grouping sets((1), (2), (1, 2));

-- mixed cases: can use ordinal in GROUPING SETS

select a, b, count(1) from data group by grouping sets((1), (b), (a, 2));

select a, b, count(1) from data group by a, 2 grouping sets((1), (b), (a, 2));

```

### How was this patch tested?

Added UT

Closes #30145 from AngersZhuuuu/SPARK-33233.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds two additional checks in `CheckAnalysis` for correlated scalar subqueries in Aggregate. It blocks the cases that Spark does not currently support based on the rewrite logic in `RewriteCorrelatedScalarSubquery`:

aff6c0febb/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/subquery.scala (L618-L624)

### Why are the changes needed?

It can be confusing to users when their queries pass the check analysis but cannot be executed. Also, the error messages are confusing:

#### Case 1: correlated scalar subquery in the grouping expressions but not in aggregate expressions

```sql

SELECT SUM(c2) FROM t t1 GROUP BY (SELECT SUM(c2) FROM t t2 WHERE t1.c1 = t2.c1)

```

We get this error:

```

java.lang.AssertionError: assertion failed: Expects 1 field, but got 2; something went wrong in analysis

```

because the correlated scalar subquery is not rewritten properly:

```scala

== Optimized Logical Plan ==

Aggregate [scalar-subquery#5 [(c1#6 = c1#6#93)]], [sum(c2#7) AS sum(c2)#11L]

: +- Aggregate [c1#6], [sum(c2#7) AS sum(c2)#15L, c1#6 AS c1#6#93]

: +- LocalRelation [c1#6, c2#7]

+- LocalRelation [c1#6, c2#7]

```

#### Case 2: correlated scalar subquery in the aggregate expressions but not in the grouping expressions

```sql

SELECT (SELECT SUM(c2) FROM t t2 WHERE t1.c1 = t2.c1), SUM(c2) FROM t t1 GROUP BY c1

```

We get this error:

```

java.lang.IllegalStateException: Couldn't find sum(c2)#69L in [c1#60,sum(c2#61)#64L]

```

because the transformed correlated scalar subquery output is not present in the grouping expression of the Aggregate:

```scala

== Optimized Logical Plan ==

Aggregate [c1#60], [sum(c2)#69L AS scalarsubquery(c1)#70L, sum(c2#61) AS sum(c2)#65L]

+- Project [c1#60, c2#61, sum(c2)#69L]

+- Join LeftOuter, (c1#60 = c1#60#95)

:- LocalRelation [c1#60, c2#61]

+- Aggregate [c1#60], [sum(c2#61) AS sum(c2)#69L, c1#60 AS c1#60#95]

+- LocalRelation [c1#60, c2#61]

```

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

New unit tests

Closes #32054 from allisonwang-db/spark-34946-scalar-subquery-agg.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Added new method `toDayTimeIntervalString()` to `IntervalUtils` which converts a day-time interval as a number of microseconds to a string in the form **"INTERVAL '[sign]days hours:minutes:secondsWithFraction' DAY TO SECOND"**.

2. Extended the `Cast` expression to support casting of `DayTimeIntervalType` to `StringType`.

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires support for such casting.

### Does this PR introduce _any_ user-facing change?

It should not, because the new day-time interval type has not been released yet.

### How was this patch tested?

Added new tests for casting:

```

$ build/sbt "testOnly *CastSuite*"

```

Closes #32070 from MaxGekk/cast-dt-interval-to-string.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The current trait name `GroupingSet` is ambiguous, since at the parser level a `grouping set` means a single set of grouping expressions.

Rename it to `BaseGroupingSets`, since cube/rollup is syntax sugar for grouping sets.

### Why are the changes needed?

Refactor class name

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed

Closes #32073 from AngersZhuuuu/SPARK-34976.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

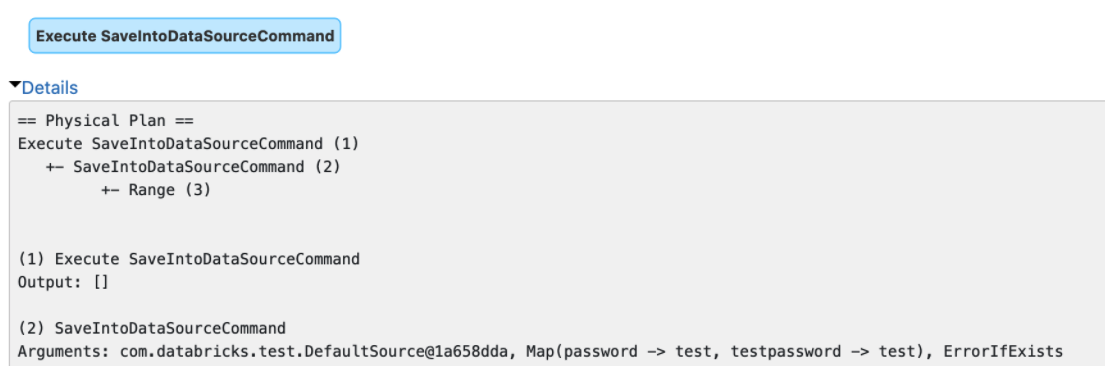

### What changes were proposed in this pull request?

The `explain()` method prints the arguments of tree nodes in logical/physical plans. The arguments could contain a map-type option that contains sensitive data.

We should redact map-type options in the output of `explain()`. Otherwise, we will see sensitive data in the explain output or the Spark UI.
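As a rough sketch of the idea, the Python snippet below masks the values of sensitive keys before a node's options are rendered. Everything in it is hypothetical: the key pattern, the replacement text, and the `redact_options` and `explain_node` helpers are assumptions for illustration and do not describe which keys or values Spark actually redacts.

```python
import re

# Hypothetical pattern for option names that are considered sensitive.
SENSITIVE_KEY = re.compile(r"secret|password|token", re.IGNORECASE)
REDACTED = "*********(redacted)"


def redact_options(options, pattern=SENSITIVE_KEY):
    """Return a copy of `options` with the values of sensitive keys masked."""
    return {
        key: REDACTED if pattern.search(key) else value
        for key, value in options.items()
    }


def explain_node(name, options):
    """Render a tree node with its map-type options, after redaction."""
    shown = redact_options(options)
    args = ", ".join(f"{key}={value}" for key, value in sorted(shown.items()))
    return f"{name} [{args}]"


line = explain_node("Relation", {"path": "/data/t", "password": "hunter2"})
print(line)  # Relation [password=*********(redacted), path=/data/t]
```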

### Why are the changes needed?

Data security.

### Does this PR introduce _any_ user-facing change?

Yes, redact the map-type options in the output of `explain()`

### How was this patch tested?

Unit tests

Closes #32066 from gengliangwang/redactOptions.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

## What changes were proposed in this pull request?

This adds a new API for catalog plugins that exposes functions to Spark. The API can list and load functions. This does not include create, delete, or alter operations.

- [Design Document](https://docs.google.com/document/d/1PLBieHIlxZjmoUB0ERF-VozCRJ0xw2j3qKvUNWpWA2U/edit?usp=sharing)

There are 3 types of functions defined:

* A `ScalarFunction` that produces a value for every call

* An `AggregateFunction` that produces a value after updates for a group of rows

Functions are loaded from the catalog by name as `UnboundFunction`. Once input arguments are determined, `bind` is called on the unbound function to get a `BoundFunction` implementation that is one of the types above. Binding can fail if the function doesn't support the input type. `BoundFunction` returns the result type produced by the function.
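The load-then-bind flow can be modeled in Python as below. This is only a conceptual sketch: the real API consists of Java interfaces, and the class names, method names, and the `strlen` function used here are hypothetical stand-ins.

```python
class BoundScalarFunction:
    """A function bound to concrete input types; it knows its result type."""

    def __init__(self, name, result_type, impl):
        self.name = name
        self.result_type = result_type
        self._impl = impl

    def produce_result(self, *args):
        return self._impl(*args)


class UnboundStrLen:
    """Hypothetical unbound function `strlen`, as loaded from a catalog."""

    name = "strlen"

    def bind(self, input_types):
        # Binding fails if the function does not support the input types.
        if list(input_types) != ["string"]:
            raise TypeError(f"strlen does not support input types {list(input_types)}")
        return BoundScalarFunction("strlen", "int", len)


class FunctionCatalog:
    """Lists and loads functions; no create, delete, or alter operations."""

    def __init__(self, functions):
        self._functions = {function.name: function for function in functions}

    def list_functions(self):
        return sorted(self._functions)

    def load_function(self, name):
        return self._functions[name]


catalog = FunctionCatalog([UnboundStrLen()])
unbound = catalog.load_function("strlen")
bound = unbound.bind(["string"])
print(bound.result_type)              # int
print(bound.produce_result("spark"))  # 5
```

Separating loading from binding lets the catalog return a function by name before the argument types are known, and lets the function reject unsupported types at analysis time rather than at execution time.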

## How was this patch tested?

This includes a test that demonstrates the new API.

Closes #24559 from rdblue/SPARK-27658-add-function-catalog-api.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a followup for https://github.com/apache/spark/pull/31932.

In this PR we:

- Introduce the `QuaternaryLike` trait for node types with 4 children.

- Specialize more node types

- Fix a number of style errors that were introduced in the original PR.
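
For readers unfamiliar with the pattern, a trait for nodes with exactly four children can be sketched as follows. This is a simplified model, not the trait from this PR: `Node`, `Leaf`, and `Quad` are hypothetical, and the real trait is defined against Spark's own tree node type.

```scala
object QuaternaryLikeSketch {
  // Minimal stand-in for a tree node; not Spark's TreeNode.
  trait Node { def children: Seq[Node] }

  // A node with exactly four children exposes them by position
  // and derives `children` from those four accessors.
  trait QuaternaryLike extends Node {
    def first: Node
    def second: Node
    def third: Node
    def fourth: Node
    final lazy val children: Seq[Node] = IndexedSeq(first, second, third, fourth)
  }

  case class Leaf(name: String) extends Node { val children: Seq[Node] = Nil }

  // Hypothetical four-argument node.
  case class Quad(first: Node, second: Node, third: Node, fourth: Node) extends QuaternaryLike
}
```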

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

This is a refactoring; it passes existing tests.

Closes #32065 from dbaliafroozeh/FollowupSPARK-34906.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR extends the current function registry and catalog to support table-valued functions by adding a table function registry. It also refactors `range` to be a built-in function in the table function registry.

### Why are the changes needed?

Currently, Spark resolves table-valued functions very differently from the other functions. This change is to make the behavior for table and non-table functions consistent. It also allows Spark to display information about built-in table-valued functions:

Before:

```scala
scala> sql("describe function range").show(false)
+--------------------------+
|function_desc             |
+--------------------------+
|Function: range not found.|
+--------------------------+
```

After:

```scala
Function: range
Class: org.apache.spark.sql.catalyst.plans.logical.Range
Usage:
range(start: Long, end: Long, step: Long, numPartitions: Int)
range(start: Long, end: Long, step: Long)
range(start: Long, end: Long)
range(end: Long)

// Extended
Function: range
Class: org.apache.spark.sql.catalyst.plans.logical.Range
Usage:
range(start: Long, end: Long, step: Long, numPartitions: Int)
range(start: Long, end: Long, step: Long)
range(start: Long, end: Long)
range(end: Long)
Extended Usage:
Examples:
> SELECT * FROM range(1);
+---+
| id|
+---+
|  0|
+---+
> SELECT * FROM range(0, 2);
+---+
|id |
+---+
|0  |
|1  |
+---+
> SELECT range(0, 4, 2);
+---+
|id |
+---+
|0  |
|2  |
+---+
Since: 2.0.0
```

### Does this PR introduce _any_ user-facing change?

Yes. Users will no longer be able to create a function named `range` in the default database:

Before:

```scala
scala> sql("create function range as 'range'")
res3: org.apache.spark.sql.DataFrame = []
```

After:

```
scala> sql("create function range as 'range'")
org.apache.spark.sql.catalyst.analysis.FunctionAlreadyExistsException: Function 'default.range' already exists in database 'default'
```

### How was this patch tested?

Unit test

Closes #31791 from allisonwang-db/spark-34678-table-func-registry.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

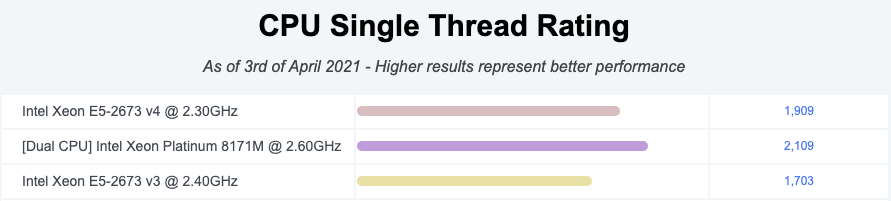

### What changes were proposed in this pull request?

Changed the cost comparison function of the CBO to use the ratios of row counts and sizes in bytes.

### Why are the changes needed?

In #30965 we changed the CBO cost comparison function so that it would be "symmetric": `A.betterThan(B)` now implies that `!B.betterThan(A)`.

With that change we caused a performance regression in some queries, TPCDS q19 for example.

The original cost comparison function used the ratios `relativeRows = A.rowCount / B.rowCount` and `relativeSize = A.size / B.size`. The changed function compared "absolute" cost values `costA = w*A.rowCount + (1-w)*A.size` and `costB = w*B.rowCount + (1-w)*B.size`.

Given the input from wzhfy we decided to go back to the relative values, because otherwise one (size) may overwhelm the other (rowCount). But this time we avoid adding up the ratios.

Originally `A.betterThan(B) => w*relativeRows + (1-w)*relativeSize < 1` was used. Besides being "non-symmetric", this can also let one ratio overwhelm the other.

For `w=0.5`, if the size (bytes) of `A` is at least 2x larger than that of `B`, then no matter how many times more rows the `B` plan has, `B` will always be considered better: `0.5*2 + 0.5*0.00000000000001 > 1`.

When working with ratios, it is better to multiply them.

The proposed cost comparison function is: `A.betterThan(B) => relativeRows^w * relativeSize^(1-w) < 1`.
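
To make the difference concrete, here is a small standalone sketch of the two ratio-based comparisons described above. It is not the code from this PR: the `Cost` case class and the method names are assumptions for illustration, and row counts and sizes are assumed to be non-zero.

```scala
object CostComparisonSketch {
  // Hypothetical plan cost: estimated row count and size in bytes (both assumed > 0).
  case class Cost(rowCount: BigInt, size: BigInt)

  private def ratios(a: Cost, b: Cost): (Double, Double) =
    (a.rowCount.toDouble / b.rowCount.toDouble, a.size.toDouble / b.size.toDouble)

  // Original (additive) comparison: weighted sum of the two ratios.
  def betterThanAdditive(a: Cost, b: Cost, w: Double): Boolean = {
    val (relativeRows, relativeSize) = ratios(a, b)
    w * relativeRows + (1 - w) * relativeSize < 1
  }

  // Proposed (multiplicative) comparison: weighted product of the two ratios.
  def betterThanMultiplicative(a: Cost, b: Cost, w: Double): Boolean = {
    val (relativeRows, relativeSize) = ratios(a, b)
    math.pow(relativeRows, w) * math.pow(relativeSize, 1 - w) < 1
  }
}
```

For example, with `w = 0.5`, `A = Cost(1, 2000)` and `B = Cost(1000000, 1000)`, the additive form does not consider `A` better even though it has a million times fewer rows, because the 2x size ratio alone pushes the sum past 1. The multiplicative form considers `A` better, and does not consider `B` better than `A`.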

### Does this PR introduce _any_ user-facing change?

Comparison of the changed TPCDS v1.4 query execution times at sf=10:

| query | absolute | multiplicative | change | additive | change |
| -- | -- | -- | -- | -- | -- |
| q12 | 145 | 137 | -5.52% | 141 | -2.76% |
| q13 | 264 | 271 | 2.65% | 271 | 2.65% |
| q17 | 4521 | 4243 | -6.15% | 4348 | -3.83% |
| q18 | 758 | 466 | -38.52% | 480 | -36.68% |
| q19 | 38503 | 2167 | -94.37% | 2176 | -94.35% |
| q20 | 119 | 120 | 0.84% | 126 | 5.88% |
| q24a | 16429 | 16838 | 2.49% | 17103 | 4.10% |
| q24b | 16592 | 16999 | 2.45% | 17268 | 4.07% |
| q25 | 3558 | 3556 | -0.06% | 3675 | 3.29% |
| q33 | 362 | 361 | -0.28% | 380 | 4.97% |
| q52 | 1020 | 1032 | 1.18% | 1052 | 3.14% |
| q55 | 927 | 938 | 1.19% | 961 | 3.67% |
| q72 | 24169 | 13377 | -44.65% | 24306 | 0.57% |
| q81 | 1285 | 1185 | -7.78% | 1168 | -9.11% |
| q91 | 324 | 336 | 3.70% | 337 | 4.01% |
| q98 | 126 | 129 | 2.38% | 131 | 3.97% |

All times are in ms, the change is compared to the situation in the master branch (absolute).

The proposed cost function (multiplicative) significantly improves the performance on q18, q19 and q72. The original cost function (additive) has similar improvements on q18 and q19. All other changes are within the error bars and I would ignore them, although q81 may also have improved.

### How was this patch tested?

PlanStabilitySuite

Closes #32014 from tanelk/SPARK-34922_cbo_better_cost_function.

Lead-authored-by: Tanel Kiis <tanel.kiis@gmail.com>

Co-authored-by: tanel.kiis@gmail.com <tanel.kiis@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

1. Added a new method `toYearMonthIntervalString()` to `IntervalUtils`, which converts a year-month interval, given as a number of months, to a string of the form **"INTERVAL '[sign]yearField-monthField' YEAR TO MONTH"**.

2. Extended the `Cast` expression to support casting of `YearMonthIntervalType` to `StringType`.
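
The conversion in item 1 can be sketched as below. This is an illustration of the output format stated above, not necessarily the implementation in this PR; the enclosing object name is hypothetical.

```scala
object YearMonthIntervalSketch {
  // Formats a year-month interval, given as a number of months.
  def toYearMonthIntervalString(months: Int): String = {
    // Widen to Long so that taking the absolute value of Int.MinValue cannot overflow.
    val absMonths = math.abs(months.toLong)
    val sign = if (months < 0) "-" else ""
    s"INTERVAL '$sign${absMonths / 12}-${absMonths % 12}' YEAR TO MONTH"
  }
}
```

With this sketch, 14 months formats as `INTERVAL '1-2' YEAR TO MONTH` and -25 months as `INTERVAL '-2-1' YEAR TO MONTH`.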

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires support for such casting.

### Does this PR introduce _any_ user-facing change?

It should not, because the new year-month interval type has not been released yet.

### How was this patch tested?

Added new tests for casting:

```
$ build/sbt "testOnly *CastSuite*"
```

Closes #32056 from MaxGekk/cast-ym-interval-to-string.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Changes the metadata propagation framework.

Previously, most `LogicalPlan`s propagated their `children`'s `metadataOutput`. This did not make sense in cases where the `LogicalPlan` did not even propagate its `children`'s `output`.

I set the metadata output for plans that do not propagate their `children`'s `output` to be `Nil`. Notably, `Project` and `View` no longer have metadata output.
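
The rule can be illustrated with a toy model of plan nodes. This is a sketch under simplifying assumptions, not Spark's classes: attributes are plain strings, and `Plan`, `Relation`, `Filter`, and `Project` here are hypothetical stand-ins.

```scala
object MetadataOutputSketch {
  // Toy plan nodes; attributes are plain strings in this model.
  trait Plan {
    def children: Seq[Plan]
    def output: Seq[String]
    // Default: pass the children's metadata columns through.
    def metadataOutput: Seq[String] = children.flatMap(_.metadataOutput)
  }

  case class Relation(output: Seq[String], metadata: Seq[String]) extends Plan {
    val children: Seq[Plan] = Nil
    override def metadataOutput: Seq[String] = metadata
  }

  // Filter keeps its child's output, so it keeps the metadata columns too.
  case class Filter(child: Plan) extends Plan {
    val children: Seq[Plan] = Seq(child)
    def output: Seq[String] = child.output
  }

  // Project defines its own output, so it hides the child's metadata columns.
  case class Project(output: Seq[String], child: Plan) extends Plan {
    val children: Seq[Plan] = Seq(child)
    override def metadataOutput: Seq[String] = Nil
  }
}
```

In this model, a `Filter` over a relation with metadata column `m` still exposes `m`, while a `Project` that selects only `a` exposes no metadata columns, so an outer reference to `m` cannot be resolved.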

### Why are the changes needed?

Previously, `SELECT m from (SELECT a from tb)` would output `m` if it were metadata. This did not make sense.

### Does this PR introduce _any_ user-facing change?

Yes. Now, `SELECT m from (SELECT a from tb)` will encounter an `AnalysisException`.

### How was this patch tested?

Added unit tests. I did not cover all cases, as they are fairly extensive. However, the new tests cover major cases (and an existing test already covers Join).

Closes #32017 from karenfeng/spark-34923.

Authored-by: Karen Feng <karen.feng@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`GROUP BY ... GROUPING SETS (...)` is a weird SQL syntax we copied from Hive. It's not in the SQL standard or any other mainstream database. This syntax requires users to repeat the expressions inside `GROUPING SETS (...)` after `GROUP BY`, and has weird null semantics if `GROUP BY` contains more expressions than `GROUPING SETS (...)`.

This PR deprecates this syntax:

1. Do not promote it in the documentation and only mention it as a Hive-compatible syntax.

2. Simplify the code to only keep it for Hive compatibility.

### Why are the changes needed?

Deprecate a weird grammar.

### Does this PR introduce _any_ user-facing change?

No breaking change, but it removes a check to simplify the code: `GROUP BY a GROUPING SETS(a, b)` failed before and forced users to also put `b` after `GROUP BY`. Now this works just like `GROUP BY GROUPING SETS(a, b)`.

### How was this patch tested?

existing tests

Closes #32022 from cloud-fan/followup.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

https://github.com/apache/spark/pull/32015 added a way to run benchmarks much more easily in the same GitHub Actions build. This PR updates the benchmark results using that approach.