### What changes were proposed in this pull request?

Close idle connections on the shuffle server side when an `IdleStateEvent` is triggered after the `spark.shuffle.io.connectionTimeout` or `spark.network.timeout` period elapses. This is based on the following investigation.

1. We found connections on our clusters building up continuously (> 10k for some nodes). Is that normal? We don't think so.

2. We looked into the connections on one node and found a lot of half-open connections (connections that existed on only one node).

3. We also found that those connections were very old (> 21 hours). (FYI, https://superuser.com/questions/565991/how-to-determine-the-socket-connection-up-time-on-linux)

4. Looking at the code, `TransportContext` registers an `IdleStateHandler`, which should fire an `IdleStateEvent` on timeout. We took a heap dump of the `YarnShuffleService` and checked the attributes of `IdleStateHandler`. It turned out that `firstAllIdleEvent` was already false for many `IdleStateHandler`s, so `IdleStateEvent`s had already been fired.

5. Finally, we realized the `IdleStateEvent` would not be handled, since `closeIdleConnections` is hardcoded to false for `YarnShuffleService`.
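The effect described in steps 4 and 5 can be illustrated with a minimal Python sketch. It is not the Netty or Spark code: `Connection` and `handle_idle_events` are hypothetical names, and the only point is that an idle event which fires but is ignored leaves the connection open.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    """Hypothetical stand-in for a server-side channel."""
    name: str
    last_activity: float
    open: bool = True


def handle_idle_events(connections, now, timeout_s, close_idle_connections):
    """Fire an idle event for each open connection idle longer than timeout_s.

    Returns the names of the connections that received an event. A connection
    is closed only when close_idle_connections is True; otherwise the event is
    effectively ignored and the connection stays open.
    """
    fired = []
    for conn in connections:
        if conn.open and now - conn.last_activity > timeout_s:
            fired.append(conn.name)
            if close_idle_connections:
                conn.open = False
    return fired


conns = [Connection("a", last_activity=0.0), Connection("b", last_activity=95.0)]
# Flag off: the event fires for "a", yet "a" stays open and keeps its resources.
print(handle_idle_events(conns, now=100.0, timeout_s=60.0, close_idle_connections=False))
print([c.open for c in conns])
```

With the flag set to `True`, the same call closes connection `a` and leaves the still-active `b` untouched.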

### Why are the changes needed?

Idle connections to `YarnShuffleService` are never closed, so they accumulate and take up memory and file descriptors.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27998 from manuzhang/spark-31219.

Authored-by: manuzhang <owenzhang1990@gmail.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to add a new benchmark `DateTimeRebaseBenchmark`, which measures the performance of rebasing dates/timestamps from/to the hybrid calendar (Julian + Gregorian) to/from the Proleptic Gregorian calendar:

1. In write, it saves dates and timestamps separately, before and after the year 1582, with and without rebasing.

2. In read, it loads the previously saved parquet files with the vectorized reader and with the regular reader.

Here is the summary of benchmarking:

- Saving timestamps is **~6 times slower**

- Loading timestamps w/ vectorized **off** is **~4 times slower**

- Loading timestamps w/ vectorized **on** is **~10 times slower**

### Why are the changes needed?

To know the impact of date-time rebasing introduced by #27915, #27953, #27807.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Run the `DateTimeRebaseBenchmark` benchmark using Amazon EC2:

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge |
| AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) |
| Java | OpenJDK8/11 |

Closes #28057 from MaxGekk/rebase-bechmark.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

add a common method `computeChiSq` and reuse it in both `chiSquaredDenseFeatures` and `chiSquaredSparseFeatures`

### Why are the changes needed?

to simplify ChiSq

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing testsuites

Closes #28045 from zhengruifeng/simplify_chisq.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

`DataFrameStatFunctions` now works correctly with fully qualified column names (`Table.Column` syntax) by properly resolving the name instead of relying on field names from the schema, notably:

* `approxQuantile`

* `freqItems`

* `cov`

* `corr`

(other functions from `DataFrameStatFunctions` already work correctly).

See code examples below.

### Why are the changes needed?

With the current implementation, some stat functions are impossible to use when joining datasets with similar column names.

### Does this PR introduce any user-facing change?

Yes. Before the change, the following code would fail with `AnalysisException`.

```scala

scala> val df1 = sc.parallelize(0 to 10).toDF("num").as("table1")

df1: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [num: int]

scala> val df2 = sc.parallelize(0 to 10).toDF("num").as("table2")

df2: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [num: int]

scala> val dfx = df2.crossJoin(df1)

dfx: org.apache.spark.sql.DataFrame = [num: int, num: int]

scala> dfx.stat.approxQuantile("table1.num", Array(0.1), 0.0)

res0: Array[Double] = Array(1.0)

scala> dfx.stat.corr("table1.num", "table2.num")

res1: Double = 1.0

scala> dfx.stat.cov("table1.num", "table2.num")

res2: Double = 11.0

scala> dfx.stat.freqItems(Array("table1.num", "table2.num"))

res3: org.apache.spark.sql.DataFrame = [table1.num_freqItems: array<int>, table2.num_freqItems: array<int>]

```

### How was this patch tested?

Corresponding unit tests are added to `DataFrameStatSuite.scala` (marked as "SPARK-30532").

Closes #27916 from kachayev/fix-spark-30532.

Authored-by: Oleksii Kachaiev <kachayev@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to update the doc for the `timeZone` option in JSON/CSV datasources and for the `tz` parameter of the `from_utc_timestamp()`/`to_utc_timestamp()` functions, and to restrict the format of the config's values to 2 forms:

1. Geographical regions, such as `America/Los_Angeles`.

2. Fixed offsets - a fully resolved offset from UTC. For example, `-08:00`.

### Why are the changes needed?

Other formats such as three-letter time zone IDs are ambiguous, and depend on the locale. For example, `CST` could be U.S. `Central Standard Time` or `China Standard Time`. Such formats have already been deprecated in JDK, see [Three-letter time zone IDs](https://docs.oracle.com/javase/8/docs/api/java/util/TimeZone.html).
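As a rough illustration of the two accepted forms, here is a small Python sketch. It is not Spark's parser: `classify_time_zone` and both regular expressions are hypothetical, they check the textual shape only, and they deliberately cover nothing beyond the two forms listed above.

```python
import re

# Region IDs such as America/Los_Angeles: at least two "/"-separated parts.
REGION_ID = re.compile(r"^[A-Za-z_]+(/[A-Za-z0-9_+\-]+)+$")
# Fixed offsets such as -08:00 (shape check only, no range validation).
FIXED_OFFSET = re.compile(r"^[+-]\d{2}:\d{2}$")


def classify_time_zone(value: str) -> str:
    """Classify a time zone string as 'region', 'offset' or 'unsupported'."""
    if REGION_ID.match(value):
        return "region"
    if FIXED_OFFSET.match(value):
        return "offset"
    return "unsupported"


for tz in ("America/Los_Angeles", "-08:00", "CST"):
    print(tz, classify_time_zone(tz))
```

Three-letter IDs such as `CST` fall into the `unsupported` bucket here, which mirrors the motivation above: the string alone does not say which zone is meant.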

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running `./dev/scalastyle`, and manual testing.

Closes #28051 from MaxGekk/doc-time-zone-option.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR (SPARK-31293) fixes wrong command examples, parameter descriptions and help message format for Amazon Kinesis integration with Spark Streaming.

### Why are the changes needed?

To improve usability of those commands.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

I ran the fixed commands manually and confirmed they worked as expected.

Closes #28063 from sekikn/SPARK-31293.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

```sql

scala> spark.sql(" select * from values(1), (2) t(key) where key in (select 1 as key where 1=0)").queryExecution

res15: org.apache.spark.sql.execution.QueryExecution =

== Parsed Logical Plan ==

'Project [*]

+- 'Filter 'key IN (list#39 [])

: +- Project [1 AS key#38]

: +- Filter (1 = 0)

: +- OneRowRelation

+- 'SubqueryAlias t

+- 'UnresolvedInlineTable [key], [List(1), List(2)]

== Analyzed Logical Plan ==

key: int

Project [key#40]

+- Filter key#40 IN (list#39 [])

: +- Project [1 AS key#38]

: +- Filter (1 = 0)

: +- OneRowRelation

+- SubqueryAlias t

+- LocalRelation [key#40]

== Optimized Logical Plan ==

Join LeftSemi, (key#40 = key#38)

:- LocalRelation [key#40]

+- LocalRelation <empty>, [key#38]

== Physical Plan ==

*(1) BroadcastHashJoin [key#40], [key#38], LeftSemi, BuildRight

:- *(1) LocalTableScan [key#40]

+- Br...

```

`LocalRelation <empty>` should be able to propagate after subqueries are lifted up to joins.
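The idea can be sketched in a few lines of Python. This is a toy model rather than the Catalyst rule: `LocalRelation`, `Join` and `propagate_empty` are hypothetical stand-ins, and only the left semi join case from the plan above is handled.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LocalRelation:
    rows: Tuple[tuple, ...]


@dataclass(frozen=True)
class Join:
    left: object
    right: object
    join_type: str  # e.g. "LeftSemi"


def propagate_empty(plan):
    """Collapse a left semi join to an empty relation when either side is empty.

    A left semi join keeps the left rows that have a match on the right, so an
    empty left or right side always produces an empty result.
    """
    if isinstance(plan, Join) and plan.join_type == "LeftSemi":
        left = propagate_empty(plan.left)
        right = propagate_empty(plan.right)
        if any(isinstance(side, LocalRelation) and not side.rows for side in (left, right)):
            return LocalRelation(rows=())
        return Join(left, right, plan.join_type)
    return plan


plan = Join(LocalRelation(((1,), (2,))), LocalRelation(()), "LeftSemi")
print(propagate_empty(plan))
```

Applied to the optimized plan shown earlier, such a rule would replace the whole join with an empty relation, so no broadcast join needs to be planned.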

### Why are the changes needed?

optimize query

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

add new tests

Closes #28043 from yaooqinn/SPARK-31280.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Fix a broken link and make the relevant docs reference the new doc.

### Why are the changes needed?

### Does this PR introduce any user-facing change?

Yes, make CACHE TABLE, UNCACHE TABLE, CLEAR CACHE, REFRESH TABLE link to the new doc

### How was this patch tested?

Manually build and check

Closes #28065 from huaxingao/spark-30363-follow-up.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Based on the discussion in the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance costs are relatively small.

- functions.toDegrees/toRadians

- functions.approxCountDistinct

- functions.monotonicallyIncreasingId

- Column.!==

- Dataset.explode

- Dataset.registerTempTable

- SQLContext.getOrCreate, setActive, clearActive, constructors

Below are the other APIs that were removed in the original PR [https://issues.apache.org/jira/browse/SPARK-25908] but are not added back in this PR:

- Remove some AccumulableInfo .apply() methods

- Remove non-label-specific multiclass precision/recall/fScore in favor of accuracy

- Remove unused Python StorageLevel constants

- Remove unused multiclass option in libsvm parsing

- Remove references to deprecated spark configs like spark.yarn.am.port

- Remove TaskContext.isRunningLocally

- Remove ShuffleMetrics.shuffle* methods

- Remove BaseReadWrite.context in favor of session

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

Added a new test suite for these APIs.

Author: gatorsmile <gatorsmile@gmail.com>

Author: yi.wu <yi.wu@databricks.com>

Closes #27821 from gatorsmile/addAPIBackV2.

### What changes were proposed in this pull request?

This PR proposes to make pandas function APIs (`groupby.(cogroup.)applyInPandas` and `mapInPandas`) ignore Python type hints.

### Why are the changes needed?

Python type hints are optional. They shouldn't affect APIs where pandas UDFs are not used.

Supporting other type hints in these APIs is also future work. At the very least, we shouldn't throw an exception at this moment.

### Does this PR introduce any user-facing change?

No, it's a master-only change.

```python
import pandas as pd

def pandas_plus_one(pdf: pd.DataFrame) -> pd.DataFrame:
    return pdf + 1

spark.range(10).groupby('id').applyInPandas(pandas_plus_one, schema="id long").show()
```

```python
import pandas as pd

def pandas_plus_one(left: pd.DataFrame, right: pd.DataFrame) -> pd.DataFrame:
    return left + 1

spark.range(10).groupby('id').cogroup(spark.range(10).groupby("id")).applyInPandas(pandas_plus_one, schema="id long").show()
```

```python
from typing import Iterator
import pandas as pd

def pandas_plus_one(iter: Iterator[pd.DataFrame]) -> Iterator[pd.DataFrame]:
    return map(lambda v: v + 1, iter)

spark.range(10).mapInPandas(pandas_plus_one, schema="id long").show()
```

**Before:**

Exception

**After:**

```
+---+
| id|
+---+
|  1|
|  2|
|  3|
|  4|
|  5|
|  6|
|  7|
|  8|
|  9|
| 10|
+---+
```

### How was this patch tested?

Closes #28052 from HyukjinKwon/SPARK-31287.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

SPARK-25387 avoids an NPE for bad CSV input, but when reading bad CSV input with `columnNameCorruptRecord` specified, `getCurrentInput` is called and it still throws an NPE.

### Why are the changes needed?

Bug fix.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Add a test.

Closes #28029 from wzhfy/corrupt_column_npe.

Authored-by: Zhenhua Wang <wzh_zju@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds "Pandas Function API" into the menu.

### Why are the changes needed?

To be consistent and to make the docs easier to navigate.

### Does this PR introduce any user-facing change?

No, master only.

### How was this patch tested?

Manually verified by `SKIP_API=1 jekyll build`.

Closes #28054 from HyukjinKwon/followup-spark-30722.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR replaces the method calls of `toSet.toSeq` with `distinct`.

### Why are the changes needed?

`toSet.toSeq` is intended to make the elements unique but is a bit verbose. Using `distinct` instead is easier to understand and improves readability.
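For readers less familiar with the Scala collections API, a Python analogue of the two idioms is sketched below. The `distinct` helper is a hypothetical stand-in for Scala's `Seq.distinct`, which keeps the first occurrence of each element in order, while a round trip through a set gives no ordering guarantee in general.

```python
def distinct(items):
    """Return the unique items, keeping the first occurrence of each in order."""
    return list(dict.fromkeys(items))


values = [3, 1, 3, 2, 1]
print(distinct(values))      # order of first occurrence is kept
print(sorted(set(values)))   # a set round trip needs an explicit sort for a stable order
```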

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Tested with the existing unit tests and found no problem.

Closes #28062 from sekikn/SPARK-31292.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR aims to copy a test resource file to a local file in `OrcTest` suite before reading it.

### Why are the changes needed?

SPARK-31238 and SPARK-31284 added test cases that access the resource file in the `sql/core` module from the `sql/hive` module. In the **Maven** test environment, this causes a failure.

```

- SPARK-31238: compatibility with Spark 2.4 in reading dates *** FAILED ***

java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI:

jar:file:/home/jenkins/workspace/spark-master-test-maven-hadoop-3.2-hive-2.3-jdk-11/sql/core/target/spark-sql_2.12-3.1.0-SNAPSHOT-tests.jar!/test-data/before_1582_date_v2_4.snappy.orc

```

```

- SPARK-31284: compatibility with Spark 2.4 in reading timestamps *** FAILED ***

java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI:

jar:file:/home/jenkins/workspace/spark-master-test-maven-hadoop-3.2-hive-2.3/sql/core/target/spark-sql_2.12-3.1.0-SNAPSHOT-tests.jar!/test-data/before_1582_ts_v2_4.snappy.orc

```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Pass the Jenkins with Maven.

Closes #28059 from dongjoon-hyun/SPARK-31238.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This patch proposes to prune unnecessary nested fields from Generate which has no Project on top of it.

### Why are the changes needed?

In the Optimizer, we can prune nested columns from Project(projectList, Generate). However, unnecessary columns could still be read in Generate if there is no Project on top of it. We should prune them too.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit test.

Closes #27517 from viirya/SPARK-29721-2.

Lead-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

The query plan of Spark SQL is a mutually recursive structure: QueryPlan -> Expression (PlanExpression) -> QueryPlan, but the transformations do not take this into account.

This PR refines the comments of `QueryPlan` to highlight this fact.
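The mutual recursion can be made concrete with a toy model in Python. The `Plan` and `Expr` classes below are hypothetical simplifications, not Catalyst classes. A traversal that follows only plan children never reaches a plan nested inside an expression.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Expr:
    name: str
    children: List["Expr"] = field(default_factory=list)
    subplan: Optional["Plan"] = None  # set for subquery-like expressions


@dataclass
class Plan:
    name: str
    children: List["Plan"] = field(default_factory=list)
    expressions: List[Expr] = field(default_factory=list)


def plan_names(plan):
    """Visit plan children only, as a plain tree traversal does."""
    names = [plan.name]
    for child in plan.children:
        names += plan_names(child)
    return names


def subplan_names(expr):
    """Collect the names of all plans nested inside an expression tree."""
    names = []
    if expr.subplan is not None:
        names += plan_names_with_subqueries(expr.subplan)
    for child in expr.children:
        names += subplan_names(child)
    return names


def plan_names_with_subqueries(plan):
    """Visit plan children and also the plans nested inside expressions."""
    names = [plan.name]
    for expr in plan.expressions:
        names += subplan_names(expr)
    for child in plan.children:
        names += plan_names_with_subqueries(child)
    return names


subquery = Plan("Project", children=[Plan("OneRowRelation")])
query = Plan("Filter", children=[Plan("LocalRelation")],
             expressions=[Expr("In", subplan=subquery)])
print(plan_names(query))
print(plan_names_with_subqueries(query))
```

The first call lists only `Filter` and `LocalRelation`; the second also reaches the `Project` and `OneRowRelation` nodes of the subquery.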

### Why are the changes needed?

Better documentation.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #28050 from cloud-fan/comment.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose 2 tests to check that rebasing of timestamps from/to the hybrid calendar (Julian + Gregorian) to/from Proleptic Gregorian calendar works correctly.

1. The test `compatibility with Spark 2.4 in reading timestamps` loads an ORC file saved by Spark 2.4.5 via:

```shell

$ export TZ="America/Los_Angeles"

```

```scala
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

scala> val df = Seq("1001-01-01 01:02:03.123456").toDF("tsS").select($"tsS".cast("timestamp").as("ts"))
df: org.apache.spark.sql.DataFrame = [ts: timestamp]

scala> df.write.orc("/Users/maxim/tmp/before_1582/2_4_5_ts_orc")

scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_ts_orc").show(false)
+--------------------------+
|ts                        |
+--------------------------+
|1001-01-01 01:02:03.123456|
+--------------------------+
```

2. The test `rebasing timestamps in write` is a round trip test. Since the previous test confirms correct rebasing of timestamps in read, this test should pass only if rebasing works correctly in write.

### Why are the changes needed?

To guarantee that rebasing works correctly for timestamps in ORC datasource.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running `OrcSourceSuite` for Hive 1.2 and 2.3 via the commands:

```

$ build/sbt -Phive-2.3 "test:testOnly *OrcSourceSuite"

```

and

```

$ build/sbt -Phive-1.2 "test:testOnly *OrcSourceSuite"

```

Closes #28047 from MaxGekk/rebase-ts-orc-test.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to change the types of `DateTimeTestUtils` values and functions by replacing `java.util.TimeZone` with `java.time.ZoneId`. In particular:

1. Type of `ALL_TIMEZONES` is changed to `Seq[ZoneId]`.

2. Remove `val outstandingTimezones: Seq[TimeZone]`.

3. Change the type of the time zone parameter in `withDefaultTimeZone` to `ZoneId`.

4. Modify affected test suites.

### Why are the changes needed?

Currently, Spark SQL's date-time expressions and functions have already been ported to the Java 8 time API, but tests still use the old time APIs. In particular, `DateTimeTestUtils` exposes functions that accept only `TimeZone` instances. This is inconvenient and CPU consuming, because `TimeZone` instances have to be converted to `ZoneId` instances via strings (zone ids).

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By the affected test suites executed by Jenkins builds.

Closes #28033 from MaxGekk/with-default-time-zone.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`OuterReference` is a `LeafExpression`, so its children is `Nil`, which makes its SQL representation always `outer()`. This makes our explain command output and error messages unclear when an `OuterReference` exists.

e.g.

```scala

org.apache.spark.sql.AnalysisException:

Aggregate/Window/Generate expressions are not valid in where clause of the query.

Expression in where clause: [(in.`value` = max(outer()))]

Invalid expressions: [max(outer())];;

```

This PR overrides its `sql` method to use its `prettyName` and the `sql` method of its single argument `e`.
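A minimal Python model of the before and after behaviour is sketched below. The class names are hypothetical, and the backtick quoting of the attribute is an assumption made for illustration, not the exact string Spark prints.

```python
class Attribute:
    """Hypothetical attribute reference with a simple SQL rendering."""

    def __init__(self, name):
        self.name = name

    def sql(self):
        return f"`{self.name}`"


class LeafExpression:
    """Leaf expressions have no children, so the default rendering has no arguments."""

    pretty_name = "expr"
    children = ()

    def sql(self):
        args = ", ".join(child.sql() for child in self.children)
        return f"{self.pretty_name}({args})"


class OuterReferenceBefore(LeafExpression):
    pretty_name = "outer"

    def __init__(self, e):
        self.e = e  # the wrapped expression is a field, not a child


class OuterReferenceAfter(OuterReferenceBefore):
    def sql(self):
        # Render the wrapped expression explicitly instead of the empty child list.
        return f"{self.pretty_name}({self.e.sql()})"


print(OuterReferenceBefore(Attribute("value")).sql())
print(OuterReferenceAfter(Attribute("value")).sql())
```

The first line prints `outer()`, which matches the unhelpful text in the error message above; the second includes the wrapped attribute.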

### Why are the changes needed?

improve err message

### Does this PR introduce any user-facing change?

yes, the err msg caused by OuterReference has changed

### How was this patch tested?

modified ut results

Closes #27985 from yaooqinn/SPARK-31225.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Based on the discussion in the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance costs are relatively small.

- HiveContext

- createExternalTable APIs

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

add a new test suite for createExternalTable APIs.

Closes #27815 from gatorsmile/addAPIsBack.

Lead-authored-by: gatorsmile <gatorsmile@gmail.com>

Co-authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Based on the discussion in the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance costs are relatively small.

- SQLContext.applySchema

- SQLContext.parquetFile

- SQLContext.jsonFile

- SQLContext.jsonRDD

- SQLContext.load

- SQLContext.jdbc

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

The existing tests.

Closes #27839 from gatorsmile/addAPIBackV3.

Lead-authored-by: gatorsmile <gatorsmile@gmail.com>

Co-authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

1. `DataSourceStrategy.scala` is extended to create `org.apache.spark.sql.sources.Filter` from nested expressions.

2. Translation from nested `org.apache.spark.sql.sources.Filter` to `org.apache.parquet.filter2.predicate.FilterPredicate` is implemented to support nested predicate pushdown for Parquet.

### Why are the changes needed?

Better performance for handling nested predicate pushdown.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New tests are added.

Closes #27728 from dbtsai/SPARK-17636.

Authored-by: DB Tsai <d_tsai@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add ANOVATest and FValueTest to PySpark

### Why are the changes needed?

Parity between Scala and Python.

### Does this PR introduce any user-facing change?

Yes. Python ANOVATest and FValueTest

### How was this patch tested?

doctest

Closes #28012 from huaxingao/stats-python.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

`HiveResult` performs some conversions for commands to be compatible with Hive output, e.g.:

```

// If it is a describe command for a Hive table, we want to have the output format be similar with Hive.

case ExecutedCommandExec(_: DescribeCommandBase) =>

...

// SHOW TABLES in Hive only output table names, while ours output database, table name, isTemp.

case command @ ExecutedCommandExec(s: ShowTablesCommand) if !s.isExtended =>

```

This conversion is needed for DatasourceV2 commands as well and this PR proposes to add the conversion for v2 commands `SHOW TABLES` and `DESCRIBE TABLE`.

### Why are the changes needed?

This is a bug where conversion is not applied to v2 commands.

### Does this PR introduce any user-facing change?

Yes, now the outputs for v2 commands `SHOW TABLES` and `DESCRIBE TABLE` are compatible with Hive output.

For example, with a table created as:

```

CREATE TABLE testcat.ns.tbl (id bigint COMMENT 'col1') USING foo

```

The output of `SHOW TABLES` has changed from

```

ns table

```

to

```

table

```

And the output of `DESCRIBE TABLE` has changed from

```

id bigint col1

# Partitioning

Not partitioned

```

to

```

id bigint col1

# Partitioning

Not partitioned

```

### How was this patch tested?

Added unit tests.

Closes #28004 from imback82/hive_result.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In Spark CLI, we create a Hive `CliSessionState` and it does not load the `hive-site.xml`. So the configurations in `hive-site.xml` will not take effect as they do in other Spark-Hive integration apps.

Also, the warehouse directory is not correctly picked. If the `default` database does not exist, the `CliSessionState` will create one the first time it talks to the metastore. The `Location` of the default DB will be neither the value of `spark.sql.warehouse.dir` nor the user-specified value of `hive.metastore.warehouse.dir`, but the default value of `hive.metastore.warehouse.dir`, which will always be `/user/hive/warehouse`.

This PR fixes the CliSuite failure with the hive-1.2 profile in https://github.com/apache/spark/pull/27933.

In https://github.com/apache/spark/pull/27933, we fixed the issue in JIRA by deciding the warehouse dir using all properties from the Spark conf and Hadoop conf, but properties from `--hiveconf` were not included; they are applied to the `CliSessionState` instance after it is initialized. When the key of this command-line option is `hive.metastore.warehouse.dir`, the actual warehouse dir is overridden. Because the logic in Hive for creating the non-existent default database changed, that test passed with `Hive 2.3.6` but failed with `1.2`. So in this PR, Hadoop/Hive configurations are ordered by:

`spark.hive.xxx > spark.hadoop.xxx > --hiveconf xxx > hive-site.xml` through `SharedState.loadHiveConfFile` before the session state starts.
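The precedence order above can be sketched as a layered merge in Python. This is only an illustration: `resolve_hive_confs` is a hypothetical helper, and the key mapping (stripping `spark.hadoop.` entirely, and stripping only `spark.` from `spark.hive.` keys) is an assumption about how the prefixed keys translate to Hadoop/Hive keys.

```python
def resolve_hive_confs(spark_conf, hiveconf_options, hive_site):
    """Merge configuration sources from lowest to highest precedence.

    Later updates overwrite earlier ones, so the order of the steps below
    encodes: spark.hive.xxx > spark.hadoop.xxx > --hiveconf xxx > hive-site.xml.
    """
    merged = dict(hive_site)          # lowest precedence: hive-site.xml
    merged.update(hiveconf_options)   # then --hiveconf command-line options
    for key, value in spark_conf.items():
        if key.startswith("spark.hadoop."):
            # Assumed mapping: spark.hadoop.foo -> foo
            merged[key[len("spark.hadoop."):]] = value
    for key, value in spark_conf.items():
        if key.startswith("spark.hive."):
            # Assumed mapping: spark.hive.foo -> hive.foo
            merged[key[len("spark."):]] = value
    return merged


conf = resolve_hive_confs(
    spark_conf={"spark.hadoop.hive.metastore.warehouse.dir": "/from-spark-hadoop"},
    hiveconf_options={"hive.metastore.warehouse.dir": "/from-hiveconf"},
    hive_site={"hive.metastore.warehouse.dir": "/from-hive-site"},
)
print(conf["hive.metastore.warehouse.dir"])
```

Here the `spark.hadoop.`-prefixed value wins over both `--hiveconf` and `hive-site.xml`; adding a `spark.hive.metastore.warehouse.dir` entry would override it in turn.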

### Why are the changes needed?

Bugfix for Spark SQL CLI to pick right confs

### Does this PR introduce any user-facing change?

Yes.

1. A non-existent default database will be created in the location specified by the user via `spark.sql.warehouse.dir` or `hive.metastore.warehouse.dir`, or in the default value of `spark.sql.warehouse.dir` if neither is specified.

2. Configurations from `hive-site.xml` will not override command-line options or the properties defined with the `spark.hadoop.` or `spark.hive.` prefix in the Spark conf.

### How was this patch tested?

add cli ut

Closes #27969 from yaooqinn/SPARK-31170-2.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When the `toPandas` API works on duplicate column names produced by operators like join, we see an error like:

```

ValueError: The truth value of a Series is ambiguous. Use a.empty, a.bool(), a.item(), a.any() or a.all().

```

This patch fixes the error in `toPandas` API.

### Why are the changes needed?

To make `toPandas` work on dataframe with duplicate column names.

### Does this PR introduce any user-facing change?

Yes. Previously, calling the `toPandas` API on a dataframe with duplicate column names would fail. After this patch, it produces the correct result.

### How was this patch tested?

Unit test.

Closes #28025 from viirya/SPARK-31186.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR is related to https://github.com/apache/spark/pull/27481.

If test case A uses `--IMPORT` to import test case B, which contains bracketed comments, the output can't display the bracketed comments in golden files well.

The content of `nested-comments.sql` is shown below:

```

-- This test case just used to test imported bracketed comments.

-- the first case of bracketed comment

--QUERY-DELIMITER-START

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first;

*/

SELECT 'selected content' AS first;

--QUERY-DELIMITER-END

```

The test case `comments.sql` imports `nested-comments.sql` as below:

`--IMPORT nested-comments.sql`

Before this PR, the output will be:

```

-- !query

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.catalyst.parser.ParseException

mismatched input '/' expecting {'(', 'ADD', 'ALTER', 'ANALYZE', 'CACHE', 'CLEAR', 'COMMENT', 'COMMIT', 'CREATE', 'DELETE', 'DESC', 'DESCRIBE', 'DFS', 'DROP',

'EXPLAIN', 'EXPORT', 'FROM', 'GRANT', 'IMPORT', 'INSERT', 'LIST', 'LOAD', 'LOCK', 'MAP', 'MERGE', 'MSCK', 'REDUCE', 'REFRESH', 'REPLACE', 'RESET', 'REVOKE', '

ROLLBACK', 'SELECT', 'SET', 'SHOW', 'START', 'TABLE', 'TRUNCATE', 'UNCACHE', 'UNLOCK', 'UPDATE', 'USE', 'VALUES', 'WITH'}(line 1, pos 0)

== SQL ==

/* This is the first example of bracketed comment.

^^^

SELECT 'ommented out content' AS first

-- !query

*/

SELECT 'selected content' AS first

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.catalyst.parser.ParseException

extraneous input '*/' expecting {'(', 'ADD', 'ALTER', 'ANALYZE', 'CACHE', 'CLEAR', 'COMMENT', 'COMMIT', 'CREATE', 'DELETE', 'DESC', 'DESCRIBE', 'DFS', 'DROP', 'EXPLAIN', 'EXPORT', 'FROM', 'GRANT', 'IMPORT', 'INSERT', 'LIST', 'LOAD', 'LOCK', 'MAP', 'MERGE', 'MSCK', 'REDUCE', 'REFRESH', 'REPLACE', 'RESET', 'REVOKE', 'ROLLBACK', 'SELECT', 'SET', 'SHOW', 'START', 'TABLE', 'TRUNCATE', 'UNCACHE', 'UNLOCK', 'UPDATE', 'USE', 'VALUES', 'WITH'}(line 1, pos 0)

== SQL ==

*/

^^^

SELECT 'selected content' AS first

```

After this PR, the output will be:

```

-- !query

/* This is the first example of bracketed comment.

SELECT 'ommented out content' AS first;

*/

SELECT 'selected content' AS first

-- !query schema

struct<first:string>

-- !query output

selected content

```

### Why are the changes needed?

Golden files can't display the bracketed comments in imported test cases.

### Does this PR introduce any user-facing change?

'No'.

### How was this patch tested?

New UT.

Closes #28018 from beliefer/fix-bug-tests-imported-bracketed-comments.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR (SPARK-31238) makes the following changes.

1. Modified the ORC Vectorized Reader, in particular, `OrcColumnVector` v1.2 and v2.3. After the changes, it uses `DateTimeUtils.rebaseJulianToGregorianDays()` added by https://github.com/apache/spark/pull/27915. The method rebases days from the hybrid calendar (Julian + Gregorian) to the Proleptic Gregorian calendar. It builds a local date in the original calendar, extracts the date fields `year`, `month` and `day` from the local date, and builds another local date in the target calendar. After that, it calculates days from the epoch `1970-01-01` for the resulting local date.

2. Introduced rebasing of dates while saving ORC files. In particular, I modified `OrcShimUtils.getDateWritable` v1.2 and v2.3 to return `DaysWritable` instead of Hive's `DateWritable`. The `DaysWritable` class was added by the PR https://github.com/apache/spark/pull/27890 (and fixed by https://github.com/apache/spark/pull/27962). I moved `DaysWritable` from `sql/hive` to `sql/core` to re-use it in the ORC datasource.
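The rebasing steps in item 1 can be sketched in plain Python. This is not the Spark implementation: the function names are hypothetical, the Julian calendar decoding uses the standard Julian Day Number arithmetic, and the sketch only covers years 1 through 1582 and Julian dates that also exist in the Proleptic Gregorian calendar (Python's `datetime.date` is proleptic Gregorian).

```python
from datetime import date

EPOCH_JDN = 2440588  # Julian Day Number of 1970-01-01
EPOCH_ORDINAL = date(1970, 1, 1).toordinal()
# Days since the epoch of 1582-10-15, the first Gregorian day of the hybrid calendar.
CUTOVER_DAYS = date(1582, 10, 15).toordinal() - EPOCH_ORDINAL


def julian_fields_from_days(days):
    """Decode days since the epoch into (year, month, day) of the Julian calendar."""
    c = days + EPOCH_JDN + 32082
    d = (4 * c + 3) // 1461
    e = c - (1461 * d) // 4
    m = (5 * e + 2) // 153
    day = e - (153 * m + 2) // 5 + 1
    month = m + 3 - 12 * (m // 10)
    year = d - 4800 + m // 10
    return year, month, day


def rebase_julian_to_gregorian_days(days):
    """Keep the same (year, month, day) fields while switching calendars."""
    if days >= CUTOVER_DAYS:
        return days  # both calendars agree on and after the cutover
    year, month, day = julian_fields_from_days(days)
    # Build the same local date in the proleptic Gregorian calendar.
    return date(year, month, day).toordinal() - EPOCH_ORDINAL


hybrid_days = -281230  # 1200-01-01 in the hybrid (Julian) calendar
print(julian_fields_from_days(hybrid_days))
print(date.fromordinal(EPOCH_ORDINAL + hybrid_days))          # read without rebasing
print(date.fromordinal(EPOCH_ORDINAL + rebase_julian_to_gregorian_days(hybrid_days)))
```

Reading the stored day count without rebasing yields `1200-01-08`, the same 7-day shift reported in this PR, while the rebased count maps back to `1200-01-01`.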

### Why are the changes needed?

For backward compatibility with Spark 2.4 and earlier versions. The changes allow users to read dates/timestamps saved by previous versions and get the same result.

### Does this PR introduce any user-facing change?

Yes. Before the changes, loading the date `1200-01-01` saved by Spark 2.4.5 returns the following:

```scala
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-08|
+----------+
```

After the changes:

```scala
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-01|
+----------+
```

### How was this patch tested?

- By running `OrcSourceSuite` and `HiveOrcSourceSuite`.

- Add new test `SPARK-31238: compatibility with Spark 2.4 in reading dates` to `OrcSuite` which reads an ORC file saved by Spark 2.4.5 via the commands:

```shell

$ export TZ="America/Los_Angeles"

```

```scala
scala> sql("select cast('1200-01-01' as date) dt").write.mode("overwrite").orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc")
scala> spark.read.orc("/Users/maxim/tmp/before_1582/2_4_5_date_orc").show(false)
+----------+
|dt        |
+----------+
|1200-01-01|
+----------+
```

- Add round trip test `SPARK-31238: rebasing dates in write`. Since the test `SPARK-31238: compatibility with Spark 2.4 in reading dates` confirms rebasing in read, a round trip is enough to check rebasing in write.

Closes #28016 from MaxGekk/rebase-date-orc.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Fix the incorrect log of `curRequestSize`.

### Why are the changes needed?

In batch mode, `curRequestSize` can be the total size of several block groups. Each group should log its own request size instead of the total size.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

It only affects logging.

Closes #28028 from Ngone51/fix_curRequestSize.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

These are the core scheduler changes to support stage-level scheduling.

The main changes here include modifications to the DAGScheduler to look at the ResourceProfiles associated with an RDD and have those applied inside the scheduler.

Currently, if multiple RDDs in a stage have conflicting ResourceProfiles, we throw an error. Logic to allow this will happen in SPARK-29153. I added the interfaces to RDD to add and get the ResourceProfile so that I could add unit tests for the scheduler. These are marked as private for now until we finish the feature and will be exposed in SPARK-29150. If you think this is confusing, I can remove those and remove the tests and add them back later.

I modified the task scheduler to make sure it only schedules on executors that exactly match the resource profile. It then checks those executors to make sure the current resources meet the task needs before assigning it. Here I also changed the way we do the custom resource assignment.

Other changes here include having the cpus per task passed around so that we can properly account for them. Previously we just used the one global config, but now it can change based on the ResourceProfile.

I removed the exceptions that require the cores to be the limiting resource. With this change, all the places I found that used executor cores / task cpus as slots have been updated to use the ResourceProfile logic and look to see what resource is limiting.
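To illustrate what "look to see what resource is limiting" can mean, here is a minimal sketch that computes task slots per executor as the minimum over all resources, instead of assuming cores are always the limit. The object, method and resource names are hypothetical and are not the ResourceProfile API from this PR.

```scala
// Hypothetical helper, for illustration only.
object SlotsSketch {
  // Both maps are keyed by resource name (for example "cpus" or "gpu").
  // executor: amount of each resource on one executor.
  // task: amount of each resource that one task needs (must be positive).
  // Returns the limiting resource and the number of task slots it allows.
  def limitingResource(executor: Map[String, Int], task: Map[String, Int]): (String, Int) = {
    require(task.nonEmpty && task.values.forall(_ > 0), "task requirements must be positive")
    task
      .map { case (name, perTask) => name -> executor.getOrElse(name, 0) / perTask }
      .minBy { case (_, slots) => slots }
  }
}
```

With 8 cores and 2 GPUs per executor and tasks that need 1 core and 1 GPU each, the GPU is the limiting resource and the executor has 2 slots, not 8.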

### Why are the changes needed?

This is part of the stage-level scheduling feature.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

unit tests and lots of manual testing

Closes #27773 from tgravescs/SPARK-29154.

Lead-authored-by: Thomas Graves <tgraves@nvidia.com>

Co-authored-by: Thomas Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Skew join handling comes with an overhead: we need to read some data repeatedly. We should treat a partition as skewed if it's large enough so that it's beneficial to do so.

Currently the size threshold is the advisory partition size, which is 64 MB by default. This is not large enough for the skewed partition size threshold.

This PR adds a new config for the threshold and sets its default value to 256 MB.
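The effect of raising the threshold can be sketched as below. The check against a multiple of the median partition size is an assumption about how a skew test might be shaped, and all names are hypothetical; only the 64 MB and 256 MB values come from the description above.

```scala
// Hypothetical helper, for illustration only.
object SkewSketch {
  val MB: Long = 1024L * 1024

  // A partition counts as skewed only if it is much larger than the median
  // partition (assumed rule) AND larger than the absolute size threshold.
  def isSkewed(sizeBytes: Long, medianBytes: Long, factor: Int, thresholdBytes: Long): Boolean =
    sizeBytes > medianBytes * factor && sizeBytes > thresholdBytes
}
```

A 100 MB partition next to a 10 MB median would be split under a 64 MB threshold, paying the repeated-read overhead, but is left alone under the 256 MB default.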

### Why are the changes needed?

Avoid skew join handling that may introduce a perf regression.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27967 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Make `mergeSparkConf` in `WithTestConf` respect `spark.sql.legacy.sessionInitWithConfigDefaults`.

### Why are the changes needed?

Without the fix, a conf specified by `withSQLConf` can be reverted to its original value in a cloned SparkSession. For example, the test below fails without the fix:

```scala
withSQLConf(SQLConf.CODEGEN_FALLBACK.key -> "true") {
  val cloned = spark.cloneSession()
  SparkSession.setActiveSession(cloned)
  assert(SQLConf.get.getConf(SQLConf.CODEGEN_FALLBACK) === true)
}
```

So we should fix it just as #24540 did before.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added tests.

Closes #28014 from Ngone51/sparksession_clone.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to define `timestampFormatter`, `dateFormatter` and `zoneId` as methods of the `HiveResult` object. This should guarantee that the formatters pick up the current session time zone in `toHiveString()`.

### Why are the changes needed?

Currently, date/timestamp formatters in `HiveResult.toHiveString` are initialized once on instantiation of the `HiveResult` object, and pick up the session time zone at that moment. If the session time zone is changed later, the formatters still use the previous one.
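The difference between a formatter stored in a `val` and one returned by a `def` can be shown with a small standalone sketch. `FakeSession`, `EagerFormatters` and `LazyFormatters` are hypothetical stand-ins, not the `HiveResult` code; the mutable `timeZone` field plays the role of the session time zone config.

```scala
import java.time.{Instant, ZoneId}
import java.time.format.DateTimeFormatter

// Hypothetical stand-in for the session time zone setting.
object FakeSession {
  @volatile var timeZone: ZoneId = ZoneId.of("UTC")
}

// Before: the formatter is a val, so the zone is captured once, when the object is initialized.
object EagerFormatters {
  val timestampFormatter: DateTimeFormatter =
    DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss").withZone(FakeSession.timeZone)
}

// After: the formatter is a method, so every call reads the current zone.
object LazyFormatters {
  def timestampFormatter: DateTimeFormatter =
    DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss").withZone(FakeSession.timeZone)
}
```

If `EagerFormatters` is first used while the zone is `UTC` and the zone is then switched to `+09:00`, it keeps formatting in `UTC`, while `LazyFormatters` follows the change. The trade-off is that the method builds a new formatter on every call.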

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

By existing test suites, in particular, by `HiveResultSuite`.

Closes #28024 from MaxGekk/hive-result-datetime-formatters.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR prevents the non-nullable null type from being coerced to a nullable type in complex types.

Non-nullable null-typed fields in a struct, elements in an array and entries in a map can only mean an empty struct, array or map. Since they are empty, there is no need to force nullability when we find common types.

This PR also reverts and supersedes d7b97a1d0d.

### Why are the changes needed?

To make type coercion coherent and consistent. Currently, we correctly keep the nullability even between non-nullable fields:

```scala

import org.apache.spark.sql.types._

import org.apache.spark.sql.functions._

spark.range(1).select(array(lit(1)).cast(ArrayType(IntegerType, false))).printSchema()

spark.range(1).select(array(lit(1)).cast(ArrayType(DoubleType, false))).printSchema()

```

```scala

spark.range(1).selectExpr("concat(array(1), array(1)) as arr").printSchema()

```

### Does this PR introduce any user-facing change?

Yes.

```scala

import org.apache.spark.sql.types._

import org.apache.spark.sql.functions._

spark.range(1).select(array().cast(ArrayType(IntegerType, false))).printSchema()

```

```scala

spark.range(1).selectExpr("concat(array(), array(1)) as arr").printSchema()

```

**Before:**

```

org.apache.spark.sql.AnalysisException: cannot resolve 'array()' due to data type mismatch: cannot cast array<null> to array<int>;;

'Project [cast(array() as array<int>) AS array()#68]

+- Range (0, 1, step=1, splits=Some(12))

at org.apache.spark.sql.catalyst.analysis.package$AnalysisErrorAt.failAnalysis(package.scala:42)

at org.apache.spark.sql.catalyst.analysis.CheckAnalysis$$anonfun$$nestedInanonfun$checkAnalysis$1$2.applyOrElse(CheckAnalysis.scala:149)

at org.apache.spark.sql.catalyst.analysis.CheckAnalysis$$anonfun$$nestedInanonfun$checkAnalysis$1$2.applyOrElse(CheckAnalysis.scala:140)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformUp$2(TreeNode.scala:333)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:72)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:333)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformUp$1(TreeNode.scala:330)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$mapChildren$1(TreeNode.scala:399)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:237)

```

```
root
 |-- arr: array (nullable = false)
 |    |-- element: integer (containsNull = true)
```

**After:**

```
root
 |-- array(): array (nullable = false)
 |    |-- element: integer (containsNull = false)
```

```
root
 |-- arr: array (nullable = false)
 |    |-- element: integer (containsNull = false)
```

### How was this patch tested?

Unit tests were added, and the change was tested manually.

Closes #27991 from HyukjinKwon/SPARK-31227.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, `ResetCommand` clears all configurations, including SQL configs, static SQL configs and SparkContext-level configs.

For example:

```sql

spark-sql> set xyz=abc;

xyz abc

spark-sql> set;

spark.app.id local-1585055396930

spark.app.name SparkSQL::10.242.189.214

spark.driver.host 10.242.189.214

spark.driver.port 65094

spark.executor.id driver

spark.jars

spark.master local[*]

spark.sql.catalogImplementation hive

spark.sql.hive.version 1.2.1

spark.submit.deployMode client

xyz abc

spark-sql> reset;

spark-sql> set;

spark-sql> set spark.sql.hive.version;

spark.sql.hive.version 1.2.1

spark-sql> set spark.app.id;

spark.app.id <undefined>

```

In this PR, we restore the Spark confs to `RuntimeConfig` after it is cleared.

### Why are the changes needed?

The reset command goes too far and also wipes configs that are static.

### Does this PR introduce any user-facing change?

Yes, `ResetCommand` no longer changes static configs.

### How was this patch tested?

Added a unit test.

Closes #28003 from yaooqinn/SPARK-31234.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

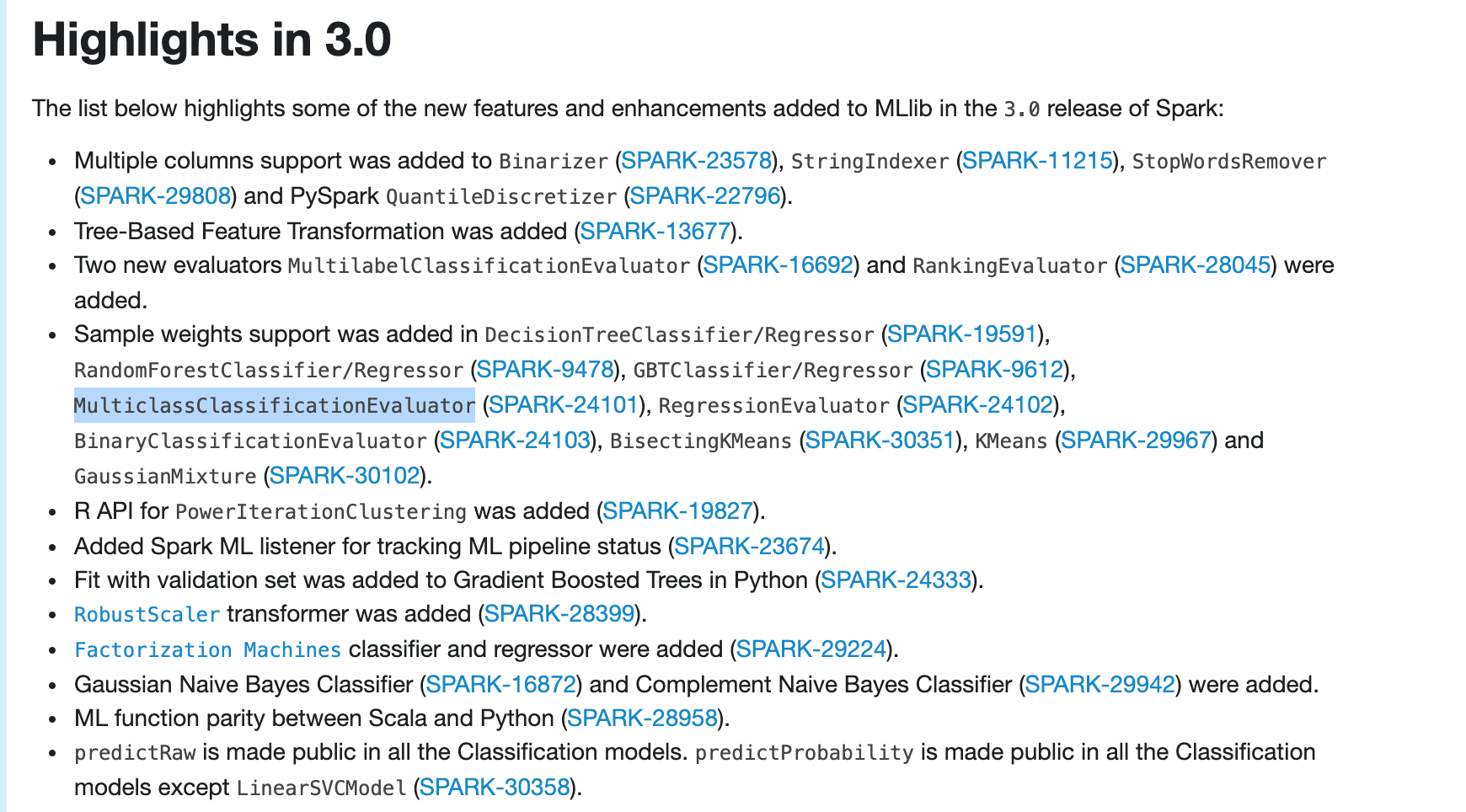

### What changes were proposed in this pull request?

Update ml-guide to include `MulticlassClassificationEvaluator` weight support in highlights.

### Why are the changes needed?

`MulticlassClassificationEvaluator` weight support is very important, so it should be included in highlights.

### Does this PR introduce any user-facing change?

Yes

after:

### How was this patch tested?

Manually built and checked.

Closes #28031 from huaxingao/highlights-followup.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

https://issues.apache.org/jira/browse/SPARK-31223

Set the seed in `np.random` when generating test data.

### Why are the changes needed?

So that the same set of test data can be regenerated later.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #27994 from huaxingao/spark-31223.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

In the PR, I propose to add a few `ZoneId` constant values to the `DateTimeTestUtils` object, and reuse the constants in tests. The proposed constants are:

- PST = -08:00

- UTC = +00:00

- CEST = +02:00

- CET = +01:00

- JST = +09:00

- MIT = -09:30

- LA = America/Los_Angeles

### Why are the changes needed?

All proposed constant values (except `LA`) are initialized by zone offsets according to their definitions. This avoids:

- Use of 3-letter time zones that have already been deprecated in the JDK, see _Three-letter time zone IDs_ in https://docs.oracle.com/javase/8/docs/api/java/util/TimeZone.html

- Incorrect mapping of 3-letter time zones to zone offsets, see SPARK-31237. For example, `PST` is mapped to `America/Los_Angeles` instead of the `-08:00` zone offset.

This should also improve the stability and maintainability of test suites.
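A minimal sketch of such constants, built directly on `java.time.ZoneOffset`, is shown below. The object name is hypothetical and the way the constants are constructed here is an assumption; the actual `DateTimeTestUtils` code may create them differently.

```scala
import java.time.{Instant, ZoneId, ZoneOffset}

// Hypothetical object, for illustration only.
object ZoneConstantsSketch {
  val PST: ZoneId = ZoneOffset.ofHours(-8)
  val UTC: ZoneId = ZoneOffset.UTC
  val CEST: ZoneId = ZoneOffset.ofHours(2)
  val CET: ZoneId = ZoneOffset.ofHours(1)
  val JST: ZoneId = ZoneOffset.ofHours(9)
  val MIT: ZoneId = ZoneOffset.ofHoursMinutes(-9, -30)
  // The only region-based constant: its offset depends on daylight saving time.
  val LA: ZoneId = ZoneId.of("America/Los_Angeles")

  // What the JDK short-ID table resolves "PST" to: a region, not a fixed offset.
  val legacyPst: ZoneId = ZoneId.of("PST", ZoneId.SHORT_IDS)

  // Offset of a zone at a given instant.
  def offsetAt(zone: ZoneId, instant: Instant): ZoneOffset = zone.getRules.getOffset(instant)
}
```

With these definitions, `PST` is always `-08:00`, while the short ID `PST` resolves to `America/Los_Angeles`, whose offset is `-07:00` in July. A test that relies on a fixed eight-hour shift would therefore give different results depending on the date it uses.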

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running affected test suites.

Closes #28001 from MaxGekk/replace-pst.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Fix the regression caused by #22173.

The original PR changed the handling of `ChunkFetchRequest` from async to sync, which caused the shuffle benchmark regression. This PR fixes the regression by going back to the async mode and reusing the config `spark.shuffle.server.chunkFetchHandlerThreadsPercent`.

When the user sets the config, `ChunkFetchRequest` is processed in a separate event loop group; otherwise, the code path is exactly the same as before.

### Why are the changes needed?

Fix the shuffle performance regression described in https://github.com/apache/spark/pull/22173#issuecomment-572459561

### Does this PR introduce any user-facing change?

Yes, this PR disables the separate event loop for FetchRequest by default.

### How was this patch tested?

Existing UT.

Closes #27665 from xuanyuanking/SPARK-24355-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The link for `partition discovery` is malformed, because for releases the full URL contains `/docs/<version>/`.

### Why are the changes needed?

To fix the doc.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Verified locally with `SKIP_SCALADOC=1 SKIP_RDOC=1 SKIP_SQLDOC=1 jekyll serve`.

Closes #28017 from yaooqinn/doc.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR is a followup of apache/spark#27995. Rather than pinning the setuptools version, it sets an upper bound so that Python 3.5 with branch-2.4 tests can pass too.

## Why are the changes needed?

To make the CI build stable.

## Does this PR introduce any user-facing change?

No, dev-only change.

## How was this patch tested?

Jenkins will test.

Closes #28005 from HyukjinKwon/investigate-pip-packaging-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add the avro dependency in SparkBuild.

### Why are the changes needed?

To fix sbt unidoc failures like https://github.com/apache/spark/pull/28017#issuecomment-603828597

```scala

[warn] Multiple main classes detected. Run 'show discoveredMainClasses' to see the list

[warn] Multiple main classes detected. Run 'show discoveredMainClasses' to see the list

[info] Main Scala API documentation to /home/jenkins/workspace/SparkPullRequestBuilder6/target/scala-2.12/unidoc...

[info] Main Java API documentation to /home/jenkins/workspace/SparkPullRequestBuilder6/target/javaunidoc...

[error] /home/jenkins/workspace/SparkPullRequestBuilder6/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala:123: value createDatumWriter is not a member of org.apache.avro.generic.GenericData

[error] writerCache.getOrElseUpdate(schema, GenericData.get.createDatumWriter(schema))

[error] ^

[info] No documentation generated with unsuccessful compiler run

[error] one error found

```

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Passed Jenkins, and verified manually with `sbt dependencyTree`:

```scala

kentyaohulk ~/spark dep build/sbt dependencyTree | grep avro | grep -v Resolving

[info] +-org.apache.avro:avro-mapred:1.8.2

[info] | +-org.apache.avro:avro-ipc:1.8.2

[info] | | +-org.apache.avro:avro:1.8.2

[info] +-org.apache.avro:avro:1.8.2

[info] | | +-org.apache.avro:avro:1.8.2

[info] org.apache.spark:spark-avro_2.12:3.1.0-SNAPSHOT [S]

[info] | | | +-org.apache.avro:avro-mapred:1.8.2

[info] | | | | +-org.apache.avro:avro-ipc:1.8.2

[info] | | | | | +-org.apache.avro:avro:1.8.2

[info] | | | +-org.apache.avro:avro:1.8.2

[info] | | | | | +-org.apache.avro:avro:1.8.2

```

Closes #28020 from yaooqinn/dep.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR (SPARK-31244) replaces `Ceph` with `Minio` in K8S `DepsTestSuite`.

### Why are the changes needed?

Currently, `DepsTestSuite` is using `ceph` for S3 storage. However, the version in use and all newer releases are broken on new `minikube` releases. We should use a smaller and more robust alternative.

```

$ minikube version

minikube version: v1.8.2

$ minikube -p minikube docker-env | source

$ docker run -it --rm -e NETWORK_AUTO_DETECT=4 -e RGW_FRONTEND_PORT=8000 -e SREE_PORT=5001 -e CEPH_DEMO_UID=nano -e CEPH_DAEMON=demo ceph/daemon:v4.0.3-stable-4.0-nautilus-centos-7-x86_64 /bin/sh

2020-03-25 04:26:21 /opt/ceph-container/bin/entrypoint.sh: ERROR- it looks like we have not been able to discover the network settings

$ docker run -it --rm -e NETWORK_AUTO_DETECT=4 -e RGW_FRONTEND_PORT=8000 -e SREE_PORT=5001 -e CEPH_DEMO_UID=nano -e CEPH_DAEMON=demo ceph/daemon:v4.0.11-stable-4.0-nautilus-centos-7 /bin/sh

2020-03-25 04:20:30 /opt/ceph-container/bin/entrypoint.sh: ERROR- it looks like we have not been able to discover the network settings

```

Also, the `ceph` image is unnecessarily big (almost `1GB`) and growing, while `minio` is `55.8MB` and provides the features the test needs.

```

$ docker images | grep ceph

ceph/daemon v4.0.3-stable-4.0-nautilus-centos-7-x86_64 a6a05ccdf924 6 months ago 852MB

ceph/daemon v4.0.11-stable-4.0-nautilus-centos-7 87f695550d8e 12 hours ago 901MB

$ docker images | grep minio

minio/minio latest 95c226551ea6 5 days ago 55.8MB

```

### Does this PR introduce any user-facing change?

No. (This is a test case change)

### How was this patch tested?

Pass the existing Jenkins K8s integration test job and test with the latest minikube.

```

$ minikube version

minikube version: v1.8.2

$ kubectl version --short

Client Version: v1.17.4

Server Version: v1.17.4

$ NO_MANUAL=1 ./dev/make-distribution.sh --r --pip --tgz -Pkubernetes

$ resource-managers/kubernetes/integration-tests/dev/dev-run-integration-tests.sh --spark-tgz $PWD/spark-*.tgz

...

KubernetesSuite:

- Run SparkPi with no resources

- Run SparkPi with a very long application name.

- Use SparkLauncher.NO_RESOURCE

- Run SparkPi with a master URL without a scheme.

- Run SparkPi with an argument.

- Run SparkPi with custom labels, annotations, and environment variables.

- All pods have the same service account by default

- Run extraJVMOptions check on driver

- Run SparkRemoteFileTest using a remote data file

- Run SparkPi with env and mount secrets.

- Run PySpark on simple pi.py example

- Run PySpark with Python2 to test a pyfiles example

- Run PySpark with Python3 to test a pyfiles example

- Run PySpark with memory customization

- Run in client mode.

- Start pod creation from template

- PVs with local storage *** FAILED *** // This is irrelevant to this PR.

- Launcher client dependencies // This is the fixed test case by this PR.

- Test basic decommissioning

- Run SparkR on simple dataframe.R example

Run completed in 12 minutes, 4 seconds.

...

```

The following is the working snapshot of `DepsTestSuite` test.

```

$ kubectl get all -ncf9438dd8a65436686b1196a6b73000f

NAME READY STATUS RESTARTS AGE

pod/minio-0 1/1 Running 0 70s

pod/spark-test-app-8494bddca3754390b9e59a2ef47584eb 1/1 Running 0 55s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio-s3 NodePort 10.109.54.180 <none> 9000:30678/TCP 70s

service/spark-test-app-fd916b711061c7b8-driver-svc ClusterIP None <none> 7078/TCP,7079/TCP,4040/TCP 55s

NAME READY AGE

statefulset.apps/minio 1/1 70s

```

Closes #28015 from dongjoon-hyun/SPARK-31244.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Spark introduced the CHAR type for Hive compatibility, but it only works for Hive tables. The CHAR type has never been documented and is treated as STRING type for non-Hive tables.

However, this leads to confusing behavior:

**Apache Spark 3.0.0-preview2**

```

spark-sql> CREATE TABLE t(a CHAR(3));

spark-sql> INSERT INTO TABLE t SELECT 'a ';

spark-sql> SELECT a, length(a) FROM t;

a 2

```

**Apache Spark 2.4.5**

```

spark-sql> CREATE TABLE t(a CHAR(3));

spark-sql> INSERT INTO TABLE t SELECT 'a ';

spark-sql> SELECT a, length(a) FROM t;

a 3

```

According to the SQL standard, `CHAR(3)` should guarantee that all values are of length 3. Since `CHAR(3)` is treated as STRING, Spark doesn't guarantee it.

This PR forbids the CHAR type in non-Hive tables as it's not supported correctly.
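The fixed-length guarantee can be illustrated with a tiny sketch of blank-padding. The helper below is hypothetical and only models the length guarantee; it is not how Spark or Hive implements CHAR.

```scala
// Hypothetical helper, for illustration only.
object CharSketch {
  // Pads a value with trailing spaces up to the declared length n,
  // so every stored value has exactly n characters.
  def toFixedChar(value: String, n: Int): String = {
    require(value.length <= n, s"value is longer than CHAR($n)")
    value + " " * (n - value.length)
  }
}
```

For the inserted value `'a '` (two characters), a `CHAR(3)` column that honors the guarantee reports a length of 3, which matches the Spark 2.4.5 output above, while treating the column as STRING keeps the length at 2.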

### Why are the changes needed?

To avoid confusing or wrong behavior.

### Does this PR introduce any user-facing change?

Yes, users can no longer create or alter non-Hive tables with the CHAR type.

### How was this patch tested?

New tests.

Closes #27902 from cloud-fan/char.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR adds unit tests for the other join hints (`MERGEJOIN`, `SHUFFLE_HASH`, and `SHUFFLE_REPLICATE_NL`). This is a followup PR of #27935.

### Why are the changes needed?

For better test coverage.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added unit tests.

Closes #28013 from maropu/SPARK-25121-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to update the doc for `spark.sql.session.timeZone`, and restrict the format of the config's values to 2 forms:

1. Geographical regions, such as `America/Los_Angeles`.

2. Fixed offsets - a fully resolved offset from UTC. For example, `-08:00`.

### Why are the changes needed?

Other formats such as three-letter time zone IDs are ambiguous, and depend on the locale. For example, `CST` could be U.S. `Central Standard Time` or `China Standard Time`. Such formats have already been deprecated in the JDK, see [Three-letter time zone IDs](https://docs.oracle.com/javase/8/docs/api/java/util/TimeZone.html).
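As a rough illustration of the two documented forms, the sketch below accepts only region IDs of the `Area/City` kind and fully resolved `+HH:MM` / `-HH:MM` offsets. The object, method and the exact matching rules are assumptions made for this example; this PR only updates the documentation and does not necessarily add a check like this.

```scala
import java.time.{ZoneId, ZoneOffset}
import scala.util.Try

// Hypothetical validator, for illustration only.
object TimeZoneFormSketch {
  private val offsetPattern = "[+-]\\d{2}:\\d{2}".r

  def isDocumentedForm(id: String): Boolean = id match {
    // Form 2: a fixed offset from UTC, for example -08:00.
    case offsetPattern() => Try(ZoneOffset.of(id)).isSuccess
    // Form 1: a geographical region, for example America/Los_Angeles.
    case _ if id.contains("/") => Try(ZoneId.of(id)).isSuccess
    // Everything else, including three-letter IDs such as CST, is rejected.
    case _ => false
  }
}
```

Under these assumed rules, `America/Los_Angeles` and `-08:00` pass, while `CST` and `PST` do not. The JDK short-ID table itself resolves `CST` to `America/Chicago`, which shows why a user who means China Standard Time would get a surprising result.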

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running `./dev/scalastyle`, and manual testing.

Closes #27999 from MaxGekk/doc-session-time-zone.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>