### What changes were proposed in this pull request?

```ChiSqSelector``` depends on ```mllib.ChiSqSelectorModel``` for its selection logic. This PR removes that dependency.

### Why are the changes needed?

This is an intermediate PR that removes the ```ChiSqSelector``` dependency on ```mllib.ChiSqSelectorModel```. The next subtask will extract the code shared by ```ChiSqSelector``` and ```FValueSelector``` into an abstract ```Selector```.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New and existing tests

Closes #27841 from huaxingao/chisq.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to:

1. Explicitly include xml-apis. xml-apis is already a dependency of xerces 2.12.0 (https://repo1.maven.org/maven2/xerces/xercesImpl/2.12.0/xercesImpl-2.12.0.pom). However, we exclude it by setting its `scope` to `test`. This seems to cause `spark-shell`, when built with Maven, to fail.

It seems that xml-apis was previously not reached for some reason, but after the upgrade it appears to be required. Therefore, this PR proposes to include it.

2. Pin the `xerces` version in SBT as well. This dependency seems to be resolved differently in SBT than in Maven.

Note that Hadoop 3 does not appear to require this, as it replaced xerces as of [HDFS-12221](https://issues.apache.org/jira/browse/HDFS-12221).

### Why are the changes needed?

To make `spark-shell` work when built with Maven, and to use the same xerces version in the Maven and SBT builds.

### Does this PR introduce any user-facing change?

No, it's master only.

### How was this patch tested?

**1.**

```bash
./build/mvn -DskipTests -Psparkr -Phive clean package
./bin/spark-shell
```

Before:

```
Exception in thread "main" java.lang.NoClassDefFoundError: org/w3c/dom/ElementTraversal
    at java.lang.ClassLoader.defineClass1(Native Method)
    at java.lang.ClassLoader.defineClass(ClassLoader.java:763)
    at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
    at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)
    at java.net.URLClassLoader.access$100(URLClassLoader.java:74)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:369)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:363)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.net.URLClassLoader.findClass(URLClassLoader.java:362)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at org.apache.xerces.parsers.AbstractDOMParser.startDocument(Unknown Source)
    at org.apache.xerces.xinclude.XIncludeHandler.startDocument(Unknown Source)
    at org.apache.xerces.impl.dtd.XMLDTDValidator.startDocument(Unknown Source)
    at org.apache.xerces.impl.XMLDocumentScannerImpl.startEntity(Unknown Source)
    at org.apache.xerces.impl.XMLVersionDetector.startDocumentParsing(Unknown Source)
    at org.apache.xerces.parsers.XML11Configuration.parse(Unknown Source)
    at org.apache.xerces.parsers.XML11Configuration.parse(Unknown Source)
    at org.apache.xerces.parsers.XMLParser.parse(Unknown Source)
    at org.apache.xerces.parsers.DOMParser.parse(Unknown Source)
    at org.apache.xerces.jaxp.DocumentBuilderImpl.parse(Unknown Source)
    at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:150)
    at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2482)
    at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2470)
    at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2541)
    at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2494)
    at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2407)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1143)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1115)
    at org.apache.spark.deploy.SparkHadoopUtil$.org$apache$spark$deploy$SparkHadoopUtil$$appendS3AndSparkHadoopHiveConfigurations(SparkHadoopUtil.scala:456)
    at org.apache.spark.deploy.SparkHadoopUtil$.newConfiguration(SparkHadoopUtil.scala:427)
    at org.apache.spark.deploy.SparkSubmit.$anonfun$prepareSubmitEnvironment$2(SparkSubmit.scala:342)
    at scala.Option.getOrElse(Option.scala:189)
    at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:342)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:871)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1007)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1016)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.lang.ClassNotFoundException: org.w3c.dom.ElementTraversal
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    ... 42 more
```

After:

```
...
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.1.0-SNAPSHOT
      /_/

Using Scala version 2.12.10 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_202)
Type in expressions to have them evaluated.
Type :help for more information.

scala>
```

**2.**

```
./build/sbt dependencyTree -Phadoop-2.7 -Phive-2.3 -Phive-thriftserver -Phive
./build/sbt dependencyTree -Phadoop-3.2 -Phive-2.3 -Phive-thriftserver -Phive
```

Closes #27808 from HyukjinKwon/SPARK-30994.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Remove the cases for ```MissingTypesProblem```, ```InheritedNewAbstractMethodProblem```, ```DirectMissingMethodProblem``` and ```ReversedMissingMethodProblem```.

### Why are the changes needed?

After the changes, we don't have ```org.apache.spark.sql.sources.v2``` any more, so the only problem we can get is ```MissingClassProblem```.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested

Closes #27731 from huaxingao/spark-28998-followup.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

I found a few unnecessary MiMa excludes when auditing SQL binary incompatible changes.

### Why are the changes needed?

These MiMa excludes are no longer required, so this PR removes them.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested

Closes #27729 from huaxingao/mima.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

https://issues.apache.org/jira/browse/SPARK-30928

Remove unnecessary MiMa excludes.

### Why are the changes needed?

When auditing binary incompatible changes for 3.0, I found that several MiMa excludes are not necessary, so this PR removes them.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Ran `dev/mima` to check.

Closes #27696 from huaxingao/spark-mima.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

Fix for #27395

### What changes were proposed in this pull request?

The `allGather` method is added to `BarrierTaskContext`. It provides the same functionality as the `BarrierTaskContext.barrier` method: it blocks the task until all tasks make the call, at which time they may continue execution. In addition, the `allGather` method takes an input message. When `allGather` returns, the task receives a list of all the messages sent by all the tasks that made the `allGather` call.

### Why are the changes needed?

There are many situations where having the tasks communicate in a synchronized way is useful. One simple example is when each task needs to start a server to serve requests from the others: first the tasks must each find a free port (which cannot be determined beforehand) and then start making requests, but to do so each must know the ports chosen by the other tasks. An `allGather` method allows them to inform each other of the port they will run on.
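The port-exchange scenario can be sketched outside Spark. The following is a minimal pure-Python simulation of all-gather semantics using threads and `threading.Barrier`; it does not use the Spark API. The function `all_gather_simulation`, the `slots` list, and the port strings are hypothetical names chosen for illustration, and unlike the real method this sketch returns messages ordered by task id.

```python
import threading


def all_gather_simulation(messages):
    """Simulate all-gather: each task contributes one message and
    receives the full list once every task has arrived."""
    num_tasks = len(messages)
    slots = [None] * num_tasks            # one slot per task, indexed by task id
    results = [None] * num_tasks
    barrier = threading.Barrier(num_tasks)

    def task(task_id):
        slots[task_id] = messages[task_id]    # publish this task's message
        barrier.wait()                        # block until all tasks have published
        results[task_id] = list(slots)        # every task now sees all messages

    threads = [threading.Thread(target=task, args=(i,)) for i in range(num_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


# Each simulated task "chose" a port; after the gather, all tasks know all ports.
gathered = all_gather_simulation(["50001", "50002", "50003", "50004"])
print(gathered[0])
```

The barrier guarantees that no task reads `slots` before every task has written its own entry, which is the synchronization property the paragraph above relies on.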

### Does this PR introduce any user-facing change?

Yes, a `BarrierTaskContext.allGather` method will be available through the Scala, Java, and Python APIs.

### How was this patch tested?

Most of the code path is already covered by tests for the `barrier` method, since this PR includes a refactor so that much code is shared by the `barrier` and `allGather` methods. However, a test is added to assert that an all-gather on each task's partition ID returns a list of every partition ID.

An example through the Python API:

```python
>>> from pyspark import BarrierTaskContext
>>>
>>> def f(iterator):
...     context = BarrierTaskContext.get()
...     return [context.allGather('{}'.format(context.partitionId()))]
...
>>> sc.parallelize(range(4), 4).barrier().mapPartitions(f).collect()[0]
[u'3', u'1', u'0', u'2']
```

Closes #27640 from sarthfrey/master.

Lead-authored-by: sarthfrey-db <sarth.frey@databricks.com>

Co-authored-by: sarthfrey <sarth.frey@gmail.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

- Add missing `since` annotation.

- Don't show classes under `org.apache.spark.sql.dynamicpruning` package in API docs.

- Fix the scope of `xxxExactNumeric` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes #27560 from xuanyuanking/SPARK-30809.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to throw an exception by default when a user uses an untyped UDF (a.k.a. `org.apache.spark.sql.functions.udf(AnyRef, DataType)`).

Users can still use it by setting `spark.sql.legacy.useUnTypedUdf.enabled` to `true`.

### Why are the changes needed?

According to #23498, since Spark 3.0, an untyped UDF returns the default value of the Java type if the input value is null. For example, with `val f = udf((x: Int) => x, IntegerType)`, `f($"x")` returns 0 in Spark 3.0 but null in Spark 2.4 when column `x` is null. This behavior change is introduced because Spark 3.0 is built with Scala 2.12 by default.

As a result, this might change data silently and may cause correctness issues if users still expect `null` in some cases. Thus, we should encourage users to use typed UDFs to avoid this problem.

### Does this PR introduce any user-facing change?

Yes. Users will now hit an exception when using an untyped UDF.

### How was this patch tested?

Added test and updated some tests.

Closes #27488 from Ngone51/spark_26580_followup.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: wuyi <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR tries the approach suggested in a comment on #26710 to fix the test.

### Why are the changes needed?

To make the tests pass.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Jenkins will test it first, and then it will be tested on `spark-branch-3.0-test-sbt-hadoop-2.7-hive-2.3`.

Closes #27513 from HyukjinKwon/test-SPARK-30756.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

(cherry picked from commit 8efe367a4e)

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Fix the scope of `Logging.initializeForcefully` so that it doesn't appear in subclasses' public methods. Right now, `sc.initializeForcefully(false, false)` can be called.

- Don't show classes under `org.apache.spark.internal` package in API docs.

- Add missing `since` annotation.

- Fix the scope of `ArrowUtils` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes #27528 from zsxwing/audit-ss-apis.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

We should also expose it in the documentation, as we marked it as an unstable API as of SPARK-30547.

Note that Javadoc -> Scaladoc does not seem to work, but this PR does not aim to fix that.

### Why are the changes needed?

To show the documentation of API.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually built the docs via `jekyll serve` under the `docs` directory.

Closes #27412 from HyukjinKwon/SPARK-30547.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

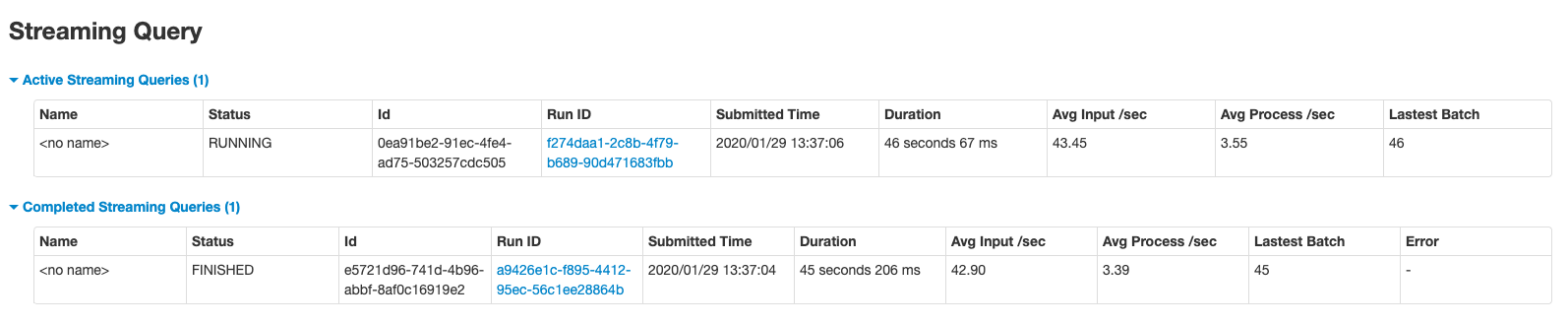

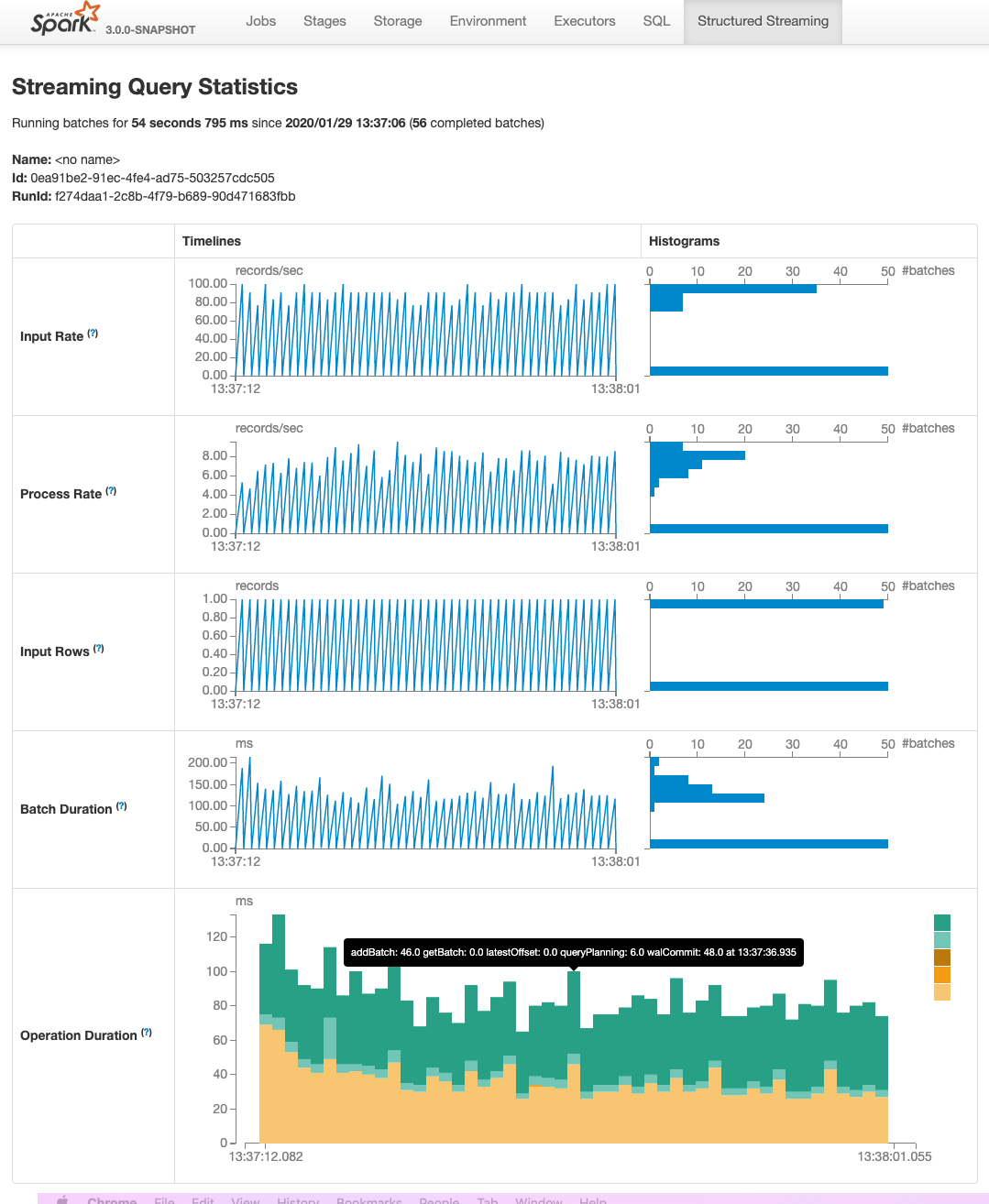

This PR adds two pages to Web UI for Structured Streaming:

- "/streamingquery": Streaming Query Page, providing some aggregate information for running/completed streaming queries.

- "/streamingquery/statistics": Streaming Query Statistics Page, providing detailed information for a streaming query, including `Input Rate`, `Process Rate`, `Input Rows`, `Batch Duration` and `Operation Duration`.

### Why are the changes needed?

It helps users better monitor Structured Streaming queries.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- newly added and existing UTs

- manual test

Closes #26201 from uncleGen/SPARK-29543.

Lead-authored-by: uncleGen <hustyugm@gmail.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Genmao Yu <hustyugm@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

Remove ```numTrees``` in GBT in 3.0.0.

### Why are the changes needed?

Currently, GBT has

```
/**
 * Number of trees in ensemble
 */
@Since("2.0.0")
val getNumTrees: Int = trees.length
```

and

```
/** Number of trees in ensemble */
val numTrees: Int = trees.length
```

I think we should remove one of them. We deprecated it in 2.4.5 via https://github.com/apache/spark/pull/27352.

### Does this PR introduce any user-facing change?

Yes, remove ```numTrees``` in GBT in 3.0.0

### How was this patch tested?

existing tests

Closes #27330 from huaxingao/spark-numTrees.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Upgrade the version of Genjavadoc from 0.14 to 0.15.

### Why are the changes needed?

To enable building with Scala 2.13.1.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

I confirmed there is no dependency error related to genjavadoc by manual build.

Also, I generated the javadoc with `LANG=C build/sbt -Pkinesis-asl -Pyarn -Pkubernetes -Phive-thriftserver unidoc` both with and without this change and ran `diff -r` on `target/javadoc`.

Closes #27255 from sarutak/upgrade-genjavadoc.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is the second PR for Stage Level Scheduling. It adds the necessary executor-side changes:

1) executors know which ResourceProfile they should be using

2) executors parse the resource profile settings, which are not in the global configs

3) executors report back to the driver which resource profile they were started with.

This PR adds all the piping for YARN to pass the information all the way to executors, but it just uses the default ResourceProfile (which is the global application level configs).

At a high level these changes include:

1) adding a new --resourceProfileId option to the CoarseGrainedExecutorBackend

2) Add the ResourceProfile settings to new internal confs that get passed into the Executor

3) Executor changes that use the resource profile id passed in to read the corresponding ResourceProfile confs and then parse those requests and discover resources as necessary

4) Executor registers to Driver with the Resource profile id so that the ExecutorMonitor can track how many executors with each profile are running

5) YARN side changes to show that passing the resource profile id and confs actually works. Just uses the DefaultResourceProfile for now.

I also removed a check from the CoarseGrainedExecutorBackend that used to check to make sure there were task requirements before parsing any custom resource executor requests. With the resource profiles this becomes much more expensive because we would then have to pass the task requests to each executor and the check was just a short cut and not really needed. It was much cleaner just to remove it.

Note there were some changes to the ResourceProfile, ExecutorResourceRequests, and TaskResourceRequests in this PR as well because I discovered some issues with things not being immutable. The API now looks like:

val rpBuilder = new ResourceProfileBuilder()

val ereq = new ExecutorResourceRequests()

val treq = new TaskResourceRequests()

ereq.cores(2).memory("6g").memoryOverhead("2g").pysparkMemory("2g").resource("gpu", 2, "/home/tgraves/getGpus")

treq.cpus(2).resource("gpu", 2)

val resourceProfile = rpBuilder.require(ereq).require(treq).build

This makes it so that ResourceProfile is immutable and Spark can use it directly without worrying about the user changing it.
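The builder-then-immutable-result idea can be illustrated with a small sketch. This is a minimal Python analogy, not the Spark API: `ProfileSketch`, `ProfileBuilderSketch`, `require_executor`, and `require_task` are hypothetical names, and the assumption is simply that `build` takes a read-only snapshot of what the builder has collected.

```python
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class ProfileSketch:
    """Read-only result: fields cannot be reassigned and the maps reject writes."""
    executor_resources: MappingProxyType
    task_resources: MappingProxyType


class ProfileBuilderSketch:
    """Mutable builder that collects requests before producing a profile."""

    def __init__(self):
        self._executor = {}
        self._task = {}

    def require_executor(self, name, amount):
        self._executor[name] = amount
        return self

    def require_task(self, name, amount):
        self._task[name] = amount
        return self

    def build(self):
        # Copy the dicts so later builder changes cannot leak into the profile.
        return ProfileSketch(MappingProxyType(dict(self._executor)),
                             MappingProxyType(dict(self._task)))


builder = ProfileBuilderSketch().require_executor("cores", 2).require_executor("gpu", 2)
profile = builder.require_task("cpus", 2).require_task("gpu", 2).build()

builder.require_executor("cores", 8)          # mutating the builder afterwards...
print(profile.executor_resources["cores"])    # ...does not change the built profile

try:
    profile.executor_resources["cores"] = 8   # direct writes are rejected
except TypeError:
    print("profile is read-only")
```

Because the built object is a snapshot, code that receives it can cache or share it without defensive copies, which is the property described above.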

### Why are the changes needed?

These changes are needed for the executors to report which ResourceProfile they are using so that ultimately the dynamic allocation manager can use that information to know how many executors with a profile are running and how many more it needs to request. It's also needed to get the resource profile confs to the executor so that it can run the appropriate discovery script if needed.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit tests and manually on YARN.

Closes #26682 from tgravescs/SPARK-29306.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Make Regressors extend abstract class Regressor:

```AFTSurvivalRegression extends Estimator => extends Regressor```

```DecisionTreeRegressor extends Predictor => extends Regressor```

```FMRegressor extends Predictor => extends Regressor```

```GBTRegressor extends Predictor => extends Regressor```

```RandomForestRegressor extends Predictor => extends Regressor```

We will not make ```IsotonicRegression``` extend ```Regressor``` because it is tricky to handle both DoubleType and VectorType.

### Why are the changes needed?

Make class hierarchy consistent for all Regressors

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing tests

Closes #27168 from huaxingao/spark-30377.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Make ```MultilayerPerceptronClassificationModel``` extend ```MultilayerPerceptronParams```

### Why are the changes needed?

Make ```MultilayerPerceptronClassificationModel``` extend ```MultilayerPerceptronParams``` to expose the training params, so users can see these params when calling ```extractParamMap```.

### Does this PR introduce any user-facing change?

Yes. The ```MultilayerPerceptronParams``` such as ```seed```, ```maxIter``` ... are available in ```MultilayerPerceptronClassificationModel``` now

### How was this patch tested?

Manually tested ```MultilayerPerceptronClassificationModel.extractParamMap()``` to verify all the new params are there.

Closes #26838 from huaxingao/spark-30144.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

1. Add new foreach-like methods: foreach/foreachNonZero

2. Add iterators: iterator/activeIterator/nonZeroIterator

### Why are the changes needed?

See the [ticket](https://issues.apache.org/jira/browse/SPARK-30329) for details.

foreach/foreachNonZero: for both convenience and performance (SparseVector.foreach should be faster than the current traversal method).

iterator/activeIterator/nonZeroIterator: add the three iterators so that, in the future, we can add or change some implementations based on them on both the ml and mllib sides, avoiding vector conversions.
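To make the distinction between the three iterators concrete, here is a minimal Python sketch of a sparse vector. It is not the Spark implementation: `SparseVectorSketch` and its method names are hypothetical, and it assumes the iterators differ as their names suggest (all positions, explicitly stored entries, and stored entries with non-zero values).

```python
class SparseVectorSketch:
    """Toy sparse vector: only the listed indices carry stored values."""

    def __init__(self, size, indices, values):
        self.size = size
        self.indices = list(indices)   # sorted positions of stored entries
        self.values = list(values)     # stored values (may include explicit zeros)

    def active_iterator(self):
        """Yield (index, value) for explicitly stored entries only."""
        return zip(self.indices, self.values)

    def non_zero_iterator(self):
        """Yield (index, value) for stored entries whose value is non-zero."""
        return ((i, v) for i, v in self.active_iterator() if v != 0.0)

    def iterator(self):
        """Yield (index, value) for every position, filling gaps with 0.0."""
        stored = dict(self.active_iterator())
        return ((i, stored.get(i, 0.0)) for i in range(self.size))

    def foreach_non_zero(self, f):
        """Apply f(index, value) to each non-zero entry without densifying."""
        for i, v in self.non_zero_iterator():
            f(i, v)


vec = SparseVectorSketch(5, [1, 3, 4], [2.0, 0.0, 7.0])
print(list(vec.iterator()))
print(list(vec.active_iterator()))
print(list(vec.non_zero_iterator()))
```

Traversing only stored entries touches three elements here instead of five, which is the kind of saving the performance note above refers to.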

### Does this PR introduce any user-facing change?

Yes, new methods are added

### How was this patch tested?

added testsuites

Closes #26982 from zhengruifeng/vector_iter.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

This reverts commit 709387d660.

See https://issues.apache.org/jira/browse/SPARK-27300?focusedCommentId=16990048&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16990048 and previous mailing list discussions.

### What changes were proposed in this pull request?

Revert the addition of skeleton graph API modules for Spark 3.0.

### Why are the changes needed?

It does not appear that content will be added to the module for Spark 3, so I propose avoiding committing to the modules, which are no-ops now, in the upcoming major 3.0 release.

### Does this PR introduce any user-facing change?

No, the modules were not released.

### How was this patch tested?

Existing tests, but mostly N/A.

Closes #26928 from srowen/Revert27300.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR proposes to exclude the Hive domain from Unidoc checking. We don't publish it as part of the Spark documentation (see also https://github.com/apache/spark/blob/master/docs/_plugins/copy_api_dirs.rb#L30), and most of it is a copy of the Hive Thrift server so that we can officially use the Hive 2.3 release.

It doesn't make much sense to check documentation generation against another domain that we don't use when publishing documentation.

### Why are the changes needed?

To avoid unnecessary computation.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

By Jenkins:

```
========================================================================
Building Spark
========================================================================
[info] Building Spark using SBT with these arguments: -Phadoop-2.7 -Phive-2.3 -Phive -Pmesos -Pkubernetes -Phive-thriftserver -Phadoop-cloud -Pkinesis-asl -Pspark-ganglia-lgpl -Pyarn test:package streaming-kinesis-asl-assembly/assembly
...
========================================================================
Building Unidoc API Documentation
========================================================================
[info] Building Spark unidoc using SBT with these arguments: -Phadoop-2.7 -Phive-2.3 -Phive -Pmesos -Pkubernetes -Phive-thriftserver -Phadoop-cloud -Pkinesis-asl -Pspark-ganglia-lgpl -Pyarn unidoc
...
[info] Main Java API documentation successful.
...
[info] Main Scala API documentation successful.
```

Closes #26800 from HyukjinKwon/do-not-merge.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Observable metrics are named arbitrary aggregate functions that can be defined on a query (DataFrame). As soon as the execution of a DataFrame reaches a completion point (e.g. finishes a batch query or reaches a streaming epoch), a named event is emitted that contains the metrics for the data processed since the last completion point.

A user can observe these metrics by attaching a listener to the Spark session. Which listener to attach depends on the execution mode:

- Batch: `QueryExecutionListener`. This will be called when the query completes. A user can access the metrics by using the `QueryExecution.observedMetrics` map.

- (Micro-batch) Streaming: `StreamingQueryListener`. This will be called when the streaming query completes an epoch. A user can access the metrics by using the `StreamingQueryProgress.observedMetrics` map. Please note that we currently do not support continuous execution streaming.
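The flow described above (named aggregates defined on a query, then delivered to listeners at a completion point) can be sketched in plain Python. This is a conceptual simulation only and not the Spark API: `ObservedQuerySketch`, `add_listener`, `run`, and the metric names are hypothetical, and a "completion point" is modeled as the end of `run`.

```python
class ObservedQuerySketch:
    """Toy query that computes named aggregates and notifies listeners on completion."""

    def __init__(self, rows):
        self.rows = rows
        self.metrics = {}      # observation name -> {metric name: aggregate function}
        self.listeners = []

    def observe(self, name, **aggregates):
        """Register named aggregate functions under an observation name."""
        self.metrics[name] = aggregates
        return self

    def add_listener(self, listener):
        self.listeners.append(listener)

    def run(self):
        data = list(self.rows)   # "execute" the query
        # At the completion point, evaluate every registered aggregate.
        observed = {name: {metric: fn(data) for metric, fn in aggs.items()}
                    for name, aggs in self.metrics.items()}
        for listener in self.listeners:
            listener(observed)   # emit the named event to each listener
        return data


events = []
query = ObservedQuerySketch([3, 1, 4, 1, 5]).observe(
    "stats", row_count=len, max_value=max)
query.add_listener(events.append)
query.run()
print(events[0])
```

In this sketch the listener receives a map keyed by observation name, mirroring how the text describes reading metrics from an `observedMetrics` map.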

### Why are the changes needed?

This enables observable metrics.

### Does this PR introduce any user-facing change?

Yes. It adds the `observe` method to `Dataset`.

### How was this patch tested?

- Added unit tests for the `CollectMetrics` logical node to the `AnalysisSuite`.

- Added unit tests for `StreamingProgress` JSON serialization to the `StreamingQueryStatusAndProgressSuite`.

- Added integration tests for streaming to the `StreamingQueryListenerSuite`.

- Added integration tests for batch to the `DataFrameCallbackSuite`.

Closes #26127 from hvanhovell/SPARK-29348.

Authored-by: herman <herman@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR tries to fix flakiness in `HiveThriftServer2ListenerSuite` by using a dedicated JVM (after we switched to Hive 2.3 by default in PR builders). As with 4a73bed318, there is no explicit evidence for this fix.

See https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/114653/testReport/org.apache.spark.sql.hive.thriftserver.ui/HiveThriftServer2ListenerSuite/_It_is_not_a_test_it_is_a_sbt_testing_SuiteSelector_/

```
sbt.ForkMain$ForkError: sbt.ForkMain$ForkError: java.lang.LinkageError: loader constraint violation: loader (instance of net/bytebuddy/dynamic/loading/MultipleParentClassLoader) previously initiated loading for a different type with name "org/apache/hive/service/ServiceStateChangeListener"
    at org.mockito.codegen.HiveThriftServer2$MockitoMock$1974707245.<clinit>(Unknown Source)
    at sun.reflect.GeneratedSerializationConstructorAccessor164.newInstance(Unknown Source)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.objenesis.instantiator.sun.SunReflectionFactoryInstantiator.newInstance(SunReflectionFactoryInstantiator.java:48)
    at org.objenesis.ObjenesisBase.newInstance(ObjenesisBase.java:73)
    at org.mockito.internal.creation.instance.ObjenesisInstantiator.newInstance(ObjenesisInstantiator.java:19)
    at org.mockito.internal.creation.bytebuddy.SubclassByteBuddyMockMaker.createMock(SubclassByteBuddyMockMaker.java:47)
    at org.mockito.internal.creation.bytebuddy.ByteBuddyMockMaker.createMock(ByteBuddyMockMaker.java:25)
    at org.mockito.internal.util.MockUtil.createMock(MockUtil.java:35)
    at org.mockito.internal.MockitoCore.mock(MockitoCore.java:62)
    at org.mockito.Mockito.mock(Mockito.java:1908)
    at org.mockito.Mockito.mock(Mockito.java:1880)
    at org.apache.spark.sql.hive.thriftserver.ui.HiveThriftServer2ListenerSuite.createAppStatusStore(HiveThriftServer2ListenerSuite.scala:156)
    at org.apache.spark.sql.hive.thriftserver.ui.HiveThriftServer2ListenerSuite.$anonfun$new$3(HiveThriftServer2ListenerSuite.scala:47)
    at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85)
    at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83)
    at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104)
    at org.scalatest.Transformer.apply(Transformer.scala:22)
    at org.scalatest.Transformer.apply(Transformer.scala:20)
```

### Why are the changes needed?

To make test cases more robust.

### Does this PR introduce any user-facing change?

No (dev only).

### How was this patch tested?

Jenkins build.

Closes #26720 from shahidki31/mock.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Currently, the Apache Spark PR Builder uses `hive-1.2` for `hadoop-2.7` and `hive-2.3` for `hadoop-3.2`. This PR aims to support

- `[test-hive1.2]` in PR builder

- `[test-hive2.3]` in PR builder to be consistent and independent of the default profile

- After this PR, all PR builders will use Hive 2.3 by default (because Spark uses Hive 2.3 by default as of c98e5eb339)

- Use default profile in AppVeyor build.

Note that this was reverted due to an unexpected test failure in `ThriftServerPageSuite`, which was investigated in https://github.com/apache/spark/pull/26706 . This PR fixes it by letting the suite use its own forked JVM. There is no explicit evidence for this fix; it was just my speculation, but thankfully it fixed the failure.

### Why are the changes needed?

This new tag allows us more flexibility.

### Does this PR introduce any user-facing change?

No. (This is a dev-only change.)

### How was this patch tested?

Check the Jenkins triggers in this PR.

Default:

```
========================================================================
Building Spark
========================================================================
[info] Building Spark using SBT with these arguments: -Phadoop-2.7 -Phive-2.3 -Phive-thriftserver -Pmesos -Pspark-ganglia-lgpl -Phadoop-cloud -Phive -Pkubernetes -Pkinesis-asl -Pyarn test:package streaming-kinesis-asl-assembly/assembly
```

`[test-hive1.2][test-hadoop3.2]`:

```
========================================================================
Building Spark
========================================================================
[info] Building Spark using SBT with these arguments: -Phadoop-3.2 -Phive-1.2 -Phadoop-cloud -Pyarn -Pspark-ganglia-lgpl -Phive -Phive-thriftserver -Pmesos -Pkubernetes -Pkinesis-asl test:package streaming-kinesis-asl-assembly/assembly
```

`[test-maven][test-hive-2.3]`:

```
========================================================================
Building Spark
========================================================================
[info] Building Spark using Maven with these arguments: -Phadoop-2.7 -Phive-2.3 -Pspark-ganglia-lgpl -Pyarn -Phive -Phadoop-cloud -Pkinesis-asl -Pmesos -Pkubernetes -Phive-thriftserver clean package -DskipTests
```

Closes #26710 from HyukjinKwon/SPARK-29991.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Support the `gaussian` `modelType`.

### Why are the changes needed?

The current modelTypes do not support continuous data.
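As an illustration of why a Gaussian model type handles continuous features, here is a minimal pure-Python sketch of Gaussian naive Bayes. It is not the Spark implementation: `fit_gaussian_nb`, `predict_gaussian_nb`, the toy data, and the small variance floor `1e-9` are all assumptions made for this example.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(features, labels):
    """Estimate a log prior plus per-feature mean and variance for each class."""
    by_label = defaultdict(list)
    for x, y in zip(features, labels):
        by_label[y].append(x)
    total = len(labels)
    model = {}
    for y, rows in by_label.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # The small constant keeps each variance strictly positive.
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(columns, means)]
        model[y] = (math.log(n / total), means, variances)
    return model


def predict_gaussian_nb(model, x):
    """Return the class with the highest log prior plus Gaussian log-likelihood."""
    def log_posterior(params):
        log_prior, means, variances = params
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances))
    return max(model, key=lambda y: log_posterior(model[y]))


features = [[1.0, 2.1], [1.2, 1.9], [0.9, 2.0], [5.0, 6.2], [5.1, 5.8], [4.9, 6.0]]
labels = [0, 0, 0, 1, 1, 1]
model = fit_gaussian_nb(features, labels)
print(predict_gaussian_nb(model, [1.1, 2.0]), predict_gaussian_nb(model, [5.0, 6.0]))
```

Each feature is scored with a normal density rather than a count-based probability, so real-valued inputs need no binning.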

### Does this PR introduce any user-facing change?

yes, add a `modelType` option

### How was this patch tested?

existing testsuites and added ones

Closes #26413 from zhengruifeng/gnb.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

This PR aims to add `io.netty.tryReflectionSetAccessible=true` to the testing configuration for JDK11 because this is an officially documented requirement of Apache Arrow.

Apache Arrow community documented this requirement at `0.15.0` ([ARROW-6206](https://github.com/apache/arrow/pull/5078)).

> #### For java 9 or later, should set "-Dio.netty.tryReflectionSetAccessible=true".

> This fixes `java.lang.UnsupportedOperationException: sun.misc.Unsafe or java.nio.DirectByteBuffer.<init>(long, int) not available` thrown by netty.

### Why are the changes needed?

After ARROW-3191, the Arrow Java library requires the property `io.netty.tryReflectionSetAccessible` to be set to true for JDK >= 9. After https://github.com/apache/spark/pull/26133, the JDK11 Jenkins jobs seem to fail.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-3.2-jdk-11/676/

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-3.2-jdk-11/677/

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-3.2-jdk-11/678/

```scala

Previous exception in task:

sun.misc.Unsafe or java.nio.DirectByteBuffer.<init>(long, int) not available

io.netty.util.internal.PlatformDependent.directBuffer(PlatformDependent.java:473)

io.netty.buffer.NettyArrowBuf.getDirectBuffer(NettyArrowBuf.java:243)

io.netty.buffer.NettyArrowBuf.nioBuffer(NettyArrowBuf.java:233)

io.netty.buffer.ArrowBuf.nioBuffer(ArrowBuf.java:245)

org.apache.arrow.vector.ipc.message.ArrowRecordBatch.computeBodyLength(ArrowRecordBatch.java:222)

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with JDK11.

Closes #26552 from dongjoon-hyun/SPARK-ARROW-JDK11.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

SPARK-29397 added new interfaces for creating driver and executor plugins. These were added in a new, more isolated package that does not pollute the main o.a.s package.

The old interface is now redundant. Since it's a DeveloperApi and we're about to have a new major release, let's remove it instead of carrying more baggage forward.

Closes #26390 from vanzin/SPARK-29399.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade ASM to 7.2.

- https://issues.apache.org/jira/browse/XBEAN-322 (Upgrade to ASM 7.2)

- https://asm.ow2.io/versions.html

### Why are the changes needed?

This will bring the following patches.

- 317875: Infinite loop when parsing invalid method descriptor

- 317873: Add support for RET instruction in AdviceAdapter

- 317872: Throw an exception if visitFrame used incorrectly

- add support for Java 14

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the existing UTs.

Closes #26373 from dongjoon-hyun/SPARK-29729.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch addresses a CI build issue on the sbt Hadoop-3.2 Jenkins job: SparkSQLEnvSuite is failing. The likely cause is that the test checks the listeners registered on the active SparkSession, which other test suites running concurrently can interfere with. If we isolate the test suite, the problem should go away.

### Why are the changes needed?

CI builds for "spark-master-test-sbt-hadoop-3.2" are failing.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

I ran the single test suite with the command below, and it passed three times in a row:

```

build/sbt "hive-thriftserver/testOnly *.SparkSQLEnvSuite" -Phadoop-3.2 -Phive-thriftserver

```

so we expect the test suite will pass if we isolate the test suite.

Closes #26342 from HeartSaVioR/SPARK-29604-FOLLOWUP.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1. Add shared param `relativeError`.

2. `Imputer`/`RobustScaler`/`QuantileDiscretizer` extend `HasRelativeError`.

### Why are the changes needed?

It makes sense to expose `relativeError` to end users, since it controls both the precision and the memory overhead.

`QuantileDiscretizer` already had this param, while the other algorithms did not.

### Does this PR introduce any user-facing change?

Yes, a new param is added in `Imputer`/`RobustScaler`.

### How was this patch tested?

Existing test suites.

Closes #26305 from zhengruifeng/add_relative_err.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

This adds the `typesafe` bintray repo for `sbt-mima-plugin`.

### Why are the changes needed?

Since Oct 21, the following plugin causes [Jenkins failures](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-branch-2.4-test-sbt-hadoop-2.6/611/console) due to the missing jar.

- `branch-2.4`: `sbt-mima-plugin:0.1.17` is missing.

- `master`: `sbt-mima-plugin:0.3.0` is missing.

These versions of `sbt-mima-plugin` seem to have been removed from the old repo.

```

$ rm -rf ~/.ivy2/

$ build/sbt scalastyle test:scalastyle

...

[warn] ::::::::::::::::::::::::::::::::::::::::::::::

[warn] :: UNRESOLVED DEPENDENCIES ::

[warn] ::::::::::::::::::::::::::::::::::::::::::::::

[warn] :: com.typesafe#sbt-mima-plugin;0.1.17: not found

[warn] ::::::::::::::::::::::::::::::::::::::::::::::

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Check `GitHub Action` linter result. This PR should pass. Or, manual check.

(Note that Jenkins PR builder didn't fail until now due to the local cache.)

Closes #26217 from dongjoon-hyun/SPARK-29560.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

We added a `TaskContext.resources()` API, but it returns a Scala `Map`, which is not ideal for access from Java. This PR adds a `resourcesJMap` function that returns a `java.util.Map` to make it easily accessible from Java.
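The idea can be sketched without Spark on the classpath. In the snippet below, `ResourceInfo` and `ResourcesJMapSketch` are hypothetical stand-ins invented for illustration (they are not Spark's `ResourceInformation` or `TaskContext`); the only point is that the Java-facing accessor wraps the same data as a `java.util.Map`.

```scala
import scala.collection.JavaConverters._

// Hypothetical stand-in for a resource description; not Spark's real class.
final case class ResourceInfo(name: String, addresses: Seq[String])

object ResourcesJMapSketch {
  // Scala-facing accessor, analogous in spirit to TaskContext.resources().
  def resources(): Map[String, ResourceInfo] =
    Map("gpu" -> ResourceInfo("gpu", Seq("0", "1")))

  // Java-facing accessor: exposes the same entries as a java.util.Map,
  // so Java callers do not need to deal with Scala collection types.
  def resourcesJMap(): java.util.Map[String, ResourceInfo] =
    resources().asJava
}
```

A Java caller could then use plain `Map.get("gpu")` on the result instead of Scala's `Map` API.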

### Why are the changes needed?

Java API access

### Does this PR introduce any user-facing change?

Yes, new TaskContext function to access from Java

### How was this patch tested?

new unit test

Closes #26083 from tgravescs/SPARK-29417.

Lead-authored-by: Thomas Graves <tgraves@ngvpn01-168-221.dyn.scz.us.nvidia.com>

Co-authored-by: Thomas Graves <tgraves@TGRAVES-MLT.local>

Co-authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

### What changes were proposed in this pull request?

This PR aims to specify the JDK8 default configurations `-XX:+UseParallelGC -XX:-UseDynamicNumberOfGCThreads` explicitly. As we see in this PR [here](https://github.com/apache/spark/pull/25966/files#diff-12b89b7ee67c63c2254b749c8f8d0694R10), this will make the comparison between JDK8 and JDK11 easier by removing a misleading regression.

**NOTE THAT THESE JVM CONFS ARE ONLY FOR BENCHMARK COMPARISON, NOT FOR PRODUCTION**

### Why are the changes needed?

There are many JVM-level changes between JDK8 and JDK11. The following are notable, and (1) and (2) in particular show a misleading regression in our micro-benchmark environment because our micro-benchmarks use a small VM memory.

1. [JEP 248: Make G1 the Default Garbage Collector](https://bugs.openjdk.java.net/browse/JDK-8073273) **JDK9+**

2. [Enable UseDynamicNumberOfGCThreads by default](https://bugs.openjdk.java.net/browse/JDK-8198547) **JDK11+**

3. [Change default value of HeapSizePerGCThread](https://bugs.openjdk.java.net/browse/JDK-8200417) **JDK11+**

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

This is a test-only JVM configuration change. The benchmark was run manually.

Closes #25966 from dongjoon-hyun/SPARK-29282.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Copy any "spark.hive.foo=bar" spark properties into hadoop conf as "hive.foo=bar"
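As a rough illustration of this mapping (a sketch only, operating on a plain `Map` rather than on `SparkConf` and a Hadoop `Configuration`; `HiveConfCopySketch` and `toHadoopEntries` are names made up here):

```scala
object HiveConfCopySketch {
  private val Prefix = "spark.hive."

  // Keep only "spark.hive.*" entries and drop the leading "spark."
  // so that "spark.hive.foo" becomes "hive.foo".
  def toHadoopEntries(sparkProps: Map[String, String]): Map[String, String] =
    sparkProps.collect {
      case (key, value) if key.startsWith(Prefix) =>
        key.stripPrefix("spark.") -> value
    }
}
```

Properties that do not start with `spark.hive.` are left out of the result in this sketch.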

### Why are the changes needed?

This provides a Spark-side config entry for Hive configurations.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT.

Closes #25661 from WeichenXu123/add_hive_conf.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

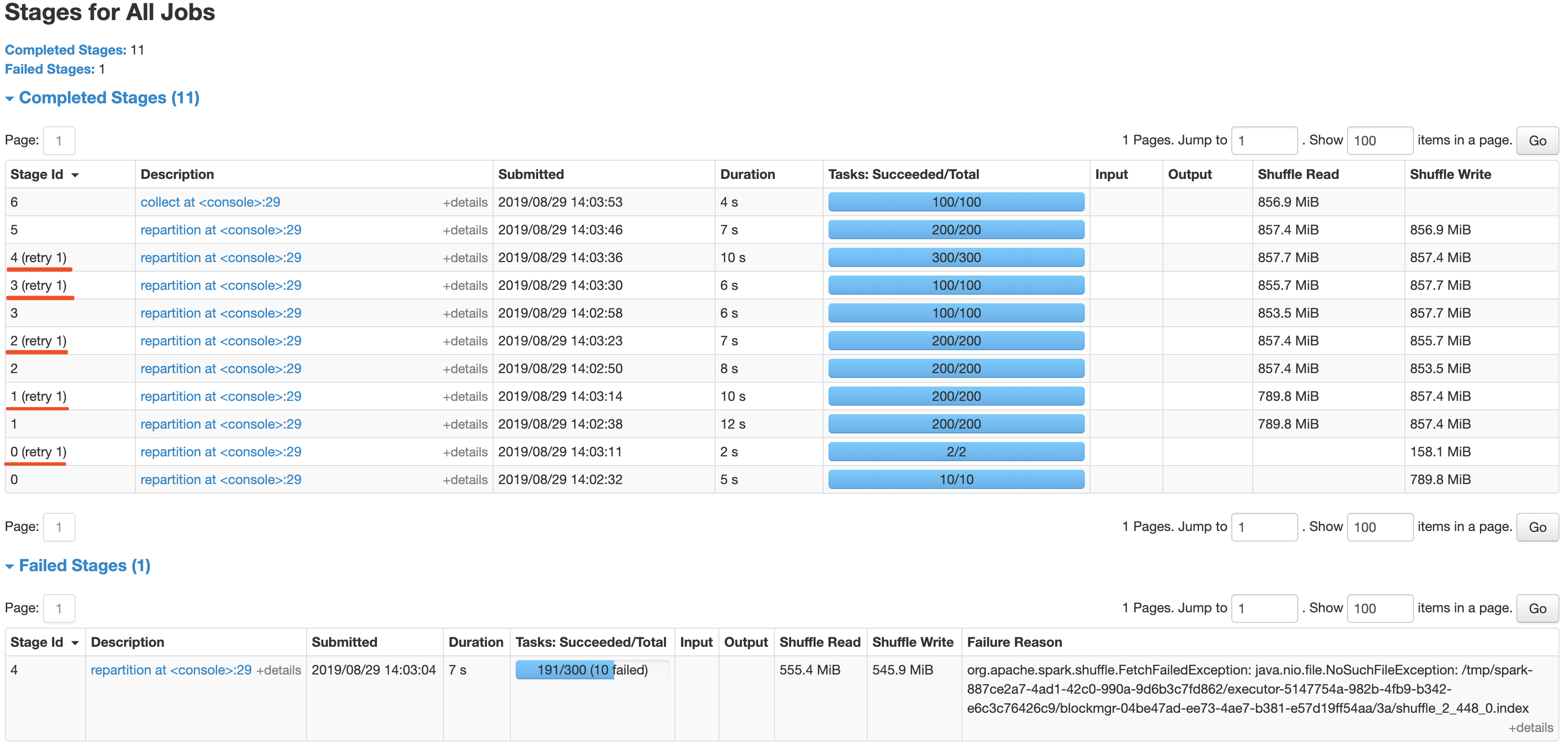

After the newly added shuffle block fetching protocol in #24565, we can keep this work by extending the FetchShuffleBlocks message.

### What changes were proposed in this pull request?

In this patch, we achieve the indeterminate shuffle rerun by reusing the task attempt id (a unique id within an application) in the shuffle id, so that each shuffle write attempt has a different file name. For an indeterminate stage, when the stage is resubmitted, we clear all existing map statuses and rerun all partitions.

All changes are summarized as follows:

- Change the mapId to mapTaskAttemptId in shuffle related id.

- Record the mapTaskAttemptId in MapStatus.

- Still keep mapId in ShuffleFetcherIterator for fetch failed scenario.

- Add the determinate flag in Stage and use it in DAGScheduler and the cleaning work for the intermediate stage.
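To make the effect of the first change concrete, here is a minimal sketch. The helper `dataFileName` and its exact name format are assumptions for illustration and may not match the real block id format; the point is only that keying the file name by a per-attempt id gives each rerun its own file.

```scala
object ShuffleNameSketch {
  // Hypothetical naming helper: the map component is the task attempt id,
  // which is unique within an application, rather than the partition index.
  def dataFileName(shuffleId: Int, mapTaskAttemptId: Long, reduceId: Int): String =
    s"shuffle_${shuffleId}_${mapTaskAttemptId}_${reduceId}.data"
}
```

Two attempts that write the same map partition (say attempt ids 7 and 42) therefore never overwrite each other's output in this sketch.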

### Why are the changes needed?

This is follow-up work for a future improvement noted in #22112 [1]: `Currently we can't rollback and rerun a shuffle map stage, and just fail.`

Spark reruns a finished shuffle write stage when it meets fetch failures. Currently, the rerun of a shuffle map stage only resubmits the tasks for the missing partitions and reuses the output of the other partitions. This logic is fine in most scenarios, but for indeterminate operations (like repartition), multiple shuffle write attempts may write different data, so rerunning only the missing partitions leads to a correctness bug. For the shuffle map stage of indeterminate operations, we therefore need to support rolling back the shuffle map stage and regenerating the shuffle files.

### Does this PR introduce any user-facing change?

Yes. After this PR, an indeterminate stage rerun is handled by rerunning the whole stage. The original behavior was to abort the stage and fail the job.

### How was this patch tested?

- UT: Add UT for all changing code and newly added function.

- Manual Test: Also providing a manual test to verify the effect.

```
import scala.sys.process._
import org.apache.spark.TaskContext

val determinateStage0 = sc.parallelize(0 until 1000 * 1000 * 100, 10)
val indeterminateStage1 = determinateStage0.repartition(200)
val indeterminateStage2 = indeterminateStage1.repartition(200)
val indeterminateStage3 = indeterminateStage2.repartition(100)
val indeterminateStage4 = indeterminateStage3.repartition(300)
val fetchFailIndeterminateStage4 = indeterminateStage4.map { x =>
  // Only on the first attempt of partition 190: run pkill against a java process.
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId == 190 &&
      TaskContext.get.stageAttemptNumber == 0) {
    throw new Exception("pkill -f -n java".!!)
  }
  x
}
val indeterminateStage5 = fetchFailIndeterminateStage4.repartition(200)
val finalStage6 = indeterminateStage5.repartition(100).collect().distinct.length
```

It's a simple job with multiple indeterminate stages. It gets a wrong answer on old Spark versions like 2.2/2.3, and is killed after #22112. With this fix, the job can retry all indeterminate stages, as shown in the screenshot, and get the right result.

Closes #25620 from xuanyuanking/SPARK-25341-8.27.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR upgrades Scala to **2.12.10**.

Release notes:

- Fix regression in large string interpolations with non-String typed splices

- Revert "Generate shallower ASTs in pattern translation"

- Fix regression in classpath when JARs have 'a.b' entries beside 'a/b'

- Faster compiler: 5–10% faster since 2.12.8

- Improved compatibility with JDK 11, 12, and 13

- Experimental support for build pipelining and outline type checking

More details:

https://github.com/scala/scala/releases/tag/v2.12.10

https://github.com/scala/scala/releases/tag/v2.12.9

## How was this patch tested?

Existing tests

Closes #25404 from wangyum/SPARK-28683.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This proposes to improve Spark instrumentation by adding a hook for user-defined metrics, extending Spark’s Dropwizard/Codahale metrics system.

The original motivation of this work was to add instrumentation for S3 filesystem access metrics by Spark job. Currently, [[ExecutorSource]] instruments HDFS and local filesystem metrics. Rather than extending the code there, we propose with this JIRA to add a metrics plugin system, which is more flexible and of more general use.

Context: The Spark metrics system provides a large variety of metrics that are useful for monitoring and troubleshooting Spark workloads. A typical workflow is to sink the metrics to a storage system and build dashboards on top of that.

Highlights:

- The metric plugin system makes it easy to implement instrumentation for S3 access by Spark jobs.

- The metrics plugin system allows for easy extensions of how Spark collects HDFS-related workload metrics. This is currently done using the Hadoop Filesystem GetAllStatistics method, which is deprecated in recent versions of Hadoop. Recent versions of Hadoop Filesystem recommend using the method GetGlobalStorageStatistics, which also provides several additional metrics. GetGlobalStorageStatistics is not available in Hadoop 2.7 (it was introduced in Hadoop 2.8). Using a metrics plugin for Spark would give an easy way to "opt in" to such new API calls for those deploying suitable Hadoop versions.

- We also have the use case of adding Hadoop filesystem monitoring for a custom Hadoop compliant filesystem in use in our organization (EOS using the XRootD protocol). The metrics plugin infrastructure makes this easy to do. Others may have similar use cases.

- More generally, this method makes it straightforward to plug in Filesystem and other metrics to the Spark monitoring system. Future work on plugin implementation can address extending monitoring to measure usage of external resources (OS, filesystem, network, accelerator cards, etc), that maybe would not normally be considered general enough for inclusion in Apache Spark code, but that can be nevertheless useful for specialized use cases, tests or troubleshooting.

Implementation:

The proposed implementation extends and modifies the work on Executor Plugin of SPARK-24918. Additionally, this is related to recent work on extending Spark executor metrics, such as SPARK-25228.

As discussed during the review, the implementation of this feature modifies the Developer API for Executor Plugins, such that the new version is incompatible with the original version in Spark 2.4.

## How was this patch tested?

This modifies existing tests for ExecutorPluginSuite to adapt them to the API changes. In addition, the new functionality for registering pluginMetrics has been manually tested running Spark on YARN and K8S clusters, in particular for monitoring S3 and for extending HDFS instrumentation with the Hadoop Filesystem "GetGlobalStorageStatistics" metrics. An executor metric plugin example and the code used for testing are available, for example at: https://github.com/cerndb/SparkExecutorPlugins

Closes #24901 from LucaCanali/executorMetricsPlugin.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

- Remove SQLContext.createExternalTable and Catalog.createExternalTable, deprecated in favor of createTable since 2.2.0, plus tests of deprecated methods

- Remove HiveContext, deprecated in 2.0.0, in favor of `SparkSession.builder.enableHiveSupport`

- Remove deprecated KinesisUtils.createStream methods, plus tests of deprecated methods, deprecated in 2.2.0

- Remove deprecated MLlib (not Spark ML) linear method support, mostly utility constructors and 'train' methods, and associated docs. This includes methods in LinearRegression, LogisticRegression, Lasso, RidgeRegression. These have been deprecated since 2.0.0

- Remove deprecated Pyspark MLlib linear method support, including LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD

- Remove 'runs' argument in KMeans.train() method, which has been a no-op since 2.0.0

- Remove deprecated ChiSqSelector isSorted protected method

- Remove deprecated 'yarn-cluster' and 'yarn-client' master argument in favor of 'yarn' and deploy mode 'cluster', etc

Notes:

- I was not able to remove deprecated DataFrameReader.json(RDD) in favor of DataFrameReader.json(Dataset); the former was deprecated in 2.2.0, but, it is still needed to support Pyspark's .json() method, which can't use a Dataset.

- Looks like SQLContext.createExternalTable was not actually deprecated in Pyspark, but, almost certainly was meant to be? Catalog.createExternalTable was.

- I afterwards noted that the toDegrees, toRadians functions were almost removed fully in SPARK-25908, but Felix suggested keeping just the R version as they hadn't been technically deprecated. I'd like to revisit that. Do we really want the inconsistency? I'm not against reverting it again, but then that implies leaving SQLContext.createExternalTable just in Pyspark too, which seems weird.

- I *kept* LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD, RidgeRegressionWithSGD in Pyspark, though deprecated, as it is hard to remove them (still used by StreamingLogisticRegressionWithSGD?) and they are not fully removed in Scala. Maybe should not have been deprecated.

### Why are the changes needed?

Deprecated items are easiest to remove in a major release, so we should do so as much as possible for Spark 3. This does not target items deprecated 'recently' as of Spark 2.3, which is still 18 months old.

### Does this PR introduce any user-facing change?

Yes, in that deprecated items are removed from some public APIs.

### How was this patch tested?

Existing tests.

Closes #25684 from srowen/SPARK-28980.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Add HasNumFeatures on the Scala side, with `1<<18` as the default value.

### Why are the changes needed?

HasNumFeatures was already added on the Python side, so it is reasonable to keep the two in sync.

I did not find other similar places.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing testsuites

Closes #25671 from zhengruifeng/add_HasNumFeatures.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Delete the incorrect method `def setWeightCol(value: Double): this.type = set(threshold, value)` in `LinearSVCModel`

### Why are the changes needed?

`LinearSVCModel` should not provide this setter; moreover, the method is wrongly defined, since it sets `threshold` rather than a weight column.

### Does this PR introduce any user-facing change?

yes, a public method is removed

### How was this patch tested?

existing suites

Closes #25510 from zhengruifeng/linearsvc_model_set_weightcol.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Fixes a vulnerability from the GitHub Security Advisory Database:

_Moderate severity vulnerability that affects com.puppycrawl.tools:checkstyle_

Checkstyle prior to 8.18 loads external DTDs by default, which can potentially lead to denial of service attacks or the leaking of confidential information.

https://github.com/checkstyle/checkstyle/issues/6474

Affected versions: < 8.18

## How was this patch tested?

Ran checkstyle locally.

Closes #25432 from Fokko/SPARK-28713.

Authored-by: Fokko Driesprong <fokko@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This patch keeps things consistent wherever the UTF-8 charset is needed by using `StandardCharsets.UTF_8` instead of the string "UTF-8". Where a `String` is required, `StandardCharsets.UTF_8.name()` is used.

This change also brings the benefit of getting rid of `UnsupportedEncodingException`, as we're providing `Charset` instead of `String` whenever possible.

This also changes some private Catalyst helper methods to operate on encodings as `Charset` objects rather than strings.
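A small sketch of the pattern, using only JDK classes (`Utf8Sketch` is an illustrative name, not code from the patch):

```scala
import java.nio.charset.StandardCharsets

object Utf8Sketch {
  // The Charset overloads do not declare UnsupportedEncodingException,
  // unlike the overloads that take a charset name such as "UTF-8".
  def encode(s: String): Array[Byte] = s.getBytes(StandardCharsets.UTF_8)

  def decode(bytes: Array[Byte]): String = new String(bytes, StandardCharsets.UTF_8)

  // When an API only accepts a charset name, derive it from the constant.
  val charsetName: String = StandardCharsets.UTF_8.name()
}
```

Using the constant also avoids typos in the charset name, which would otherwise only surface at runtime.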

## How was this patch tested?

Existing unit tests.

Closes #25335 from HeartSaVioR/SPARK-28601.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Currently, PythonBroadcast may delete its data file while a Python worker still needs it. This happens because PythonBroadcast overrides the `finalize()` method to delete its data file. So, when GC happens and there are no references to the broadcast variable, it may trigger `finalize()` to delete the data file. That also means the data under a Python broadcast variable cannot be deleted when `unpersist()`/`destroy()` is called, but relies on GC.

In this PR, we remove the `finalize()` method and map the PythonBroadcast data file to a BroadcastBlock (which has the same broadcast id as the broadcast variable that wraps this PythonBroadcast) when the PythonBroadcast is deserialized. As a result, the data file can be deleted just like the other pieces of the broadcast variable when `unpersist()`/`destroy()` is called, and no longer relies on GC.

## How was this patch tested?

Added a Python test, and tested manually (verified creation and deletion of the broadcast block).

Closes #25262 from Ngone51/SPARK-28486.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR proposes to make Javadoc in org.apache.spark.shuffle.api visible.

## How was this patch tested?

Manually built the doc and checked it.

Closes #25323 from HyukjinKwon/SPARK-28568.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Prior to this change, in an executor, on each heartbeat, memory metrics are polled and sent in the heartbeat. The heartbeat interval is 10s by default. With this change, in an executor, memory metrics can optionally be polled in a separate poller at a shorter interval.

For each executor, we use a map of (stageId, stageAttemptId) to (count of running tasks, executor metric peaks) to track what stages are active as well as the per-stage memory metric peaks. When polling the executor memory metrics, we attribute the memory to the active stage(s), and update the peaks. In a heartbeat, we send the per-stage peaks (for stages active at that time), and then reset the peaks. The semantics would be that the per-stage peaks sent in each heartbeat are the peaks since the last heartbeat.

We also keep a map of taskId to memory metric peaks. This tracks the metric peaks during the lifetime of the task. The polling thread updates this as well. At end of a task, we send the peak metric values in the task result. In case of task failure, we send the peak metric values in the `TaskFailedReason`.

We continue to do the stage-level aggregation in the EventLoggingListener.

For the driver, we still only poll on heartbeats. What the driver sends will be the current values of the metrics in the driver at the time of the heartbeat. This is semantically the same as before.
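The executor-side per-stage bookkeeping described above can be sketched as follows. This is a simplified, single-threaded model with invented names (`StagePeakSketch`, `Tracker`), not the code in the patch. In particular, reporting every tracked stage on heartbeat and dropping stages with no running tasks afterwards is an assumption of this sketch.

```scala
import scala.collection.mutable

object StagePeakSketch {
  type StageKey = (Int, Int) // (stageId, stageAttemptId)

  // Per stage attempt: count of running tasks and peak value per metric.
  private final class Entry(var running: Int, val peaks: Array[Long])

  final class Tracker(numMetrics: Int) {
    private val stages = mutable.Map.empty[StageKey, Entry]

    def taskStarted(key: StageKey): Unit = {
      val e = stages.getOrElseUpdate(key, new Entry(0, new Array[Long](numMetrics)))
      e.running += 1
    }

    def taskEnded(key: StageKey): Unit =
      stages.get(key).foreach(e => e.running -= 1)

    // Poller: attribute the sample to every stage that has running tasks.
    def poll(sample: Array[Long]): Unit =
      for (e <- stages.values if e.running > 0; i <- sample.indices)
        e.peaks(i) = math.max(e.peaks(i), sample(i))

    // Heartbeat: report peaks since the last heartbeat, then reset them
    // and forget stages that no longer have running tasks.
    def heartbeat(): Map[StageKey, Vector[Long]] = {
      val report = stages.map { case (k, e) => k -> e.peaks.toVector }.toMap
      stages.values.foreach(e => java.util.Arrays.fill(e.peaks, 0L))
      val finished = stages.collect { case (k, e) if e.running == 0 => k }.toList
      finished.foreach(k => stages.remove(k))
      report
    }
  }
}
```

Because the peaks are reset on every heartbeat, each report only covers the interval since the previous one, which matches the semantics stated above.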

## How was this patch tested?

Unit tests. Manually tested applications on an actual system and checked the event logs; the metrics appear in the SparkListenerTaskEnd and SparkListenerStageExecutorMetrics events.

Closes #23767 from wypoon/wypoon_SPARK-26329.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Remove the deprecated `ImageSchema.readImages`.

Move some useful methods from class `ImageSchema` into class `ImageFileFormat`.

In PySpark, rename the `ImageSchema` class to `ImageUtils`, and keep some useful Python methods in it.

## How was this patch tested?

UT.

Closes #25245 from WeichenXu123/remove_image_schema.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Right now `Error` is not sent to `QueryExecutionListener.onFailure`. If there is any `Error` (such as `AssertionError`) when running a query, `QueryExecutionListener.onFailure` cannot be triggered.

This PR changes `onFailure` to accept a `Throwable` instead.
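The difference can be illustrated with a hypothetical listener shape (the `Listener` trait and `runWithListener` helper below are made up for this sketch and are not Spark's `QueryExecutionListener`): a handler that only matches `Exception` never sees an `AssertionError`, while one typed on `Throwable` does.

```scala
object OnFailureSketch {
  // Hypothetical listener; the callback accepts any Throwable, so an
  // Error such as AssertionError can be reported too.
  trait Listener {
    def onFailure(funcName: String, error: Throwable): Unit
  }

  // Runs the body, notifies the listener on any failure, then rethrows.
  def runWithListener[T](name: String, listener: Listener)(body: => T): T =
    try body
    catch {
      case t: Throwable =>
        listener.onFailure(name, t)
        throw t
    }
}
```

The failure is rethrown after notification, so the caller still observes the original error.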

## How was this patch tested?

Jenkins

Closes #25292 from zsxwing/fix-QueryExecutionListener.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This patch proposes moving all Trigger implementations to `Triggers.scala`, to avoid exposing these implementations to end users and to let end users deal only with the `Trigger.xxx` static methods. This fits the intention behind the deprecation of `ProcessingTime`, and we agreed to move the others without deprecation, as this patch will be shipped in a major version (Spark 3.0.0).

## How was this patch tested?

UTs modified to work with newly introduced class.

Closes #24996 from HeartSaVioR/SPARK-28199.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Track tasks separately for each stage attempt (instead of tracking by stage), and do NOT reset the numRunningTasks to 0 on StageCompleted.

In the case of a stage retry, the `taskEnd` event from the zombie stage sometimes makes `totalRunningTasks` negative, which causes the job to get stuck.

A similar problem also exists with `stageIdToTaskIndices` and `stageIdToSpeculativeTaskIndices`.

If it is a failed `taskEnd` event of the zombie stage, this causes `stageIdToTaskIndices` or `stageIdToSpeculativeTaskIndices` to remove the task index of the active stage, and `totalPendingTasks` increases unexpectedly.
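A minimal sketch of tracking by stage attempt rather than by stage id follows. The names (`StageAttempt`, `RunningTaskTracker`) are illustrative only and this is not the listener code touched by the patch. Because the key includes the attempt id, a late event from a zombie attempt cannot change the count of the active attempt.

```scala
import scala.collection.mutable

object StageAttemptTrackingSketch {
  final case class StageAttempt(stageId: Int, attemptId: Int)

  final class RunningTaskTracker {
    private val running =
      mutable.Map.empty[StageAttempt, Int].withDefaultValue(0)

    def onTaskStart(attempt: StageAttempt): Unit =
      running(attempt) += 1

    // Decrement only the attempt the task belongs to; never go below zero.
    def onTaskEnd(attempt: StageAttempt): Unit = {
      val left = running(attempt) - 1
      if (left <= 0) running.remove(attempt) else running(attempt) = left
    }

    def totalRunningTasks: Int = running.values.sum
  }
}
```

In this sketch an unexpected or duplicate `onTaskEnd` for an attempt that is no longer tracked is simply ignored, so the total can never become negative.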

## How was this patch tested?

Unit test: "properly handle task end events from completed stages".

Closes #24497 from cxzl25/fix_stuck_job_follow_up.

Authored-by: sychen <sychen@ctrip.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

This PR introduces the necessary Maven modules for the new [Spark Graph](https://issues.apache.org/jira/browse/SPARK-25994) feature for Spark 3.0.

* `spark-graph` is a parent module that users depend on to get all graph functionalities (Cypher and Graph Algorithms)

* `spark-graph-api` defines the [Property Graph API](https://docs.google.com/document/d/1Wxzghj0PvpOVu7XD1iA8uonRYhexwn18utdcTxtkxlI) that is being shared between Cypher and Algorithms

* `spark-cypher` contains a Cypher query engine implementation

Both `spark-graph-api` and `spark-cypher` depend on Spark SQL.

Note that the Maven module for Graph Algorithms is not part of this PR and will be introduced in https://issues.apache.org/jira/browse/SPARK-27302

A PoC for a running Cypher implementation can be found in this WIP PR https://github.com/apache/spark/pull/24297

## How was this patch tested?

Passed Jenkins with all profiles, then manually built and checked the following.

```

$ ls assembly/target/scala-2.12/jars/spark-cypher*

assembly/target/scala-2.12/jars/spark-cypher_2.12-3.0.0-SNAPSHOT.jar

$ ls assembly/target/scala-2.12/jars/spark-graph* | grep -v graphx

assembly/target/scala-2.12/jars/spark-graph-api_2.12-3.0.0-SNAPSHOT.jar

assembly/target/scala-2.12/jars/spark-graph_2.12-3.0.0-SNAPSHOT.jar

```

Closes #24490 from s1ck/SPARK-27300.

Lead-authored-by: Martin Junghanns <martin.junghanns@neotechnology.com>

Co-authored-by: Max Kießling <max@kopfueber.org>

Co-authored-by: Martin Junghanns <martin.junghanns@neo4j.com>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Move methods that implement v2 catalog operations to CatalogV2Util so they can be used in #24768.

## How was this patch tested?

Behavior is validated by existing tests.

Closes #24813 from rdblue/SPARK-27964-add-catalog-v2-util.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Currently we are in a strange state: some data source v2 interfaces (catalog related) are in sql/catalyst, while others (Table, ScanBuilder, DataReader, etc.) are in sql/core.

I don't see a reason to keep the data source v2 API in two modules. If we have to pick one module, I think sql/catalyst is the one to go with.

Catalyst module already has some user-facing stuff like DataType, Row, etc. And we have to update `Analyzer` and `SessionCatalog` to support the new catalog plugin, which needs to be in the catalyst module.

This PR can solve the problem we have in https://github.com/apache/spark/pull/24246

## How was this patch tested?

existing tests

Closes #24416 from cloud-fan/move.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR adds support for scheduling tasks with extra resource requirements (e.g. GPUs) on executors with available resources. It also introduces a new method `TaskContext.resources()` so tasks can access the resource addresses allocated to them.

## How was this patch tested?

* Added new end-to-end test cases in `SparkContextSuite`;

* Added new test case in `CoarseGrainedSchedulerBackendSuite`;

* Added new test case in `CoarseGrainedExecutorBackendSuite`;

* Added new test case in `TaskSchedulerImplSuite`;

* Added new test case in `TaskSetManagerSuite`;

* Updated existing tests.

Closes #24374 from jiangxb1987/gpu.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

The following three thread configuration items apply to both the driver and the executors:

- `spark.rpc.io.serverThreads`
- `spark.rpc.io.clientThreads`
- `spark.rpc.netty.dispatcher.numThreads`

We split them into driver-specific and executor-specific settings:

- `spark.driver.rpc.io.serverThreads` <-> `spark.executor.rpc.io.serverThreads`
- `spark.driver.rpc.io.clientThreads` <-> `spark.executor.rpc.io.clientThreads`
- `spark.driver.rpc.netty.dispatcher.numThreads` <-> `spark.executor.rpc.netty.dispatcher.numThreads`

Spark reads these specifics first and fall back to the common configurations.
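The lookup order described above can be sketched as follows. This is a minimal illustration in Python, not the actual Spark code: the helper `resolve_threads` and the sample values are hypothetical, and only the configuration key names come from this description.

```python
from typing import Mapping, Optional


def resolve_threads(conf: Mapping[str, str], role: str, suffix: str) -> Optional[int]:
    """Return the thread count for `role` ("driver" or "executor").

    Hypothetical helper: tries spark.<role>.<suffix> first, then the
    common spark.<suffix> key, mirroring the fallback described above.
    """
    for key in (f"spark.{role}.{suffix}", f"spark.{suffix}"):
        if key in conf:
            return int(conf[key])
    return None  # neither key is set; the caller applies its own default


# Sample values are made up for illustration.
conf = {
    "spark.rpc.io.serverThreads": "8",
    "spark.driver.rpc.io.serverThreads": "12",
}

# The driver has a specific setting; the executor falls back to the common one.
driver_threads = resolve_threads(conf, "driver", "rpc.io.serverThreads")
executor_threads = resolve_threads(conf, "executor", "rpc.io.serverThreads")
```

With this order, existing deployments that only set the common keys keep their current behaviour.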

## How was this patch tested?

We ran the SimpleMap app without shuffle as a benchmark to test Spark's scalability in HPC with an Omni-Path NIC, which has higher bandwidth than a normal Ethernet NIC.

Spark's base version is 2.4.0.

Spark ran in standalone mode, with the driver on its own node.

After the separation, performance improves significantly at 256 and 512 nodes. See the SimpleMapTask test results below, before and after the enhancement. You can view the tables in the [JIRA](https://issues.apache.org/jira/browse/SPARK-26632) too.

ds: spark.driver.rpc.io.serverThreads

dc: spark.driver.rpc.io.clientThreads

dd: spark.driver.rpc.netty.dispatcher.numThreads

ed: spark.executor.rpc.netty.dispatcher.numThreads

time: Overall Time (s)

old time: Overall Time without Separation (s)

**Before:**

nodes | ds | dc | dd | ed | time
-- | -- | -- | -- | -- | --
128 nodes | 8 | 8 | 8 | 8 | 108
256 nodes | 8 | 8 | 8 | 8 | 196
512 nodes | 8 | 8 | 8 | 8 | 377

**After:**

nodes | ds | dc | dd | ed | time | improvement
-- | -- | -- | -- | -- | -- | --
128 nodes | 15 | 15 | 10 | 30 | 107 | 0.9%
256 nodes | 12 | 15 | 10 | 30 | 159 | 18.8%
512 nodes | 12 | 15 | 10 | 30 | 283 | 24.9%
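For reference, the improvement column can be reproduced from the two time columns. The sketch below assumes improvement is defined as `(before - after) / before` and that the percentages are truncated (not rounded) to one decimal place; that assumption is what matches all three rows.

```python
import math

# Overall time in seconds, taken from the two tables above.
before = {128: 108, 256: 196, 512: 377}
after = {128: 107, 256: 159, 512: 283}


def improvement_pct(old: float, new: float) -> float:
    """Relative reduction in overall time, as a percentage."""
    return (old - new) / old * 100.0


def truncate(value: float, digits: int = 1) -> float:
    """Drop everything past `digits` decimal places (assumed convention)."""
    factor = 10 ** digits
    return math.floor(value * factor) / factor


improvements = {n: truncate(improvement_pct(before[n], after[n])) for n in before}
```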

Closes #23560 from zjf2012/thread_conf_separation.

Authored-by: jiafu.zhang@intel.com <jiafu.zhang@intel.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fixed the `spark-<version>-yarn-shuffle.jar` artifact packaging to shade the native netty libraries:

- shade the `META-INF/native/libnetty_*` native libraries when packaging the yarn shuffle service jar. This is required because the netty library loader derives the native library name from the shaded package name.

- updated the `org/spark_project` shade package prefix to `org/sparkproject` (i.e. removed the underscore), as the former breaks netty native library loading.

This was causing the yarn external shuffle service to fail when `spark.shuffle.io.mode=EPOLL`.
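To illustrate why the prefix matters, here is a rough Python model of how a shaded native library name could be derived. The naming rule (dots in the shaded package prefix replaced by underscores and prepended to the library name), the library name `netty_transport_native_epoll`, and the `x86_64` suffix are assumptions about netty's loader, not something stated in this PR; both helpers are hypothetical.

```python
def shaded_native_lib_path(shade_prefix: str,
                           lib: str = "netty_transport_native_epoll",
                           arch: str = "x86_64") -> str:
    """Resource path the loader is assumed to look for after shading."""
    mangled = shade_prefix.replace(".", "_")
    return f"META-INF/native/lib{mangled}_{lib}_{arch}.so"


def prefix_is_ambiguous(shade_prefix: str) -> bool:
    """An underscore in the prefix cannot be told apart from a replaced dot."""
    return "_" in shade_prefix


new_path = shaded_native_lib_path("org.sparkproject")
old_prefix_ambiguous = prefix_is_ambiguous("org.spark_project")
new_prefix_ambiguous = prefix_is_ambiguous("org.sparkproject")
```

Under these assumptions, the old `org.spark_project` prefix produces a name in which the package separator and the literal underscore are indistinguishable, which is consistent with the loading failure described above.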

## How was this patch tested?

Manual tests

Closes #24502 from amuraru/SPARK-27610_master.

Authored-by: Adi Muraru <amuraru@adobe.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Kind of related to https://github.com/gatorsmile/spark/pull/5 - let's update genjavadoc to see if it generates fewer spurious javadoc errors to begin with.

## How was this patch tested?

Existing docs build

Closes #24443 from srowen/genjavadoc013.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This is a follow-up of https://github.com/apache/spark/pull/24395. This PR updates `plugins.sbt`, too.

## How was this patch tested?

Pass the Jenkins.

Closes #24444 from dongjoon-hyun/SPARK-ASM71-2.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

The test time of `HiveClientVersions` is around 3.5 minutes.

This PR adds it to the parallel test suite list. To avoid colliding warehouse locations, the warehouse path is changed to a temporary directory.
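The idea of giving each suite its own warehouse location can be sketched like this. It is a Python illustration only: the helper name and the suite names are hypothetical, and the real change lives in the Scala test code.

```python
import os
import shutil
import tempfile


def make_warehouse_dir(suite_name: str) -> str:
    """Create a fresh, uniquely named temporary directory for one suite."""
    return tempfile.mkdtemp(prefix=f"warehouse-{suite_name}-")


# Two suites running in parallel each get their own location.
dir_a = make_warehouse_dir("SuiteA")
dir_b = make_warehouse_dir("SuiteB")
distinct = dir_a != dir_b

# Clean up once the suites are done.
for path in (dir_a, dir_b):
    shutil.rmtree(path)
```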

## How was this patch tested?

Unit test

Closes #24404 from gengliangwang/parallelTestFollowUp.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This patch modifies SparkBuild so that the largest / slowest test suites (or collections of suites) can run in their own forked JVMs, allowing them to be run in parallel with each other. This opt-in / whitelisting approach allows us to increase parallelism without having to fix a long-tail of flakiness / brittleness issues in tests which aren't performance bottlenecks.

See the comments in SparkBuild.scala for details, including a summary of why we sometimes opt to run entire groups of tests in a single forked JVM.

The time of a full pull request test run in Jenkins is reduced by around 53%:

before changes: 4hr 40min

after changes: 2hr 13min
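The quoted reduction follows directly from the two durations; a quick check in Python:

```python
def to_minutes(hours: int, minutes: int) -> int:
    """Convert an hours/minutes duration to minutes."""
    return hours * 60 + minutes


before_min = to_minutes(4, 40)  # 280 minutes
after_min = to_minutes(2, 13)   # 133 minutes

# Relative reduction in wall-clock time, as a percentage.
reduction_pct = (before_min - after_min) / before_min * 100
```

This gives a reduction of 52.5%, in line with the "around 53%" stated above.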

## How was this patch tested?

Unit test

Closes #24373 from gengliangwang/parallelTest.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The API docs should not include the "org.apache.spark.util.kvstore" package because they are internal private APIs. See the doc link: https://spark.apache.org/docs/latest/api/java/org/apache/spark/util/kvstore/LevelDB.html

## How was this patch tested?

N/A

Closes #24386 from gatorsmile/rmDoc.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Remove deprecated / no-op mllib.KMeans getRuns, setRuns

mllib.KMeans has getRuns, setRuns methods which haven't done anything since Spark 2.1. They're deprecated, and no-ops, and should be removed for Spark 3.

## How was this patch tested?

Existing tests.

Closes #24320 from srowen/SPARK-27410.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Remove Scala 2.11 support in build files and docs, and in various parts of code that accommodated 2.11. See some targeted comments below.

## How was this patch tested?

Existing tests.

Closes #23098 from srowen/SPARK-26132.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

`LEGACY_DRIVER_IDENTIFIER` and its references are removed.

The corresponding tests are updated.

## How was this patch tested?

Ran the existing unit tests.

Closes #24026 from shivusondur/newjira2.

Authored-by: shivusondur <shivusondur@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Use the sbt maven plugin option `antlr4TreatWarningsAsErrors` to make sure warnings are treated as errors while generating the parser. Without it, we may inadvertently introduce problems when making grammar changes. Please refer to PR-23897 for more context. We made a change in [pr-23925](https://github.com/apache/spark/pull/23925) which handled only the maven build.

In this PR, we handle the sbt build. I had submitted [PR-23](https://github.com/ihji/sbt-antlr4/pull/23) to enhance the sbt-antlr plugin to make it possible to pass the error-on-warning option.

## How was this patch tested?

Force a warning in the grammar file to check that the build fails. Then remove the warning to verify that the build succeeds.

Closes #24060 from dilipbiswal/sbt_build_antlr.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

The `Saveable` interface introduces `formatVersion`, which is protected and used nowhere. So this PR proposes to remove it.

## How was this patch tested?

existing tests

Closes #22830 from mgaido91/SPARK-25838.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The java version here means the versions of javac source, javac target, and scalac target. They could be consolidated as a single version (currently 1.8).

| |javac|scalac |
|------|-----|---------|
|source|1.8 |2.12/2.11|
|target|1.8 |1.8 |

The current issues are as follows:

* Maven build defines a single property (`java.version`) to specify java version while SBT build defines different properties for javac (`javacJVMVersion`) and scalac (`scalacJVMVersion`). SBT build should use a single property as Maven build does.

* Furthermore, it's better for SBT build to refer to `java.version` defined by Maven build. This is possible since we've already been using sbt-pom-reader.
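The "single source of truth" idea amounts to reading the property from the POM instead of repeating it in the SBT build. Spark does this through sbt-pom-reader; the Python sketch below only illustrates the lookup, using a trimmed, made-up POM fragment and a hypothetical helper `pom_property`.

```python
import xml.etree.ElementTree as ET

POM_NS = "http://maven.apache.org/POM/4.0.0"

# Trimmed sample for illustration; the real pom.xml has many more entries.
SAMPLE_POM = """<project xmlns="http://maven.apache.org/POM/4.0.0">
  <properties>
    <java.version>1.8</java.version>
  </properties>
</project>
"""


def pom_property(pom_xml: str, name: str) -> str:
    """Return the value of <properties>/<name> from a POM document."""
    root = ET.fromstring(pom_xml)
    props = root.find(f"{{{POM_NS}}}properties")
    if props is not None:
        for child in props:
            if child.tag == f"{{{POM_NS}}}{name}" and child.text:
                return child.text.strip()
    raise KeyError(name)


# Both the javac and scalac targets would be derived from this one value.
java_version = pom_property(SAMPLE_POM, "java.version")
```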

## How was this patch tested?

Tested locally.

```

build/mvn clean compile

build/sbt clean compile

```

Closes #23724 from seancxmao/specify-java-version-once.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently ANTLR v4 versions used by Maven and SBT are slightly different. Maven uses `4.7.1` while SBT uses `4.7`.

* Maven(`pom.xml`): `<antlr4.version>4.7.1</antlr4.version>`

* SBT(`project/SparkBuild.scala`): `antlr4Version in Antlr4 := "4.7"`

We should make Maven and SBT use a single version. Furthermore we'd better specify antlr4 version in one place to avoid mismatch between Maven and SBT in the future.

This PR lets SBT use the antlr4 version specified in the Maven POM file, rather than specifying its own. This is the same way `hadoop.version` is specified in `project/SparkBuild.scala`.

## How was this patch tested?

Tested locally.

After running `sbt compile`, the Java files generated by ANTLR are located at:

```

sql/catalyst/target/scala-2.12/src_managed/main/antlr4/org/apache/spark/sql/catalyst/parser/*.java

```

These Java files have a comment at the head. We can see now SBT uses ANTLR `4.7.1`.

```

// Generated from .../spark/sql/catalyst/src/main/antlr4/org/apache/spark/sql/catalyst/parser/SqlBase.g4 by ANTLR 4.7.1

```
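The manual header check can also be scripted. The snippet below is a small Python sketch, assuming the generated header always ends with `by ANTLR <version>` as in the line shown above; the helper name is hypothetical.

```python
import re
from typing import Optional

HEADER = ("// Generated from .../spark/sql/catalyst/src/main/antlr4/"
          "org/apache/spark/sql/catalyst/parser/SqlBase.g4 by ANTLR 4.7.1")


def antlr_version(header_line: str) -> Optional[str]:
    """Extract the ANTLR version from a generated file's header comment."""
    match = re.search(r"by ANTLR (\d+(?:\.\d+)*)\s*$", header_line)
    return match.group(1) if match else None


generated_with = antlr_version(HEADER)
```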

Closes #23713 from seancxmao/antlr4-version-consistent.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This patch proposes adding a new configuration to the SHS: a custom executor log URL pattern. It lets end users point executor log links at something other than what the RM provides, such as an external log service, so that executor logs can still be served when the NodeManager becomes unavailable (in the case of YARN).

End users can build their own custom executor log URLs from pre-defined patterns, which vary by resource manager. This patch adds some patterns for the YARN resource manager. (For the others, no executor log URL is available at all, so no patterns can be defined.)
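Conceptually, the pattern is a URL template whose placeholders are filled from per-executor attributes. The Python sketch below shows that substitution only; the `{{NAME}}` placeholder syntax, the placeholder names, the example host, and the helper `render_log_url` are all assumptions made for illustration, so check the doc change in this patch for the actual pattern list.

```python
import re
from typing import Mapping

PLACEHOLDER = re.compile(r"\{\{([A-Z_]+)\}\}")


def render_log_url(pattern: str, attributes: Mapping[str, str]) -> str:
    """Replace every {{NAME}} placeholder with its attribute value."""
    def substitute(match):
        name = match.group(1)
        if name not in attributes:
            raise KeyError(f"no value for pattern {name}")
        return attributes[name]

    return PLACEHOLDER.sub(substitute, pattern)


# Hypothetical pattern and attribute values.
url = render_log_url(
    "https://logs.example.com/{{CLUSTER_ID}}/{{CONTAINER_ID}}/{{FILE_NAME}}",
    {"CLUSTER_ID": "c1", "CONTAINER_ID": "container_01", "FILE_NAME": "stderr"},
)
```

Failing loudly on an unknown placeholder is a design choice of this sketch, not necessarily what the SHS does.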

Please refer to the doc change as well as the added UTs in this patch to see how to set up the feature.

## How was this patch tested?

Added UT, as well as manual test with YARN cluster

Closes #23260 from HeartSaVioR/SPARK-26311.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>