### What changes were proposed in this pull request?

Bugfix: link to correction location of Pyspark Dataframe documentation

### Why are the changes needed?

Current website returns "Not found"

### Does this PR introduce _any_ user-facing change?

Website fix

### How was this patch tested?

Documentation change

Closes#33420 from dominikgehl/feature/SPARK-36209.

Authored-by: Dominik Gehl <dog@open.ch>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Rework [PR](https://github.com/apache/spark/pull/33212) with suggestions.

This PR make `spark.read.json()` has the same behavior with Datasource API `spark.read.format("json").load("path")`. Spark should turn a non-nullable schema into nullable when using API `spark.read.json()` by default.

Here is an example:

```scala

val schema = StructType(Seq(StructField("value",

StructType(Seq(

StructField("x", IntegerType, nullable = false),

StructField("y", IntegerType, nullable = false)

)),

nullable = true

)))

val testDS = Seq("""{"value":{"x":1}}""").toDS

spark.read

.schema(schema)

.json(testDS)

.printSchema()

spark.read

.schema(schema)

.format("json")

.load("/tmp/json/t1")

.printSchema()

// root

// |-- value: struct (nullable = true)

// | |-- x: integer (nullable = true)

// | |-- y: integer (nullable = true)

```

Before this pr:

```

// output of spark.read.json()

root

|-- value: struct (nullable = true)

| |-- x: integer (nullable = false)

| |-- y: integer (nullable = false)

```

After this pr:

```

// output of spark.read.json()

root

|-- value: struct (nullable = true)

| |-- x: integer (nullable = true)

| |-- y: integer (nullable = true)

```

- `spark.read.csv()` also has the same problem.

- Datasource API `spark.read.format("json").load("path")` do this logical when resolve relation.

c77acf0bbc/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSource.scala (L415-L421)

### Does this PR introduce _any_ user-facing change?

Yes, `spark.read.json()` and `spark.read.csv()` not respect the user-given schema and always turn it into a nullable schema by default.

### How was this patch tested?

New test.

Closes#33436 from cfmcgrady/SPARK-35912-v3.

Authored-by: Fu Chen <cfmcgrady@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Update trasform's doc to latest code.

### Why are the changes needed?

keep consistence

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

No

Closes#33362 from AngersZhuuuu/SPARK-36153.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Spark 3.2.0 will use parquet-mr.1.12.0 version (or higher), that contains the column encryption feature which can be called from Spark SQL. The aim of this PR is to document the use of Parquet encryption in Spark.

### Why are the changes needed?

- To provide information on how to use Parquet column encryption

### Does this PR introduce _any_ user-facing change?

Yes, documents a new feature.

### How was this patch tested?

bundle exec jekyll build

Closes#32895 from ggershinsky/parquet-encryption-doc.

Authored-by: Gidon Gershinsky <ggershinsky@apple.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR documents about unicode literals added in SPARK-34051 (#31096) and a past PR in `sql-ref-literals.md`.

### Why are the changes needed?

Notice users about the literals.

### Does this PR introduce _any_ user-facing change?

Yes, but just add a sentence.

### How was this patch tested?

Built the document and confirmed the result.

```

SKIP_API=1 bundle exec jekyll build

```

Closes#33434 from sarutak/unicode-literal-doc.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes two issues highlighted in https://issues.apache.org/jira/browse/SPARK-36163:

- JDBC connection provider propagates incorrect connection properties.

- Ambiguity when more than one JDBC connection provider is available.

I updated `BasicConnectionProvider` to use `jdbcOptions.asConnectionProperties` to remove JDBC data source specific options.

I also added `connectionProvider` data source option that specifies the name of the provider, e.g. `db2`, `presto`, to allow enforcing this specific provider in case of ambiguity.

### Why are the changes needed?

Users can leverage `spark.sql.sources.disabledJdbcConnProviderList` but it is cumbersome and requires them to disable all other providers which could be problematic when using ambiguous providers in two or more different JDBC queries.

### Does this PR introduce _any_ user-facing change?

Yes

PROBLEM DESCRIPTION:

This introduces new JDBC data source option `connectionProvider` that allows users to select a specific JDBC connection provider based on the short name. I updated the SQL guide doc and README.

Before this change, the only way to resolve ambiguity was SQL conf to blacklist all of the other JDBC connection providers. After this change users will be able to specify the exact connection provider they need per data source.

### How was this patch tested?

I updated the existing `ConnectionProviderSuite` and added a new `BasicConnectionProviderSuite`.

Closes#33370 from sadikovi/fix-jdbc-conn-provider.

Authored-by: Ivan Sadikov <ivan.sadikov@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add the support for specifiying executor/driver node selector:

- spark.kubernetes.driver.node.selector.

- spark.kubernetes.executor.node.selector.

### Why are the changes needed?

Now we can only use "spark.kubernetes.node.selector" to set lable for executor/driver. Sometimes, we need set executor/driver pods to different selector separately.

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

- KubernetesConfSuite for new added configure

- BasicDriverFeatureStepSuite to make sure driver pods node selector set properly

- BasicExecutorFeatureStepSuite to make sure excutor pods node selector set properly

Closes#33283 from Yikun/SPARK-36075.

Authored-by: Yikun Jiang <yikunkero@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

```logtalk

21/05/20 21:41:21 WARN ExecutorPodsSnapshotsStoreImpl: Exception when notifying snapshot subscriber.

io.fabric8.kubernetes.client.KubernetesClientException: Failure executing: POST at: https://kubernetes.docker.internal:6443/api/v1/namespaces/default/pods. Message: Pod "spark_exec-exec-688" is invalid: [metadata.name: Invalid value: "spark_exec-exec-688": a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'), spec.hostname: Invalid value: "spark_exec-exec-688": a DNS-1123 label must consist of lower case alphanumeric characters or '-', and must start and end with an alphanumeric character (e.g. 'my-name', or '123-abc', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?')]. Received status: Status(apiVersion=v1, code=422, details=StatusDetails(causes=[StatusCause(field=metadata.name, message=Invalid value: "spark_exec-exec-688": a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'), reason=FieldValueInvalid, additionalProperties={}), StatusCause(field=spec.hostname, message=Invalid value: "spark_exec-exec-688": a DNS-1123 label must consist of lower case alphanumeric characters or '-', and must start and end with an alphanumeric character (e.g. 'my-name', or '123-abc', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?'), reason=FieldValueInvalid, additionalProperties={})], group=null, kind=Pod, name=spark_exec-exec-688, retryAfterSeconds=null, uid=null, additionalProperties={}), kind=Status, message=Pod "spark_exec-exec-688" is invalid: [metadata.name: Invalid value: "spark_exec-exec-688": a DNS-1123 subdomain must consist of lower case alphanumeric characters, '-' or '.', and must start and end with an alphanumeric character (e.g. 'example.com', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'), spec.hostname: Invalid value: "spark_exec-exec-688": a DNS-1123 label must consist of lower case alphanumeric characters or '-', and must start and end with an alphanumeric character (e.g. 'my-name', or '123-abc', regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?')], metadata=ListMeta(_continue=null, remainingItemCount=null, resourceVersion=null, selfLink=null, additionalProperties={}), reason=Invalid, status=Failure, additionalProperties={}).

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.requestFailure(OperationSupport.java:583)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.assertResponseCode(OperationSupport.java:522)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:487)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:448)

at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleCreate(OperationSupport.java:263)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.handleCreate(BaseOperation.java:870)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.create(BaseOperation.java:365)

at io.fabric8.kubernetes.client.dsl.base.BaseOperation.create(BaseOperation.java:86)

```

When `spark.kubernetes.executor.podNamePrefix` contains invalid characters, the driver will continuously fail to request executors from k8s master, which causes the app to hang with the above message - `'Message: Pod "spark_exec-exec-688" is invalid'`.

In this PR we fail the app when the setting is wrong.

### Why are the changes needed?

`spark.kubernetes.executor.podNamePrefix` is used when users may want full control of executor pod names. It will hang apps w/ wrong characters, it's better to fail directly.

### Does this PR introduce _any_ user-facing change?

yes, invalid `spark.kubernetes.executor.podNamePrefix` cause app to fail not to hang.

### How was this patch tested?

new tests

Closes#32610 from yaooqinn/SPARK-35460.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Add reference to kubernetes-client's version

### Why are the changes needed?

Running Spark on Kubernetes potentially has upper limitation of Kubernetes version.

I think it is better for users to notice it because Kubernetes update speed is so fast that users tends to run Spark Jobs on unsupported version.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

SKIP_API=1 bundle exec jekyll build

Closes#33255 from yoda-mon/add-reference-kubernetes-client.

Authored-by: yoda-mon <yodal@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR modifies comment for `UTF8String.trimAll` and`sql-migration-guide.mld`.

The comment for `UTF8String.trimAll` says like as follows.

```

Trims whitespaces ({literal <=} ASCII 32) from both ends of this string.

```

Similarly, `sql-migration-guide.md` mentions about the behavior of `cast` like as follows.

```

In Spark 3.0, when casting string value to integral types(tinyint, smallint, int and bigint),

datetime types(date, timestamp and interval) and boolean type,

the leading and trailing whitespaces (<= ASCII 32) will be trimmed before converted to these type values,

for example, `cast(' 1\t' as int)` results `1`, `cast(' 1\t' as boolean)` results `true`,

`cast('2019-10-10\t as date)` results the date value `2019-10-10`.

In Spark version 2.4 and below, when casting string to integrals and booleans,

it does not trim the whitespaces from both ends; the foregoing results is `null`,

while to datetimes, only the trailing spaces (= ASCII 32) are removed.

```

But SPARK-32559 (#29375) changed the behavior and only whitespace ASCII characters will be trimmed since Spark 3.0.1.

### Why are the changes needed?

To follow the previous change.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Confirmed the document built by the following command.

```

SKIP_API=1 bundle exec jekyll build

```

Closes#33287 from sarutak/fix-utf8string-trim-issue.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR allow the parser to parse unit list interval literals like `'3' day '10' hours '3' seconds` or `'8' years '3' months` as `YearMonthIntervalType` or `DayTimeIntervalType`.

### Why are the changes needed?

For ANSI compliance.

### Does this PR introduce _any_ user-facing change?

Yes. I noted the following things in the `sql-migration-guide.md`.

* Unit list interval literals are parsed as `YearMonthIntervaType` or `DayTimeIntervalType` instead of `CalendarIntervalType`.

* `WEEK`, `MILLISECONS`, `MICROSECOND` and `NANOSECOND` are not valid units for unit list interval literals.

* Units of year-month and day-time cannot be mixed like `1 YEAR 2 MINUTES`.

### How was this patch tested?

New tests and modified tests.

Closes#32949 from sarutak/day-time-multi-units.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to update the SQL migration guide, in particular the section about the migration from Spark 2.4 to 3.0. New item informs users about the following issue:

**What**: Spark doesn't detect encoding (charset) in CSV files with BOM correctly. Such files can be read only in the multiLine mode when the CSV option encoding matches to the actual encoding of CSV files. For example, Spark cannot read UTF-16BE CSV files when encoding is set to UTF-8 which is the default mode. This is the case of the current ES ticket.

**Why**: In previous Spark versions, encoding wasn't propagated to the underlying library that means the lib tried to detect file encoding automatically. It could success for some encodings that require BOM presents at the beginning of files. Starting from the versions 3.0, users can specify file encoding via the CSV option encoding which has UTF-8 as the default value. Spark propagates such default to the underlying library (uniVocity), and as a consequence this turned off encoding auto-detection.

**When**: Since Spark 3.0. In particular, the commit 2df34db586 causes the issue.

**Workaround**: Enabling the encoding auto-detection mechanism in uniVocity by passing null as the value of CSV option encoding. A more recommended approach is to set the encoding option explicitly.

### Why are the changes needed?

To improve user experience with Spark SQL. This should help to users in their migration from Spark 2.4.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Should be checked by building docs in GA/jenkins.

Closes#33300 from MaxGekk/csv-encoding-migration-guide.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Update AQE is `disabled` to `enabled` in sql-performance-tuning docs

### Why are the changes needed?

Make docs correct.

### Does this PR introduce _any_ user-facing change?

yes, docs changed.

### How was this patch tested?

Not need.

Closes#33295 from ulysses-you/enable-AQE.

Lead-authored-by: ulysses-you <ulyssesyou18@gmail.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

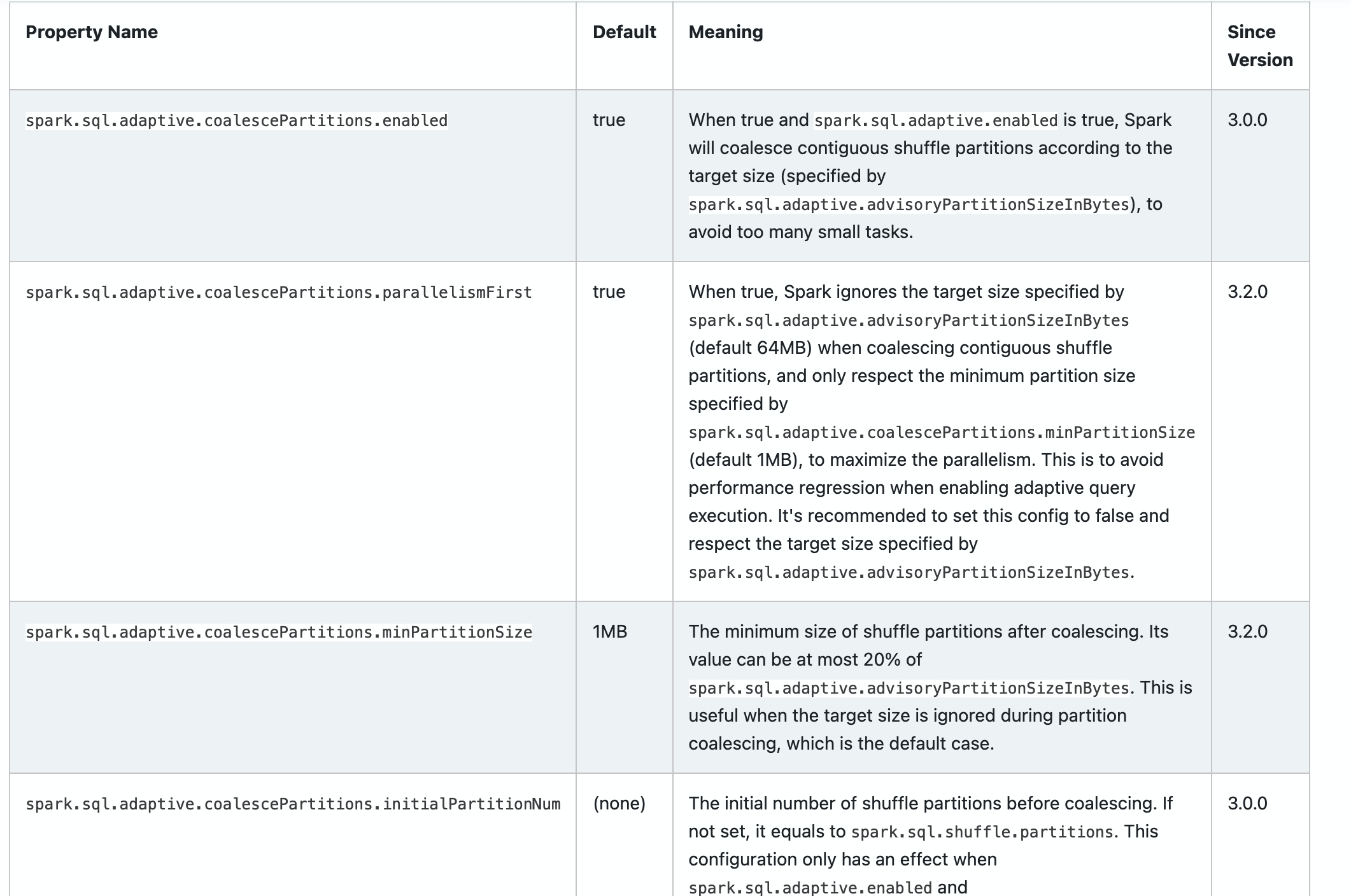

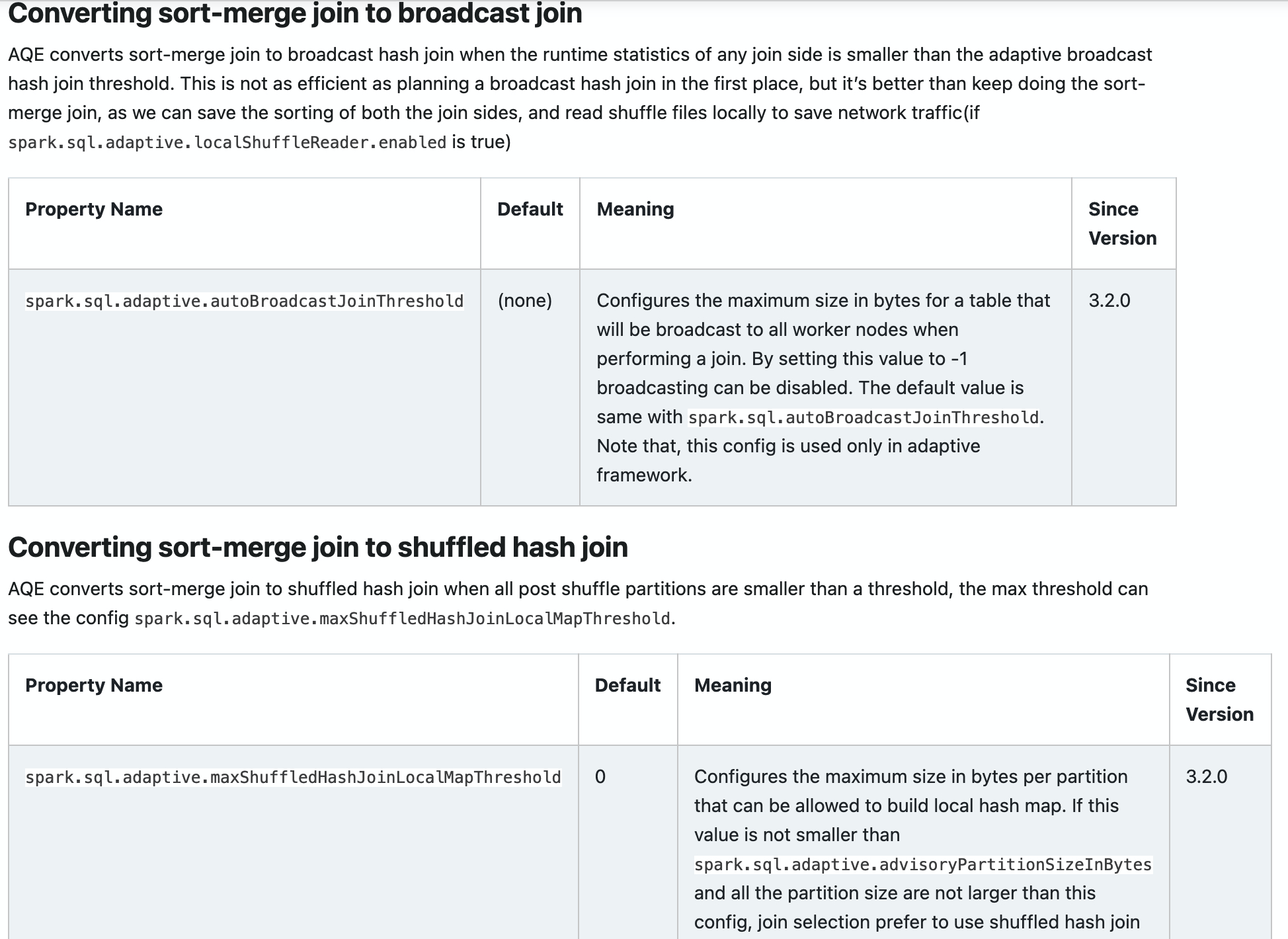

### What changes were proposed in this pull request?

Add new configs in sql-performance-tuning docs.

* spark.sql.adaptive.coalescePartitions.parallelismFirst

* spark.sql.adaptive.coalescePartitions.minPartitionSize

* spark.sql.adaptive.autoBroadcastJoinThreshold

* spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold

### Why are the changes needed?

Help user to find them.

### Does this PR introduce _any_ user-facing change?

yes, docs changed.

### How was this patch tested?

Closes#32960 from ulysses-you/SPARK-35813.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to remove the automatic build guides of documentation in `docs/README.md`.

### Why are the changes needed?

This doesn't work very well:

1. It doesn't detect the changes in RST files. But PySpark internally generates RST files so we can't just simply include it in the detection. Otherwise, it goes to an infinite loop

2. During PySpark documentation generation, it launches some jobs to generate plot images now. This is broken with `entr` command, and the job fails. Seems like it's related to how `entr` creates the process internally.

3. Minor issue but the documentation build directory was changed (`_build` -> `build` in `python/docs`)

I don't think it's worthwhile testing and fixing the docs to show an working example because dev people are already able to do it manually.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested.

Closes#33266 from HyukjinKwon/SPARK-36051.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to update `master` branch version to 3.3.0-SNAPSHOT.

### Why are the changes needed?

Start to prepare Apache Spark 3.3.0 and the published snapshot version should not conflict with `branch-3.2`.

### Does this PR introduce _any_ user-facing change?

N/A.

### How was this patch tested?

Pass the CIs.

Closes#33196 from dongjoon-hyun/SPARK-35996.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

As described in #32831, Spark has compatible issues when querying a view created by an

older version. The root cause is that Spark changed the auto-generated alias name. To avoid

this in the future, we could ask the user to specify explicit column names when creating

a view.

### Why are the changes needed?

Avoid compatible issue when querying a view

### Does this PR introduce _any_ user-facing change?

Yes. User will get error when running query below after this change

```

CREATE OR REPLACE VIEW v AS SELECT CAST(t.a AS INT), to_date(t.b, 'yyyyMMdd') FROM t

```

### How was this patch tested?

not yet

Closes#32832 from linhongliu-db/SPARK-35686-no-auto-alias.

Authored-by: Linhong Liu <linhong.liu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In https://issues.apache.org/jira/browse/SPARK-34862, we added support for ORC nested column vectorized reader, and it is disabled by default for now. So we would like to add the user-facing documentation for it, and user can opt-in to use it if they want.

### Why are the changes needed?

To make user be aware of the feature, and let them know the instruction to use the feature.

### Does this PR introduce _any_ user-facing change?

Yes, the documentation itself.

### How was this patch tested?

Manually check generated documentation as below.

<img width="1153" alt="Screen Shot 2021-07-01 at 12 19 40 AM" src="https://user-images.githubusercontent.com/4629931/124083422-b0724280-da02-11eb-93aa-a25d118ba56e.png">

<img width="1147" alt="Screen Shot 2021-07-01 at 12 19 52 AM" src="https://user-images.githubusercontent.com/4629931/124083442-b5cf8d00-da02-11eb-899f-827d55b8558d.png">

Closes#33168 from c21/orc-doc.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to deprecate old Java 8 versions prior to 8u201.

### Why are the changes needed?

This is a preparation of using G1GC during the migration among Java LTS versions (8/11/17).

8u162 has the following fix.

- JDK-8205376: JVM Crash during G1 GC

8u201 has the following fix.

- JDK-8208873: C1: G1 barriers don't preserve FP registers

### Does this PR introduce _any_ user-facing change?

No, Today's Java8 is usually 1.8.0_292 and this is just a deprecation in documentation.

### How was this patch tested?

N/A

Closes#33166 from dongjoon-hyun/SPARK-35962.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Deprecate Python 3.6 in Spark documentation

### Why are the changes needed?

According to https://endoflife.date/python, Python 3.6 will be EOL on 23 Dec, 2021.

We should prepare for the deprecation of Python 3.6 support in Spark in advance.

### Does this PR introduce _any_ user-facing change?

N/A.

### How was this patch tested?

Manual tests.

Closes#33141 from xinrong-databricks/deprecate3.6_doc.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

There are two new Avro options `datetimeRebaseMode` and `positionalFieldMatching` after Spark 3.2.

We should document the since version so that users can know whether the option works in their Spark version.

### Why are the changes needed?

Better documentation.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manual preview on local setup.

<img width="828" alt="Screen Shot 2021-06-30 at 5 05 54 PM" src="https://user-images.githubusercontent.com/1097932/123934000-ba833b00-d947-11eb-9ca5-ce8ff8add74b.png">

<img width="711" alt="Screen Shot 2021-06-30 at 5 06 34 PM" src="https://user-images.githubusercontent.com/1097932/123934126-d4bd1900-d947-11eb-8d80-69df8f3d9900.png">

Closes#33153 from gengliangwang/version.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

Provide the (configurable) ability to perform Avro-to-Catalyst schema field matching using the position of the fields instead of their names. A new `option` is added for the Avro datasource, `positionalFieldMatching`, which instructs `AvroSerializer`/`AvroDeserializer` to perform positional field matching instead of matching by name.

### Why are the changes needed?

This by-name matching is somewhat recent; prior to PR #24635, at least on the write path, schemas were matched by positionally ("structural" comparison). While by-name is better behavior as a default, it will be better to make this configurable by a user. Even at the time that PR #24635 was handled, there was [interest in making this behavior configurable](https://github.com/apache/spark/pull/24635#issuecomment-494205251), but it appears it went unaddressed.

There is precedence for configurability of this behavior as seen in PR #29737, which added this support for ORC. Besides this precedence, the behavior of Hive is to perform matching positionally ([ref](https://cwiki.apache.org/confluence/display/Hive/AvroSerDe#AvroSerDe-WritingtablestoAvrofiles)), so this is behavior that Hadoop/Hive ecosystem users are familiar with.

### Does this PR introduce _any_ user-facing change?

Yes, a new option is provided for the Avro datasource, `positionalFieldMatching`, which provides compatibility with Hive and pre-3.0.0 Spark behavior.

### How was this patch tested?

New unit tests are added within `AvroSuite`, `AvroSchemaHelperSuite`, and `AvroSerdeSuite`; and most of the existing tests within `AvroSerdeSuite` are adapted to perform the same test using by-name and positional matching to ensure feature parity.

Closes#31490 from xkrogen/xkrogen-SPARK-34365-avro-positional-field-matching.

Authored-by: Erik Krogen <xkrogen@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

This adds two new additional metrics to `ExternalBlockHandler`:

- `blockTransferRate` -- for indicating the rate of transferring blocks, vs. the data within them

- `blockTransferAvgSize_1min` -- a 1-minute trailing average of block sizes transferred by the ESS

Additionally, this enhances `YarnShuffleServiceMetrics` to expose the histogram/`Snapshot` information from `Timer` metrics within `ExternalBlockHandler`.

### Why are the changes needed?

Currently `ExternalBlockHandler` exposes some useful metrics, but is lacking around metrics for the rate of block transfers. We have `blockTransferRateBytes` to tell us the rate of _bytes_, but no metric to tell us the rate of _blocks_, which is especially relevant when running the ESS on HDDs that are sensitive to random reads. Many small block transfers can have a negative impact on performance, but won't show up as a spike in `blockTransferRateBytes` since the sizes are small. Thus the new metrics to show information around average block size and block transfer rate are very useful to monitor the health/performance of the ESS, especially when running on HDDs.

For the `YarnShuffleServiceMetrics`, currently the three `Timer` metrics exposed by `ExternalBlockHandler` are being underutilized in a YARN-based environment -- they are basically treated as a `Meter`, only exposing rate-based information, when the metrics themselves are collected detailed histograms of timing information. We should expose this information for better observability.

### Does this PR introduce _any_ user-facing change?

Yes, there are two entirely new metrics for the ESS, as documented in `monitoring.md`. Additionally in a YARN environment, `Timer` metrics exposed by the ESS will include more rich timing information.

### How was this patch tested?

New unit tests are added to verify that new metrics are showing up as expected.

We have been running this patch internally for approx. 1 year and have found it to be useful for monitoring the health of ESS and diagnosing performance issues.

Closes#32388 from xkrogen/xkrogen-SPARK-35258-ess-new-metrics.

Authored-by: Erik Krogen <xkrogen@apache.org>

Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>

### What changes were proposed in this pull request?

The hyperlinks in Python code blocks in [Spark SQL Guide - Getting Started](https://spark.apache.org/docs/latest/sql-getting-started.html) currently point to invalid addresses and return 404. This pull request fixes that issue by pointing them to correct links in Python API docs.

### Why are the changes needed?

Error in documentation classifies as a bug and hence needs to be fixed.

### Does this PR introduce _any_ user-facing change?

Yes. This PR fixes documentation error in https://spark.apache.org/docs/latest/sql-getting-started.html

### How was this patch tested?

This patch was locally built after cloning the repo from scratch and then doing a clean build after fixing the required problems.

Closes#33107 from dhruvildave/sql-doc.

Authored-by: Dhruvil Dave <dhruvil.dave@outlook.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Update "Caching Data in Memory" section, add suggestion to call DataFrame `unpersist` method to make it consistent with previous suggestion of using `persist` method.

### Why are the changes needed?

Keep documentation consistent.

### Does this PR introduce _any_ user-facing change?

Yes, fixes the user-facing docs.

### How was this patch tested?

Manually.

Closes#33069 from Silverlight42/caching-data-doc.

Authored-by: Carlos Peña <Cdpm42@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR changes the unionByName with null filling logic to append new nested struct fields from the right side of the union to the schema versus sorting fields alphabetically. It removes the need to use UpdateField expressions, and just directly projects new nested structs from each side of the union with the correct schema. This changes the union'd schema from being alphabetically sorted previously to now "left dominant", where the fields from the left side of the union are included and then the missing ones from the right are added in the same order found originally.

### Why are the changes needed?

Certain nested structs would cause unionByName with null filling to error out due to part of the logic for rewriting the expression tree to sort the structs.

### Does this PR introduce _any_ user-facing change?

Yes, nested struct fields will be in a different order after unionByName with null filling than before, though shouldn't cause much effective difference.

### How was this patch tested?

Updated existing tests based on the new StructField ordering and added a new test for the case that was broken originally.

Closes#33040 from Kimahriman/union-by-name-struct-order.

Authored-by: Adam Binford <adamq43@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

* Add a new repartition operator `RebalanceRepartition`.

* Support a new hint `REBALANCE`

After this patch, user can run this query:

```sql

SELECT /*+ REBALANCE(c) */ * FROM t

```

### Why are the changes needed?

Add a new hint to distingush if we can optimize it safely.

This new hint can let AQE optimize with `CustomShuffleReaderExec` safely. Currently, AQE can only coalesce shuffle partitions but can not expand shuffle partitions due to the semantics of output partitioning.

Let's say we have a query:

```sql

SELECT /*+ REPARTITION(col) */ * FROM t

```

AQE can not expand the shuffle partitions even if `col` is skewed because expanding shuffle partitions will break the hashed output paritioning of `RepartitionByExpression`. But if the query is use`REPARTITION_BY_AQE`, AQE can optimize it without considering the semantics of output partitioning.

### Does this PR introduce _any_ user-facing change?

Yes, a new hint.

### How was this patch tested?

Add test.

Closes#32932 from ulysses-you/SPARK-35786.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This's a follow-up of https://github.com/apache/spark/pull/30710.

Rename the conf from `spark.speculation.min.threshold` to `spark.speculation.minTaskRuntime`.

### Why are the changes needed?

To follow the [config naming policy](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/internal/config/ConfigEntry.scala#L21).

### Does this PR introduce _any_ user-facing change?

No (since Spark 3.2 hasn't been released).

### How was this patch tested?

Pass existing tests.

Closes#33037 from Ngone51/spark-33741-followup.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to introduce the strategy on mismatched offset for start offset timestamp on Kafka data source.

Please read the section `Why are the changes needed?` to understand the rationalization of the functionality.

This would be pretty much helpful for the case where there's a skew between partitions and some partitions have older records.

* AS-IS: Spark simply fails the query and end users have to deal with workarounds requiring manual steps.

* TO-BE: Spark will assign the latest offset for these partitions, so that Spark can read newer records from these partitions in further micro-batches.

To retain the existing behavior and also give some help for the proposed "TO-BE" behavior, we'd like to introduce the strategy on mismatched offset for start offset timestamp to let end users choose from them.

The strategy will be added as source option, to ensure end users set the behavior explicitly (otherwise simply "known" default value).

* New source option to be added: startingOffsetsByTimestampStrategy

* Available values: `error` (fail the query as referred as AS-IS), `latest` (set the offset to the latest as referred as TO-BE)

Doc changes are following:

### Why are the changes needed?

We encountered a real-world case Spark fails the query if some of the partitions don't have matching offset by timestamp.

This is intended behavior to avoid bring unintended output for some cases like:

* timestamp 2 is presented as timestamp-offset, but the some of partitions don't have the record yet

* record with timestamp 1 comes "later" in the following micro-batch

which is possible since Kafka allows to specify the timestamp in record.

Here the unintended output we talked about was the risk of reading record with timestamp 1 in the next micro-batch despite the option specifying timestamp 2.

But for many cases end users just suppose timestamp is increasing monotonically with wall clocks are all in sync, and current behavior blocks these cases to make progress.

### Does this PR introduce _any_ user-facing change?

Yes, but not a breaking change. It's up to end users to choose the behavior which the default value is "error" (current behavior). And it's a source option (not config) so they need to explicitly set the behavior to let the functionality takes effect.

### How was this patch tested?

New UTs.

Closes#32747 from HeartSaVioR/SPARK-35611.

Authored-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

PySpark added pinned thread mode at https://github.com/apache/spark/pull/24898 to sync Python thread to JVM thread. Previously, one JVM thread could be reused which ends up with messed inheritance hierarchy such as thread local especially when multiple jobs run in parallel. To completely fix this, we should enable this mode by default.

### Why are the changes needed?

To correctly support parallel job submission and management.

### Does this PR introduce _any_ user-facing change?

Yes, now Python thread is mapped to JVM thread one to one.

### How was this patch tested?

Existing tests should cover it.

Closes#32429 from HyukjinKwon/SPARK-35303.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/32513

It's hard to keep the command execution name for `DataFrameWriter`, as the command logical plan is a bit messy (DS v1, file source and hive and different command logical plans) and sometimes it's hard to distinguish "insert" and "save".

However, `DataFrameWriterV2` only produce v2 commands which are pretty clean. It's easy to keep the command execution name for them.

### Why are the changes needed?

less breaking changes.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

N/A

Closes#32919 from cloud-fan/follow.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR updates the document about building Spark with Hadoop for Hadoop 3.x and Hadoop 3.2.

### Why are the changes needed?

The document says about how to build like as follows:

```

./build/mvn -Pyarn -Dhadoop.version=2.8.5 -DskipTests clean package

```

But this command fails because the default build settings are for Hadoop 3.x.

So, we need to modify the command example.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed both of these commands successfully finished.

```

./build/mvn -Pyarn -Dhadoop.version=3.3.0 -DskipTests package

./build/mvn -Phadoop-2.7 -Pyarn -Dhadoop.version=2.8.5 -DskipTests package

```

I also built the document and confirmed the result.

This is before:

And this is after:

Closes#32917 from sarutak/fix-build-doc-with-hadoop.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adda a feature which allow the parser parse any day-time interval types in SQL.

### Why are the changes needed?

To comply with ANSI standard, we additionally need to support the following types.

* INTERVAL DAY

* INTERVAL DAY TO HOUR

* INTERVAL DAY TO MINUTE

* INTERVAL HOUR

* INTERVAL HOUR TO MINUTE

* INTERVAL HOUR TO SECOND

* INTERVAL MINUTE

* INTERVAL MINUTE TO SECOND

* INTERVAL SECOND

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New tests.

Closes#32893 from sarutak/parse-any-day-time.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

The STRUCT type syntax is defined like this:

STRUCT(fieldNmae: fileType [NOT NULL][COMMENT stringLiteral][,.....])

So the field list is nearly the same as a column list

if we could make ':' optional it would be so much cleaner an less proprietary

### Why are the changes needed?

ease of use

### Does this PR introduce _any_ user-facing change?

Yes, you can use Struct type list is nearly the same as a column list

### How was this patch tested?

unit tests

Closes#32858 from jerqi/master.

Authored-by: RoryQi <1242949407@qq.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This pr upgrades built-in Hive to 2.3.9. Hive 2.3.9 changes:

- [HIVE-17155] - findConfFile() in HiveConf.java has some issues with the conf path

- [HIVE-24797] - Disable validate default values when parsing Avro schemas

- [HIVE-24608] - Switch back to get_table in HMS client for Hive 2.3.x

- [HIVE-21200] - Vectorization: date column throwing java.lang.UnsupportedOperationException for parquet

- [HIVE-21563] - Improve Table#getEmptyTable performance by disabling registerAllFunctionsOnce

- [HIVE-19228] - Remove commons-httpclient 3.x usage

### Why are the changes needed?

Fix regression caused by AVRO-2035.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes#32750 from wangyum/SPARK-34512.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR updates documentation by removing reference to [hashicorp/nomad-spark](https://github.com/hashicorp/nomad-spark) which has been deprecated in April 2020, and will not be developed any longer.

### Why are the changes needed?

To keep the documentation updated and remove confusion for potential users being interested in running with Nomad.

### Does this PR introduce _any_ user-facing change?

Yes. A change to the documentation.

### How was this patch tested?

Generated to documentation, and checked everything is alright in the output.

Closes#32860 from pptaszynski/doc/remove-spark-nomad-project-reference.

Authored-by: Pawel Ptaszynski <pawel.ptaszynski@bolt.eu>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR adds support for lateral subqueries. A lateral subquery is a subquery preceded by the `LATERAL` keyword in the FROM clause of a query that can reference columns in the preceding FROM items. For example:

```sql

SELECT * FROM t1, LATERAL (SELECT * FROM t2 WHERE t1.a = t2.c)

```

A new subquery expression`LateralSubquery` is used to represent a lateral subquery. It is similar to `ScalarSubquery` but can return multiple rows and columns. A new logical unary node `LateralJoin` is used to represent a lateral join.

Here is the analyzed plan for the above query:

```scala

Project [a, b, c, d]

+- LateralJoin lateral-subquery [a], Inner

: +- Project [c, d]

: +- Filter (outer(a) = c)

: +- Relation [c, d]

+- Relation [a, b]

```

Similar to a correlated subquery, a lateral subquery can be viewed as a dependent (nested loop) join where the evaluation of the right subtree depends on the current value of the left subtree. The same technique to decorrelate a subquery is used to decorrelate a lateral join:

```scala

Project [a, b, c, d]

+- LateralJoin lateral-subquery [a && a = c], Inner // pull up correlated predicates as join conditions

: +- Project [c, d]

: +- Relation [c, d]

+- Relation [a, b]

```

Then the lateral join can be rewritten into a normal join:

```scala

Join Inner (a = c)

:- Relation [a, b]

+- Relation [c, d]

```

#### Follow-ups:

1. Similar to rewriting correlated scalar subqueries, rewriting lateral joins is also subject to the COUNT bug (See SPARK-15370 for more details). This is **not** handled in the current PR as it requires a sizeable amount of refactoring. It will be addressed in a subsequent PR (SPARK-35551).

2. Currently Spark does use outer query references to resolve star expressions in subqueries. This is not lateral subquery specific and can be handled in a separate PR (SPARK-35618)

### Why are the changes needed?

To support an ANSI SQL feature.

### Does this PR introduce _any_ user-facing change?

Yes. It allows users to use lateral subqueries in the FROM clause of a query.

### How was this patch tested?

- Parser test: `PlanParserSuite.scala`

- Analyzer test: `ResolveSubquerySuite.scala`

- Optimizer test: `PullupCorrelatedPredicatesSuite.scala`

- SQL test: `join-lateral.sql`, `postgreSQL/join.sql`

Closes#32303 from allisonwang-db/spark-34382-lateral.

Lead-authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR enhances `RepartitionByExpression` to make it coalesce partitions efficiently by AQE. Usually used to merge small files.

The basic logic is: Spark first tries to coalesce partitions, if it cannot be coalesced, then use the local shuffle reader to read data to avoid exchange the data over the network.

Usage:

```sql

SELECT /*+ REPARTITION */ * FROM t

```

```scala

df.repartition()

```

For example:

coalesce small output files | local shuffle reader

--- | ---

|

### Why are the changes needed?

Coalesce partitions efficiently.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes#32781 from wangyum/SPARK-35650.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, Spark eagerly executes commands on the caller side of `QueryExecution`, which is a bit hacky as `QueryExecution` is not aware of it and leads to confusion.

For example, if you run `sql("show tables").collect()`, you will see two queries with identical query plans in the web UI.

The first query is triggered at `Dataset.logicalPlan`, which eagerly executes the command.

The second query is triggered at `Dataset.collect`, which is the normal query execution.

From the web UI, it's hard to tell that these two queries are caused by eager command execution.

This PR proposes to move the eager command execution to `QueryExecution`, and turn the command plan to `CommandResult` to indicate that command has been executed already. Now `sql("show tables").collect()` still triggers two queries, but the quey plans are not identical. The second query becomes:

In addition to the UI improvements, this PR also has other benefits:

1. Simplifies code as caller side no need to worry about eager command execution. `QueryExecution` takes care of it.

2. It helps https://github.com/apache/spark/pull/32442 , where there can be more plan nodes above commands, and we need to replace commands with something like local relation that produces unsafe rows.

### Why are the changes needed?

Explained above.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

existing tests

Closes#32513 from beliefer/SPARK-35378.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: beliefer <beliefer@163.com>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch introduces a new option to specify the minimum number of offsets to read per trigger i.e. minOffsetsPerTrigger and maxTriggerDelay to avoid the infinite wait for the trigger.

This new option will allow skipping trigger/batch when the number of records available in Kafka is low. This is a very useful feature in cases where we have a sudden burst of data at certain intervals in a day and data volume is low for the rest of the day.

'maxTriggerDelay' option will help to avoid cases of infinite delay in scheduling trigger and the trigger will happen irrespective of records available if the maxTriggerDelay time exceeds the last trigger. It would be an optional parameter with a default value of 15 mins. This option will be only applicable if minOffsetsPerTrigger is set.

minOffsetsPerTrigger option would be optional of course, but once specified it would take precedence over maxOffestsPerTrigger which will be honored only after minOffsetsPerTrigger is satisfied.

### Why are the changes needed?

There are many scenarios where there is a sudden burst of data at certain intervals in a day and data volume is low for the rest of the day. Tunning such jobs is difficult as decreasing trigger processing time increasing the number of batches and hence cluster resource usage and adds to small file issues. Increasing trigger processing time adds consumer lag. This patch tries to address this issue.

### How was this patch tested?

This patch was tested by adding test cases as well as manually on a cluster where the job was running for a full one day with a data burst happening once a day.

Here is the picture of databurst and hence consumer lag:

<img width="1198" alt="Screenshot 2021-04-29 at 11 39 35 PM" src="https://user-images.githubusercontent.com/1044003/116997587-9b2ab180-acfa-11eb-91fd-524802ce3316.png">

This is how the job behaved at burst time running every 4.5 mins (which is the specified trigger time):

<img width="1154" alt="Burst Time" src="https://user-images.githubusercontent.com/1044003/116997919-12f8dc00-acfb-11eb-9b0a-98387fc67560.png">

This is job behavior during the non-burst time where it is skipping 2 to 3 triggers and running once every 9 to 13.5 mins

<img width="1154" alt="Non Burst Time" src="https://user-images.githubusercontent.com/1044003/116998244-8b5f9d00-acfb-11eb-8340-33d47149ef81.png">

Here are some more stats from the two-run i.e. one normal run and the other with minOffsetsperTrigger set:

| Run | Data Size | Number of Batch Runs | Number of Files |

| ------------- | ------------- |------------- |------------- |

| Normal Run | 54.2 GB | 320 | 21968 |

| Run with minOffsetsperTrigger | 54.2 GB | 120 | 12104 |

Closes#32653 from satishgopalani/SPARK-35312.

Authored-by: Satish Gopalani <satish.gopalani@pubmatic.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

Override `getJDBCType` method in `MySQLDialect` so that `FloatType` is mapped to `FLOAT` instead of `REAL`

### Why are the changes needed?

MySQL treats `REAL` as a synonym to `DOUBLE` by default (see https://dev.mysql.com/doc/refman/8.0/en/numeric-types.html). Therefore, when creating a table with a column of `REAL` type, it will be created as `DOUBLE`. However, currently, `MySQLDialect` does not provide an implementation for `getJDBCType`, and will thus ultimately fall back to `JdbcUtils.getCommonJDBCType`, which maps `FloatType` to `REAL`. This change is needed so that we can properly map the `FloatType` to `FLOAT` for MySQL.

### Does this PR introduce _any_ user-facing change?

Prior to this PR, when writing a dataframe with a `FloatType` column to a MySQL table, it will create a `DOUBLE` column. After the PR, it will create a `FLOAT` column.

### How was this patch tested?

Added a test case in `JDBCSuite` that verifies the mapping.

Closes#32605 from mariosmeim-db/SPARK-35446.

Authored-by: Marios Meimaris <marios.meimaris@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes fix the default value in Data Source Option page based on the Scala documentation.

### Why are the changes needed?

Some of the existing default value in Data Source Option page follow the Python documentation, which has `None` as the default value for all options.

### Does this PR introduce _any_ user-facing change?

Yes, the default value in the Data Source Option page is fixed (from `None` to proper default value)

- Before

<img width="361" alt="Screen Shot 2021-06-02 at 6 31 12 PM" src="https://user-images.githubusercontent.com/44108233/120456594-b8719f00-c3d0-11eb-9778-071ab2ba9f45.png">

- After

<img width="562" alt="Screen Shot 2021-06-02 at 6 32 47 PM" src="https://user-images.githubusercontent.com/44108233/120456844-f1117880-c3d0-11eb-9c7c-9dcd66776444.png">

### How was this patch tested?

Manually built the docs and checked one by one.

Closes#32745 from itholic/SPARK-35523.

Lead-authored-by: itholic <haejoon.lee@databricks.com>

Co-authored-by: Haejoon Lee <44108233+itholic@users.noreply.github.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to port Koalas documentation to PySpark documentation as its initial step.

It ports almost as is except these differences:

- Renamed import from `databricks.koalas` to `pyspark.pandas`.

- Renamed `to_koalas` -> `to_pandas_on_spark`

- Renamed `(Series|DataFrame).koalas` -> `(Series|DataFrame).pandas_on_spark`

- Added a `ps_` prefix in the RST file names of Koalas documentation

Other then that,

- Excluded `python/docs/build/html` in linter

- Fixed GA dependency installataion

### Why are the changes needed?

To document pandas APIs on Spark.

### Does this PR introduce _any_ user-facing change?

Yes, it adds new documentations.

### How was this patch tested?

Manually built the docs and checked the output.

Closes#32726 from HyukjinKwon/SPARK-35587.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes adding more methods to set data source option to `Data Source Option` page for each data source.

For example, Data Source Option page for JSON as below:

- Before

<img width="322" alt="Screen Shot 2021-06-03 at 10 51 54 AM" src="https://user-images.githubusercontent.com/44108233/120574245-eb13aa00-c459-11eb-9f81-0b356023bcb5.png">

- After

<img width="470" alt="Screen Shot 2021-06-03 at 10 52 21 AM" src="https://user-images.githubusercontent.com/44108233/120574253-ed760400-c459-11eb-9008-1f075e0b9267.png">

### Why are the changes needed?

To provide users various options when they set options for data source.

### Does this PR introduce _any_ user-facing change?

Yes, now the document provides more methods for setting options than before, as in above screen capture.

### How was this patch tested?

Manually built the docs and check one by one.

Closes#32757 from itholic/SPARK-35528.

Authored-by: itholic <haejoon.lee@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to support special datetime values introduced by #25708 and by #25716 only in typed literals, and don't recognize them in parsing strings to dates/timestamps. The following string values are supported only in typed timestamp literals:

- `epoch [zoneId]` - `1970-01-01 00:00:00+00 (Unix system time zero)`

- `today [zoneId]` - midnight today.

- `yesterday [zoneId]` - midnight yesterday

- `tomorrow [zoneId]` - midnight tomorrow

- `now` - current query start time.

For example:

```sql

spark-sql> SELECT timestamp 'tomorrow';

2019-09-07 00:00:00

```

Similarly, the following special date values are supported only in typed date literals:

- `epoch [zoneId]` - `1970-01-01`

- `today [zoneId]` - the current date in the time zone specified by `spark.sql.session.timeZone`.

- `yesterday [zoneId]` - the current date -1

- `tomorrow [zoneId]` - the current date + 1

- `now` - the date of running the current query. It has the same notion as `today`.

For example:

```sql

spark-sql> SELECT date 'tomorrow' - date 'yesterday';

2

```

### Why are the changes needed?

In the current implementation, Spark supports the special date/timestamp value in any input strings casted to dates/timestamps that leads to the following problems:

- If executors have different system time, the result is inconsistent, and random. Column values depend on where the conversions were performed.

- The special values play the role of distributed non-deterministic functions though users might think of the values as constants.

### Does this PR introduce _any_ user-facing change?

Yes but the probability should be small.

### How was this patch tested?

By running existing test suites:

```

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z interval.sql"

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z date.sql"

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z timestamp.sql"

$ build/sbt "test:testOnly *DateTimeUtilsSuite"

```

Closes#32714 from MaxGekk/remove-datetime-special-values.

Lead-authored-by: Max Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>