…not synchronized to the UI display

## What changes were proposed in this pull request?

The memory used by broadcast variables is not synchronized to the UI display.

I added a case for `BroadcastBlockId` and updated the memory usage accordingly.
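A minimal sketch of the accounting idea follows. It assumes per-executor totals keyed by block name, and the class and method names are hypothetical; it is not the listener code from this patch. Spark names broadcast blocks `broadcast_<id>` (pieces are `broadcast_<id>_piece<n>`) and RDD blocks `rdd_<rddId>_<partition>`. The sketch counts both kinds, not only RDD blocks.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: per-executor memory accounting keyed by block name.
final class ExecutorMemoryTracker {
    private final Map<String, Long> memoryUsedByExecutor = new HashMap<>();

    // Block names such as "rdd_3_1", "broadcast_0" and "broadcast_0_piece0" start with their kind.
    static boolean isTrackedBlock(String blockName) {
        return blockName.startsWith("rdd_") || blockName.startsWith("broadcast_");
    }

    // deltaBytes is positive when a block is stored in memory and negative when it is dropped.
    void onBlockUpdated(String executorId, String blockName, long deltaBytes) {
        if (!isTrackedBlock(blockName)) {
            return; // other block kinds (e.g. shuffle blocks) are ignored in this sketch
        }
        memoryUsedByExecutor.merge(executorId, deltaBytes, Long::sum);
    }

    long memoryUsed(String executorId) {
        return memoryUsedByExecutor.getOrDefault(executorId, 0L);
    }
}
```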

## How was this patch tested?

We can test this patch with unit tests.

Closes #23649 from httfighter/SPARK-26726.

Lead-authored-by: 韩田田00222924 <han.tiantian@zte.com.cn>

Co-authored-by: han.tiantian@zte.com.cn <han.tiantian@zte.com.cn>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This patch proposes adding a new configuration to the SHS: a custom executor log URL pattern. This lets end users point executor log links somewhere other than what the RM provides, such as an external log service, so that executor logs can still be served when a NodeManager becomes unavailable on YARN.

End users can build their own custom executor log URLs from pre-defined patterns, which vary by resource manager. This patch adds some patterns for the YARN resource manager. (Other resource managers don't even provide an executor log URL, so patterns cannot be defined for them either.)

Please refer to the doc change as well as the added UTs in this patch to see how to set up the feature.
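The substitution step can be sketched as below. This assumes placeholders written as `{{NAME}}`; names like `{{CONTAINER_ID}}` and `{{FILE_NAME}}` are used for illustration only, so check the patch's documentation for the exact set it defines. The class name is hypothetical, and this sketch throws on a missing value, which the patch may handle differently.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ExecutorLogUrls {
    // Matches placeholders of the form {{NAME}}, e.g. {{CONTAINER_ID}}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{\\{([A-Z_]+)\\}\\}");

    // Replaces every {{NAME}} in the pattern with attributes.get(NAME).
    static String expand(String urlPattern, Map<String, String> attributes) {
        Matcher m = PLACEHOLDER.matcher(urlPattern);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = attributes.get(m.group(1));
            if (value == null) {
                // Fail loudly rather than emitting a link with an unresolved placeholder.
                throw new IllegalArgumentException("No value for placeholder " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```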

## How was this patch tested?

Added UTs, and tested manually with a YARN cluster.

Closes #23260 from HeartSaVioR/SPARK-26311.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

This fix replaces the Threshold with a Filter for ConsoleAppender, which checks that either the logLevel is greater than the thresholdLevel (the shell log level) or the log originated from a custom-defined logger. In these cases it lets the log event through; otherwise it doesn't.
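The filter decision can be sketched in plain Java without log4j. This is a minimal sketch with hypothetical names, not the patch's filter class. Two points are assumptions. First, it lets events *at* the threshold through, as log4j thresholds do. Second, it treats "custom-defined logger" as a configured logger name or any descendant of one.

```java
import java.util.Set;

final class ShellConsoleFilter {
    // Severity order mirrors log4j: TRACE < DEBUG < INFO < WARN < ERROR.
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    private final Level shellThreshold;
    private final Set<String> customLoggers;

    ShellConsoleFilter(Level shellThreshold, Set<String> customLoggers) {
        this.shellThreshold = shellThreshold;
        this.customLoggers = customLoggers;
    }

    // True if loggerName is a configured logger or a descendant of one ("a.b" covers "a.b.C").
    private boolean fromCustomLogger(String loggerName) {
        for (String custom : customLoggers) {
            if (loggerName.equals(custom) || loggerName.startsWith(custom + ".")) {
                return true;
            }
        }
        return false;
    }

    // Lets an event through if it is at least as severe as the shell level or comes from a custom logger.
    boolean accept(String loggerName, Level eventLevel) {
        return eventLevel.compareTo(shellThreshold) >= 0 || fromCustomLogger(loggerName);
    }
}
```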

1. Ensured that custom log level works when set by default (via log4j.properties)

2. Ensured that logs are not printed twice when log level is changed by setLogLevel

3. Ensured that custom logs are printed when log level is changed back by setLogLevel

Closes #23675 from ankuriitg/ankurgupta/SPARK-26753.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

This avoids trying to get delegation tokens when a TGT is not available, e.g. when running in yarn-cluster mode without a keytab. That would result in an error, since that is not allowed.

Tested with some (internal) integration tests that started failing with the patch for SPARK-25689.

Closes #23689 from vanzin/SPARK-25689.followup.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Otherwise the RDD data may be out of date by the time the test tries to check it.

Tested with an artificial delay inserted in AppStatusListener.

Closes #23654 from vanzin/SPARK-26732.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

This change adds a new mode for credential renewal that does not require a keytab; it uses the local ticket cache instead, so it works as long as the user keeps the cache valid.

This can be useful for, e.g., people running long spark-shell sessions whose Kerberos login is kept up to date.

The main change to enable this behavior is in HadoopDelegationTokenManager, with a small change in the HDFS token provider. The other changes avoid creating duplicate tokens when submitting the application to YARN; they allow the tokens from the scheduler to be sent to the YARN AM, reducing the round trips to HDFS.

For that, the scheduler initialization code was changed a little so that the tokens are available when the YARN client is initialized. That basically takes care of a long-standing TODO in the code to clean up configuration propagation to the driver's RPC endpoint (in CoarseGrainedSchedulerBackend).

Tested with an app designed to stress this functionality, with both keytab and cache-based logins. Also ran some basic Kerberos tests on k8s.

Closes #23525 from vanzin/SPARK-26595.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When obtaining a token from the HBase service, Spark uses a deprecated HBase API:

`public static Token<AuthenticationTokenIdentifier> obtainToken(Configuration conf)`

This deprecated API has already been removed in HBase 2.x as part of the HBase 2.x major release (https://issues.apache.org/jira/browse/HBASE-14713).

There is a stable alternative in the `TokenUtil` class:

`public static Token<AuthenticationTokenIdentifier> obtainToken(Connection conn)`

Spark should use this stable API to get the delegation token.

To invoke this API, a connection object first has to be obtained from `ConnectionFactory`; the same connection can then be passed to `obtainToken(Connection conn)` to get the token.

For example, call `public static Connection createConnection(Configuration conf)`, then call `public static Token<AuthenticationTokenIdentifier> obtainToken(Connection conn)`.
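The two-step call can be sketched with reflection, so that the code compiles without HBase on the classpath. This is an illustration of the pattern only: the class and method names are hypothetical, and it is not the token provider code from this patch. The sketch closes the connection after use, because HBase's `Connection` is `Closeable`.

```java
import java.io.Closeable;
import java.io.IOException;

final class HBaseTokenSketch {
    // Calls ConnectionFactory.createConnection(conf), then TokenUtil.obtainToken(connection),
    // resolving every class reflectively so HBase is only needed at runtime.
    static Object obtainToken(Object hadoopConf) throws ReflectiveOperationException, IOException {
        Class<?> confClass = Class.forName("org.apache.hadoop.conf.Configuration");
        Class<?> connectionClass = Class.forName("org.apache.hadoop.hbase.client.Connection");
        Class<?> factoryClass = Class.forName("org.apache.hadoop.hbase.client.ConnectionFactory");
        Class<?> tokenUtilClass = Class.forName("org.apache.hadoop.hbase.security.token.TokenUtil");

        Object connection = factoryClass.getMethod("createConnection", confClass).invoke(null, hadoopConf);
        try {
            return tokenUtilClass.getMethod("obtainToken", connectionClass).invoke(null, connection);
        } finally {
            // Release the connection whether or not the token call succeeded.
            ((Closeable) connection).close();
        }
    }
}
```

Without Hadoop/HBase on the classpath, the first `Class.forName` call fails with `ClassNotFoundException`, which a caller can treat as "HBase not available".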

## How was this patch tested?

Manual testing has been done.

Manual test result:

Before fix:

After fix:

1. Create 2 tables in hbase shell

>Launch hbase shell

>Enter commands to create tables and load data

create 'table1','cf'

put 'table1','row1','cf:cid','20'

create 'table2','cf'

put 'table2','row1','cf:cid','30'

>Show values command

get 'table1','row1','cf:cid' will display the value 20

get 'table2','row1','cf:cid' will display the value 30

2. Run the SparkHbasetoHbase class in testSpark.jar using spark-submit:

`spark-submit --master yarn-cluster --class com.mrs.example.spark.SparkHbasetoHbase --conf "spark.yarn.security.credentials.hbase.enabled"="true" --conf "spark.security.credentials.hbase.enabled"="true" --keytab /opt/client/user.keytab --principal sen testSpark.jar`

The SparkHbasetoHbase test class updates the value in table2 with the sum of the values in table1 and table2.

table2 = table1+table2

As the snapshot shows, the Spark job was able to interact with the HBase service and update the row count.

Closes #23429 from sujith71955/master_hbase_service.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Manually release the stdin writer and stderr reader threads when a task finishes. This commit also marks ShuffleBlockFetcherIterator as fully consumed if isZombie is set.

## How was this patch tested?

Added a new test.

Closes #23638 from advancedxy/SPARK-26713.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a followup of #16989

The fetch-big-block-to-disk feature is disabled by default because it's not compatible with external shuffle services prior to Spark 2.2: the client sends a stream request to fetch block chunks, which old shuffle services can't support.

Two years later, Spark 2.2 has reached EOL, so it's now safe to turn this feature on by default.

## How was this patch tested?

Existing tests.

Closes #23625 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Adjust the memory settings of the UnifiedMemoryManager used in test suites so that execution memory is > 0.

Ref: https://github.com/apache/spark/pull/23457#issuecomment-457409976
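To see why small test heaps can leave execution memory near zero, the region arithmetic can be worked through. This sketch assumes Spark's documented defaults: 300 MiB reserved system memory, `spark.memory.fraction` = 0.6, and `spark.memory.storageFraction` = 0.5. It also assumes the minimum-heap check is 1.5x the reserved memory. The class is hypothetical, and the storage/execution split it returns is only the initial one, since the unified manager lets execution borrow from storage.

```java
final class UnifiedMemorySketch {
    static final long RESERVED_SYSTEM_BYTES = 300L * 1024 * 1024;

    // Returns {unified, initial storage region, initial execution region} in bytes.
    static long[] regions(long heapBytes, double memoryFraction, double storageFraction) {
        long minHeap = (long) Math.ceil(RESERVED_SYSTEM_BYTES * 1.5);
        if (heapBytes < minHeap) {
            throw new IllegalArgumentException("Heap " + heapBytes + " is below the minimum " + minHeap);
        }
        // Only memory above the reserved amount is shared between execution and storage.
        long unified = (long) ((heapBytes - RESERVED_SYSTEM_BYTES) * memoryFraction);
        long storage = (long) (unified * storageFraction);
        return new long[] {unified, storage, unified - storage};
    }
}
```

For example, a 1300 MiB heap yields 600 MiB of unified memory, split initially into 300 MiB for storage and 300 MiB for execution.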

## How was this patch tested?

Existing tests

Closes #23645 from srowen/SPARK-26725.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded configs for Spark memory and storage use `ConfigEntry` and puts them in the config package.
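The motivation for `ConfigEntry` is that each key, its type, and its default are declared once instead of being repeated as string literals. In Spark's Scala code, an entry is declared roughly like `ConfigBuilder("spark.shuffle.compress").booleanConf.createWithDefault(true)`. The following is a hypothetical Java analogue that shows the idea. It is not Spark's `ConfigEntry` class.

```java
import java.util.Map;
import java.util.function.Function;

// Hypothetical Java analogue of a typed config entry; not Spark's ConfigEntry class.
final class TypedConfigEntry<T> {
    final String key;
    final T defaultValue;
    private final Function<String, T> parser;

    TypedConfigEntry(String key, T defaultValue, Function<String, T> parser) {
        this.key = key;
        this.defaultValue = defaultValue;
        this.parser = parser;
    }

    // Parses the raw string if the key is set, otherwise returns the typed default.
    T readFrom(Map<String, String> settings) {
        String raw = settings.get(key);
        return raw == null ? defaultValue : parser.apply(raw.trim());
    }
}
```

Call sites then read `entry.readFrom(conf)` instead of repeating the key string and default value.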

## How was this patch tested?

Existing unit tests.

Closes #23623 from SongYadong/configEntry_for_mem_storage.

Authored-by: SongYadong <song.yadong1@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

There are ugly provided dependencies inside core for the following:

* Hive

* Kafka

In this PR I've extracted them out. This PR contains the following:

* Token providers are now loaded with service loader

* Hive token provider moved to hive project

* Kafka token provider extracted into a new project
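Loading providers with `java.util.ServiceLoader` means core no longer needs compile-time references to Hive or Kafka. Each module can register its implementation in a `META-INF/services/<fully.qualified.InterfaceName>` file. The sketch below uses a hypothetical interface; Spark's real provider type is a Scala trait. When nothing is registered, the loader simply finds no providers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.ServiceLoader;

// Hypothetical provider interface used only for this sketch.
interface TokenProviderSketch {
    String serviceName();
}

final class TokenProviderLoader {
    // Collects the service names of every implementation registered under META-INF/services.
    static List<String> loadServiceNames(ClassLoader loader) {
        List<String> names = new ArrayList<>();
        for (TokenProviderSketch provider : ServiceLoader.load(TokenProviderSketch.class, loader)) {
            names.add(provider.serviceName());
        }
        return names;
    }
}
```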

## How was this patch tested?

Existing + newly added unit tests.

Additionally tested on cluster.

Closes #23499 from gaborgsomogyi/SPARK-26254.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

SPARK-21568 made a change to ensure that the progress bar is enabled for spark-shell by default but not for other apps. Before that change, this was distinguished using the log level, which is not a good way to determine it, since users can change the default log level. That commit changed how to determine whether the current app is running in spark-shell, but it left the log-level check as it was, which causes this regression: SPARK-25118 changed the default log level to INFO for spark-shell, so the progress bar is no longer enabled.

This commit removes the log-level check for enabling the progress bar in spark-shell, as it is not necessary and seems to be a leftover from SPARK-21568.

## How was this patch tested?

1. Ensured that progress bar is enabled with spark-shell by default

2. Ensured that progress bar is not enabled with spark-submit

Closes #23618 from ankuriitg/ankurgupta/SPARK-26694.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

To help debug failed or slow tasks, it's really useful to know the size of the blocks being fetched. Though that is available at the debug level, debug logs aren't generally turned on; however, there is already an info-level log line, which this change augments a little.

## How was this patch tested?

Ran very basic local-cluster mode app, looked at logs. Example line:

```
INFO ShuffleBlockFetcherIterator: Getting 2 (194.0 B) non-empty blocks including 1 (97.0 B) local blocks and 1 (97.0 B) remote blocks
```

Also ran the full suite via Jenkins.
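The example line can be reproduced from per-location block counts and byte totals. This is a minimal sketch: the method names are hypothetical, and the binary unit suffixes (`KiB`, `MiB`, …) used above 1024 bytes are an assumption. Only the `B` case appears in the example line.

```java
import java.util.Locale;

final class FetchLogSketch {
    // Formats a byte count with one decimal place and binary units (1 KiB = 1024 B).
    static String bytesToString(long size) {
        String[] units = {"B", "KiB", "MiB", "GiB", "TiB"};
        double value = size;
        int unit = 0;
        while (value >= 1024 && unit < units.length - 1) {
            value /= 1024;
            unit++;
        }
        return String.format(Locale.ROOT, "%.1f %s", value, units[unit]);
    }

    // Builds the summary line from local and remote block counts and byte totals.
    static String fetchSummary(int localBlocks, long localBytes, int remoteBlocks, long remoteBytes) {
        return String.format(Locale.ROOT,
            "Getting %d (%s) non-empty blocks including %d (%s) local blocks and %d (%s) remote blocks",
            localBlocks + remoteBlocks, bytesToString(localBytes + remoteBytes),
            localBlocks, bytesToString(localBytes),
            remoteBlocks, bytesToString(remoteBytes));
    }
}
```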

Closes #23621 from squito/SPARK-26697.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Currently, heartbeat-related arguments are not validated in Spark, so if they are improperly specified, the application may run for a while and not fail until the max executor failures are reached (especially with spark.dynamicAllocation.enabled=true), which can waste resources.

This PR prechecks these arguments in HeartbeatReceiver to fix this problem.

## How was this patch tested?

N/A, just validation changes.

Closes #23445 from liupc/validate-heartbeat-arguments-in-SparkSubmitArguments.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Currently, some ML libraries may generate large ML models, which may be referenced in the task closure. The driver then broadcasts a large task binary, and executors may not be able to deserialize it, resulting in OOM failures (for instance, when the executor's memory is not enough). This problem affects not only apps using ML libraries; user-specified closures or functions that refer to large data may also have this problem.

To facilitate debugging memory problems caused by large taskBinary broadcasts, we can add some warning logs for them.

This PR adds some warning logs on the driver side when broadcasting a large task binary, and also includes some minor log changes in the reading of broadcasts.
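The driver-side check amounts to comparing the serialized size against a threshold before broadcasting. In this sketch, the 1000 KiB threshold, the class name, and the message wording are all hypothetical, chosen for illustration rather than taken from the patch.

```java
import java.util.Optional;

final class TaskBinaryWarning {
    // Hypothetical threshold for this sketch: 1000 KiB.
    static final long WARN_THRESHOLD_BYTES = 1000L * 1024;

    // Returns a warning message when the serialized task binary exceeds the threshold.
    static Optional<String> check(int stageId, long taskBinaryBytes) {
        if (taskBinaryBytes <= WARN_THRESHOLD_BYTES) {
            return Optional.empty();
        }
        return Optional.of(String.format(
            "Broadcasting large task binary with size %d KiB for stage %d", taskBinaryBytes / 1024, stageId));
    }
}
```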

## How was this patch tested?

N/A, just log changes.


Closes #23580 from liupc/Add-warning-logs-for-large-taskBinary-size.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Follow-up of #20091. We can also use the existing partitioner when defaultNumPartitions is equal to the maxPartitioner's numPartitions.
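The reuse decision can be sketched as below. This is a hypothetical helper, not `Partitioner.defaultPartitioner` itself. The "eligible" rule, under which the existing partitioner is within one order of magnitude of the largest upstream partition count, is my recollection of #20091 and should be treated as an assumption. The change described here widens the second condition from strictly less than to less than or equal.

```java
final class DefaultPartitionerSketch {
    // Assumed eligibility rule: existing partitioner is within 10x of the largest upstream RDD.
    static boolean isEligible(int existingNumPartitions, int maxUpstreamPartitions) {
        return Math.log10(maxUpstreamPartitions) - Math.log10(existingNumPartitions) < 1;
    }

    // Reuse the existing partitioner if it is eligible or if it has at least as many partitions
    // as the default would (the "<=" is the equality case this follow-up adds).
    static boolean reuseExisting(int existingNumPartitions, int maxUpstreamPartitions, int defaultNumPartitions) {
        return isEligible(existingNumPartitions, maxUpstreamPartitions)
            || defaultNumPartitions <= existingNumPartitions;
    }
}
```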

## How was this patch tested?

Existing tests.

Closes #23581 from Ngone51/dev-use-existing-partitioner-when-defaultNumPartitions-equalTo-MaxPartitioner#-numPartitions.

Authored-by: Ngone51 <ngone_5451@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`ByteBuffer.allocate` may throw `OutOfMemoryError` when the block is large but not enough memory is available. However, when this happens, `BlockTransferService.fetchBlockSync` currently just hangs forever, because its `BlockFetchingListener.onBlockFetchSuccess` doesn't complete the `Promise`.

This PR catches `Throwable` and uses the error to complete the `Promise`.
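The fix pattern can be sketched with `CompletableFuture` standing in for the Scala `Promise`. The class and method names are hypothetical. Any `Throwable` raised while copying the block completes the future exceptionally, so a waiting caller is woken up instead of blocking forever. As in the patch's test, a negative size makes `ByteBuffer.allocate` fail with a different error type that takes the same path.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.CompletableFuture;

final class BlockCopySketch {
    // Copies a fetched block into a fresh buffer and always completes the returned future.
    static CompletableFuture<ByteBuffer> copyBlock(ByteBuffer data, int declaredSize) {
        CompletableFuture<ByteBuffer> promise = new CompletableFuture<>();
        try {
            ByteBuffer copy = ByteBuffer.allocate(declaredSize); // may throw OutOfMemoryError
            copy.put(data.duplicate()); // duplicate() leaves the source position untouched
            copy.flip();
            promise.complete(copy);
        } catch (Throwable t) {
            // Without this, the waiter would never be notified of the failure.
            promise.completeExceptionally(t);
        }
        return promise;
    }
}
```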

## How was this patch tested?

Added a unit test. Since I cannot make `ByteBuffer.allocate` throw `OutOfMemoryError`, I passed a negative size to make `ByteBuffer.allocate` fail. Although the error type is different, it should trigger the same code path.

Closes #23590 from zsxwing/SPARK-26665.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.dynamicAllocation`, `spark.scheduler`, `spark.rpc`, `spark.task`, `spark.speculation`, and `spark.cleaner` configs use `ConfigEntry`.

## How was this patch tested?

Existing tests

Closes #23416 from kiszk/SPARK-26463.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to extend the spark-submit option --num-executors to be applicable to Spark on K8S too. It is motivated by convenience, for example when migrating jobs written for YARN to run on K8S.

## How was this patch tested?

Manually tested on a K8S cluster.

Author: Luca Canali <luca.canali@cern.ch>

Closes #23573 from LucaCanali/addNumExecutorsToK8s.

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.unsafe` configs use `ConfigEntry` and puts them in the `config` package.

## How was this patch tested?

Existing UTs

Closes #23412 from kiszk/SPARK-26477.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Misc code cleanup from lgtm.com analysis. See comments below for details.

## How was this patch tested?

Existing tests.

Closes #23571 from srowen/SPARK-26640.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Co-authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Try to make the metric labels more obvious:

- "avg hash probe" -> avg hash probe bucket iterations
- "partition pruning time (ms)" -> dynamic partition pruning time
- "total number of files in the table" -> file count
- "number of files that would be returned by partition pruning alone" -> file count after partition pruning
- "total size of files in the table" -> file size
- "size of files that would be returned by partition pruning alone" -> file size after partition pruning
- "metadata time (ms)" -> metadata time
- "aggregate time" -> time in aggregation build
- "aggregate time" -> time in aggregation build
- "time to construct rdd bc" -> time to build
- "total time to remove rows" -> time to remove
- "total time to update rows" -> time to update

Add the proper metric type to some metrics:

- "bytes of written output" -> written output, createSizeMetric
- "metadata time" -> createTimingMetric
- "dataSize" -> createSizeMetric
- "collectTime" -> createTimingMetric
- "buildTime" -> createTimingMetric
- "broadcastTime" -> createTimingMetric

## How was this patch tested?

Existing tests.

Author: Stacy Kerkela <stacy.kerkela@databricks.com>

Signed-off-by: Juliusz Sompolski <julek@databricks.com>

Closes #23551 from juliuszsompolski/SPARK-26622.

Lead-authored-by: Juliusz Sompolski <julek@databricks.com>

Co-authored-by: Stacy Kerkela <stacy.kerkela@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.shuffle` configs use `ConfigEntry` and puts them in the config package.

## How was this patch tested?

Existing unit tests

Closes #23550 from 10110346/ConfigEntry_shuffle.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

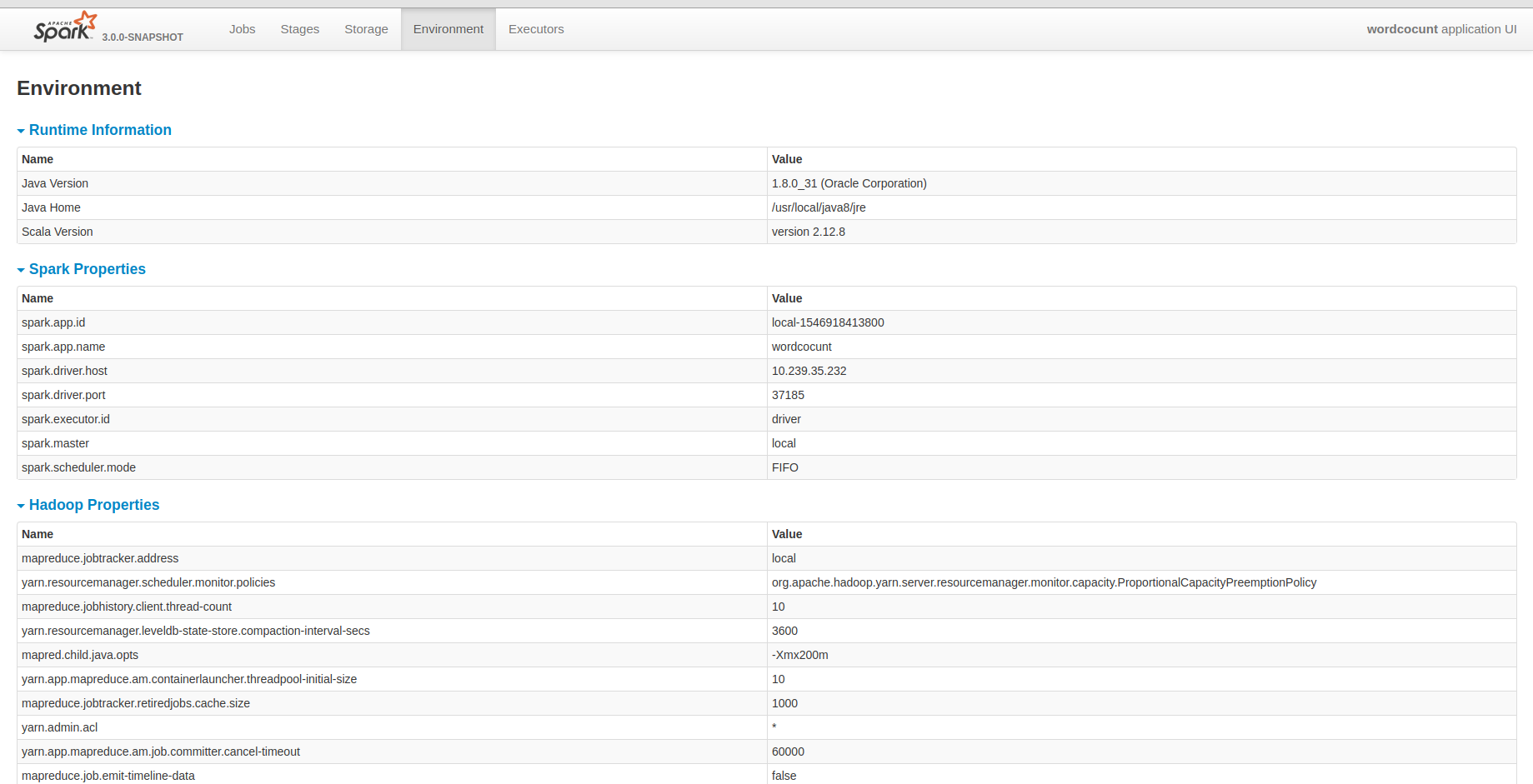

I know that YARN already provides all the Hadoop configurations, but I think it would be fine for the history server to show all of the configuration in one place as well. That would make it more convenient for us to debug some problems.

## How was this patch tested?

Closes #23486 from deshanxiao/spark-26457.

Lead-authored-by: xiaodeshan <xiaodeshan@xiaomi.com>

Co-authored-by: deshanxiao <42019462+deshanxiao@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded configs below use `ConfigEntry`.

* spark.kryo

* spark.kryoserializer

* spark.serializer

* spark.jars

* spark.files

* spark.submit

* spark.deploy

* spark.worker

This patch doesn't change configs which are not relevant to SparkConf (e.g. system properties).

## How was this patch tested?

Existing tests.

Closes #23532 from HeartSaVioR/SPARK-26466-v2.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The spark-submit usage message should be brought in sync with recent changes, in particular regarding K8S support. These are the proposed changes to the usage message:

--executor-cores NUM -> can be used for Spark on YARN and K8S

--principal PRINCIPAL and --keytab KEYTAB -> can be used for Spark on YARN and K8S

--total-executor-cores NUM -> can be used for Spark standalone, YARN and K8S

In addition, this PR proposes removing certain implementation details from the --keytab argument description, as the implementation details vary between YARN and K8S, for example.

## How was this patch tested?

Manually tested

Closes #23518 from LucaCanali/updateSparkSubmitArguments.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The regex (`spark.redaction.regex`) used to decide which config properties or environment settings are sensitive should also include oauthToken, to match `spark.kubernetes.authenticate.submission.oauthToken`.
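A minimal sketch of key-based redaction follows. The regex below assumes that the older default `(?i)secret|password` has been extended with `oauthToken`; it may not match the exact default this PR ships, and the class name and replacement text are hypothetical. Matching uses `find()`, so a sensitive word anywhere in the key triggers redaction, and the leading `(?i)` makes every alternative case-insensitive.

```java
import java.util.regex.Pattern;

final class RedactionSketch {
    // Assumed pattern for illustration: old default alternatives plus oauthToken.
    static final Pattern SENSITIVE_KEY = Pattern.compile("(?i)secret|password|oauthToken");

    // Hides the value when any part of the key matches the sensitive pattern.
    static String redact(String key, String value) {
        return SENSITIVE_KEY.matcher(key).find() ? "*********(redacted)" : value;
    }
}
```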

## How was this patch tested?

Simple regex addition - happy to add a test if needed.

Author: Vinoo Ganesh <vganesh@palantir.com>

Closes #23555 from vinooganesh/vinooganesh/SPARK-26625.

## What changes were proposed in this pull request?

Fix resource leaks where TransportClient/TransportServer instances are not closed properly.

In StandaloneSchedulerBackend a null check is added because, during the SparkContextSchedulerCreationSuite "local-cluster" test, it turned out that the client is not initialised, as org.apache.spark.scheduler.cluster.StandaloneSchedulerBackend#start isn't called. This threw an NPE and left some resources open.

## How was this patch tested?

By running the unit tests and adding some temporary logging to count the created and closed TransportClient/TransportServer instances.

Closes #23540 from attilapiros/leaks.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, in `TransportRequestHandler.processStreamRequest`, when a stream request is processed, the stream ID is not registered with the current channel in the stream manager. It should be, so that when the channel is terminated, the streams associated with its stream requests can be removed as well.

This also cleans up channel registration in `StreamManager`. Since `StreamManager` itself doesn't register channels and only `OneForOneStreamManager` does, this removes `registerChannel` from `StreamManager`. When `OneForOneStreamManager` registers a stream, it also registers the channel for that stream.
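The bookkeeping can be sketched as a map from stream ID to owning channel. This is a hypothetical class; it is not the `OneForOneStreamManager` code. Registering a stream records its channel, and when that channel terminates, every stream it owns is removed.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Iterator;
import java.util.Map;
import java.util.Set;

final class StreamRegistrySketch {
    private final Map<String, Object> streamToChannel = new HashMap<>();

    // Records which channel a stream belongs to at registration time.
    synchronized void registerStream(String streamId, Object channel) {
        streamToChannel.put(streamId, channel);
    }

    // Removes and returns all streams owned by the terminated channel (identity comparison).
    synchronized Set<String> connectionTerminated(Object channel) {
        Set<String> removed = new HashSet<>();
        Iterator<Map.Entry<String, Object>> it = streamToChannel.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Object> entry = it.next();
            if (entry.getValue() == channel) {
                removed.add(entry.getKey());
                it.remove();
            }
        }
        return removed;
    }

    synchronized int activeStreams() {
        return streamToChannel.size();
    }
}
```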

## How was this patch tested?

Existing tests.

Closes #23521 from viirya/SPARK-26604.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Make the following hardcoded configs use `ConfigEntry`:

* spark.memory

* spark.storage

* spark.io

* spark.buffer

* spark.rdd

* spark.locality

* spark.broadcast

* spark.reducer

## How was this patch tested?

Existing tests.

Closes #23447 from pralabhkumar/execution_categories.

Authored-by: Pralabh Kumar <pkumar2@linkedin.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Kafka does not yet support obtaining delegation tokens with a proxy user. This has to be turned off until https://issues.apache.org/jira/browse/KAFKA-6945 is implemented.

With this PR, an exception is thrown when this situation happens.

## How was this patch tested?

Additional unit test.

Closes #23511 from gaborgsomogyi/SPARK-26592.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fix typos in comments by replacing "in-heap" with "on-heap".

## How was this patch tested?

Existing Tests.

Closes #23533 from SongYadong/typos_inheap_to_onheap.

Authored-by: SongYadong <song.yadong1@zte.com.cn>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

If users set spark.network.timeout and spark.executor.heartbeatInterval to the same value, they get the following message:

```
java.lang.IllegalArgumentException: requirement failed: The value of spark.network.timeout=120s must be no less than the value of spark.executor.heartbeatInterval=120s.
```

This is misleading, since it can be read as saying that the two values may be equal. So this PR replaces "no less than" with "greater than". It also fixes similar inconsistencies found in the MLlib and SQL components.
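The `requirement failed:` prefix shows that Spark performs this check with a Scala `require`. The following is a hypothetical Java equivalent, worded the new way. It rejects equal values, which is what "greater than" now states explicitly.

```java
final class HeartbeatConfigCheck {
    // Rejects a network timeout that is not strictly greater than the heartbeat interval.
    static void validate(long networkTimeoutMs, long heartbeatIntervalMs) {
        if (networkTimeoutMs <= heartbeatIntervalMs) {
            throw new IllegalArgumentException(String.format(
                "The value of spark.network.timeout=%dms must be greater than the value of "
                    + "spark.executor.heartbeatInterval=%dms.",
                networkTimeoutMs, heartbeatIntervalMs));
        }
    }
}
```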

## How was this patch tested?

Ran Spark with equivalent values for them manually and confirmed that the revised message was displayed.

Closes #23488 from sekikn/SPARK-26564.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded configs below use `ConfigEntry`.

* spark.ui

* spark.ssl

* spark.authenticate

* spark.master.rest

* spark.master.ui

* spark.metrics

* spark.admin

* spark.modify.acl

This patch doesn't change configs which are not relevant to SparkConf (e.g. system properties).

## How was this patch tested?

Existing tests.

Closes #23423 from HeartSaVioR/SPARK-26466.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Remove spark.memory.useLegacyMode and StaticMemoryManager. Update tests that used the StaticMemoryManager to equivalent use of UnifiedMemoryManager.

## How was this patch tested?

Existing tests, with modifications to make them work with a different memory manager.

Closes #23457 from srowen/SPARK-26539.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Introduce shared pooled ByteBuf allocators.

This feature can be enabled via the "spark.network.sharedByteBufAllocators.enabled" configuration.

When it is on then only two pooled ByteBuf allocators are created:

- one for transport servers where caching is allowed and

- one for transport clients where caching is disabled

This way the cache allowance remains as before.

Both shareable pools are created with the numCores parameter set to 0 (which defaults to the number of available processors), because conf.serverThreads() and conf.clientThreads() are module-dependent, and creating these allocators lazily would otherwise lead to unpredictable behaviour.

When "spark.network.sharedByteBufAllocators.enabled" is false then a new allocator is created for every transport client and server separately as was before this PR.
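The sharing policy can be sketched with a stand-in allocator type. This is hypothetical; the real code uses Netty's pooled allocator. When sharing is enabled, exactly two instances exist: a caching one for servers and a non-caching one for clients. When sharing is disabled, every call creates a new allocator, as before this change.

```java
// Hypothetical stand-in for a pooled buffer allocator.
final class AllocatorSketch {
    final boolean allowCache;

    AllocatorSketch(boolean allowCache) {
        this.allowCache = allowCache;
    }
}

final class AllocatorFactory {
    private static AllocatorSketch sharedServer;
    private static AllocatorSketch sharedClient;

    // Servers get a caching allocator; shared mode returns one process-wide instance.
    static synchronized AllocatorSketch forServer(boolean shared) {
        if (!shared) {
            return new AllocatorSketch(true);
        }
        if (sharedServer == null) {
            sharedServer = new AllocatorSketch(true);
        }
        return sharedServer;
    }

    // Clients get a non-caching allocator; shared mode returns one process-wide instance.
    static synchronized AllocatorSketch forClient(boolean shared) {
        if (!shared) {
            return new AllocatorSketch(false);
        }
        if (sharedClient == null) {
            sharedClient = new AllocatorSketch(false);
        }
        return sharedClient;
    }
}
```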

## How was this patch tested?

Existing unit tests.

Closes #23278 from attilapiros/SPARK-24920.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Currently there is code scattered in a bunch of places to do different things related to HTTP security, such as access control, setting security-related headers, and filtering out bad content. This makes it really easy to miss these things when writing new UI code.

This change creates a new filter that does all of those things, and makes sure that all servlet handlers attached to the UI get the new filter and any user-defined filters consistently. The extent of the actual features should be the same as before.

The new filter is added at the end of the filter chain, because authentication is done by custom filters and thus needs to happen first. This means that custom filters see unfiltered HTTP requests, which is actually the current behavior anyway.

As a side effect of some of the code refactoring, handlers added after the initial set also get wrapped with a GzipHandler, which didn't happen before.

Tested with added unit tests and in a history server with SPNEGO auth configured.

Closes #23302 from vanzin/SPARK-24522.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.test` and `spark.testing` configs use `ConfigEntry` and puts them in the config package.

## How was this patch tested?

Existing UTs.

Closes #23413 from mgaido91/SPARK-26491.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

This change modifies the behavior of the delegation token code when running on YARN, so that the driver controls the renewal in both client and cluster mode. For that, a few different things were changed:

* The AM code only runs code that needs DTs when DTs are available.

In a way, this restores the AM behavior to what it was pre-SPARK-23361, while keeping the fix added in that bug. Basically, all the AM code is run in a "UGI.doAs()" block; but code that needs to talk to HDFS (basically the distributed cache handling code) was delayed to the point where the driver is up and running, and thus when valid delegation tokens are available.

* SparkSubmit / ApplicationMaster now handle user login, not the token manager.

The previous AM code relied on the token manager to keep the user logged in when keytabs are used. This required some odd APIs in the token manager and the AM so that the right UGI was exposed and used in the right places.

After this change, the logged-in user is handled separately from the token manager, so the API was cleaned up, and, as explained above, the whole AM runs under the logged-in user, which also helps simplify some more code.

* Distributed cache configs are sent separately to the AM.

Because of the delayed initialization of the cached resources in the AM, it became easier to write the cache config to a separate properties file instead of bundling it with the rest of the Spark config. This also avoids having to modify the SparkConf to hide things from the UI.

* Finally, the AM doesn't manage the token manager anymore.

The above changes allow the token manager to be completely handled by the driver's scheduler backend code in YARN mode as well (whether client or cluster), making it similar to other RMs. To maintain the fix added in SPARK-23361 in client mode too, the AM now sends an extra message to the driver on initialization to fetch delegation tokens; and although it might not really be needed, the driver also keeps the running AM updated when new tokens are created.

Tested in a kerberized cluster with the same tests used to validate SPARK-23361, in both client and cluster mode. Also tested with a non-kerberized cluster.

Closes #23338 from vanzin/SPARK-25689.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

When acquiring unroll memory from `StaticMemoryManager`, let it fail fast if required space exceeds memory limit, just like acquiring storage memory.

I think this may reduce some computation and memory eviction costs, especially when the required space (`numBytes`) is very large.

## How was this patch tested?

Existing unit tests.

Closes #23426 from SongYadong/acquireUnrollMemory_fail_fast.

Authored-by: SongYadong <song.yadong1@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR upgrades Mockito from 1.10.19 to 2.23.4. The following changes are required.

- Replace `org.mockito.Matchers` with `org.mockito.ArgumentMatchers`

- Replace `anyObject` with `any`

- Replace `getArgumentAt` with `getArgument` and add type annotation.

- Use the `isNull` matcher when `null` is passed as the argument.

```diff
 saslHandler.channelInactive(null);
-verify(handler).channelInactive(any(TransportClient.class));
+verify(handler).channelInactive(isNull());
```

- Add and use a `doReturn` wrapper to avoid [SI-4775](https://issues.scala-lang.org/browse/SI-4775)

```scala
private def doReturn(value: Any) = org.mockito.Mockito.doReturn(value, Seq.empty: _*)
```

## How was this patch tested?

Passed Jenkins with the existing tests.

Closes #23452 from dongjoon-hyun/SPARK-26536.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR makes the hardcoded spark.driver, spark.executor, and spark.cores.max configs use `ConfigEntry`.

Note that some config keys come from `SparkLauncher` instead of being defined in the config package object, because the strings are already defined there and `SparkLauncher` does not depend on the core module.

## How was this patch tested?

Existing tests.

Closes #23415 from ueshin/issues/SPARK-26445/hardcoded_driver_executor_configs.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR makes the hardcoded configs below use ConfigEntry.

* spark.pyspark

* spark.python

* spark.r

This patch doesn't change configs which are not relevant to SparkConf (e.g. system properties, python source code)

## How was this patch tested?

Existing tests.

Closes #23428 from HeartSaVioR/SPARK-26489.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Overriding SparkSubmit's exitFn in some earlier tests in SparkSubmitSuite may cause later tests to pass even though they fail when run separately. This PR fixes this problem.

## How was this patch tested?

Unit tests.

Closes #23404 from liupc/Fix-SparkSubmitSuite-exitFn.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This should make tests in core modules pass for Java 11.

## How was this patch tested?

Existing tests, with modifications.

Closes #23419 from srowen/Java11.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR addresses warning messages in Java files reported at [lgtm.com](https://lgtm.com).

[lgtm.com](https://lgtm.com) provides automated code review of Java/Python/JavaScript files for OSS projects. [Here](https://lgtm.com/projects/g/apache/spark/alerts/?mode=list&severity=warning) are the warnings reported for the Apache Spark project.

This PR addresses the following warnings:

- Result of multiplication cast to wider type

- Implicit narrowing conversion in compound assignment

- Boxed variable is never null

- Useless null check

NOTE: `Potential input resource leak` looks like a false positive for now.
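To illustrate the first warning: in Java, `int * int` is computed in 32 bits and only then widened, so the product can overflow before the cast to `long`. Python integers never overflow, so the sketch below emulates Java's 32-bit `int` with `ctypes.c_int32`. The page counts are made up for illustration.

```python
import ctypes


def java_int_mul(a: int, b: int) -> int:
    """Emulate Java's 32-bit int multiplication, which wraps on overflow."""
    return ctypes.c_int32(a * b).value


num_pages, page_size = 65536, 65536

# Buggy: multiply as int, then widen to long. The overflow has already happened.
buggy_total = java_int_mul(num_pages, page_size)

# Fixed: widen one operand to long first, so the product is computed in 64 bits.
fixed_total = ctypes.c_int64(num_pages * page_size).value
```

Here `buggy_total` ends up as 0, because 65536 × 65536 is exactly 2^32 and wraps to zero. `fixed_total` is 4294967296.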

## How was this patch tested?

Existing UTs

Closes #23420 from kiszk/SPARK-26508.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The delegation token providers log error messages at inconsistent levels (DEBUG, INFO, WARNING).

Given that failing to obtain a token often crashes the query, I think it would be better to use a higher log level for these error messages.

## How was this patch tested?

The patch just changed the log level.

Closes #23418 from HeartSaVioR/FIX-inconsistency-log-level-between-delegation-token-providers.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR changes the hardcoded `spark.eventLog` configs to use `ConfigEntry` and puts them in the `config` package.

## How was this patch tested?

existing tests

Closes #23395 from mgaido91/SPARK-26470.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In the method `taskList` (since https://github.com/apache/spark/pull/21688), the executor logs are queried from the KV store for every task (in the method `constructTaskData`).

This PR proposes using a hash map to avoid duplicated KV store lookups in that method.
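The idea can be sketched in Python: look each executor up once and reuse the result for every task that ran on it. `lookup_executor_logs` and the `(task_id, executor_id)` tuples are hypothetical stand-ins, not Spark's KV store API or task types.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def attach_executor_logs(
    tasks: Iterable[Tuple[int, str]],
    lookup_executor_logs: Callable[[str], Dict[str, str]],
) -> List[Tuple[int, Dict[str, str]]]:
    """Pair each (task_id, executor_id) with that executor's log URLs,
    querying the store at most once per distinct executor."""
    cache: Dict[str, Dict[str, str]] = {}
    result = []
    for task_id, executor_id in tasks:
        if executor_id not in cache:
            # First task seen on this executor: do the (expensive) lookup.
            cache[executor_id] = lookup_executor_logs(executor_id)
        result.append((task_id, cache[executor_id]))
    return result
```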

## How was this patch tested?

Manual check

Closes #23310 from gengliangwang/removeExecutorLog.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR changes the hardcoded `spark.history` configs to use `ConfigEntry` and puts them in the `History` config object.

## How was this patch tested?

Existing tests.

Closes #23384 from ueshin/issues/SPARK-26443/hardcoded_history_configs.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Add docs describing how the executor removal policy in `ExecutorAllocationManager` behaves with respect to the property `spark.dynamicAllocation.cachedExecutorIdleTimeout`.
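Here is a rough sketch of the rule being documented. An idle executor that still holds cached blocks is judged against `spark.dynamicAllocation.cachedExecutorIdleTimeout`, which defaults to infinity. Other idle executors use `spark.dynamicAllocation.executorIdleTimeout`. The function below is a hypothetical Python model of that decision, not `ExecutorAllocationManager`'s code. The 60-second default is an assumption worth checking against the configuration docs.

```python
import math


def should_remove_executor(
    idle_seconds: float,
    has_cached_blocks: bool,
    executor_idle_timeout: float = 60.0,           # assumed default, in seconds
    cached_executor_idle_timeout: float = math.inf,
) -> bool:
    """Hypothetical model of the idle-removal decision for one executor."""
    timeout = cached_executor_idle_timeout if has_cached_blocks else executor_idle_timeout
    # With the infinite default, executors holding cached data are never removed.
    return idle_seconds > timeout
```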

## How was this patch tested?

comment-only PR.

Closes #23386 from TopGunViper/SPARK-26446.

Authored-by: wuqingxin <wuqingxin@baidu.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…leAccumulator

## What changes were proposed in this pull request?

This PR implements metric sources for LongAccumulator and DoubleAccumulator, such that a user can register these accumulators easily and have their values be reported by the driver's metric namespace.
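The core idea is a gauge that reads the accumulator's current value each time the metrics system polls it, rather than copying the value once. The classes below are a hypothetical Python model. They are not Spark's `LongAccumulator` or its accumulator source API.

```python
from typing import Callable, Dict


class LongAccumulator:
    """Hypothetical minimal accumulator."""

    def __init__(self) -> None:
        self.value = 0

    def add(self, v: int) -> None:
        self.value += v


class MetricSource:
    """Registry of named gauges; each gauge is evaluated lazily on poll."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.gauges: Dict[str, Callable[[], int]] = {}

    def register_accumulator(self, metric_name: str, acc: LongAccumulator) -> None:
        # Capture the accumulator itself so every poll sees its latest value.
        self.gauges[metric_name] = lambda: acc.value

    def poll(self) -> Dict[str, int]:
        return {f"{self.name}.{k}": g() for k, g in self.gauges.items()}
```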

## How was this patch tested?

Unit tests, and manual tests.


Closes #23242 from abellina/SPARK-26285_accumulator_source.

Lead-authored-by: Alessandro Bellina <abellina@yahoo-inc.com>

Co-authored-by: Alessandro Bellina <abellina@oath.com>

Co-authored-by: Alessandro Bellina <abellina@gmail.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

Recently, the ability to expose the metrics for YARN Shuffle Service was added as part of [SPARK-18364](https://github.com/apache/spark/pull/22485). We need to add some metrics to be able to determine the number of active connections as well as open connections to the external shuffle service to benchmark network and connection issues on large cluster environments.

Added two more shuffle server metrics for the Spark YARN shuffle service:

- `numRegisteredConnections`, which indicates the number of registered connections to the shuffle service.
- `numActiveConnections`, which indicates the number of active connections to the shuffle service at any given point in time.

If these metrics are written to a file, the output looks like this:

1533674653489 default.shuffleService: Hostname=server1.abc.com, openBlockRequestLatencyMillis_count=729, openBlockRequestLatencyMillis_rate15=0.7110833548897356, openBlockRequestLatencyMillis_rate5=1.657808981793011, openBlockRequestLatencyMillis_rate1=2.2404486061620474, openBlockRequestLatencyMillis_rateMean=0.9242558551196706, numRegisteredConnections=35, blockTransferRateBytes_count=2635880512, blockTransferRateBytes_rate15=2578547.6094160094, blockTransferRateBytes_rate5=6048721.726302424, blockTransferRateBytes_rate1=8548922.518223226, blockTransferRateBytes_rateMean=3341878.633637769, registeredExecutorsSize=5, registerExecutorRequestLatencyMillis_count=5, registerExecutorRequestLatencyMillis_rate15=0.0027973949328659836, registerExecutorRequestLatencyMillis_rate5=0.0021278007987206426, registerExecutorRequestLatencyMillis_rate1=2.8270296777387467E-6, registerExecutorRequestLatencyMillis_rateMean=0.006339206380043053, numActiveConnections=35
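The two metrics can be modeled as two counters driven by channel lifecycle events. One counter moves on register/unregister, the other on active/inactive. The Python class and callback names below are hypothetical. They loosely mirror Netty-style channel events and are not the shuffle service's actual code.

```python
import threading


class ConnectionMetrics:
    """Hypothetical counters for registered vs. active connections."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.num_registered_connections = 0
        self.num_active_connections = 0

    def channel_registered(self) -> None:
        with self._lock:
            self.num_registered_connections += 1

    def channel_unregistered(self) -> None:
        with self._lock:
            self.num_registered_connections -= 1

    def channel_active(self) -> None:
        with self._lock:
            self.num_active_connections += 1

    def channel_inactive(self) -> None:
        with self._lock:
            self.num_active_connections -= 1
```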

Closes #22498 from pgandhi999/SPARK-18364.

Authored-by: pgandhi <pgandhi@oath.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When a `NoClassDefFoundError` is thrown, it causes the job to hang:

    Exception in thread "dag-scheduler-event-loop" java.lang.NoClassDefFoundError: Lcom/xxx/data/recommend/aggregator/queue/QueueName;
        at java.lang.Class.getDeclaredFields0(Native Method)
        at java.lang.Class.privateGetDeclaredFields(Class.java:2436)
        at java.lang.Class.getDeclaredField(Class.java:1946)
        at java.io.ObjectStreamClass.getDeclaredSUID(ObjectStreamClass.java:1659)
        at java.io.ObjectStreamClass.access$700(ObjectStreamClass.java:72)
        at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:480)
        at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:468)
        at java.security.AccessController.doPrivileged(Native Method)
        at java.io.ObjectStreamClass.<init>(ObjectStreamClass.java:468)
        at java.io.ObjectStreamClass.lookup(ObjectStreamClass.java:365)
        at java.io.ObjectOutputStream.writeClass(ObjectOutputStream.java:1212)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1119)
        at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)
        at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)
        at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)
        at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)
        at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)
        at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)
        at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)
        at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)
        at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)
        at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1173)
        at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)
        at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)
        at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)
        at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)
        at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)
        at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)
        at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)
        at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)
        at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)
        at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)
        at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377)

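This happens because `NoClassDefFoundError` is not caught during task serialization. It is a `LinkageError`, which `NonFatal` does not match, so neither `case` in the `catch` block below handles it: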

    var taskBinary: Broadcast[Array[Byte]] = null
    try {
      // For ShuffleMapTask, serialize and broadcast (rdd, shuffleDep).
      // For ResultTask, serialize and broadcast (rdd, func).
      val taskBinaryBytes: Array[Byte] = stage match {
        case stage: ShuffleMapStage =>
          JavaUtils.bufferToArray(
            closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef))
        case stage: ResultStage =>
          JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef))
      }
      taskBinary = sc.broadcast(taskBinaryBytes)
    } catch {
      // In the case of a failure during serialization, abort the stage.
      case e: NotSerializableException =>
        abortStage(stage, "Task not serializable: " + e.toString, Some(e))
        runningStages -= stage
        // Abort execution
        return
      case NonFatal(e) =>
        abortStage(stage, s"Task serialization failed: $e\n${Utils.exceptionString(e)}", Some(e))
        runningStages -= stage
        return
    }

The images below show that stage 33 is blocked and never gets scheduled.

<img width="1273" alt="2018-06-28 4 28 42" src="https://user-images.githubusercontent.com/26762018/42621188-b87becca-85ef-11e8-9a0b-0ddf07504c96.png">

<img width="569" alt="2018-06-28 4 28 49" src="https://user-images.githubusercontent.com/26762018/42621191-b8b260e8-85ef-11e8-9d10-e97a5918baa6.png">
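The Python sketch below models the failure mode. A hypothetical `LinkageError` derives from `BaseException`, so `except Exception` misses it, much as `NonFatal` misses JVM linkage errors. The sketch then shows one way to make sure the stage is always aborted and removed from the running set. All names are hypothetical, and this is not the actual change made in Spark.

```python
from typing import Callable, List, Set


class LinkageError(BaseException):
    """Hypothetical stand-in for a JVM LinkageError such as NoClassDefFoundError."""


def submit_stage(
    stage: str,
    serialize: Callable[[str], bytes],
    running_stages: Set[str],
    aborted: List[str],
) -> bool:
    running_stages.add(stage)
    try:
        serialize(stage)
    except (Exception, LinkageError) as e:
        # Also catch the linkage-style error, so the stage is aborted and
        # removed from the running set instead of staying there forever.
        aborted.append(f"{stage}: {e!r}")
        running_stages.discard(stage)
        return False
    return True
```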

## How was this patch tested?

UT

Closes #21664 from caneGuy/zhoukang/fix-noclassdeferror.

Authored-by: zhoukang <zhoukang199191@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

This change hooks up the k8s backend to the updated HadoopDelegationTokenManager, so that delegation tokens are also available in client mode, and keytab-based token renewal is enabled.

The change reworks the k8s feature steps related to Kerberos so that the driver does all the credential management and provides all the needed information to executors, so nothing needs to be added to executor pods. This also makes cluster mode behave much more like client mode, since no driver-related config steps are run in the latter case.

The two main things that no longer need to happen in executors are:

- adding the Hadoop config to the executor pods: this is not needed since the Spark driver will serialize the Hadoop config and send it to executors when running tasks.

- mounting the Kerberos config file in the executor pods: this is not needed once you remove the above. The Hadoop conf sent by the driver with the tasks is already resolved (i.e. has all the Kerberos names properly defined), so executors no longer need access to the Kerberos realm information.

The change also avoids creating delegation tokens unnecessarily. This means that they'll only be created if a secret with tokens was not provided, and if a keytab is not provided. In either of those cases, the driver code will handle delegation tokens: in cluster mode by creating a secret and stashing them, in client mode by using existing mechanisms to send DTs to executors.

One last feature: the change also allows defining a keytab with a "local:" URI. This is supported in client mode (although that's the same as not saying "local:"), and in k8s cluster mode. This allows the keytab to be mounted onto the image from a pre-existing secret, for example.
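A `local:` URI can be told apart from a path that has to be shipped to the cluster using Python's `urllib.parse`, as in the minimal sketch below. The function name and the returned flag are hypothetical. The sketch only illustrates the idea that a `local:` keytab is already present in the image (for example, mounted from a secret) and needs no upload.

```python
from typing import Tuple
from urllib.parse import urlparse


def resolve_keytab(keytab: str) -> Tuple[str, bool]:
    """Return (path inside the container, whether it must be uploaded)."""
    parsed = urlparse(keytab)
    if parsed.scheme == "local":
        # Already present in the image or a mounted volume; use the path as-is.
        return parsed.path, False
    if parsed.scheme in ("", "file"):
        # A submitter-side file that would need to be shipped to the driver.
        return parsed.path, True
    raise ValueError(f"Unsupported keytab URI: {keytab}")
```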

Finally, the new code always sets SPARK_USER in the driver and executor pods. This is in line with how other resource managers behave: the submitting user reflects which user will access Hadoop services in the app. (With Kerberos, that's overridden by the logged-in user.) That user is unrelated to the OS user the app is running as inside the containers.

Tested:

- client and cluster mode with kinit

- cluster mode with keytab

- cluster mode with local: keytab

- YARN cluster with keytab (to make sure it isn't broken)

Closes #22911 from vanzin/SPARK-25815.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Change "microseconds" to "milliseconds" in the doc comment of `Utils.timeStringAsMs`.
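A method like `timeStringAsMs` converts a duration string to milliseconds and treats a bare number as milliseconds. The Python sketch below is a hypothetical reimplementation. Its suffix table (`us`, `ms`, `s`, `m`/`min`, `h`, `d`) is an assumption modeled on commonly documented Spark time units and may not match Spark's exactly.

```python
import re

# Assumed suffix table: number of microseconds in one unit.
_US_PER_UNIT = {
    "us": 1,
    "ms": 1_000,
    "s": 1_000_000,
    "m": 60_000_000,
    "min": 60_000_000,
    "h": 3_600_000_000,
    "d": 86_400_000_000,
}


def time_string_as_ms(s: str) -> int:
    """Parse e.g. '10s' or '2min' into whole milliseconds (no suffix = ms)."""
    match = re.fullmatch(r"([0-9]+)([a-z]+)?", s.strip().lower())
    if match is None:
        raise ValueError(f"Invalid time string: {s!r}")
    value, suffix = int(match.group(1)), match.group(2) or "ms"
    if suffix not in _US_PER_UNIT:
        raise ValueError(f"Invalid suffix: {suffix!r}")
    # Convert via microseconds and truncate, so '1500us' becomes 1 ms.
    return value * _US_PER_UNIT[suffix] // 1_000
```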

Closes #23346 from stczwd/stczwd.

Authored-by: Jackey Lee <qcsd2011@163.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up of sorts to https://github.com/apache/spark/pull/23239

The `UnsafeProject` will normalize special float/double values (NaN and -0.0), so the sorter doesn't have to handle them.

However, for consistency and future-proofing, this PR proposes to normalize `-0.0` in the prefix comparator, so that it is consistent with the normal ordering. Note that the prefix comparator handles NaN as well.

This is not a bug fix, but a safeguard.
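The Python sketch below shows why `-0.0` needs attention in a bit-based prefix. It uses a common bit trick that maps doubles to unsigned 64-bit keys whose order matches numeric order. `-0.0` and `0.0` have different bit patterns, so without normalization they get different keys even though they compare equal. `double_prefix` is a hypothetical helper, and the code is not claimed to match Spark's prefix comparator exactly.

```python
import struct

_SIGN_BIT = 1 << 63
_ALL_BITS = (1 << 64) - 1


def double_prefix(x: float, normalize_zero: bool = True) -> int:
    """Map a float to an unsigned 64-bit key whose order matches numeric order."""
    if normalize_zero and x == 0.0:
        x = 0.0  # -0.0 == 0.0 is True, so this collapses -0.0 into +0.0
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]
    if bits & _SIGN_BIT:
        return bits ^ _ALL_BITS  # negative: flip every bit
    return bits | _SIGN_BIT      # non-negative: set the sign bit
```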

## How was this patch tested?

existing tests

Closes #23334 from cloud-fan/sort.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Multiple SparkContexts are discouraged, and Spark has been warning about them for the last 4 years (see SPARK-4180). They can cause arbitrary and mysterious errors (see SPARK-2243).

Honestly, I didn't even know Spark still allowed it; it appears never to have been officially supported (see SPARK-2243).

I believe now is a good time to remove this configuration.
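Without the escape hatch, the behavior amounts to a process-wide guard that rejects a second context while one is active. A hypothetical Python model of that guard follows. `Context` is not Spark's class, and the error text only paraphrases Spark's message.

```python
import threading
from typing import Optional


class Context:
    """Hypothetical context that allows at most one active instance per process."""
    _lock = threading.Lock()
    _active: Optional["Context"] = None

    def __init__(self, name: str) -> None:
        with Context._lock:
            if Context._active is not None:
                raise RuntimeError(
                    "Only one context should be running in this process; "
                    f"the running one is {Context._active.name!r}")
            self.name = name
            Context._active = self

    def stop(self) -> None:
        with Context._lock:
            if Context._active is self:
                Context._active = None
```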

## How was this patch tested?

Each doc was manually checked and manually tested:

```
$ ./bin/spark-shell --conf=spark.driver.allowMultipleContexts=true
...
scala> new SparkContext()
org.apache.spark.SparkException: Only one SparkContext should be running in this JVM (see SPARK-2243).The currently running SparkContext was created at:
org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:939)
...
org.apache.spark.SparkContext$.$anonfun$assertNoOtherContextIsRunning$2(SparkContext.scala:2435)
  at scala.Option.foreach(Option.scala:274)
  at org.apache.spark.SparkContext$.assertNoOtherContextIsRunning(SparkContext.scala:2432)
  at org.apache.spark.SparkContext$.markPartiallyConstructed(SparkContext.scala:2509)
  at org.apache.spark.SparkContext.<init>(SparkContext.scala:80)
  at org.apache.spark.SparkContext.<init>(SparkContext.scala:112)
  ... 49 elided
```

Closes #23311 from HyukjinKwon/SPARK-26362.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Based on the [comment](https://github.com/apache/spark/pull/23272#discussion_r240735509), it seems better to put `freePage` into a `finally` block. This patch is a follow-up that does so.

## How was this patch tested?

Existing tests.

Closes #23294 from viirya/SPARK-26265-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Currently, this check is only performed for the dynamic allocation use case in ExecutorAllocationManager.

## What changes were proposed in this pull request?

Check that the number of CPUs per task (`spark.task.cpus`) is not greater than the number of cores per executor (`spark.executor.cores`); otherwise, throw an exception.
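The check amounts to requiring that a single task fits on a single executor. The Python function below is a hypothetical sketch of that validation. The name and error message are assumptions, not Spark's code.

```python
def check_cpus_per_task(executor_cores: int, task_cpus: int) -> None:
    """Hypothetical validation: one task must fit on one executor."""
    if executor_cores < task_cpus:
        raise ValueError(
            f"spark.executor.cores ({executor_cores}) must not be less than "
            f"spark.task.cpus ({task_cpus})")
```

Equal values are allowed: an executor with 2 cores can run one task that needs 2 CPUs.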

## How was this patch tested?

manual tests


Closes #23290 from ashangit/master.

Authored-by: n.fraison <n.fraison@criteo.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

These three condition descriptions should be updated, following #23228:

<li>no Ordering is specified,</li>

<li>no Aggregator is specified, and</li>

<li>the number of partitions is less than

<code>spark.shuffle.sort.bypassMergeThreshold</code>.

</li>

1. If the shuffle dependency specifies aggregation, but it only aggregates on the reduce side, BypassMergeSortShuffle can still be used.

2. If the number of output partitions equals `spark.shuffle.sort.bypassMergeThreshold` (e.g. 200), BypassMergeSortShuffle can still be used.
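The two corrections fit in a small predicate. Only map-side combining rules out the bypass path, and a partition count equal to the threshold still qualifies. This is a hypothetical Python model of points 1 and 2, with 200 taken from the example value. It is not the Scala method in Spark.

```python
def should_bypass_merge_sort(
    map_side_combine: bool,
    num_partitions: int,
    bypass_merge_threshold: int = 200,
) -> bool:
    """Hypothetical model of the two bypass conditions in points 1 and 2."""
    if map_side_combine:
        # Aggregating on the map side requires the sort-based path.
        return False
    # Reduce-side-only aggregation is fine; the threshold itself is inclusive.
    return num_partitions <= bypass_merge_threshold
```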

## How was this patch tested?

N/A

Closes #23281 from lcqzte10192193/wid-lcq-1211.

Authored-by: lichaoqun <li.chaoqun@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

When a Kafka delegation token is obtained, a SCRAM `sasl.mechanism` has to be configured for authentication. This can be configured on each related source/sink, which is inconvenient from the user's perspective. Such granularity is not required, and this configuration can be implemented with one central parameter.

This PR adds `spark.kafka.sasl.token.mechanism` to configure this centrally (default: `SCRAM-SHA-512`).
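Kafka authenticates with a delegation token through the SCRAM login module. The token ID is passed as the username, the HMAC as the password, and `tokenauth="true"` is set. The sketch below assembles such client properties in Python, with the mechanism taken from one central setting. The helper name is hypothetical, and the JAAS format should be checked against the Kafka security documentation.

```python
from typing import Dict


def token_client_params(
    token_id: str,
    token_hmac: str,
    mechanism: str = "SCRAM-SHA-512",
) -> Dict[str, str]:
    """Hypothetical helper: Kafka client properties for delegation-token auth."""
    if not mechanism.startswith("SCRAM-"):
        raise ValueError(f"Delegation tokens need a SCRAM mechanism, got {mechanism}")
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{token_id}" password="{token_hmac}" tokenauth="true";'
    )
    return {"sasl.mechanism": mechanism, "sasl.jaas.config": jaas}
```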

## How was this patch tested?

Existing unit tests, plus testing on a cluster.

Closes #23274 from gaborgsomogyi/SPARK-26322.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

YARN applicationMaster metrics registration introduced in SPARK-24594 causes further registration of static metrics (Codegenerator and HiveExternalCatalog) and of JVM metrics, which I believe do not belong in this context.

This looks like an unintended side effect of using the start method of [[MetricsSystem]].

The solution proposed here is to introduce `startNoRegisterSources`, which avoids these additional registrations of static sources and JVM sources for YARN applicationMaster metrics. This could also be useful for other metrics that may be added in the future.
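The distinction can be sketched as two start paths on one metrics system. One path also registers process-wide default sources, and the other reports only what the caller registered explicitly. This is a hypothetical Python model. The class and the source names are illustrative and do not reflect Spark's `MetricsSystem` API.

```python
from typing import List


class MetricsSystem:
    """Hypothetical model: start() also registers process-wide default sources."""
    DEFAULT_SOURCES = ["CodeGenerator", "HiveExternalCatalog", "JVM"]

    def __init__(self, instance: str) -> None:
        self.instance = instance
        self.sources: List[str] = []
        self.running = False

    def register_source(self, name: str) -> None:
        self.sources.append(name)

    def start(self, register_static_sources: bool = True) -> None:
        if register_static_sources:
            for name in self.DEFAULT_SOURCES:
                self.register_source(name)
        self.running = True

    def start_no_register_sources(self) -> None:
        # Only the sources registered explicitly by the caller are reported.
        self.start(register_static_sources=False)
```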

## How was this patch tested?

Manually tested on a YARN cluster.

Closes #22279 from LucaCanali/YarnMetricsRemoveExtraSourceRegistration.

Lead-authored-by: Luca Canali <luca.canali@cern.ch>

Co-authored-by: LucaCanali <luca.canali@cern.ch>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Follow-up PR for #23207, including the following changes:

- Rename `SQLShuffleMetricsReporter` to `SQLShuffleReadMetricsReporter` to make it match with write side naming.

- Display text changes on the read side, for naming consistency.

- Rename function in `ShuffleWriteProcessor`.

- Delete `private[spark]` in execution package.

## How was this patch tested?

Existing tests.

Closes #23286 from xuanyuanking/SPARK-26193-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This proposes an alternative way to load secret keys into a Spark application that is running on Kubernetes. Instead of automatically generating the secret, the secret key can reside in a file that is shared between both the driver and executor containers.
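The choice between a mounted secret file and a generated secret fits in a short function. The Python sketch below is a hypothetical model. The `secret_file` parameter stands in for whatever configuration points at the shared file and is not a Spark config key.

```python
import secrets
from pathlib import Path
from typing import Optional


def load_auth_secret(secret_file: Optional[str] = None) -> bytes:
    """Hypothetical: prefer a secret mounted as a file, else generate one."""
    if secret_file is not None:
        data = Path(secret_file).read_bytes()
        if not data:
            raise ValueError(f"Secret file {secret_file} is empty")
        # Driver and executors read the same file, so they agree on the key.
        return data
    # Otherwise generate a fresh random 256-bit secret for this application.
    return secrets.token_bytes(32)
```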

Unit tests.

Closes #23252 from mccheah/auth-secret-with-file.

Authored-by: mcheah <mcheah@palantir.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In `BytesToBytesMap.MapIterator.advanceToNextPage`, we first lock this `MapIterator` and then `TaskMemoryManager` when freeing a memory page by calling `freePage`. At the same time, another memory consumer may first lock `TaskMemoryManager` and then this `MapIterator` when it acquires memory and causes spilling on this `MapIterator`.

So one thread holds the lock on the `MapIterator` object and waits for the lock on `TaskMemoryManager`, while the other consumer holds the lock on `TaskMemoryManager` and waits for the lock on the `MapIterator` object.

To avoid the deadlock, this patch proposes keeping a reference to the page to be freed and freeing it after releasing the lock on `MapIterator`.
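The fix can be modeled with two Python locks. The iterator records which page to free while holding its own lock, and frees it only after releasing that lock, so no thread ever holds the iterator lock while waiting for the manager lock. The `finally` placement mirrors the follow-up described earlier. All classes here are hypothetical stand-ins, not Spark's Java classes.

```python
import threading
from typing import List, Optional


class TaskMemoryManager:
    """Hypothetical stand-in: freeing a page and spilling both take its lock."""

    def __init__(self) -> None:
        self.lock = threading.Lock()
        self.freed: List[int] = []

    def free_page(self, page: int) -> None:
        with self.lock:
            self.freed.append(page)

    def acquire_and_spill(self, consumer: "MapIterator") -> None:
        # Lock order here: manager first, then the consumer being spilled.
        with self.lock:
            consumer.spill()


class MapIterator:
    def __init__(self, manager: TaskMemoryManager, pages: List[int]) -> None:
        self.lock = threading.Lock()
        self.manager = manager
        self.pages = list(pages)
        self.current: Optional[int] = None

    def spill(self) -> None:
        with self.lock:
            pass  # e.g. write the current page to disk

    def advance_to_next_page(self) -> None:
        page_to_free: Optional[int] = None
        try:
            with self.lock:
                page_to_free = self.current
                self.current = self.pages.pop(0) if self.pages else None
        finally:
            # Free only after releasing the iterator lock, so this thread never
            # holds the iterator lock while waiting for the manager lock.
            if page_to_free is not None:
                self.manager.free_page(page_to_free)
```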

## How was this patch tested?

Added a test, and manually ran it 100 times to make sure there is no deadlock.

Closes #23272 from viirya/SPARK-26265.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

… incorrect.

## What changes were proposed in this pull request?

In the reported heartbeat information, memory is given in bytes and is converted by the `formatBytes()` function in `utils.js` before being displayed in the UI. The base of the unit conversion in `formatBytes` is 1000, but it should be 1024.

This PR changes the base of the unit conversion in `formatBytes` to 1024.
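Base 1024 makes 1,048,576 bytes display as exactly 1.0 of the next-but-one unit, where base 1000 would show about 1.05. The sketch below is a hypothetical Python port of a base-1024 `formatBytes`, not the actual `utils.js` code. The unit labels (`KiB`, `MiB`, ...) are an assumption, and the Spark UI's labels may differ.

```python
def format_bytes(num_bytes: int, decimals: int = 1) -> str:
    """Format a byte count using base-1024 units (hypothetical helper)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB"]
    if num_bytes <= 0:
        return f"{0:.{decimals}f} B"
    value = float(num_bytes)
    i = 0
    # Divide by 1024 (not 1000) until the value fits the current unit.
    while value >= 1024 and i < len(units) - 1:
        value /= 1024
        i += 1
    return f"{value:.{decimals}f} {units[i]}"
```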

## How was this patch tested?

manual tests


Closes #22683 from httfighter/SPARK-25696.

Lead-authored-by: 韩田田00222924 <han.tiantian@zte.com.cn>

Co-authored-by: han.tiantian@zte.com.cn <han.tiantian@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

This adds the entire memory used by spark’s executor (as measured by procfs) to the executor metrics. The memory usage is collected from the entire process tree under the executor. The metrics are subdivided into memory used by java, by python, and by other processes, to aid users in diagnosing the source of high memory usage.

The additional metrics are sent to the driver in heartbeats, using the mechanism introduced by SPARK-23429. This also slightly extends that approach to allow one ExecutorMetricType to collect multiple metrics.
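The aggregation step can be sketched as walking the process tree under the executor and summing memory by category. On Linux the real numbers would come from procfs. To keep the sketch testable, it takes a hypothetical in-memory process table instead. Classifying processes by command-name prefix is an assumption.

```python
from typing import Dict, List, Tuple

# Hypothetical process table: pid -> (parent pid, command name, RSS in bytes).
ProcTable = Dict[int, Tuple[int, str, int]]


def process_tree_memory(root_pid: int, procs: ProcTable) -> Dict[str, int]:
    """Sum RSS over root_pid and all descendants, split into java/python/other."""
    children: Dict[int, List[int]] = {}
    for pid, (ppid, _, _) in procs.items():
        children.setdefault(ppid, []).append(pid)

    totals = {"java": 0, "python": 0, "other": 0}
    stack = [root_pid]
    while stack:
        pid = stack.pop()
        if pid not in procs:
            continue  # the process may have exited between snapshots
        _, name, rss = procs[pid]
        if name.startswith("java"):
            totals["java"] += rss
        elif name.startswith("python"):
            totals["python"] += rss
        else:
            totals["other"] += rss
        stack.extend(children.get(pid, []))
    return totals
```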

Added unit tests and also tested on a live cluster.

Closes #22612 from rezasafi/ptreememory2.

Authored-by: Reza Safi <rezasafi@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

`1. The shuffle dependency specifies no aggregation or output ordering.`

If the shuffle dependency specifies aggregation, but it only aggregates on the reduce side, serialized shuffle can still be used.

`3. The shuffle produces fewer than 16777216 output partitions.`

If the number of output partitions is exactly 16777216, serialized shuffle can still be used.

See the method `canUseSerializedShuffle`.
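Written as a predicate, the corrected conditions look like the hypothetical Python model below. The relocation flag is an assumption standing in for the condition numbered 2, which this description does not quote. The 16777216 limit comes from the text.

```python
MAX_PARTITIONS_FOR_SERIALIZED_MODE = 1 << 24  # 16777216


def can_use_serialized_shuffle(
    serializer_supports_relocation: bool,
    map_side_combine: bool,
    num_partitions: int,
) -> bool:
    """Hypothetical model of the serialized-shuffle eligibility conditions."""
    if not serializer_supports_relocation:
        return False
    if map_side_combine:
        # Only map-side aggregation rules this path out.
        return False
    # The limit is inclusive: exactly 16777216 partitions is still allowed.
    return num_partitions <= MAX_PARTITIONS_FOR_SERIALIZED_MODE
```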

## How was this patch tested?

N/A

Closes #23228 from 10110346/SerializedShuffle_doc.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Add a test for the `MAXIMUM_PAGE_SIZE_BYTES` exception.

## How was this patch tested?

Existing tests


Closes #23226 from wangjiaochun/BytesToBytesMapSuite.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

If there are no records in memory, then we don't need to create an empty temp spill file.

## How was this patch tested?

Existing tests


Closes #23225 from wangjiaochun/ShufflSorter.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Root cause: prior to Spark 2.4, when zstd was enabled for event log compression, opening an in-progress application from the history server always threw an exception in the application UI. Since 2.4, the UI displays information based on the completed frames in the zstd-compressed event log, but it does not read the incomplete frames of an in-progress application.

In this PR, we call `setContinuous(true)` on the input stream used to read the event log, so that it can read open frames as well. (Continuous mode is off by default for the zstd input stream, and reading an open frame then throws a truncation error.)
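Python's `zlib` shows the same distinction, though it is only an analogy and not zstd. A one-shot decompress of a truncated stream fails, while a streaming decompressor returns everything it could decode so far. The event-log content below is made up.

```python
import zlib


def read_strict(compressed: bytes) -> bytes:
    """Fails on a truncated stream, like reading an open zstd frame by default."""
    return zlib.decompress(compressed)


def read_available(compressed: bytes) -> bytes:
    """Returns whatever can be decoded so far from a possibly incomplete stream."""
    return zlib.decompressobj().decompress(compressed)
```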

## How was this patch tested?

Test steps:

1) Add the configurations in the spark-defaults.conf

(i) spark.eventLog.compress true

(ii) spark.io.compression.codec zstd

2) Restart history server

3) bin/spark-shell

4) sc.parallelize(1 to 1000, 1000).count

5) Open app UI from the history server UI


Closes #23241 from shahidki31/zstdEventLog.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

1. Implement `SQLShuffleWriteMetricsReporter` on the SQL side as the customized `ShuffleWriteMetricsReporter`.

2. Add shuffle write metrics to `ShuffleExchangeExec`, and use these metrics to create corresponding `SQLShuffleWriteMetricsReporter` in shuffle dependency.

3. Rework `ShuffleMapTask` to add a new class named `ShuffleWriteProcessor`, which controls the shuffle write process. SQL shuffle write metrics are used by customizing a `ShuffleWriteProcessor` on the SQL side.
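A reporter of this kind can be sketched as a delegating object. Every update goes to the task-level metrics and is also mirrored into the SQL-side metrics. The Python classes and metric names below are hypothetical and are not the actual `SQLShuffleWriteMetricsReporter` implementation.

```python
from typing import Dict


class TaskWriteMetrics:
    """Hypothetical task-level shuffle write counters."""

    def __init__(self) -> None:
        self.bytes_written = 0
        self.records_written = 0

    def inc_bytes_written(self, v: int) -> None:
        self.bytes_written += v

    def inc_records_written(self, v: int) -> None:
        self.records_written += v


class SQLShuffleWriteMetricsReporter:
    """Same reporting methods; forwards to task metrics and mirrors into SQL metrics."""

    def __init__(self, task_metrics: TaskWriteMetrics, sql_metrics: Dict[str, int]) -> None:
        self.task_metrics = task_metrics
        self.sql_metrics = sql_metrics

    def _add(self, name: str, v: int) -> None:
        self.sql_metrics[name] = self.sql_metrics.get(name, 0) + v

    def inc_bytes_written(self, v: int) -> None:
        self.task_metrics.inc_bytes_written(v)
        self._add("shuffle bytes written", v)

    def inc_records_written(self, v: int) -> None:
        self.task_metrics.inc_records_written(v)
        self._add("shuffle records written", v)
```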

## How was this patch tested?

Add UT in SQLMetricsSuite.

Manually tested locally, and updated the screenshots in the document attached to the JIRA.

Closes #23207 from xuanyuanking/SPARK-26193.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`spark.kafka.sasl.kerberos.service.name` is an optional parameter, but most of the time the value `kafka` has to be set. As I've written in the JIRA, the reasoning behind this is:

* Kafka's configuration guide suggests the same value: https://kafka.apache.org/documentation/#security_sasl_kerberos_brokerconfig

* It is easier for Spark users, who need to provide less configuration

* Other streaming engines are doing the same

In this PR, I've changed the parameter from optional to `WithDefault` and set `kafka` as the default value.

## How was this patch tested?

Existing unit tests, plus testing on a cluster.

Closes #23254 from gaborgsomogyi/SPARK-26304.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Delete an unnecessary `if` statement. After the early return when `inMemSorter` is null or has no records, `inMemSorter.numRecords()` is always greater than zero, so the later `numRecords() > 0` check is always true:

    ...
    if (inMemSorter == null || inMemSorter.numRecords() <= 0) {
      return 0L;
    }
    ...
    if (inMemSorter.numRecords() > 0) {
      ...
    }

## How was this patch tested?

Existing tests


Closes #23247 from wangjiaochun/inMemSorter.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Adds a new method to SparkAppHandle called getError, which returns the exception (if present) that caused the underlying Spark app to fail.

New tests added to SparkLauncherSuite for the new method.

Closes #21849

Closes #23221 from vanzin/SPARK-24243.

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

`enablePerfMetrics` was originally designed in `BytesToBytesMap` to control `getNumHashCollisions`, `getTimeSpentResizingNs`, and `getAverageProbesPerLookup`.

However, as Spark has evolved, this parameter is now used only by `getAverageProbesPerLookup`, and it is always set to true when `BytesToBytesMap` is used.

It is also risky for `getAverageProbesPerLookup` to depend on this flag and throw an `IllegalStateException` when it is disabled.

So this PR removes the `enablePerfMetrics` parameter from `BytesToBytesMap`.
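Without the flag, probe statistics are simply always counted. The hypothetical Python linear-probing map below tracks probes and lookups unconditionally and computes the average as probes divided by lookups. It is a sketch of the statistic, not `BytesToBytesMap`, and it does not resize.

```python
from typing import Any, Hashable, List, Optional


class ProbingMap:
    """Hypothetical linear-probing hash map that always tracks probe statistics."""

    def __init__(self, capacity: int = 8) -> None:
        if capacity <= 0 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self.keys: List[Optional[Hashable]] = [None] * capacity
        self.values: List[Any] = [None] * capacity
        self.mask = capacity - 1
        self.num_probes = 0
        self.num_lookups = 0

    def _find_slot(self, key: Hashable) -> int:
        self.num_lookups += 1
        pos = hash(key) & self.mask
        for _ in range(len(self.keys)):
            self.num_probes += 1
            existing = self.keys[pos]
            if existing is None or existing == key:
                return pos
            pos = (pos + 1) & self.mask
        raise RuntimeError("map is full (this sketch does not resize)")

    def put(self, key: Hashable, value: Any) -> None:
        pos = self._find_slot(key)
        self.keys[pos] = key
        self.values[pos] = value

    def get(self, key: Hashable) -> Any:
        pos = self._find_slot(key)
        return self.values[pos] if self.keys[pos] == key else None

    def average_probes_per_lookup(self) -> float:
        return self.num_probes / self.num_lookups if self.num_lookups else 0.0
```

With capacity 8, the keys 0, 8, and 16 all hash to slot 0. Inserting them takes 1, 2, and 3 probes, which gives an average of 2.0.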

## How was this patch tested?

Existing test cases.

Closes #23244 from heary-cao/enablePerfMetrics.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This change modifies the logic in the SecurityManager to do two things:

- generate unique app secrets also when k8s is being used

- only store the secret in the user's UGI on YARN

The latter is needed so that k8s won't unnecessarily create k8s secrets for the UGI credentials when only the auth token is stored there.

On the k8s side, the secret is propagated to executors using an environment variable instead. This ensures it works in both client and cluster mode.

The security doc was updated to mention the feature and clarify that proper access control in k8s should be enabled for it to be secure.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #23174 from vanzin/SPARK-26194.

## What changes were proposed in this pull request?

We explicitly avoid files with HDFS erasure coding for the streaming WAL and for event logs, as HDFS EC does not support all relevant APIs.

However, the new builder API used has different semantics: it does not create parent dirs, and it does not resolve relative paths. This updates `createNonEcFile` to have similar semantics to the old API.
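The two behaviors being restored can be shown with a local-filesystem analogy in Python: resolve a relative path against a base directory, then create any missing parent directories before opening the file. The helper below is hypothetical and does not touch HDFS or its builder API.

```python
from pathlib import Path
from typing import BinaryIO, Union


def create_file_old_semantics(path: Union[str, Path], base_dir: Union[str, Path]) -> BinaryIO:
    """Hypothetical helper restoring the two behaviors the builder API lacks."""
    p = Path(path)
    if not p.is_absolute():
        # Resolve relative paths against a base dir (e.g. the working directory).
        p = Path(base_dir) / p
    # Create missing parent directories before opening the file.
    p.parent.mkdir(parents=True, exist_ok=True)
    return open(p, "wb")
```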

## How was this patch tested?

Ran tests with the WAL pointed at a non-existent dir, which failed before this change. Manually tested the new function with a relative path as well.

Unit tests via jenkins.

Closes #23092 from squito/SPARK-26094.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In my local setup, I set the log4j root category to ERROR (https://stackoverflow.com/questions/27781187/how-to-stop-info-messages-displaying-on-spark-console, the first result that shows up when searching for "set spark log level"). When I run a command such as

```
spark-submit --class foo bar.jar
```

Nothing shows up, and the script exits.

After a quick investigation, I think the log level for ClassNotFoundException/NoClassDefFoundError in SparkSubmit should be ERROR instead of WARN, since the whole process exits because of the exception/error.

Before https://github.com/apache/spark/pull/20925, the message was not controlled by `log4j.rootCategory`.
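Python's `logging` module shows the same effect, as an analogy only (Spark uses log4j). With the threshold at ERROR, a WARN-level message about the missing class is silently dropped, while an ERROR-level one gets through. The logger name and message text are made up.

```python
import logging
from typing import List


class ListHandler(logging.Handler):
    """Collects messages so we can see what survives the level threshold."""

    def __init__(self) -> None:
        super().__init__()
        self.messages: List[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(f"{record.levelname}: {record.getMessage()}")


logger = logging.getLogger("spark_submit_demo")
logger.propagate = False
logger.setLevel(logging.ERROR)  # like setting log4j.rootCategory=ERROR
handler = ListHandler()
logger.addHandler(handler)

logger.warning("Failed to load class %s.", "foo")  # dropped: below ERROR
logger.error("Failed to load class %s.", "foo")    # kept
```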

## How was this patch tested?

Manual check.

Closes #23189 from gengliangwang/changeLogLevel.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Correct some document description errors.

## How was this patch tested?

N/A

Closes #23162 from 10110346/docerror.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, the common `withTempDir` function is used in Spark SQL test cases to handle `val dir = Utils.createTempDir()` and `Utils.deleteRecursively(dir)`. Unfortunately, `withTempDir` cannot be used in Spark Core test cases. This PR shares the `withTempDir` function between Spark SQL and Spark Core to clean up Spark Core test cases.
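`withTempDir` is a loan-pattern helper: it creates a directory, lends it to the test body, and always deletes it afterwards. The sketch below is a Python analogue using a context manager. It is not the Scala helper itself.

```python
import contextlib
import shutil
import tempfile
from pathlib import Path
from typing import Iterator


@contextlib.contextmanager
def with_temp_dir() -> Iterator[Path]:
    """Create a temp dir, hand it to the body, and always delete it afterwards."""
    path = Path(tempfile.mkdtemp())
    try:
        yield path
    finally:
        # Clean up even when the test body fails.
        shutil.rmtree(path, ignore_errors=True)
```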

## How was this patch tested?

N/A

Closes #23151 from heary-cao/withCreateTempDir.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

With RPC and disk encryption enabled, a Python job that creates a broadcast variable and simply reads its value back on the driver side failed with:

Traceback (most recent call last):

File "broadcast.py", line 37, in <module> words_new.value

File "/pyspark.zip/pyspark/broadcast.py", line 137, in value

File "pyspark.zip/pyspark/broadcast.py", line 122, in load_from_path

File "pyspark.zip/pyspark/broadcast.py", line 128, in load

EOFError: Ran out of input

To reproduce, use these configs: `--conf spark.network.crypto.enabled=true --conf spark.io.encryption.enabled=true`

Code:

words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"])

words_new.value

print(words_new.value)
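The sketch below does not reproduce the Spark bug itself; it only shows where this particular message comes from in Python: unpickling from an empty stream raises `EOFError: Ran out of input`, which is consistent with the driver-side loader finding no readable pickled data. `load_from_bytes` is a hypothetical helper, not PySpark's `load_from_path`.

```python
import io
import pickle

def load_from_bytes(data):
    """Unpickle one value from raw bytes, the way a broadcast loader reads a file."""
    return pickle.load(io.BytesIO(data))

words = ["scala", "java", "hadoop", "spark", "akka"]
round_trip = load_from_bytes(pickle.dumps(words))  # normal case: the value comes back intact

error_message = None
try:
    load_from_bytes(b"")  # no pickled data available to read
except EOFError as e:
    error_message = str(e)
```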

## How was this patch tested?

words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"])

textFile = sc.textFile("README.md")

wordCounts = textFile.flatMap(lambda line: line.split()).map(lambda word: (word + words_new.value[1], 1)).reduceByKey(lambda a, b: a+b)

count = wordCounts.count()

print(count)

words_new.value

print(words_new.value)

Closes #23166 from redsanket/SPARK-26201.

Authored-by: schintap <schintap@oath.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This PR adds Kafka delegation token support for Structured Streaming. Please see the relevant [SPIP](https://docs.google.com/document/d/1ouRayzaJf_N5VQtGhVq9FURXVmRpXzEEWYHob0ne3NY/edit?usp=sharing).

What this PR contains:

* Configuration parameters for the feature

* Delegation token fetching from broker

* Usage of token through dynamic JAAS configuration

* Minor refactoring in the existing code

What this PR doesn't contain:

* Documentation changes, because the design may still change

## How was this patch tested?

Existing tests plus a small number of additional unit tests.

Because this is an integration with an external service, it was mainly tested on a cluster:

* 4 node cluster

* Kafka broker version 1.1.0

* Topic with 4 partitions

* security.protocol = SASL_SSL

* sasl.mechanism = SCRAM-SHA-256

An example of obtaining a token:

```

18/10/01 01:07:49 INFO kafka010.TokenUtil: TOKENID HMAC OWNER RENEWERS ISSUEDATE EXPIRYDATE MAXDATE

18/10/01 01:07:49 INFO kafka010.TokenUtil: D1-v__Q5T_uHx55rW16Jwg [hidden] User:user [] 2018-10-01T01:07 2018-10-02T01:07 2018-10-08T01:07

18/10/01 01:07:49 INFO security.KafkaDelegationTokenProvider: Get token from Kafka: Kind: KAFKA_DELEGATION_TOKEN, Service: kafka.server.delegation.token, Ident: 44 31 2d 76 5f 5f 51 35 54 5f 75 48 78 35 35 72 57 31 36 4a 77 67

```

An example token usage:

```

18/10/01 01:08:07 INFO kafka010.KafkaSecurityHelper: Scram JAAS params: org.apache.kafka.common.security.scram.ScramLoginModule required tokenauth=true serviceName="kafka" username="D1-v__Q5T_uHx55rW16Jwg" password="[hidden]";

18/10/01 01:08:07 INFO kafka010.KafkaSourceProvider: Delegation token detected, using it for login.

```
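For illustration, the JAAS parameter string in the log line above can be assembled from a token's ID and HMAC roughly as follows. This is a Python sketch, not the Scala code in `KafkaSecurityHelper`; the function name is hypothetical, and the format is simply copied from the logged output (token ID as `username`, HMAC as `password`, plus `tokenauth=true`).

```python
def scram_jaas_params(token_id, token_hmac, service_name="kafka"):
    """Build a SCRAM JAAS config string that authenticates with a delegation token."""
    return (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        "tokenauth=true "
        f'serviceName="{service_name}" '
        f'username="{token_id}" '
        f'password="{token_hmac}";'
    )

# "<hmac>" is a placeholder; the real HMAC is a secret.
params = scram_jaas_params("D1-v__Q5T_uHx55rW16Jwg", "<hmac>")
```

Because the HMAC acts as a password, the log line above prints `[hidden]` rather than the real value.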

Closes #22598 from gaborgsomogyi/SPARK-25501.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In `BlockManager`, `getRemoteValues` gets a `ChunkedByteBuffer` (by calling `getRemoteBytes`) and creates an `InputStream` from it. `getRemoteBytes`, in turn, gets a `ManagedBuffer` and converts it to a `ChunkedByteBuffer`.

Instead, expose a `getRemoteManagedBuffer` method so `getRemoteValues` can just get this `ManagedBuffer` and use its `InputStream`.

When reading a remote cache block from disk, this reduces heap memory usage significantly.

Retain `getRemoteBytes` for other callers.
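A Python sketch of the difference, assuming a hypothetical disk-backed `FileSegmentBuffer` in place of Spark's `ManagedBuffer`: the old path copies the whole block into memory before deserializing, while the new path deserializes records directly from an input stream, so the full block never has to be held in memory at once.

```python
import io
import os
import pickle
import tempfile

def _read_records(stream):
    """Yield pickled records from a stream until it is exhausted."""
    while True:
        try:
            yield pickle.load(stream)
        except EOFError:
            return

class FileSegmentBuffer:
    """Hypothetical stand-in for a disk-backed ManagedBuffer."""
    def __init__(self, path):
        self.path = path

    def create_input_stream(self):
        return open(self.path, "rb")

    def to_bytes(self):
        # Old-style path: materialize the whole block in memory first.
        with open(self.path, "rb") as fh:
            return fh.read()

def values_via_bytes(buf):
    """Like getRemoteBytes + InputStream: the full block is copied into memory."""
    return list(_read_records(io.BytesIO(buf.to_bytes())))

def values_via_stream(buf):
    """Like getRemoteManagedBuffer: records are read incrementally from the stream."""
    with buf.create_input_stream() as stream:
        yield from _read_records(stream)

# Usage: write a small "block" of three records, then read it both ways.
fd, path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as fh:
    for i in range(3):
        pickle.dump({"id": i}, fh)
buf = FileSegmentBuffer(path)
via_stream = list(values_via_stream(buf))
via_bytes = values_via_bytes(buf)
os.remove(path)
```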

## How was this patch tested?

Imran Rashid wrote an application (https://github.com/squito/spark_2gb_test/blob/master/src/main/scala/com/cloudera/sparktest/LargeBlocks.scala) that, among other things, tests reading remote cache blocks. I ran this application, using 2500MB blocks, to test reading a cache block on disk. Without this change, with `--executor-memory 5g`, the test fails with `java.lang.OutOfMemoryError: Java heap space`. With the change, the test passes with `--executor-memory 2g`.

I also ran the unit tests in core. In particular, `DistributedSuite` has a set of tests that exercise the `getRemoteValues` code path. `BlockManagerSuite` has several tests that call `getRemoteBytes`; I left these unchanged, so `getRemoteBytes` still gets exercised.

Closes #23058 from wypoon/SPARK-25905.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

This PR changes the broadcast object in TorrentBroadcast from a strong reference to a weak reference. This allows it to be garbage collected even if the Dataset is held in memory. This is ok, because the broadcast object can always be re-read.
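The idea can be sketched in Python with `weakref`: the holder keeps only a weak reference to the deserialized value and re-reads it on demand once it has been collected. The class names and the `read_value` loader are hypothetical; in Spark the value would be re-read from the block manager, which this sketch does not model.

```python
import gc
import weakref

class BroadcastValue:
    """Hypothetical payload; plain lists cannot be weakly referenced, so wrap the data."""
    def __init__(self, data):
        self.data = data

class WeakCachedBroadcast:
    """Hold the value only weakly and re-read it if it has been garbage collected."""
    def __init__(self, read_value):
        self._read_value = read_value  # hypothetical loader that can always rebuild the value
        self._ref = None
        self.reads = 0

    @property
    def value(self):
        obj = self._ref() if self._ref is not None else None
        if obj is None:  # never loaded, or already collected
            obj = self._read_value()
            self.reads += 1
            self._ref = weakref.ref(obj)
        return obj

b = WeakCachedBroadcast(lambda: BroadcastValue([1, 2, 3]))
v = b.value           # first read
assert b.value is v   # still strongly referenced by v, so no re-read
del v
gc.collect()          # nothing holds the value strongly any more
_ = b.value.data      # collected, so it is read again
```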

## How was this patch tested?

Tested in the Spark shell by taking a heap dump; full repro steps are listed in https://issues.apache.org/jira/browse/SPARK-25998.

Closes #22995 from bkrieger/bk/torrent-broadcast-weak.

Authored-by: Brandon Krieger <bkrieger@palantir.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

… of hard coded "/" in DependencyUtils

## What changes were proposed in this pull request?

Use the Java system property `file.separator` instead of a hard-coded "/" in `DependencyUtils`.
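Python's `os.sep` plays the same role as Java's `file.separator` property. The hypothetical helper below is not the actual `DependencyUtils` logic; it only shows how splitting on a hard-coded "/" silently fails for a Windows-style path. The separator is passed explicitly so the example behaves the same on any OS.

```python
def last_path_component(path, sep):
    """Return the part of path after the last separator, e.g. a jar's file name."""
    return path.rsplit(sep, 1)[-1]

# Hypothetical Windows-style path, for illustration only.
windows_path = "C:\\spark\\work\\myApp-assembly-1.0.jar"

hard_coded = last_path_component(windows_path, "/")       # "/" never matches: whole path returned
platform_aware = last_path_component(windows_path, "\\")  # the value os.sep has on Windows
```

With the hard-coded separator the "file name" is the entire path, so a check that looks for the jar's name elsewhere never matches.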

## How was this patch tested?

Manual test:

Submitted a Spark application via the REST API that reads data from Elasticsearch using the spark-elasticsearch library.

Without the fix, the application fails with the error:

18/11/22 10:36:20 ERROR Version: Multiple ES-Hadoop versions detected in the classpath; please use only one

jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar

jar:file:/C:/<...>/myApp-assembly-1.0.jar

18/11/22 10:36:20 ERROR Main: Application [MyApp] failed:

java.lang.Error: Multiple ES-Hadoop versions detected in the classpath; please use only one

jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar

jar:file:/C:/<...>/myApp-assembly-1.0.jar

at org.elasticsearch.hadoop.util.Version.<clinit>(Version.java:73)

at org.elasticsearch.hadoop.rest.RestService.findPartitions(RestService.java:214)

at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions$lzycompute(AbstractEsRDD.scala:73)

at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions(AbstractEsRDD.scala:72)

at org.elasticsearch.spark.rdd.AbstractEsRDD.getPartitions(AbstractEsRDD.scala:44)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:49)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.collect(RDD.scala:944)

...

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.worker.DriverWrapper$.main(DriverWrapper.scala:65)

at org.apache.spark.deploy.worker.DriverWrapper.main(DriverWrapper.scala)

With the fix, the application runs successfully.

Closes #23102 from markpavey/JIRA_SPARK-26137_DependencyUtilsFileSeparatorFix.

Authored-by: Mark Pavey <markpavey@exabre.co.uk>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

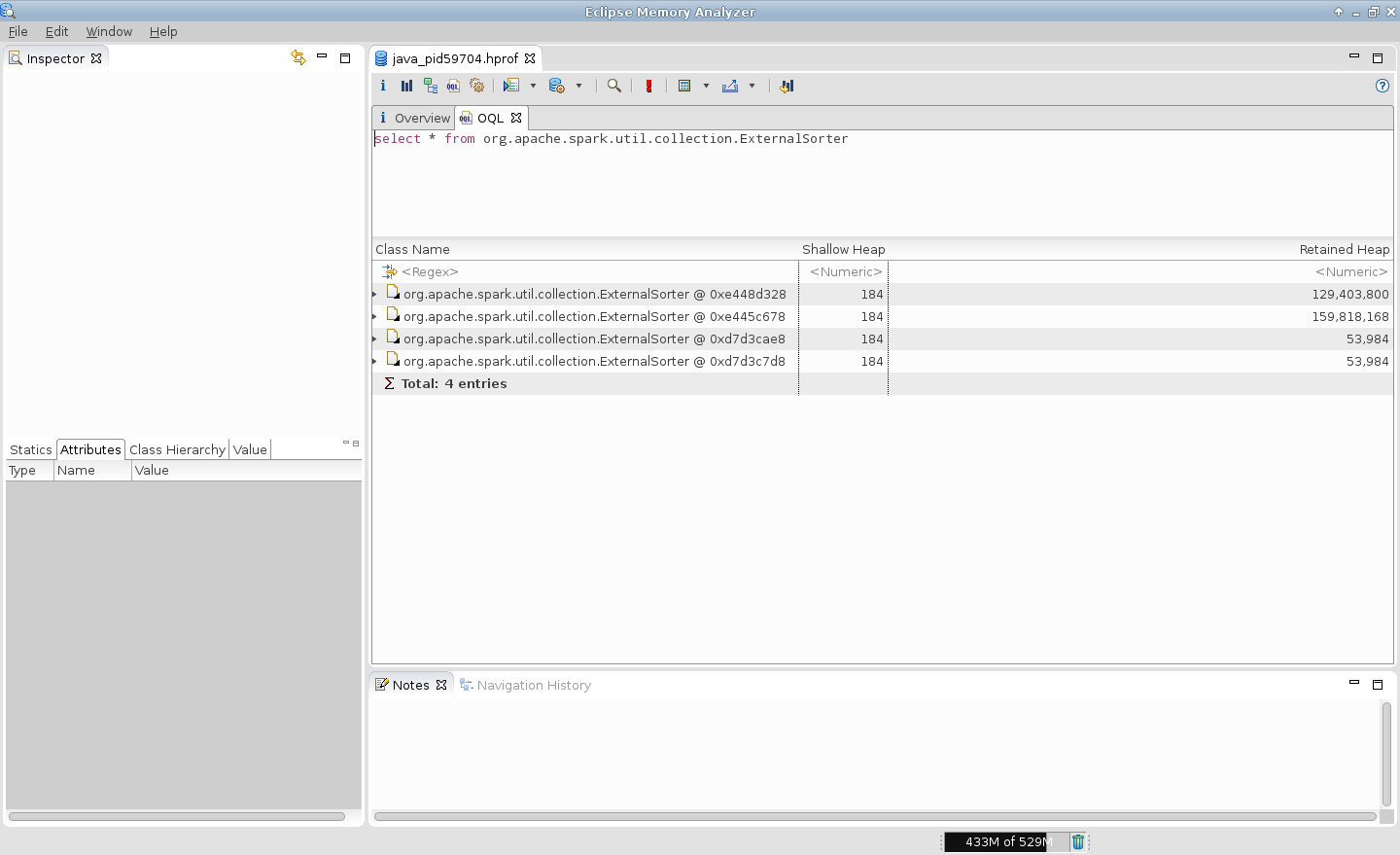

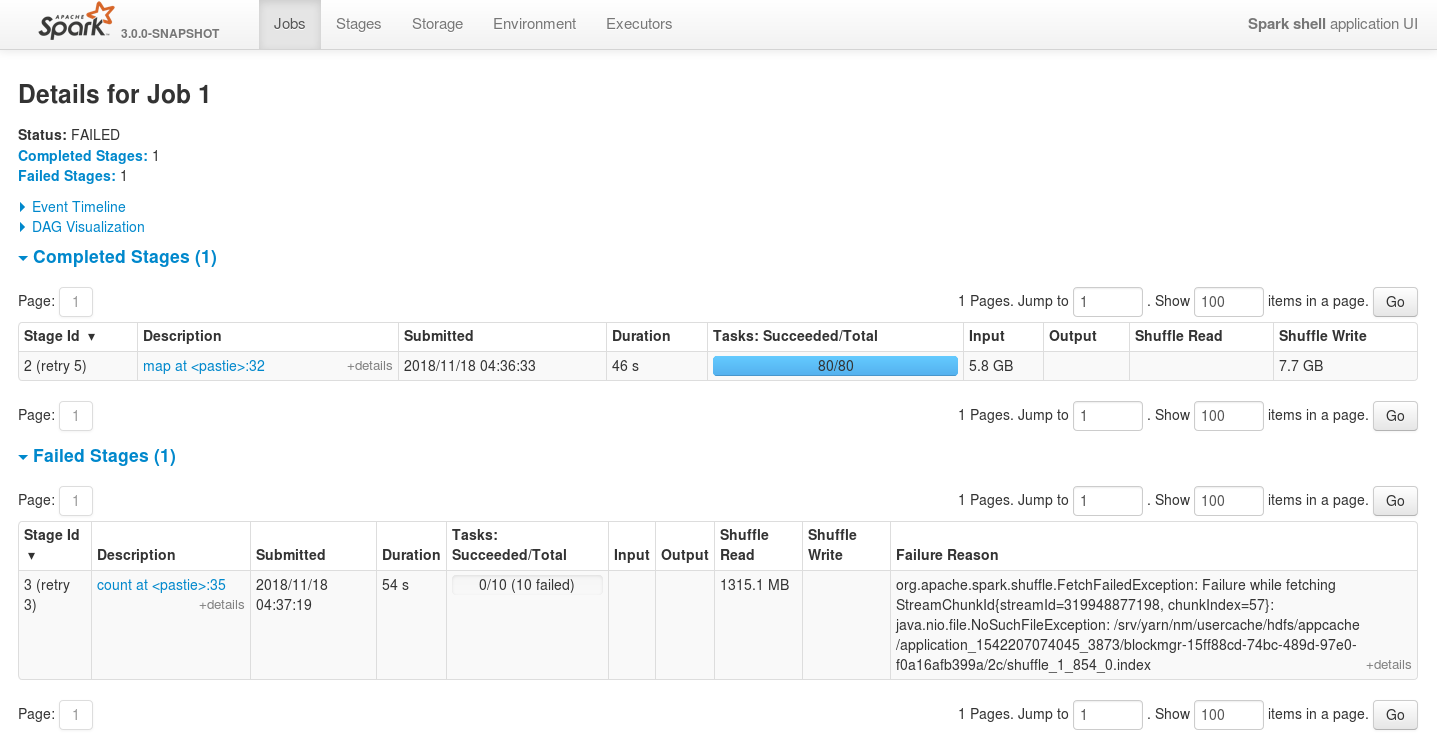

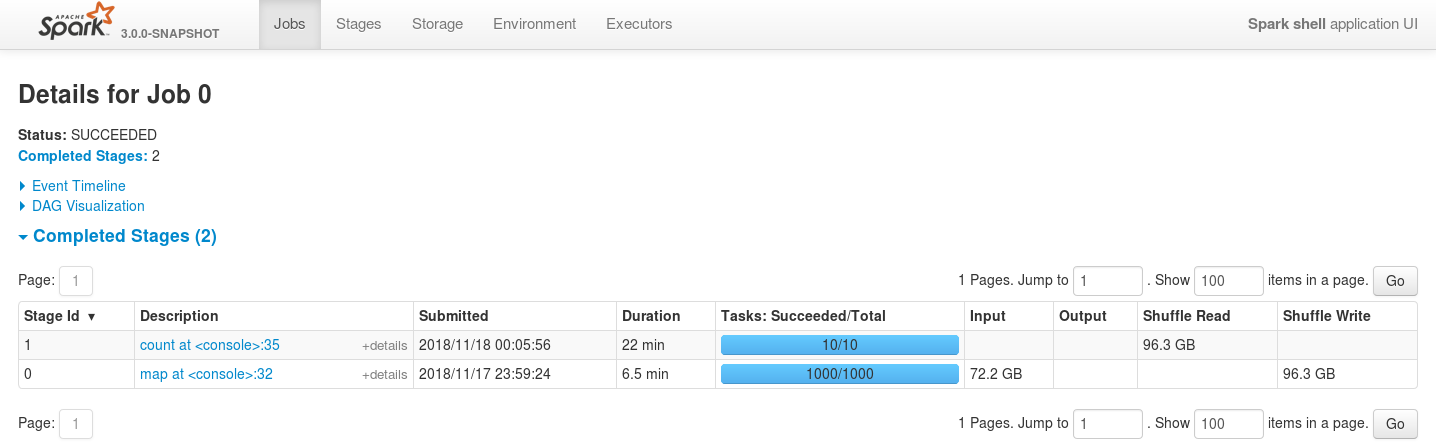

## What changes were proposed in this pull request?

This pull request fixes [SPARK-26114](https://issues.apache.org/jira/browse/SPARK-26114) issue that occurs when trying to reduce the number of partitions by means of coalesce without shuffling after shuffle-based transformations.

The leak occurs because `ExternalSorter`'s `readingIterator` field is not cleaned up, unlike its `map` and `buffer` fields.

Additionally, there are changes to `CompletionIterator` to prevent it from capturing its `sub`-iterator and holding on to it even after the completion iterator completes. This is necessary because in some cases, e.g. with the standard Scala `flatMap` iterator (which is used in `CoalescedRDD`'s `compute` method), the next value of the main iterator is assigned to `flatMap`'s `cur` field only after it is available.

For DAGs where ShuffledRDD is a parent of CoalescedRDD it means that the data should be fetched from the map-side of the shuffle, but the process of fetching this data consumes quite a lot of memory in addition to the memory already consumed by the iterator held by `flatMap`'s `cur` field (until it is reassigned).
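A Python analogue of the `CompletionIterator` change (not Spark's Scala class; names are illustrative): once the wrapped iterator is exhausted, the wrapper runs its completion callback and drops its reference to the sub-iterator, so whatever the sub-iterator holds can be garbage collected even while the wrapper itself is still referenced, as it would be from `flatMap`'s `cur` field.

```python
class CompletionIterator:
    """Wrap an iterator, run a callback once it is exhausted, and release the wrapped iterator."""
    def __init__(self, sub, completion):
        self._sub = sub
        self._completion = completion
        self._completed = False

    def __iter__(self):
        return self

    def __next__(self):
        if self._sub is not None:
            try:
                return next(self._sub)
            except StopIteration:
                pass
        if not self._completed:
            self._completed = True
            self._sub = None     # drop the reference so its buffers can be collected
            self._completion()   # e.g. release sorter resources
        raise StopIteration

events = []
it = CompletionIterator(iter([1, 2, 3]), lambda: events.append("done"))
values = list(it)
```

The callback runs exactly once, and further calls to `next` just report exhaustion.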

For the following data

```scala
import org.apache.hadoop.io._
import org.apache.hadoop.io.compress._
import org.apache.commons.lang._
import org.apache.spark._

// generate 100M records of sample data
sc.makeRDD(1 to 1000, 1000)
  .flatMap(item => (1 to 100000)
    .map(i => new Text(RandomStringUtils.randomAlphanumeric(3).toLowerCase) -> new Text(RandomStringUtils.randomAlphanumeric(1024))))
  .saveAsSequenceFile("/tmp/random-strings", Some(classOf[GzipCodec]))
```

and the following job

```scala
import org.apache.hadoop.io._
import org.apache.spark._
import org.apache.spark.storage._

val rdd = sc.sequenceFile("/tmp/random-strings", classOf[Text], classOf[Text])
rdd
  .map(item => item._1.toString -> item._2.toString)
  .repartitionAndSortWithinPartitions(new HashPartitioner(1000))
  .coalesce(10, false)
  .count
```

... executed like the following

```bash

spark-shell \