### What changes were proposed in this pull request?

This PR (SPARK-31101) proposes to upgrade Janino to 3.0.16, which was released recently.

* Merged pull request janino-compiler/janino#114 "Grow the code for relocatables, and do fixup, and relocate".

Please see the commit log.

- https://github.com/janino-compiler/janino/commits/3.0.16

You can see the changelog at http://janino-compiler.github.io/janino/changelog.html, though the release note for Janino 3.0.16 is actually incorrect.

### Why are the changes needed?

We received a report of a user query failing because Janino throws an error while compiling the generated code. The issue is janino-compiler/janino#113. It contains the generated code, the symptom (error), and an analysis of the bug, so please refer to the link for more details.

Janino 3.0.16 contains the PR janino-compiler/janino#114, which enables Janino to compile the user's query successfully.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #27932 from HeartSaVioR/SPARK-31101-janino-3.0.16.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

`KafkaDelegationTokenSuite` has been ignored because it showed flaky behaviour. In this PR I've changed how the test is executed and turned it on again. This PR contains the following:

* The test runs in separate JVM in order to avoid modified security context

* The body of the test runs in `testRetry`, which retries it on failure

* Additional logs to analyse possible failures

* Enhanced clean-up code
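The retry bullet can be pictured with a small sketch. This is not Spark's `testRetry`; `run_with_retry`, `flaky_body`, and the default of two attempts are hypothetical names and values chosen for illustration.

```python
def run_with_retry(body, attempts=2):
    """Run `body`; if it raises, run it again, up to `attempts` times in total."""
    last_error = None
    for _ in range(attempts):
        try:
            return body()
        except Exception as error:  # a real harness would also log the failure
            last_error = error
    raise last_error


calls = {"count": 0}


def flaky_body():
    # Hypothetical test body that fails on its first run only.
    calls["count"] += 1
    if calls["count"] == 1:
        raise RuntimeError("transient failure")
    return "passed"


result = run_with_retry(flaky_body)
```

A bounded retry hides a single transient failure but still surfaces a test that fails on every attempt.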

### Why are the changes needed?

`KafkaDelegationTokenSuite` is currently ignored.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Executed the test in a loop 1k+ times on Jenkins (the failure is much harder to reproduce locally).

Closes #27877 from gaborgsomogyi/SPARK-30541.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This is a follow-up to SPARK-30715. It brings the Kubernetes client version back in sync between integration-tests and kubernetes/core.

### Why are the changes needed?

More than once, the Kubernetes client version has gone out of sync between the integration tests and kubernetes/core. This PR brings them back in sync and adds a comment to spare us similar follow-ups in the future.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually.

Closes #27948 from ScrapCodes/follow-up-spark-30715.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Hive 2.3+ supports the `getTablesByType` API, which provides an efficient way to get Hive tables of a specific type. We have the following mappings when using `HiveExternalCatalog`:

```
CatalogTableType.EXTERNAL => HiveTableType.EXTERNAL_TABLE
CatalogTableType.MANAGED => HiveTableType.MANAGED_TABLE
CatalogTableType.VIEW => HiveTableType.VIRTUAL_VIEW
```

Without this API, we need to achieve the goal by `getTables` + `getTablesByName` + `filter with type`.

This PR adds `getTablesByType` to `HiveShim`. For Hive versions that don't support this API, `UnsupportedOperationException` is thrown, and the calling logic should catch the exception and fall back to the filter solution mentioned above.
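The catch-and-fall-back flow described above might look roughly like the following sketch. The classes `OldHiveShim` and `NewHiveShim` and the function `list_tables_by_type` are hypothetical stand-ins written in plain Python, with `NotImplementedError` playing the role of `UnsupportedOperationException`; they are not the actual `HiveShim` code.

```python
class OldHiveShim:
    """Stand-in for a Hive version without getTablesByType (hypothetical)."""

    def __init__(self, tables):
        self.tables = tables  # maps table name -> table type

    def get_tables(self):
        return list(self.tables)

    def get_table_type(self, name):
        return self.tables[name]

    def get_tables_by_type(self, table_type):
        raise NotImplementedError("getTablesByType is not supported by this Hive version")


class NewHiveShim(OldHiveShim):
    """Stand-in for Hive 2.3+, where the lookup by type is a single call."""

    def get_tables_by_type(self, table_type):
        return [name for name, kind in self.tables.items() if kind == table_type]


def list_tables_by_type(shim, table_type):
    try:
        return shim.get_tables_by_type(table_type)
    except NotImplementedError:
        # Fallback: list every table, then filter by type on the caller's side.
        return [n for n in shim.get_tables() if shim.get_table_type(n) == table_type]


tables = {"t1": "MANAGED_TABLE", "v1": "VIRTUAL_VIEW", "e1": "EXTERNAL_TABLE"}
views_old = list_tables_by_type(OldHiveShim(tables), "VIRTUAL_VIEW")
views_new = list_tables_by_type(NewHiveShim(tables), "VIRTUAL_VIEW")
```

Both paths return the same table names; only the number of metastore round trips differs.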

Since the JDK11-related fix in Hive is not released yet, manual tests against Hive 2.3.7-SNAPSHOT were done by following the instructions of SPARK-29245.

### Why are the changes needed?

This API provides better usability and performance when we want to get a list of Hive tables of a specific type, for example `HiveTableType.VIRTUAL_VIEW`, which corresponds to `CatalogTableType.VIEW`.

### Does this PR introduce any user-facing change?

No, this is a support function.

### How was this patch tested?

Added tests in `VersionsSuite` and manually ran the JDK11 test with the following settings:

- Hive 2.3.6 Metastore on JDK8

- Hive 2.3.7-SNAPSHOT library built from source of the Hive 2.3 branch

- Spark built with Hive 2.3.7-SNAPSHOT on jdk-11.0.6

Closes #27952 from Eric5553/GetTableByType.

Authored-by: Eric Wu <492960551@qq.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Switch some level-1 BLAS routines (axpy, dot, scal(double, denseVector)) from the Java implementation to NativeBLAS when the vector size is greater than 256.

### Why are the changes needed?

In the current ML `BLAS.scala`, all level-1 routines are fixed to use the Java implementation. But NativeBLAS (Intel MKL, OpenBLAS) can bring up to an 11X performance improvement, based on a performance test that calls these methods directly. We should provide a way for users to take advantage of NativeBLAS for level-1 routines. Here we do it by switching these methods from f2jBLAS to NativeBLAS.

### Does this PR introduce any user-facing change?

Yes, the level-1 methods axpy, dot, and scal switch to NativeBLAS when the vector has more than `nativeL1Threshold` (fixed value 256) elements, and fall back to f2jBLAS if native BLAS is not properly configured on the system.
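The size-based dispatch can be sketched as follows. This is an illustration in plain Python, not the code in `BLAS.scala`: `choose_backend`, `dot_f2j`, and `dot_native` are made-up names, both "backends" compute the same sum, and the exact behavior at the boundary value of 256 is an assumption taken from the wording "more than".

```python
NATIVE_L1_THRESHOLD = 256  # fixed threshold named in the description


def dot_f2j(x, y):
    # Stand-in for the pure-Java (f2j) implementation.
    return sum(a * b for a, b in zip(x, y))


def dot_native(x, y):
    # Stand-in for a native BLAS call; here it simply computes the same result.
    return sum(a * b for a, b in zip(x, y))


def choose_backend(size, native_available=True):
    """Return the backend used for a level-1 routine on `size` elements."""
    if native_available and size > NATIVE_L1_THRESHOLD:
        return "native"
    return "f2j"


def dot(x, y, native_available=True):
    backend = choose_backend(len(x), native_available)
    return dot_native(x, y) if backend == "native" else dot_f2j(x, y)
```

Small vectors stay on the Java path because the overhead of crossing into native code can outweigh the gain for a handful of elements.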

### How was this patch tested?

Performance tests that call the level-1 routines directly.

Closes #27546 from yma11/SPARK-30773.

Lead-authored-by: yan ma <yan.ma@intel.com>

Co-authored-by: Ma Yan <yan.ma@intel.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Add ANOVA Selector

### Why are the changes needed?

Currently Spark only supports selection of categorical features, while there are many requirements for the selection of continuous distribution features.

https://github.com/apache/spark/pull/27679 added FValueSelector for continuous features and continuous labels.

This PR adds ANOVASelector for continuous features and categorical labels.

### Does this PR introduce any user-facing change?

Yes, add a new Selector.

### How was this patch tested?

add new test suites

Closes #27895 from huaxingao/anova.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

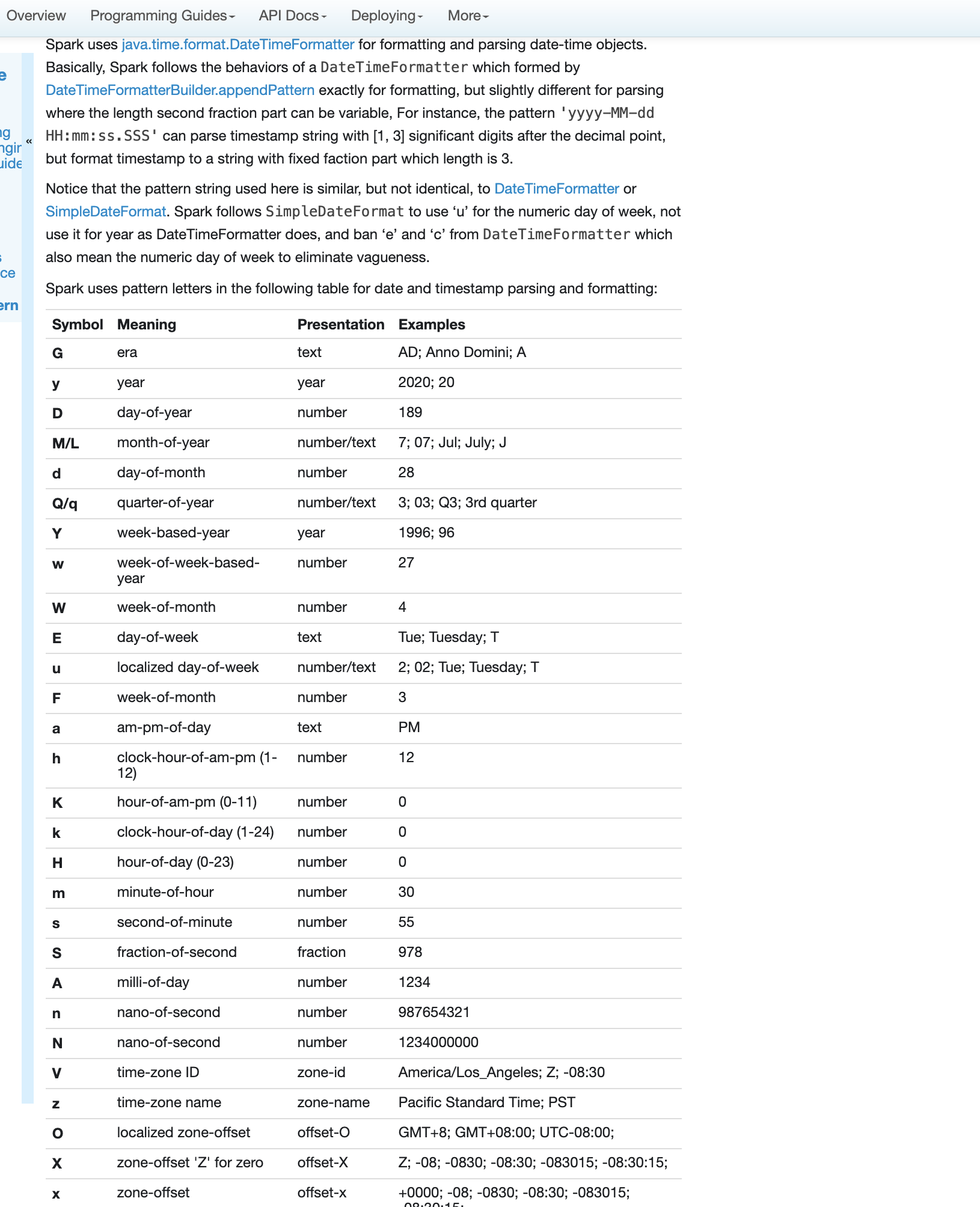

### What changes were proposed in this pull request?

Fix errors and missing parts for datetime pattern document

1. The pattern we use is similar to DateTimeFormatter and SimpleDateFormat but not identical, so the API docs should link to our own documentation instead of either of them.

2. Some pattern letters are missing.

3. Some pattern letters are explicitly banned: Set('A', 'c', 'e', 'n', 'N').

4. The second-fraction pattern uses different logic for parsing and formatting.

### Why are the changes needed?

fix and improve doc

### Does this PR introduce any user-facing change?

yes, new and updated doc

### How was this patch tested?

pass Jenkins

viewed locally with `jekyll serve`

Closes #27956 from yaooqinn/SPARK-31189.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. The tests added by #27953 are moved from `AvroLogicalTypeSuite` to `AvroSuite`.

2. Checking of the `rebaseDateTime` flag is moved out of function bodies.

### Why are the changes needed?

1. The tests are moved because they are not directly related to logical types.

2. Checking the flag outside function bodies should improve performance.
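The second point can be illustrated with a generic sketch of hoisting a flag check out of a per-value function. The names below (`rebase`, `make_reader_checking_inside`, `make_reader_checking_outside`) are hypothetical, and `rebase` is only a placeholder; this is not the Avro reader code.

```python
def rebase(days):
    # Placeholder for a real rebasing function; shifts the value for illustration only.
    return days + 1


def make_reader_checking_inside(rebase_enabled):
    def read(days):
        # The flag is tested again for every value that is read.
        return rebase(days) if rebase_enabled else days
    return read


def make_reader_checking_outside(rebase_enabled):
    # The flag is tested once, when the reader is built.
    if rebase_enabled:
        return lambda days: rebase(days)
    return lambda days: days


values = [-10, 0, 10]
inside_on = [make_reader_checking_inside(True)(v) for v in values]
outside_on = [make_reader_checking_outside(True)(v) for v in values]
outside_off = [make_reader_checking_outside(False)(v) for v in values]
```

Both variants return the same values; the second one simply avoids a branch on the hot path.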

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running Avro tests via the command `build/sbt avro/test`

Closes #27964 from MaxGekk/rebase-avro-datetime-followup.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to correct and re-use functions from `DateTimeUtils` for rebasing days before the cutover day `1582-10-15` in `org.apache.spark.sql.hive.DaysWritable`.

### Why are the changes needed?

0. Existing rebasing of days in `DaysWritable` is not correct.

1. To deduplicate code in `DaysWritable`

2. To use functions that are better tested and cross checked by loading dates/timestamps from Parquet/Avro files written by Spark 2.4.5

### Does this PR introduce any user-facing change?

This PR can introduce behavior change because the replaced code is different from the re-used code from `DateTimeUtils`.

### How was this patch tested?

By existing test suite, for instance `HiveOrcHadoopFsRelationSuite`.

Closes #27962 from MaxGekk/reuse-rebase-funcs.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The PR addresses compatibility with Spark 2.4 and earlier versions when reading/writing dates and timestamps via the **Avro** datasource. Previous releases are based on a hybrid calendar (Julian + Gregorian). Since Spark 3.0, the Proleptic Gregorian calendar is used by default, see SPARK-26651. In particular, the issue shows up for dates/timestamps before 1582-10-15, when the hybrid calendar switches between the Julian and Gregorian calendars. The same local date in different calendars is converted to a different number of days since the epoch 1970-01-01. For example, the 0001-01-01 date is converted to:

- -719164 in Julian calendar. Spark 2.4 saves the number as a value of DATE type into **Avro** files.

- -719162 in Proleptic Gregorian calendar. Spark 3.0 saves the number as a date value.
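A small sketch, using only the Python standard library rather than Spark code, that computes the day number of one local date under both calendars. The Julian Day Number formula is a standard one; the function names are made up for illustration. It reproduces the -719164 / -719162 pair for 0001-01-01 and shows a 6-day gap for 1001-01-01, the date used in the examples further down.

```python
from datetime import date

EPOCH_ORDINAL = date(1970, 1, 1).toordinal()
EPOCH_JDN = 2440588  # Julian Day Number of 1970-01-01


def proleptic_gregorian_days(year, month, day):
    """Days since 1970-01-01 when the fields are read in the Proleptic Gregorian calendar."""
    return date(year, month, day).toordinal() - EPOCH_ORDINAL


def julian_days(year, month, day):
    """Days since 1970-01-01 when the same fields are read in the Julian calendar."""
    a = (14 - month) // 12
    y = year + 4800 - a
    m = month + 12 * a - 3
    jdn = day + (153 * m + 2) // 5 + 365 * y + y // 4 - 32083
    return jdn - EPOCH_JDN


for fields in [(1, 1, 1), (1001, 1, 1)]:
    print(fields, julian_days(*fields), proleptic_gregorian_days(*fields))
# (1, 1, 1) -719164 -719162
# (1001, 1, 1) -353914 -353920
```

The 6-day gap for year 1001 matches the `1001-01-07` that appears when a day number written under the hybrid calendar is read back without rebasing.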

The PR proposes rebasing from/to Proleptic Gregorian calendar to the hybrid one under the SQL config:

```
spark.sql.legacy.avro.rebaseDateTime.enabled
```

which is `false` by default, meaning that rebasing is not performed unless the config is enabled.

The details of the implementation:

1. Re-use 2 methods of `DateTimeUtils` added by the PR https://github.com/apache/spark/pull/27915 for rebasing microseconds.

2. Re-use 2 methods of `DateTimeUtils` added by the PR https://github.com/apache/spark/pull/27915 for rebasing days.

3. Use `rebaseGregorianToJulianMicros()` and `rebaseGregorianToJulianDays()` while saving timestamps/dates to **Avro** files if the SQL config is on.

4. Use `rebaseJulianToGregorianMicros()` and `rebaseJulianToGregorianDays()` while loading timestamps/dates from **Avro** files if the SQL config is on.

5. The SQL config `spark.sql.legacy.avro.rebaseDateTime.enabled` controls conversions from/to dates, and timestamps of the `timestamp-millis`, `timestamp-micros` logical types.

### Why are the changes needed?

For backward compatibility with Spark 2.4 and earlier versions. The changes allow users to read dates/timestamps saved by previous versions and get the same result. Also, after the changes, users can enable rebasing on write and save dates/timestamps that can be loaded correctly by Spark 2.4 and earlier versions.

### Does this PR introduce any user-facing change?

Yes, the date `1001-01-01` saved by Spark 2.4.5 is interpreted by Spark 3.0.0-preview2 differently:

```scala
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
scala> spark.read.format("avro").load("/Users/maxim/tmp/before_1582/2_4_5_date_avro").show(false)
+----------+
|date      |
+----------+
|1001-01-07|
+----------+
```

After the changes:

```scala
scala> spark.conf.set("spark.sql.legacy.avro.rebaseDateTime.enabled", true)
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
scala> spark.read.format("avro").load("/Users/maxim/tmp/before_1582/2_4_5_date_avro").show(false)
+----------+
|date      |
+----------+
|1001-01-01|
+----------+
```

### How was this patch tested?

1. Added tests to `AvroLogicalTypeSuite` to check rebasing in read. The test reads back avro files saved by Spark 2.4.5 via:

```shell
$ export TZ="America/Los_Angeles"
```

```scala
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
scala> val df = Seq("1001-01-01").toDF("dateS").select($"dateS".cast("date").as("date"))
df: org.apache.spark.sql.DataFrame = [date: date]
scala> df.write.format("avro").save("/Users/maxim/tmp/before_1582/2_4_5_date_avro")
scala> val df2 = Seq("1001-01-01 01:02:03.123456").toDF("tsS").select($"tsS".cast("timestamp").as("ts"))
df2: org.apache.spark.sql.DataFrame = [ts: timestamp]
scala> df2.write.format("avro").save("/Users/maxim/tmp/before_1582/2_4_5_ts_avro")
scala> :paste
// Entering paste mode (ctrl-D to finish)
val timestampSchema = s"""
  | {
  |   "namespace": "logical",
  |   "type": "record",
  |   "name": "test",
  |   "fields": [
  |     {"name": "ts", "type": ["null", {"type": "long","logicalType": "timestamp-millis"}], "default": null}
  |   ]
  | }
  |""".stripMargin
// Exiting paste mode, now interpreting.
scala> df3.write.format("avro").option("avroSchema", timestampSchema).save("/Users/maxim/tmp/before_1582/2_4_5_ts_millis_avro")
```

2. Added the following tests to `AvroLogicalTypeSuite` to check rebasing of dates/timestamps (in microsecond and millisecond precision). The tests write rebased dates/timestamps, read them back with rebasing enabled/disabled, and compare the results:

- `rebasing microseconds timestamps in write`

- `rebasing milliseconds timestamps in write`

- `rebasing dates in write`

Closes #27953 from MaxGekk/rebase-avro-datetime.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

A few `CREATE TABLE` test cases make assumptions about the default value of `LEGACY_CREATE_HIVE_TABLE_BY_DEFAULT_ENABLED`. This PR (SPARK-31181) makes those assumptions explicit in the test cases themselves.

The configuration change was tested via https://github.com/apache/spark/pull/27894 during the discussion of SPARK-31136. This PR contains only the test-case part of that PR.

### Why are the changes needed?

This makes our test cases more robust with respect to the default value of `LEGACY_CREATE_HIVE_TABLE_BY_DEFAULT_ENABLED`. Even if we switch the conf value, that will be a one-line change with no test case changes.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #27946 from dongjoon-hyun/SPARK-EXPLICIT-TEST.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/26933

A fractional string such as "1.23" is not a valid integral format, so the cast should fail under ANSI mode.
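As a rough illustration of the stricter rule, the sketch below accepts only an optional sign followed by digits and rejects everything else. `cast_string_to_int_ansi` is a hypothetical name, and the error message is invented; this is not Spark's `Cast` implementation.

```python
import re

INTEGRAL = re.compile(r"[+-]?[0-9]+")


def cast_string_to_int_ansi(text):
    """Strict cast: an optional sign followed by digits, otherwise fail."""
    stripped = text.strip()
    if not INTEGRAL.fullmatch(stripped):
        raise ValueError(f"not a valid integral value: {text!r}")
    return int(stripped)


ok = cast_string_to_int_ansi("123")
try:
    cast_string_to_int_ansi("1.23")
    rejected = False
except ValueError:
    rejected = True
```

Failing loudly on "1.23" avoids silently dropping the fractional part.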

### Why are the changes needed?

correct the ANSI cast behavior from string to integral

### Does this PR introduce any user-facing change?

Yes under ANSI mode, but ANSI mode is off by default.

### How was this patch tested?

new test

Closes #27957 from cloud-fan/ansi.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Spark SQL's whole-stage codegen (WSCG) supports dumping the generated code to help with debugging. One way to get the generated code is through `df.queryExecution.debug.codegen`, or SQL `EXPLAIN CODEGEN` statement.

The generated code is currently printed without specific ordering, which can make debugging a bit annoying. This PR makes a minor improvement to sort the codegen dump by the `codegenStageId`, ascending.

After this change, the following query:

```scala
spark.range(10).agg(sum('id)).queryExecution.debug.codegen
```

will always dump the generated code in a natural, stable order. A version of this example with shorter output is:

```
spark.range(10).agg(sum('id)).queryExecution.debug.codegenToSeq.map(_._1).foreach(println)
*(1) HashAggregate(keys=[], functions=[partial_sum(id#8L)], output=[sum#15L])
+- *(1) Range (0, 10, step=1, splits=16)

*(2) HashAggregate(keys=[], functions=[sum(id#8L)], output=[sum(id)#12L])
+- Exchange SinglePartition, true, [id=#30]
   +- *(1) HashAggregate(keys=[], functions=[partial_sum(id#8L)], output=[sum#15L])
      +- *(1) Range (0, 10, step=1, splits=16)
```

The number of codegen stages within a single SQL query tends to be very small, most likely < 50, so the overhead of adding the sorting shouldn't be significant.
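The ordering itself amounts to a sort on the stage id. In the sketch below, the tuples `(stage_id, subtree, code)` are a simplified, hypothetical shape for the dump entries, not the actual structure returned by `codegenToSeq`.

```python
# Entries arrive in arbitrary order; each carries its codegen stage id.
dump = [
    (2, "*(2) HashAggregate(...)", "/* generated code for stage 2 */"),
    (1, "*(1) HashAggregate(...)", "/* generated code for stage 1 */"),
]

# Sort ascending by stage id so the output is stable across runs.
ordered = sorted(dump, key=lambda entry: entry[0])
stage_ids = [stage_id for stage_id, _, _ in ordered]
```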

### Why are the changes needed?

Minor improvement to aid WSCG debugging.

### Does this PR introduce any user-facing change?

No user-facing change for end-users; minor change for developers who debug WSCG generated code.

### How was this patch tested?

Manually tested the output; all other tests still pass.

Closes #27955 from rednaxelafx/codegen.

Authored-by: Kris Mok <kris.mok@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

The PR addresses compatibility with Spark 2.4 and earlier versions when reading/writing dates and timestamps via the Parquet datasource. Previous releases are based on a hybrid calendar (Julian + Gregorian). Since Spark 3.0, the Proleptic Gregorian calendar is used by default, see SPARK-26651. In particular, the issue shows up for dates/timestamps before 1582-10-15, when the hybrid calendar switches between the Julian and Gregorian calendars. The same local date in different calendars is converted to a different number of days since the epoch 1970-01-01. For example, the 0001-01-01 date is converted to:

- -719164 in Julian calendar. Spark 2.4 saves the number as a value of DATE type into parquet.

- -719162 in Proleptic Gregorian calendar. Spark 3.0 saves the number as a date value.

According to the Parquet spec, Parquet timestamps of the `TIMESTAMP_MILLIS` and `TIMESTAMP_MICROS` output types and Parquet dates should be based on the Proleptic Gregorian calendar, but `INT96` timestamps should be stored as Julian days. Since version 3.0, Spark conforms to the spec, but for backward compatibility with previous versions, the PR proposes rebasing from/to the Proleptic Gregorian calendar to the hybrid one under the SQL config:

```
spark.sql.legacy.parquet.rebaseDateTime.enabled
```

which is `false` by default, meaning that rebasing is not performed unless the config is enabled.

The details of the implementation:

1. Added 2 methods to `DateTimeUtils` for rebasing microseconds. `rebaseGregorianToJulianMicros()` builds a local timestamp in the Proleptic Gregorian calendar, extracts the date-time fields `year`, `month`, ..., `second fraction` from the local timestamp, and uses them to build another local timestamp based on the hybrid calendar (using the `java.util.Calendar` API). After that, it calculates the number of microseconds since the epoch using the resulting local timestamp. The function performs the conversion via the system JVM time zone for compatibility with Spark 2.4 and earlier versions. The `rebaseJulianToGregorianMicros()` function does the reverse conversion.

2. Added 2 methods to `DateTimeUtils` for rebasing days. `rebaseGregorianToJulianDays()` builds a local date from the passed number of days since the epoch in the Proleptic Gregorian calendar, interprets the resulting date as a local date in the hybrid calendar, and gets the number of days since the epoch from that local date. The conversion is performed via the `UTC` time zone because the conversion is independent of time zones, and `UTC` is selected to avoid rounding issues when casting days to milliseconds and back. The `rebaseJulianToGregorianDays()` function does the reverse conversion.

3. Use `rebaseGregorianToJulianMicros()` and `rebaseGregorianToJulianDays()` while saving timestamps/dates to parquet files if the SQL config is on.

4. Use `rebaseJulianToGregorianMicros()` and `rebaseJulianToGregorianDays()` while loading timestamps/dates from parquet files if the SQL config is on.

5. The SQL config `spark.sql.legacy.parquet.rebaseDateTime.enabled` controls conversions from/to dates, timestamps of `TIMESTAMP_MILLIS`, `TIMESTAMP_MICROS`, see the SQL config `spark.sql.parquet.outputTimestampType`.

6. The rebasing is always performed for `INT96` timestamps, independently from `spark.sql.legacy.parquet.rebaseDateTime.enabled`.

7. Supported the vectorized parquet reader, see the SQL config `spark.sql.parquet.enableVectorizedReader`.
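The day-rebasing idea in item 2 (take the local date fields in one calendar and re-read the same fields in the other) can be sketched in plain Python. This is not the `DateTimeUtils` code: the function names are snake_case stand-ins, the Julian Day Number formulas are standard ones, and the sketch ignores edge cases such as the ten dates skipped at the 1582 cutover and Julian-only leap days.

```python
from datetime import date

EPOCH_ORDINAL = date(1970, 1, 1).toordinal()
EPOCH_JDN = 2440588  # Julian Day Number of 1970-01-01
# Days since the epoch of 1582-10-15, the first Gregorian day of the hybrid calendar.
CUTOVER_DAYS = date(1582, 10, 15).toordinal() - EPOCH_ORDINAL


def julian_fields_to_days(year, month, day):
    a = (14 - month) // 12
    y = year + 4800 - a
    m = month + 12 * a - 3
    return day + (153 * m + 2) // 5 + 365 * y + y // 4 - 32083 - EPOCH_JDN


def days_to_julian_fields(days):
    c = days + EPOCH_JDN + 32082
    d = (4 * c + 3) // 1461
    e = c - (1461 * d) // 4
    m = (5 * e + 2) // 153
    return d - 4800 + m // 10, m + 3 - 12 * (m // 10), e - (153 * m + 2) // 5 + 1


def rebase_gregorian_to_julian_days(days):
    if days >= CUTOVER_DAYS:
        return days  # both calendars agree from the cutover on
    local = date.fromordinal(days + EPOCH_ORDINAL)  # fields in Proleptic Gregorian
    return julian_fields_to_days(local.year, local.month, local.day)


def rebase_julian_to_gregorian_days(days):
    if days >= CUTOVER_DAYS:
        return days
    year, month, day = days_to_julian_fields(days)  # fields in the Julian calendar
    return date(year, month, day).toordinal() - EPOCH_ORDINAL
```

For the local date 1001-01-01, the first function maps -353920 to -353914 and the second maps it back, which is the 6-day shift visible in the read examples.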

### Why are the changes needed?

- For backward compatibility with Spark 2.4 and earlier versions. The changes allow users to read dates/timestamps saved by previous versions and get the same result. Also, after the changes, users can enable rebasing on write and save dates/timestamps that can be loaded correctly by Spark 2.4 and earlier versions.

- It fixes the bug of incorrect saving/loading timestamps of the `INT96` type

### Does this PR introduce any user-facing change?

Yes, the timestamp `1001-01-01 01:02:03.123456` saved by Spark 2.4.5 as `TIMESTAMP_MICROS` is interpreted by Spark 3.0.0-preview2 differently:

```scala
scala> spark.read.parquet("/Users/maxim/tmp/before_1582/2_4_5_ts_micros").show(false)
+--------------------------+
|ts                        |
+--------------------------+
|1001-01-07 11:32:20.123456|
+--------------------------+
```

After the changes:

```scala
scala> spark.conf.set("spark.sql.legacy.parquet.rebaseDateTime.enabled", true)
scala> spark.read.parquet("/Users/maxim/tmp/before_1582/2_4_5_ts_micros").show(false)
+--------------------------+
|ts                        |
+--------------------------+
|1001-01-01 01:02:03.123456|
+--------------------------+
```

### How was this patch tested?

1. Added tests to `ParquetIOSuite` to check rebasing in read for regular reader and vectorized parquet reader. The test reads back parquet files saved by Spark 2.4.5 via:

```shell
$ export TZ="America/Los_Angeles"
```

```scala
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
scala> val df = Seq("1001-01-01").toDF("dateS").select($"dateS".cast("date").as("date"))
df: org.apache.spark.sql.DataFrame = [date: date]
scala> df.write.parquet("/Users/maxim/tmp/before_1582/2_4_5_date")
scala> val df = Seq("1001-01-01 01:02:03.123456").toDF("tsS").select($"tsS".cast("timestamp").as("ts"))
df: org.apache.spark.sql.DataFrame = [ts: timestamp]
scala> spark.conf.set("spark.sql.parquet.outputTimestampType", "TIMESTAMP_MICROS")
scala> df.write.parquet("/Users/maxim/tmp/before_1582/2_4_5_ts_micros")
scala> spark.conf.set("spark.sql.parquet.outputTimestampType", "TIMESTAMP_MILLIS")
scala> df.write.parquet("/Users/maxim/tmp/before_1582/2_4_5_ts_millis")
scala> spark.conf.set("spark.sql.parquet.outputTimestampType", "INT96")
scala> df.write.parquet("/Users/maxim/tmp/before_1582/2_4_5_ts_int96")
```

2. Manually check the write code path. Save date/timestamps (TIMESTAMP_MICROS, TIMESTAMP_MILLIS, INT96) by Spark 3.1.0-SNAPSHOT (after the changes):

```bash
$ export TZ="America/Los_Angeles"
```

```scala
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
scala> spark.conf.set("spark.sql.legacy.parquet.rebaseDateTime.enabled", true)
scala> spark.conf.set("spark.sql.parquet.outputTimestampType", "TIMESTAMP_MICROS")
scala> val df = Seq(("1001-01-01", "1001-01-01 01:02:03.123456")).toDF("dateS", "tsS").select($"dateS".cast("date").as("d"), $"tsS".cast("timestamp").as("ts"))
df: org.apache.spark.sql.DataFrame = [d: date, ts: timestamp]
scala> df.write.parquet("/Users/maxim/tmp/before_1582/3_0_0_micros")
scala> spark.read.parquet("/Users/maxim/tmp/before_1582/3_0_0_micros").show(false)
+----------+--------------------------+
|d         |ts                        |
+----------+--------------------------+
|1001-01-01|1001-01-01 01:02:03.123456|
+----------+--------------------------+
```

Read the saved date/timestamp by Spark 2.4.5:

```scala
scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
scala> spark.read.parquet("/Users/maxim/tmp/before_1582/3_0_0_micros").show(false)
+----------+--------------------------+
|d         |ts                        |
+----------+--------------------------+
|1001-01-01|1001-01-01 01:02:03.123456|
+----------+--------------------------+
```

Closes #27915 from MaxGekk/rebase-parquet-datetime.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This PR proposes that we change the return type of the `BarrierTaskContext.allGather` method to `Array[String]` instead of `ArrayBuffer[String]` since it is immutable. Based on discussion in #27640. cc zhengruifeng srowen

Closes #27951 from sarthfrey/all-gather-api.

Authored-by: sarthfrey-db <sarth.frey@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR prevents the execution of V2 DataSource exec nodes multiple times when `collect()` is called on them. For V1 DataSources, commands would be executed as a RunnableCommand, which would cache the result as part of the `ExecutedCommandExec` node. We extend `V2CommandExec` for all the data writing commands so that they only get executed once as well.
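The "execute once, then reuse the cached result" behavior can be sketched generically. `WriteCommand` below is a hypothetical class in plain Python, not `V2CommandExec`; it only shows why caching prevents a second `collect()` from repeating the side effect.

```python
class WriteCommand:
    """Hypothetical command node whose side effect must run only once."""

    def __init__(self, sink, rows):
        self.sink = sink
        self.rows = rows
        self._executed = False
        self._result = None

    def _run(self):
        self.sink.extend(self.rows)  # the side effect, e.g. an insert
        return []                    # write commands return no rows

    def execute(self):
        # Run the command on first use and cache whatever it returned.
        if not self._executed:
            self._result = self._run()
            self._executed = True
        return self._result

    def collect(self):
        return self.execute()


sink = []
command = WriteCommand(sink, [1, 2])
command.collect()
command.collect()  # served from the cached result; nothing is written again
```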

### Why are the changes needed?

Otherwise, calling `collect()` on a SQL command that inserts data or creates a table executes the command multiple times.

### Does this PR introduce any user-facing change?

Fixes a bug

### How was this patch tested?

Unit tests

Closes #27941 from brkyvz/doubleInsert.

Authored-by: Burak Yavuz <brkyvz@gmail.com>

Signed-off-by: Burak Yavuz <brkyvz@gmail.com>

### What changes were proposed in this pull request?

A follow-up of https://github.com/apache/spark/pull/27936 to update the documentation.

### Why are the changes needed?

correct document

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27950 from cloud-fan/null.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

The pattern `''` means a literal `'`:

```sql
select date_format(to_timestamp("11111904-01-23 15:02:01", 'y-MM-dd HH:mm:ss'), "y-MM-dd HH:mm:ss''SSSSSSSSS");
5377-02-14 06:27:19'000000519
```

Commit 0946a9514f missed this case, and this PR adds it back.

### Why are the changes needed?

bugfix

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

add ut

Closes #27949 from yaooqinn/SPARK-31150-2.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Prepend `-` to the comparison result instead of creating a new reverse comparator for each comparison when sorting in DESC order in `InterpretedOrdering`.

### Why are the changes needed?

Currently, we create a new reverse comparator for each comparison in `InterpretedOrdering`, which can generate lots of small, short-lived objects and put pressure on the JVM when there is plenty of data.
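The idea of flipping the sign rather than allocating a reversed comparator can be shown with a small sketch. `compare_rows` is a hypothetical function in plain Python, not the `InterpretedOrdering` code.

```python
from functools import cmp_to_key


def compare_rows(left, right, ascending):
    """Compare two rows column by column; ascending[i] is the direction of column i."""
    for l, r, asc in zip(left, right, ascending):
        result = (l > r) - (l < r)  # -1, 0 or 1
        if result != 0:
            # For DESC, negate the result instead of building a reversed comparator.
            return result if asc else -result
    return 0


rows = [(1, "a"), (2, "b"), (1, "c")]
# First column DESC, second column ASC.
ordered = sorted(rows, key=cmp_to_key(lambda l, r: compare_rows(l, r, [False, True])))
```

Negating an integer allocates nothing per comparison, which is the point of the change.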

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes #27938 from Ngone51/reverse_comparator.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The meaning of 'u' was day number of the week in SimpleDateFormat; it was changed to year in DateTimeFormatter. We now keep the old meaning of 'u' by substituting 'u' with 'e' internally and using DateTimeFormatter to parse the pattern string. In DateTimeFormatter, 'e' and 'c' also represent day-of-week, e.g.

```sql
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd uuuu');
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd uuee');
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd eeee');
```

Because of the substitution, they all silently become `.... eeee`. Users may be confused about their meanings, so we should mark them as illegal pattern characters to stay the same as before.

This PR moves the method `convertIncompatiblePattern` from `DateTimeUtils` to the `DateTimeFormatterHelper` object, since it is quite specific to the `DateTimeFormatterHelper` class.

It also adds checks for the 'e' and 'c' characters in this method.

Besides, `convertIncompatiblePattern` has a bug that loses the last `'` if the pattern ends with it; this PR fixes that too, e.g.

```sql
spark-sql> select date_format(timestamp "2019-10-06", "yyyy-MM-dd'S'");
20/03/18 11:19:45 ERROR SparkSQLDriver: Failed in [select date_format(timestamp "2019-10-06", "yyyy-MM-dd'S'")]
java.lang.IllegalArgumentException: Pattern ends with an incomplete string literal: uuuu-MM-dd'S
spark-sql> select to_timestamp("2019-10-06S", "yyyy-MM-dd'S'");
NULL
```
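The rules described above (substitute 'u' with 'e' outside quoted literals, reject user-supplied 'e' and 'c', and keep a trailing quote) can be sketched with a character-by-character walk. This is an illustrative Python function, not the Scala `convertIncompatiblePattern`; it covers only the rules stated in this description, and the error message is made up.

```python
ILLEGAL_LETTERS = {"e", "c"}


def convert_incompatible_pattern(pattern):
    out = []
    in_literal = False
    for ch in pattern:
        if ch == "'":
            # Quotes are always copied, so a trailing quote cannot be lost.
            in_literal = not in_literal
            out.append(ch)
        elif in_literal:
            out.append(ch)  # text inside '...' is kept verbatim
        elif ch in ILLEGAL_LETTERS:
            raise ValueError(f"Illegal pattern character: {ch}")
        elif ch == "u":
            out.append("e")  # keep the old day-of-week meaning of 'u'
        else:
            out.append(ch)
    return "".join(out)


kept_quote = convert_incompatible_pattern("yyyy-MM-dd'S'")
substituted = convert_incompatible_pattern("yyyy-MM-dd u")
```

Walking the string instead of splitting on `'` sidesteps the class of bug where a trailing delimiter disappears.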

### Why are the changes needed?

avoid vagueness

bug fix

### Does this PR introduce any user-facing change?

no, these are not exposed yet

### How was this patch tested?

add ut

Closes #27939 from yaooqinn/SPARK-31176.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

```
<extract expression> ::= EXTRACT <left paren> <extract field> FROM <extract source> <right paren>
<extract source> ::= <datetime value expression> | <interval value expression>
```

We currently support only datetime values as the extract source for the `extract` expression, but its alternative function `date_part` supports both datetime and interval.

This PR adds support for interval values as the extract source of the `extract` expression.

### Why are the changes needed?

For ANSI compliance and the semantic consistency between extract and `date_part`, we support intervals for extract expressions.

### Does this PR introduce any user-facing change?

yes, in the `extract(abc from xyz)` expression, the `xyz` can be intervals

### How was this patch tested?

add unit tests

Closes #27876 from yaooqinn/SPARK-31119.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This PR solves the same issue as [pr27919](https://github.com/apache/spark/pull/27919), but this one changes the file names based on a comment from the previous PR.

### What changes were proposed in this pull request?

Make some file names the same as the class names in the R package.

### Why are the changes needed?

Make the file names consistent.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

run `./R/run-tests.sh`

Closes #27940 from kevinyu98/spark-30954-r-v2.

Authored-by: Qianyang Yu <qyu@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Use `listLocatedStatus` when `InMemoryFileIndex` is listing files from a `ViewFileSystem`, which should delegate to that of `DistributedFileSystem`.

### Why are the changes needed?

When `ViewFileSystem` is used to manage several `DistributedFileSystem`, the change will improve performance of file listing, especially when there are many files.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27801 from manuzhang/spark-31047.

Authored-by: manuzhang <owenzhang1990@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Change what we consider a deleted pod to not include "Terminating"

### Why are the changes needed?

If we get a new snapshot while a pod is in the process of being cleaned up we shouldn't delete the executor until it is fully terminated.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

This should be covered by the decommissioning tests in that they currently are flaky because we sometimes delete the executor instead of allowing it to decommission all the way.

I also ran this in a loop locally ~80 times with the only failures being the PV suite because of unrelated minikube mount issues.

Closes #27905 from holdenk/SPARK-31125-Processing-state-snapshots-incorrect.

Authored-by: Holden Karau <hkarau@apple.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Make `size(null)` return null under ANSI mode, regardless of the `spark.sql.legacy.sizeOfNull` config.

### Why are the changes needed?

In https://github.com/apache/spark/pull/27834, we changed the result of `size(null)` to -1 to match the 2.4 behavior and avoid breaking changes.

However, the "return -1" behavior is error-prone when used with aggregate functions. The current ANSI mode controls a set of "better behaviors" such as failing on overflow. We don't enable these "better behaviors" by default because they are too breaking. The "return null" behavior of `size(null)` is a good fit for ANSI mode.
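To illustrate why the -1 result is error-prone with aggregates, here is a minimal plain-Java sketch. It is not Spark code: the class and method names are hypothetical, and `sumSkippingNulls` only mimics the SQL convention that `sum` ignores null inputs.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Objects;
import java.util.stream.Collectors;

final class SizeOfNullSketch {
    // Legacy-style size: a null collection is reported as -1.
    static Integer legacySize(List<?> xs) {
        return xs == null ? -1 : xs.size();
    }

    // ANSI-style size: a null collection yields null.
    static Integer ansiSize(List<?> xs) {
        return xs == null ? null : xs.size();
    }

    // Mimics SQL sum(): null inputs are skipped rather than added.
    static int sumSkippingNulls(List<Integer> values) {
        return values.stream().filter(Objects::nonNull).mapToInt(Integer::intValue).sum();
    }

    static int totalLegacy(List<List<Integer>> rows) {
        return sumSkippingNulls(
            rows.stream().map(SizeOfNullSketch::legacySize).collect(Collectors.toList()));
    }

    static int totalAnsi(List<List<Integer>> rows) {
        return sumSkippingNulls(
            rows.stream().map(SizeOfNullSketch::ansiSize).collect(Collectors.toList()));
    }

    public static void main(String[] args) {
        // Three rows: a 2-element array, a null array, and a 1-element array.
        List<List<Integer>> rows =
            Arrays.asList(Arrays.asList(1, 2), null, Arrays.asList(3));
        System.out.println("legacy total = " + totalLegacy(rows)); // -1 is silently added
        System.out.println("ansi total   = " + totalAnsi(rows));   // null is skipped
    }
}
```

With the legacy encoding the null row subtracts one from the total, while the null encoding leaves the total equal to the number of real elements.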

### Does this PR introduce any user-facing change?

No, as ANSI mode is off by default.

### How was this patch tested?

New tests.

Closes #27936 from cloud-fan/null.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This pull request fixes an issue with rolling event logs. The rolling event log directory is created ignoring the dfs umask setting. This allows the history server to prune old rolling logs when run as the group owner of the event log folder.

### Why are the changes needed?

For non-rolling event logs, log files are created ignoring the umask setting by calling setPermission after creating the file. The default umask of 022 currently causes rolling log directories to be created without group write permissions, preventing the history server from pruning logs of applications not run as the same user as the history server. This adds the same behavior for rolling event logs so users don't need to worry about the umask setting causing different behavior.
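The permission arithmetic behind this can be sketched in a few lines of Java. This is only an illustration of how a umask filters a requested mode; the class and method names are hypothetical, and the requested mode of 770 is taken from the test notes of this PR.

```java
final class UmaskSketch {
    // Mode a new entry ends up with when the requested mode is filtered by the umask.
    static int applyUmask(int requestedMode, int umask) {
        return requestedMode & ~umask;
    }

    // True if the group-write bit (octal 020) is set.
    static boolean groupCanWrite(int mode) {
        return (mode & 0020) != 0;
    }

    static String octal(int mode) {
        return String.format("%03o", mode);
    }

    public static void main(String[] args) {
        int requested = 0770;
        int umask = 0022;

        // Creating the directory with the umask applied drops the group-write bit.
        int created = applyUmask(requested, umask);
        System.out.println(octal(created) + " group write: " + groupCanWrite(created));

        // Setting the permission explicitly afterwards bypasses the umask.
        System.out.println(octal(requested) + " group write: " + groupCanWrite(requested));
    }
}
```

A directory requested as 770 under umask 022 ends up as 750, which is why a history server running as a different user in the same group cannot prune it unless the permission is set explicitly after creation.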

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually. The folder is created with the correct 770 permission. The status file is still affected by the umask setting, but that doesn't stop the folder from being deleted by the history server. I'm not sure if that causes any other issues. I'm not sure how to test something involving a Hadoop setting.

Closes #27764 from Kimahriman/bug/rolling-log-permissions.

Authored-by: Adam Binford <adam.binford@radiantsolutions.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

In the Spark CLI, we create a Hive `CliSessionState`, which does not load `hive-site.xml`. As a result, the configurations in `hive-site.xml` do not take effect as they do in other Spark-Hive integration apps.

Also, the warehouse directory is not picked correctly. If the `default` database does not exist, the `CliSessionState` creates one the first time it talks to the metastore. The `Location` of the default DB will be neither the value of `spark.sql.warehouse.dir` nor the user-specified value of `hive.metastore.warehouse.dir`, but the default value of `hive.metastore.warehouse.dir`, which is always `/user/hive/warehouse`.

### Why are the changes needed?

To fix a bug so that the Spark SQL CLI picks up the right configurations.

### Does this PR introduce any user-facing change?

Yes. A non-existent default database will be created in the location specified by the user via `spark.sql.warehouse.dir` or `hive.metastore.warehouse.dir`, or in the default value of `spark.sql.warehouse.dir` if neither is specified.

### How was this patch tested?

Added CLI unit tests.

Closes #27933 from yaooqinn/SPARK-31170.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds support for parsing timestamp values with variable-length second-fraction parts.

For example, 'yyyy-MM-dd HH:mm:ss.SSSSSS[zzz]' can parse timestamps whose second fraction has 0 to 6 digits, but fails for 7 or more digits.

```sql
select to_timestamp(v, 'yyyy-MM-dd HH:mm:ss.SSSSSS[zzz]') from values
 ('2019-10-06 10:11:12.'),
 ('2019-10-06 10:11:12.0'),
 ('2019-10-06 10:11:12.1'),
 ('2019-10-06 10:11:12.12'),
 ('2019-10-06 10:11:12.123UTC'),
 ('2019-10-06 10:11:12.1234'),
 ('2019-10-06 10:11:12.12345CST'),
 ('2019-10-06 10:11:12.123456PST') t(v)

2019-10-06 03:11:12.123
2019-10-06 08:11:12.12345
2019-10-06 10:11:12
2019-10-06 10:11:12
2019-10-06 10:11:12.1
2019-10-06 10:11:12.12
2019-10-06 10:11:12.1234
2019-10-06 10:11:12.123456

select to_timestamp('2019-10-06 10:11:12.1234567PST', 'yyyy-MM-dd HH:mm:ss.SSSSSS[zzz]')

NULL
```

Since 3.0, we use the Java 8 time API to parse and format timestamp values. When we create the `DateTimeFormatter`, we first use `appendPattern` to create the builder, where the 'S..S' part is parsed as a fixed-length fraction (= `'S..S'.length`). This fits formatting but is too strict for parsing, because trailing zeros are very likely to be truncated.
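The difference between a fixed-length and a variable-length fraction can be reproduced with `java.time` alone. The following is a minimal sketch, not the code from this PR: the class name and `parseOrNull` helper are hypothetical, the zone part (`[zzz]`) is left out for brevity, and `appendFraction` with a minimum width of 0 is one assumed way to accept 0 to 6 digits.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.format.DateTimeParseException;
import java.time.temporal.ChronoField;

final class VariableFractionSketch {
    // 'SSSSSS' built via a pattern requires exactly six fraction digits.
    static final DateTimeFormatter FIXED =
        DateTimeFormatter.ofPattern("uuuu-MM-dd HH:mm:ss.SSSSSS");

    // Same layout, but the fraction may have between 0 and 6 digits.
    static final DateTimeFormatter FLEXIBLE = new DateTimeFormatterBuilder()
        .appendPattern("uuuu-MM-dd HH:mm:ss")
        .appendLiteral('.')
        .appendFraction(ChronoField.NANO_OF_SECOND, 0, 6, false)
        .toFormatter();

    // Returns null instead of throwing, similar in spirit to to_timestamp returning NULL.
    static LocalDateTime parseOrNull(String text, DateTimeFormatter formatter) {
        try {
            return LocalDateTime.parse(text, formatter);
        } catch (DateTimeParseException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        String[] inputs = {
            "2019-10-06 10:11:12.",
            "2019-10-06 10:11:12.12",
            "2019-10-06 10:11:12.123456",
            "2019-10-06 10:11:12.1234567"
        };
        for (String in : inputs) {
            System.out.println(in + " fixed=" + parseOrNull(in, FIXED)
                + " flexible=" + parseOrNull(in, FLEXIBLE));
        }
    }
}
```

In this sketch the fixed formatter only accepts the six-digit input, while the flexible one accepts everything up to six digits and still rejects the seven-digit value.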

### Why are the changes needed?

To improve timestamp parsing and make it more compatible with 2.4.x.

### Does this PR introduce any user-facing change?

No, the related changes are newly added.

### How was this patch tested?

Added unit tests.

Closes #27906 from yaooqinn/SPARK-31150.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Run the `OptimizeSkewedJoin` rule after the `CoalesceShufflePartitions` rule.

### Why are the changes needed?

Remove duplicated coalescing code in `OptimizeSkewedJoin`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing tests

Closes #27893 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

For a bucketed table, when deciding the output partitioning, the result is `UnknownPartitioning` if the output doesn't contain all bucket columns. But when generating the RDD, Spark currently uses `createBucketedReadRDD` because it doesn't check whether the output contains all bucket columns. So the RDD and its output partitioning are inconsistent.

### Why are the changes needed?

To fix a bug.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Modified existing tests.

Closes #27924 from wzhfy/inconsistent_rdd_partitioning.

Authored-by: Zhenhua Wang <wzh_zju@163.com>

Signed-off-by: Zhenhua Wang <wzh_zju@163.com>

### What changes were proposed in this pull request?

This PR adds the proxy user given on the spark-submit command to the childArgs, so that it can be retrieved in the KubernetesAplication and added to the driver container args.

### Why are the changes needed?

The proxy user given to spark-submit doesn't work in a Kubernetes environment because the `--proxy-user` argument is not added to the driver container. When I added it manually to the Pod definition, it worked just fine.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Tests were added.

Closes #27422 from PedroRossi/SPARK-25355.

Authored-by: Pedro Rossi <pgrr@cin.ufpe.br>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

After https://github.com/apache/spark/pull/27542, `map()` returns `map<null, null>` instead of `map<string, string>`. However, this breaks queries which union `map()` and other maps.

The reason is, `TypeCoercion` rules and `Cast` think it's illegal to cast null type map key to other types, as it makes the key nullable, but it's actually legal. This PR fixes it.

### Why are the changes needed?

To avoid breaking queries.

### Does this PR introduce any user-facing change?

Yes, now some queries that work in 2.x can work in 3.0 as well.

### How was this patch tested?

new test

Closes #27926 from cloud-fan/bug.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Remove unused variables.

### Why are the changes needed?

To clean up unused variables.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing test suites.

Closes #27922 from zhengruifeng/test_cleanup.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

- Adds the following overloaded variants to Scala `o.a.s.sql.functions`:
  - `percentile_approx(e: Column, percentage: Array[Double], accuracy: Long): Column`
  - `percentile_approx(columnName: String, percentage: Array[Double], accuracy: Long): Column`
  - `percentile_approx(e: Column, percentage: Double, accuracy: Long): Column`
  - `percentile_approx(columnName: String, percentage: Double, accuracy: Long): Column`
  - `percentile_approx(e: Column, percentage: Seq[Double], accuracy: Long): Column` (primarily for Python interop).
  - `percentile_approx(columnName: String, percentage: Seq[Double], accuracy: Long): Column`

- Adds `percentile_approx` to `pyspark.sql.functions`.

- Adds `percentile_approx` function to SparkR.

### Why are the changes needed?

Currently we support `percentile_approx` only in SQL expressions. This is inconvenient and makes the function relatively unknown.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New unit tests for SparkR and PySpark.

For now there are no additional tests for the Scala API: `ApproximatePercentile` is well tested, and the Python (including docstrings) and R tests provide additional coverage, so more tests seem unnecessary.

Closes #27278 from zero323/SPARK-30569.

Lead-authored-by: zero323 <mszymkiewicz@gmail.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

As discovered here https://github.com/apache/spark/pull/27910#issuecomment-599027190, pydocstyle tests were not running anywhere (not on Jenkins; not on GitHub).

~This PR enables those tests.~

It also seems like a [large hill to climb](https://github.com/apache/spark/pull/27912#issuecomment-599167117) to enable any meaningful checks, so we're going to just rip pydocstyle out for now.

### Why are the changes needed?

Presumably, we defined those doc style tests because we care about whatever it is they enforce. Since we're not actually testing anything, though, it's better to clear the cruft.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Will check the GitHub workflow logs on this PR.

Closes #27912 from nchammas/SPARK-31155-pydocstyle.

Authored-by: Nicholas Chammas <nicholas.chammas@liveramp.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This fixes #26956.

Wrap `fs.getFileStatus(path)` in a try-catch within the acl/permission handling, in case the path doesn't exist.

### Why are the changes needed?

`truncate table` may fail to re-create the path in case of interruption or some other failure. As a result, the next `truncate table` on the same table with acl/permission fails with `FileNotFoundException`. This is also a behavior change compared to previous Spark versions, which could still `truncate table` successfully even if the path didn't exist.
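The pattern of tolerating a missing path when reading its status can be sketched with the JDK's `java.nio.file` API. This is an analogy only: Spark uses Hadoop's `FileSystem.getFileStatus`, and the class and method names below are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.NoSuchFileException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.BasicFileAttributes;
import java.util.Optional;

final class MissingPathSketch {
    // Returns the attributes of the path, or empty if the path does not exist.
    // Any other I/O failure is still propagated to the caller.
    static Optional<BasicFileAttributes> statusIfExists(Path path) {
        try {
            return Optional.of(Files.readAttributes(path, BasicFileAttributes.class));
        } catch (NoSuchFileException e) {
            // The path is gone (for example after an interrupted truncate): skip it.
            return Optional.empty();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path tmp = Paths.get(System.getProperty("java.io.tmpdir"));
        Path missing = tmp.resolve("no-such-dir-" + System.nanoTime());
        System.out.println("tmp exists:     " + statusIfExists(tmp).isPresent());
        System.out.println("missing exists: " + statusIfExists(missing).isPresent());
    }
}
```

Only the "not found" case is swallowed here, so that permission or connectivity problems are not hidden by the same catch.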

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UT.

Closes #27923 from Ngone51/fix_truncate.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR is kind of a followup of #26808. It leverages the helper method for aliasing in built-in SQL expressions to use the alias as its output column name where it's applicable.

- `Expression`, `UnaryMathExpression` and `BinaryMathExpression` search the alias in the tags by default.

- When the naming is different in its implementation, it has to be overwritten for the expression specifically. E.g., `CallMethodViaReflection`, `Remainder`, `CurrentTimestamp`, `FormatString` and `XPathDouble`.

This PR fixes the aliases of the functions below:

| class                     | alias              |
|---------------------------|--------------------|
| `Rand`                    | `random`           |
| `Ceil`                    | `ceiling`          |
| `Remainder`               | `mod`              |
| `Pow`                     | `pow`              |
| `Signum`                  | `sign`             |
| `Chr`                     | `char`             |
| `Length`                  | `char_length`      |
| `Length`                  | `character_length` |
| `FormatString`            | `printf`           |
| `Substring`               | `substr`           |
| `Upper`                   | `ucase`            |
| `XPathDouble`             | `xpath_number`     |
| `DayOfMonth`              | `day`              |
| `CurrentTimestamp`        | `now`              |
| `Size`                    | `cardinality`      |
| `Sha1`                    | `sha`              |
| `CallMethodViaReflection` | `java_method`      |

Note: the `=` and `==` aliases of `EqualTo` were excluded because they cannot leverage this helper method. Supporting them requires a fix in the parser.

Note: to keep the scope of this PR smaller, it also excludes some expressions such as `ToDegrees`, `ToRadians`, `UnaryMinus` and `UnaryPositive`, which need an explicitly overridden name.

### Why are the changes needed?

To respect expression name.

### Does this PR introduce any user-facing change?

Yes, it will change the output column name.

### How was this patch tested?

Manually tested, and unittests were added.

Closes #27901 from HyukjinKwon/31146.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

jira link: https://issues.apache.org/jira/browse/SPARK-30930

Remove ML/MLLIB DeveloperApi annotations.

### Why are the changes needed?

The Developer APIs in ML/MLLIB have been there for a long time. They are stable now and are very unlikely to be changed or removed, so I unmark these Developer APIs in this PR.

### Does this PR introduce any user-facing change?

Yes. DeveloperApi annotations are removed from docs.

### How was this patch tested?

existing tests

Closes #27859 from huaxingao/spark-30930.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR (SPARK-31116) adds a caseSensitive parameter to ParquetRowConverter so that it materializes Parquet data properly with respect to case sensitivity.

### Why are the changes needed?

Since Spark 3.0.0, the statement below throws IllegalArgumentException in case-insensitive mode because of explicit field index searching in ParquetRowConverter. As we already construct the Parquet requested schema and the Catalyst requested schema during schema clipping in ParquetReadSupport, the converter should simply follow that behavior.

```scala
import org.apache.spark.sql.types.{LongType, StructType}

val path = "/some/temp/path"

// Write a struct column whose field names use mixed case.
spark
  .range(1L)
  .selectExpr("NAMED_STRUCT('lowercase', id, 'camelCase', id + 1) AS StructColumn")
  .write.parquet(path)

// Read it back with field names that differ only in case.
val caseInsensitiveSchema = new StructType()
  .add(
    "StructColumn",
    new StructType()
      .add("LowerCase", LongType)
      .add("camelcase", LongType))

spark.read.schema(caseInsensitiveSchema).parquet(path).show()
```

### Does this PR introduce any user-facing change?

No. The changes are only in unreleased branches (`master` and `branch-3.0`).

### How was this patch tested?

Passed new test cases that check Parquet column selection with respect to schemas and case sensitivity.

Closes #27888 from kimtkyeom/parquet_row_converter_case_sensitivity.

Authored-by: Tae-kyeom, Kim <kimtkyeom@devsisters.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR adds the user guide for AQE and the detailed configurations for the three main features in AQE.

### Why are the changes needed?

To document the detailed configurations.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Documentation-only change; no unit tests are needed.

Closes #27616 from JkSelf/aqeuserguide.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Move the code related to rebasing days from/to the Julian calendar from `HiveInspectors` to a new class, `DaysWritable`.

### Why are the changes needed?

To improve maintainability of the `HiveInspectors` trait which is already pretty complex.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By `HiveOrcHadoopFsRelationSuite`.

Closes #27890 from MaxGekk/replace-DateWritable-by-DaysWritable.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

It's not needed at all as now we replace "y" with "u" if there is no "G". So the era is either explicitly specified (e.g. "yyyy G") or can be inferred from the year (e.g. "uuuu").

### Why are the changes needed?

By default we use "uuuu" as the year pattern, which indicates the era already. If we set a default era, it can get conflicted and fail the parsing.
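That a "uuuu" year already determines the era can be checked directly with `java.time`. The sketch below is illustrative only (hypothetical class and method names, not Spark code): it parses dates with the "uuuu" pattern and reads the era back from the result.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.temporal.ChronoField;

final class ProlepticYearSketch {
    // "uuuu" is the proleptic year: it may be zero or negative and needs no era field.
    static final DateTimeFormatter U_PATTERN = DateTimeFormatter.ofPattern("uuuu-MM-dd");

    static LocalDate parse(String text) {
        return LocalDate.parse(text, U_PATTERN);
    }

    // In the ISO chronology, ERA is 1 for CE and 0 for BCE.
    static int eraOf(LocalDate date) {
        return date.get(ChronoField.ERA);
    }

    public static void main(String[] args) {
        LocalDate modern = parse("2020-03-17");
        LocalDate ancient = parse("-0044-03-15");
        System.out.println(modern + " era=" + eraOf(modern));
        System.out.println(ancient + " era=" + eraOf(ancient));
    }
}
```

Because the sign of the proleptic year fixes the era, supplying a separate default era adds no information and can only conflict with the parsed year.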

### Does this PR introduce any user-facing change?

Yes. Spark can now parse dates/timestamps with a negative year via the "yyyy" pattern, which is converted to "uuuu" under the hood.

### How was this patch tested?

New tests.

Closes #27707 from cloud-fan/bug.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Upgrade the `docker-client` version.

### Why are the changes needed?

The `docker-client` version that Spark uses is very old. Snippet from the project page:

```
Spotify no longer uses recent versions of this project internally.
The version of docker-client we're using is whatever helios has in its pom.xml. => 8.14.1
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```
build/mvn install -DskipTests
build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.DB2IntegrationSuite test
build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.MsSqlServerIntegrationSuite test
build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.PostgresIntegrationSuite test
```

Closes #27892 from gaborgsomogyi/docker-client.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Fix the IllegalArgumentException in broadcast exchange when numRows is over 341 million but less than 512 million.

Since the maximum number of keys that `BytesToBytesMap` supports is 1 << 29, and only 70% of the slots can be used before growing in `HashedRelation`, the limit should be 341 million (`(1 << 29) / 1.5`, i.e. 357913941) instead of 512 million.
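The numbers quoted above can be reproduced with a short calculation. This is a worked example only; the class and constant names are hypothetical and do not come from the Spark code base.

```java
final class BroadcastRowLimitSketch {
    // Maximum number of keys, as stated in the description: 1 << 29.
    static final long MAX_KEYS = 1L << 29;

    // The old limit of "512 million" rows, counted in units of 2^20.
    static final long OLD_LIMIT = 512L << 20;

    // The new limit: the maximum key count divided by 1.5, truncated to a whole number.
    static final long NEW_LIMIT = (long) (MAX_KEYS / 1.5);

    // Expresses a row count in units of 2^20, which is what "million" means here.
    static long inUnitsOfTwoToThe20(long rows) {
        return rows >> 20;
    }

    public static void main(String[] args) {
        System.out.println("max keys  = " + MAX_KEYS);
        System.out.println("old limit = " + OLD_LIMIT + " (" + inUnitsOfTwoToThe20(OLD_LIMIT) + " x 2^20)");
        System.out.println("new limit = " + NEW_LIMIT + " (" + inUnitsOfTwoToThe20(NEW_LIMIT) + " x 2^20)");
    }
}
```

The old limit equals the full key capacity of 536870912, whereas the new limit of 357913941 leaves headroom for the map to grow; any row count between the two passed the old check and then failed inside the map.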

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested.

Closes #27828 from LantaoJin/SPARK-31068.

Lead-authored-by: LantaoJin <jinlantao@gmail.com>

Co-authored-by: Alan Jin <jinlantao@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

…a sbt on Intellij IDEA.

### What changes were proposed in this pull request?

Read the Maven profiles to enable when importing into IntelliJ IDEA via SBT from the Java property "sbt.maven.profiles".

### Why are the changes needed?

Without this change, one needs to set an OS-wide environment variable `SBT_MAVEN_PROFILES`. On macOS this is even trickier (I have not figured out what can be done).
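A lookup that accepts either source might look like the following Java sketch. It is not the build code from this PR: the class and method names are hypothetical, and both the precedence (environment variable first, then the Java property) and the comma separator are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class MavenProfilesSketch {
    // Picks the first non-null source and splits it into profile names.
    // Assumption: the environment variable wins over the Java property.
    static List<String> resolveProfiles(String envValue, String propertyValue) {
        String raw = envValue != null ? envValue : propertyValue;
        if (raw == null) {
            return Collections.emptyList();
        }
        List<String> profiles = new ArrayList<>();
        for (String part : raw.split(",")) {
            String name = part.trim();
            if (!name.isEmpty()) {
                profiles.add(name);
            }
        }
        return profiles;
    }

    // Reads the two sources named in this PR description.
    static List<String> fromEnvironment() {
        return resolveProfiles(
            System.getenv("SBT_MAVEN_PROFILES"),
            System.getProperty("sbt.maven.profiles"));
    }

    public static void main(String[] args) {
        // Example: java -Dsbt.maven.profiles=hadoop-3.2,hive MavenProfilesSketch
        System.out.println(fromEnvironment());
    }
}
```

The practical benefit is that a Java property can be passed per invocation (for example in the IDE's SBT VM options) without touching the system-wide environment.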

### Does this PR introduce any user-facing change?

None

### How was this patch tested?

Manually tested by applying multiple profiles or a single profile.

Please see the attached images to see the steps.

<img width="802" alt="Screenshot 2020-03-11 at 4 09 57 PM" src="https://user-images.githubusercontent.com/992952/76411667-46223280-63b8-11ea-9a77-dc014b66d48b.png">

<img width="867" alt="Screenshot 2020-03-11 at 4 18 09 PM" src="https://user-images.githubusercontent.com/992952/76411676-4ae6e680-63b8-11ea-895d-ed9d6cc223c5.png">

Closes #27878 from ScrapCodes/SPARK-31120/idea-load-maven-profiles.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>