## What changes were proposed in this pull request?

Alternative take on https://github.com/apache/spark/pull/22063 that does not introduce udfInternal.

Resolve the issue with inferring function types in Scala 2.12 by instead using information captured when the UDF is registered, namely which types are nullable (i.e. not primitive).

## How was this patch tested?

Existing tests.

Closes #22259 from srowen/SPARK-25044.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This changes the calls of `toPandas()` and `createDataFrame()` to use the Arrow stream format, when Arrow is enabled. Previously, Arrow data was written to byte arrays where each chunk is an output of the Arrow file format. This was mainly due to constraints at the time, and caused some overhead by writing the schema/footer on each chunk of data and then having to read multiple Arrow file inputs and concat them together.

Using the Arrow stream format improves on this by increasing performance, lowering memory overhead in the average case, and simplifying the code. Here are the details of this change:

**toPandas()**

_Before:_

Spark internal rows are converted to the Arrow file format. Each group of records is a complete Arrow file that contains the schema and other metadata. Next a collect is done, and the result is an Array of Arrow files. After that, each Arrow file is sent to the Python driver, which loads each file and concatenates them into a single Arrow DataFrame.

_After:_

Spark internal rows are converted to ArrowRecordBatches directly, which is the simplest Arrow component for IPC data transfers. The driver JVM then immediately starts serving data to Python as an Arrow stream, sending the schema first. It then starts a Spark job with a custom handler that sends Arrow RecordBatches to Python. Partitions arriving in order are sent immediately, and out-of-order partitions are buffered until the ones that precede them arrive. This improves performance, simplifies memory usage on executors, and improves the average memory usage on the JVM driver. Since the order of partitions must be preserved, the worst case is that the first partition is the last to arrive, so all data must be buffered in memory until then. This case is no worse than before, when a full collect was done.
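The in-order delivery described above can be sketched in plain Python. This is only an illustration of the buffering idea, not the JVM handler from this PR; the function name `emit_in_partition_order` and the byte-string batches are hypothetical.

```python
from typing import Dict, Iterable, Iterator, List, Tuple


def emit_in_partition_order(
    arrivals: Iterable[Tuple[int, List[bytes]]],
) -> Iterator[List[bytes]]:
    """Yield partition results in index order, buffering early arrivals."""
    buffered: Dict[int, List[bytes]] = {}
    next_index = 0
    for index, batches in arrivals:
        buffered[index] = batches
        # Flush every partition that is now contiguous with what was sent.
        while next_index in buffered:
            yield buffered.pop(next_index)
            next_index += 1


# Hypothetical arrival order: partitions 1 and 3 arrive before 0 and 2.
arrival_order = [(1, [b"p1"]), (0, [b"p0"]), (3, [b"p3"]), (2, [b"p2"])]
sent = list(emit_in_partition_order(arrival_order))
print(sent)
```

In this sketch the buffer never holds more than the partitions that arrived ahead of the next expected index, which is why the worst case (partition 0 arriving last) degrades to buffering everything.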

**createDataFrame()**

_Before:_

A Pandas DataFrame is split into parts and each part is made into an Arrow file. Then each file is prefixed by the buffer size and written to a temp file. The temp file is read and each Arrow file is parallelized as a byte array.

_After:_

A Pandas DataFrame is split into parts, then an Arrow stream is written to a temp file where each part is an ArrowRecordBatch. The temp file is read as a stream and the Arrow messages are examined. If the message is an ArrowRecordBatch, the data is saved as a byte array. After reading the file, each ArrowRecordBatch is parallelized as a byte array. This involves slightly more processing than before because we must look at each Arrow message to extract the record batches, but performance ends up a little better. It is cleaner in the sense that IPC from Python to the JVM is done over a single Arrow stream.

## How was this patch tested?

Added new unit tests for the additions to ArrowConverters in Scala, existing tests for Python.

## Performance Tests - toPandas

Tests run on a 4 node standalone cluster with 32 cores total, 14.04.1-Ubuntu and OpenJDK 8

Measured wall clock time to execute `toPandas()` and took the average best time of 5 runs/5 loops each.

Test code

```python
import time

from pyspark.sql.functions import rand

# `spark` is assumed to be an existing SparkSession.
df = spark.range(1 << 25, numPartitions=32).toDF("id").withColumn("x1", rand()).withColumn("x2", rand()).withColumn("x3", rand()).withColumn("x4", rand())

for i in range(5):
    start = time.time()
    _ = df.toPandas()
    elapsed = time.time() - start
```

Current Master | This PR
---------------------|------------
5.803557 | 5.16207
5.409119 | 5.133671
5.493509 | 5.147513
5.433107 | 5.105243
5.488757 | 5.018685

Avg Master | Avg This PR
------------------|--------------
5.5256098 | 5.1134364

Speedup of **1.08060595**
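As a quick check of the arithmetic, the averages and the speedup can be recomputed from the five timings listed above. The variable names are illustrative only.

```python
# Wall clock times in seconds, copied from the toPandas table above.
master = [5.803557, 5.409119, 5.493509, 5.433107, 5.488757]
this_pr = [5.16207, 5.133671, 5.147513, 5.105243, 5.018685]

avg_master = sum(master) / len(master)
avg_pr = sum(this_pr) / len(this_pr)

# Speedup is the ratio of the old average time to the new average time.
speedup = avg_master / avg_pr
print(f"{avg_master:.7f} {avg_pr:.7f} {speedup:.8f}")
```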

## Performance Tests - createDataFrame

Tests run on a 4 node standalone cluster with 32 cores total, 14.04.1-Ubuntu and OpenJDK 8

Measured wall clock time to execute `createDataFrame()` and get the first record. Took the average best time of 5 runs/5 loops each.

Test code

```python
import gc
import time

import numpy as np
import pandas as pd

# `spark` is assumed to be an existing SparkSession.
def run():
    pdf = pd.DataFrame(np.random.rand(10000000, 10))
    spark.createDataFrame(pdf).first()

for i in range(6):
    start = time.time()
    run()
    elapsed = time.time() - start
    gc.collect()
    print("Run %d: %f" % (i, elapsed))
```

Current Master | This PR
--------------------|----------
6.234608 | 5.665641
6.32144 | 5.3475
6.527859 | 5.370803
6.95089 | 5.479151
6.235046 | 5.529167

Avg Master | Avg This PR
---------------|----------------
6.4539686 | 5.4784524

Speedup of **1.178064192**

## Memory Improvements

**toPandas()**

The most significant improvement is the reduction of the upper bound space complexity in the JVM driver. Before, the entire dataset was collected in the JVM first before sending it to Python. With this change, as soon as a partition is collected, the result handler immediately sends it to Python, so the upper bound is the size of the largest partition. Also, using the Arrow stream format is more efficient because the schema is written once per stream, followed by record batches. The schema is now sent only from the driver JVM to Python. Before, multiple Arrow files were used, each containing the schema. This duplicated schema was created in the executors, sent to the driver JVM, and then to Python, where all but the first one received were discarded.

I verified the upper bound limit by running a test that would collect data that would exceed the amount of driver JVM memory available. Using these settings on a standalone cluster:

```

spark.driver.memory 1g

spark.executor.memory 5g

spark.sql.execution.arrow.enabled true

spark.sql.execution.arrow.fallback.enabled false

spark.sql.execution.arrow.maxRecordsPerBatch 0

spark.driver.maxResultSize 2g

```

Test code:

```python

from pyspark.sql.functions import rand

df = spark.range(1 << 25, numPartitions=32).toDF("id").withColumn("x1", rand()).withColumn("x2", rand()).withColumn("x3", rand())

df.toPandas()

```

This makes total data size of 33554432×8×4 = 1073741824

With the current master, it fails with OOM but passes using this PR.
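The data size quoted above follows from the row count, the four columns in the test DataFrame, and 8 bytes per value (an assumption that holds for 64-bit long and double values, ignoring any container overhead). A small sketch of the arithmetic:

```python
rows = 1 << 25           # spark.range(1 << 25)
columns = 4              # id, x1, x2, x3
bytes_per_value = 8      # assumed: 64-bit long and double values

total_bytes = rows * columns * bytes_per_value
driver_memory_bytes = 1 << 30   # spark.driver.memory 1g

print(total_bytes, total_bytes / driver_memory_bytes)
```

The raw values alone already equal the configured driver heap, so a full collect on the driver cannot fit once JVM overhead is counted.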

**createDataFrame()**

No significant change in memory except that using the stream format instead of separate file formats avoids duplicating the schema, similar to toPandas above. The process of reading the stream and parallelizing the batches does cause the record batch message metadata to be copied, but its size is insignificant.

Closes #21546 from BryanCutler/arrow-toPandas-stream-SPARK-23030.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Support Filter in ConvertToLocalRelation, similar to how Project works.

Additionally, in Optimizer, run ConvertToLocalRelation earlier to simplify the plan. This is good for very short queries which often are queries on local relations.
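To illustrate the idea of folding a Filter over local data into a new local relation, here is a toy sketch. The classes `LocalRelation` and `Filter` below are simplified stand-ins written for this example and are not Catalyst's actual classes or the rule's real implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union

Row = Tuple[int, ...]


@dataclass
class LocalRelation:
    rows: List[Row]


@dataclass
class Filter:
    condition: Callable[[Row], bool]
    child: "Plan"


Plan = Union[LocalRelation, Filter]


def convert_to_local_relation(plan: Plan) -> Plan:
    """Fold a Filter over local data into a new LocalRelation."""
    if isinstance(plan, Filter):
        child = convert_to_local_relation(plan.child)
        if isinstance(child, LocalRelation):
            # Evaluate the predicate eagerly, at optimization time.
            return LocalRelation([r for r in child.rows if plan.condition(r)])
        return Filter(plan.condition, child)
    return plan


plan = Filter(lambda r: r[0] > 1, LocalRelation([(1,), (2,), (3,)]))
optimized = convert_to_local_relation(plan)
print(optimized)
```

Once the plan collapses to a single local relation, later rules have less to traverse, which is the benefit for very short queries mentioned above.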

## How was this patch tested?

New test. Manual benchmark.

Author: Bogdan Raducanu <bogdan@databricks.com>

Author: Shixiong Zhu <zsxwing@gmail.com>

Author: Yinan Li <ynli@google.com>

Author: Li Jin <ice.xelloss@gmail.com>

Author: s71955 <sujithchacko.2010@gmail.com>

Author: DB Tsai <d_tsai@apple.com>

Author: jaroslav chládek <mastermism@gmail.com>

Author: Huangweizhe <huangweizhe@bbdservice.com>

Author: Xiangrui Meng <meng@databricks.com>

Author: hyukjinkwon <gurwls223@apache.org>

Author: Kent Yao <yaooqinn@hotmail.com>

Author: caoxuewen <cao.xuewen@zte.com.cn>

Author: liuxian <liu.xian3@zte.com.cn>

Author: Adam Bradbury <abradbury@users.noreply.github.com>

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Author: Yuming Wang <yumwang@ebay.com>

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #22205 from bogdanrdc/local-relation-filter.

## What changes were proposed in this pull request?

This adds `spark.executor.pyspark.memory` to configure Python's address space limit, [`resource.RLIMIT_AS`](https://docs.python.org/3/library/resource.html#resource.RLIMIT_AS). Limiting Python's address space allows Python to participate in memory management. In practice, we see fewer cases of Python taking too much memory because it doesn't know to run garbage collection. This results in YARN killing fewer containers. This also improves error messages so users know that Python is consuming too much memory:

```

File "build/bdist.linux-x86_64/egg/package/library.py", line 265, in fe_engineer

fe_eval_rec.update(f(src_rec_prep, mat_rec_prep))

File "build/bdist.linux-x86_64/egg/package/library.py", line 163, in fe_comp

comparisons = EvaluationUtils.leven_list_compare(src_rec_prep.get(item, []), mat_rec_prep.get(item, []))

File "build/bdist.linux-x86_64/egg/package/evaluationutils.py", line 25, in leven_list_compare

permutations = sorted(permutations, reverse=True)

MemoryError

```

The new PySpark memory setting is used to increase the requested YARN container memory, instead of sharing overhead memory between Python and off-heap JVM activity.
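A minimal sketch of how a Python process can cap its own address space with `resource.RLIMIT_AS` is shown below. The helper name `limit_address_space` is hypothetical, the `resource` module is only available on Unix-like systems, and this is not claimed to be the exact code added to the PySpark worker.

```python
import resource


def limit_address_space(limit_bytes: int) -> None:
    """Lower the soft and hard RLIMIT_AS to limit_bytes if that is stricter."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_AS)
    # Only tighten the limit; never try to raise an existing, lower one.
    if soft == resource.RLIM_INFINITY or limit_bytes < soft:
        resource.setrlimit(resource.RLIMIT_AS, (limit_bytes, limit_bytes))
```

After a call such as `limit_address_space(2 * 1024 ** 3)`, an allocation that would push the process past the limit fails inside Python with `MemoryError`, which is the error shown in the traceback above, instead of the container being killed from outside.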

## How was this patch tested?

Tested memory limits in our YARN cluster and verified that MemoryError is thrown.

Author: Ryan Blue <blue@apache.org>

Closes #21977 from rdblue/SPARK-25004-add-python-memory-limit.

## What changes were proposed in this pull request?

In this PR, I propose not to perform recursive parallel listing of files in the `scanPartitions` method because it can cause a deadlock. Instead, I propose to run `scanPartitions` in parallel for top-level partitions only.
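The general pattern can be sketched in Python: tasks that submit more work to the same bounded pool and wait for it can exhaust the pool and deadlock, whereas parallelizing only the top level and recursing serially below it cannot. The function names and the directory layout here are illustrative and are not the Scala implementation.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import List


def scan_serial(path: str) -> List[str]:
    """Recursively list leaf directories without using the thread pool."""
    subdirs = sorted(e.path for e in os.scandir(path) if e.is_dir())
    if not subdirs:
        return [path]
    leaves: List[str] = []
    for sub in subdirs:
        leaves.extend(scan_serial(sub))
    return leaves


def scan_partitions(root: str, max_workers: int = 4) -> List[str]:
    """Scan top-level partitions in parallel; recurse serially below them."""
    top_level = sorted(e.path for e in os.scandir(root) if e.is_dir())
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Only this level submits tasks, so no task ever waits on the pool.
        results = list(pool.map(scan_serial, top_level))
    return [leaf for leaves in results for leaf in leaves]
```

Because no pooled task blocks on another pooled task, the scan completes even with a single worker thread.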

## How was this patch tested?

I extended an existing test to trigger the deadlock.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #22233 from MaxGekk/fix-recover-partitions.

## What changes were proposed in this pull request?

This PR lets the user override the maximum number of buckets when saving to a table.

Currently the limit is a hard-coded 100k, which might be insufficient for large workloads.

A new configuration entry is proposed: `spark.sql.bucketing.maxBuckets`, which defaults to the previous 100k.

## How was this patch tested?

Added unit tests in the following spark.sql test suites:

- CreateTableAsSelectSuite

- BucketedWriteSuite

Author: Fernando Pereira <fernando.pereira@epfl.ch>

Closes #21087 from ferdonline/enh/configurable_bucket_limit.

## What changes were proposed in this pull request?

This PR excludes Python UDF filters in FileSourceStrategy so that they don't cause the ExtractPythonUDF rule to throw an exception. It doesn't make sense to pass Python UDF filters in FileSourceStrategy anyway because they cannot be used as push-down filters.

## How was this patch tested?

Add a new regression test

Closes #22104 from icexelloss/SPARK-24721-udf-filter.

Authored-by: Li Jin <ice.xelloss@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR adds a configuration parameter to configure the capacity of fast aggregation.

Performance comparison:

```

Java HotSpot(TM) 64-Bit Server VM 1.8.0_60-b27 on Windows 7 6.1

Intel64 Family 6 Model 94 Stepping 3, GenuineIntel

Aggregate w multiple keys: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

fasthash = default 5612 / 5882 3.7 267.6 1.0X

fasthash = config 3586 / 3595 5.8 171.0 1.6X

```

## How was this patch tested?

Existing test cases.

Closes #21931 from heary-cao/FastHashCapacity.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is based on the discussion https://github.com/apache/spark/pull/16677/files#r212805327.

As the SQL standard doesn't mandate that a nested ORDER BY followed by a LIMIT has to respect that ordering clause, this patch removes the `child.outputOrdering` check.

## How was this patch tested?

Unit tests.

Closes #22239 from viirya/improve-global-limit-parallelism-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Improved the documentation for the datetime functions in `org.apache.spark.sql.functions` by adding details about the supported column input types, the column return type, behaviour on invalid input, supporting examples and clarifications.

## How was this patch tested?

Manually testing each of the datetime functions with different input to ensure that the corresponding Javadoc/Scaladoc matches the behaviour of the function. Successfully ran the `unidoc` SBT process.

Closes #20901 from abradbury/SPARK-23792.

Authored-by: Adam Bradbury <abradbury@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR generates code that refers to a `StructType` created in the Scala code instead of generating the `StructType` in Java code.

The original code has two issues to address:

1. Avoid using field names such as `key.name`

2. Support complicated schemas (e.g. nested DataType)

At first, [the JIRA entry](https://issues.apache.org/jira/browse/SPARK-25178) proposed changing the generated field names of the keySchema / valueSchema to dummy names in `RowBasedHashMapGenerator` and `VectorizedHashMapGenerator.scala`. This proposal can address issue 1.

Ueshin suggested an approach to refer to a `StructType` generated in the scala code using `ctx.addReferenceObj()`. This approach can address issues 1 and 2. Finally, this PR uses this approach.

## How was this patch tested?

Existing UTs

Closes #22187 from kiszk/SPARK-25178.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

(Link to Jira: https://issues.apache.org/jira/browse/SPARK-4502)

_N.B. This is a restart of PR #16578 which includes a subset of that code. Relevant review comments from that PR should be considered incorporated by reference. Please avoid duplication in review by reviewing that PR first. The summary below is an edited copy of the summary of the previous PR._

## What changes were proposed in this pull request?

One of the hallmarks of a column-oriented data storage format is the ability to read data from a subset of columns, efficiently skipping reads from other columns. Spark has long had support for pruning unneeded top-level schema fields from the scan of a parquet file. For example, consider a table, `contacts`, backed by parquet with the following Spark SQL schema:

```

root

|-- name: struct

| |-- first: string

| |-- last: string

|-- address: string

```

Parquet stores this table's data in three physical columns: `name.first`, `name.last` and `address`. To answer the query

```SQL

select address from contacts

```

Spark will read only from the `address` column of parquet data. However, to answer the query

```SQL

select name.first from contacts

```

Spark will read `name.first` and `name.last` from parquet.

This PR modifies Spark SQL to support finer-grained schema pruning. With this patch, Spark reads only the `name.first` column to answer the previous query.
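The effect on the requested read schema can be sketched with nested dictionaries. This is a toy model of the pruning idea for the `contacts` example, not the `ParquetSchemaPruning` rule itself; `prune_schema` and the dictionary representation are assumptions made for illustration.

```python
from typing import Any, Dict, Iterable

Schema = Dict[str, Any]  # field name -> type name or nested Schema


def prune_schema(schema: Schema, required_paths: Iterable[str]) -> Schema:
    """Keep only the fields reachable through the dotted paths requested."""
    pruned: Schema = {}
    for path in required_paths:
        source, target = schema, pruned
        parts = path.split(".")
        for depth, name in enumerate(parts):
            field = source[name]
            is_last = depth == len(parts) - 1
            if is_last or not isinstance(field, dict):
                # Requested leaf (or whole struct): copy it as-is.
                target[name] = field
                break
            # Descend into the struct, creating the pruned parent on demand.
            target = target.setdefault(name, {})
            source = field
    return pruned


contacts = {"name": {"first": "string", "last": "string"}, "address": "string"}
print(prune_schema(contacts, ["name.first"]))
```

For `select name.first from contacts`, the pruned schema keeps `name.first` and drops both `name.last` and `address`, so only one physical column needs to be read.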

### Implementation

There are two main components of this patch. First, there is a `ParquetSchemaPruning` optimizer rule for gathering the required schema fields of a `PhysicalOperation` over a parquet file, constructing a new schema based on those required fields and rewriting the plan in terms of that pruned schema. The pruned schema fields are pushed down to the parquet requested read schema. `ParquetSchemaPruning` uses a new `ProjectionOverSchema` extractor for rewriting a catalyst expression in terms of a pruned schema.

Second, the `ParquetRowConverter` has been patched to ensure the ordinals of the parquet columns read are correct for the pruned schema. `ParquetReadSupport` has been patched to address a compatibility mismatch between Spark's built in vectorized reader and the parquet-mr library's reader.

### Limitation

Among the complex Spark SQL data types, this patch supports parquet column pruning of nested sequences of struct fields only.

## How was this patch tested?

Care has been taken to ensure correctness and prevent regressions. A more advanced version of this patch incorporating optimizations for rewriting queries involving aggregations and joins has been running on a production Spark cluster at VideoAmp for several years. In that time, one bug was found and fixed early on, and we added a regression test for that bug.

We forward-ported this patch to Spark master in June 2016 and have been running this patch against Spark 2.x branches on ad-hoc clusters since then.

Closes #21320 from mallman/spark-4502-parquet_column_pruning-foundation.

Lead-authored-by: Michael Allman <msa@allman.ms>

Co-authored-by: Adam Jacques <adam@technowizardry.net>

Co-authored-by: Michael Allman <michael@videoamp.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Dataset.apply calls dataset.deserializer (to provide an early error) which ends up calling the full Analyzer on the deserializer. This can take tens of milliseconds, depending on how big the plan is.

Since Dataset.apply is called for many Dataset operations such as Dataset.where it can be a significant overhead for short queries.

According to a comment in the PR that introduced this check, we can at least remove this check for DataFrames: https://github.com/apache/spark/pull/20402#discussion_r164338267

## How was this patch tested?

Existing tests + manual benchmark

Author: Bogdan Raducanu <bogdan@databricks.com>

Closes #22201 from bogdanrdc/deserializer-fix.

## What changes were proposed in this pull request?

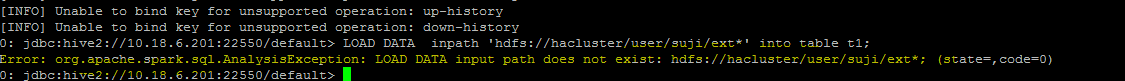

**Problem statement**

The `LOAD DATA` command does not work with HDFS file paths that contain wildcard characters such as `*`.

For example,

"load data inpath 'hdfs://hacluster/user/ext*' into table t1"

throws an AnalysisException when the query is executed.

**Analysis -**

Currently the `fs.exists()` API, which is used for path validation in the load command, cannot resolve a path containing a wildcard pattern. To mitigate this problem, this PR uses the `globStatus()` API instead, which can resolve paths containing HDFS-supported wildcards such as `*` and `?` (in line with Hive's wildcard support).
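The difference between an existence check and glob resolution can be reproduced on a local file system with Python's standard library. This is only an analogy: `os.path.exists` and `glob.glob` stand in for Hadoop's `fs.exists()` and `globStatus()`, and the file names are borrowed from the test data shown later.

```python
import glob
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    for name in ("typeddata60.txt", "typeddata61.txt"):
        open(os.path.join(root, name), "w").close()

    pattern = os.path.join(root, "type*")
    # An existence check treats '*' as a literal character in the name.
    literal_exists = os.path.exists(pattern)
    # Glob resolution expands the wildcard to the matching files.
    matches = sorted(os.path.basename(p) for p in glob.glob(pattern))

print(literal_exists, matches)
```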

**Improvement identified as part of this issue -**

Currently the system does not support wildcard characters in the folder-level path of a local file system. This PR handles that scenario: the same `globStatus()` API unifies the validation logic for local and non-local file systems, which keeps the behavior of HDFS and local file paths consistent in the load command.

With this improvement, users can use a wildcard character in the folder-level path of a local file system in the load command, in line with Hive behaviour. In older versions, users could use wildcards only in the file path of the local file system; using one in the folder path made the system report an error saying it was not supported.

For example: load data local inpath '/localfilesystem/folder*' into table t1

## How was this patch tested?

a) Manually tested by executing test cases on an HDFS YARN cluster. The reports are attached in the section below.

b) Existing test cases verify the impact and functionality for local file path scenarios.

c) A test case has been added to verify the functionality when a wildcard is used in the folder-level path of a local file system.

## Test Results

Note: all IP addresses were replaced with localhost for security reasons.

HDFS path details

```

vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith1

Found 2 items

-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith1/typeddata60.txt

-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith1/typeddata61.txt

vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith2

Found 2 items

-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith2/typeddata60.txt

-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith2/typeddata61.txt

```

Positive scenario: specifying the complete file path to establish the record count

```

0: jdbc:hive2://localhost:22550/default> create table wild_spark (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.217 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata60.txt' into table wild_spark;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (4.236 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata61.txt' into table wild_spark;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.602 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from wild_spark;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

1 row selected (18.529 seconds)

0: jdbc:hive2://localhost:22550/default>

```

With wild card character in file path

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.409 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/type*' into table spark_withWildChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.502 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

```

Scenario with the `?` wildcard

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_DiffChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.489 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata60.txt' into table spark_withWildChar_DiffChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.152 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata61.txt' into table spark_withWildChar_DiffChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.644 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_DiffChar;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

1 row selected (16.078 seconds)

```

Scenario with a folder-level wildcard

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_folderlevel (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.489 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/suji*/*' into table spark_withWildChar_folderlevel;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.152 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_folderlevel;

+-----------+--+

| count(1) |

+-----------+--+

| 242 |

+-----------+--+

1 row selected (16.078 seconds)

```

Negative scenario: invalid path

```

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujiinvalid*/*' into table spark_withWildChar_folder;

Error: org.apache.spark.sql.AnalysisException: LOAD DATA input path does not exist: /user/data/sujiinvalid*/*; (state=,code=0)

0: jdbc:hive2://localhost:22550/default>

```

Hive Test results- file level

```

0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_files (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;

No rows affected (0.723 seconds)

0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/sujith1/type*' into table hive_withWildChar_files;

INFO : Loading data to table default.hive_withwildchar_files from hdfs://hacluster/user/sujith1/type*

No rows affected (0.682 seconds)

0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_files;

+------+--+

| _c0 |

+------+--+

| 121 |

+------+--+

1 row selected (50.832 seconds)

```

Hive Test results- folder level

```

0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_folder (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;

No rows affected (0.459 seconds)

0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/suji*/*' into table hive_withWildChar_folder;

INFO : Loading data to table default.hive_withwildchar_folder from hdfs://hacluster/user/data/suji*/*

No rows affected (0.76 seconds)

0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_folder;

+------+--+

| _c0 |

+------+--+

| 242 |

+------+--+

1 row selected (46.483 seconds)

```

Closes #20611 from sujith71955/master_wldcardsupport.

Lead-authored-by: s71955 <sujithchacko.2010@gmail.com>

Co-authored-by: sujith71955 <sujithchacko.2010@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Fix a race in the rate source tests. We need a better way of testing restart behavior.

## How was this patch tested?

unit test

Closes #22191 from jose-torres/racetest.

Authored-by: Jose Torres <torres.joseph.f+github@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

Casting to `DecimalType` does not always need to force the result to be nullable.

If the target decimal type is wider than the original type, or the cast only truncates or loses precision, the cast value won't be `null`.
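One way to reason about when a decimal-to-decimal cast can overflow (and therefore yield `null`) is to compare the integer digits and the scale of the two types. The rule below is a sketch under that assumption and is not claimed to match the exact condition implemented in Spark; `decimal_cast_may_be_null` is a hypothetical helper.

```python
def decimal_cast_may_be_null(from_precision: int, from_scale: int,
                             to_precision: int, to_scale: int) -> bool:
    """Return True if casting between the two decimal types can overflow."""
    from_int_digits = from_precision - from_scale
    to_int_digits = to_precision - to_scale
    if to_int_digits > from_int_digits:
        # Room for every integer digit plus a carry produced by rounding.
        return False
    if to_int_digits == from_int_digits and to_scale >= from_scale:
        # Target is at least as wide on both sides, so no rounding occurs.
        return False
    return True


print(decimal_cast_may_be_null(3, 2, 5, 2))   # widening cast
print(decimal_cast_may_be_null(3, 2, 2, 1))   # rounding 9.99 can carry to 10.0
```

The second case shows why dropping scale alone is not always safe: rounding `9.99` to one fractional digit gives `10.0`, which needs one more integer digit than `DECIMAL(2,1)` provides.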

## How was this patch tested?

Added and modified tests.

Closes #22200 from ueshin/issues/SPARK-25208/cast_nullable_decimal.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

[SPARK-25126](https://issues.apache.org/jira/browse/SPARK-25126) reports that loading a large number of ORC files consumes a lot of memory in both 2.0 and 2.3. The issue is caused by creating a Reader for every ORC file in order to infer the schema.

In `OrcFileOperator.readSchema`, a Reader is created for every file although only the first valid one is used. This uses a significant amount of memory when `paths` contains a lot of files. In 2.3, a different code path (`OrcUtils.readSchema`) is used for inferring the schema of ORC files. This commit changes both functions to create the Reader lazily.
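The lazy approach can be sketched with a Python generator: a reader is created only when the previous file did not yield a schema. The names `first_schema` and `fake_read_schema`, and the file names, are hypothetical and only illustrate the pattern.

```python
from typing import Callable, Iterable, List, Optional


def first_schema(paths: Iterable[str],
                 read_schema: Callable[[str], Optional[str]]) -> Optional[str]:
    """Open files one at a time and stop at the first valid schema."""
    # The generator creates a reader only when next() asks for another one.
    schemas = (read_schema(path) for path in paths)
    return next((s for s in schemas if s is not None), None)


opened: List[str] = []


def fake_read_schema(path: str) -> Optional[str]:
    """Hypothetical reader: records each open; empty files have no schema."""
    opened.append(path)
    return None if path.startswith("empty") else "struct<id:int>"


schema = first_schema(["empty-0.orc", "part-1.orc", "part-2.orc"], fake_read_schema)
print(schema, opened)
```

Only the files up to and including the first valid one are opened, so memory use no longer grows with the number of paths.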

## How was this patch tested?

Pass the Jenkins with a newly added test case by dongjoon-hyun

Closes #22157 from raofu/SPARK-25126.

Lead-authored-by: Rao Fu <rao@coupang.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Co-authored-by: Rao Fu <raofu04@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

VectorizedParquetRecordReader::initializeInternal rebuilds the column list and path list once for each column. Therefore, it indirectly iterates 2\*colCount\*colCount times for each parquet file.

This inefficiency impacts jobs that read parquet-backed tables with many columns and many files. Jobs that read tables with few columns or few files are not impacted.

This PR changes initializeInternal so that it builds each list only once.
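The cost difference can be illustrated with a small counting sketch. It models a single list being rebuilt for every column (the description above counts two lists, hence the factor of 2), and the function names are made up for this example rather than taken from the reader's code.

```python
from typing import List, Tuple


def init_rebuilding(columns: List[str]) -> Tuple[List[str], int]:
    """Rebuild the column list inside the loop: quadratic element visits."""
    visits = 0
    checked: List[str] = []
    for i in range(len(columns)):
        rebuilt = list(columns)      # stands in for a per-column rebuild
        visits += len(rebuilt)
        checked.append(rebuilt[i])
    return checked, visits


def init_once(columns: List[str]) -> Tuple[List[str], int]:
    """Build the column list once, then index into it: linear visits."""
    built = list(columns)
    visits = len(built)
    checked = [built[i] for i in range(len(built))]
    return checked, visits


cols = [f"c{i}" for i in range(6000)]
slow, slow_visits = init_rebuilding(cols)
fast, fast_visits = init_once(cols)
print(slow == fast, slow_visits, fast_visits)
```

With 6000 columns the rebuilt variant touches 36 million elements per file against 6000 for the single build, which is consistent with the gain showing up only for very wide tables.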

I ran benchmarks on my laptop with 1 worker thread, running this query:

<pre>

sql("select * from parquet_backed_table where id1 = 1").collect

</pre>

There is roughly one matching row for every 425 rows, and the matching rows are sprinkled pretty evenly throughout the table (that is, every page for column <code>id1</code> has at least one matching row).

6000 columns, 1 million rows, 67 32M files:

master | branch | improvement
-------|---------|-----------
10.87 min | 6.09 min | 44%

6000 columns, 1 million rows, 23 98M files:

master | branch | improvement
-------|---------|-----------
7.39 min | 5.80 min | 21%

600 columns, 10 million rows, 67 32M files:

master | branch | improvement
-------|---------|-----------
1.95 min | 1.96 min | -0.5%

60 columns, 100 million rows, 67 32M files:

master | branch | improvement
-------|---------|-----------
0.55 min | 0.55 min | 0%

## How was this patch tested?

- sql unit tests

- pyspark-sql tests

Closes #22188 from bersprockets/SPARK-25164.

Authored-by: Bruce Robbins <bersprockets@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to show RDD/relation names in RDD/Hive table scan nodes.

This change makes these names show up in the web UI and explain results.

For example:

```

scala> sql("CREATE TABLE t(c1 int) USING hive")

scala> sql("INSERT INTO t VALUES(1)")

scala> spark.table("t").explain()

== Physical Plan ==

Scan hive default.t [c1#8], HiveTableRelation `default`.`t`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [c1#8]

^^^^^^^^^^^

```

<img width="212" alt="spark-pr-hive" src="https://user-images.githubusercontent.com/692303/44501013-51264c80-a6c6-11e8-94f8-0704aee83bb6.png">

Closes #20226

## How was this patch tested?

Added tests in `DataFrameSuite`, `DatasetSuite`, and `HiveExplainSuite`

Closes #22153 from maropu/pr20226.

Lead-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Co-authored-by: Tejas Patil <tejasp@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

They depend on internal Expression APIs. Let's see how far we can get without it.

## How was this patch tested?

Just some code removal. There are no existing tests as far as I can tell, so it's easy to remove.

Closes #22185 from rxin/SPARK-25127.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The race condition that caused test failure is between 2 threads.

- The MicroBatchExecution thread that processes inputs to produce answers and then generates progress events.

- The test thread that generates some input data, checks the answer and then verifies that the query generated a progress event.

The synchronization structure between these threads is as follows

1. MicroBatchExecution thread, in every batch, does the following in order.

   a. Processes batch input to generate answer.

   b. Signals `awaitProgressLockCondition` to wake up threads waiting for progress using `awaitOffset`.

   c. Generates progress event.

2. Test execution thread

   a. Calls `awaitOffset` to wait for progress, which waits on `awaitProgressLockCondition`.

   b. As soon as `awaitProgressLockCondition` is signaled, it moves on in the test to check the answer.

   c. Finally, it verifies the last generated progress event.

What can happen is the following sequence of events: 2a -> 1a -> 1b -> 2b -> 2c -> 1c.

In other words, the progress event may be generated after the test tries to verify it.

The solution has two steps.

1. Signal the waiting thread after the progress event has been generated, that is, after `finishTrigger()`.

2. Increase the timeout of `awaitProgressLockCondition.await(100 ms)` to a large value.

The latter ensures that the test thread keeps waiting on `awaitProgressLockCondition` until the MicroBatchExecution thread explicitly signals it. With the existing small timeout of 100 ms, the following sequence can occur:

- MicroBatchExecution thread updates committed offsets

- Test thread waiting on `awaitProgressLockCondition` accidentally times out after 100 ms, finds that the committed offsets have been updated, therefore returns from `awaitOffset` and moves on to the progress event tests.

- MicroBatchExecution thread then generates progress event and signals. But the test thread has already attempted to verify the event and failed.

Increasing the timeout to a large value (e.g., `streamingTimeoutMs = 60 seconds`, similar to `awaitInitialization`) also avoids this type of race condition.

## How was this patch tested?

Ran locally many times.

Closes #22182 from tdas/SPARK-25184.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

Improve the data source v2 API according to the [design doc](https://docs.google.com/document/d/1DDXCTCrup4bKWByTalkXWgavcPdvur8a4eEu8x1BzPM/edit?usp=sharing)

Summary of the changes:

1. Rename `ReadSupport` -> `DataSourceReader` -> `InputPartition` -> `InputPartitionReader` to `BatchReadSupportProvider` -> `BatchReadSupport` -> `InputPartition`/`PartitionReaderFactory` -> `PartitionReader`. Similar renaming also happens in the streaming and write APIs.

2. Create `ScanConfig` to store query-specific information such as the operator pushdown result, streaming offsets, etc. This makes batch and streaming `ReadSupport` (previously named `DataSourceReader`) immutable. All other methods take `ScanConfig` as input, which implies that applying operator pushdown and getting streaming offsets happen before everything else (getting input partitions, reporting statistics, etc.).

3. Separate `InputPartition` into `InputPartition` and `PartitionReaderFactory`. This is a natural separation: data splitting and reading are orthogonal, and we should not mix them in one interface. This also makes the naming consistent between the read and write APIs: `PartitionReaderFactory` vs `DataWriterFactory`.

4. Separate the batch and streaming interfaces. Sometimes it's painful to force the streaming interface to extend the batch interface, as we may need to override some batch methods to return false, or even leak the streaming concept to the batch API (e.g. `DataWriterFactory#createWriter(partitionId, taskId, epochId)`).
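To make the separation in items 2 and 3 concrete, here is a rough Python analogue. It is only a sketch of the shape of the design: the class names mirror the interfaces above, but the method names (`new_scan_config`, `plan_input_partitions`, `create_reader_factory`, `create_reader`) and the generated rows are assumptions made for this example, not the actual Java API.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass(frozen=True)
class ScanConfig:
    """Query-specific state (here only the pruned columns)."""
    required_columns: Tuple[str, ...]


@dataclass(frozen=True)
class InputPartition:
    """Describes a split of the data; it knows nothing about reading."""
    start: int
    end: int


class PartitionReaderFactory:
    """Creates readers for partitions; it knows nothing about splitting."""

    def __init__(self, config: ScanConfig):
        self.config = config

    def create_reader(self, partition: InputPartition) -> Iterator[tuple]:
        for i in range(partition.start, partition.end):
            row = {"id": i, "square": i * i}  # made-up data for the sketch
            yield tuple(row[c] for c in self.config.required_columns)


class BatchReadSupport:
    """Holds no per-query state; every method takes the ScanConfig."""

    def __init__(self, num_rows: int, num_partitions: int):
        self.num_rows = num_rows
        self.num_partitions = num_partitions

    def new_scan_config(self, required_columns) -> ScanConfig:
        return ScanConfig(tuple(required_columns))

    def plan_input_partitions(self, config: ScanConfig) -> List[InputPartition]:
        step = -(-self.num_rows // self.num_partitions)  # ceiling division
        return [InputPartition(s, min(s + step, self.num_rows))
                for s in range(0, self.num_rows, step)]

    def create_reader_factory(self, config: ScanConfig) -> PartitionReaderFactory:
        return PartitionReaderFactory(config)


source = BatchReadSupport(num_rows=10, num_partitions=3)
config = source.new_scan_config(["square"])
factory = source.create_reader_factory(config)
rows = [row for p in source.plan_input_partitions(config)
        for row in factory.create_reader(p)]
```

Because the pushdown result lives in `ScanConfig`, the same `source` object can serve several queries with different configs without being mutated.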

Some follow-ups we should do after this PR (tracked by https://issues.apache.org/jira/browse/SPARK-25186 ):

1. Revisit the life cycle of `ReadSupport` instances. Currently I keep it the same as the previous `DataSourceReader`, i.e. the life cycle is bound to the batch/stream query. This fits streaming very well but may not be perfect for batch sources. We can also consider letting `ReadSupport.newScanConfigBuilder` take `DataSourceOptions` as a parameter if we decide to change the life cycle.

2. Add `WriteConfig`. This is similar to `ScanConfig` and makes the write API more flexible. But it's only needed when we add the `replaceWhere` support, and it needs to change the streaming execution engine for this new concept, which I think is better to be done in another PR.

3. Refine the documentation. This PR adds/changes a lot of documentation, and it's very likely that some people have better ideas.

4. Figure out the life cycle of `CustomMetrics`. It looks to me that it should be bound to a `ScanConfig`, but we need to change `ProgressReporter` to get the `ScanConfig`. Better to be done in another PR.

5. Better operator pushdown API. This PR keeps the pushdown API as it was, i.e. using the `SupportsPushdownXYZ` traits. We can design a better API using the builder pattern, but this is a complicated design and deserves an individual JIRA ticket and design doc.

6. Improve the continuous streaming engine to only create a new `ScanConfig` when re-configuring.

7. Remove `SupportsPushdownCatalystFilter`. This is actually not a must-have for file source, we can change the hive partition pruning to use the public `Filter`.

## How was this patch tested?

existing tests.

Closes #22009 from cloud-fan/redesign.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This fixes a perf regression caused by https://github.com/apache/spark/pull/21376 .

We should not use `RDD#toLocalIterator`, which triggers one Spark job per RDD partition. This is very bad for RDDs with a lot of small partitions.

To fix it, this PR introduces a way to access SQLConf in the scheduler event loop thread, so that we don't need to use `RDD#toLocalIterator` anymore in `JsonInferSchema`.

## How was this patch tested?

a new test

Closes #22152 from cloud-fan/conf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR fixes bugs that occur when expression codegen fails; we need to catch `java.util.concurrent.ExecutionException` instead of `InternalCompilerException` and `CompileException`. This handling is the same as the `WholeStageCodegenExec` one: 60af2501e1/sql/core/src/main/scala/org/apache/spark/sql/execution/WholeStageCodegenExec.scala (L585)

## How was this patch tested?

Added tests in `CodeGeneratorWithInterpretedFallbackSuite`

Closes #22154 from maropu/SPARK-25140.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Two back-to-back PRs implicitly conflicted: one PR removed an existing import that the other PR needed. This did not cause an explicit merge conflict because the import already existed but was not used.

https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Compile/job/spark-master-compile-maven-hadoop-2.7/8226/consoleFull

```
[info] Compiling 342 Scala sources and 97 Java sources to /home/jenkins/workspace/spark-master-compile-maven-hadoop-2.7/sql/core/target/scala-2.11/classes...
[warn] /home/jenkins/workspace/spark-master-compile-maven-hadoop-2.7/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala:128: value ENABLE_JOB_SUMMARY in object ParquetOutputFormat is deprecated: see corresponding Javadoc for more information.
[warn] && conf.get(ParquetOutputFormat.ENABLE_JOB_SUMMARY) == null) {
[warn] ^
[error] /home/jenkins/workspace/spark-master-compile-maven-hadoop-2.7/sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/statefulOperators.scala:95: value asJava is not a member of scala.collection.immutable.Map[String,Long]
[error] new java.util.HashMap(customMetrics.mapValues(long2Long).asJava)
[error] ^
[warn] one warning found
[error] one error found
[error] Compile failed at Aug 21, 2018 4:04:35 PM [12.827s]
```

## How was this patch tested?

It compiles!

Closes #22175 from tdas/fix-build.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

This patch exposes the estimation of size of cache (loadedMaps) in HDFSBackedStateStoreProvider as a custom metric of StateStore.

The rationale for the patch is that state backed by HDFSBackedStateStoreProvider consumes more memory than the number reported in the query status, because multiple versions of the state are cached. The memory footprint can be much larger than the query status reports when the state store receives a lot of updates. Shallow-copying a map incurs only a small amount of additional memory for the map entries and references, because row objects are still shared across versions. If there are many updates between batches, fewer row objects are shared and more row objects exist in memory, consuming much more memory than expected.

Although HDFSBackedStateStore refers to loadedMaps in HDFSBackedStateStoreProvider directly, there is only one `StateStoreWriter` that refers to a given StateStoreProvider, so the value is neither exposed nor aggregated multiple times. Current state metrics are safe to aggregate for the same reason.
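The sharing effect described above can be seen in a small Python sketch. It uses plain dicts as a stand-in for the cached state versions; `distinct_row_objects` is a hypothetical helper written for this example and has nothing to do with the actual metric implementation.

```python
def distinct_row_objects(versions):
    """Count the row objects that are actually alive across cached versions."""
    return len({id(row) for version in versions for row in version.values()})


# Version 1 of the state: 1000 keys, each pointing to a row object.
v1 = {key: [key, 0] for key in range(1000)}

# Few updates: shallow-copy the map and replace one row.
# The other 999 row objects are shared between the two versions.
v2_few_updates = dict(v1)
v2_few_updates[0] = [0, 1]

# Many updates: every row is replaced, so nothing is shared.
v2_many_updates = {key: [key, 1] for key in v1}

shared_case = distinct_row_objects([v1, v2_few_updates])
unshared_case = distinct_row_objects([v1, v2_many_updates])
```

Both cases cache two versions of a 1000-key map, yet the second keeps almost twice as many row objects in memory, which is the gap the new metric is meant to expose.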

## How was this patch tested?

Tested manually. Below is a screenshot of the UI page reflecting the patch:

<img width="601" alt="screen shot 2018-06-05 at 10 16 16 pm" src="https://user-images.githubusercontent.com/1317309/40978481-b46ad324-690e-11e8-9b0f-e80528612a62.png">

Please refer to "estimated size of states cache in provider total" as well as "count of versions in state cache in provider".

Closes #21469 from HeartSaVioR/SPARK-24441.

Authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

In https://issues.apache.org/jira/browse/SPARK-24924, the data source provider com.databricks.spark.avro is mapped to the new package org.apache.spark.sql.avro.

As per the discussion in the [Jira](https://issues.apache.org/jira/browse/SPARK-24924) and PR #22119, we should make the mapping configurable.

This PR also improves the error message shown when the Avro or Kafka data source is not found.

## How was this patch tested?

Unit test

Closes #22133 from gengliangwang/configurable_avro_mapping.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This patch proposes a new flag option for stateful aggregation: removing redundant key data from the value.

With the new option enabled, performance is similar to the current behavior, and less memory is used for state, depending on the key/value fields of the state operator.

Please refer to the link below for the detailed perf test results:

https://issues.apache.org/jira/browse/SPARK-24763?focusedCommentId=16536539&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16536539

Since the state between enabling the option and disabling the option is not compatible, the option is set to 'disable' by default (to ensure backward compatibility), and OffsetSeqMetadata would prevent modifying the option after executing query.

## How was this patch tested?

Modified unit tests to cover both disabling and enabling the option.

Also did manual tests to see whether the proposed patch improves state memory usage.

Closes #21733 from HeartSaVioR/SPARK-24763.

Authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

See https://github.com/apache/spark/pull/22079#discussion_r209705612. With the current code, two unequal objects can be considered equal if their long values are separated by Int.MaxValue.

This PR fixes the issue.
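A hedged illustration of this kind of bug, in Python: the exact expression in `RecordBinaryComparator` is not shown in this description, so the sketch ASSUMES that the old comparison reduced the difference of the two long values modulo Int.MaxValue. `modulo_compare`, `direct_compare`, and `java_rem` are hypothetical names, and Python integers do not overflow, so long overflow is not modeled.

```python
INT_MAX = 2**31 - 1  # Int.MaxValue


def java_rem(x, y):
    """Remainder that takes the sign of the dividend, like % on JVM longs."""
    r = abs(x) % abs(y)
    return r if x >= 0 else -r


def modulo_compare(left, right):
    # ASSUMED shape of the problematic comparison: the difference is reduced
    # modulo Int.MaxValue, so a difference that is a multiple of it becomes 0.
    return java_rem(left - right, INT_MAX)


def direct_compare(left, right):
    # Compare the values themselves instead of their difference.
    return (left > right) - (left < right)


a, b = 11, 11 + INT_MAX
buggy = modulo_compare(a, b)
fixed = direct_compare(a, b)
```

Under that assumption, `a` and `b` differ but the modulo-based comparison reports them as equal, while the direct comparison orders them correctly.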

## How was this patch tested?

Add new test cases in `RecordBinaryComparatorSuite`.

Closes #22101 from jiangxb1987/fix-rbc.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Spark SQL returns NULL for a column whose Hive metastore schema and Parquet schema are in different letter cases, regardless of whether `spark.sql.caseSensitive` is set to true or false. This PR adds case-insensitive field resolution for ParquetFileFormat.

* Do case-insensitive resolution only if Spark is in case-insensitive mode.

* Field resolution should fail if there is ambiguity, i.e. more than one field is matched.
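The two rules above can be sketched in a few lines of Python. This is an illustration of the resolution logic only; `resolve_field` is a hypothetical helper, and returning `None` for a missing field is a choice made for this example.

```python
def resolve_field(requested, parquet_fields, case_sensitive):
    """Return the Parquet field name matching `requested`, or None if absent."""
    if case_sensitive:
        return requested if requested in parquet_fields else None
    # Case-insensitive mode: compare lower-cased names.
    matches = [f for f in parquet_fields if f.lower() == requested.lower()]
    if len(matches) > 1:
        # Ambiguity must fail instead of silently picking one field.
        raise ValueError(
            f"Found duplicate field(s) for {requested!r}: {matches}")
    return matches[0] if matches else None


found = resolve_field("id", ["ID", "name"], case_sensitive=False)
missing = resolve_field("id", ["ID", "name"], case_sensitive=True)
```

Here `found` is the differently-cased Parquet field, while the case-sensitive lookup finds nothing; a schema containing both `ID` and `id` raises in case-insensitive mode.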

## How was this patch tested?

Unit tests added.

Closes #22148 from seancxmao/SPARK-25132-Parquet.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When column pruning is turned on, CSV headers should be checked only against the fields in requiredSchema, not dataSchema, because column pruning means that only requiredSchema is read.

## How was this patch tested?

Added 2 unit tests in which column pruning is turned on/off and CSV headers are checked against the schema.


Closes #22123 from koertkuipers/feat-csv-column-pruning-and-check-header.

Authored-by: Koert Kuipers <koert@tresata.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

[SPARK-25144](https://issues.apache.org/jira/browse/SPARK-25144) reports memory leaks on Apache Spark 2.0.2 ~ 2.3.2-RC5. The bug is already fixed via #21738 as a part of SPARK-21743. This PR only adds a test case to prevent any future regression.

```scala
scala> case class Foo(bar: Option[String])
scala> val ds = List(Foo(Some("bar"))).toDS
scala> val result = ds.flatMap(_.bar).distinct
scala> result.rdd.isEmpty
18/08/19 23:01:54 WARN Executor: Managed memory leak detected; size = 8650752 bytes, TID = 125
res0: Boolean = false
```

## How was this patch tested?

Passes Jenkins with a newly added test case.

Closes #22155 from dongjoon-hyun/SPARK-25144-2.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In this PR, I propose to skip invoking the CSV/JSON parser for each line when the required schema is empty. The added benchmarks for `count()` show a performance improvement of up to **3.5 times**.

Before:

```
Count a dataset with 10 columns:      Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)
--------------------------------------------------------------------------------------
JSON count()                                7676 / 7715          1.3         767.6
CSV count()                                 3309 / 3363          3.0         330.9
```

After:

```
Count a dataset with 10 columns:      Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)
--------------------------------------------------------------------------------------
JSON count()                                2104 / 2156          4.8         210.4
CSV count()                                 2332 / 2386          4.3         233.2
```
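The idea behind the speedup can be shown with a minimal Python sketch. It is a toy model using the standard `json` module, not the actual Spark code path: `scan` and `parse` are hypothetical names, and the counter exists only to make the skipped work visible.

```python
import json

parse_calls = 0


def parse(line):
    """Stand-in for the per-line CSV/JSON parser; counts its invocations."""
    global parse_calls
    parse_calls += 1
    return json.loads(line)


def scan(lines, required_columns):
    for line in lines:
        if not required_columns:
            # Empty required schema (e.g. count()): emit an empty row
            # without touching the parser at all.
            yield ()
        else:
            record = parse(line)
            yield tuple(record.get(c) for c in required_columns)


lines = ['{"a": 1, "b": 2}', '{"a": 3, "b": 4}', '{"a": 5, "b": 6}']

row_count = sum(1 for _ in scan(lines, []))
calls_for_count = parse_calls

projected = list(scan(lines, ["a"]))
calls_for_projection = parse_calls - calls_for_count
```

One consequence of skipping the parser in this sketch is that malformed lines are still counted, since they are never inspected.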

## How was this patch tested?

It was tested by `CSVSuite` and `JSONSuite` as well as on added benchmarks.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Author: Maxim Gekk <max.gekk@gmail.com>

Closes #21909 from MaxGekk/empty-schema-optimization.

## What changes were proposed in this pull request?

This pr adds `transform_values` function which applies the function to each entry of the map and transforms the values.

```sql
> SELECT transform_values(map(array(1, 2, 3), array(1, 2, 3)), (k,v) -> v + 1);
map(1->2, 2->3, 3->4)
> SELECT transform_values(map(array(1, 2, 3), array(1, 2, 3)), (k,v) -> k + v);
map(1->2, 2->4, 3->6)
```
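For readers who want the semantics spelled out, here is a rough Python equivalent of the two queries above. It is a sketch over plain dicts, not the Catalyst implementation.

```python
def transform_values(mapping, func):
    """Apply func(key, value) to each entry; keys are kept, values replaced."""
    return {k: func(k, v) for k, v in mapping.items()}


m = dict(zip([1, 2, 3], [1, 2, 3]))
plus_one = transform_values(m, lambda k, v: v + 1)
key_plus_value = transform_values(m, lambda k, v: k + v)
```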

## How was this patch tested?

New tests added to:

- `DataFrameFunctionsSuite`

- `HigherOrderFunctionsSuite`

- `SQLQueryTestSuite`

Closes #22045 from codeatri/SPARK-23940.

Authored-by: codeatri <nehapatil6@gmail.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Merges the two given arrays, element-wise, into a single array using the given function. If one array is shorter, nulls are appended at the end to match the length of the longer array before applying the function:

```
SELECT zip_with(ARRAY[1, 3, 5], ARRAY['a', 'b', 'c'], (x, y) -> (y, x)); -- [ROW('a', 1), ROW('b', 3), ROW('c', 5)]
SELECT zip_with(ARRAY[1, 2], ARRAY[3, 4], (x, y) -> x + y); -- [4, 6]
SELECT zip_with(ARRAY['a', 'b', 'c'], ARRAY['d', 'e', 'f'], (x, y) -> concat(x, y)); -- ['ad', 'be', 'cf']
SELECT zip_with(ARRAY['a'], ARRAY['d', null, 'f'], (x, y) -> coalesce(x, y)); -- ['a', null, 'f']
```
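The padding rule is easy to miss, so here is a small Python model of the same semantics, with `None` playing the role of SQL null. The `coalesce` helper is defined here only for the last example.

```python
from itertools import zip_longest


def zip_with(left, right, func):
    """Pad the shorter list with None, then combine the lists element-wise."""
    return [func(x, y) for x, y in zip_longest(left, right, fillvalue=None)]


def coalesce(*values):
    """Return the first non-None argument, or None if there is none."""
    return next((v for v in values if v is not None), None)


pairs = zip_with([1, 3, 5], ["a", "b", "c"], lambda x, y: (y, x))
sums = zip_with([1, 2], [3, 4], lambda x, y: x + y)
padded = zip_with(["a"], ["d", None, "f"], coalesce)
```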

## How was this patch tested?

Added tests

Closes #22031 from techaddict/SPARK-23932.

Authored-by: Sandeep Singh <sandeep@techaddict.me>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

This pr adds transform_keys function which applies the function to each entry of the map and transforms the keys.

```sql
> SELECT transform_keys(map(array(1, 2, 3), array(1, 2, 3)), (k,v) -> k + 1);
map(2->1, 3->2, 4->3)
> SELECT transform_keys(map(array(1, 2, 3), array(1, 2, 3)), (k,v) -> k + v);
map(2->1, 4->2, 6->3)
```
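A rough Python equivalent of the two queries above, again as a sketch over plain dicts. If the function maps two entries to the same key, the dict keeps the later one; that is a property of this sketch and says nothing about how Spark handles duplicate keys.

```python
def transform_keys(mapping, func):
    """Apply func(key, value) to each entry; values are kept, keys replaced."""
    return {func(k, v): v for k, v in mapping.items()}


m = dict(zip([1, 2, 3], [1, 2, 3]))
shifted = transform_keys(m, lambda k, v: k + 1)
key_plus_value = transform_keys(m, lambda k, v: k + v)
```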

## How was this patch tested?

Added tests.

Closes #22013 from codeatri/SPARK-23939.

Authored-by: codeatri <nehapatil6@gmail.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Correct the javadoc for expm1() function.

## How was this patch tested?

None. It is a minor issue.

Closes #22115 from bomeng/25082.

Authored-by: Bo Meng <bo.meng@jd.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Upgrade Apache Arrow to 0.10.0

Version 0.10.0 has a number of bug fixes and improvements with the following pertaining directly to usage in Spark:

* Allow for adding BinaryType support ARROW-2141

* Bug fix related to array serialization ARROW-1973

* Python2 str will be made into an Arrow string instead of bytes ARROW-2101

* Python bytearrays are supported as input to pyarrow ARROW-2141

* Java has common interface for reset to cleanup complex vectors in Spark ArrowWriter ARROW-1962

* Cleanup pyarrow type equality checks ARROW-2423

* ArrowStreamWriter should not hold references to ArrowBlocks ARROW-2632, ARROW-2645

* Improved low level handling of messages for RecordBatch ARROW-2704

## How was this patch tested?

existing tests

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21939 from BryanCutler/arrow-upgrade-010.

## What changes were proposed in this pull request?

This PR adds a new SQL function called `map_zip_with`. It merges the two given maps into a single map by applying the given function to the pair of values with the same key.
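As a rough model of the semantics, consider the following Python sketch. Two details are assumptions that the description above does not state: the function receives the key together with both values, and a key present in only one map is paired with `None` on the other side.

```python
def map_zip_with(left, right, func):
    """Merge two dicts by key, calling func(key, left_value, right_value)."""
    # Keys of the left map first, then keys found only in the right map.
    keys = list(left) + [k for k in right if k not in left]
    # ASSUMPTION: a key missing from one side contributes None for that side.
    return {k: func(k, left.get(k), right.get(k)) for k in keys}


merged = map_zip_with(
    {1: "a", 2: "b"},
    {1: "x", 3: "z"},
    lambda k, v1, v2: (v1, v2),
)
```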

## How was this patch tested?

Added new tests into:

- DataFrameFunctionsSuite.scala

- HigherOrderFunctionsSuite.scala

Closes #22017 from mn-mikke/SPARK-23938.

Authored-by: Marek Novotny <mn.mikke@gmail.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

This PR fixes an example for `to_json` in the doc and function description.

- http://spark.apache.org/docs/2.3.0/api/sql/#to_json

- `describe function extended`

## How was this patch tested?

Passes Jenkins with the updated test.

Closes #22096 from dongjoon-hyun/minor_json.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`ANALYZE TABLE ... PARTITION(...) COMPUTE STATISTICS` can fail with an NPE if a partition column contains a NULL value.

The PR avoids the NPE, replacing the `NULL` values with the default partition placeholder.

## How was this patch tested?

added UT

Closes #22036 from mgaido91/SPARK-25028.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up pr of #21954 to address comments.

- Rename ambiguous name `inputs` to `arguments`.

- Add argument type check and remove hacky workaround.

- Address other small comments.

## How was this patch tested?

Existing tests and some additional tests.

Closes #22075 from ueshin/issues/SPARK-23908/fup1.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The PR removes a restriction on the element types of array type that exists in `from_json` for the root type. Currently, the function can handle only arrays of structs; even arrays of primitive types are disallowed. The PR allows arrays of any type currently supported by the JSON datasource. Here is an example of an array of a primitive type:

```
scala> import org.apache.spark.sql.functions._
scala> import org.apache.spark.sql.types._
scala> val df = Seq("[1, 2, 3]").toDF("a")
scala> val schema = new ArrayType(IntegerType, false)
scala> val arr = df.select(from_json($"a", schema))
scala> arr.printSchema
root
 |-- jsontostructs(a): array (nullable = true)
 |    |-- element: integer (containsNull = true)
```

and the result of converting the JSON string to the `ArrayType`:

```
scala> arr.show
+----------------+
|jsontostructs(a)|
+----------------+
|       [1, 2, 3]|
+----------------+
```

## How was this patch tested?

I added a few positive and negative tests:

- array of primitive types

- array of arrays

- array of structs

- array of maps

Closes #21439 from MaxGekk/from_json-array.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Support the Avro logical decimal type:

https://avro.apache.org/docs/1.8.2/spec.html#Decimal

## How was this patch tested?

Unit test

Closes #22037 from gengliangwang/avro_decimal.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fix scaladoc in Column

## How was this patch tested?

None

Closes #22069 from sadhen/fix_doc_minor.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

This PR adds codes to ``"Test `spark.sql.parquet.compression.codec` config"`` in `ParquetCompressionCodecPrecedenceSuite`.

## How was this patch tested?

Existing UTs

Closes #22083 from kiszk/ParquetCompressionCodecPrecedenceSuite.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Fixing typos is sometimes very hard. It's not so easy to visually review them. Recently, I discovered a very useful tool for it, [misspell](https://github.com/client9/misspell).

This pull request fixes minor typos detected by [misspell](https://github.com/client9/misspell) except for the false positives. If you would like me to work on other files as well, let me know.

## How was this patch tested?

### before

```

$ misspell . | grep -v '.js'

R/pkg/R/SQLContext.R:354:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:424:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:445:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:495:43: "definiton" is a misspelling of "definition"

NOTICE-binary:454:16: "containd" is a misspelling of "contained"

R/pkg/R/context.R:46:43: "definiton" is a misspelling of "definition"

R/pkg/R/context.R:74:43: "definiton" is a misspelling of "definition"

R/pkg/R/DataFrame.R:591:48: "persistance" is a misspelling of "persistence"

R/pkg/R/streaming.R:166:44: "occured" is a misspelling of "occurred"

R/pkg/inst/worker/worker.R:65:22: "ouput" is a misspelling of "output"

R/pkg/tests/fulltests/test_utils.R:106:25: "environemnt" is a misspelling of "environment"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/InMemoryStoreSuite.java:38:39: "existant" is a misspelling of "existent"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/LevelDBSuite.java:83:39: "existant" is a misspelling of "existent"

common/network-common/src/main/java/org/apache/spark/network/crypto/TransportCipher.java:243:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:234:19: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:238:63: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:244:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:276:39: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

common/unsafe/src/test/scala/org/apache/spark/unsafe/types/UTF8StringPropertyCheckSuite.scala:195:15: "orgin" is a misspelling of "origin"

core/src/main/scala/org/apache/spark/api/python/PythonRDD.scala:621:39: "gauranteed" is a misspelling of "guaranteed"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/main/scala/org/apache/spark/storage/DiskStore.scala:282:18: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/util/ListenerBus.scala:64:17: "overriden" is a misspelling of "overridden"

core/src/test/scala/org/apache/spark/ShuffleSuite.scala:211:7: "substracted" is a misspelling of "subtracted"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:2468:84: "truely" is a misspelling of "truly"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:25:18: "persistance" is a misspelling of "persistence"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:26:69: "persistance" is a misspelling of "persistence"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

dev/run-pip-tests:55:28: "enviroments" is a misspelling of "environments"

dev/run-pip-tests:91:37: "virutal" is a misspelling of "virtual"

dev/merge_spark_pr.py:377:72: "accross" is a misspelling of "across"

dev/merge_spark_pr.py:378:66: "accross" is a misspelling of "across"

dev/run-pip-tests:126:25: "enviroments" is a misspelling of "environments"

docs/configuration.md:1830:82: "overriden" is a misspelling of "overridden"

docs/structured-streaming-programming-guide.md:525:45: "processs" is a misspelling of "processes"

docs/structured-streaming-programming-guide.md:1165:61: "BETWEN" is a misspelling of "BETWEEN"

docs/sql-programming-guide.md:1891:810: "behaivor" is a misspelling of "behavior"

examples/src/main/python/sql/arrow.py:98:8: "substract" is a misspelling of "subtract"

examples/src/main/python/sql/arrow.py:103:27: "substract" is a misspelling of "subtract"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/clustering/StreamingKMeans.scala:230:24: "inital" is a misspelling of "initial"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

mllib/src/test/scala/org/apache/spark/ml/clustering/KMeansSuite.scala:237:26: "descripiton" is a misspelling of "descriptions"

python/pyspark/find_spark_home.py:30:13: "enviroment" is a misspelling of "environment"

python/pyspark/context.py:937:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:938:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:939:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:940:12: "supress" is a misspelling of "suppress"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:713:8: "probabilty" is a misspelling of "probability"

python/pyspark/ml/clustering.py:1038:8: "Currenlty" is a misspelling of "Currently"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/ml/regression.py:1378:20: "paramter" is a misspelling of "parameter"

python/pyspark/mllib/stat/_statistics.py:262:8: "probabilty" is a misspelling of "probability"

python/pyspark/rdd.py:1363:32: "paramter" is a misspelling of "parameter"

python/pyspark/streaming/tests.py:825:42: "retuns" is a misspelling of "returns"

python/pyspark/sql/tests.py:768:29: "initalization" is a misspelling of "initialization"

python/pyspark/sql/tests.py:3616:31: "initalize" is a misspelling of "initialize"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerBackendUtil.scala:120:39: "arbitary" is a misspelling of "arbitrary"

resource-managers/mesos/src/test/scala/org/apache/spark/deploy/mesos/MesosClusterDispatcherArgumentsSuite.scala:26:45: "sucessfully" is a misspelling of "successfully"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerUtils.scala:358:27: "constaints" is a misspelling of "constraints"

resource-managers/yarn/src/test/scala/org/apache/spark/deploy/yarn/YarnClusterSuite.scala:111:24: "senstive" is a misspelling of "sensitive"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/SessionCatalog.scala:1063:5: "overwirte" is a misspelling of "overwrite"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala:1348:17: "compatability" is a misspelling of "compatibility"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/basicLogicalOperators.scala:77:36: "paramter" is a misspelling of "parameter"

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:1374:22: "precendence" is a misspelling of "precedence"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:238:27: "unnecassary" is a misspelling of "unnecessary"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ConditionalExpressionSuite.scala:212:17: "whn" is a misspelling of "when"

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/StreamingSymmetricHashJoinHelper.scala:147:60: "timestmap" is a misspelling of "timestamp"

sql/core/src/test/scala/org/apache/spark/sql/TPCDSQuerySuite.scala:150:45: "precentage" is a misspelling of "percentage"

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVInferSchemaSuite.scala:135:29: "infered" is a misspelling of "inferred"

sql/hive/src/test/resources/golden/udf_instr-1-2e76f819563dbaba4beb51e3a130b922:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_instr-2-32da357fc754badd6e3898dcc8989182:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-1-6e41693c9c6dceea4d7fab4c02884e4e:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-2-d9b5934457931447874d6bb7c13de478:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:9:79: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:13:110: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/annotate_stats_join.q:46:105: "distint" is a misspelling of "distinct"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/auto_sortmerge_join_11.q:29:3: "Currenly" is a misspelling of "Currently"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/avro_partitioned.q:72:15: "existant" is a misspelling of "existent"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/decimal_udf.q:25:3: "substraction" is a misspelling of "subtraction"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby2_map_multi_distinct.q:16:51: "funtion" is a misspelling of "function"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby_sort_8.q:15:30: "issueing" is a misspelling of "issuing"

sql/hive/src/test/scala/org/apache/spark/sql/sources/HadoopFsRelationTest.scala:669:52: "wiht" is a misspelling of "with"

sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/session/HiveSessionImpl.java:474:9: "Refering" is a misspelling of "Referring"

```

### after

```

$ misspell . | grep -v '.js'

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

```

Closes #22070 from seratch/fix-typo.

Authored-by: Kazuhiro Sera <seratch@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

"distribute by" on multiple columns (wrapped in parentheses) may lead to a codegen issue.

A simple way to reproduce:

```scala

val df = spark.range(1000)

val columns = (0 until 400).map{ i => s"id as id$i" }

val distributeExprs = (0 until 100).map(c => s"id$c").mkString(",")

df.selectExpr(columns : _*).createTempView("test")

spark.sql(s"select * from test distribute by ($distributeExprs)").count()

```

## How was this patch tested?

Added a unit test.

Closes #22066 from yucai/SPARK-25084.

Authored-by: yucai <yyu1@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Parquet provides six compression codecs: "snappy", "gzip", "lzo", "lz4", "brotli", "zstd".

This PR adds the missing compression codecs: "lz4", "brotli", and "zstd".

## How was this patch tested?

N/A

Closes #22068 from 10110346/nosupportlz4.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

A logical `Limit` is performed physically by two operations: `LocalLimit` and `GlobalLimit`.

Most of the time, we gather all data into a single partition in order to run `GlobalLimit`. With a very large limit, shuffling the data causes performance issues and also reduces parallelism.

We can avoid shuffling into a single partition if we don't care about data ordering. This patch implements this idea by running a map stage during the global limit that collects the number of rows in each partition. Each partition then retrieves its share of the limited data locally, without any shuffling, to finish the global limit.

For example, suppose we have three partitions with (100, 100, 50) rows respectively. For a global limit of 100 rows, we may take (34, 33, 33) rows from each partition locally. After the global limit we still have three partitions.

If the data partitions have a certain ordering, we can't distribute the required rows evenly across partitions because that could change the data ordering. We can still avoid shuffling, though.
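
The two ways of splitting a limit described above can be sketched in plain Scala. This is only an illustration under stated assumptions, not the code in this patch: the object `LimitDistribution` and the functions `takeEvenly` and `takeInOrder` are hypothetical names, and the per-partition row counts are assumed to be already known from the map stage.

```scala
object LimitDistribution {
  // Hypothetical helper: split `limit` rows across partitions as evenly as
  // possible, never taking more rows than a partition actually holds.
  def takeEvenly(rowCounts: Seq[Long], limit: Long): Seq[Long] = {
    val taken = Array.fill(rowCounts.length)(0L)
    var remaining = math.min(limit, rowCounts.sum)
    while (remaining > 0) {
      // Partitions that still have rows we have not claimed yet.
      val open = rowCounts.indices.filter(i => taken(i) < rowCounts(i))
      val share = math.max(1L, remaining / open.length)
      for (i <- open) {
        if (remaining > 0) {
          val n = math.min(math.min(share, rowCounts(i) - taken(i)), remaining)
          taken(i) += n
          remaining -= n
        }
      }
    }
    taken.toSeq
  }

  // Hypothetical helper for ordered data: fill the limit from the first
  // partitions onward so the original ordering is preserved.
  def takeInOrder(rowCounts: Seq[Long], limit: Long): Seq[Long] = {
    var remaining = limit
    rowCounts.map { count =>
      val n = math.min(count, remaining)
      remaining -= n
      n
    }
  }

  def main(args: Array[String]): Unit = {
    println(takeEvenly(Seq(100L, 100L, 50L), 100L))
    println(takeInOrder(Seq(100L, 100L, 50L), 100L))
  }
}
```

For the (100, 100, 50) example with a limit of 100, `takeEvenly` yields (34, 33, 33) and `takeInOrder` yields (100, 0, 0). In both cases every partition only needs to know how many rows to keep, so no rows have to be moved between partitions.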

## How was this patch tested?

Jenkins tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #16677 from viirya/improve-global-limit-parallelism.

## What changes were proposed in this pull request?

Adds support for the text socket stream in Spark Structured Streaming "continuous" mode. This is roughly based on the idea of ContinuousMemoryStream, where the executor queries the data from the driver over an RPC endpoint.

This makes it possible to create a Structured Streaming continuous pipeline that ingests data via "nc" and to run the examples.

## How was this patch tested?

Unit tests, and ran the Spark examples in Structured Streaming continuous mode.

Closes #21199 from arunmahadevan/SPARK-24127.

Authored-by: Arun Mahadevan <arunm@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR adds the `exists` function, which tests whether a predicate holds for one or more elements in an array.

```sql

> SELECT exists(array(1, 2, 3), x -> x % 2 == 0);

true

```
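
For readers more familiar with Scala collections, the semantics of the query above resemble `Seq.exists`. The sketch below is only a plain-Scala analogue, not the Spark expression added by this PR; `ExistsSketch` and `existsIn` are hypothetical names, and any null handling in the SQL function is not modeled.

```scala
object ExistsSketch {
  // Plain-Scala analogue of `exists(array, x -> predicate)`:
  // true if the predicate holds for at least one element.
  def existsIn[A](xs: Seq[A])(p: A => Boolean): Boolean = xs.exists(p)

  def main(args: Array[String]): Unit = {
    println(existsIn(Seq(1, 2, 3))(x => x % 2 == 0)) // true: 2 is even
    println(existsIn(Seq(1, 3, 5))(x => x % 2 == 0)) // false: no even element
  }
}
```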

## How was this patch tested?

Added tests.

Closes #22052 from ueshin/issues/SPARK-25068/exists.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?