### What changes were proposed in this pull request?

IntelliJ IDEA flags line 425 in `MasterSuite` as an unused expression:

bfba7fadd2/core/src/test/scala/org/apache/spark/deploy/master/MasterSuite.scala (L421-L426)

If we merge lines 424 and 425 into one as:

```

System.getProperty("spark.ui.proxyBase") should startWith (s"$reverseProxyUrl/proxy/worker-")

```

this assertion will fail:

```

- master/worker web ui available behind front-end reverseProxy *** FAILED ***

The code passed to eventually never returned normally. Attempted 45 times over 5.091914027 seconds. Last failure message: "http://proxyhost:8080/path/to/spark" did not start with substring "http://proxyhost:8080/path/to/spark/proxy/worker-". (MasterSuite.scala:405)

```

`System.getProperty("spark.ui.proxyBase")` should be `reverseProxyUrl` because `Master#onStart` and `Worker#handleRegisterResponse` have not changed it.

So the main purpose of this PR is to fix the condition of this assertion.

### Why are the changes needed?

Bug fix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

- Pass the Jenkins or GitHub Action

- Manual test:

1. merge lines 424 and 425 in `MasterSuite` into one to eliminate the unused expression:

```

System.getProperty("spark.ui.proxyBase") should startWith (s"$reverseProxyUrl/proxy/worker-")

```

2. execute `mvn clean test -pl core -Dtest=none -DwildcardSuites=org.apache.spark.deploy.master.MasterSuite`

**Before**

```

- master/worker web ui available behind front-end reverseProxy *** FAILED ***

The code passed to eventually never returned normally. Attempted 45 times over 5.091914027 seconds. Last failure message: "http://proxyhost:8080/path/to/spark" did not start with substring "http://proxyhost:8080/path/to/spark/proxy/worker-". (MasterSuite.scala:405)

Run completed in 1 minute, 14 seconds.

Total number of tests run: 32

Suites: completed 2, aborted 0

Tests: succeeded 31, failed 1, canceled 0, ignored 0, pending 0

*** 1 TEST FAILED ***

```

**After**

```

Run completed in 1 minute, 11 seconds.

Total number of tests run: 32

Suites: completed 2, aborted 0

Tests: succeeded 32, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

Closes #32105 from LuciferYang/SPARK-35004.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

One of the main performance bottlenecks in query compilation is overly-generic tree transformation methods, namely `mapChildren` and `withNewChildren` (defined in `TreeNode`). These methods have an overly-generic implementation to iterate over the children and rely on reflection to create new instances. We have observed that, especially for queries with large query plans, a significant amount of CPU cycles are wasted in these methods. In this PR we make these methods more efficient, by delegating the iteration and instantiation to concrete node types. The benchmarks show that we can expect significant performance improvement in total query compilation time in queries with large query plans (from 30-80%) and about 20% on average.

#### Problem detail

The `mapChildren` method in `TreeNode` is overly generic and costly. To be more specific, this method:

- iterates over all the fields of a node using Scala’s product iterator. While the iteration is not reflection-based, thanks to the Scala compiler generating code for `Product`, we create many anonymous functions and visit many nested structures (recursive calls).

The anonymous functions (presumably compiled to Java anonymous inner classes) also show up quite high on the list in the object allocation profiles, so we are putting unnecessary pressure on GC here.

- does a lot of comparisons. For each element returned from the product iterator, we check whether it is a child (i.e., contained in the list of children) and, if so, transform it. We could avoid that by iterating over the children only, but the current implementation needs to gather all the fields (transforming only the children) so that it can instantiate the object using reflection.

- creates objects using reflection, by delegating to the `makeCopy` method, which is several orders of magnitude slower than using the constructor.

#### Solution

The proposed solution in this PR is rather straightforward: we rewrite the `mapChildren` method using the `children` and `withNewChildren` methods. The default `withNewChildren` method suffers from the same problems as `mapChildren`, so we need to make it more efficient by specializing it in concrete classes. Just as each concrete query plan node already defines its children, it should also define how it can be constructed given a new list of children. The implementation is quite simple in most cases: a one-liner, thanks to the `copy` method present in Scala case classes. Note that we cannot abstract over the `copy` method, because the compiler generates it for case classes only if no type higher in the hierarchy defines it. For most concrete nodes, the implementation of `withNewChildren` looks like this:

```

override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = copy(children = newChildren)

```

The current `withNewChildren` method has two properties that we should preserve:

- It returns the same instance if the provided children are the same as its children, i.e., it preserves referential equality.

- It copies tags and maintains the origin links when a new copy is created.

These properties are hard to enforce in each concrete node type implementation. Therefore, we propose a template method `withNewChildrenInternal` that concrete classes implement, and let the `withNewChildren` method take care of referential equality and copying:

```
override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = {
  if (childrenFastEquals(children, newChildren)) {
    this
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildrenInternal(newChildren)
      res.copyTagsFrom(this)
      res
    }
  }
}
```

With the refactoring done in a previous PR (https://github.com/apache/spark/pull/31932) most tree node types fall in one of the categories of `Leaf`, `Unary`, `Binary` or `Ternary`. These traits have a more efficient implementation for `mapChildren` and define a more specialized version of `withNewChildrenInternal` that avoids creating unnecessary lists. For example, the `mapChildren` method in `UnaryLike` is defined as follows:

```
override final def mapChildren(f: T => T): T = {
  val newChild = f(child)
  if (newChild fastEquals child) {
    this.asInstanceOf[T]
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildInternal(newChild)
      res.copyTagsFrom(this.asInstanceOf[T])
      res
    }
  }
}
```
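The split between `withNewChildren` and `withNewChildrenInternal` can be sketched on a toy tree. This is only an illustration under simplifying assumptions: `TreeSketch`, `Node`, `Lit` and `Add` are hypothetical names, and tags, origins and `fastEquals` are left out.

```scala
// Toy model of the template-method split; this is not Spark's TreeNode.
object TreeSketch {
  sealed trait Node {
    def children: Seq[Node]

    // Template method: handles the "nothing changed" fast path once for all node types,
    // so referential equality is preserved when the children are the same instances.
    final def withNewChildren(newChildren: Seq[Node]): Node = {
      val unchanged = children.length == newChildren.length &&
        children.zip(newChildren).forall { case (a, b) => a eq b }
      if (unchanged) this else withNewChildrenInternal(newChildren)
    }

    // Each concrete node rebuilds itself through its constructor, without reflection.
    protected def withNewChildrenInternal(newChildren: Seq[Node]): Node

    // Generic traversal expressed through `children` and `withNewChildren`.
    final def mapChildren(f: Node => Node): Node = withNewChildren(children.map(f))
  }

  final case class Lit(value: Int) extends Node {
    val children: Seq[Node] = Nil
    protected def withNewChildrenInternal(newChildren: Seq[Node]): Node = this
  }

  final case class Add(left: Node, right: Node) extends Node {
    def children: Seq[Node] = Seq(left, right)
    protected def withNewChildrenInternal(newChildren: Seq[Node]): Node =
      copy(left = newChildren(0), right = newChildren(1))
  }
}
```

In this sketch, mapping the identity function over `Add(Lit(1), Lit(2))` returns the very same instance, while a function that changes a child produces a new `Add` through `copy`.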

#### Results

With this PR, we have observed significant performance improvements in query compilation time, more specifically in the analysis and optimization phases. The table below shows the TPC-DS queries that had more than 25% speedup in compilation times. Biggest speedups are observed in queries with large query plans.

| Query | Speedup |
| ------------- | ------------- |
| q4 | 29% |
| q9 | 81% |
| q14a | 31% |
| q14b | 28% |
| q22 | 33% |
| q33 | 29% |
| q34 | 25% |
| q39 | 27% |
| q41 | 27% |
| q44 | 26% |
| q47 | 28% |
| q48 | 76% |
| q49 | 46% |
| q56 | 26% |
| q58 | 43% |
| q59 | 46% |
| q60 | 50% |
| q65 | 59% |
| q66 | 46% |
| q67 | 52% |
| q69 | 31% |
| q70 | 30% |
| q96 | 26% |
| q98 | 32% |

#### Binary incompatibility

Changing `withNewChildren` in `TreeNode` breaks the binary compatibility of code compiled against older versions of Spark, because all concrete `TreeNode` subclasses are now expected to implement the `withNewChildrenInternal` method. This is a problem, for example, when users write custom expressions. We still think this change is the right choice, since it forces all expressions newly added to Catalyst to implement the method efficiently and will prevent future regressions.

Please note that we have not completely removed the old implementation; instead, we renamed it to `legacyWithNewChildren`. This method helps the transition for now and will be removed in the future. There are expressions, such as `UpdateFields`, that have a complex way of defining children. Writing `withNewChildren` for them requires refactoring the expression. For now, these expressions use the old, slow method. A future PR will address these expressions.

### Does this PR introduce _any_ user-facing change?

This PR does not introduce user-facing changes but may break binary compatibility of code compiled against older versions. See the binary incompatibility section.

### How was this patch tested?

This PR is mainly a refactoring and passes existing tests.

Closes #32030 from dbaliafroozeh/ImprovedMapChildren.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR replaces `127.0.0.1` with `localhost`.

### Why are the changes needed?

- https://github.com/apache/spark/pull/32096#discussion_r610349269

- https://github.com/apache/spark/pull/32096#issuecomment-816442481

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

I didn't test it because it is a CI-specific issue. I will test it in the GitHub Actions build of this PR.

Closes #32102 from HyukjinKwon/SPARK-35002.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

Implement toString() and sql() methods for TRY_CAST

### Why are the changes needed?

The new expression should have a different name from `CAST` in SQL/String representation.

### Does this PR introduce _any_ user-facing change?

Yes, in the result of `explain()`, users can see try_cast if the new expression is used.

### How was this patch tested?

Unit tests.

Closes #32098 from gengliangwang/tryCastString.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Now that we merged the Koalas main code into the PySpark code base (#32036), we should port the Koalas DataFrame unit test to PySpark.

### Why are the changes needed?

Currently, the pandas-on-Spark modules are not tested at all. We should enable the DataFrame unit test first.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Enable the DataFrame unit test.

Closes #32083 from xinrong-databricks/port.test_dataframe.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR tries to fix the `java.net.BindException` when testing with Github Action:

```

[info] org.apache.spark.sql.kafka010.producer.InternalKafkaProducerPoolSuite *** ABORTED *** (282 milliseconds)

[info] java.net.BindException: Cannot assign requested address: Service 'sparkDriver' failed after 100 retries (on a random free port)! Consider explicitly setting the appropriate binding address for the service 'sparkDriver' (for example spark.driver.bindAddress for SparkDriver) to the correct binding address.

[info] at sun.nio.ch.Net.bind0(Native Method)

[info] at sun.nio.ch.Net.bind(Net.java:461)

[info] at sun.nio.ch.Net.bind(Net.java:453)

```

https://github.com/apache/spark/pull/32090/checks?check_run_id=2295418529

### Why are the changes needed?

Fix test framework.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Test by Github Action.

Closes #32096 from wangyum/SPARK_LOCAL_IP=localhost.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Close SparkContext after the Main method has finished, to allow SparkApplication on K8S to complete

### Why are the changes needed?

If `sparkContext.stop()` is not called explicitly, the Spark driver process does not terminate even after its Main method has completed. This behaviour differs from Spark on YARN, where stopping the SparkContext manually is not required. The problem appears to be the use of non-daemon threads, which prevent the driver JVM process from terminating.

So I have inserted code that closes sparkContext automatically.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manually on the production AWS EKS environment in my company.

Closes #32081 from kotlovs/close-spark-context-on-exit.

Authored-by: skotlov <skotlov@joom.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Remove some unused fields and methods in `SpecificParquetRecordReaderBase` and `VectorizedColumnReader`.

### Why are the changes needed?

Some fields and methods in these classes have been unused for years. It's better to clean them up to make the code easier to maintain and read.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests

Closes #32071 from sunchao/cleanup-parquet.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Extend `HiveResult.toHiveString()` to support new interval types `YearMonthIntervalType` and `DayTimeIntervalType`.

### Why are the changes needed?

To fix failures while formatting ANSI intervals as Hive strings. For example:

```sql

spark-sql> select timestamp'now' - date'2021-01-01';

21/04/08 09:42:49 ERROR SparkSQLDriver: Failed in [select timestamp'now' - date'2021-01-01']

scala.MatchError: (PT2337H42M46.649S,DayTimeIntervalType) (of class scala.Tuple2)

at org.apache.spark.sql.execution.HiveResult$.toHiveString(HiveResult.scala:97)

```

### Does this PR introduce _any_ user-facing change?

Yes. After the changes:

```sql

spark-sql> select timestamp'now' - date'2021-01-01';

INTERVAL '97 09:37:52.171' DAY TO SECOND

```

### How was this patch tested?

By running new tests:

```

$ build/sbt -Phive-2.3 -Phive-thriftserver "testOnly *HiveResultSuite"

```

Closes #32087 from MaxGekk/ansi-interval-hiveResultString.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Change UpdateAction and InsertAction of MergeIntoTable to explicitly represent star (`*`).

### Why are the changes needed?

Currently, UpdateAction and InsertAction in MergeIntoTable implicitly represent `update set *` and `insert *` with empty assignments. That means there is no way to differentiate between the representations of "update all columns" and "update no columns". For SQL MERGE queries, this does not matter because the SQL MERGE grammar that generates the MergeIntoTable plan does not allow "update no columns". However, other ways of generating the MergeIntoTable plan may not have that limitation, and may want to allow specifying "update no columns". For example, in the Delta Lake project we provide a type-safe Scala API for Merge, where it is perfectly valid to produce a Merge query with an update clause but no update assignments. Currently, we cannot use MergeIntoTable to represent this plan, which complicates the generation and resolution of merge queries from the Scala API.

Side note: this PR also fixes another bug. If a merge plan contains a star and no other expressions with unresolved attributes (e.g. all non-optional predicates are `literal(true)`), resolution is skipped and the star is not expanded. A test was added for that.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit tests

Closes #32067 from tdas/SPARK-34962-2.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, ordinals cannot be used in CUBE/ROLLUP/GROUPING SETS. This PR makes CUBE/ROLLUP/GROUPING SETS support GROUP BY ordinals.

### Why are the changes needed?

Make CUBE/ROLLUP/GROUPING SETS support GROUP BY ordinal.

PostgreSQL and Teradata support this use case.

### Does this PR introduce _any_ user-facing change?

Users can use ordinals in CUBE/ROLLUP/GROUPING SETS, such as:

```

-- can use ordinal in CUBE

select a, b, count(1) from data group by cube(1, 2);

-- mixed cases: can use ordinal in CUBE

select a, b, count(1) from data group by cube(1, b);

-- can use ordinal with cube

select a, b, count(1) from data group by 1, 2 with cube;

-- can use ordinal in ROLLUP

select a, b, count(1) from data group by rollup(1, 2);

-- mixed cases: can use ordinal in ROLLUP

select a, b, count(1) from data group by rollup(1, b);

-- can use ordinal with rollup

select a, b, count(1) from data group by 1, 2 with rollup;

-- can use ordinal in GROUPING SETS

select a, b, count(1) from data group by grouping sets((1), (2), (1, 2));

-- mixed cases: can use ordinal in GROUPING SETS

select a, b, count(1) from data group by grouping sets((1), (b), (a, 2));

select a, b, count(1) from data group by a, 2 grouping sets((1), (b), (a, 2));

```
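As a rough illustration of what "GROUP BY ordinal" means for the queries above, the sketch below replaces each 1-based position with the select-list entry it points at. It is a toy model, not the analyzer rule from this PR; `OrdinalSketch`, `GroupExpr`, `Col`, `Ordinal` and `resolve` are hypothetical names.

```scala
// Toy resolution of GROUP BY ordinals against a select list (assumed, simplified model).
object OrdinalSketch {
  sealed trait GroupExpr
  final case class Col(name: String) extends GroupExpr
  final case class Ordinal(position: Int) extends GroupExpr

  // Replace each 1-based ordinal with the select-list entry at that position;
  // named columns pass through unchanged.
  def resolve(selectList: Seq[String], grouping: Seq[GroupExpr]): Seq[String] =
    grouping.map {
      case Col(name) => name
      case Ordinal(p) =>
        require(p >= 1 && p <= selectList.length, s"GROUP BY position $p is out of range")
        selectList(p - 1)
    }
}
```

Under this model, `cube(1, b)` over `select a, b, count(1)` resolves to the same grouping columns as `cube(a, b)`.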

### How was this patch tested?

Added UT

Closes #30145 from AngersZhuuuu/SPARK-33233.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds two additional checks in `CheckAnalysis` for correlated scalar subqueries in Aggregate. It blocks the cases that Spark does not currently support, based on the rewrite logic in `RewriteCorrelatedScalarSubquery`:

aff6c0febb/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/subquery.scala (L618-L624)

### Why are the changes needed?

It can be confusing to users when their queries pass the check analysis but cannot be executed. Also, the error messages are confusing:

#### Case 1: correlated scalar subquery in the grouping expressions but not in aggregate expressions

```sql

SELECT SUM(c2) FROM t t1 GROUP BY (SELECT SUM(c2) FROM t t2 WHERE t1.c1 = t2.c1)

```

We get this error:

```

java.lang.AssertionError: assertion failed: Expects 1 field, but got 2; something went wrong in analysis

```

because the correlated scalar subquery is not rewritten properly:

```scala

== Optimized Logical Plan ==

Aggregate [scalar-subquery#5 [(c1#6 = c1#6#93)]], [sum(c2#7) AS sum(c2)#11L]

: +- Aggregate [c1#6], [sum(c2#7) AS sum(c2)#15L, c1#6 AS c1#6#93]

: +- LocalRelation [c1#6, c2#7]

+- LocalRelation [c1#6, c2#7]

```

#### Case 2: correlated scalar subquery in the aggregate expressions but not in the grouping expressions

```sql

SELECT (SELECT SUM(c2) FROM t t2 WHERE t1.c1 = t2.c1), SUM(c2) FROM t t1 GROUP BY c1

```

We get this error:

```

java.lang.IllegalStateException: Couldn't find sum(c2)#69L in [c1#60,sum(c2#61)#64L]

```

because the transformed correlated scalar subquery output is not present in the grouping expression of the Aggregate:

```scala

== Optimized Logical Plan ==

Aggregate [c1#60], [sum(c2)#69L AS scalarsubquery(c1)#70L, sum(c2#61) AS sum(c2)#65L]

+- Project [c1#60, c2#61, sum(c2)#69L]

+- Join LeftOuter, (c1#60 = c1#60#95)

:- LocalRelation [c1#60, c2#61]

+- Aggregate [c1#60], [sum(c2#61) AS sum(c2)#69L, c1#60 AS c1#60#95]

+- LocalRelation [c1#60, c2#61]

```

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

New unit tests

Closes #32054 from allisonwang-db/spark-34946-scalar-subquery-agg.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR upgrades the version of Jetty to 9.4.39.

### Why are the changes needed?

CVE-2021-28165 affects the version of Jetty that Spark uses and it seems to be a little bit serious.

https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2021-28165

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32091 from sarutak/upgrade-jetty-9.4.39.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR fixes an issue where the `ADD JAR` command can't add jar files whose paths contain whitespace, although `ADD FILE` and `ADD ARCHIVE` work with such files.

If we have `/some/path/test file.jar` and execute the following command:

```

ADD JAR "/some/path/test file.jar";

```

The following exception is thrown.

```

21/04/05 10:40:38 ERROR SparkSQLDriver: Failed in [add jar "/some/path/test file.jar"]

java.lang.IllegalArgumentException: Illegal character in path at index 9: /some/path/test file.jar

at java.net.URI.create(URI.java:852)

at org.apache.spark.sql.hive.HiveSessionResourceLoader.addJar(HiveSessionStateBuilder.scala:129)

at org.apache.spark.sql.execution.command.AddJarCommand.run(resources.scala:34)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:79)

```

This is because `HiveSessionStateBuilder` and `SessionStateBuilder` don't check whether the form of the path is URI or plain path and it always regards the path as URI form.

Whitespace should be encoded as `%20`, so `/some/path/test file.jar` is rejected.

We can resolve this part by checking whether the given path is URI form or not.

Unfortunately, if we fix this part, another problem occurs.

When we execute `ADD JAR` command, Hive's `ADD JAR` command is executed in `HiveClientImpl.addJar` and `AddResourceProcessor.run` is transitively invoked.

In `AddResourceProcessor.run`, the command line is simply split by `\s+`, so the path is also split into `/some/path/test` and `file.jar` before being passed to `ss.add_resources`.

f1e8713703/ql/src/java/org/apache/hadoop/hive/ql/processors/AddResourceProcessor.java (L56-L75)

So, the command still fails.

Even if we convert the form of the path to URI like `file:/some/path/test%20file.jar` and execute the following command:

```

ADD JAR "file:/some/path/test%20file.jar";

```

The following exception is thrown.

```

21/04/05 10:40:53 ERROR SessionState: file:/some/path/test%20file.jar does not exist

java.lang.IllegalArgumentException: file:/some/path/test%20file.jar does not exist

at org.apache.hadoop.hive.ql.session.SessionState.validateFiles(SessionState.java:1168)

at org.apache.hadoop.hive.ql.session.SessionState$ResourceType.preHook(SessionState.java:1289)

at org.apache.hadoop.hive.ql.session.SessionState$ResourceType$1.preHook(SessionState.java:1278)

at org.apache.hadoop.hive.ql.session.SessionState.add_resources(SessionState.java:1378)

at org.apache.hadoop.hive.ql.session.SessionState.add_resources(SessionState.java:1336)

at org.apache.hadoop.hive.ql.processors.AddResourceProcessor.run(AddResourceProcessor.java:74)

```

The reason is that `Utilities.realFile`, invoked in `SessionState.validateFiles`, returns `null` because `fs.exists(path)` is `false`.

f1e8713703/ql/src/java/org/apache/hadoop/hive/ql/exec/Utilities.java (L1052-L1064)

`fs.exists` checks the existence of the given path by comparing the string representation of Hadoop's `Path`.

The string representation of `Path` is similar to URI but it's actually different.

`Path` doesn't encode the given path.

For example, the URI form of `/some/path/jar file.jar` is `file:/some/path/jar%20file.jar` but the `Path` form of it is `file:/some/path/jar file.jar`. So `fs.exists` returns false.
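The difference between the plain path and its URI form, and the effect of splitting the command line on whitespace, can be reproduced with the JDK alone. The sketch below uses only `java.net.URI` and `String.split`; it does not involve Hadoop's `Path` or Hive, and `JarPathSketch` is a hypothetical name.

```scala
import java.net.URI
import scala.util.Try

// JDK-only illustration of the path handling discussed above (not Spark or Hive code).
object JarPathSketch {
  val plainPath = "/some/path/test file.jar"

  // URI.create rejects the raw path because a space is illegal in a URI.
  val parsedAsUri: Try[URI] = Try(URI.create(plainPath))

  // The multi-argument constructor percent-encodes illegal characters instead.
  val encoded: URI = new URI("file", null, plainPath, null)

  // Splitting a command line on whitespace cuts the path into two tokens.
  val tokens: Array[String] = s"ADD JAR $plainPath".split("\\s+")
}
```

Here `encoded.toString` is the encoded URI form, while `encoded.getPath` gives back the decoded path with the space, which is the form a string comparison against the unencoded path would need.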

So the solution I came up with is removing Hive's `ADD JAR` from `HiveClientImpl.addJar`.

I think Hive's `ADD JAR` was used to add jar files to the class loader for metadata and isolate the class loader from the one for execution.

https://github.com/apache/spark/pull/6758/files#diff-cdb07de713c84779a5308f65be47964af865e15f00eb9897ccf8a74908d581bbR94-R103

But now that SPARK-10810 and SPARK-10902 (#8909) are resolved, the class loaders for metadata and execution seem to be isolated in a different way.

https://github.com/apache/spark/pull/8909/files#diff-8ef7cabf145d3fe7081da799fa415189d9708892ed76d4d13dd20fa27021d149R635-R641

In the current implementation, such class loaders seem to be isolated by `SharedState.jarClassLoader` and `IsolatedClientLoader.classLoader`.

https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/internal/SessionState.scala#L173-L188

https://github.com/apache/spark/blob/master/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala#L956-L967

So I wonder whether we can remove Hive's `ADD JAR` from `HiveClientImpl.addJar`.

### Why are the changes needed?

This is a bug.

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

Closes #32052 from sarutak/add-jar-whitespace.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to add documentation on how to read and write TEXT files through various APIs such as Scala, Python and Java in Spark to the [Data Source documents](https://spark.apache.org/docs/latest/sql-data-sources.html#data-sources).

### Why are the changes needed?

Documentation on how Spark handles TEXT files is missing. It should be added to the document for user convenience.

### Does this PR introduce _any_ user-facing change?

Yes, this PR adds a new page to Data Sources documents.

### How was this patch tested?

Manually built the documents and checked the page locally.

Closes #32053 from itholic/SPARK-34491-TEXT.

Authored-by: itholic <haejoon.lee@databricks.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

1. Added new method `toDayTimeIntervalString()` to `IntervalUtils` which converts a day-time interval as a number of microseconds to a string in the form **"INTERVAL '[sign]days hours:minutes:secondsWithFraction' DAY TO SECOND"**.

2. Extended the `Cast` expression to support casting of `DayTimeIntervalType` to `StringType`.
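A minimal sketch of the conversion described in item 1, assuming zero-padded two-digit hours, minutes and seconds and a fraction with trailing zeros trimmed (as in the `INTERVAL '97 09:37:52.171' DAY TO SECOND` output shown for the Hive result formatting). This is a hypothetical re-implementation for illustration, not the code added to `IntervalUtils`, and the exact formatting of edge cases is an assumption.

```scala
// Hypothetical sketch of a microseconds-to-string conversion; not Spark's IntervalUtils.
object IntervalSketch {
  private val MicrosPerSecond = 1000000L
  private val MicrosPerMinute = 60L * MicrosPerSecond
  private val MicrosPerHour = 60L * MicrosPerMinute
  private val MicrosPerDay = 24L * MicrosPerHour

  // Note: Long.MinValue is not handled, because math.abs overflows for it.
  def toDayTimeIntervalString(micros: Long): String = {
    val sign = if (micros < 0) "-" else ""
    var rest = math.abs(micros)
    val days = rest / MicrosPerDay
    rest %= MicrosPerDay
    val hours = rest / MicrosPerHour
    rest %= MicrosPerHour
    val minutes = rest / MicrosPerMinute
    rest %= MicrosPerMinute
    val seconds = rest / MicrosPerSecond
    val fracMicros = rest % MicrosPerSecond
    // Render the fraction with six digits, then drop trailing zeros.
    val fraction =
      if (fracMicros == 0) ""
      else "." + f"$fracMicros%06d".reverse.dropWhile(_ == '0').reverse
    f"INTERVAL '$sign$days $hours%02d:$minutes%02d:$seconds%02d$fraction' DAY TO SECOND"
  }
}
```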

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires support for such casting.

### Does this PR introduce _any_ user-facing change?

It should not, because the new day-time interval type has not been released yet.

### How was this patch tested?

Added new tests for casting:

```

$ build/sbt "testOnly *CastSuite*"

```

Closes #32070 from MaxGekk/cast-dt-interval-to-string.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The name of the current trait `GroupingSet` is ambiguous, since `grouping set` at the parser level means a single set of grouping expressions.

Rename it to `BaseGroupingSets`, since cube/rollup is syntax sugar for grouping sets.

### Why are the changes needed?

Refactor class name

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #32073 from AngersZhuuuu/SPARK-34976.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Now that we merged the Koalas main code into the PySpark code base (#32036), we should enable doctests on Spark's infrastructure.

### Why are the changes needed?

Currently the pandas-on-Spark modules are not tested at all.

We should enable doctests first, and we will port other unit tests separately later.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Enabled all the doctests.

Closes #32069 from ueshin/issues/SPARK-34972/pyspark-pandas_doctests.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

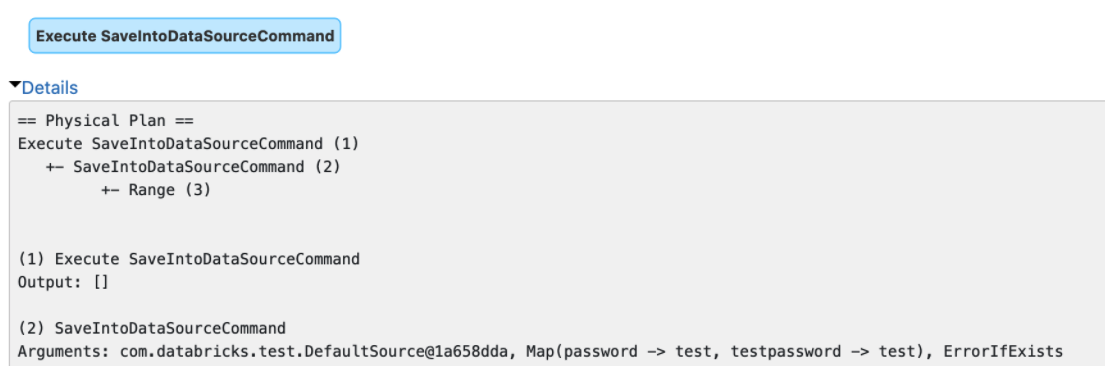

The `explain()` method prints the arguments of tree nodes in logical/physical plans. The arguments could contain a map-type option that contains sensitive data.

We should redact map-type options in the output of `explain()`. Otherwise, we will see sensitive data in the explain output or the Spark UI.
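One possible redaction policy for a map-type option is sketched below: values are replaced when their key matches a sensitive-looking pattern. This is an assumption made for illustration and not necessarily the rule this PR applies; `RedactSketch`, `SensitiveKey` and the replacement text are hypothetical.

```scala
import scala.util.matching.Regex

// Illustrative key-based redaction of a string map (assumed policy, not Spark's code).
object RedactSketch {
  // Placeholder text shown instead of a sensitive value (assumption).
  val Replacement = "*********(redacted)"

  // Hypothetical default pattern for keys that look sensitive.
  val SensitiveKey: Regex = "(?i)secret|password|token".r

  // Keep every key, but hide the value when the key matches the pattern.
  def redact(options: Map[String, String], pattern: Regex = SensitiveKey): Map[String, String] =
    options.map { case (k, v) =>
      if (pattern.findFirstIn(k).isDefined) k -> Replacement else k -> v
    }
}
```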

### Why are the changes needed?

Data security.

### Does this PR introduce _any_ user-facing change?

Yes, redact the map-type options in the output of `explain()`

### How was this patch tested?

Unit tests

Closes #32066 from gengliangwang/redactOptions.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

## What changes were proposed in this pull request?

This adds a new API for catalog plugins that exposes functions to Spark. The API can list and load functions. This does not include create, delete, or alter operations.

- [Design Document](https://docs.google.com/document/d/1PLBieHIlxZjmoUB0ERF-VozCRJ0xw2j3qKvUNWpWA2U/edit?usp=sharing)

There are 3 types of functions defined:

* A `ScalarFunction` that produces a value for every call

* An `AggregateFunction` that produces a value after updates for a group of rows

Functions are loaded from the catalog by name as `UnboundFunction`. Once input arguments are determined, `bind` is called on the unbound function to get a `BoundFunction` implementation that is one of the 3 types above. Binding can fail if the function doesn't support the input type. `BoundFunction` returns the result type produced by the function.
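The load-then-bind flow can be sketched with simplified stand-ins for these interfaces. The traits below only borrow the names used in this description; their signatures, the toy `DataType` hierarchy and the `IntAdd` function are assumptions made for illustration and do not match the real API.

```scala
// Simplified stand-ins for the function catalog interfaces (signatures are assumptions).
object FunctionBindingSketch {
  sealed trait DataType
  case object IntType extends DataType
  case object StringType extends DataType

  trait BoundFunction { def resultType: DataType }

  trait ScalarFunction extends BoundFunction {
    // Produces one value per call.
    def produceResult(args: Seq[Any]): Any
  }

  trait UnboundFunction {
    def name: String
    // Binding may fail when the input types are not supported.
    def bind(inputTypes: Seq[DataType]): BoundFunction
  }

  // Hypothetical function that only supports two integer arguments.
  object IntAdd extends UnboundFunction {
    val name = "int_add"
    def bind(inputTypes: Seq[DataType]): BoundFunction = inputTypes match {
      case Seq(IntType, IntType) =>
        new ScalarFunction {
          val resultType: DataType = IntType
          def produceResult(args: Seq[Any]): Any = args.map(_.asInstanceOf[Int]).sum
        }
      case other =>
        throw new UnsupportedOperationException(s"$name does not support input types $other")
    }
  }
}
```

In this sketch, binding `IntAdd` to two integer inputs yields a scalar function whose result type is known before any row is processed, while binding it to a string input fails.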

## How was this patch tested?

This includes a test that demonstrates the new API.

Closes #24559 from rdblue/SPARK-27658-add-function-catalog-api.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a followup for https://github.com/apache/spark/pull/31932.

In this PR we:

- Introduce the `QuaternaryLike` trait for node types with 4 children.

- Specialize more node types

- Fix a number of style errors that were introduced in the original PR.

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

This is a refactoring, passes existing tests.

Closes #32065 from dbaliafroozeh/FollowupSPARK-34906.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR extends the current function registry and catalog to support table-valued functions by adding a table function registry. It also refactors `range` to be a built-in function in the table function registry.

### Why are the changes needed?

Currently, Spark resolves table-valued functions very differently from the other functions. This change is to make the behavior for table and non-table functions consistent. It also allows Spark to display information about built-in table-valued functions:

Before:

```scala

scala> sql("describe function range").show(false)

+--------------------------+

|function_desc |

+--------------------------+

|Function: range not found.|

+--------------------------+

```

After:

```scala

Function: range

Class: org.apache.spark.sql.catalyst.plans.logical.Range

Usage:

range(start: Long, end: Long, step: Long, numPartitions: Int)

range(start: Long, end: Long, step: Long)

range(start: Long, end: Long)

range(end: Long)

// Extended

Function: range

Class: org.apache.spark.sql.catalyst.plans.logical.Range

Usage:

range(start: Long, end: Long, step: Long, numPartitions: Int)

range(start: Long, end: Long, step: Long)

range(start: Long, end: Long)

range(end: Long)

Extended Usage:

Examples:

> SELECT * FROM range(1);

+---+

| id|

+---+

| 0|

+---+

> SELECT * FROM range(0, 2);

+---+

|id |

+---+

|0 |

|1 |

+---+

> SELECT range(0, 4, 2);

+---+

|id |

+---+

|0 |

|2 |

+---+

Since: 2.0.0

```

### Does this PR introduce _any_ user-facing change?

Yes. Users will no longer be able to create a function named `range` in the default database:

Before:

```scala

scala> sql("create function range as 'range'")

res3: org.apache.spark.sql.DataFrame = []

```

After:

```

scala> sql("create function range as 'range'")

org.apache.spark.sql.catalyst.analysis.FunctionAlreadyExistsException: Function 'default.range' already exists in database 'default'

```

### How was this patch tested?

Unit test

Closes #31791 from allisonwang-db/spark-34678-table-func-registry.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Changed the cost comparison function of the CBO to use the ratios of row counts and sizes in bytes.

### Why are the changes needed?

In #30965 we changed the CBO cost comparison function so that it would be "symmetric": `A.betterThan(B)` now implies that `!B.betterThan(A)`.

That change caused performance regressions in some queries, for example TPCDS q19.

The original cost comparison function used the ratios `relativeRows = A.rowCount / B.rowCount` and `relativeSize = A.size / B.size`. The changed function compared "absolute" cost values `costA = w*A.rowCount + (1-w)*A.size` and `costB = w*B.rowCount + (1-w)*B.size`.

Given the input from wzhfy we decided to go back to the relative values, because otherwise one (size) may overwhelm the other (rowCount). But this time we avoid adding up the ratios.

Originally `A.betterThan(B) => w*relativeRows + (1-w)*relativeSize < 1` was used. Besides being "non-symmetric", this can also let one term overwhelm the other.

For `w=0.5`, if the size (bytes) of `A` is at least 2x larger than that of `B`, then no matter how many times more rows the `B` plan has, `B` will always be considered better: `0.5*2 + 0.5*0.00000000000001 > 1`.

When working with ratios, it is better to multiply them.

The proposed cost comparison function is: `A.betterThan(B) => relativeRows^w * relativeSize^(1-w) < 1`.
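
The difference between the two ratio-based rules can be illustrated with a small sketch. It is not Spark's implementation: the function names and the flat `(rows, size)` arguments are assumptions made for illustration, and `w` is the weight from the formulas above.

```python
def better_than_additive(a_rows, a_size, b_rows, b_size, w=0.5):
    """Original rule: the weighted sum of the ratios must be below 1."""
    relative_rows = a_rows / b_rows
    relative_size = a_size / b_size
    return w * relative_rows + (1 - w) * relative_size < 1


def better_than_multiplicative(a_rows, a_size, b_rows, b_size, w=0.5):
    """Proposed rule: the weighted product of the ratios must be below 1."""
    relative_rows = a_rows / b_rows
    relative_size = a_size / b_size
    return relative_rows ** w * relative_size ** (1 - w) < 1


# Plan A is twice as large in bytes but has 10^14 times fewer rows than plan B.
a = (1, 2)          # (row count, size in bytes)
b = (10 ** 14, 1)

additive = better_than_additive(*a, *b)              # the size term alone reaches 1
multiplicative = better_than_multiplicative(*a, *b)  # the row ratio can compensate
```

With `w=0.5`, the additive rule rejects `A` as soon as its size ratio reaches 2, whereas the product lets the much smaller row count offset the larger size.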

### Does this PR introduce _any_ user-facing change?

Comparison of the changed TPCDS v1.4 query execution times at sf=10:

| Query | absolute | multiplicative | change vs. absolute | additive | change vs. absolute |
| -- | -- | -- | -- | -- | -- |
| q12 | 145 | 137 | -5.52% | 141 | -2.76% |
| q13 | 264 | 271 | 2.65% | 271 | 2.65% |
| q17 | 4521 | 4243 | -6.15% | 4348 | -3.83% |
| q18 | 758 | 466 | -38.52% | 480 | -36.68% |
| q19 | 38503 | 2167 | -94.37% | 2176 | -94.35% |
| q20 | 119 | 120 | 0.84% | 126 | 5.88% |
| q24a | 16429 | 16838 | 2.49% | 17103 | 4.10% |
| q24b | 16592 | 16999 | 2.45% | 17268 | 4.07% |
| q25 | 3558 | 3556 | -0.06% | 3675 | 3.29% |
| q33 | 362 | 361 | -0.28% | 380 | 4.97% |
| q52 | 1020 | 1032 | 1.18% | 1052 | 3.14% |
| q55 | 927 | 938 | 1.19% | 961 | 3.67% |
| q72 | 24169 | 13377 | -44.65% | 24306 | 0.57% |
| q81 | 1285 | 1185 | -7.78% | 1168 | -9.11% |
| q91 | 324 | 336 | 3.70% | 337 | 4.01% |
| q98 | 126 | 129 | 2.38% | 131 | 3.97% |

All times are in ms, the change is compared to the situation in the master branch (absolute).

The proposed cost function (multiplicative) significantly improves the performance on q18, q19 and q72. The original cost function (additive) has similar improvements at q18 and q19. All other changes are within the error bars and I would ignore them; perhaps q81 has also improved.

### How was this patch tested?

PlanStabilitySuite

Closes #32014 from tanelk/SPARK-34922_cbo_better_cost_function.

Lead-authored-by: Tanel Kiis <tanel.kiis@gmail.com>

Co-authored-by: tanel.kiis@gmail.com <tanel.kiis@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This patch adds the `-fr` argument to `xargs rm`.

### Why are the changes needed?

The command fails in the basic case. If the `find` command returns no results, `rm` is invoked with an empty argument list, fails with `rm: missing operand`, and aborts the script, so the coverage report is not generated.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

python/run-tests-with-coverage --testnames pyspark.sql.tests.test_arrow --python-executables=python

The coverage report is generated without interruption.

Closes #32064 from Yikun/patch-1.

Authored-by: Yikun Jiang <yikunkero@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR removes `.sbtopts` (added in https://github.com/apache/spark/pull/29286), which redundantly sets the default memory. The default memory options are already set in:

3b634f66c3/build/sbt-launch-lib.bash (L119-L124)

### Why are the changes needed?

This file disables the memory option from the `build/sbt` script:

```bash

./build/sbt -mem 6144

```

```

.../jdk-11.0.3.jdk/Contents/Home as default JAVA_HOME.

Note, this will be overridden by -java-home if it is set.

Error occurred during initialization of VM

Initial heap size set to a larger value than the maximum heap size

```

because the memory options from `.sbtopts` are appended last:

```bash

/.../bin/java -Xms6144m -Xmx6144m -XX:ReservedCodeCacheSize=256m -Xmx4G -Xss4m -jar build/sbt-launch-1.5.0.jar

```

and Java respects the rightmost memory configurations.
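
The failure can be reproduced on paper by resolving the flags from left to right. The sketch below is a simplified model, not the JVM's option parser: it assumes that only `-Xms` and `-Xmx` matter, that sizes carry a `k`, `m` or `g` suffix, and that the last occurrence of each flag wins, as described above. The helper names are hypothetical.

```python
import re

_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(text):
    """Convert a size such as '6144m' or '4G' to bytes."""
    match = re.fullmatch(r"(\d+)([kKmMgG]?)", text)
    if match is None:
        raise ValueError(f"unsupported size: {text!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit.lower()]


def effective_heap(args):
    """Return the heap settings that remain after the rightmost flag wins."""
    heap = {}
    for arg in args:
        for flag in ("-Xms", "-Xmx"):
            if arg.startswith(flag):
                heap[flag] = parse_size(arg[len(flag):])  # later value overrides
    return heap


# Flags taken from the command line shown above.
args = "-Xms6144m -Xmx6144m -XX:ReservedCodeCacheSize=256m -Xmx4G -Xss4m".split()
heap = effective_heap(args)
initial_exceeds_max = heap["-Xms"] > heap["-Xmx"]
```

Here the trailing `-Xmx4G` replaces `-Xmx6144m`, so the initial heap (6144 MiB) ends up larger than the maximum heap (4096 MiB), which matches the reported error.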

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually ran SBT. It will be tested in the CIs in this PR.

Closes #32062 from HyukjinKwon/SPARK-34965.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1. Added a new method `toYearMonthIntervalString()` to `IntervalUtils`, which converts a year-month interval given as a number of months to a string of the form **"INTERVAL '[sign]yearField-monthField' YEAR TO MONTH"**.

2. Extended the `Cast` expression to support casting of `YearMonthIntervalType` to `StringType`.
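
The conversion described in item 1 can be sketched as follows. This is an illustrative Python rendering under the assumption that the sign is emitted once in front of the year field and that both fields are computed from the absolute number of months; it is not the Scala code added by this PR.

```python
MONTHS_PER_YEAR = 12


def to_year_month_interval_string(months: int) -> str:
    """Format a month count as INTERVAL '[sign]years-months' YEAR TO MONTH."""
    sign = "-" if months < 0 else ""
    years, rest = divmod(abs(months), MONTHS_PER_YEAR)
    return f"INTERVAL '{sign}{years}-{rest}' YEAR TO MONTH"


print(to_year_month_interval_string(14))   # INTERVAL '1-2' YEAR TO MONTH
print(to_year_month_interval_string(-14))  # INTERVAL '-1-2' YEAR TO MONTH
```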

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires support for such casting.

### Does this PR introduce _any_ user-facing change?

It should not, because the new year-month interval type has not been released yet.

### How was this patch tested?

Added new tests for casting:

```

$ build/sbt "testOnly *CastSuite*"

```

Closes #32056 from MaxGekk/cast-ym-interval-to-string.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Changes the metadata propagation framework.

Previously, most `LogicalPlan`s propagated their `children`'s `metadataOutput`. This did not make sense in cases where the `LogicalPlan` did not even propagate its `children`'s `output`.

I set the metadata output for plans that do not propagate their `children`'s `output` to be `Nil`. Notably, `Project` and `View` no longer have metadata output.
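
The propagation rule can be modelled with a toy plan tree. The classes below are hypothetical stand-ins written for illustration, not Catalyst's `LogicalPlan` nodes: a node that passes its child's output through keeps the child's metadata columns reachable, while a node that defines its own output exposes none.

```python
class Relation:
    """Leaf node with regular columns and hidden metadata columns."""

    def __init__(self, output, metadata_output):
        self.output = list(output)
        self.metadata_output = list(metadata_output)


class Filter:
    """Passes the child's output through, so metadata stays reachable."""

    def __init__(self, child):
        self.child = child

    @property
    def output(self):
        return self.child.output

    @property
    def metadata_output(self):
        return self.child.metadata_output


class Project:
    """Emits only the selected columns, so it exposes no metadata output."""

    def __init__(self, columns, child):
        self.columns = list(columns)
        self.child = child

    @property
    def output(self):
        return self.columns

    @property
    def metadata_output(self):
        return []  # the equivalent of Nil


def can_resolve(plan, name):
    """A column resolves if it is in the output or the metadata output."""
    return name in plan.output or name in plan.metadata_output


tb = Relation(output=["a"], metadata_output=["m"])
through_filter = can_resolve(Filter(tb), "m")
through_project = can_resolve(Project(["a"], tb), "m")
```

In this model `m` is still visible above the `Filter` but not above the `Project`, which mirrors why selecting `m` from a subquery that only projects `a` no longer resolves.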

### Why are the changes needed?

Previously, `SELECT m from (SELECT a from tb)` would output `m` if it were metadata. This did not make sense.

### Does this PR introduce _any_ user-facing change?

Yes. Now, `SELECT m from (SELECT a from tb)` will encounter an `AnalysisException`.

### How was this patch tested?

Added unit tests. I did not cover all cases, as they are fairly extensive. However, the new tests cover major cases (and an existing test already covers Join).

Closes #32017 from karenfeng/spark-34923.

Authored-by: Karen Feng <karen.feng@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

As a first step of [SPARK-34849](https://issues.apache.org/jira/browse/SPARK-34849), this PR proposes porting the Koalas main code into PySpark.

This PR contains minimal changes to the existing Koalas code as follows:

1. `databricks.koalas` -> `pyspark.pandas`

2. `from databricks import koalas as ks` -> `from pyspark import pandas as pp`

3. `ks.xxx -> pp.xxx`

Other than them:

1. Added a line to `python/mypy.ini` in order to ignore the mypy test. See related issue at [SPARK-34941](https://issues.apache.org/jira/browse/SPARK-34941).

2. Added a comment to several lines in several files to ignore the flake8 F401. See related issue at [SPARK-34943](https://issues.apache.org/jira/browse/SPARK-34943).

When this PR is merged, all the features that were previously used in [Koalas](https://github.com/databricks/koalas) will be available in PySpark as well.

Users can access the pandas API in PySpark as below:

```python

>>> from pyspark import pandas as pp

>>> ppdf = pp.DataFrame({"A": [1, 2, 3], "B": [15, 20, 25]})

>>> ppdf

A B

0 1 15

1 2 20

2 3 25

```

The existing "options and settings" in Koalas are also available in the same way:

```python

>>> from pyspark.pandas.config import set_option, reset_option, get_option

>>> ppser1 = pp.Series([1, 2, 3])

>>> ppser2 = pp.Series([3, 4, 5])

>>> ppser1 + ppser2

Traceback (most recent call last):

...

ValueError: Cannot combine the series or dataframe because it comes from a different dataframe. In order to allow this operation, enable 'compute.ops_on_diff_frames' option.

>>> set_option("compute.ops_on_diff_frames", True)

>>> ppser1 + ppser2

0 4

1 6

2 8

dtype: int64

```

Please also refer to the [API Reference](https://koalas.readthedocs.io/en/latest/reference/index.html) and [Options and Settings](https://koalas.readthedocs.io/en/latest/user_guide/options.html) for more detail.

**NOTE** that this PR intentionally ports the main code of Koalas first, almost as is and with minimal changes, because:

- The Koalas project is fairly large. Making other changes for PySpark at the same time would make it difficult to review the individual changes.

- The Koalas developers include multiple Spark committers who will review. Porting this way lets the committers review and drive the development more easily and effectively.

- Koalas tests and documentation require major changes to fit well with PySpark, whereas the main code does not.

- We recently froze the Koalas codebase and plan to work together on the initial porting. Porting the main code first as is unblocks the Koalas developers to work on other items in parallel.

I will make sure to:

- Rename Koalas to PySpark pandas APIs and/or pandas-on-Spark accordingly in the documentation, and in the docstrings and comments in the main code.

- Triage APIs and remove those that don't make sense when Koalas is in PySpark.

The documentation changes will be tracked in [SPARK-34885](https://issues.apache.org/jira/browse/SPARK-34885), the test code changes will be tracked in [SPARK-34886](https://issues.apache.org/jira/browse/SPARK-34886).

### Why are the changes needed?

Please refer to:

- [[DISCUSS] Support pandas API layer on PySpark](http://apache-spark-developers-list.1001551.n3.nabble.com/DISCUSS-Support-pandas-API-layer-on-PySpark-td30945.html)

- [[VOTE] SPIP: Support pandas API layer on PySpark](http://apache-spark-developers-list.1001551.n3.nabble.com/VOTE-SPIP-Support-pandas-API-layer-on-PySpark-td30996.html)

### Does this PR introduce _any_ user-facing change?

Yes, now users can use the pandas APIs on Spark

### How was this patch tested?

Manually tested for exposed major APIs and options as described above.

### Koalas contributors

Koalas would not have been possible without the following contributors:

ueshin

HyukjinKwon

rxin

xinrong-databricks

RainFung

charlesdong1991

harupy

floscha

beobest2

thunterdb

garawalid

LucasG0

shril

deepyaman

gioa

fwani

90jam

thoo

AbdealiJK

abishekganesh72

gliptak

DumbMachine

dvgodoy

stbof

nitlev

hjoo

gatorsmile

tomspur

icexelloss

awdavidson

guyao

akhilputhiry

scook12

patryk-oleniuk

tracek

dennyglee

athena15

gstaubli

WeichenXu123

hsubbaraj

lfdversluis

ktksq

shengjh

margaret-databricks

LSturtew

sllynn

manuzhang

jijosg

sadikovi

Closes #32036 from itholic/SPARK-34890.

Authored-by: itholic <haejoon.lee@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`CREATE TABLE LIKE` should respect the reserved table properties and fail if any are specified. The previous behavior can be restored with `spark.sql.legacy.notReserveProperties`.

### Why are the changes needed?

Make DDLs consistently treat reserved properties

### Does this PR introduce _any_ user-facing change?

YES, this is a breaking change as using `create table like` w/ reserved properties will fail.

### How was this patch tested?

new test

Closes #32025 from yaooqinn/SPARK-34935.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

`GROUP BY ... GROUPING SETS (...)` is a weird SQL syntax we copied from Hive. It's not in the SQL standard or any other mainstream database. This syntax requires users to repeat the expressions inside `GROUPING SETS (...)` after `GROUP BY`, and has weird null semantics if `GROUP BY` contains more expressions than `GROUPING SETS (...)`.

This PR deprecates this syntax:

1. Do not promote it in the documentation and only mention it as a Hive-compatible syntax.

2. Simplify the code to only keep it for Hive compatibility.

### Why are the changes needed?

Deprecate a weird grammar.

### Does this PR introduce _any_ user-facing change?

No breaking change, but it removes a check to simplify the code: `GROUP BY a GROUPING SETS(a, b)` used to fail and forced users to also put `b` after `GROUP BY`. Now this works just like `GROUP BY GROUPING SETS(a, b)`.

### How was this patch tested?

existing tests

Closes #32022 from cloud-fan/followup.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR prevents reregistering the BlockManager when an Executor is shutting down. This is achieved by checking `executorShutdown` before calling `env.blockManager.reregister()`.
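
The guard can be sketched in a few lines. The classes and the `on_heartbeat_response` method below are hypothetical and only model the check described above; they are not Spark's `Executor` code.

```python
import threading


class FakeBlockManager:
    """Stand-in that only counts reregistration attempts."""

    def __init__(self):
        self.reregister_calls = 0

    def reregister(self):
        self.reregister_calls += 1


class Executor:
    def __init__(self, block_manager):
        self.block_manager = block_manager
        self.executor_shutdown = threading.Event()

    def stop(self):
        # Mark the executor as shutting down before tearing anything down.
        self.executor_shutdown.set()

    def on_heartbeat_response(self, reregister_block_manager):
        # Skip reregistration once shutdown has begun, so the driver does
        # not see a stopped executor come back as alive.
        if reregister_block_manager and not self.executor_shutdown.is_set():
            self.block_manager.reregister()


block_manager = FakeBlockManager()
executor = Executor(block_manager)
executor.on_heartbeat_response(True)   # running: reregisters
executor.stop()
executor.on_heartbeat_response(True)   # shutting down: ignored
```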

### Why are the changes needed?

This change is required because Spark reports executors as active even after they are removed.

I was testing Dynamic Allocation on K8s with about 300 executors. While doing so, when the executors were torn down due to `spark.dynamicAllocation.executorIdleTimeout`, I noticed all the executor pods being removed from K8s, however, under the "Executors" tab in SparkUI, I could see some executors listed as alive. [spark.sparkContext.statusTracker.getExecutorInfos.length](65da9287bc/core/src/main/scala/org/apache/spark/SparkStatusTracker.scala (L105)) also returned a value greater than 1.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a new test.

## Logs

The following are the logs of the executor (Id: 303) that re-registers `BlockManager`:

```

21/04/02 21:33:28 INFO CoarseGrainedExecutorBackend: Got assigned task 1076

21/04/02 21:33:28 INFO Executor: Running task 4.0 in stage 3.0 (TID 1076)

21/04/02 21:33:28 INFO MapOutputTrackerWorker: Updating epoch to 302 and clearing cache

21/04/02 21:33:28 INFO TorrentBroadcast: Started reading broadcast variable 3

21/04/02 21:33:28 INFO TransportClientFactory: Successfully created connection to /100.100.195.227:33703 after 76 ms (62 ms spent in bootstraps)

21/04/02 21:33:28 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 2.4 KB, free 168.0 MB)

21/04/02 21:33:28 INFO TorrentBroadcast: Reading broadcast variable 3 took 168 ms

21/04/02 21:33:28 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 3.9 KB, free 168.0 MB)

21/04/02 21:33:29 INFO MapOutputTrackerWorker: Don't have map outputs for shuffle 1, fetching them

21/04/02 21:33:29 INFO MapOutputTrackerWorker: Doing the fetch; tracker endpoint = NettyRpcEndpointRef(spark://MapOutputTrackerda-lite-test-4-7a57e478947d206d-driver-svc.dex-app-n5ttnbmg.svc:7078)

21/04/02 21:33:29 INFO MapOutputTrackerWorker: Got the output locations

21/04/02 21:33:29 INFO ShuffleBlockFetcherIterator: Getting 2 non-empty blocks including 1 local blocks and 1 remote blocks

21/04/02 21:33:30 INFO TransportClientFactory: Successfully created connection to /100.100.80.103:40971 after 660 ms (528 ms spent in bootstraps)

21/04/02 21:33:30 INFO ShuffleBlockFetcherIterator: Started 1 remote fetches in 1042 ms

21/04/02 21:33:31 INFO Executor: Finished task 4.0 in stage 3.0 (TID 1076). 1276 bytes result sent to driver

.

.

.

21/04/02 21:34:16 INFO CoarseGrainedExecutorBackend: Driver commanded a shutdown

21/04/02 21:34:16 INFO Executor: Told to re-register on heartbeat

21/04/02 21:34:16 INFO BlockManager: BlockManager BlockManagerId(303, 100.100.122.34, 41265, None) re-registering with master

21/04/02 21:34:16 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(303, 100.100.122.34, 41265, None)

21/04/02 21:34:16 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(303, 100.100.122.34, 41265, None)

21/04/02 21:34:16 INFO BlockManager: Reporting 0 blocks to the master.

21/04/02 21:34:16 INFO MemoryStore: MemoryStore cleared

21/04/02 21:34:16 INFO BlockManager: BlockManager stopped

21/04/02 21:34:16 INFO FileDataSink: Closing sink with output file = /tmp/safari-events/.des_analysis/safari-events/hdp_spark_monitoring_random-container-037caf27-6c77-433f-820f-03cd9c7d9b6e-spark-8a492407d60b401bbf4309a14ea02ca2_events.tsv

21/04/02 21:34:16 INFO HonestProfilerBasedThreadSnapshotProvider: Stopping agent

21/04/02 21:34:16 INFO HonestProfilerHandler: Stopping honest profiler agent

21/04/02 21:34:17 INFO ShutdownHookManager: Shutdown hook called

21/04/02 21:34:17 INFO ShutdownHookManager: Deleting directory /var/data/spark-d886588c-2a7e-491d-bbcb-4f58b3e31001/spark-4aa337a0-60c0-45da-9562-8c50eaff3cea

```

Closes #32043 from sumeetgajjar/SPARK-34949.

Authored-by: Sumeet Gajjar <sumeetgajjar93@gmail.com>

Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>

### What changes were proposed in this pull request?

This PR aims to upgrade SBT to 1.5.0.

### Why are the changes needed?

SBT 1.5.0 was released yesterday with built-in Scala 3 support.

- https://github.com/sbt/sbt/releases/tag/v1.5.0

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the SBT CIs (Build/Test/Docs/Plugins).

Closes #32055 from dongjoon-hyun/SPARK-34959.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Synchronise access to the `registerSource` and `removeSource` methods, since the underlying `ArrayBuffer` is not thread safe.
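
The idea is to take one lock around every access to the shared collection. The sketch below shows that pattern in Python with a hypothetical `SourceRegistry` class; it illustrates the locking approach only and is not the Scala change itself (Python lists also have different concurrency behaviour from Scala's `ArrayBuffer`).

```python
import threading


class SourceRegistry:
    """Keeps a list of sources and guards every access with one lock."""

    def __init__(self):
        self._sources = []
        self._lock = threading.Lock()

    def register_source(self, source):
        with self._lock:
            self._sources.append(source)

    def remove_source(self, source):
        with self._lock:
            if source in self._sources:
                self._sources.remove(source)

    def sources(self):
        with self._lock:
            return list(self._sources)  # return a copy, not the shared list


def register_many(registry, prefix, count):
    for i in range(count):
        registry.register_source(f"{prefix}-{i}")


registry = SourceRegistry()
threads = [
    threading.Thread(target=register_many, args=(registry, f"worker{n}", 100))
    for n in range(8)
]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
```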

### Why are the changes needed?

Unexpected behaviours are possible due to the lack of thread safety. For example, we got an `ArrayIndexOutOfBoundsException` while adding a new source.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Closes #32024 from BOOTMGR/SPARK-34934.

Lead-authored-by: Harsh Panchal <BOOTMGR@users.noreply.github.com>

Co-authored-by: BOOTMGR <panchal.harsh18@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Fix [SPARK-34492], add Scala examples to read/write CSV files.

### Why are the changes needed?

Fix [SPARK-34492].

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Build the document with "SKIP_API=1 bundle exec jekyll build", and everything looks fine.

Closes #31827 from twoentartian/master.

Authored-by: twoentartian <twoentartian@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to add zstandard codec to the `AvroOptions.compression` comment.

### Why are the changes needed?

SPARK-34479 added zstandard codec.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

N/A

Closes #32050 from williamhyun/avro.

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

This PR proposes to set the system encoding as UTF-8. For some reason, it looks like GitHub Actions machines changed theirs to ASCII by default. This leads to default encoding/decoding to use ASCII in Python, e.g. `"a".encode()`, and it looks like Sphinx depends on that.

### Why are the changes needed?

To recover the GitHub Actions build.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Tested in https://github.com/apache/spark/pull/32046

Closes #32047 from HyukjinKwon/SPARK-34951.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR replaces non-ASCII characters with ASCII characters where possible in the PySpark documentation.

### Why are the changes needed?

To avoid unnecessarily using non-ASCII characters, which could lead to issues such as https://github.com/apache/spark/pull/32047 or https://github.com/apache/spark/pull/22782

### Does this PR introduce _any_ user-facing change?

Virtually no.

### How was this patch tested?

Found via (Mac OS):

```bash

# In Spark root directory

cd python

pcregrep --color='auto' -n "[\x80-\xFF]" `git ls-files .`

```
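
For environments without `pcregrep`, a rough Python equivalent of the search is sketched below. The function name is hypothetical, and it checks decoded code points above `0x7F` rather than raw bytes, which flags the same lines for UTF-8 encoded text.

```python
def find_non_ascii(lines):
    """Return (line number, line) pairs for lines with non-ASCII characters."""
    hits = []
    for number, line in enumerate(lines, start=1):
        if any(ord(ch) > 0x7F for ch in line):
            hits.append((number, line))
    return hits


sample = ["plain ascii", "caf\u00e9", "a dash \u2013 here"]
hits = find_non_ascii(sample)
```

Applied to a file, the input would be the lines read with `encoding="utf-8"`; the sample list here only demonstrates the result shape.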

Closes #32048 from HyukjinKwon/minor-fix.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This patch catches the `IOException` that can be thrown when deserializing map statuses fails (e.g., because the broadcasted value was destroyed). Once the `IOException` is caught, a `MetadataFetchFailedException` is thrown to let Spark handle it.

### Why are the changes needed?

One customer encountered an application error. According to the log, it was caused by accessing a non-existent broadcasted value. The broadcasted value is the map statuses, e.g.:

```

[info] Cause: java.io.IOException: org.apache.spark.SparkException: Failed to get broadcast_0_piece0 of broadcast_0

[info] at org.apache.spark.util.Utils$.tryOrIOException(Utils.scala:1410)

[info] at org.apache.spark.broadcast.TorrentBroadcast.readBroadcastBlock(TorrentBroadcast.scala:226)

[info] at org.apache.spark.broadcast.TorrentBroadcast.getValue(TorrentBroadcast.scala:103)

[info] at org.apache.spark.broadcast.Broadcast.value(Broadcast.scala:70)

[info] at org.apache.spark.MapOutputTracker$.$anonfun$deserializeMapStatuses$3(MapOutputTracker.scala:967)

[info] at org.apache.spark.internal.Logging.logInfo(Logging.scala:57)

[info] at org.apache.spark.internal.Logging.logInfo$(Logging.scala:56)

[info] at org.apache.spark.MapOutputTracker$.logInfo(MapOutputTracker.scala:887)

[info] at org.apache.spark.MapOutputTracker$.deserializeMapStatuses(MapOutputTracker.scala:967)

```

There is a race condition. After the map statuses are broadcasted, the executors obtain the serialized broadcasted map statuses. If any fetch failure happens afterwards, the Spark scheduler invalidates the cached map statuses and destroys the broadcasted value of the map statuses. Any executor that then tries to deserialize the serialized broadcasted map statuses and access the broadcasted value gets an `IOException`. Currently we don't catch it in `MapOutputTrackerWorker`, and the above exception fails the application.

Normally we should throw a fetch failure exception in such a case, which the Spark scheduler will handle.
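
The exception translation can be sketched as follows. The names are hypothetical Python stand-ins (`MetadataFetchFailedError`, `read_broadcast`) for the pattern described above, not the Scala code in `MapOutputTracker`.

```python
class MetadataFetchFailedError(Exception):
    """Stand-in for a fetch failure that the scheduler knows how to retry."""

    def __init__(self, shuffle_id, message):
        super().__init__(message)
        self.shuffle_id = shuffle_id


def deserialize_map_statuses(shuffle_id, read_broadcast):
    """Read broadcasted map statuses, turning I/O errors into fetch failures."""
    try:
        return read_broadcast()
    except IOError as error:
        # The broadcast may have been destroyed after a fetch failure;
        # report it as a fetch failure instead of failing the application.
        raise MetadataFetchFailedError(
            shuffle_id,
            f"Unable to deserialize broadcasted map statuses: {error}",
        ) from error


def destroyed_broadcast():
    raise IOError("Failed to get broadcast_0_piece0 of broadcast_0")


try:
    deserialize_map_statuses(0, destroyed_broadcast)
    caught = None
except MetadataFetchFailedError as failure:
    caught = failure
```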

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Closes #32033 from viirya/fix-broadcast-master.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

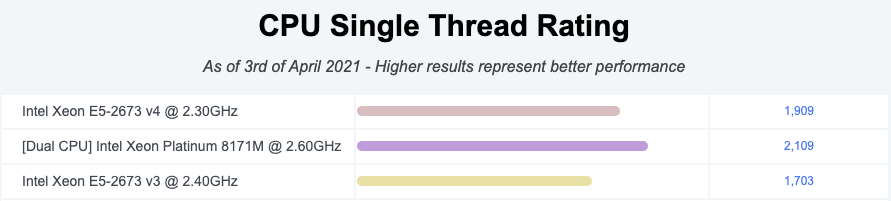

https://github.com/apache/spark/pull/32015 added a way to run benchmarks much more easily in the same GitHub Actions build. This PR updates the benchmark results using that workflow.

**NOTE** that, from my observations, GitHub Actions appears to use four types of CPU:

- Intel(R) Xeon(R) Platinum 8171M CPU 2.60GHz

- Intel(R) Xeon(R) CPU E5-2673 v4 2.30GHz

- Intel(R) Xeon(R) CPU E5-2673 v3 2.40GHz

- Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz

Given my quick research, it seems like they perform roughly similarly:

I couldn't find enough information about Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz but the performance seems roughly similar given the numbers.

So it shouldn't be a big deal, especially given that this way is much easier, encourages contributors to run more benchmarks, and guarantees the same number of cores and the same memory with the same software.

### Why are the changes needed?

To have a baseline for the benchmarks.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

It was generated from:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)

- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

Closes #32044 from HyukjinKwon/SPARK-34950.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to add a workflow that allows developers to run benchmarks and download the results files. After this PR, developers can run benchmarks in GitHub Actions in their fork.

### Why are the changes needed?

1. Very easy to use.

2. We can use (almost) the same environment to run the benchmarks. Given my few experiments and observations, the CPU, cores, and memory are the same.

3. Does not burden the ASF's resources at GitHub Actions.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested in https://github.com/HyukjinKwon/spark/pull/31.

Entire benchmarks are being run as below:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)

- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

### How do developers use it in their fork?

1. **Go to Actions in your fork, and click "Run benchmarks"**

2. **Run the benchmarks with JDK 8 or 11 with benchmark classes to run. Glob pattern is supported just like `testOnly` in SBT**

3. **After finishing the jobs, the benchmark results are available on the top in the underlying workflow:**

4. **After downloading it, unzip and untar at Spark git root directory:**

```bash

cd .../spark

mv ~/Downloads/benchmark-results-8.zip .

unzip benchmark-results-8.zip

tar -xvf benchmark-results-8.tar

```

5. **Check the results:**

```bash

git status

```

```

...

modified: core/benchmarks/MapStatusesSerDeserBenchmark-results.txt

```

Closes #32015 from HyukjinKwon/SPARK-34821-pr.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to add `ownerReference` to the executor ConfigMap to fix leakage.

### Why are the changes needed?

SPARK-30985 maintains the executor config map explicitly inside Spark. However, this config map can be leaked when Spark drivers die accidentally or are killed by K8s. We need to add `ownerReference` to make K8s garbage-collect these automatically.

The number of ConfigMaps is one of the resource quotas, so the leaked ConfigMaps currently cause Spark job submission failures.
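
For readers unfamiliar with owner references, the sketch below builds a ConfigMap manifest as a plain Python dictionary whose `metadata.ownerReferences` points at the driver pod. The field names follow the Kubernetes object metadata schema; the function, the pod name, the uid, and the choice of `controller: True` are illustrative assumptions and not values taken from this PR.

```python
def executor_config_map(name, data, driver_pod_name, driver_pod_uid):
    """Build a ConfigMap manifest owned by the driver pod (illustrative)."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {
            "name": name,
            # When the owning pod is deleted, Kubernetes garbage collection
            # removes the objects that reference it as an owner.
            "ownerReferences": [
                {
                    "apiVersion": "v1",
                    "kind": "Pod",
                    "name": driver_pod_name,
                    "uid": driver_pod_uid,
                    "controller": True,  # assumption for this sketch
                }
            ],
        },
        "data": data,
    }


manifest = executor_config_map(
    name="spark-exec-example-conf-map",        # hypothetical name
    data={"spark.properties": "spark.app.name=example"},
    driver_pod_name="example-driver",          # hypothetical pod
    driver_pod_uid="00000000-0000-0000-0000-000000000000",  # placeholder uid
)
```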

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs and check manually.

K8s IT is tested manually.

```

KubernetesSuite:

- Run SparkPi with no resources

- Run SparkPi with a very long application name.

- Use SparkLauncher.NO_RESOURCE

- Run SparkPi with a master URL without a scheme.

- Run SparkPi with an argument.

- Run SparkPi with custom labels, annotations, and environment variables.

- All pods have the same service account by default

- Run extraJVMOptions check on driver

- Run SparkRemoteFileTest using a remote data file

- Verify logging configuration is picked from the provided SPARK_CONF_DIR/log4j.properties

- Run SparkPi with env and mount secrets.

- Run PySpark on simple pi.py example

- Run PySpark to test a pyfiles example

- Run PySpark with memory customization

- Run in client mode.

- Start pod creation from template

- PVs with local storage

- Launcher client dependencies

- SPARK-33615: Launcher client archives

- SPARK-33748: Launcher python client respecting PYSPARK_PYTHON

- SPARK-33748: Launcher python client respecting spark.pyspark.python and spark.pyspark.driver.python

- Launcher python client dependencies using a zip file

- Test basic decommissioning

- Test basic decommissioning with shuffle cleanup

- Test decommissioning with dynamic allocation & shuffle cleanups

- Test decommissioning timeouts

- Run SparkR on simple dataframe.R example

Run completed in 19 minutes, 2 seconds.

Total number of tests run: 27

Suites: completed 2, aborted 0

Tests: succeeded 27, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

**BEFORE**

```

$ k get cm spark-exec-450b417895b3b2c7-conf-map -oyaml | grep ownerReferences

```

**AFTER**

```

$ k get cm spark-exec-bb37a27895b1c26c-conf-map -oyaml | grep ownerReferences

f:ownerReferences:

```

Closes #32042 from dongjoon-hyun/SPARK-34948.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Use the proper Javadoc format for Java classes within the `catalyst` module.

### Why are the changes needed?

Many Java classes in `catalyst`, especially those for DataSource V2, do not use the proper Javadoc format. Fixing the format improves the docs' readability.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

N/A

Closes #32038 from sunchao/javadoc.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

When we insert an empty DataFrame into a partition of a partitioned table, we call `PartitioningUtils.getPathFragment()`

to update this partition's metadata as well.

When the partition value is `null`, this throws an exception like:

```

[info] java.lang.NullPointerException:

[info] at scala.collection.immutable.StringOps$.length$extension(StringOps.scala:51)

[info] at scala.collection.immutable.StringOps.length(StringOps.scala:51)

[info] at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:35)

[info] at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)

[info] at scala.collection.immutable.StringOps.foreach(StringOps.scala:33)

[info] at org.apache.spark.sql.catalyst.catalog.ExternalCatalogUtils$.escapePathName(ExternalCatalogUtils.scala:69)

[info] at org.apache.spark.sql.catalyst.catalog.ExternalCatalogUtils$.getPartitionValueString(ExternalCatalogUtils.scala:126)

[info] at org.apache.spark.sql.execution.datasources.PartitioningUtils$.$anonfun$getPathFragment$1(PartitioningUtils.scala:354)

[info] at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

[info] at scala.collection.Iterator.foreach(Iterator.scala:941)

[info] at scala.collection.Iterator.foreach$(Iterator.scala:941)

[info] at scala.collection.AbstractIterator.foreach(Iterator.scala:1429)

[info] at scala.collection.IterableLike.foreach(IterableLike.scala:74)

[info] at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

```

`PartitioningUtils.getPathFragment()` should support `null` values too.
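
A null-safe path fragment can be sketched as below. This is an assumption-laden illustration: it maps `None` (and the empty string) to the Hive default partition name `__HIVE_DEFAULT_PARTITION__`, and it uses `urllib.parse.quote` as a stand-in for Spark's own path escaping. The function names are hypothetical.

```python
from urllib.parse import quote

# Assumed placeholder for partitions whose value is null or empty.
DEFAULT_PARTITION_NAME = "__HIVE_DEFAULT_PARTITION__"


def partition_value_string(value):
    """Return an escaped partition value, or the default name for null."""
    if value is None or value == "":
        return DEFAULT_PARTITION_NAME
    return quote(value, safe="")  # stand-in for the real escaping rules


def path_fragment(spec):
    """Join 'column=value' pairs into a relative partition path."""
    return "/".join(
        f"{quote(column, safe='')}={partition_value_string(value)}"
        for column, value in spec.items()
    )


fragment = path_fragment({"dt": "2021-04-01", "country": None})
```

The point of the sketch is only that the `None` check happens before any string operation is applied to the value, which is where the `NullPointerException` in the trace above originates.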

### Why are the changes needed?

Fix bug

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #32018 from AngersZhuuuu/SPARK-34926.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

In this PR, I propose to disable ANSI intervals as the result of date/timestamp subtraction in `ExtractBenchmark` and to benchmark only legacy intervals, because `EXTRACT( .. FROM ..)` doesn't support ANSI intervals so far.

### Why are the changes needed?

This fixes the benchmark failure:

```
[info] Running case: YEAR of interval
[error] Exception in thread "main" org.apache.spark.sql.AnalysisException: cannot resolve 'year((subtractdates(CAST(timestamp_seconds(id) AS DATE), DATE '0001-01-01') + subtracttimestamps(timestamp_seconds(id), TIMESTAMP '1000-01-01 01:02:03.123456')))' due to data type mismatch: argument 1 requires date type, however, '(subtractdates(CAST(timestamp_seconds(id) AS DATE), DATE '0001-01-01') + subtracttimestamps(timestamp_seconds(id), TIMESTAMP '1000-01-01 01:02:03.123456'))' is of day-time interval type.; line 1 pos 0;
[error] 'Project [extract(YEAR, (subtractdates(cast(timestamp_seconds(id#1456L) as date), 0001-01-01, false) + subtracttimestamps(timestamp_seconds(id#1456L), 1000-01-01 01:02:03.123456, false, Some(Europe/Moscow)))) AS YEAR#1458]
[error] +- Range (1262304000, 1272304000, step=1, splits=Some(1))
[error]   at org.apache.spark.sql.catalyst.analysis.package$AnalysisErrorAt.failAnalysis(package.scala:42)
[error]   at org.apache.spark.sql.catalyst.analysis.CheckAnalysis$$anonfun$$nestedInanonfun$checkAnalysis$1$2.applyOrElse(CheckAnalysis.scala:194)
```

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the `ExtractBenchmark` benchmark via:

```
$ build/sbt "sql/test:runMain org.apache.spark.sql.execution.benchmark.ExtractBenchmark"
```

Closes #32035 from MaxGekk/fix-ExtractBenchmark.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR introduces a new analysis rule, `DeduplicateRelations`, which first deduplicates any duplicate relations in a plan and then deduplicates conflicting attributes (reusing the `dedupRight` logic of `ResolveReferences`).

### Why are the changes needed?

`CostBasedJoinReorder` can fail when applied to a self-join, e.g.,

```scala
// test in JoinReorderSuite
test("join reorder with self-join") {
  val plan = t2.join(t1, Inner, Some(nameToAttr("t1.k-1-2") === nameToAttr("t2.k-1-5")))
    .select(nameToAttr("t1.v-1-10"))
    .join(t2, Inner, Some(nameToAttr("t1.v-1-10") === nameToAttr("t2.k-1-5")))

  // this can fail
  Optimize.execute(plan.analyze)
}
```

Besides, the new rule `DeduplicateRelations` enables some optimizations, e.g., LeftSemiAnti pushdown and redundant project removal, as reflected in the updated unit tests.
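The deduplication idea can be sketched on a toy plan model. This is only an illustration under stated assumptions: `Attr`, `Relation`, `Join`, and `dedup` are hypothetical stand-ins rather than Catalyst classes, and the sketch only re-issues attribute IDs for a relation that appears a second time. It does not rewrite references in parent operators, which a real rule would also have to do.

```scala
object DeduplicateRelationsSketch {
  // An attribute is identified by its id, not by its name.
  final case class Attr(name: String, id: Long)

  sealed trait Plan { def output: Seq[Attr] }
  final case class Relation(table: String, output: Seq[Attr]) extends Plan
  final case class Join(left: Plan, right: Plan) extends Plan {
    def output: Seq[Attr] = left.output ++ right.output
  }

  private def allIds(plan: Plan): Seq[Long] = plan match {
    case Relation(_, out) => out.map(_.id)
    case Join(l, r) => allIds(l) ++ allIds(r)
  }

  // Walk the plan left to right. A relation whose attribute ids were
  // already seen is a duplicate and gets fresh ids.
  def dedup(plan: Plan): Plan = {
    // Fresh ids start above every id already present in the plan.
    var nextId = (0L +: allIds(plan)).max + 1

    def go(p: Plan, seen: Set[Long]): (Plan, Set[Long]) = p match {
      case r: Relation =>
        val fixed =
          if (r.output.exists(a => seen.contains(a.id))) {
            r.copy(output = r.output.map { a =>
              val id = nextId
              nextId += 1
              a.copy(id = id)
            })
          } else r
        (fixed, seen ++ fixed.output.map(_.id))
      case Join(l, r) =>
        val (newLeft, seenLeft) = go(l, seen)
        val (newRight, seenBoth) = go(r, seenLeft)
        (Join(newLeft, newRight), seenBoth)
    }

    go(plan, Set.empty)._1
  }
}
```

With a self-join such as `Join(t, t)`, the left side keeps its attribute IDs and the right side receives new ones, so every output attribute of the join is unambiguous.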

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

Added and updated unit tests.

Closes #32027 from Ngone51/join-reorder-3.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds support for nested column types in the Spark ORC vectorized reader. Currently, the ORC vectorized reader [does not support nested column types (struct, array and map)](https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/orc/OrcFileFormat.scala#L138). We implemented a nested-column vectorized reader for FB-ORC in our internal fork of Spark, and we see a performance improvement over the non-vectorized reader when reading nested columns. In addition, this can also improve non-nested column performance when a query reads non-nested and nested columns together.

Before this PR:

* `OrcColumnVector` is the implementation class for Spark's `ColumnVector` to wrap Hive's/ORC's `ColumnVector` to read `AtomicType` data.

After this PR:

* `OrcColumnVector` is an abstract class that holds the interface shared by the multiple ORC column vector implementations, namely `OrcAtomicColumnVector` (for `AtomicType`), `OrcArrayColumnVector` (for `ArrayType`), `OrcMapColumnVector` (for `MapType`), and `OrcStructColumnVector` (for `StructType`). The original logic to read `AtomicType` data is therefore moved from `OrcColumnVector` to `OrcAtomicColumnVector`. The abstract `OrcColumnVector` class is needed to support nested columns (i.e. nested column vectors).

* A utility method `OrcColumnVectorUtils.toOrcColumnVector` is added to create Spark's `OrcColumnVector` from Hive's/ORC's `ColumnVector`.

* A new user-facing config `spark.sql.orc.enableNestedColumnVectorizedReader` is added to enable or disable the vectorized reader for nested columns. The default value is false (i.e. disabled by default). For tables with deeply nested columns, the vectorized reader might use too much memory for the sub-column vectors compared to the non-vectorized reader, so the config provides a way to work around OOM in queries that read wide and deeply nested columns. We plan to enable it by default in 3.3 and leave it disabled in 3.2 in case of any unknown bugs.
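The shape of the new class hierarchy can be sketched without any Spark or Hive dependency. Everything below is a simplified, hypothetical model: the data types and vector classes are local stand-ins, and `toColumnVector` only mirrors the role described for `OrcColumnVectorUtils.toOrcColumnVector`, not its real signature. The `vectorCount` helper is an addition for illustration. It shows how the number of sub-column vectors grows with nesting, which is the memory concern behind the new config.

```scala
object OrcVectorSketch {
  // Local stand-ins for data types (not Spark's classes).
  sealed trait DataType
  case object LongType extends DataType
  case object StringType extends DataType
  final case class ArrayType(element: DataType) extends DataType
  final case class MapType(key: DataType, value: DataType) extends DataType
  final case class StructType(fields: Seq[DataType]) extends DataType

  // Shared interface, playing the role of the abstract OrcColumnVector.
  sealed abstract class ColumnVector {
    def children: Seq[ColumnVector]
    // Number of vectors in this subtree, including this one.
    def vectorCount: Int = 1 + children.map(_.vectorCount).sum
  }
  final case class AtomicVector(dataType: DataType) extends ColumnVector {
    val children: Seq[ColumnVector] = Nil
  }
  final case class ArrayVector(elements: ColumnVector) extends ColumnVector {
    val children: Seq[ColumnVector] = Seq(elements)
  }
  final case class MapVector(keys: ColumnVector, values: ColumnVector) extends ColumnVector {
    val children: Seq[ColumnVector] = Seq(keys, values)
  }
  final case class StructVector(fields: Seq[ColumnVector]) extends ColumnVector {
    val children: Seq[ColumnVector] = fields
  }

  // Pick the implementation by data type, recursing into nested types.
  def toColumnVector(dt: DataType): ColumnVector = dt match {
    case ArrayType(element) => ArrayVector(toColumnVector(element))
    case MapType(key, value) => MapVector(toColumnVector(key), toColumnVector(value))
    case StructType(fields) => StructVector(fields.map(toColumnVector))
    case atomic => AtomicVector(atomic)
  }
}
```

In this model, an `array<struct<long, string>>` column needs four vectors (array, struct, and two atomic leaves), whereas a flat `long` column needs one.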

### Why are the changes needed?

Improve query performance when reading nested columns from ORC file format.

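Tested locally by adding a small benchmark to `OrcReadBenchmark.scala`. The vectorized reader with nested column support enabled shows more than 1x run-time improvement (2.5X relative to `Native ORC MR` in the results below).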

```
Running benchmark: SQL Nested Column Scan
  Running case: Native ORC MR
  Stopped after 2 iterations, 37850 ms
  Running case: Native ORC Vectorized (Enabled Nested Column)
  Stopped after 2 iterations, 15892 ms
  Running case: Native ORC Vectorized (Disabled Nested Column)
  Stopped after 2 iterations, 37954 ms
  Running case: Hive built-in ORC
  Stopped after 2 iterations, 35118 ms

Java HotSpot(TM) 64-Bit Server VM 1.8.0_181-b13 on Mac OS X 10.15.7
Intel(R) Core(TM) i9-9980HK CPU 2.40GHz
SQL Nested Column Scan:                          Best Time(ms)   Avg Time(ms)   Stdev(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------------------------------------
Native ORC MR                                            18706          18925         310          0.1       17839.6       1.0X
Native ORC Vectorized (Enabled Nested Column)             7625           7946         455          0.1        7271.6       2.5X
Native ORC Vectorized (Disabled Nested Column)           18415          18977         796          0.1       17561.5       1.0X
Hive built-in ORC                                        17469          17559         127          0.1       16660.1       1.1X
```
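As a quick sanity check on the table above, the `Relative` column is consistent with dividing the first case's best time by each case's best time and rounding to one decimal place. The sketch below reproduces that arithmetic from the reported numbers; `BenchmarkRelativeSketch` and its helpers are hypothetical names, not part of Spark's benchmark framework.

```scala
object BenchmarkRelativeSketch {
  // Best times in milliseconds, copied from the benchmark output.
  val bestTimeMs: Seq[(String, Double)] = Seq(
    "Native ORC MR" -> 18706.0,
    "Native ORC Vectorized (Enabled Nested Column)" -> 7625.0,
    "Native ORC Vectorized (Disabled Nested Column)" -> 18415.0,
    "Hive built-in ORC" -> 17469.0)

  // Speedup of a case relative to the baseline (higher is faster).
  def relative(baselineMs: Double, caseMs: Double): Double = baselineMs / caseMs

  // Render with one decimal place, independent of the default locale.
  def render(x: Double): String = "%.1fX".formatLocal(java.util.Locale.ROOT, x)

  def main(args: Array[String]): Unit = {
    val baseline = bestTimeMs.head._2
    bestTimeMs.foreach { case (name, ms) =>
      println(s"$name: ${render(relative(baseline, ms))}")
    }
  }
}
```

For the nested-column case this gives 18706 / 7625 ≈ 2.45, which rounds to the reported 2.5X.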

Benchmark:

```scala
nestedColumnScanBenchmark(1024 * 1024)

def nestedColumnScanBenchmark(values: Int): Unit = {
  val benchmark = new Benchmark(s"SQL Nested Column Scan", values, output = output)

  withTempPath { dir =>
    withTempTable("t1", "nativeOrcTable", "hiveOrcTable") {
      import spark.implicits._

      // Each row has an array-of-struct column and a map-of-struct column.
      spark.range(values).map(_ => Random.nextLong).map { x =>
        val arrayOfStructColumn = (0 until 5).map(i => (x + i, s"$x" * 5))
        val mapOfStructColumn = Map(
          s"$x" -> (x * 0.1, (x, s"$x" * 100)),
          (s"$x" * 2) -> (x * 0.2, (x, s"$x" * 200)),
          (s"$x" * 3) -> (x * 0.3, (x, s"$x" * 300)))
        (arrayOfStructColumn, mapOfStructColumn)
      }.toDF("col1", "col2")
        .createOrReplaceTempView("t1")

      prepareTable(dir, spark.sql(s"SELECT * FROM t1"))

      benchmark.addCase("Native ORC MR") { _ =>
        withSQLConf(SQLConf.ORC_VECTORIZED_READER_ENABLED.key -> "false") {
          spark.sql("SELECT SUM(SIZE(col1)), SUM(SIZE(col2)) FROM nativeOrcTable").noop()
        }
      }

      benchmark.addCase("Native ORC Vectorized (Enabled Nested Column)") { _ =>
        spark.sql("SELECT SUM(SIZE(col1)), SUM(SIZE(col2)) FROM nativeOrcTable").noop()
      }

      benchmark.addCase("Native ORC Vectorized (Disabled Nested Column)") { _ =>
        withSQLConf(SQLConf.ORC_VECTORIZED_READER_NESTED_COLUMN_ENABLED.key -> "false") {
          spark.sql("SELECT SUM(SIZE(col1)), SUM(SIZE(col2)) FROM nativeOrcTable").noop()
        }
      }

      benchmark.addCase("Hive built-in ORC") { _ =>
        spark.sql("SELECT SUM(SIZE(col1)), SUM(SIZE(col2)) FROM hiveOrcTable").noop()
      }

      benchmark.run()
    }
  }
}
```

### Does this PR introduce _any_ user-facing change?

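No, apart from the new config `spark.sql.orc.enableNestedColumnVectorizedReader` described above, which is disabled by default.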

### How was this patch tested?

Added one simple test in `OrcSourceSuite.scala` to verify correctness.

More unit tests and a benchmark are definitely needed here, but I want to collect feedback first before crafting more tests.

Closes #31958 from c21/orc-vector.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>