## What changes were proposed in this pull request?

Misc code cleanup from lgtm.com analysis. See comments below for details.

## How was this patch tested?

Existing tests.

Closes #23571 from srowen/SPARK-26640.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Co-authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Fix the implementation of unary negation (`__neg__`) in PySpark DenseVectors.
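
The description does not show the fix itself, so the following is only a minimal sketch of what unary negation is expected to do for a dense vector. `DenseVectorSketch` is a hypothetical stand-in written for this illustration; it is not the PySpark class and does not depend on PySpark or NumPy.

```python
class DenseVectorSketch:
    """Hypothetical stand-in for a dense vector; not the PySpark class."""

    def __init__(self, values):
        self.values = [float(v) for v in values]

    def __neg__(self):
        # Unary negation returns a new vector with every element negated
        # and leaves the original vector untouched.
        return DenseVectorSketch(-v for v in self.values)

    def __repr__(self):
        return "DenseVectorSketch(%r)" % (self.values,)


v = DenseVectorSketch([1.0, -2.0, 0.5])
negated = -v
print(negated)
```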

## How was this patch tested?

Existing tests, plus new doctest

Closes #23570 from srowen/SPARK-26638.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Try to make labels more obvious

"avg hash probe" avg hash probe bucket iterations

"partition pruning time (ms)" dynamic partition pruning time

"total number of files in the table" file count

"number of files that would be returned by partition pruning alone" file count after partition pruning

"total size of files in the table" file size

"size of files that would be returned by partition pruning alone" file size after partition pruning

"metadata time (ms)" metadata time

"aggregate time" time in aggregation build

"aggregate time" time in aggregation build

"time to construct rdd bc" time to build

"total time to remove rows" time to remove

"total time to update rows" time to update

Add proper metric type to some metrics:

"bytes of written output" written output - createSizeMetric

"metadata time" - createTimingMetric

"dataSize" - createSizeMetric

"collectTime" - createTimingMetric

"buildTime" - createTimingMetric

"broadcastTime" - createTimingMetric

## How is this patch tested?

Existing tests.

Author: Stacy Kerkela <stacy.kerkela@databricks.com>

Signed-off-by: Juliusz Sompolski <julek@databricks.com>

Closes #23551 from juliuszsompolski/SPARK-26622.

Lead-authored-by: Juliusz Sompolski <julek@databricks.com>

Co-authored-by: Stacy Kerkela <stacy.kerkela@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The PR makes the hardcoded `spark.shuffle` configs use `ConfigEntry` and puts them in the config package.

## How was this patch tested?

Existing unit tests

Closes #23550 from 10110346/ConfigEntry_shuffle.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to use *java.time* classes in `stringToDate` and `stringToTimestamp`. This switches the methods from the hybrid calendar (Gregorian+Julian) to the Proleptic Gregorian calendar. It should also make the casting consistent with other Spark classes that convert textual representations of dates/timestamps to `DateType`/`TimestampType`.
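
To illustrate the difference between the two calendars, here is a small sketch using Python's standard `datetime` module, which (like *java.time*) uses the proleptic Gregorian calendar. It is only an analogy for the calendar behaviour and does not call any Spark code.

```python
from datetime import date, timedelta

# Python's datetime.date uses the proleptic Gregorian calendar, so the days
# that a hybrid Julian+Gregorian calendar skips in October 1582 are valid here.
last_julian_day = date(1582, 10, 4)
next_day = last_julian_day + timedelta(days=1)

# In a hybrid calendar with the 1582 cutover, the day after 1582-10-04
# is 1582-10-15; in the proleptic Gregorian calendar it is simply the 5th.
print(next_day.isoformat())  # prints 1582-10-05
```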

## How was this patch tested?

The changes were tested by existing suites - `HashExpressionsSuite`, `CastSuite` and `DateTimeUtilsSuite`.

Closes #23512 from MaxGekk/utf8string-timestamp-parsing.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

Create a framework for file source V2 based on data source V2 API.

As a good example for demonstrating the framework, this PR also migrates the ORC source. This is because the ORC file source supports both row scan and columnar scan, and the implementation is simpler compared with Parquet.

Note: Currently only the read path of the V2 API is done, so this framework and migration cover only the read path.

The following scan features are supported:

- Scan ColumnarBatch

- Scan UnsafeRow

- Push down filters

- Push down required columns

Not supported (due to limitations of the data source V2 API):

- Stats metrics

- Catalog table

- Writes

## How was this patch tested?

Unit test

Closes #23383 from gengliangwang/latest_orcV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

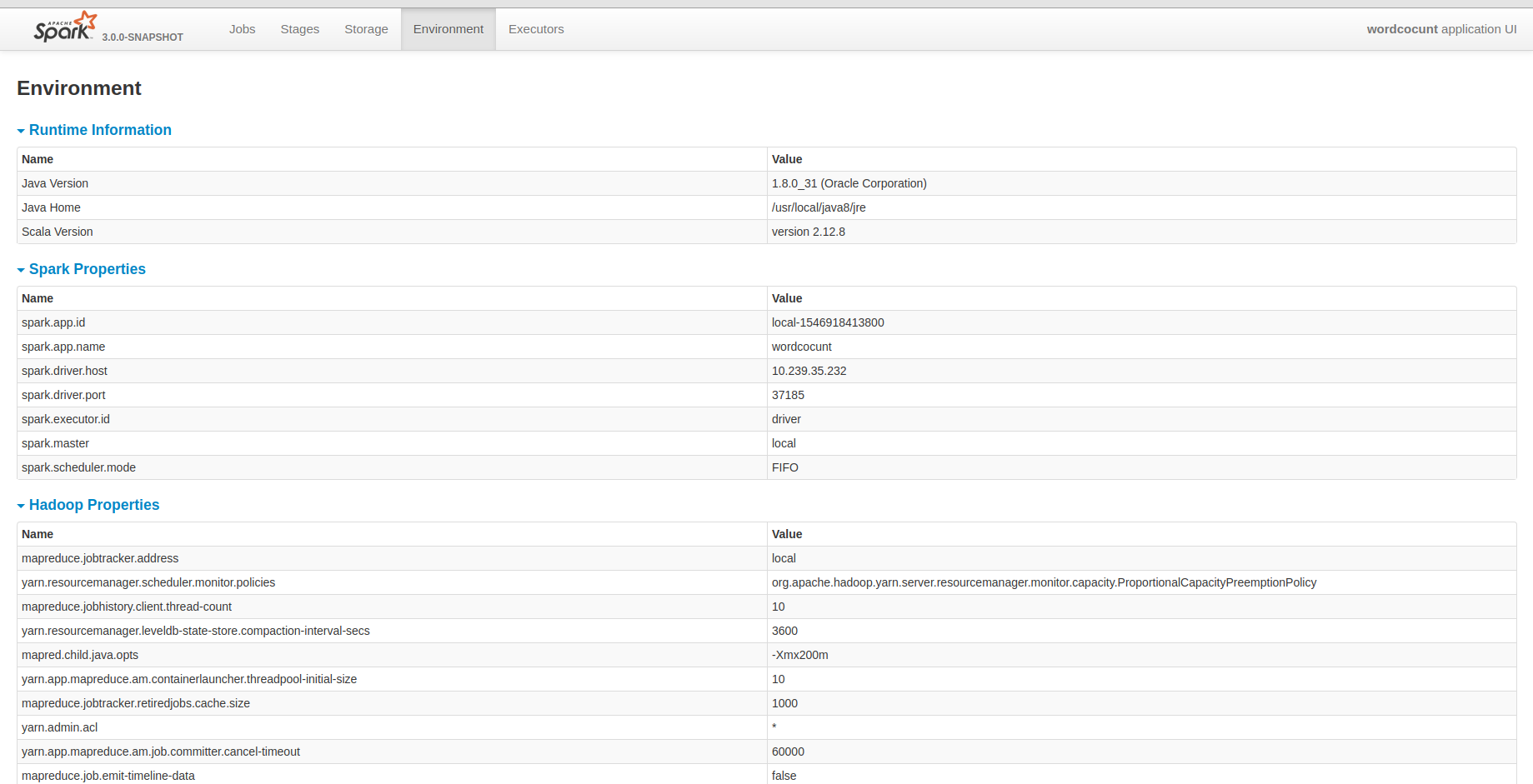

## What changes were proposed in this pull request?

I know that YARN provides all Hadoop configurations, but I think it would be fine for the history server to show all of the configuration in one place. It will be convenient for debugging some problems.

## How was this patch tested?

Closes #23486 from deshanxiao/spark-26457.

Lead-authored-by: xiaodeshan <xiaodeshan@xiaomi.com>

Co-authored-by: deshanxiao <42019462+deshanxiao@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The call to `translate_component` only supplied 2 out of the 3 required arguments. I added a default empty list for the missing argument to avoid a run-time error.

I work for Semmle, and noticed the bug with our LGTM code analyzer:

0655f1624f/files/dev/create-release/releaseutils.py?sort=name&dir=ASC&mode=heatmap#x1434915b6576fb40:1

## How was this patch tested?

I checked that `./dev/run-tests` passes OK.

Closes #23567 from ipwright/wrong-number-of-arguments-fix.

Authored-by: wright <wright@semmle.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The PR makes the hardcoded configs below use `ConfigEntry`.

* spark.kryo

* spark.kryoserializer

* spark.serializer

* spark.jars

* spark.files

* spark.submit

* spark.deploy

* spark.worker

This patch doesn't change configs which are not relevant to SparkConf (e.g. system properties).

## How was this patch tested?

Existing tests.

Closes #23532 from HeartSaVioR/SPARK-26466-v2.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The spark-submit usage message should be brought in sync with recent changes, in particular regarding K8S support. These are the proposed changes to the usage message:

--executor-cores NUM -> can be used for Spark on YARN and K8S

--principal PRINCIPAL and --keytab KEYTAB -> can be used for Spark on YARN and K8S

--total-executor-cores NUM -> can be used for Spark standalone, YARN and K8S

In addition this PR proposes to remove certain implementation details from the --keytab argument description as the implementation details vary between YARN and K8S, for example.

## How was this patch tested?

Manually tested

Closes #23518 from LucaCanali/updateSparkSubmitArguments.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Add `ExecutorClassLoader.getResourceAsStream`, so that classes dynamically generated by the REPL can be accessed by user code as `InputStream`s for non-class-loading purposes, such as reading the class file for extracting method/constructor parameter names.

Caveat: The convention in Java's `ClassLoader` is that `ClassLoader.getResourceAsStream()` should be considered as a convenience method of `ClassLoader.getResource()`, where the latter provides a `URL` for the resource, and the former invokes `openStream()` on it to serve the resource as an `InputStream`. The former should also catch `IOException` from `openStream()` and convert it to `null`.

This PR breaks this convention by only overriding `ClassLoader.getResourceAsStream()` instead of also overriding `ClassLoader.getResource()`, so after this PR, it would be possible to get a non-null result from the former, but get a null result from the latter. This isn't ideal, but it's sufficient to cover the main use case and practically it shouldn't matter.

To implement the convention properly, we'd need to register a URL protocol handler with Java to allow it to properly handle the `spark://` protocol, etc, which sounds like an overkill for the intent of this PR.

Credit goes to zsxwing for the initial investigation and fix suggestion.

## How was this patch tested?

Added new test case in `ExecutorClassLoaderSuite` and `ReplSuite`.

Closes #23558 from rednaxelafx/executorclassloader-getresourceasstream.

Authored-by: Kris Mok <kris.mok@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The regex (`spark.redaction.regex`) that is used to decide which config properties or environment settings are sensitive should also include `oauthToken`, so that it matches `spark.kubernetes.authenticate.submission.oauthToken`.
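
As a rough sketch of the idea, the snippet below redacts values whose key matches a pattern. The pattern `(?i)secret|password|oauthToken`, the `redact` helper, and the placeholder string are assumptions made for this illustration; the actual default value of `spark.redaction.regex` is not quoted in this description.

```python
import re

# Assumed pattern for illustration only; the real default of
# spark.redaction.regex is not quoted in the description.
REDACTION_PATTERN = re.compile(r"(?i)secret|password|oauthToken")


def redact(conf):
    """Replace values whose key matches the pattern with a placeholder."""
    return {
        key: "*********(redacted)" if REDACTION_PATTERN.search(key) else value
        for key, value in conf.items()
    }


conf = {
    "spark.kubernetes.authenticate.submission.oauthToken": "abc123",
    "spark.app.name": "demo",
}
redacted = redact(conf)
print(redacted)
```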

## How was this patch tested?

Simple regex addition - happy to add a test if needed.

Author: Vinoo Ganesh <vganesh@palantir.com>

Closes #23555 from vinooganesh/vinooganesh/SPARK-26625.

## What changes were proposed in this pull request?

Currently, `SerializeFromObject` keeps all serializer expressions for the domain object even when only part of the output attributes are used by the top plan.

We should be able to prune unused serializers from `SerializeFromObject` in such case.

## How was this patch tested?

Added tests.

Closes #23562 from viirya/SPARK-26619.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

In the PR, I propose a new built-in datasource named `noop`, which can be used for:

- benchmarking, to avoid the additional overhead of actions and unnecessary type conversions

- caching of datasets/dataframes

- producing other side effects as a consequence of row materialisation, like uploading data to IO caches.

## How was this patch tested?

Added a test to check that datasource rows are materialised.

Closes #23471 from MaxGekk/none-datasource.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

When a streaming query has multiple file streams, and there is a batch where one of the file streams doesn't have data, then if the query has to restart from that batch, it will throw the following error.

```
java.lang.IllegalStateException: batch 1 doesn't exist
    at org.apache.spark.sql.execution.streaming.HDFSMetadataLog$.verifyBatchIds(HDFSMetadataLog.scala:300)
    at org.apache.spark.sql.execution.streaming.FileStreamSourceLog.get(FileStreamSourceLog.scala:120)
    at org.apache.spark.sql.execution.streaming.FileStreamSource.getBatch(FileStreamSource.scala:181)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$org$apache$spark$sql$execution$streaming$MicroBatchExecution$$populateStartOffsets$2.apply(MicroBatchExecution.scala:294)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$org$apache$spark$sql$execution$streaming$MicroBatchExecution$$populateStartOffsets$2.apply(MicroBatchExecution.scala:291)
    at scala.collection.Iterator$class.foreach(Iterator.scala:891)
    at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)
    at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)
    at org.apache.spark.sql.execution.streaming.StreamProgress.foreach(StreamProgress.scala:25)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution.org$apache$spark$sql$execution$streaming$MicroBatchExecution$$populateStartOffsets(MicroBatchExecution.scala:291)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$runActivatedStream$1$$anonfun$apply$mcZ$sp$1.apply$mcV$sp(MicroBatchExecution.scala:178)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$runActivatedStream$1$$anonfun$apply$mcZ$sp$1.apply(MicroBatchExecution.scala:175)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$runActivatedStream$1$$anonfun$apply$mcZ$sp$1.apply(MicroBatchExecution.scala:175)
    at org.apache.spark.sql.execution.streaming.ProgressReporter$class.reportTimeTaken(ProgressReporter.scala:251)
    at org.apache.spark.sql.execution.streaming.StreamExecution.reportTimeTaken(StreamExecution.scala:61)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$runActivatedStream$1.apply$mcZ$sp(MicroBatchExecution.scala:175)
    at org.apache.spark.sql.execution.streaming.ProcessingTimeExecutor.execute(TriggerExecutor.scala:56)
    at org.apache.spark.sql.execution.streaming.MicroBatchExecution.runActivatedStream(MicroBatchExecution.scala:169)
    at org.apache.spark.sql.execution.streaming.StreamExecution.org$apache$spark$sql$execution$streaming$StreamExecution$$runStream(StreamExecution.scala:295)
    at org.apache.spark.sql.execution.streaming.StreamExecution$$anon$1.run(StreamExecution.scala:205)
```

The existing `HDFSMetadataLog.verifyBatchIds` threw an error whenever the `batchIds` list was empty. In the context of `FileStreamSource.getBatch` (where verify is called) and `FileStreamSourceLog` (a subclass of `HDFSMetadataLog`), this is usually okay because, in a streaming query with one file stream, the `batchIds` can never be empty:

- A batch is planned only when the `FileStreamSourceLog` has seen a new offset (that is, there are new data files).

- So `FileStreamSource.getBatch` will be called on X to Y where X will always be < Y. This internally calls `HDFSMetadataLog.verifyBatchIds(X+1, Y)` with Y-X ids.

For example, `FileStreamSource.getBatch(4, 5)` will call `verify(batchIds = Seq(5), start = 5, end = 5)`. However, the invariant of X < Y does not hold when there are two file stream sources, as a batch may be planned even when only one of the file streams has data. So one of the file streams may not have data, which can lead to `FileStreamSource.getBatch(X, X)` -> `verify(batchIds = Seq.empty, start = X+1, end = X)` -> failure.

Note that `FileStreamSource.getBatch(X, X)` gets called **only when restarting a query in a batch where a file source did not have data**. This is because in normal planning of batches, `MicroBatchExecution` avoids calling `FileStreamSource.getBatch(X, X)` when offset X has not changed. However, when restarting a stream at such a batch, `MicroBatchExecution.populateStartOffsets()` calls `FileStreamSource.getBatch(X, X)` (DataSource V1 hack to initialize the source with last known offsets) thus hitting this issue.

The minimum solution here is to skip verification when `FileStreamSource.getBatch(X, X)` is called.
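
The failure mode and the proposed skip can be modelled in a few lines. This is a simplified Python sketch, not the Scala code from the PR: `verify_batch_ids`, `get_batch_ids`, the dict-based log, and the `skip_when_empty_range` flag are all hypothetical names chosen for the illustration.

```python
def verify_batch_ids(batch_ids, start, end):
    """Simplified model of the check: every id in [start, end] must be present."""
    if not batch_ids:
        raise ValueError("batch %d doesn't exist" % start)
    expected = list(range(start, end + 1))
    if list(batch_ids) != expected:
        raise ValueError("batches %r do not match expected %r" % (batch_ids, expected))


def get_batch_ids(log, start_offset, end_offset, skip_when_empty_range=True):
    """Model of getBatch(X, Y): look up batches X+1..Y in a dict-based log."""
    start, end = start_offset + 1, end_offset
    batch_ids = [b for b in sorted(log) if start <= b <= end]
    if skip_when_empty_range and start > end:
        # getBatch(X, X): nothing to read, so there is nothing to verify.
        return batch_ids
    verify_batch_ids(batch_ids, start, end)
    return batch_ids


log = {5: ["file-a"]}
print(get_batch_ids(log, 4, 5))  # normal case: one new batch to read
print(get_batch_ids(log, 5, 5))  # restart case: empty range, verification skipped
```

With `skip_when_empty_range=False`, the second call raises, which mirrors the restart failure described above.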

## How was this patch tested?

Closes #23557 from tdas/SPARK-26629.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

This PR proposes to explicitly document that SparkContext cannot be shared for multiprocessing, and multi-processing execution is not guaranteed in PySpark.

I have seen cases where users attempt to use multiple processes via the `multiprocessing` module from time to time. For instance, see the example in the JIRA (https://issues.apache.org/jira/browse/SPARK-25992).

Py4J itself does not support Python's multiprocessing out of the box (sharing the same JavaGateways for instance).

In general, such a pattern can cause errors with somewhat arbitrary symptoms that are difficult to diagnose. For instance, see the error message in the JIRA:

```
Traceback (most recent call last):
  File "/Users/abdealijk/anaconda3/lib/python3.6/socketserver.py", line 317, in _handle_request_noblock
    self.process_request(request, client_address)
  File "/Users/abdealijk/anaconda3/lib/python3.6/socketserver.py", line 348, in process_request
    self.finish_request(request, client_address)
  File "/Users/abdealijk/anaconda3/lib/python3.6/socketserver.py", line 361, in finish_request
    self.RequestHandlerClass(request, client_address, self)
  File "/Users/abdealijk/anaconda3/lib/python3.6/socketserver.py", line 696, in __init__
    self.handle()
  File "/usr/local/hadoop/spark2.3.1/python/pyspark/accumulators.py", line 238, in handle
    _accumulatorRegistry[aid] += update
KeyError: 0
```

The root cause was that the global `_accumulatorRegistry` is not shared across processes.

Using threads instead of processes is quite easy in Python. See `threading` vs `multiprocessing` in Python: they can usually be direct replacements for each other. For instance, Python also supports a thread pool (`multiprocessing.pool.ThreadPool`), which can be a direct replacement for the process-based pool (`multiprocessing.Pool`).
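
A minimal sketch of the thread-based alternative, using only the standard library. The `registry` dict and `add_update` function are hypothetical and merely stand in for module-level state such as a global registry; no PySpark API is used here.

```python
import threading
from multiprocessing.pool import ThreadPool

# Module-level state, loosely analogous to a global registry.
registry = {0: 0}
registry_lock = threading.Lock()


def add_update(update):
    # Threads run in the same process, so they all see the same `registry`.
    # The lock keeps the read-modify-write step safe under concurrency.
    with registry_lock:
        registry[0] += update
    return update


with ThreadPool(4) as pool:
    results = pool.map(add_update, [1, 2, 3, 4])

print(results, registry)
```

With a process-based `multiprocessing.Pool`, each worker would update its own copy of `registry` instead, so the parent process would not see the updates.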

## How was this patch tested?

Manually tested, and manually built the doc.

Closes #23564 from HyukjinKwon/SPARK-25992.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Fixing resource leaks where TransportClient/TransportServer instances are not closed properly.

In StandaloneSchedulerBackend, a null check is added because during the SparkContextSchedulerCreationSuite #"local-cluster" test it turned out that the client is not initialised, as org.apache.spark.scheduler.cluster.StandaloneSchedulerBackend#start isn't called. This threw an NPE and left some resources open.

## How was this patch tested?

By executing the unittests and using some extra temporary logging for counting created and closed TransportClient/TransportServer instances.

Closes #23540 from attilapiros/leaks.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently in `TransportRequestHandler.processStreamRequest`, when a stream request is processed, the stream id is not registered with the current channel in the stream manager. It should be registered so that, if the channel gets terminated, we can also remove the streams associated with its stream requests.

This also cleans up channel registration in `StreamManager`. Since `StreamManager` doesn't register channel but only `OneForOneStreamManager` does it, this removes `registerChannel` from `StreamManager`. When `OneForOneStreamManager` goes to register stream, it will also register channel for the stream.

## How was this patch tested?

Existing tests.

Closes #23521 from viirya/SPARK-26604.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch adds a check to verify that the consumer group id is set correctly when a custom group id is provided via the Kafka parameters.

## How was this patch tested?

Modified UT.

Closes #23544 from HeartSaVioR/SPARK-26350-follow-up-actual-verification-on-UT.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

Adjust the batch write API to match the read API refactor after https://github.com/apache/spark/pull/23086

The doc with high-level ideas:

https://docs.google.com/document/d/1vI26UEuDpVuOjWw4WPoH2T6y8WAekwtI7qoowhOFnI4/edit?usp=sharing

Basically it renames `BatchWriteSupportProvider` to `SupportsBatchWrite` and makes it extend `Table`. It renames `WriteSupport` to `Write`. It also cleans up some code as the batch API is completed.

This PR also removes the test from https://github.com/apache/spark/pull/22688 . Now data source must return a table for read/write.

A few notes about future changes:

1. We will create `SupportsStreamingWrite` later for streaming APIs

2. We will create `SupportsBatchReplaceWhere`, `SupportsBatchAppend`, etc. for the new end-user write APIs. I think streaming APIs would continue to use `OutputMode`, and new end-user write APIs will apply to batch only, at least in the near future.

3. We will remove `SaveMode` from data source API: https://issues.apache.org/jira/browse/SPARK-26356

## How was this patch tested?

existing tests

Closes #23208 from cloud-fan/refactor-batch.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Added a new configuration `spark.mesos.driver.memoryOverhead` for providing the driver memory overhead in Mesos cluster mode.

## How was this patch tested?

Verified manually: the Resource Scheduler allocates (driver memory + driver memoryOverhead) for the driver in Mesos cluster mode.

Closes #17726 from devaraj-kavali/SPARK-17928.

Authored-by: Devaraj K <devaraj@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Make the following hardcoded configs use `ConfigEntry`.

spark.memory

spark.storage

spark.io

spark.buffer

spark.rdd

spark.locality

spark.broadcast

spark.reducer

## How was this patch tested?

Existing tests.

Closes #23447 from pralabhkumar/execution_categories.

Authored-by: Pralabh Kumar <pkumar2@linkedin.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Kafka does not yet support obtaining a delegation token with a proxy user. This has to be turned off until https://issues.apache.org/jira/browse/KAFKA-6945 is implemented.

In this PR an exception will be thrown when this situation happens.

## How was this patch tested?

Additional unit test.

Closes #23511 from gaborgsomogyi/SPARK-26592.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR contains benchmarks for `In` and `InSet` expressions. They cover literals of different data types and will help us to decide where to integrate the switch-based logic for bytes/shorts/ints.

As discussed in [PR-23171](https://github.com/apache/spark/pull/23171), one potential approach is to convert `In` to `InSet` if all elements are literals, independently of data types and the number of elements. According to the results of this PR, we might want to keep the threshold for the number of elements. The if-else approach might be faster for some data types on a small number of elements (structs? arrays? small decimals?).

### byte / short / int / long

Unless the number of items is really big, `InSet` is slower than `In` because of autoboxing.

Interestingly, `In` scales worse on bytes/shorts than on ints/longs. For example, `InSet` starts to match the performance on around 50 bytes/shorts while this does not happen on the same number of ints/longs. This is a bit strange as shorts/bytes (e.g., `(byte) 1`, `(short) 2`) are represented as ints in the bytecode.

### float / double

Use cases on floats/doubles also suffer from autoboxing. Therefore, `In` outperforms `InSet` on 10 elements.

Similarly to shorts/bytes, `In` scales worse on floats/doubles than on ints/longs because the equality condition is more complicated (e.g., `(java.lang.Float.isNaN(filter_valueArg_0) && java.lang.Float.isNaN(9.0F)) || filter_valueArg_0 == 9.0F`).
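
The NaN-aware condition quoted above can be modelled as follows. This is a Python sketch of the comparison logic only, not the generated Java code; `nan_safe_equals` and `in_if_else` are hypothetical names for this illustration.

```python
import math


def nan_safe_equals(value, literal):
    """Equality that also treats NaN as equal to NaN, as in the quoted condition."""
    return (math.isnan(value) and math.isnan(literal)) or value == literal


def in_if_else(value, literals):
    """Model of the if-else style `In`: compare against each literal in turn."""
    return any(nan_safe_equals(value, lit) for lit in literals)


nan = float("nan")
print(nan == nan)                   # plain equality never matches NaN
print(in_if_else(nan, [1.0, nan]))  # the NaN-aware check does
```

Each literal needs two `isNaN` checks plus a comparison, which is why this path costs more per element than the plain `==` used for ints/longs.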

### decimal

The reason why we have separate benchmarks for small and large decimals is that Spark might use longs to represent decimals in some cases.

If this optimization happens, then `equals` will be nothing more than comparing longs. If this does not happen, Spark will create an instance of `scala.BigDecimal` and use it for comparisons. The latter is more expensive.

`Decimal$hashCode` will always use `scala.BigDecimal$hashCode` even if the number is small enough to fit into a long variable. As a consequence, we see that use cases on small decimals are faster with `In` as they are using long comparisons under the hood. Large decimal values are always faster with `InSet`.

### string

`UTF8String$equals` is not cheap. Therefore, `In` does not really outperform `InSet` as in previous use cases.

### timestamp / date

Under the hood, timestamp/date values will be represented as long/int values. So, `In` allows us to avoid autoboxing.

### array

Arrays are working as expected. `In` is faster on 5 elements while `InSet` is faster on 15 elements. The benchmarks are using `UnsafeArrayData`.

### struct

`InSet` is always faster than `In` for structs. These benchmarks use `GenericInternalRow`.

Closes #23291 from aokolnychyi/spark-26203.

Lead-authored-by: Anton Okolnychyi <aokolnychyi@apple.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Fix typos in comments by replacing "in-heap" with "on-heap".

## How was this patch tested?

Existing Tests.

Closes #23533 from SongYadong/typos_inheap_to_onheap.

Authored-by: SongYadong <song.yadong1@zte.com.cn>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR reverts #22938 per discussion in #23325.

Closes #23325.

Closes #23543 from MaxGekk/return-nulls-from-json-parser.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

To skip the steps that remove binary license/notice files in a source release for branch-2.3 (these files only exist in master/branch-2.4 now), this PR checks the Spark release version in `dev/create-release/release-build.sh`.

## How was this patch tested?

Manually checked.

Closes #23538 from maropu/FixReleaseScript.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR allows the user to override `kafka.group.id` for better monitoring or security. The user needs to make sure there are not multiple queries or sources using the same group id.

It also fixes a bug that the `groupIdPrefix` option cannot be retrieved.

## How was this patch tested?

Newly added unit tests.

Closes #23301 from zsxwing/SPARK-26350.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

In the PR, I propose to switch to `TimestampFormatter`/`DateFormatter` when casting dates/timestamps to strings. The changes should make the date/timestamp casting consistent with JSON/CSV datasources and time-related functions like `to_date`, `to_unix_timestamp`/`from_unixtime`.

Local formatters are moved out of `DateTimeUtils` to where they are actually used. This avoids re-creating a formatter instance on each call. Another reason is to have a separate parser for `PartitioningUtils`, because the default parsing pattern cannot be used (expected optional section `[.S]`).

## How was this patch tested?

It was tested by `DateTimeUtilsSuite`, `CastSuite` and `JDBC*Suite`.

Closes #23391 from MaxGekk/thread-local-date-format.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Make sure broadcast hint is applied to partitioned tables.

## How was this patch tested?

- A new unit test in PruneFileSourcePartitionsSuite

- Unit test suites touched by SPARK-14581: JoinOptimizationSuite, FilterPushdownSuite, ColumnPruningSuite, and PruneFiltersSuite

Closes #23507 from jzhuge/SPARK-26576.

Closes #23530 from jzhuge/SPARK-26576-master.

Authored-by: John Zhuge <jzhuge@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This fixes a bug in #23036 that would cause a join hint to be applied to a node it is not supposed to be applied to after join reordering. For example,

```
val join = df.join(df, "id")
val broadcasted = join.hint("broadcast")
val join2 = join.join(broadcasted, "id").join(broadcasted, "id")
```

There should only be 2 broadcast hints on `join2`, but after join reordering there would be 4. It is because the hint application in join reordering compares the attribute set for testing relation equivalency.

Moreover, it could still be problematic even if the child relations were used in testing relation equivalency, due to the potential exprId conflict in nested self-join.

As a result, this PR simply reverts the join reorder hint behavior change introduced in #23036, which means if a join hint is present, the join node itself will not participate in the join reordering, while the sub-joins within its children still can.

## How was this patch tested?

Added new tests

Closes #23524 from maryannxue/query-hint-followup-2.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

When creating some unsafe projections, Spark rebuilds the map of schema attributes once for each expression in the projection. Some file format readers create one unsafe projection per input file, others create one per task. ProjectExec also creates one unsafe projection per task. As a result, for wide queries on wide tables, Spark might build the map of schema attributes hundreds of thousands of times.

This PR changes two functions to reuse the same AttributeSeq instance when creating BoundReference objects for each expression in the projection. This avoids the repeated rebuilding of the map of schema attributes.
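
The cost difference can be sketched with a simplified Python model. The function names below (`build_index`, `bind_rebuilding`, `bind_reusing`) are hypothetical and only illustrate the idea of building the name-to-ordinal map once; they are not Spark's `AttributeSeq` or `BoundReference` APIs.

```python
def build_index(schema):
    """Build the name -> ordinal map for a schema (the costly step for wide schemas)."""
    return {name: ordinal for ordinal, name in enumerate(schema)}


def bind_rebuilding(expressions, schema):
    # Rebuilds the map once per expression: O(len(expressions) * len(schema)).
    return [build_index(schema)[name] for name in expressions]


def bind_reusing(expressions, schema):
    # Builds the map once and reuses it: O(len(schema) + len(expressions)).
    index = build_index(schema)
    return [index[name] for name in expressions]


schema = ["id%d" % i for i in range(6000)]
expressions = ["id1", "id42", "id5999"]
print(bind_reusing(expressions, schema))
```

Both functions return the same ordinals; only the number of times the map is built differs, which matters when the projection and the schema are both wide.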

### Benchmarks

The time saved by this PR depends on size of the schema, size of the projection, number of input files (or number of file splits), number of tasks, and file format. I chose a couple of example cases.

In the following tests, I ran the query

```sql
select * from table where id1 = 1
```

Matching rows are about 0.2% of the table.

#### Orc table 6000 columns, 500K rows, 34 input files

baseline | pr | improvement
----|----|----
1.772306 min | 1.487267 min | 16.082943%

#### Orc table 6000 columns, 500K rows, *17* input files

baseline | pr | improvement
----|----|----
1.656400 min | 1.423550 min | 14.057595%

#### Orc table 60 columns, 50M rows, 34 input files

baseline | pr | improvement
----|----|----
0.299878 min | 0.290339 min | 3.180926%

#### Parquet table 6000 columns, 500K rows, 34 input files

baseline | pr | improvement
----|----|----
1.478306 min | 1.373728 min | 7.074165%

Note: The parquet reader does not create an unsafe projection. However, the filter operation in the query causes the planner to add a ProjectExec, which does create an unsafe projection for each task. So these results have nothing to do with Parquet itself.

#### Parquet table 60 columns, 50M rows, 34 input files

baseline | pr | improvement
----|----|----
0.245006 min | 0.242200 min | 1.145099%

#### CSV table 6000 columns, 500K rows, 34 input files

baseline | pr | improvement
----|----|----
2.390117 min | 2.182778 min | 8.674844%

#### CSV table 60 columns, 50M rows, 34 input files

baseline | pr | improvement
----|----|----
1.520911 min | 1.510211 min | 0.703526%

## How was this patch tested?

SQL unit tests

Python core and SQL test

Closes #23392 from bersprockets/norebuild.

Authored-by: Bruce Robbins <bersprockets@gmail.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

Make it possible for the master to enable TCP keep alive on the RPC connections with clients.

## How was this patch tested?

Manually tested.

Added the following:

```
spark.rpc.io.enableTcpKeepAlive true
```

to spark-defaults.conf.

Observed the following on the Spark master:

```
$ netstat -town | grep 7077
tcp6 0 0 10.240.3.134:7077 10.240.1.25:42851 ESTABLISHED keepalive (6736.50/0/0)
tcp6 0 0 10.240.3.134:44911 10.240.3.134:7077 ESTABLISHED keepalive (4098.68/0/0)
tcp6 0 0 10.240.3.134:7077 10.240.3.134:44911 ESTABLISHED keepalive (4098.68/0/0)
```

This proves that the keep alive setting is taking effect.

It's currently possible to enable TCP keep alive on the worker / executor, but it is not possible to configure it on other RPC connections. It's unclear to me why this could be the case. Keep alive is more important for the master, to protect it against suddenly departing workers / executors, thus I think it's very important to have it. In particular, this makes the master resilient when using preemptible worker VMs in GCE. GCE has the concept of shutdown scripts, which it doesn't guarantee to execute. So workers often don't get shut down gracefully, and the TCP connections on the master linger as there's nothing to close them. Hence the need to enable keep alive.

This also enables keep-alive on connections other than the master's, but that shouldn't cause harm.
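
For readers unfamiliar with the underlying mechanism: TCP keep-alive is the `SO_KEEPALIVE` socket option. The sketch below is not the code in this patch (Spark's RPC layer is Netty-based and reads the setting from configuration); it only shows the option being toggled on a plain `java.net.Socket`. The object and method names are illustrative.

```scala
import java.net.Socket

object KeepAliveSketch {
  // Returns an unconnected socket with SO_KEEPALIVE set as requested.
  // With the option on, the OS periodically probes an idle connection
  // and eventually closes it if the peer has silently disappeared.
  def newSocket(enableKeepAlive: Boolean): Socket = {
    val socket = new Socket()
    socket.setKeepAlive(enableKeepAlive)
    socket
  }

  def main(args: Array[String]): Unit = {
    val socket = newSocket(enableKeepAlive = true)
    try println(s"SO_KEEPALIVE enabled: ${socket.getKeepAlive}")
    finally socket.close()
  }
}
```

The probe timing itself (the countdown shown in the `netstat` timer column) is controlled by the operating system, not by this flag.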

Closes #20512 from peshopetrov/master.

Authored-by: Petar Petrov <petar.petrov@leanplum.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The SQL config `spark.sql.legacy.timeParser.enabled` was removed by https://github.com/apache/spark/pull/23495. The PR cleans up the SQL migration guide and the comment for `UnixTimestamp`.

Closes #23529 from MaxGekk/get-rid-off-legacy-parser-followup.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

If users set `spark.network.timeout` and `spark.executor.heartbeatInterval` to equal values, they get the following message:

```

java.lang.IllegalArgumentException: requirement failed: The value of spark.network.timeout=120s must be no less than the value of spark.executor.heartbeatInterval=120s.

```

This is misleading, because "no less than" suggests that equal values are allowed when they are not. This PR therefore replaces "no less than" with "greater than". It also fixes similar inconsistencies found in the MLlib and SQL components.
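
A minimal sketch of a check whose wording matches its condition, using Scala's `require` (which produces the `requirement failed:` prefix seen above). The object name, method name, and millisecond parameters are illustrative assumptions, not the actual Spark code.

```scala
object TimeoutCheck {
  // The condition is strict (>), so the message says "greater than".
  def validate(networkTimeoutMs: Long, heartbeatIntervalMs: Long): Unit =
    require(
      networkTimeoutMs > heartbeatIntervalMs,
      s"The value of spark.network.timeout=${networkTimeoutMs}ms must be greater than " +
        s"the value of spark.executor.heartbeatInterval=${heartbeatIntervalMs}ms."
    )

  def main(args: Array[String]): Unit = {
    validate(120000L, 10000L) // passes: timeout is strictly larger
    try validate(120000L, 120000L) // equal values are rejected
    catch { case e: IllegalArgumentException => println(e.getMessage) }
  }
}
```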

## How was this patch tested?

Manually ran Spark with equal values for the two settings and confirmed that the revised message was displayed.

Closes #23488 from sekikn/SPARK-26564.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

When determining the Catalyst type for PostgreSQL columns of type `numeric[]`, set the array element type to `DecimalType(38, 18)` instead of `DecimalType(0,0)`.
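
The idea can be sketched without a Spark dependency. Everything below is illustrative: `DecimalType` is a local stand-in for Spark's class, and the rule "fall back when the reported precision is 0" is an assumption about how the unconstrained case is detected, not a copy of the dialect code.

```scala
object NumericArraySketch {
  // Local stand-in for Spark's DecimalType, so the sketch is self-contained.
  final case class DecimalType(precision: Int, scale: Int)

  // The fallback named in this PR.
  val Fallback: DecimalType = DecimalType(38, 18)

  // Assumption: an unconstrained numeric[] element is reported with precision 0,
  // which would otherwise produce the unusable DecimalType(0, 0).
  def elementType(reportedPrecision: Int, reportedScale: Int): DecimalType =
    if (reportedPrecision > 0) DecimalType(reportedPrecision, reportedScale)
    else Fallback

  def main(args: Array[String]): Unit = {
    println(elementType(0, 0))
    println(elementType(10, 2))
  }
}
```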

## How was this patch tested?

Tested with modified `org.apache.spark.sql.jdbc.JDBCSuite`.

Ran the `PostgresIntegrationSuite` manually.

Closes #23456 from a-shkarupin/postgres_numeric_array.

Lead-authored-by: Oleksii Shkarupin <a.shkarupin@gmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

The vote on the final release of `branch-2.2` passed, and the branch is now EOL. This PR removes Spark 2.2.x from the testing coverage.

## How was this patch tested?

Pass the Jenkins.

Closes #23526 from dongjoon-hyun/SPARK-26607.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This fixes the K8S integration test compilation failure introduced by #23423.

```

$ build/sbt -Pkubernetes-integration-tests test:package

...

[error] /Users/dongjoon/APACHE/spark/resource-managers/kubernetes/integration-tests/src/test/scala/org/apache/spark/deploy/k8s/integrationtest/KubernetesTestComponents.scala:71: type mismatch;

[error] found : org.apache.spark.internal.config.OptionalConfigEntry[Boolean]

[error] required: String

[error] .set(IS_TESTING, false)

[error] ^

[error] /Users/dongjoon/APACHE/spark/resource-managers/kubernetes/integration-tests/src/test/scala/org/apache/spark/deploy/k8s/integrationtest/KubernetesTestComponents.scala:71: type mismatch;

[error] found : Boolean(false)

[error] required: String

[error] .set(IS_TESTING, false)

[error] ^

[error] two errors found

```

## How was this patch tested?

Pass the K8S integration test.

Closes #23527 from dongjoon-hyun/SPARK-26482.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Fix race condition where streams can have unexpected conf values.

New streaming queries should run with isolated SparkSessions so that they aren't affected by conf updates after they are started. In StreamExecution, the parent SparkSession is cloned and used to run each batch, but this cloning happens in a separate thread and may happen after DataStreamWriter.start() returns. If a stream is started and a conf key is set immediately after, the stream is likely to have the new value.
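
The race, and one way to avoid it, can be sketched in plain Scala. This is a simplified illustration, not the `StreamExecution` code: `Session`, `RunningQuery`, and `start` are hypothetical stand-ins. The point is that the clone is taken on the caller's thread before `start` returns, so a later `set` on the parent cannot leak into the running query.

```scala
import java.util.concurrent.atomic.AtomicReference

object IsolatedConfSketch {
  // Hypothetical stand-in for a session: an immutable conf map behind a volatile field.
  final class Session(initial: Map[String, String]) {
    @volatile private var conf: Map[String, String] = initial
    def set(key: String, value: String): Unit = synchronized { conf = conf.updated(key, value) }
    def get(key: String): Option[String] = conf.get(key)
    def cloneSession(): Session = new Session(conf)
  }

  final class RunningQuery(thread: Thread, result: AtomicReference[Option[String]]) {
    // Waits for the background thread and returns the conf value it observed.
    def awaitObserved(): Option[String] = { thread.join(); result.get() }
  }

  def start(parent: Session, key: String): RunningQuery = {
    // Clone synchronously, before the worker thread exists and before start() returns.
    val isolated = parent.cloneSession()
    val result = new AtomicReference[Option[String]](None)
    val thread = new Thread(new Runnable {
      override def run(): Unit = result.set(isolated.get(key))
    })
    thread.start()
    new RunningQuery(thread, result)
  }

  def main(args: Array[String]): Unit = {
    val parent = new Session(Map("some.key" -> "old"))
    val query = start(parent, "some.key")
    parent.set("some.key", "new") // set immediately after start() returns
    println(query.awaitObserved())
  }
}
```

If `cloneSession()` were instead called inside `run()`, the value observed would depend on thread scheduling, which is the behavior described above.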

## How was this patch tested?

New unit test that fails prior to the production change and passes with it.

Closes #23513 from mukulmurthy/26586.

Authored-by: Mukul Murthy <mukul.murthy@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

Schema pruning fails when a query selects one complex field and has an `is not null` predicate on another one:

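```scala
// Assumes a `contacts` table with a nested `name` struct, and `sql` in scope
// (for example via `import spark.sql`).
import org.apache.spark.sql.functions.count

val query = sql("select * from contacts")
  .where("name.middle is not null")
  .select(
    "id",
    "name.first",
    "name.middle",
    "name.last"
  )
  .where("last = 'Jones'")
  .select(count("id"))
```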

```

java.lang.IllegalArgumentException: middle does not exist. Available: last

[info] at org.apache.spark.sql.types.StructType.$anonfun$fieldIndex$1(StructType.scala:303)

[info] at scala.collection.immutable.Map$Map1.getOrElse(Map.scala:119)

[info] at org.apache.spark.sql.types.StructType.fieldIndex(StructType.scala:302)

[info] at org.apache.spark.sql.execution.ProjectionOverSchema.$anonfun$getProjection$6(ProjectionOverSchema.scala:58)

[info] at scala.Option.map(Option.scala:163)

[info] at org.apache.spark.sql.execution.ProjectionOverSchema.getProjection(ProjectionOverSchema.scala:56)

[info] at org.apache.spark.sql.execution.ProjectionOverSchema.unapply(ProjectionOverSchema.scala:32)

[info] at org.apache.spark.sql.execution.datasources.parquet.ParquetSchemaPruning$$anonfun$$nestedInanonfun$buildNewProjection$1$1.applyOrElse(ParquetSchemaPruning.scala:153)

```

## How was this patch tested?

Added tests.

Closes #23474 from viirya/SPARK-26551.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded configs below use `ConfigEntry`.

* spark.ui

* spark.ssl

* spark.authenticate

* spark.master.rest

* spark.master.ui

* spark.metrics

* spark.admin

* spark.modify.acl

This patch doesn't change configs which are not relevant to SparkConf (e.g. system properties).
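
To illustrate why typed entries are preferable to hardcoded string keys, here is a deliberately simplified sketch. `Entry` and `Conf` are hypothetical and much smaller than Spark's real `ConfigEntry` and `SparkConf`; only the keys `spark.ui.enabled` and `spark.ui.port` and their defaults are real.

```scala
import scala.collection.mutable

object ConfigEntrySketch {
  // Hypothetical typed entry: the key, default, and conversions live in one place.
  final case class Entry[T](key: String, default: T, parse: String => T, render: T => String)

  // Hypothetical conf that stores strings but exposes typed access through entries.
  final class Conf {
    private val settings = mutable.Map.empty[String, String]
    def set[T](entry: Entry[T], value: T): Conf = {
      settings(entry.key) = entry.render(value)
      this
    }
    def get[T](entry: Entry[T]): T =
      settings.get(entry.key).map(entry.parse).getOrElse(entry.default)
  }

  val UiEnabled: Entry[Boolean] =
    Entry[Boolean]("spark.ui.enabled", true, (s: String) => s.toBoolean, (b: Boolean) => b.toString)
  val UiPort: Entry[Int] =
    Entry[Int]("spark.ui.port", 4040, (s: String) => s.toInt, (i: Int) => i.toString)

  def main(args: Array[String]): Unit = {
    val conf = new Conf().set(UiEnabled, false)
    println(conf.get(UiEnabled)) // typed read of an explicitly set value
    println(conf.get(UiPort))    // falls back to the entry's default
  }
}
```

With this shape, a call such as `conf.set(UiPort, "abc")` fails at compile time instead of surfacing as a parse error at run time.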

## How was this patch tested?

Existing tests.

Closes #23423 from HeartSaVioR/SPARK-26466.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Per the discussion in #23391 (comment), this PR simply removes the old pre-Spark-3 time parsing behavior.

This is a rebase of https://github.com/apache/spark/pull/23411

## How was this patch tested?

Existing tests.

Closes #23495 from srowen/SPARK-26503.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/22732 , we tried our best to keep the behavior of Scala UDF unchanged in Spark 2.4.

However, since Spark 3.0, Scala 2.12 is the default. The trick that was used to keep the behavior unchanged doesn't work with Scala 2.12.

This PR proposes to remove the Scala 2.11 hack, as it's not useful.

## How was this patch tested?

Existing tests.

Closes #23498 from cloud-fan/udf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up PR for #22962. It contains the following changes:

- Remove `__init__` in TaskContext and BarrierTaskContext.

- Add more comments to explain the fix.

- Rewrite UT in a new class.

## How was this patch tested?

New UT in test_taskcontext.py

Closes #23435 from xuanyuanking/SPARK-25921-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR removes an internal ORC configuration to simplify the code path for Spark 3.0.0. It removes the configuration `spark.sql.orc.copyBatchToSpark` and the related ORC code, including tests and benchmarks.

## How was this patch tested?

Pass the Jenkins with the reduced test coverage.

Closes #23503 from dongjoon-hyun/SPARK-26584.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Remove spark.memory.useLegacyMode and StaticMemoryManager. Update tests that used the StaticMemoryManager to equivalent use of UnifiedMemoryManager.

## How was this patch tested?

Existing tests, with modifications to make them work with a different mem manager.

Closes #23457 from srowen/SPARK-26539.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/18576

The newly added rule `UpdateNullabilityInAttributeReferences` does the same thing as `FixNullability`, so we only need to keep one of them.

This PR removes `UpdateNullabilityInAttributeReferences` and uses `FixNullability` in its place. It also renames `FixNullability` to `UpdateAttributeNullability`.

## How was this patch tested?

Existing tests.

Closes #23390 from cloud-fan/nullable.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>