### What changes were proposed in this pull request?

During Spark History Server startup, two things happen simultaneously that both call into `java.nio.file.FileSystems.getDefault()`, and we sometimes hit [JDK-8194653](https://bugs.openjdk.java.net/browse/JDK-8194653).

1) start jetty server

2) start ApplicationHistoryProvider (which reads files from HDFS)

We should do these two things sequentially instead of in parallel.

We introduce a start() method in ApplicationHistoryProvider (and its subclass FsHistoryProvider), and we perform initialization inside the start() method instead of in the constructor.

In HistoryServer, we explicitly call provider.start() after we call bind() which starts the Jetty server.
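The ordering described above can be sketched as follows. This is a minimal Python illustration rather than the Scala code in the patch; the class names `HistoryProviderSketch` and `HistoryServerSketch` and the `events` list are hypothetical stand-ins.

```python
class HistoryProviderSketch:
    """Hypothetical provider: the constructor does no I/O."""

    def __init__(self, events):
        self.events = events
        self.started = False  # nothing is initialized here

    def start(self):
        # Initialization (e.g. scanning log files) happens here, not in __init__.
        self.events.append("provider.start")
        self.started = True


class HistoryServerSketch:
    """Hypothetical server that starts its provider only after binding."""

    def __init__(self, provider, events):
        self.provider = provider
        self.events = events

    def bind(self):
        # Stands in for starting the web server.
        self.events.append("server.bind")

    def run(self):
        self.bind()            # step 1: web server first
        self.provider.start()  # step 2: provider afterwards, sequentially


events = []
server = HistoryServerSketch(HistoryProviderSketch(events), events)
server.run()
```

Because the constructor no longer triggers any work, the two startup steps cannot overlap in this sketch.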

### Why are the changes needed?

Starting Spark History Server occasionally results in a process hang due to a deadlock among threads.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

I stress tested this PR with a bash script that stops and starts Spark History Server more than 1000 times, and it worked fine. Previously, I could run the stop/start loop fewer than 10 times before hitting the deadlock issue.

Closes #25705 from shanyu/shanyu-29003.

Authored-by: Shanyu Zhao <shzhao@microsoft.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

We have already found and fixed the correctness issue that arises when RDD output is INDETERMINATE. One missing part is sampling-based RDDs. This kind of RDD is order sensitive with respect to its input, so a sampling-based RDD with unordered input should be INDETERMINATE.

### Why are the changes needed?

A sampling-based RDD with unordered input is just like a MapPartitionsRDD with the isOrderSensitive parameter set to true. The RDD output can be different after a rerun.

It is a problem in ML applications.

In ML, sampling is used to prepare training data, and the ML algorithm fits the model based on the sampled data. If rerun sample tasks produce different output during model fitting, the ML results will be unreliable and buggy.

The output of each sample is random, but once the data has been sampled, the output should be determinate.
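To illustrate why sampling is order sensitive, here is a small Python sketch. It is not Spark's sampler; `bernoulli_sample` is a hypothetical helper that draws one random number per element from a seeded generator, which is enough to show the effect.

```python
import random


def bernoulli_sample(items, fraction, seed):
    """Keep each item with probability `fraction`, drawing one number per item."""
    rng = random.Random(seed)
    return [x for x in items if rng.random() < fraction]


data = list(range(10))
first_run = bernoulli_sample(data, 0.5, seed=42)
same_order_rerun = bernoulli_sample(data, 0.5, seed=42)
# Same elements and same seed, but the input arrives in a different order.
reordered_rerun = bernoulli_sample(list(reversed(data)), 0.5, seed=42)
```

With the same seed, the same positions are kept on every run. If the input order changes between runs, different elements sit at those positions, so the sampled output changes even though the seed did not.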

### Does this PR introduce any user-facing change?

Previously, a sampling-based RDD could produce different output after a rerun.

After this patch, a sampling-based RDD with unordered input is INDETERMINATE. For an INDETERMINATE map stage, the Spark scheduler currently retries all the tasks of the failed stage.

### How was this patch tested?

Added test.

Closes #25751 from viirya/sample-order-sensitive.

Authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: Liang-Chi Hsieh <liangchi@uber.com>

JIRA: https://issues.apache.org/jira/browse/SPARK-29050

Change 'a hdfs' to 'an hdfs'

Change 'an unique' to 'a unique'

Change 'an url' to 'a url'

Change 'a error' to 'an error'

Closes #25756 from dengziming/feature_fix_typos.

Authored-by: dengziming <dengziming@growingio.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch ensures that recorded values in the listener are accessed only after the listeners have fully processed all events. To enforce this, the patch adds a new class that hides these values and exposes them through methods that guarantee the condition above. Without this guard, two threads run concurrently, 1) the listener processing thread and 2) the test main thread, and a race condition can occur.

That is also why we see a very odd error message, one saying the condition is met even though the test failed:

```
- Barrier task failures from the same stage attempt don't trigger multiple stage retries *** FAILED ***
ArrayBuffer(0) did not equal List(0) (DAGSchedulerSuite.scala:2656)
```

This means that verification failed, and the condition became true just before the error message was constructed.

The guard is properly placed in many spots, but missed in some places. This patch enforces that it can't be missed.
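The idea of hiding the recorded values behind a guarded accessor can be sketched like this. It is a Python illustration under assumptions, not the test code in the patch; `RecordedEvents`, `post` and `values` are hypothetical names.

```python
import queue
import threading


class RecordedEvents:
    """Hypothetical holder: values are only readable after the queue is drained."""

    def __init__(self):
        self._queue = queue.Queue()
        self._values = []
        worker = threading.Thread(target=self._process, daemon=True)
        worker.start()

    def _process(self):
        # Listener thread: record each event, then mark it as processed.
        while True:
            event = self._queue.get()
            self._values.append(event)
            self._queue.task_done()

    def post(self, event):
        self._queue.put(event)

    def values(self):
        # The guard: block until every posted event has been processed.
        self._queue.join()
        return list(self._values)


recorded = RecordedEvents()
for stage_id in range(3):
    recorded.post(stage_id)
```

Since `_values` is private and `values()` is the only way to read it, a caller cannot forget to wait for the listener thread.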

### Why are the changes needed?

The UT fails intermittently, and this patch addresses the flakiness.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Modified UT.

I also made the flaky tests fail artificially by adding a 50ms sleep to each onXXX method.

I found 3 methods that failed. (They're marked as X. Just ignore the ones marked !, as they failed while waiting for the listener within the given timeout, and these tests don't deal with the recorded values; they use a different timeout for this listener, 1000ms rather than 10000ms, so they were affected as a side effect.)

When I applied the same sleep with this patch, all tests marked as X passed.

Closes #25706 from HeartSaVioR/SPARK-26989.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

PR #22112 fixed the TODO added by PR #20393 (SPARK-23207). We can remove it now.

### Why are the changes needed?

In order not to confuse developers.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

no need to test

Closes #25755 from LinhongLiu/remove-todo.

Authored-by: Liu,Linhong <liulinhong@baidu.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Uses the APIs introduced in SPARK-28209 in the UnsafeShuffleWriter.

## How was this patch tested?

Since this is just a refactor, existing unit tests should cover the relevant code paths. Micro-benchmarks from the original fork where this code was built show no degradation in performance.

Closes #25304 from mccheah/shuffle-writer-refactor-unsafe-writer.

Lead-authored-by: mcheah <mcheah@palantir.com>

Co-authored-by: mccheah <mcheah@palantir.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Running Spark on YARN, I got:

```
java.lang.ClassCastException: org.apache.hadoop.ipc.CallerContext$Builder cannot be cast to scala.runtime.Nothing$
```

Utils.classForName returns Class[Nothing]; I think it should be defined as Class[_] to resolve this issue.

## How was this patch tested?

Not needed.

Closes #25389 from hddong/SPARK-28657-fix-currentContext-Instance-failed.

Lead-authored-by: hongdd <jn_hdd@163.com>

Co-authored-by: hongdongdong <hongdongdong@cmss.chinamobile.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

- Remove SQLContext.createExternalTable and Catalog.createExternalTable, deprecated in favor of createTable since 2.2.0, plus tests of deprecated methods

- Remove HiveContext, deprecated in 2.0.0, in favor of `SparkSession.builder.enableHiveSupport`

- Remove deprecated KinesisUtils.createStream methods, plus tests of deprecated methods, deprecated in 2.2.0

- Remove deprecated MLlib (not Spark ML) linear method support, mostly utility constructors and 'train' methods, and associated docs. This includes methods in LinearRegression, LogisticRegression, Lasso, RidgeRegression. These have been deprecated since 2.0.0

- Remove deprecated Pyspark MLlib linear method support, including LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD

- Remove 'runs' argument in KMeans.train() method, which has been a no-op since 2.0.0

- Remove deprecated ChiSqSelector isSorted protected method

- Remove deprecated 'yarn-cluster' and 'yarn-client' master argument in favor of 'yarn' and deploy mode 'cluster', etc

Notes:

- I was not able to remove deprecated DataFrameReader.json(RDD) in favor of DataFrameReader.json(Dataset); the former was deprecated in 2.2.0, but, it is still needed to support Pyspark's .json() method, which can't use a Dataset.

- Looks like SQLContext.createExternalTable was not actually deprecated in Pyspark, but, almost certainly was meant to be? Catalog.createExternalTable was.

- I afterwards noted that the toDegrees, toRadians functions were almost removed fully in SPARK-25908, but Felix suggested keeping just the R version as they hadn't been technically deprecated. I'd like to revisit that. Do we really want the inconsistency? I'm not against reverting it again, but then that implies leaving SQLContext.createExternalTable just in Pyspark too, which seems weird.

- I *kept* LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD, RidgeRegressionWithSGD in Pyspark, though deprecated, as it is hard to remove them (still used by StreamingLogisticRegressionWithSGD?) and they are not fully removed in Scala. Maybe should not have been deprecated.

### Why are the changes needed?

Deprecated items are easiest to remove in a major release, so we should do so as much as possible for Spark 3. This does not target items deprecated 'recently' as of Spark 2.3, which is still 18 months old.

### Does this PR introduce any user-facing change?

Yes, in that deprecated items are removed from some public APIs.

### How was this patch tested?

Existing tests.

Closes #25684 from srowen/SPARK-28980.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

If a Spark task is killed due to intentional job kills, automated killing of redundant speculative tasks, etc., a ClosedByInterruptException occurs if the task has an unfinished I/O operation on an AbstractInterruptibleChannel. A single cancelled task can result in hundreds of ClosedByInterruptException stack traces being logged.

With this PR, the stack trace of ClosedByInterruptException is no longer logged, matching what Executor.run does for InterruptedException.
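As a rough illustration of the logging behavior, the following Python sketch logs a single line for an expected kill-related exception and keeps the full stack trace for everything else. The exception class `TaskKilledByInterrupt` and the function `log_task_failure` are hypothetical; the actual change lives in Spark's Scala executor code.

```python
import logging


class TaskKilledByInterrupt(Exception):
    """Hypothetical stand-in for an interrupt-style exception."""


def log_task_failure(logger, exc):
    """Log expected kill exceptions briefly; keep the stack trace for the rest."""
    if isinstance(exc, TaskKilledByInterrupt):
        logger.info("Task was killed: %s", exc)  # one line, no stack trace
        return "brief"
    logger.error("Task failed", exc_info=exc)  # full stack trace
    return "full"
```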

### Why are the changes needed?

Large numbers of spurious exceptions are confusing to users when they are inspecting Spark logs to diagnose other issues.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #25674 from colinmjj/spark-28340.

Authored-by: colinma <colinma@tencent.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

In spark-shell local mode, the host name on the task page is shown as localhost.

This PR changes it to show the machine IP, as shown for "spark.driver.host" on the environment page.

### Why are the changes needed?

To show the proper IP in the host column of the task page.

### Does this PR introduce any user-facing change?

It updates the Host column of the Task page in the Spark UI.

### How was this patch tested?

Verified in the Spark UI.

Closes #25645 from shivusondur/localhost1.

Authored-by: shivusondur <shivusondur@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This patch fixes a bug that throws ConcurrentModificationException when a job with 0 partitions is submitted via DAGScheduler.

### Why are the changes needed?

Without this patch, a structured streaming query throws ConcurrentModificationException, as in the stack trace below:

```
19/09/04 09:48:49 ERROR AsyncEventQueue: Listener EventLoggingListener threw an exception
java.util.ConcurrentModificationException
at java.util.Hashtable$Enumerator.next(Hashtable.java:1387)
at scala.collection.convert.Wrappers$JPropertiesWrapper$$anon$6.next(Wrappers.scala:424)
at scala.collection.convert.Wrappers$JPropertiesWrapper$$anon$6.next(Wrappers.scala:420)
at scala.collection.Iterator.foreach(Iterator.scala:941)
at scala.collection.Iterator.foreach$(Iterator.scala:941)
at scala.collection.AbstractIterator.foreach(Iterator.scala:1429)
at scala.collection.IterableLike.foreach(IterableLike.scala:74)
at scala.collection.IterableLike.foreach$(IterableLike.scala:73)
at scala.collection.AbstractIterable.foreach(Iterable.scala:56)
at scala.collection.TraversableLike.map(TraversableLike.scala:237)
at scala.collection.TraversableLike.map$(TraversableLike.scala:230)
at scala.collection.AbstractTraversable.map(Traversable.scala:108)
at org.apache.spark.util.JsonProtocol$.mapToJson(JsonProtocol.scala:514)
at org.apache.spark.util.JsonProtocol$.$anonfun$propertiesToJson$1(JsonProtocol.scala:520)
at scala.Option.map(Option.scala:163)
at org.apache.spark.util.JsonProtocol$.propertiesToJson(JsonProtocol.scala:519)
at org.apache.spark.util.JsonProtocol$.jobStartToJson(JsonProtocol.scala:155)
at org.apache.spark.util.JsonProtocol$.sparkEventToJson(JsonProtocol.scala:79)
at org.apache.spark.scheduler.EventLoggingListener.logEvent(EventLoggingListener.scala:149)
at org.apache.spark.scheduler.EventLoggingListener.onJobStart(EventLoggingListener.scala:217)
at org.apache.spark.scheduler.SparkListenerBus.doPostEvent(SparkListenerBus.scala:37)
at org.apache.spark.scheduler.SparkListenerBus.doPostEvent$(SparkListenerBus.scala:28)
at org.apache.spark.scheduler.AsyncEventQueue.doPostEvent(AsyncEventQueue.scala:37)
at org.apache.spark.scheduler.AsyncEventQueue.doPostEvent(AsyncEventQueue.scala:37)
at org.apache.spark.util.ListenerBus.postToAll(ListenerBus.scala:99)
at org.apache.spark.util.ListenerBus.postToAll$(ListenerBus.scala:84)
at org.apache.spark.scheduler.AsyncEventQueue.super$postToAll(AsyncEventQueue.scala:102)
at org.apache.spark.scheduler.AsyncEventQueue.$anonfun$dispatch$1(AsyncEventQueue.scala:102)
at scala.runtime.java8.JFunction0$mcJ$sp.apply(JFunction0$mcJ$sp.java:23)
at scala.util.DynamicVariable.withValue(DynamicVariable.scala:62)
at org.apache.spark.scheduler.AsyncEventQueue.org$apache$spark$scheduler$AsyncEventQueue$$dispatch(AsyncEventQueue.scala:97)
at org.apache.spark.scheduler.AsyncEventQueue$$anon$2.$anonfun$run$1(AsyncEventQueue.scala:93)
at org.apache.spark.util.Utils$.tryOrStopSparkContext(Utils.scala:1319)
at org.apache.spark.scheduler.AsyncEventQueue$$anon$2.run(AsyncEventQueue.scala:93)
```

Please refer to https://issues.apache.org/jira/browse/SPARK-28967 for a detailed reproducer.
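The failure mode in the stack trace, a properties map being modified while a listener iterates over it, has a direct analog in Python. The sketch below is not the patch itself; it reproduces the class of error and shows one common way to avoid it, which is to hand the listener a defensive copy. The helper names are hypothetical.

```python
def serialize_properties(props):
    """Iterate over properties, as a listener would when writing an event."""
    return {key: str(value) for key, value in props.items()}


def mutate_while_iterating(props):
    """Reproduce the failure mode: the mapping changes during iteration."""
    try:
        for key in props:
            props[key + ".copy"] = props[key]  # modification during iteration
    except RuntimeError as err:
        return str(err)
    return None


shared = {"spark.job.description": "demo"}
error = mutate_while_iterating(dict(shared))
snapshot = dict(shared)          # defensive copy handed to the listener
shared["added.later"] = "value"  # later changes do not affect the snapshot
serialized = serialize_properties(snapshot)
```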

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Newly added UT. Also manually tested by running a simple structured streaming query in spark-shell.

Closes #25672 from HeartSaVioR/SPARK-28967.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

`ReplayListenerSuite` depends on a listener class to listen for replayed events. This class was implemented by extending `EventLoggingListener`. `EventLoggingListener` does not log executor metrics update events, but uses them to update internal state; on a stage completion event, it then logs stage executor metrics events using this internal state. As executor metrics update events do not get written to the event log, they do not get replayed. The internal state of the replay listener can therefore be different from the original listener, leading to different stage completion events being logged.

We reimplement the replay listener to simply buffer each and every event it receives. This makes it a simpler yet better tool for verifying the events that get sent through the ReplayListenerBus.
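A buffering listener of this kind is simple enough to sketch in a few lines. This is a Python illustration, not the Scala test code; `EventBufferingListener` and `replay` are hypothetical names, and the events are plain dictionaries.

```python
class EventBufferingListener:
    """Hypothetical listener that records every event it is given."""

    def __init__(self):
        self.events = []

    def on_event(self, event):
        self.events.append(event)  # no filtering, no derived state


def replay(logged_events, listener):
    """Feed previously logged events to a listener, in order."""
    for event in logged_events:
        listener.on_event(event)


logged = [{"Event": "JobStart", "Job ID": 0}, {"Event": "JobEnd", "Job ID": 0}]
listener = EventBufferingListener()
replay(logged, listener)
```

Because the listener keeps no internal state beyond the buffer, what it holds after a replay depends only on the events that were replayed.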

### Why are the changes needed?

As explained above. Tests sometimes fail due to events being received by the `EventLoggingListener` that do not get logged (and thus do not get replayed) but influence other events that get logged.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes #25673 from wypoon/SPARK-28770.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

### What changes were proposed in this pull request?

Use `KeyLock` added in #25612 to simplify `MapOutputTracker.getStatuses`. The refactoring also brings some improvements:

- `InterruptedException` is no longer swallowed.

- When a shuffle block is fetched, we don't need to wake up unrelated sleeping threads.

### Why are the changes needed?

`MapOutputTracker.getStatuses` is pretty hard to maintain right now because it has a special lock mechanism that we need to pay attention to whenever updating this method. Since we can use `KeyLock` to hide the complexity of locking behind a dedicated lock class, it's better to refactor the method to make it easier to understand and maintain.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #25680 from zsxwing/getStatuses.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

Replaces some incorrect usages of `new Configuration()`, as it will load the default configs defined in Hadoop.

### Why are the changes needed?

An unexpected config could be accessed instead of the expected one; see SPARK-28203 for an example.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25616 from advancedxy/remove_invalid_configuration.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This patch implements dynamic partition pruning by adding a dynamic-partition-pruning filter if there is a partitioned table and a filter on the dimension table. The filter is then planned using a heuristic approach:

1. As a broadcast relation if it is a broadcast hash join. The broadcast relation will then be transformed into a reused broadcast exchange by the `ReuseExchange` rule; or

2. As a subquery duplicate if the estimated benefit of partition table scan being saved is greater than the estimated cost of the extra scan of the duplicated subquery; otherwise

3. As a bypassed condition (`true`).
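The three-way choice above can be summarized as a small decision function. This is only a Python sketch of the heuristic as described in this PR text; the function name, its parameters, and the returned labels are hypothetical, and the real cost estimation is not shown.

```python
def plan_pruning_filter(is_broadcast_hash_join, estimated_benefit, estimated_cost):
    """Pick how to plan the pruning filter, following the three cases above."""
    if is_broadcast_hash_join:
        return "reuse_broadcast"
    if estimated_benefit > estimated_cost:
        return "duplicate_subquery"
    return "true"  # bypassed condition: no pruning is applied
```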

### Why are the changes needed?

This is an important performance feature.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UT

- Testing DPP by enabling / disabling the reuse broadcast results feature and / or the subquery duplication feature.

- Testing DPP with reused broadcast results.

- Testing the key iterators on different HashedRelation types.

- Testing the packing and unpacking of the broadcast keys in a LongType.

Closes #25600 from maryannxue/dpp.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

This patch fixes bugs in the test code itself, FsHistoryProviderSuite.

1. When creating a log file via `newLogFile`, the codec is ignored, leading to a wrong file name. (No one tends to write tests for test code, and the bug doesn't affect existing tests, so it was not easy to catch.)

2. When writing events to a log file via `writeFile`, metadata (in the case of the new format) gets written to the file regardless of its codec, and the content is then overwritten by another stream, so no Spark version information is available. This affects an existing test, which therefore had a wrong expected value to work around the bug.

This patch also removes the redundant parameter `isNewFormat` in `writeFile`, since, according to a review comment, Spark no longer supports the old format.

### Why are the changes needed?

The section above explains why these are bugs, though they reside only in test code. (Please note that the bugs didn't come from the non-test side of the code.)

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Modified existing UTs, and read the event log file in the console to confirm that the metadata is not overwritten by other content.

Closes #25629 from HeartSaVioR/MINOR-FIX-FsHistoryProviderSuite.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

We are adding other resource type support to the executors and Spark. We should show the resource information for each executor on the UI Executors page.

This also adds a toggle button to show the resources column. It is off by default.

### Why are the changes needed?

To show users what resources the executors have, such as GPUs, FPGAs, etc.

### Does this PR introduce any user-facing change?

Yes, it introduces UI and REST API changes to show the resources.

### How was this patch tested?

Unit tests and manual UI tests on yarn and standalone modes.

Closes #25613 from tgravescs/SPARK-27489-gpu-ui-latest.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

This PR provides a new lock mechanism, `KeyLock`, to lock with a given key. It also uses this new lock in `TorrentBroadcast` to avoid blocking tasks from fetching different broadcast values.

### Why are the changes needed?

`TorrentBroadcast.readObject` uses a global lock so only one task can be fetching the blocks at the same time. This is not optimal if we are running multiple stages concurrently because they should be able to independently fetch their own blocks.
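The per-key locking idea can be sketched as below. This is a simplified Python illustration and not Spark's `KeyLock` implementation; in particular, this sketch never removes locks for keys that are no longer in use, and the `with_lock` method name and the `fetch` helper are assumptions made for the example.

```python
import threading
from collections import defaultdict


class KeyLock:
    """Sketch of a per-key lock: callers with different keys do not block each other."""

    def __init__(self):
        self._guard = threading.Lock()  # protects the lock table
        self._locks = defaultdict(threading.Lock)

    def with_lock(self, key, func):
        with self._guard:
            lock = self._locks[key]  # one lock per key, created on first use
        with lock:
            return func()


key_lock = KeyLock()
results = {}


def fetch(broadcast_id):
    # Stands in for fetching the blocks of one broadcast value.
    value = key_lock.with_lock(broadcast_id, lambda: f"blocks-{broadcast_id}")
    results[broadcast_id] = value


threads = [threading.Thread(target=fetch, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Compared with a single global lock, only callers that pass the same key serialize on each other.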

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25612 from zsxwing/SPARK-3137.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

The Experimental and Evolving annotations are both (like Unstable) used to express that an API may change. However, there are many things in the code that have been marked that way since Spark 1.x. Per the dev thread, anything introduced at or before Spark 2.3.0 is pretty much 'stable' in that it would not change without a deprecation cycle. Therefore I'd like to remove most of these annotations, along with the `:: Experimental ::` scaladoc tag, and likewise for Python and R.

The changes below can be summarized as:

- Generally, anything introduced at or before Spark 2.3.0 is no longer marked as Evolving or Experimental

- Obviously experimental items like DSv2, Barrier mode, ExperimentalMethods are untouched

- I _did_ unmark a few MLlib classes introduced in 2.4, as I am quite confident they're not going to change (e.g. KolmogorovSmirnovTest, PowerIterationClustering)

It's a big change to review, so I'd suggest scanning the list of _files_ changed to see if any area seems like it should remain partly experimental and examine those.

### Why are the changes needed?

Many of these annotations are incorrect; the APIs are de facto stable. Leaving them also makes legitimate usages of the annotations less meaningful.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25558 from srowen/SPARK-28855.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Use the shuffle writer APIs introduced in SPARK-28209 in the sort shuffle writer.

## How was this patch tested?

Existing unit tests were changed to use the plugin instead, and they used the local disk version to ensure that there were no regressions.

Closes #25342 from mccheah/shuffle-writer-refactor-sort-shuffle-writer.

Lead-authored-by: mcheah <mcheah@palantir.com>

Co-authored-by: mccheah <mcheah@palantir.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

When starting python processes, set `OMP_NUM_THREADS` to the number of cores allocated to an executor or driver if `OMP_NUM_THREADS` is not already set. Each python process will use the same `OMP_NUM_THREADS` setting, even if workers are not shared.

This avoids creating an OpenMP thread pool for parallel processing with a number of threads equal to the number of cores on the executor and [significantly reduces memory consumption](https://github.com/numpy/numpy/issues/10455). Instead, this threadpool should use the number of cores allocated to the executor, if available. If a setting for number of cores is not available, this doesn't change any behavior. OpenMP is used by numpy and pandas.
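The "set only if not already set" rule can be sketched as follows. This is a Python illustration with a hypothetical helper `python_worker_env`; how Spark actually obtains the allocated core count is not shown here.

```python
def python_worker_env(base_env, allocated_cores):
    """Return a copy of the environment for a Python worker process."""
    env = dict(base_env)
    # Respect an existing user setting, and do nothing if no core count is known.
    if allocated_cores is not None and "OMP_NUM_THREADS" not in env:
        env["OMP_NUM_THREADS"] = str(allocated_cores)
    return env
```

For example, `python_worker_env({}, 4)` adds `OMP_NUM_THREADS=4`, while an environment that already defines `OMP_NUM_THREADS` is returned unchanged.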

### Why are the changes needed?

To reduce memory consumption for PySpark jobs.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Validated this reduces python worker memory consumption by more than 1GB on our cluster.

Closes #25545 from rdblue/SPARK-28843-set-omp-num-cores.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The shuffle writer API introduced in SPARK-28209 has a flaw that leads to a memory usage regression - we ended up tracking the partition lengths in two places. Here, we modify the API slightly to avoid redundant tracking. The implementation of the shuffle writer plugin is now responsible for tracking the lengths of partitions, and propagating this back up to the higher shuffle writer as part of the commitAllPartitions API.
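The shape of the adjusted contract, where the plugin tracks partition lengths and returns them at commit time, can be sketched like this. It is a Python illustration only; `MapOutputWriterSketch` and its method names are hypothetical and do not mirror the exact Java interfaces.

```python
class MapOutputWriterSketch:
    """Hypothetical plugin-side writer that owns the partition length bookkeeping."""

    def __init__(self, num_partitions):
        self._lengths = [0] * num_partitions

    def write(self, partition_id, data):
        # The plugin counts bytes as it writes them.
        self._lengths[partition_id] += len(data)

    def commit_all_partitions(self):
        # Lengths are handed back once, at commit time.
        return list(self._lengths)


writer = MapOutputWriterSketch(num_partitions=3)
writer.write(0, b"abc")
writer.write(2, b"de")
writer.write(0, b"f")
partition_lengths = writer.commit_all_partitions()
```

The caller receives the lengths from the commit call and does not need to keep a second copy while writing.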

## How was this patch tested?

Existing unit tests.

Closes #25341 from mccheah/dont-redundantly-store-part-lengths.

Authored-by: mcheah <mcheah@palantir.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In my application, Spark Streaming is restarted programmatically by stopping the StreamingContext without stopping the SparkContext, and then creating and starting a new one. I use this for automatic detection of Kafka topic/partition changes and automatic failover in case of non-fatal exceptions.

However, I noticed that after multiple restarts the driver fails with OOM. While investigating the heap dump, I figured out that the StreamingContext object isn't collected by GC after stopping.

<img width="1901" alt="Screen Shot 2019-08-14 at 12 23 33" src="https://user-images.githubusercontent.com/13151161/63010149-83f4c200-be8e-11e9-9f48-12b6e97839f4.png">

There are several places that hold a reference to it:

1. StreamingTab registers StreamingJobProgressListener, which holds a reference to the StreamingContext, directly to the LiveListenerBus shared queue via the ssc.sc.addSparkListener(listener) method invocation. However, this listener isn't unregistered in the stop method.

2. The JSON handlers (/streaming/json and /streaming/batch/json) aren't unregistered in SparkUI, while they hold a reference to StreamingJobProgressListener. Basically the same issue affects all the pages; I assume that renderJsonHandler should be added to the pageToHandlers cache on attachPage method invocation so that it is unregistered as well on detachPage.

3. SparkUI holds a reference to StreamingJobProgressListener in the corresponding local variable, which isn't cleared after the StreamingContext is stopped.
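The first leak above follows a common pattern: a short-lived object registers a listener on a long-lived bus and never removes it. A minimal Python sketch of the fix pattern, with hypothetical `ListenerBus` and `StreamingContextSketch` classes, looks like this:

```python
class ListenerBus:
    """Hypothetical shared bus that outlives any single streaming context."""

    def __init__(self):
        self.listeners = []

    def add_listener(self, listener):
        self.listeners.append(listener)

    def remove_listener(self, listener):
        self.listeners.remove(listener)


class StreamingContextSketch:
    def __init__(self, bus):
        self.bus = bus
        self.listener = object()  # stands in for the progress listener
        bus.add_listener(self.listener)

    def stop(self):
        # Unregister on stop so the long-lived bus no longer references us.
        self.bus.remove_listener(self.listener)
        self.listener = None


bus = ListenerBus()
for _ in range(3):  # restart the streaming context several times
    ctx = StreamingContextSketch(bus)
    ctx.stop()
```

Without the `remove_listener` call in `stop`, the bus would accumulate one listener per restart and keep every stopped context reachable.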

## How was this patch tested?

Added tests to existing test suites.

After I applied these changes via reflection in my app, the OOM on the driver side was gone.

Closes #25439 from choojoyq/SPARK-28709-fix-streaming-context-leak-on-stop.

Authored-by: Nikita Gorbachevsky <nikitag@playtika.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Resolves [SPARK-28778: Shuffle jobs fail due to incorrect advertised address when running in a virtual network on Mesos](https://issues.apache.org/jira/browse/SPARK-28778).

This patch fixes a bug which occurs when shuffle jobs are launched by Mesos in a virtual network. Mesos scheduler sets executor `--hostname` parameter to `0.0.0.0` in the case when `spark.mesos.network.name` is provided. This makes executors use `0.0.0.0` as their advertised address and, in the presence of shuffle, executors fail to fetch shuffle blocks from each other using `0.0.0.0` as the origin. When a virtual network is used the hostname or IP address is not known upfront and assigned to a container at its start time so the executor process needs to advertise the correct dynamically assigned address to be reachable by other executors.

Changes:

- added a fallback to `Utils.localHostName()` in Spark Executors when `--hostname` is not provided

- removed setting executor address to `0.0.0.0` from Mesos scheduler

- refactored the code related to building executor command in Mesos scheduler

- added network configuration support to Docker containerizer

- added unit tests
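The hostname fallback in the first item of the list above can be sketched as follows. This is a Python illustration, not the executor's Scala argument parsing; `resolve_executor_hostname` is a hypothetical helper, and `socket.gethostname()` stands in for `Utils.localHostName()`.

```python
import argparse
import socket


def resolve_executor_hostname(argv):
    """Use --hostname when given, otherwise fall back to the local host name."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--hostname", default=None)
    args, _unknown = parser.parse_known_args(argv)
    return args.hostname if args.hostname else socket.gethostname()
```

With this fallback, a scheduler that does not know the container address up front can simply omit `--hostname` instead of passing a placeholder such as `0.0.0.0`.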

### Why are the changes needed?

The bug described above prevents Mesos users from running any jobs which involve shuffle due to the inability of executors to fetch shuffle blocks because of incorrect advertised address when virtual network is used.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- added unit test to `MesosCoarseGrainedSchedulerBackendSuite` which verifies the absence of `--hostname` parameter when `spark.mesos.network.name` is provided and its presence otherwise

- added unit test to `MesosSchedulerBackendUtilSuite` which verifies that `MesosSchedulerBackendUtil.buildContainerInfo` sets network-related properties for Docker containerizer

- unit tests from this repo launched with profiles: `./build/mvn test -Pmesos -Pnetlib-lgpl -Psparkr -Phive -Phive-thriftserver`, build log attached: [mvn.test.log](https://github.com/apache/spark/files/3516891/mvn.test.log)

- integration tests from [DCOS Spark repo](https://github.com/mesosphere/spark-build), more specifically - [test_spark_cni.py](https://github.com/mesosphere/spark-build/blob/master/tests/test_spark_cni.py) which runs a specific [shuffle job](https://github.com/mesosphere/spark-build/blob/master/tests/jobs/scala/src/main/scala/ShuffleApp.scala) and verifies its successful completion, Mesos task network configuration, and IP addresses for both Mesos and Docker containerizers

Closes #25500 from akirillov/DCOS-45840-fix-advertised-ip-in-virtual-networks.

Authored-by: Anton Kirillov <akirillov@mesosophere.io>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Improved warning message in Barrier Execution Mode when required slots > maximum slots.

The new message contains information about the required slots, the maximum slots, and how many retries have failed.
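A message carrying these three pieces of information could be assembled along the following lines. This Python sketch uses a hypothetical function and its own wording; it does not reproduce the exact text that Spark logs.

```python
def barrier_slots_warning(job_id, required_slots, max_slots, retry, max_failures):
    """Build a hypothetical warning line; the wording is not Spark's exact text."""
    message = (
        f"Job {job_id} needs {required_slots} slots for a barrier stage, "
        f"but the cluster currently has {max_slots}"
    )
    if retry > 0:
        # Only mention retries once at least one check has failed.
        message += f" (retry {retry}/{max_failures} failed)"
    return message + "."
```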

### Why are the changes needed?

Providing users with the number of required slots, the maximum slots, and how many retries have failed might help them decide what to do next.

For example, they can continue to wait for a retry to succeed, or kill the job.

### Does this PR introduce any user-facing change?

Yes.

If `spark.scheduler.barrier.maxConcurrentTaskCheck.maxFailures=3`, we get the following warning messages.

Before applying this change:

```
19/08/18 15:18:09 WARN DAGScheduler: The job 2 requires to run a barrier stage that requires more slots than the total number of slots in the cluster currently.
19/08/18 15:18:24 WARN DAGScheduler: The job 2 requires to run a barrier stage that requires more slots than the total number of slots in the cluster currently.
19/08/18 15:18:39 WARN DAGScheduler: The job 2 requires to run a barrier stage that requires more slots than the total number of slots in the cluster currently.
19/08/18 15:18:54 WARN DAGScheduler: The job 2 requires to run a barrier stage that requires more slots than the total number of slots in the cluster currently.
org.apache.spark.scheduler.BarrierJobSlotsNumberCheckFailed: [SPARK-24819]: Barrier execution mode does not allow run a barrier stage that requires more slots than the total number of slots in the cluster currently. Please init a new cluster with more CPU cores or repartition the input RDD(s) to reduce the number of slots required to run this barrier stage.
  at org.apache.spark.scheduler.DAGScheduler.checkBarrierStageWithNumSlots(DAGScheduler.scala:439)
  at org.apache.spark.scheduler.DAGScheduler.createResultStage(DAGScheduler.scala:453)
  at org.apache.spark.scheduler.DAGScheduler.handleJobSubmitted(DAGScheduler.scala:983)
  at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2140)
  at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2132)
  at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2121)
  at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)
  at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:749)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2080)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2120)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2145)
  at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:961)
  at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
  at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
  at org.apache.spark.rdd.RDD.withScope(RDD.scala:366)
  at org.apache.spark.rdd.RDD.collect(RDD.scala:960)
  ... 47 elided
```

After applying this change:

```
19/08/18 16:52:23 WARN DAGScheduler: The job 0 requires to run a barrier stage that requires 3 slots than the total number of slots(2) in the cluster currently.
19/08/18 16:52:38 WARN DAGScheduler: The job 0 requires to run a barrier stage that requires 3 slots than the total number of slots(2) in the cluster currently (Retry 1/3 failed).
19/08/18 16:52:53 WARN DAGScheduler: The job 0 requires to run a barrier stage that requires 3 slots than the total number of slots(2) in the cluster currently (Retry 2/3 failed).
19/08/18 16:53:08 WARN DAGScheduler: The job 0 requires to run a barrier stage that requires 3 slots than the total number of slots(2) in the cluster currently (Retry 3/3 failed).
org.apache.spark.scheduler.BarrierJobSlotsNumberCheckFailed: [SPARK-24819]: Barrier execution mode does not allow run a barrier stage that requires more slots than the total number of slots in the cluster currently. Please init a new cluster with more CPU cores or repartition the input RDD(s) to reduce the number of slots required to run this barrier stage.
  at org.apache.spark.scheduler.DAGScheduler.checkBarrierStageWithNumSlots(DAGScheduler.scala:439)
  at org.apache.spark.scheduler.DAGScheduler.createResultStage(DAGScheduler.scala:453)
  at org.apache.spark.scheduler.DAGScheduler.handleJobSubmitted(DAGScheduler.scala:983)
  at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2140)
  at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2132)
  at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2121)
  at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)
  at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:749)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2080)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2120)
  at org.apache.spark.SparkContext.runJob(SparkContext.scala:2145)
  at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:961)
  at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
  at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
  at org.apache.spark.rdd.RDD.withScope(RDD.scala:366)
  at org.apache.spark.rdd.RDD.collect(RDD.scala:960)
  ... 47 elided
```

### How was this patch tested?

I tested manually using Spark Shell with the following configuration and script, and then checked the log messages.

```
$ bin/spark-shell --master local[2] --conf spark.scheduler.barrier.maxConcurrentTasksCheck.maxFailures=3

scala> sc.parallelize(1 to 100, sc.defaultParallelism+1).barrier.mapPartitions(identity(_)).collect
```

Closes #25487 from sarutak/barrier-exec-mode-warning-message.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Spark uses Netty 4 directly, but also includes Netty 3 only because transitive dependencies do. The dependencies (Hadoop HDFS, Zookeeper, Avro) don't seem to need this dependency as used in Spark. I think we can forcibly remove it to slim down the dependencies.

Previous attempts were blocked by its usage in Flume, but that dependency has gone away.

https://github.com/apache/spark/pull/15436

### Why are the changes needed?

Mostly to reduce the transitive dependency size and complexity a little bit and avoid triggering spurious security alerts on Netty 3.x usage.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests

Closes #25544 from srowen/SPARK-17875.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Handle `InterruptedException` in `BarrierTaskContext.barrier()`.

### Why are the changes needed?

An `InterruptedException` can occur when the SparkContext local property `spark.job.interruptOnCancel` is set to true.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT.

Closes #25519 from WeichenXu123/barrier_fl.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This change reverts the logic which was introduced as a part of SPARK-24149 and a subsequent followup PR.

With existing logic:

- Spark fails to launch with HDFS federation enabled while trying to get a path to a logical nameservice.

- It gets tokens for unrelated namespaces if they are used in HDFS Federation

- Automatic namespace discovery is supported only if these are on the same cluster.

Rationale for change:

- For accessing data from related namespaces, viewfs should handle getting tokens for spark

- For accessing data from unrelated namespaces, the user explicitly specifies them using existing configs, as these could be on the same or a different cluster.

Revert the changes.

## How was this patch tested?

Ran a few manual tests and the unit tests.

Closes #24785 from dhruve/bug/SPARK-27937.

Authored-by: Dhruve Ashar <dhruveashar@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fix the issue where barrier tasks do not exit when a Spark job using barrier mode is canceled.

Currently, when Spark tasks are killed, `BarrierTaskContext.barrier()` cannot be killed (it blocks on an RPC request), so the task stays blocked and cannot exit.

This PR adds an RPC interface that supports `abort` to the class `RpcEndpointRef`:

```
def askAbortable[T: ClassTag](
    message: Any,
    timeout: RpcTimeout): AbortableRpcFuture[T]
```

The returned `AbortableRpcFuture` instance includes an `abort` method so that we can abort the RPC before it times out.
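To illustrate the idea of a future that can be aborted before its reply arrives, here is a minimal sketch built only on `scala.concurrent.Promise`. The class `AbortableFuture` is a hypothetical stand-in written for this example; it is not Spark's `AbortableRpcFuture`, and the real class may be shaped differently.

```scala
import scala.concurrent.{Future, Promise}

// Hypothetical stand-in for an abortable RPC future; not Spark's actual class.
final class AbortableFuture[T](promise: Promise[T]) {
  // The caller waits on this future for the RPC reply.
  def future: Future[T] = promise.future

  // Fails the future early with `cause`.
  // Returns false if the future was already completed.
  def abort(cause: Throwable): Boolean = promise.tryFailure(cause)
}

object AbortableFutureDemo {
  def main(args: Array[String]): Unit = {
    val pending = Promise[String]() // models a reply that never arrives
    val rpc = new AbortableFuture(pending)
    println(s"completed before abort: ${rpc.future.isCompleted}")
    rpc.abort(new InterruptedException("task killed"))
    println(s"completed after abort: ${rpc.future.isCompleted}")
  }
}
```

A blocked caller waiting on `rpc.future` would be released with a failure as soon as `abort` is called, instead of waiting for the timeout.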

## How was this patch tested?

Unit test added.

Manual test:

### Test code

Launch spark-shell via `spark-shell --master local[4]` and run the following code:

```
sc.setLogLevel("INFO")
import org.apache.spark.BarrierTaskContext

val n = 4

def taskf(iter: Iterator[Int]): Iterator[Int] = {
  val context = BarrierTaskContext.get()
  val x = iter.next()
  if (x % 2 == 0) {
    // sleep 6000000 seconds with task killed checking
    for (i <- 0 until 6000000) {
      Thread.sleep(1000)
      if (context.isInterrupted()) {
        throw new org.apache.spark.TaskKilledException()
      }
    }
  }
  context.barrier()
  return Iterator.empty
}

// launch spark job, including 4 tasks, tasks 1/3 will enter `barrier()`, and tasks 0/2 will enter `sleep`
sc.parallelize((0 to n), n).barrier().mapPartitions(taskf).collect()
```

And then press Ctrl+C to exit the running job.

### Before

Press Ctrl+C to exit the running job, then open the Spark UI: 2 tasks (tasks 1/3) are not killed. They are blocked.

### After

Press Ctrl+C to exit the running job: the Spark UI shows that all tasks were killed successfully.

Closes #25235 from WeichenXu123/sc_14848.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Change the logic for collecting indeterminate stages: when handling FetchFailed, we should look at stages starting from mapStage, not failedStage.

### Why are the changes needed?

In the fetch-failure handling logic, the original code collected indeterminate stages starting from the stage that hit the fetch failure. When the fetch failure happens in the first task of that stage, this logic causes the indeterminate stage to be only partially resubmitted, which can eventually lead to a correctness bug.

### Does this PR introduce any user-facing change?

It makes the indeterminate stage abort as expected in this corner case.

### How was this patch tested?

New UT in DAGSchedulerSuite.

Run the integration test below with `local-cluster[5, 2, 5120]` and `spark.sql.execution.sortBeforeRepartition=false`; it aborts the indeterminate stage as expected:

```
import scala.sys.process._
import org.apache.spark.TaskContext

val res = spark.range(0, 10000 * 10000, 1).map{ x => (x % 1000, x)}
// kill an executor in the stage that performs repartition(239)
val df = res.repartition(113).map{ x => (x._1 + 1, x._2)}.repartition(239).map { x =>
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 1 && TaskContext.get.stageAttemptNumber == 0) {
    throw new Exception("pkill -f -n java".!!)
  }
  x
}
val r2 = df.distinct.count()
```

Closes #25498 from xuanyuanking/SPARK-28699-followup.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Update Jersey to 2.27+, ideally 2.29, for possible JDK 11 fixes.

## How was this patch tested?

Existing tests.

Closes #25455 from srowen/SPARK-28737.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

PR [#24533](https://github.com/apache/spark/pull/24533/files) prevents retrying against a removed executor.

In my test, I can't catch exceptions from

`new OneForOneBlockFetcher(client, appId, execId, blockIds, listener, transportConf, tempFileManager).start()`

After checking the code carefully, I found that `start()` handles `IOException` inside its retry logic and does not rethrow it until it reaches the maximum number of retries or hits an exception that is not an `IOException`.

If the executor is dead when we fetch a block, then when we rerun

`RetryingBlockFetcher.BlockFetchStarter.createAndStart()`

creating a transport client to the dead executor may fail and throw an `IOException`.

We should catch this `IOException`.
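The catch can be sketched roughly as follows. This is not the actual patch: `connect`, `isExecutorAlive`, and `ExecutorGoneException` are hypothetical names introduced for illustration, and the decision to translate the `IOException` into a dedicated exception when the executor is dead is an assumption about what happens after the catch.

```scala
import java.io.IOException

// Hypothetical exception type used only in this sketch.
final class ExecutorGoneException(msg: String) extends RuntimeException(msg)

object FetchSketch {
  // `connect` stands in for creating a transport client and starting the fetch;
  // `isExecutorAlive` stands in for a liveness lookup. Both are assumptions.
  def createAndStart(
      execId: String,
      connect: () => Unit,
      isExecutorAlive: String => Boolean): Unit = {
    try {
      connect()
    } catch {
      case e: IOException =>
        if (!isExecutorAlive(execId)) {
          // The executor is gone, so retrying cannot succeed.
          throw new ExecutorGoneException(s"Executor $execId is dead")
        } else {
          // The executor is still alive: leave it to the caller's retry logic.
          throw e
        }
    }
  }
}
```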

### Why are the changes needed?

The old solution was not comprehensive and did not cover this case.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests.

Closes #25469 from AngersZhuuuu/SPARK-27637-FLLOW-UP.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This patch adds the binding classes to enable Spark to switch dataframe output to using the S3A zero-rename committers shipping in Hadoop 3.1+. It adds a source tree into the hadoop-cloud-storage module which only compiles with the hadoop-3.2 profile, and contains a binding for normal output and a specific bridge class for Parquet (as the parquet output format requires a subclass of `ParquetOutputCommitter`).

Commit algorithms are a critical topic. There's no formal proof of correctness, but the algorithms are documented and analysed in [A Zero Rename Committer](https://github.com/steveloughran/zero-rename-committer/releases). This also reviews the classic v1 and v2 algorithms, IBM's swift committer and the one from EMRFS which they admit was based on the concepts implemented here.

Test-wise

* There's a public set of scala test suites [on github](https://github.com/hortonworks-spark/cloud-integration)

* We have run integration tests against Spark on Yarn clusters.

* This code has been shipping for ~12 months in HDP-3.x.

Closes #24970 from steveloughran/cloud/SPARK-23977-s3a-committer.

Authored-by: Steve Loughran <stevel@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

1. `PythonHadoopUtil.mapToConf` generates a `Configuration` with loadDefaults disabled.

2. Merging of the Hadoop conf is now consistent across several places in PythonRDD.

## How was this patch tested?

Added a new test and ran the existing tests.

Closes #25002 from advancedxy/SPARK-28203.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This change implements a few changes to the k8s pod allocator so that it behaves a little better when dynamic allocation is on.

(i) Allow the application to ramp up immediately when there's a change in the target number of executors. Without this change, scaling would only trigger when a change happened in the state of the cluster, e.g. an executor going down, or when the periodical snapshot was taken (default every 30s).

(ii) Get rid of pending pod requests, both acknowledged (i.e. Spark knows that a pod is pending resource allocation) and unacknowledged (i.e. Spark has requested the pod but the API server hasn't created it yet), when they're not needed anymore. This avoids starting those executors to just remove them after the idle timeout, wasting resources in the meantime.

(iii) Re-work some of the code to avoid unnecessary logging. While not bad without dynamic allocation, the existing logging was very chatty when dynamic allocation was on. With the changes, all the useful information is still there, but only when interesting changes happen.

(iv) Gracefully shut down executors when they become idle. Just deleting the pod causes a lot of ugly logs to show up, so it's better to ask pods to exit nicely. That also allows Spark to respect the "don't delete pods" option when dynamic allocation is on.

Tested on a small k8s cluster running different TPC-DS workloads.

Closes #25236 from vanzin/SPARK-28487.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In the current UI, we cannot identify which RDDs are barrier RDDs. Visualizing this makes debugging easier.

The following images show the UI after this change.

The boxes in pale green mean barrier (we might need to discuss which color is appropriate).

## How was this patch tested?

Tested manually.

The images above were produced by the following operations.

```
val rdd1 = sc.parallelize(1 to 10)
val rdd2 = sc.parallelize(1 to 10)
val rdd3 = rdd1.zip(rdd2).barrier.mapPartitions(identity(_))
val rdd4 = rdd3.map(identity(_))
val rdd5 = rdd4.reduceByKey(_+_)
rdd5.collect
```

Closes #25296 from sarutak/barrierexec-dagviz.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

## What changes were proposed in this pull request?

SPARK-17019 introduced `On Heap Memory` and `Off Heap Memory` as optional metrics.

However, they are never displayed because they are set to `display: none` in CSS and there is no way to make them appear.

I know #22595 also tries to resolve this issue, but it uses `additional-metrics.js`.

Initially, `additional-metrics.js` was created for `StagePage`, but `StagePage` currently uses `stagepage.js` to toggle its additional metrics, because `DataTable` (a jQuery plugin) was introduced and we needed another mechanism to add and remove columns for additional metrics.

Now that `ExecutorsPage` also uses `DataTable`, it might be better to introduce the same mechanism as `StagePage` for additional metrics.

We can then remove `additional-metrics.js`, which is no longer used anywhere.

## How was this patch tested?

After this change is applied, I confirmed `ExecutorsPage` and `StagePage` are properly rendered and all checkboxes for additional metrics work.

Closes #25374 from sarutak/remove-additional-metrics.js.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Currently, on requesting summary metrics, cached data are returned if the current number of "SUCCESS" tasks is the same as the value in cached data.

However, the number of "SUCCESS" tasks is wrong when there are running tasks. In `AppStatusStore`, the KVStore is `ElementTrackingStore`, instead of `InMemoryStore`. The value count is always the number of "SUCCESS" tasks + "RUNNING" tasks.

Thus, even when the running tasks are finished, the outdated cached data is returned.

This PR fixes the code that gets the number of "SUCCESS" tasks.
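The cache-reuse check can be modelled with a small self-contained sketch. The names `CachedSummary`, `successCount`, and `canReuse` are hypothetical and are not taken from `AppStatusStore`; the sketch only shows why the count used for the comparison must exclude running tasks.

```scala
object SummaryCacheSketch {
  sealed trait Status
  case object Success extends Status
  case object Running extends Status

  // Cached summary together with the success count it was computed from.
  final case class CachedSummary(successCount: Int, summary: String)

  // Count only tasks that finished successfully. If RUNNING tasks were
  // included, the count would not change when they finish, and a stale
  // cache entry would keep being reused.
  def successCount(tasks: Seq[Status]): Int = tasks.count(_ == Success)

  // The cached summary is reusable only while the success count is unchanged.
  def canReuse(cached: CachedSummary, tasks: Seq[Status]): Boolean =
    cached.successCount == successCount(tasks)
}
```

With two successful tasks and one running task, a summary cached at count 2 is reusable; once the running task succeeds, the count becomes 3 and the summary is recomputed.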

## How was this patch tested?

Test manually by running

```

sc.parallelize(1 to 160, 40).map(i => Thread.sleep(i*100)).collect()

```

and keep refreshing the stage page; we can see that the task summary metrics are wrong.

### Before fix:

### After fix:

Closes #25369 from gengliangwang/fixStagePage.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

* Add log in `NewHadoopRDD`

* Remove wording in logs that is tied to a specific user API.

## How was this patch tested?

Manual.

Closes #25391 from WeichenXu123/log_sf.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

SPARK-24817 and SPARK-24819 introduced three new non-internal properties for barrier execution mode, but they are not documented.

So I've added a section to configuration.md for barrier execution mode.

## How was this patch tested?

Built the docs with Jekyll and confirmed the layout in a browser.

Closes #25370 from sarutak/barrier-exec-mode-conf-doc.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In StagePage, only the first optional column (Scheduler Delay, in this case) appears even though "Select All" checkbox is checked.

The cause is that the wrong method is used to manipulate multiple columns: `columns` should have been used, but `column` was used.

I've fixed this issue by replacing the `column` with `columns`.

## How was this patch tested?

Confirmed behavior of the check-box.

Closes #25397 from sarutak/fix-stagepage.js.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR fixes typos in comments and replaces explicit type arguments with the diamond operator `<>` for Java 8+.

## How was this patch tested?

Manually tested.

Closes #25338 from younggyuchun/younggyu.

Authored-by: younggyu chun <younggyuchun@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In high-workload environments, ContextCleaner logs excessively at INFO level without giving much information. In one particular case, the ``INFO ContextCleaner: Cleaned accumulator`` message made up 25-30% of the generated logs. We can log this cleanup information at DEBUG level instead.

## How was this patch tested?

This does not modify any functionality. It only changes the cleanup log level to DEBUG for ContextCleaner.

Closes #25396 from ajithme/logss.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

In this PR, we implement a complete process of GPU-aware resource scheduling in Standalone mode. The whole process looks like this: a Worker sets up isolated resources when it starts up and registers with the Master along with its resources. The Master then picks usable Workers according to the driver's/executor's resource requirements and launches the driver/executor on them. The Worker launches the driver/executor after preparing a resources file, which is created under the driver's/executor's working directory, with the resource addresses specified by the Master. When a driver/executor finishes, its resources are recycled to the Worker. Finally, if a Worker stops, it should always release its resources first.

For the case where Workers and Drivers in **client** mode run on the same host, we introduce a config option named `spark.resources.coordinate.enable` (default true) to indicate whether Spark should coordinate resources for the user. If `spark.resources.coordinate.enable=false`, the user is responsible for configuring different resources for Workers and Drivers when using a resourcesFile or discovery script. If true, Spark assigns different resources to Workers and Drivers on the user's behalf.

The solution for Spark to coordinate resources among Workers and Drivers is as follows.

Generally, a shared file named *____allocated_resources____.json* is used to sync allocated resource info among Workers and Drivers on the same host.

After a Worker or Driver finds all resources using the configured resourcesFile and/or discovery script during launch, it should determine the available resources by excluding resources already allocated in *____allocated_resources____.json* and acquire resources from the available ones according to its own requirements. After that, it should write its allocated resources along with its process id (pid) into *____allocated_resources____.json*. The pid (proposed by tgravescs) is used to check whether the allocated resources are still valid in case a Worker or Driver crashes and doesn't release its resources properly. When a Worker or Driver finishes normally, it always cleans up its own allocated resources in *____allocated_resources____.json*.

Note that we always acquire a file lock before any access to *____allocated_resources____.json* and release the lock afterwards.
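The acquire step described above can be sketched in memory as follows. Everything here is hypothetical: `Allocation`, `acquire`, and the `isAlive` callback are names chosen for this example, the shared JSON file is modelled as a plain `Seq`, and the file lock is omitted entirely.

```scala
object ResourceCoordinationSketch {
  // One record per process, as it might appear in the shared file.
  final case class Allocation(pid: Long, addresses: Set[String])

  // Returns this process's allocation plus the new file contents,
  // or None if not enough resources are free.
  def acquire(
      discovered: Seq[String],
      allocated: Seq[Allocation],
      isAlive: Long => Boolean,
      pid: Long,
      amount: Int): Option[(Allocation, Seq[Allocation])] = {
    // Drop records whose owning process died without cleaning up.
    val valid = allocated.filter(a => isAlive(a.pid))
    val taken = valid.flatMap(_.addresses).toSet
    // Available resources are the discovered ones not already taken.
    val available = discovered.filterNot(taken.contains)
    if (available.size < amount) {
      None
    } else {
      val mine = Allocation(pid, available.take(amount).toSet)
      Some((mine, valid :+ mine))
    }
  }
}
```

In a real implementation, the read of the existing records and the write of the updated ones would both happen while holding the file lock, so that two processes on the same host cannot pick the same addresses.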

Furthermore, we added resource info to `WorkerSchedulerStateResponse` to work around master change behaviour in HA mode.

## How was this patch tested?

Added unit tests in WorkerSuite, MasterSuite, SparkContextSuite.

Manually tested with client/cluster mode (e.g. multiple workers) in a single node Standalone.

Closes #25047 from Ngone51/SPARK-27371.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This patch keeps things consistent wherever the UTF-8 charset is needed by using `StandardCharsets.UTF_8` instead of the string "UTF-8". Where a String is required, `StandardCharsets.UTF_8.name()` is used.

This change also brings the benefit of getting rid of `UnsupportedEncodingException`, as we're providing `Charset` instead of `String` whenever possible.

This also changes some private Catalyst helper methods to operate on encodings as `Charset` objects rather than strings.
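As a small illustration of the pattern, the sketch below uses only the JDK's `java.nio.charset` API. The object and method names (`CharsetSketch`, `encode`, `decode`) are hypothetical and do not correspond to any helper in the patch.

```scala
import java.nio.charset.{Charset, StandardCharsets}

object CharsetSketch {
  // Passing a Charset object cannot fail with UnsupportedEncodingException,
  // unlike the overloads that take a charset name as a String.
  def encode(s: String): Array[Byte] = s.getBytes(StandardCharsets.UTF_8)

  // Helper that operates on a Charset rather than a charset name.
  def decode(bytes: Array[Byte], charset: Charset = StandardCharsets.UTF_8): String =
    new String(bytes, charset)

  // For APIs that still require a String charset name.
  val charsetName: String = StandardCharsets.UTF_8.name()
}
```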

## How was this patch tested?

Existing unit tests.

Closes #25335 from HeartSaVioR/SPARK-28601.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Currently, PythonBroadcast may delete its data file while a Python worker still needs it. This happens because PythonBroadcast overrides the `finalize()` method to delete its data file. So, when GC happens and there are no references to the broadcast variable, it may trigger `finalize()` to delete the data file. This also means that data under a Python broadcast variable cannot be deleted when `unpersist()`/`destroy()` is called, but relies on GC instead.

In this PR, we remove the `finalize()` method and map the PythonBroadcast data file to a BroadcastBlock (which has the same broadcast id as the broadcast variable that wraps this PythonBroadcast) when PythonBroadcast is deserialized. As a result, the data file can be deleted just like other pieces of the broadcast variable when `unpersist()`/`destroy()` is called, and no longer relies on GC.

## How was this patch tested?

Added a Python test, and tested manually (verified creation and deletion of the broadcast block).

Closes #25262 from Ngone51/SPARK-28486.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

After SPARK-27677, the shuffle client not only handles shuffle blocks but is also responsible for locally persisted RDD blocks. For better code scalability and more precise semantics (see the [discussion](https://github.com/apache/spark/pull/24892#discussion_r300173331)), we made several changes:

- Rename ShuffleClient to BlockStoreClient.

- Correspondingly rename the ExternalShuffleClient to ExternalBlockStoreClient, also change the server-side class from ExternalShuffleBlockHandler to ExternalBlockHandler.

- Move MesosExternalBlockStoreClient to Mesos package.

Note, we still keep the name of BlockTransferService, because the `Service` contains both client and server, also the name of BlockTransferService is not referencing shuffle client only.

## How was this patch tested?

Existing UT.

Closes #25327 from xuanyuanking/SPARK-28593.

Lead-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Add the configuration `spark.scheduler.listenerbus.eventqueue.${name}.capacity` to allow configuring a different size for each event queue.
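A per-queue lookup of this kind could look roughly like the sketch below. It models the configuration as a plain `Map` rather than `SparkConf`. The fallback to a global `spark.scheduler.listenerbus.eventqueue.capacity` key and the queue names used in the usage note are assumptions for illustration, not something stated in this description.

```scala
object QueueCapacitySketch {
  private val Prefix = "spark.scheduler.listenerbus.eventqueue"

  // Prefer the per-queue key, then the (assumed) global key, then the default.
  def capacity(conf: Map[String, String], queueName: String, default: Int): Int =
    conf.get(s"$Prefix.$queueName.capacity")
      .orElse(conf.get(s"$Prefix.capacity"))
      .map(_.toInt)
      .getOrElse(default)
}
```

For example, with `spark.scheduler.listenerbus.eventqueue.appStatus.capacity=20000` set, a queue named `appStatus` would get 20000 entries while every other queue keeps the shared value.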

## How was this patch tested?

Unit test in core/src/test/scala/org/apache/spark/scheduler/SparkListenerSuite.scala

Closes #25307 from yunzoud/SPARK-28574.

Authored-by: yunzoud <yun.zou@databricks.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

Today all registered metric sources are reported to GraphiteSink with no filtering mechanism, although the codahale project does support it.

GraphiteReporter (ScheduledReporter) from the codahale project requires you to implement and supply the MetricFilter interface (there is only a single implementation by default in the codahale project, MetricFilter.ALL).

This PR proposes adding a regex config to the GraphiteSink to match and filter metrics.
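The filtering behaviour can be sketched without the codahale dependency by applying an optional regex to metric names. `MetricFilterSketch` and `filterMetrics` are hypothetical names, and the metric keys in the usage note are made up for illustration.

```scala
object MetricFilterSketch {
  // With no regex configured, every metric is kept, which mirrors the
  // existing behaviour of reporting everything.
  def filterMetrics(metrics: Map[String, Long], regex: Option[String]): Map[String, Long] =
    regex match {
      case None => metrics
      case Some(expr) =>
        val pattern = expr.r
        // Keep a metric if the pattern matches anywhere in its name.
        metrics.filter { case (name, _) => pattern.findFirstIn(name).isDefined }
    }
}
```

For instance, a regex of `jvm\.heap` would keep a key such as `driver.jvm.heap.used` and drop `driver.BlockManager.memory.memUsed_MB`.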

## How was this patch tested?

Included a GraphiteSinkSuite that tests:

1. Absence of regex filter (existing default behavior maintained)

2. Presence of `regex=<regexexpr>` correctly filters metric keys

Closes #25232 from nkarpov/graphite_regex.

Authored-by: Nick Karpov <nick@nickkarpov.com>

Signed-off-by: jerryshao <jerryshao@tencent.com>

There's a small, probably very hard to hit thread-safety issue in the blacklist abort timers in the task scheduler, where they access a non-thread-safe map without locks.

In the tests, the code was also calling methods on the TaskSetManager without holding the proper locks, which could cause threads to call non-thread-safe TSM methods concurrently.

Closes #25317 from vanzin/SPARK-28584.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Prior to this change, in an executor, on each heartbeat, memory metrics are polled and sent in the heartbeat. The heartbeat interval is 10s by default. With this change, in an executor, memory metrics can optionally be polled in a separate poller at a shorter interval.

For each executor, we use a map of (stageId, stageAttemptId) to (count of running tasks, executor metric peaks) to track what stages are active as well as the per-stage memory metric peaks. When polling the executor memory metrics, we attribute the memory to the active stage(s), and update the peaks. In a heartbeat, we send the per-stage peaks (for stages active at that time), and then reset the peaks. The semantics would be that the per-stage peaks sent in each heartbeat are the peaks since the last heartbeat.
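The per-stage bookkeeping described above can be sketched for a single metric as follows. `Tracker` and its methods are hypothetical names, the metric is reduced to one `Long`, and the choice to drop a stage from the map at the first heartbeat after its last task ended is an assumption made for this sketch.

```scala
object StagePeaksSketch {
  type StageKey = (Int, Int) // (stageId, stageAttemptId)

  final class Tracker {
    // stage -> (count of running tasks, peak of a single memory metric)
    private var stages = Map.empty[StageKey, (Int, Long)]

    def taskStarted(stage: StageKey): Unit = synchronized {
      val (count, peak) = stages.getOrElse(stage, (0, 0L))
      stages = stages.updated(stage, (count + 1, peak))
    }

    def taskEnded(stage: StageKey): Unit = synchronized {
      stages.get(stage).foreach { case (count, peak) =>
        stages = stages.updated(stage, (count - 1, peak))
      }
    }

    // Called by the poller: attribute the sample to every active stage.
    def poll(sample: Long): Unit = synchronized {
      stages = stages.map { case (stage, (count, peak)) =>
        (stage, (count, if (count > 0) math.max(peak, sample) else peak))
      }
    }

    // Called on heartbeat: report peaks since the last heartbeat, then
    // reset them and forget stages with no running tasks.
    def heartbeat(): Map[StageKey, Long] = synchronized {
      val report = stages.map { case (stage, (_, peak)) => (stage, peak) }
      stages = stages.collect {
        case (stage, (count, _)) if count > 0 => (stage, (count, 0L))
      }
      report
    }
  }
}
```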

We also keep a map of taskId to memory metric peaks. This tracks the metric peaks during the lifetime of the task. The polling thread updates this as well. At end of a task, we send the peak metric values in the task result. In case of task failure, we send the peak metric values in the `TaskFailedReason`.

We continue to do the stage-level aggregation in the EventLoggingListener.

For the driver, we still only poll on heartbeats. What the driver sends will be the current values of the metrics in the driver at the time of the heartbeat. This is semantically the same as before.

## How was this patch tested?

Unit tests. Manually tested applications on an actual system and checked the event logs; the metrics appear in the SparkListenerTaskEnd and SparkListenerStageExecutorMetrics events.

Closes #23767 from wypoon/wypoon_SPARK-26329.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Log a warning in the driver when loading a single large unsplittable file via `sc.textFile` or the csv/json datasource.

The current conditions that trigger logging are:

* only one partition is generated

* the file is unsplittable; possible reasons are:

  - it is compressed by an unsplittable compression algorithm such as gzip

  - multiLine mode in the csv/json datasource

  - wholeText mode in the text datasource

* the file size exceeds the config threshold `spark.io.warning.largeFileThreshold` (default value is 1GB)
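The three conditions listed above combine into a simple predicate. The sketch below is a hypothetical model: `FileInfo`, `shouldWarn`, and `DefaultThresholdBytes` are names invented for this example, and 1GB is taken to mean 1024^3 bytes, which is an assumption.

```scala
object LargeFileWarningSketch {
  final case class FileInfo(path: String, sizeBytes: Long, splittable: Boolean)

  // Default threshold of 1GB, assumed here to mean 1024^3 bytes.
  val DefaultThresholdBytes: Long = 1024L * 1024 * 1024

  // Warn only when all three listed conditions hold at once.
  def shouldWarn(
      numPartitions: Int,
      file: FileInfo,
      thresholdBytes: Long = DefaultThresholdBytes): Boolean =
    numPartitions == 1 && !file.splittable && file.sizeBytes > thresholdBytes
}
```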

## How was this patch tested?

Manually test.

Generate one gzip file exceeding 1GB,

```
base64 -b 50 /dev/urandom | head -c 2000000000 > file1.txt
cat file1.txt | gzip > file1.gz
```

Then launch spark-shell and run:

```
sc.textFile("file:///path/to/file1.gz").count()
```

This prints a log line like:

```
WARN HadoopRDD: Loading one large unsplittable file file:/.../f1.gz with only one partition, because the file is compressed by unsplittable compression codec
```

Run:

```
sc.textFile("file:///path/to/file1.txt").count()
```

This prints a log line like:

```
WARN HadoopRDD: Loading one large file file:/.../f1.gz with only one partition, we can increase partition numbers by the `minPartitions` argument in method `sc.textFile`
```

Run:

```
spark.read.csv("file:///path/to/file1.gz").count
```

This prints a log line like:

```
WARN CSVScan: Loading one large unsplittable file file:/.../f1.gz with only one partition, the reason is: the file is compressed by unsplittable compression codec
```

Run:

```
spark.read.option("multiLine", true).csv("file:///path/to/file1.gz").count
```

This prints a log line like:

```
WARN CSVScan: Loading one large unsplittable file file:/.../f1.gz with only one partition, the reason is: the csv datasource is set multiLine mode
```

The JSON and text datasources were also tested with similar cases.


Closes #25134 from WeichenXu123/log_gz.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

The issue is that the test tried to stop an existing scheduler and replace it with a new one set up for the test. That can cause issues because both were sharing the same RpcEnv underneath, and unregistering RpcEndpoints is actually asynchronous (see comment in Dispatcher.unregisterRpcEndpoint). So that could lead to races where the new scheduler tried to register before the old one was fully unregistered.

The updated test avoids the issue by using a separate RpcEnv / scheduler instance altogether, and also avoids a misleading NPE in the test logs.

Closes #25318 from vanzin/SPARK-24352.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

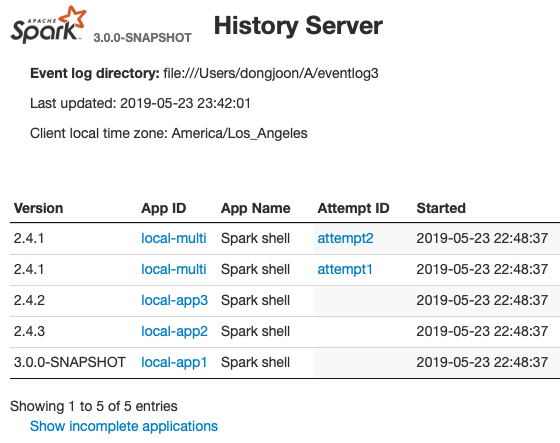

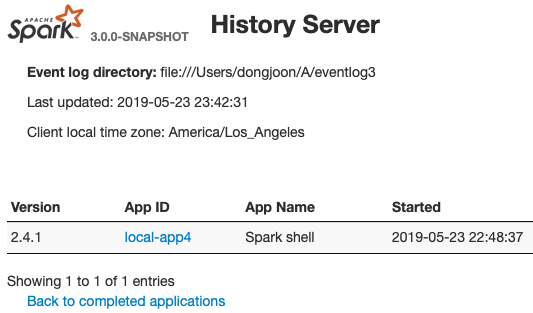

## What changes were proposed in this pull request?

When `spark.history.ui.maxApplications` is set to a small value, some apps cannot be found through the search on the page.

If the URL is constructed by hand (http://localhost:18080/history/local-xxx), the app can still be accessed when it has no attempts.

For apps with multiple attempts, however, such a URL cannot be accessed, and the page displays Not Found.

## How was this patch tested?

Added a unit test.

Closes #25301 from cxzl25/hs_app_last_attempt_id.

Authored-by: sychen <sychen@ctrip.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR proposes to use `AtomicReference` so that parent and child threads can access the same file block holder.

Python UDF expressions are turned into a plan, which then launches a separate thread to consume the input iterator. In that child thread, the iterator calls `InputFileBlockHolder.set` before the parent does, and the parent thread is later unable to read the value that was set.

1. In this separate child thread, if it happens to call `InputFileBlockHolder.set` first, before the parent's thread local has been initialized (which is done when `ThreadLocal.get()` is first called), the child thread seems to call its own `initialValue` to initialize it.
2. After that, the parent calls its own `initialValue` to initialize its value at the first call of `ThreadLocal.get()`.
3. The two threads now hold two different references, so an update in the child isn't reflected in the parent.

This PR fixes it by initializing the parent's thread local with an `AtomicReference` for the file status, so that it can be used in each task and updates from child threads are reflected in the parent.
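The difference between the two behaviours can be illustrated with a small Python analogy. This is only a sketch of the idea, not the Spark code: Python's `threading.local` has no inheritance, so the shared holder is handed to the child through a closure rather than through an inherited thread local, and `SharedRef` is a hypothetical stand-in for `AtomicReference`.

```python
import threading


class SharedRef:
    """Hypothetical stand-in for an atomic reference cell."""

    def __init__(self, value: str) -> None:
        self._lock = threading.Lock()
        self._value = value

    def get(self) -> str:
        with self._lock:
            return self._value

    def set(self, value: str) -> None:
        with self._lock:
            self._value = value


def run_with_thread_local() -> str:
    """The child writes to its own thread-local slot; the parent never sees it."""
    local = threading.local()
    local.file_name = ""  # the parent's own value

    def child() -> None:
        local.file_name = "part-0"  # stored only in the child's slot

    worker = threading.Thread(target=child)
    worker.start()
    worker.join()
    return local.file_name  # still the parent's value


def run_with_shared_ref() -> str:
    """Parent and child share one mutable holder, so the update is visible."""
    holder = SharedRef("")  # created by the parent before the child starts

    def child() -> None:
        holder.set("part-0")  # mutates the shared holder

    worker = threading.Thread(target=child)
    worker.start()
    worker.join()
    return holder.get()
```

In the first function the parent reads back its own empty value; in the second it reads the value written by the child, which is the behaviour the fix is after.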

I also tried to explain this a bit more at https://github.com/apache/spark/pull/24958#discussion_r297203041.

## How was this patch tested?

Manually tested, and a unit test was added.

Closes #24958 from HyukjinKwon/SPARK-28153.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

As part of the shuffle storage API proposed in SPARK-25299, this introduces an API for persisting shuffle data in arbitrary storage systems.

This patch introduces several concepts:

* `ShuffleDataIO`, which is the root of the entire plugin tree that will be proposed over the course of the shuffle API project.

* `ShuffleExecutorComponents` - the subset of plugins for managing shuffle-related components for each executor. This will in turn instantiate shuffle readers and writers.

* `ShuffleMapOutputWriter` interface - instantiated once per map task. This provides child `ShufflePartitionWriter` instances for persisting the bytes for each partition in the map task.

The default implementation of these plugins exactly mirrors what was done by the existing shuffle writing code - namely, writing the data to local disk and writing an index file. We leverage the APIs in the `BypassMergeSortShuffleWriter` only. Follow-up PRs will use the APIs in `SortShuffleWriter` and `UnsafeShuffleWriter`; these are left as future work to minimize the review surface area.
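To make the shape of the plugin tree easier to picture, here is a toy Python model of it. The class names loosely follow the concepts above, but every method name (`executor`, `create_map_output_writer`, `partition_writer`, `commit`) is hypothetical, the data is kept in memory instead of on local disk, and modelling the index as a list of cumulative byte offsets is an assumption about what the index file records.

```python
import io
from typing import List, Tuple


class PartitionWriter:
    """Collects the bytes of one partition (stand-in for ShufflePartitionWriter)."""

    def __init__(self) -> None:
        self._buffer = io.BytesIO()

    def write(self, data: bytes) -> None:
        self._buffer.write(data)

    def getvalue(self) -> bytes:
        return self._buffer.getvalue()


class MapOutputWriter:
    """Created once per map task; provides one child writer per partition."""

    def __init__(self, num_partitions: int) -> None:
        self._writers = [PartitionWriter() for _ in range(num_partitions)]

    def partition_writer(self, partition_id: int) -> PartitionWriter:
        return self._writers[partition_id]

    def commit(self) -> Tuple[bytes, List[int]]:
        """Concatenate all partitions and build an index of cumulative offsets."""
        data = b""
        index = [0]
        for writer in self._writers:
            data += writer.getvalue()
            index.append(len(data))
        return data, index


class ExecutorComponents:
    """Per-executor plugin subset; instantiates writers for map tasks."""

    def create_map_output_writer(self, num_partitions: int) -> MapOutputWriter:
        return MapOutputWriter(num_partitions)


class DataIO:
    """Root of the plugin tree."""

    def executor(self) -> ExecutorComponents:
        return ExecutorComponents()
```

A map task would obtain its writer through `DataIO().executor().create_map_output_writer(n)`, write each partition's bytes through the matching child writer, and call `commit()` once at the end.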

## How was this patch tested?

New unit tests were added. Micro-benchmarks indicate there's no slowdown in the affected code paths.

Closes #25007 from mccheah/spark-shuffle-writer-refactor.

Lead-authored-by: mcheah <mcheah@palantir.com>

Co-authored-by: mccheah <mcheah@palantir.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

… more efficiently

This PR improves the performance of scheduling speculative tasks to be O(1) instead of O(numSpeculativeTasks), using the same approach used for scheduling regular tasks. The performance of this method is particularly important because a lock is held on the TaskSchedulerImpl, which is a bottleneck for all scheduling operations. We ran a join query on a large dataset with speculation enabled; out of 100000 tasks for the ShuffleMapStage, the number of speculatable tasks peaked at around 7900-8000. That is when we start seeing the bottleneck on the scheduler lock.

In particular, this works by storing a separate stack of tasks by executor, node, and rack locality preferences. Then when trying to schedule a speculative task, rather than scanning all speculative tasks to find ones which match the given executor (or node, or rack) preference, we can jump to a quick check of tasks matching the resource offer. This technique was already used for regular tasks -- this change refactors the code to allow sharing with regular and speculative task execution.

## What changes were proposed in this pull request?

Split the main queue `speculatableTasks` into 5 separate queues based on locality preference, similar to how normal tasks are enqueued. As a result, the `dequeueSpeculativeTask` method avoids performing locality checks for each task at runtime and simply returns the preferable task to be executed.
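The idea can be sketched in Python as follows. This is a simplified model rather than the scheduler code: the class name, the five queue names, the order in which they are consulted, and the lazy skipping of already-launched tasks are all assumptions for illustration, and the sketch ignores the allowed locality level and delay scheduling.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Set


class PendingSpeculativeTasks:
    """Hypothetical model of speculative tasks bucketed by locality preference."""

    def __init__(self) -> None:
        self.for_executor: Dict[str, List[int]] = defaultdict(list)
        self.for_host: Dict[str, List[int]] = defaultdict(list)
        self.no_prefs: List[int] = []
        self.for_rack: Dict[str, List[int]] = defaultdict(list)
        self.all: List[int] = []
        self.launched: Set[int] = set()

    def add(self, task: int, executors: Iterable[str] = (),
            hosts: Iterable[str] = (), racks: Iterable[str] = ()) -> None:
        """Push the task onto every stack that matches its preferences."""
        executors, hosts, racks = list(executors), list(hosts), list(racks)
        for executor in executors:
            self.for_executor[executor].append(task)
        for host in hosts:
            self.for_host[host].append(task)
        for rack in racks:
            self.for_rack[rack].append(task)
        if not (executors or hosts or racks):
            self.no_prefs.append(task)
        self.all.append(task)

    def _pop(self, stack: List[int]) -> Optional[int]:
        """Pop from the top, skipping tasks already launched via another stack."""
        while stack:
            task = stack.pop()
            if task not in self.launched:
                self.launched.add(task)
                return task
        return None

    def dequeue(self, executor: str, host: str, rack: str) -> Optional[int]:
        """Return the best-matching task for an offer without scanning all tasks."""
        stacks = (
            self.for_executor.get(executor, []),
            self.for_host.get(host, []),
            self.no_prefs,
            self.for_rack.get(rack, []),
            self.all,
        )
        for stack in stacks:
            task = self._pop(stack)
            if task is not None:
                return task
        return None
```

A task appears in several stacks at once, so stale entries are discarded when they are popped instead of being removed eagerly from every stack.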

## How was this patch tested?

We ran a spark job that performed a join on a 10 TB dataset to test the code change.

Original Code:

<img width="1433" alt="screen shot 2019-01-28 at 5 07 22 pm" src="https://user-images.githubusercontent.com/22228190/51873321-572df280-2322-11e9-9149-0aae08d5edc6.png">

Optimized Code:

<img width="1435" alt="screen shot 2019-01-28 at 5 08 19 pm" src="https://user-images.githubusercontent.com/22228190/51873343-6745d200-2322-11e9-947b-2cfd0f06bcab.png">

As you can see, the run time of the ShuffleMapStage came down from approximately 40 min to 6 min, significantly reducing the overall running time of the Spark job.

Another example for the same job:

Original Code:

<img width="1440" alt="screen shot 2019-01-28 at 5 11 30 pm" src="https://user-images.githubusercontent.com/22228190/51873355-70cf3a00-2322-11e9-9c3a-af035449a306.png">

Optimized Code:

<img width="1440" alt="screen shot 2019-01-28 at 5 12 16 pm" src="https://user-images.githubusercontent.com/22228190/51873367-7dec2900-2322-11e9-8d07-1b1b49285f71.png">

Closes #23677 from pgandhi999/SPARK-26755.

Lead-authored-by: pgandhi <pgandhi@verizonmedia.com>

Co-authored-by: pgandhi <pgandhi@oath.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

These are the issues I found while working on #22282.

- Remove unused value: `UnsafeArraySuite#defaultTz`

- Remove redundant `new` modifier for the case class `KafkaSourceRDDPartition`

- Remove unused variables from `RDD.scala`

- Remove trailing space from `structured-streaming-kafka-integration.md`

- Remove redundant parameter from `ArrowConvertersSuite`: `nullable` is `true` by default.

- Remove leading empty line: `UnsafeRow`

- Remove trailing empty line: `KafkaTestUtils`

- Remove unthrown exception type: `UnsafeMapData`

- Replace unused declarations: `expressions`

- Remove duplicated default parameter: `AnalysisErrorSuite`

- `ObjectExpressionsSuite`: remove duplicated parameters, conversions and unused variable

Closes #25251 from dongjinleekr/cleanup/201907.

Authored-by: Lee Dongjin <dongjin@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This test tries to detect correct behavior in racy code, where the event thread is racing with the executor thread that's trying to kill the running task.

If the event that signals the stage end arrives first, any delay in the delivery of the message to kill the task causes the code to rapidly process elements, and may cause the test to assert. Adding a 10ms delay in LocalSchedulerBackend before the task kill makes the test run through ~1000 elements. A longer delay can easily cause the 10000 elements to be processed.

Instead, by adding a small delay (10ms) in the test code that processes elements, there's a much lower probability that the kill event will not arrive before the end; that leaves a window of 100s for the event to be delivered to the executor. And because each element only sleeps for 10ms, the test is not really slowed down at all.
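The numbers above follow from simple arithmetic, worked through here in Python. The constant and function names are only for illustration; the values (10000 elements, 10ms per element) come from the description.

```python
NUM_ELEMENTS = 10_000      # elements the test task iterates over
SLEEP_PER_ELEMENT_MS = 10  # delay added per processed element


def kill_window_seconds(num_elements: int, sleep_ms: int) -> float:
    """Time the task needs to process every element, i.e. the kill window."""
    return num_elements * sleep_ms / 1000


def elements_processed(kill_delay_ms: int, sleep_ms: int) -> int:
    """Elements processed while a kill message is delayed by kill_delay_ms."""
    return kill_delay_ms // sleep_ms


window = kill_window_seconds(NUM_ELEMENTS, SLEEP_PER_ELEMENT_MS)
```

With the per-element sleep in place, a 10ms delay in delivering the kill lets roughly one element through instead of ~1000, and the kill has up to 100 seconds to arrive before all elements are processed.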

Closes #25270 from vanzin/SPARK-28535.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

The motivation for these additional metrics is to help with troubleshooting and monitoring the task execution workload when running on a cluster. The currently available metrics include executor threadpool metrics for completed tasks and for active tasks. Adding the threadpool taskStarted metric makes it possible, for example, to estimate the (approximate) number of failed tasks by computing the difference: tasks started – (active tasks + completed tasks and/or successfully finished tasks).
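As a small worked example of that difference, the estimate could be computed like this. The function and argument names are hypothetical, and flooring the result at zero is an added assumption to absorb values that were sampled at slightly different times.

```python
def approx_failed_tasks(started: int, active: int, finished: int) -> int:
    """Estimate failed tasks as started - (active + finished), floored at zero."""
    return max(0, started - (active + finished))
```

For instance, with 100 tasks started, 4 still active and 90 successfully finished, the estimate is 6 failed tasks.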

The proposed metric finishedTasks is also intended for this type of troubleshooting. The difference between finishedTasks and threadpool.completeTasks is that the latter is a (dropwizard library) gauge taken from the threadpool, while the former is a (dropwizard) counter computed in the [[Executor]] class when a task successfully finishes, together with several other task metrics counters.