### What changes were proposed in this pull request?

#28671 changed the order in which the CANCELED state of SparkExecuteStatementOperation is set. After that change, `cleanup()` was called before the state was set to CANCELED. Because `cleanup()` kills the jobs, an exception is thrown inside `execute()`, so the state transiently becomes ERROR before it is set to CANCELED. This PR fixes the order.
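
The effect of the ordering can be illustrated with a small single-threaded sketch. It is not Spark's implementation: the `Operation` class, the `State` enum and the `cancel_*` method names are hypothetical, and Python is used only to keep the example self-contained. It also assumes that the error handler in `execute()` skips the ERROR transition once the operation is no longer running.

```python
from enum import Enum


class State(Enum):
    RUNNING = "RUNNING"
    ERROR = "ERROR"
    CANCELED = "CANCELED"


class Operation:
    """Hypothetical stand-in for a statement operation; not Spark's class."""

    def __init__(self):
        self.state = State.RUNNING
        self.history = [State.RUNNING]

    def _set_state(self, new_state):
        self.state = new_state
        self.history.append(new_state)

    def _on_job_killed(self):
        # Models the exception handler inside execute(): it records ERROR
        # only if the operation is still running (assumption of this sketch).
        if self.state is State.RUNNING:
            self._set_state(State.ERROR)

    def _cleanup(self):
        # Killing the jobs makes the running execute() fail.
        self._on_job_killed()

    def cancel_cleanup_first(self):
        # Problematic order: cleanup runs while the state is still RUNNING.
        self._cleanup()
        self._set_state(State.CANCELED)

    def cancel_state_first(self):
        # Fixed order: mark CANCELED first, then clean up.
        self._set_state(State.CANCELED)
        self._cleanup()


buggy, fixed = Operation(), Operation()
buggy.cancel_cleanup_first()
fixed.cancel_state_first()
print([s.value for s in buggy.history])
print([s.value for s in fixed.history])
```

In the first history the transient ERROR state is visible to anyone polling the operation; in the second it never appears.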

### Why are the changes needed?

Bug: wrong operation state is set.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test in SparkExecuteStatementOperationSuite.scala.

Closes #28912 from alismess-db/execute-statement-operation-cleanup-order.

Authored-by: Ali Smesseim <ali.smesseim@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add a generic ClassificationSummary trait

### Why are the changes needed?

Add a generic ClassificationSummary trait so all the classification models can use it to implement summary.

Currently in classification, we only have summary implemented in ```LogisticRegression```. There are requests to implement summary for ```LinearSVCModel``` in https://issues.apache.org/jira/browse/SPARK-20249 and to implement summary for ```RandomForestClassificationModel``` in https://issues.apache.org/jira/browse/SPARK-23631. If we add a generic ClassificationSummary trait and put all the common code there, we can easily add summary to ```LinearSVCModel``` and ```RandomForestClassificationModel```, and also add summary to all the other classification models.

We can use the same approach to add a generic RegressionSummary trait to the regression package and implement summary for all the regression models.
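
The idea of putting the common code in one place can be sketched as follows. This is only an illustration in Python, not the Scala trait added by this PR: the class names, the `prediction_and_labels` hook and the two metrics shown are assumptions chosen for the example.

```python
from abc import ABC, abstractmethod


class ClassificationSummary(ABC):
    """Hypothetical shared base: subclasses only supply (prediction, label) pairs."""

    @abstractmethod
    def prediction_and_labels(self):
        """Return a list of (prediction, label) tuples."""

    @property
    def accuracy(self):
        pairs = self.prediction_and_labels()
        correct = sum(1 for pred, lab in pairs if pred == lab)
        return correct / len(pairs)

    def recall_by_label(self):
        pairs = self.prediction_and_labels()
        recalls = {}
        for label in sorted({lab for _, lab in pairs}):
            preds = [pred for pred, lab in pairs if lab == label]
            recalls[label] = sum(1 for pred in preds if pred == label) / len(preds)
        return recalls


class InMemorySummary(ClassificationSummary):
    """Toy summary that any model could return; the name is illustrative."""

    def __init__(self, pairs):
        self._pairs = list(pairs)

    def prediction_and_labels(self):
        return self._pairs


summary = InMemorySummary([(1, 1), (0, 1), (0, 0), (1, 1)])
print(summary.accuracy)
print(summary.recall_by_label())
```

A new model only has to provide its predictions and labels; every metric defined on the base class then comes for free.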

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

existing tests

Closes #28710 from huaxingao/summary_trait.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR brings https://github.com/apache/spark/pull/28751 back

- It was once reverted by 4a25200 because of an unavoidable Maven test failure

- See the related updates in the follow-up a0187cd6b5

- It was then reverted again because of the flakiness of the added unit tests

- In this PR, the flakiness turned out to be caused by the Hive metastore connection that SparkSQLCLIService tries to create, which is not necessary at all. This metastore client only points to a dummy metastore server.

- Also, add some cleanups to the SharedThriftServer trait in before and after to prevent its configurations from being polluted or polluting others

### Why are the changes needed?

fix flaky test

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

passing sbt and maven tests

Closes #28835 from yaooqinn/SPARK-31926-F.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR brings back 02f32cfae4, which was reverted by 4a25200cd7 because of a Maven test failure.

Newly made changes:

1. Add a missing log4j file to the test resources

2. Call `SessionState.detachSession()` to clean up the thread-local session in `afterAll`.

3. Do not use dedicated JVMs for the sbt test runner either

### Why are the changes needed?

fix the maven test

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

add new tests

Closes #28797 from yaooqinn/SPARK-31926-NEW.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Please refer to https://issues.apache.org/jira/browse/SPARK-24634 for the rationale behind this issue.

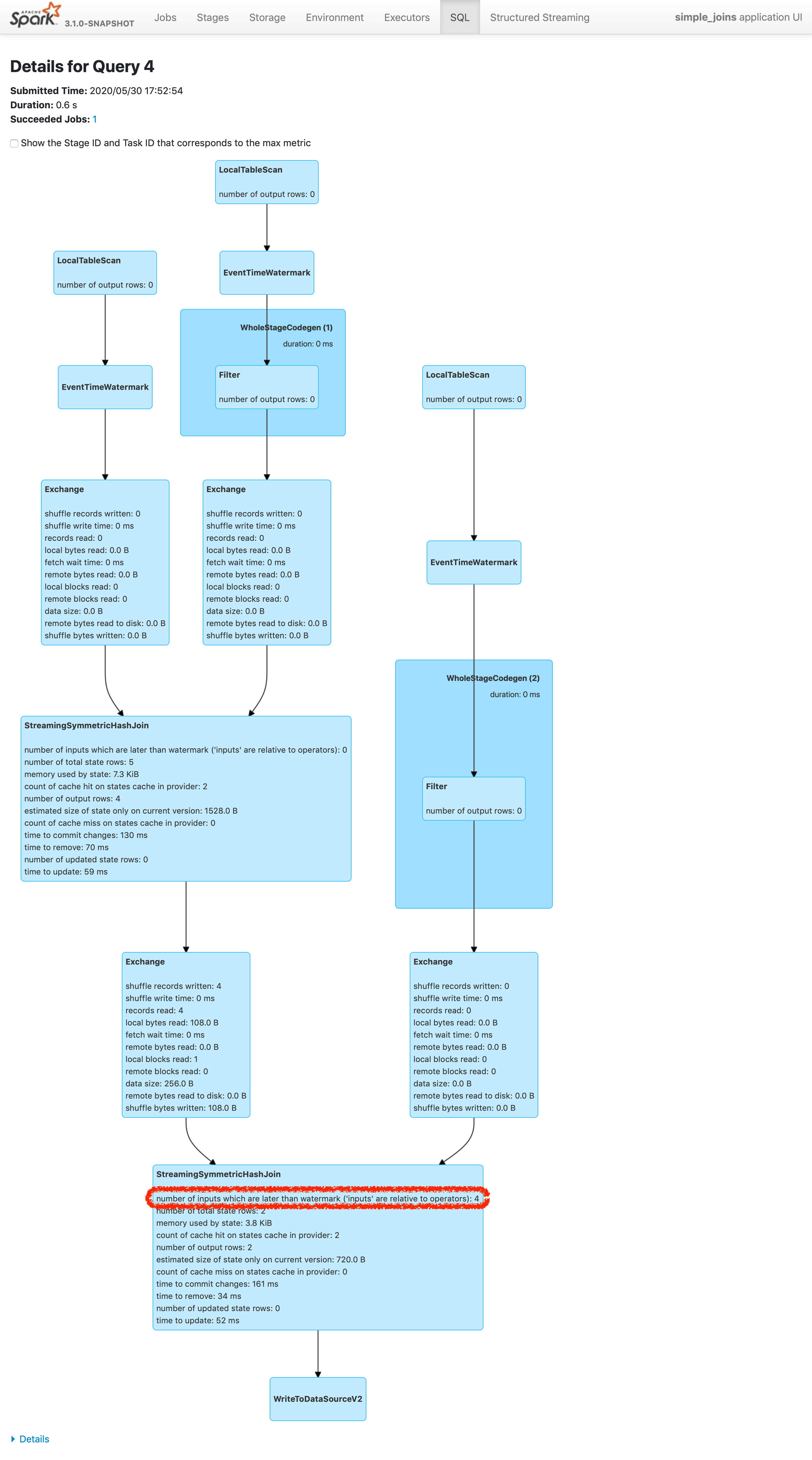

This patch adds a new metric that counts the number of inputs that arrived later than the watermark plus the allowed delay. To keep the change simple, this patch doesn't count the exact number of input rows that are later than the watermark plus the allowed delay. Instead, it counts the inputs that are dropped in the operator's logic. The difference between the two shows up in streaming aggregation: to optimize the calculation, streaming aggregation "pre-aggregates" the input rows and only later checks lateness against the "pre-aggregated" inputs, so the number may be smaller.
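
The gap between "late rows" and "late inputs after pre-aggregation" can be shown with a toy example. The sketch below is plain Python, not the operator code: the 10-second tumbling window, the lateness rule (window end at or before the watermark) and all names are assumptions made for illustration.

```python
from collections import defaultdict

WINDOW = 10  # seconds; hypothetical tumbling-window size


def window_start(event_time):
    return event_time - event_time % WINDOW


def count_late(rows, watermark):
    """Return (late raw rows, late pre-aggregated inputs).

    A row counts as late when its window has already closed, i.e. the
    window end is <= watermark. This rule is an assumption of the sketch.
    """
    def is_late(start):
        return start + WINDOW <= watermark

    late_rows = sum(1 for _, ts in rows if is_late(window_start(ts)))

    # Pre-aggregation: rows sharing (key, window) collapse into one input.
    partial = defaultdict(int)
    for key, ts in rows:
        partial[(key, window_start(ts))] += 1
    late_inputs = sum(1 for (_, start) in partial if is_late(start))
    return late_rows, late_inputs


rows = [("a", 1), ("a", 3), ("a", 7), ("b", 4), ("a", 25)]
print(count_late(rows, watermark=20))
```

Four raw rows are late here, but they collapse into two pre-aggregated inputs, and a metric that counts dropped inputs in the operator would report the smaller number.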

The new metric will be provided via two places:

1. On Spark UI: check the metrics in stateful operator nodes in query execution details page in SQL tab

2. On Streaming Query Listener: check "numLateInputs" in "stateOperators" in QueryProgressEvent.

### Why are the changes needed?

Dropping late inputs means that end users might not get the expected outputs. End users may be aware of this and tolerate the result (that's what allowed lateness is for), but they should be able to observe whether the current value of allowed lateness drops inputs or not, so that they can adjust the value.

Also, whenever a single query has multiple stateful operators, Spark dropping late inputs "between" these operators becomes a "correctness" issue. Spark should disallow such a possibility, but given that we already provide the flexibility, we should at least provide a way for users to observe the correctness issue and decide whether they should correct their query or not.

### Does this PR introduce _any_ user-facing change?

Yes. End users will be able to retrieve the information of late inputs via two ways:

1. SQL tab in Spark UI

2. Streaming Query Listener

### How was this patch tested?

New UTs added & existing UTs are modified to reflect the change.

And ran manual test reproducing SPARK-28094.

I've picked the specific case on "B outer C outer D" which is enough to represent the "intermediate late row" issue due to global watermark.

https://gist.github.com/jammann/b58bfbe0f4374b89ecea63c1e32c8f17

Spark logs warning message on the query which means SPARK-28074 is working correctly,

```

20/05/30 17:52:47 WARN UnsupportedOperationChecker: Detected pattern of possible 'correctness' issue due to global watermark. The query contains stateful operation which can emit rows older than the current watermark plus allowed late record delay, which are "late rows" in downstream stateful operations and these rows can be discarded. Please refer the programming guide doc for more details.;

Join LeftOuter, ((D_FK#28 = D_ID#87) AND (B_LAST_MOD#26-T30000ms = D_LAST_MOD#88-T30000ms))

:- Join LeftOuter, ((C_FK#27 = C_ID#58) AND (B_LAST_MOD#26-T30000ms = C_LAST_MOD#59-T30000ms))

: :- EventTimeWatermark B_LAST_MOD#26: timestamp, 30 seconds

: : +- Project [v#23.B_ID AS B_ID#25, v#23.B_LAST_MOD AS B_LAST_MOD#26, v#23.C_FK AS C_FK#27, v#23.D_FK AS D_FK#28]

: : +- Project [from_json(StructField(B_ID,StringType,false), StructField(B_LAST_MOD,TimestampType,false), StructField(C_FK,StringType,true), StructField(D_FK,StringType,true), value#21, Some(UTC)) AS v#23]

: : +- Project [cast(value#8 as string) AS value#21]

: : +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider3a7fd18c, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable396d2958, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee61a, [key#7, value#8, topic#9, partition#10, offset#11L, timestamp#12, timestampType#13], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> B, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#0, value#1, topic#2, partition#3, offset#4L, timestamp#5, timestampType#6]

: +- EventTimeWatermark C_LAST_MOD#59: timestamp, 30 seconds

: +- Project [v#56.C_ID AS C_ID#58, v#56.C_LAST_MOD AS C_LAST_MOD#59]

: +- Project [from_json(StructField(C_ID,StringType,false), StructField(C_LAST_MOD,TimestampType,false), value#54, Some(UTC)) AS v#56]

: +- Project [cast(value#41 as string) AS value#54]

: +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider3f507373, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable7b6736a4, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee61b, [key#40, value#41, topic#42, partition#43, offset#44L, timestamp#45, timestampType#46], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> C, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#33, value#34, topic#35, partition#36, offset#37L, timestamp#38, timestampType#39]

+- EventTimeWatermark D_LAST_MOD#88: timestamp, 30 seconds

+- Project [v#85.D_ID AS D_ID#87, v#85.D_LAST_MOD AS D_LAST_MOD#88]

+- Project [from_json(StructField(D_ID,StringType,false), StructField(D_LAST_MOD,TimestampType,false), value#83, Some(UTC)) AS v#85]

+- Project [cast(value#70 as string) AS value#83]

+- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider2b90e779, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable36f8cd29, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee620, [key#69, value#70, topic#71, partition#72, offset#73L, timestamp#74, timestampType#75], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> D, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#62, value#63, topic#64, partition#65, offset#66L, timestamp#67, timestampType#68]

```

and we can find the late inputs from the batch 4 as follows:

which shows that intermediate inputs are being lost, resulting in a correctness issue.

Closes #28607 from HeartSaVioR/SPARK-24634-v3.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to make the Guava version configurable from the command line for sbt.

### Why are the changes needed?

#28455 added the configurability for Maven but not for sbt.

sbt is usually faster than Maven so it's useful for developers.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed the guava version is changed with the following commands.

```

$ build/sbt "inspect tree clean" | grep guava

[info] +-spark/*:dependencyOverrides = Set(com.google.guava:guava:14.0.1, xerces:xercesImpl:2.12.0, jline:jline:2.14.6, org.apache.avro:avro:1.8.2)

```

```

$ build/sbt -Dguava.version=25.0-jre "inspect tree clean" | grep guava

[info] +-spark/*:dependencyOverrides = Set(com.google.guava:guava:25.0-jre, xerces:xercesImpl:2.12.0, jline:jline:2.14.6, org.apache.avro:avro:1.8.2)

```

Closes #28822 from sarutak/guava-version-for-sbt.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

When `org.apache.spark.sql.hive.thriftserver.HiveThriftServer2#startWithContext` is called,

it starts `ThriftCLIService` in the background in a new Thread. If we call `ThriftCLIService.getPortNumber` at the same time, we might not get the bound port if the port is configured as 0.

This PR moves the TServer/HttpServer initialization code out of that new Thread.
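
The pattern of initializing the server outside the background thread can be sketched with a plain socket. This is an analogy in Python, not the Thrift code: the `Service` class and its methods are hypothetical. The point is that binding happens in the caller's thread, so the OS-assigned port is known before the background thread even starts.

```python
import socket
import threading


class Service:
    """Hypothetical server wrapper; not the ThriftCLIService API."""

    def __init__(self, port=0):
        # Bind in the caller's thread so the OS-assigned port is known
        # as soon as the constructor returns.
        self._sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self._sock.bind(("127.0.0.1", port))
        self._sock.listen()
        self._sock.settimeout(0.1)
        self._stopped = threading.Event()
        self._thread = None

    @property
    def port_number(self):
        return self._sock.getsockname()[1]

    def start(self):
        # Only the blocking accept loop runs in the background.
        self._thread = threading.Thread(target=self._serve, daemon=True)
        self._thread.start()

    def _serve(self):
        while not self._stopped.is_set():
            try:
                conn, _ = self._sock.accept()
            except socket.timeout:
                continue  # wake up periodically to check the stop flag
            except OSError:
                break
            conn.close()

    def stop(self):
        self._stopped.set()
        if self._thread is not None:
            self._thread.join()
        self._sock.close()


service = Service(port=0)
service.start()
bound_port = service.port_number  # the real port, even though 0 was requested
service.stop()
print(bound_port)
```

If the bind were done inside `_serve` instead, a caller reading the port right after `start()` could race with the background thread and see an unbound socket.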

### Why are the changes needed?

Fix concurrency issue, improve test robustness.

### Does this PR introduce _any_ user-facing change?

NO

### How was this patch tested?

add new tests

Closes #28751 from yaooqinn/SPARK-31926.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add instance weight support in LogisticRegressionSummary

### Why are the changes needed?

LogisticRegression, MulticlassClassificationEvaluator and BinaryClassificationEvaluator support instance weight. We should support instance weight in LogisticRegressionSummary too.
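
As a rough illustration of what instance weights mean for a summary metric, the sketch below computes accuracy with and without weights. It is plain Python with a hypothetical `weighted_accuracy` helper, not the code in `LogisticRegressionSummary`.

```python
def weighted_accuracy(rows):
    """rows: list of (prediction, label, weight) tuples."""
    total = sum(w for _, _, w in rows)
    correct = sum(w for pred, lab, w in rows if pred == lab)
    return correct / total


rows = [(1, 1, 2.0), (0, 1, 1.0), (0, 0, 0.5), (1, 0, 0.5)]
unweighted = weighted_accuracy([(pred, lab, 1.0) for pred, lab, _ in rows])
weighted = weighted_accuracy(rows)
print(unweighted, weighted)
```

With unit weights two of four rows are correct (0.5); with the given weights the correct rows carry 2.5 of 4.0 total weight (0.625), so a summary that ignores weights would disagree with a weighted evaluator.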

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Add new tests

Closes #28657 from huaxingao/weighted_summary.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR mainly adds two things.

1. Real headless browser support for UI test

2. A test suite using headless Chrome as one instance of those browsers.

Also, for environments where Chrome and Chrome driver are not installed, the `ChromeUITest` tag is added to filter out the test suite.

By default, test suites tagged with `ChromeUITest` are disabled.

### Why are the changes needed?

In the current master, there are two problems with UI tests.

1. Lots of tests, especially JavaScript-related ones, are done manually.

Appearance is better confirmed by eye, but logic should ideally be covered by test cases.

2. Compared to real web browsers, HtmlUnit doesn't seem to support JavaScript well enough.

I previously added a JavaScript-related test for SPARK-31534 using HtmlUnit, a simple library-based headless browser for testing.

The test I added works, but some JavaScript-related errors are shown in unit-tests.log.

```

======= EXCEPTION START ========

Exception class=[net.sourceforge.htmlunit.corejs.javascript.JavaScriptException]

com.gargoylesoftware.htmlunit.ScriptException: Error: TOOLTIP: Option "sanitizeFn" provided type "window" but expected type "(null|function)". (http://192.168.1.209:60724/static/jquery-3.4.1.min.js#2)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine$HtmlUnitContextAction.run(JavaScriptEngine.java:904)

at net.sourceforge.htmlunit.corejs.javascript.Context.call(Context.java:628)

at net.sourceforge.htmlunit.corejs.javascript.ContextFactory.call(ContextFactory.java:515)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine.callFunction(JavaScriptEngine.java:835)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine.callFunction(JavaScriptEngine.java:807)

at com.gargoylesoftware.htmlunit.InteractivePage.executeJavaScriptFunctionIfPossible(InteractivePage.java:216)

at com.gargoylesoftware.htmlunit.javascript.background.JavaScriptFunctionJob.runJavaScript(JavaScriptFunctionJob.java:52)

at com.gargoylesoftware.htmlunit.javascript.background.JavaScriptExecutionJob.run(JavaScriptExecutionJob.java:102)

at com.gargoylesoftware.htmlunit.javascript.background.JavaScriptJobManagerImpl.runSingleJob(JavaScriptJobManagerImpl.java:426)

at com.gargoylesoftware.htmlunit.javascript.background.DefaultJavaScriptExecutor.run(DefaultJavaScriptExecutor.java:157)

at java.lang.Thread.run(Thread.java:748)

Caused by: net.sourceforge.htmlunit.corejs.javascript.JavaScriptException: Error: TOOLTIP: Option "sanitizeFn" provided type "window" but expected type "(null|function)". (http://192.168.1.209:60724/static/jquery-3.4.1.min.js#2)

at net.sourceforge.htmlunit.corejs.javascript.Interpreter.interpretLoop(Interpreter.java:1009)

at net.sourceforge.htmlunit.corejs.javascript.Interpreter.interpret(Interpreter.java:800)

at net.sourceforge.htmlunit.corejs.javascript.InterpretedFunction.call(InterpretedFunction.java:105)

at net.sourceforge.htmlunit.corejs.javascript.ContextFactory.doTopCall(ContextFactory.java:413)

at com.gargoylesoftware.htmlunit.javascript.HtmlUnitContextFactory.doTopCall(HtmlUnitContextFactory.java:252)

at net.sourceforge.htmlunit.corejs.javascript.ScriptRuntime.doTopCall(ScriptRuntime.java:3264)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine$4.doRun(JavaScriptEngine.java:828)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine$HtmlUnitContextAction.run(JavaScriptEngine.java:889)

... 10 more

JavaScriptException value = Error: TOOLTIP: Option "sanitizeFn" provided type "window" but expected type "(null|function)".

== CALLING JAVASCRIPT ==

function () {

throw e;

}

======= EXCEPTION END ========

```

I tried to upgrade HtmlUnit to 2.40.0, but that made things worse: the test stopped working, even though the same page works without error on real browsers like Chrome, Safari and Firefox.

```

[info] UISeleniumSuite:

[info] - SPARK-31534: text for tooltip should be escaped *** FAILED *** (17 seconds, 745 milliseconds)

[info] The code passed to eventually never returned normally. Attempted 2 times over 12.910785232 seconds. Last failure message: com.gargoylesoftware.htmlunit.ScriptException: ReferenceError: Assignment to undefined "regeneratorRuntime" in strict mode (http://192.168.1.209:62132/static/vis-timeline-graph2d.min.js#52(Function)#1)

```

To resolve those problems, it's better to support headless browser for UI test.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I tested with following patterns. Both Chrome and Chrome driver should be installed to test.

1. sbt / with default excluded tags (ChromeUISeleniumSuite is expected to be skipped and SQLQueryTestSuite is expected to succeed)

`build/sbt -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver "testOnly org.apache.spark.ui.ChromeUISeleniumSuite org.apache.spark.sql.SQLQueryTestSuite"`

2. sbt / overwrite default excluded tags as empty string (Both suites are expected to succeed)

`build/sbt -Dtest.default.exclude.tags= -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver "testOnly org.apache.spark.ui.ChromeUISeleniumSuite org.apache.spark.sql.SQLQueryTestSuite"`

3. sbt / set `test.exclude.tags` to `org.apache.spark.tags.ExtendedSQLTest` (Both suites are expected to be skipped)

`build/sbt -Dtest.exclude.tags=org.apache.spark.tags.ExtendedSQLTest -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver "testOnly org.apache.spark.ui.ChromeUISeleniumSuite org.apache.spark.sql.SQLQueryTestSuite"`

4. Maven / with default excluded tags (ChromeUISeleniumSuite is expected to be skipped and SQLQueryTestSuite is expected to succeed)

`build/mvn -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver -Dtest=none -DwildcardSuites=org.apache.spark.ui.ChromeUISeleniumSuite,org.apache.spark.sql.SQLQueryTestSuite test`

5. Maven / overwrite default excluded tags as empty string (Both suites are expected to succeed)

`build/mvn -Dtest.default.exclude.tags= -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver -Dtest=none -DwildcardSuites=org.apache.spark.ui.ChromeUISeleniumSuite,org.apache.spark.sql.SQLQueryTestSuite test`

6. Maven / set `test.exclude.tags` to `org.apache.spark.tags.ExtendedSQLTest` (Both suites are expected to be skipped)

`build/mvn -Dtest.exclude.tags=org.apache.spark.tags.ExtendedSQLTest -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver -Dtest=none -DwildcardSuites=org.apache.spark.ui.ChromeUISeleniumSuite,org.apache.spark.sql.SQLQueryTestSuite test`
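
The six expected outcomes above are consistent with a simple rule: a suite is skipped when any of its tags appears in either `test.default.exclude.tags` or `test.exclude.tags`. The sketch below models that rule in plain Python; it is not the build logic itself. The fully qualified `ChromeUITest` tag name, the suite-to-tag mapping and the helper names are assumptions made for illustration.

```python
CHROME_UI_TEST = "org.apache.spark.tags.ChromeUITest"  # assumed full name
EXTENDED_SQL_TEST = "org.apache.spark.tags.ExtendedSQLTest"

# Suite -> tags, as implied by the expected results listed above.
SUITE_TAGS = {
    "ChromeUISeleniumSuite": {CHROME_UI_TEST},
    "SQLQueryTestSuite": {EXTENDED_SQL_TEST},
}


def parse_tags(value):
    return {tag.strip() for tag in value.split(",") if tag.strip()}


def suites_to_run(default_exclude=CHROME_UI_TEST, exclude=""):
    # Assumption: the two properties are combined as a union.
    excluded = parse_tags(default_exclude) | parse_tags(exclude)
    return sorted(
        suite for suite, tags in SUITE_TAGS.items() if not (tags & excluded)
    )


print(suites_to_run())                           # default excluded tags
print(suites_to_run(default_exclude=""))         # defaults overwritten as empty
print(suites_to_run(exclude=EXTENDED_SQL_TEST))  # extra exclusion on top
```

The three calls correspond to patterns 1/4, 2/5 and 3/6 respectively.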

Closes #28627 from sarutak/real-headless-browser-support-take2.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade `commons-io` from 2.4 to 2.5 for Apache Spark 3.1.

### Why are the changes needed?

Since Hadoop 3.1, `commons-io` 2.5 is used.

- https://issues.apache.org/jira/browse/HADOOP-15261

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with Hadoop-3.2 profile.

Maven dependency is verified via `test-dependencies.sh` automatically. SBT dependency can be verified like the following manually.

```

build/sbt -Phadoop-3.2 "core/dependencyTree" | grep commons-io:commons-io | head -n1

[info] | | +-commons-io:commons-io:2.5

```

Closes #28665 from dongjoon-hyun/SPARK-31858.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Implement an abstract Selector and move the code common to ```ANOVASelector```, ```ChiSqSelector```, ```FValueSelector``` and ```VarianceThresholdSelector``` into it.
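
The shape of such a refactoring can be sketched with a small template-method example. It is written in Python for brevity and is not the Spark code: the `score` hook, the top-k selection rule and the two toy subclasses are assumptions, and the toy scoring rules are not the ANOVA, chi-squared or F-value tests.

```python
from abc import ABC, abstractmethod


class Selector(ABC):
    """Hypothetical base class holding the shared top-k selection logic."""

    def __init__(self, num_top_features):
        self.num_top_features = num_top_features

    @abstractmethod
    def score(self, column, labels):
        """Return a score for one feature column; higher means keep."""

    def fit(self, columns, labels):
        scores = [(self.score(col, labels), i) for i, col in enumerate(columns)]
        # Highest score first; ties broken by the lower feature index.
        top = sorted(scores, key=lambda s: (-s[0], s[1]))[: self.num_top_features]
        return sorted(i for _, i in top)


class VarianceSelector(Selector):
    """Toy scorer: population variance of the column."""

    def score(self, column, labels):
        mean = sum(column) / len(column)
        return sum((x - mean) ** 2 for x in column) / len(column)


class LabelAgreementSelector(Selector):
    """Toy scorer: number of rows where the feature equals the label."""

    def score(self, column, labels):
        return sum(1 for x, y in zip(column, labels) if x == y)


columns = [[1, 1, 1, 1], [0, 1, 0, 1], [0, 5, 0, 5]]
labels = [0, 1, 0, 1]
by_variance = VarianceSelector(num_top_features=2).fit(columns, labels)
by_agreement = LabelAgreementSelector(num_top_features=1).fit(columns, labels)
print(by_variance, by_agreement)
```

Each concrete selector only defines how a feature is scored; ranking and index bookkeeping live once in the base class.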

### Why are the changes needed?

code reuse

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests

Closes #27978 from huaxingao/spark-31127.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

This PR aims to upgrade Genjavadoc to 0.16.

### Why are the changes needed?

Although we skipped Scala 2.12.11, this brings 2.12.11 official support and better 2.12.12 compatibility.

- https://github.com/lightbend/genjavadoc/commits/v0.16

### Does this PR introduce any user-facing change?

No. (The generated doc is the same)

### How was this patch tested?

Build with 0.15 and 0.16.

```

$ SKIP_PYTHONDOC=1 SKIP_RDOC=1 SKIP_SQLDOC=1 jekyll build

```

Compare the result. The generated doc is identical.

```

$ diff -r _site_0.15 _site_0.16 | grep -v '^diff -r' | grep -v 'Generated by javadoc' | sort | uniq

---

5c5

```

Closes #28321 from dongjoon-hyun/SPARK-31547.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

Based on the discussion on the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance costs are relatively small.

- functions.toDegrees/toRadians

- functions.approxCountDistinct

- functions.monotonicallyIncreasingId

- Column.!==

- Dataset.explode

- Dataset.registerTempTable

- SQLContext.getOrCreate, setActive, clearActive, constructors

The following APIs were also removed in the original PR (https://issues.apache.org/jira/browse/SPARK-25908) but are not added back in this PR:

- Remove some AccumulableInfo .apply() methods

- Remove non-label-specific multiclass precision/recall/fScore in favor of accuracy

- Remove unused Python StorageLevel constants

- Remove unused multiclass option in libsvm parsing

- Remove references to deprecated spark configs like spark.yarn.am.port

- Remove TaskContext.isRunningLocally

- Remove ShuffleMetrics.shuffle* methods

- Remove BaseReadWrite.context in favor of session

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

Added a new test suite for these APIs.

Author: gatorsmile <gatorsmile@gmail.com>

Author: yi.wu <yi.wu@databricks.com>

Closes #27821 from gatorsmile/addAPIBackV2.

### What changes were proposed in this pull request?

Based on the discussion on the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance costs are relatively small.

- HiveContext

- createExternalTable APIs

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

add a new test suite for createExternalTable APIs.

Closes #27815 from gatorsmile/addAPIsBack.

Lead-authored-by: gatorsmile <gatorsmile@gmail.com>

Co-authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Add the Avro dependency in SparkBuild

### Why are the changes needed?

Fix the sbt unidoc failure, as seen in https://github.com/apache/spark/pull/28017#issuecomment-603828597

```scala

[warn] Multiple main classes detected. Run 'show discoveredMainClasses' to see the list

[warn] Multiple main classes detected. Run 'show discoveredMainClasses' to see the list

[info] Main Scala API documentation to /home/jenkins/workspace/SparkPullRequestBuilder6/target/scala-2.12/unidoc...

[info] Main Java API documentation to /home/jenkins/workspace/SparkPullRequestBuilder6/target/javaunidoc...

[error] /home/jenkins/workspace/SparkPullRequestBuilder6/core/src/main/scala/org/apache/spark/serializer/GenericAvroSerializer.scala:123: value createDatumWriter is not a member of org.apache.avro.generic.GenericData

[error] writerCache.getOrElseUpdate(schema, GenericData.get.createDatumWriter(schema))

[error] ^

[info] No documentation generated with unsuccessful compiler run

[error] one error found

```

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

pass jenkins

and verify manually with `sbt dependencyTree`

```scala

kentyaohulk ~/spark dep build/sbt dependencyTree | grep avro | grep -v Resolving

[info] +-org.apache.avro:avro-mapred:1.8.2

[info] | +-org.apache.avro:avro-ipc:1.8.2

[info] | | +-org.apache.avro:avro:1.8.2

[info] +-org.apache.avro:avro:1.8.2

[info] | | +-org.apache.avro:avro:1.8.2

[info] org.apache.spark:spark-avro_2.12:3.1.0-SNAPSHOT [S]

[info] | | | +-org.apache.avro:avro-mapred:1.8.2

[info] | | | | +-org.apache.avro:avro-ipc:1.8.2

[info] | | | | | +-org.apache.avro:avro:1.8.2

[info] | | | +-org.apache.avro:avro:1.8.2

[info] | | | | | +-org.apache.avro:avro:1.8.2

```

Closes #28020 from yaooqinn/dep.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`KafkaDelegationTokenSuite` has been ignored because it showed flaky behaviour. In this PR I've changed how the test is executed and turned it on again. This PR contains the following:

* The test runs in a separate JVM in order to avoid a modified security context

* The body of the test runs in `testRetry`, which retries if it fails

* Additional logs to analyse possible failures

* Enhanced clean-up code
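
The retry part of the list above can be sketched generically. The helper below is a hypothetical Python analogue, not Spark's `testRetry`: its name, signature and default of two attempts are assumptions.

```python
def run_with_retry(body, max_attempts=2):
    """Run body(attempt); on failure, rerun it up to max_attempts times in total."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return body(attempt)
        except Exception as error:
            last_error = error  # remember the failure and try again
    raise last_error


calls = []


def flaky(attempt):
    calls.append(attempt)
    if attempt == 1:
        raise RuntimeError("transient failure")
    return "ok"


result = run_with_retry(flaky)
print(result, calls)
```

A retry wrapper hides transient failures, which is why the PR pairs it with additional logs: the first failure still needs to be diagnosable.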

### Why are the changes needed?

`KafkaDelegationTokenSuite` is ignored.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Executed the test in loop 1k+ times in jenkins (locally much harder to reproduce).

Closes #27877 from gaborgsomogyi/SPARK-30541.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…a sbt on Intellij IDEA.

### What changes were proposed in this pull request?

Read the Maven profiles to enable while importing into IntelliJ IDEA via sbt from the Java property `sbt.maven.profiles`.
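
A minimal sketch of this kind of lookup is shown below, in Python rather than the sbt build definition. Only the two names `SBT_MAVEN_PROFILES` and `sbt.maven.profiles` come from this PR; the precedence between them, the separators accepted and the tolerance for a leading `-P` are assumptions of the sketch.

```python
import re


def maven_profiles(env, props):
    """Resolve the profile list; env and props are plain dicts in this sketch."""
    # Assumption: the environment variable, when set, takes precedence.
    raw = env.get("SBT_MAVEN_PROFILES") or props.get("sbt.maven.profiles")
    if not raw:
        return []
    # Assumption: profiles are separated by commas or whitespace, and a
    # leading "-P" on each entry is tolerated.
    parts = [part for part in re.split(r"[\s,]+", raw) if part]
    return [part[2:] if part.startswith("-P") else part for part in parts]


print(maven_profiles({}, {"sbt.maven.profiles": "hive,yarn"}))
print(maven_profiles({"SBT_MAVEN_PROFILES": "-Phive -Pyarn"}, {}))
print(maven_profiles({}, {}))
```

Reading a JVM property lets the IDE pass the profiles through its sbt launcher settings without touching the operating system environment.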

### Why are the changes needed?

Without this change, one needs to set an OS-wide environment variable, `SBT_MAVEN_PROFILES`. On macOS this is even trickier (I have not figured out what can be done).

### Does this PR introduce any user-facing change?

None

### How was this patch tested?

Manually tested by applying multiple profiles or a single profile.

Please see the attached images to see the steps.

<img width="802" alt="Screenshot 2020-03-11 at 4 09 57 PM" src="https://user-images.githubusercontent.com/992952/76411667-46223280-63b8-11ea-9a77-dc014b66d48b.png">

<img width="867" alt="Screenshot 2020-03-11 at 4 18 09 PM" src="https://user-images.githubusercontent.com/992952/76411676-4ae6e680-63b8-11ea-895d-ed9d6cc223c5.png">

Closes #27878 from ScrapCodes/SPARK-31120/idea-load-maven-profiles.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR manually reverts the changes in #25292 and then wraps `java.lang.Error` in `QueryExecutionException` so that `QueryExecutionListener` is still notified: the wrapped error is passed to `QueryExecutionListener.onFailure`, which only accepts `Exception`.

The bug fix PR for 2.4 is #27904. It needs a separate PR because the affected code has changed a lot.
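
The wrapping approach can be illustrated with a Python analogy, where `BaseException` subclasses that are not `Exception` play the role of `java.lang.Error`. Everything in the sketch is hypothetical (the `Listener` class, `run_with_listener`, `FatalError`); only the idea of wrapping a fatal error so that an `Exception`-only callback can receive it comes from this PR.

```python
class FatalError(BaseException):
    """Plays the role of java.lang.Error in this sketch."""


class QueryExecutionException(Exception):
    """Stand-in for the wrapper exception; keeps the original cause."""

    def __init__(self, cause):
        super().__init__(str(cause))
        self.cause = cause


class Listener:
    def __init__(self):
        self.failures = []

    def on_failure(self, name, error):
        # The callback contract only accepts Exception instances.
        assert isinstance(error, Exception)
        self.failures.append((name, error))


def run_with_listener(name, action, listener):
    try:
        return action()
    except Exception as error:
        listener.on_failure(name, error)
        raise
    except BaseException as error:
        # Fatal, non-Exception error: wrap it so the listener still sees it.
        listener.on_failure(name, QueryExecutionException(error))
        raise


def boom():
    raise FatalError("stack overflow")


listener = Listener()
try:
    run_with_listener("collect", boom, listener)
except FatalError:
    pass  # the original error still propagates to the caller
print(listener.failures)
```

The callback signature stays unchanged, and the original error remains reachable through the wrapper's cause.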

### Why are the changes needed?

Avoid API changes and fix a bug.

### Does this PR introduce any user-facing change?

Yes. Reverting an API change happening in 3.0. QueryExecutionListener APIs will be the same as 2.4.

### How was this patch tested?

The newly added test.

Closes #27907 from zsxwing/SPARK-31144.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR (SPARK-31130) aims to pin `Commons IO` version to `2.4` in SBT build like Maven build.

### Why are the changes needed?

[HADOOP-15261](https://issues.apache.org/jira/browse/HADOOP-15261) upgraded `commons-io` from 2.4 to 2.5 at Apache Hadoop 3.1.

In `Maven`, Apache Spark always uses `Commons IO 2.4` based on `pom.xml`.

```

$ git grep commons-io.version

pom.xml: <commons-io.version>2.4</commons-io.version>

pom.xml: <version>${commons-io.version}</version>

```

However, `SBT` chooses `2.5`.

**branch-3.0**

```

$ build/sbt -Phadoop-3.2 "core/dependencyTree" | grep commons-io:commons-io | head -n1

[info] | | +-commons-io:commons-io:2.5

```

**branch-2.4**

```

$ build/sbt -Phadoop-3.1 "core/dependencyTree" | grep commons-io:commons-io | head -n1

[info] | | +-commons-io:commons-io:2.5

```
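
What pinning does to the outcome can be modelled with a toy resolver. The sketch is plain Python and does not reproduce either build tool's resolution algorithm: it assumes that, when nothing is pinned, the highest requested version wins, which matches the output above but is not taken from this PR. The function names are hypothetical.

```python
def version_key(version):
    # "2.4" -> (2, 4); enough for the plain numeric versions used here.
    return tuple(int(part) for part in version.split("."))


def resolve(requested, overrides=None):
    """Pick one version per artifact from (artifact, version) requests."""
    overrides = overrides or {}
    resolved = {}
    for artifact, version in requested:
        if artifact in overrides:
            resolved[artifact] = overrides[artifact]  # pinned version wins
        elif artifact not in resolved or version_key(version) > version_key(
            resolved[artifact]
        ):
            resolved[artifact] = version  # assumption: highest version wins
    return resolved


requested = [
    ("commons-io:commons-io", "2.4"),  # requested by the build itself
    ("commons-io:commons-io", "2.5"),  # pulled in transitively
]
unpinned = resolve(requested)
pinned = resolve(requested, overrides={"commons-io:commons-io": "2.4"})
print(unpinned, pinned)
```

With the override in place the transitive `2.5` request no longer changes the result, which is the behaviour the pin is meant to guarantee.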

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with `[test-hadoop3.2]` (the default PR Builder is `SBT`) and manually do the following locally.

```

build/sbt -Phadoop-3.2 "core/dependencyTree" | grep commons-io:commons-io | head -n1

```

Closes #27886 from dongjoon-hyun/SPARK-31130.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

```ChiSqSelector``` depends on ```mllib.ChiSqSelectorModel``` for the selection logic. This PR removes that dependency.

### Why are the changes needed?

This PR is an intermediate PR that removes the ```ChiSqSelector``` dependency on ```mllib.ChiSqSelectorModel```. The next subtask will extract the common code between ```ChiSqSelector``` and ```FValueSelector``` and put it in an abstract ```Selector```.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New and existing tests

Closes #27841 from huaxingao/chisq.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to:

1. Explicitly include xml-apis. xml-apis is already part of xerces 2.12.0 (https://repo1.maven.org/maven2/xerces/xercesImpl/2.12.0/xercesImpl-2.12.0.pom). However, we're excluding it by setting `scope` to `test`. This seems to cause `spark-shell`, built from Maven, to fail.

It seems that xml-apis wasn't reached previously for some reason, but it appears to be required after the upgrade. Therefore, this PR proposes to include it.

2. Pin the `xerces` version in SBT as well. This dependency seems to be resolved differently from Maven.

Note that Hadoop 3 does not seem to require this, as they replaced xerces as of [HDFS-12221](https://issues.apache.org/jira/browse/HDFS-12221).

### Why are the changes needed?

To make `spark-shell` work from a Maven build, and to use the same xerces version.

### Does this PR introduce any user-facing change?

No, it's master only.

### How was this patch tested?

**1.**

```bash

./build/mvn -DskipTests -Psparkr -Phive clean package

./bin/spark-shell

```

Before:

```

Exception in thread "main" java.lang.NoClassDefFoundError: org/w3c/dom/ElementTraversal

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:763)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)

at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at org.apache.xerces.parsers.AbstractDOMParser.startDocument(Unknown Source)

at org.apache.xerces.xinclude.XIncludeHandler.startDocument(Unknown Source)

at org.apache.xerces.impl.dtd.XMLDTDValidator.startDocument(Unknown Source)

at org.apache.xerces.impl.XMLDocumentScannerImpl.startEntity(Unknown Source)

at org.apache.xerces.impl.XMLVersionDetector.startDocumentParsing(Unknown Source)

at org.apache.xerces.parsers.XML11Configuration.parse(Unknown Source)

at org.apache.xerces.parsers.XML11Configuration.parse(Unknown Source)

at org.apache.xerces.parsers.XMLParser.parse(Unknown Source)

at org.apache.xerces.parsers.DOMParser.parse(Unknown Source)

at org.apache.xerces.jaxp.DocumentBuilderImpl.parse(Unknown Source)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:150)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2482)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2470)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2541)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2494)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2407)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1143)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1115)

at org.apache.spark.deploy.SparkHadoopUtil$.org$apache$spark$deploy$SparkHadoopUtil$$appendS3AndSparkHadoopHiveConfigurations(SparkHadoopUtil.scala:456)

at org.apache.spark.deploy.SparkHadoopUtil$.newConfiguration(SparkHadoopUtil.scala:427)

at org.apache.spark.deploy.SparkSubmit.$anonfun$prepareSubmitEnvironment$2(SparkSubmit.scala:342)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:342)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:871)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1007)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1016)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: org.w3c.dom.ElementTraversal

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 42 more

```

After:

```
...
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.1.0-SNAPSHOT
      /_/

Using Scala version 2.12.10 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_202)
Type in expressions to have them evaluated.
Type :help for more information.

scala>
```

**2.**

```
./build/sbt dependencyTree -Phadoop-2.7 -Phive-2.3 -Phive-thriftserver -Phive
./build/sbt dependencyTree -Phadoop-3.2 -Phive-2.3 -Phive-thriftserver -Phive
```

Closes #27808 from HyukjinKwon/SPARK-30994.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Remove the cases for ```MissingTypesProblem```, ```InheritedNewAbstractMethodProblem```, ```DirectMissingMethodProblem``` and ```ReversedMissingMethodProblem```.

### Why are the changes needed?

After the changes, we don't have ```org.apache.spark.sql.sources.v2``` any more, so the only problem we can get is ```MissingClassProblem```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested

Closes #27731 from huaxingao/spark-28998-followup.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Remove a few unnecessary MiMa excludes that I found when auditing SQL binary-incompatible changes.

### Why are the changes needed?

These MiMa excludes are no longer required, so this PR removes them.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested

Closes #27729 from huaxingao/mima.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

https://issues.apache.org/jira/browse/SPARK-30928

Remove unnecessary MiMa excludes.

### Why are the changes needed?

When auditing binary-incompatible changes for 3.0, I found that several MiMa excludes are not necessary, so this PR removes them.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Ran `dev/mima` to check.

Closes #27696 from huaxingao/spark-mima.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

Fix for #27395

### What changes were proposed in this pull request?

The `allGather` method is added to the `BarrierTaskContext`. This method contains the same functionality as the `BarrierTaskContext.barrier` method; it blocks the task until all tasks make the call, at which time they may continue execution. In addition, the `allGather` method takes an input message. Upon returning from the `allGather` the task receives a list of all the messages sent by all the tasks that made the `allGather` call.

### Why are the changes needed?

There are many situations where having the tasks communicate in a synchronized way is useful. One simple example is if each task needs to start a server to serve requests from one another; first the tasks must find a free port (the result of which is undetermined beforehand) and then start making requests, but to do so they each must know the port chosen by the other task. An `allGather` method would allow them to inform each other of the port they will run on.
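The port-exchange scenario above can be imitated outside Spark with plain Python threads. The following is only a sketch of the all-gather semantics, not how Spark implements it: `run_tasks` is a hypothetical helper, `threading.Barrier` stands in for the barrier coordinator, and the port strings are made up.

```python
import threading


def run_tasks(messages):
    """Simulate an all-gather: each task contributes one message and
    receives the full list once every task has arrived."""
    n = len(messages)
    barrier = threading.Barrier(n)   # blocks until all n tasks call wait()
    gathered = [None] * n            # slot i holds the message of task i
    results = [None] * n             # what each task sees after the gather

    def task(task_id):
        gathered[task_id] = messages[task_id]  # publish this task's message
        barrier.wait()                         # wait for every other task
        results[task_id] = list(gathered)      # all tasks read the same list

    threads = [threading.Thread(target=task, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


ports = ["5001", "5002", "5003", "5004"]  # hypothetical ports picked by tasks
views = run_tasks(ports)
```

After the call, every simulated task holds the complete list of ports. Ordering the messages by task index is a choice of this sketch, not something the PR description promises for the real method.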

### Does this PR introduce any user-facing change?

Yes, a `BarrierTaskContext.allGather` method will be available through the Scala, Java, and Python APIs.

### How was this patch tested?

Most of the code path is already covered by tests for the `barrier` method, since this PR includes a refactor so that much of the code is shared by the `barrier` and `allGather` methods. However, a test is added to assert that an all-gather on each task's partition ID returns a list of every partition ID.

An example through the Python API:

```python
>>> from pyspark import BarrierTaskContext
>>>
>>> def f(iterator):
...     context = BarrierTaskContext.get()
...     return [context.allGather('{}'.format(context.partitionId()))]
...
>>> sc.parallelize(range(4), 4).barrier().mapPartitions(f).collect()[0]
[u'3', u'1', u'0', u'2']
```

Closes #27640 from sarthfrey/master.

Lead-authored-by: sarthfrey-db <sarth.frey@databricks.com>

Co-authored-by: sarthfrey <sarth.frey@gmail.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

- Add missing `since` annotation.

- Don't show classes under `org.apache.spark.sql.dynamicpruning` package in API docs.

- Fix the scope of `xxxExactNumeric` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes #27560 from xuanyuanking/SPARK-30809.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to throw an exception by default when a user calls the untyped UDF API (that is, `org.apache.spark.sql.functions.udf(AnyRef, DataType)`).

Users can still use it by setting `spark.sql.legacy.useUnTypedUdf.enabled` to `true`.

### Why are the changes needed?

According to #23498, since Spark 3.0 the untyped UDF returns the default value of the Java type if the input value is null. For example, with `val f = udf((x: Int) => x, IntegerType)`, `f($"x")` returns 0 in Spark 3.0 but null in Spark 2.4 when `x` is null. This behavior change arises because Spark 3.0 is built with Scala 2.12 by default.

As a result, this might silently change data and cause correctness issues if users still expect `null` in some cases. Thus, we should encourage users to use typed UDFs to avoid this problem.

### Does this PR introduce any user-facing change?

Yes. Users will now hit an exception when they use the untyped UDF API.

### How was this patch tested?

Added test and updated some tests.

Closes #27488 from Ngone51/spark_26580_followup.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: wuyi <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR tries the approach suggested in #26710 (comment) to fix the test.

### Why are the changes needed?

To make the tests pass.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Jenkins will test it first, and then the `spark-branch-3.0-test-sbt-hadoop-2.7-hive-2.3` job will test it out.

Closes #27513 from HyukjinKwon/test-SPARK-30756.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

(cherry picked from commit 8efe367a4e)

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Fix the scope of `Logging.initializeForcefully` so that it doesn't appear in subclasses' public methods. Right now, `sc.initializeForcefully(false, false)` is allowed to be called.

- Don't show classes under `org.apache.spark.internal` package in API docs.

- Add missing `since` annotation.

- Fix the scope of `ArrowUtils` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes #27528 from zsxwing/audit-ss-apis.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

We should also expose it in the documentation, as we marked it as an unstable API as of SPARK-30547.

Note that Javadoc -> Scaladoc does not seem to work, but fixing that is out of scope for this PR.

### Why are the changes needed?

To show the documentation of API.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually built the docs via `jekyll serve` under the `docs` directory.

Closes #27412 from HyukjinKwon/SPARK-30547.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

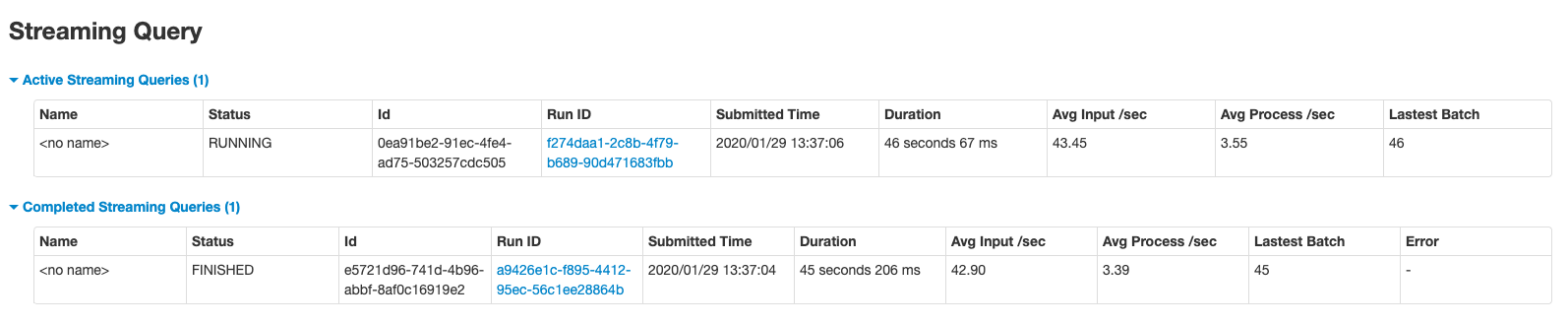

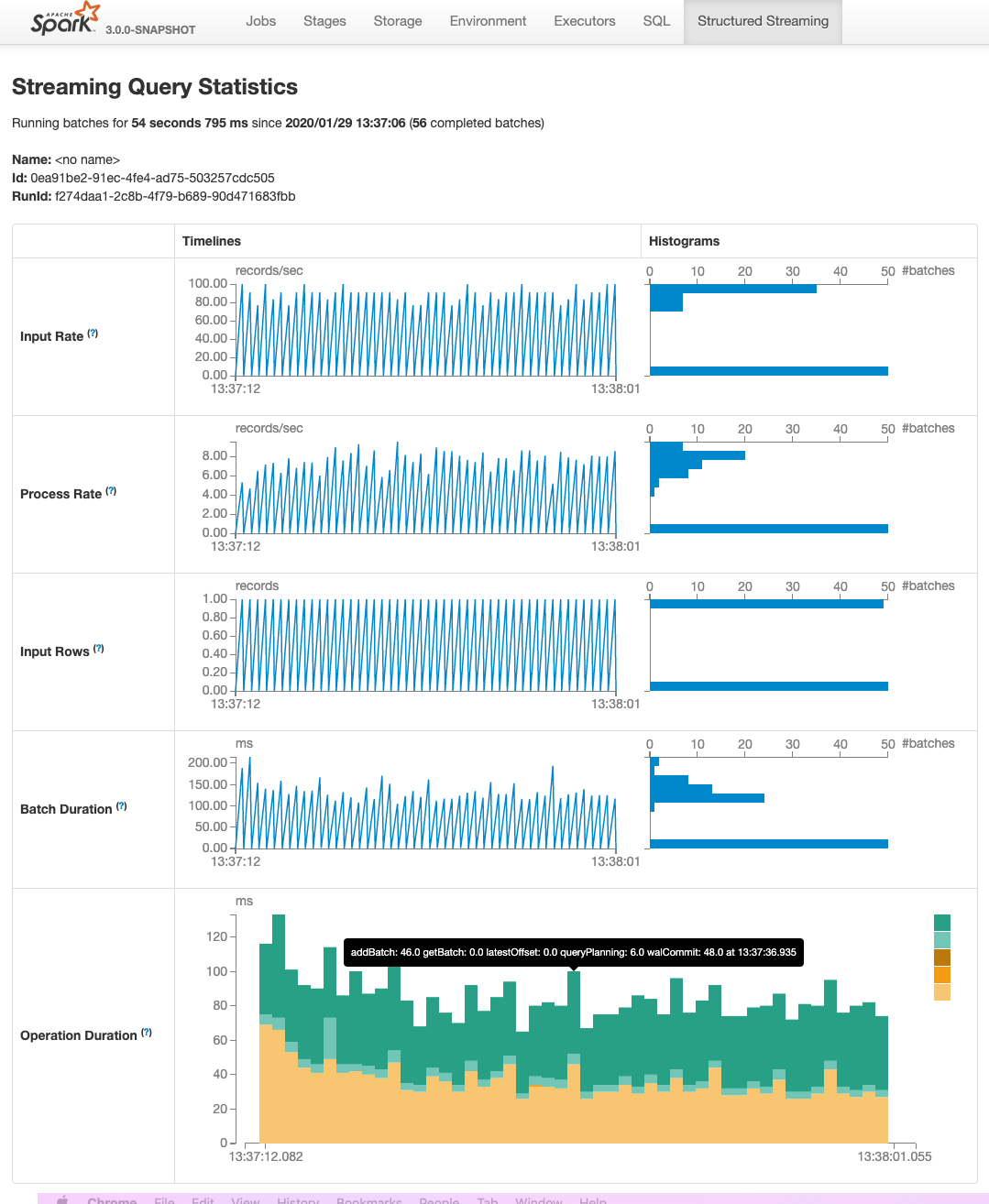

This PR adds two pages to Web UI for Structured Streaming:

- "/streamingquery": Streaming Query Page, providing some aggregate information for running/completed streaming queries.

- "/streamingquery/statistics": Streaming Query Statistics Page, providing detailed information for streaming query, including `Input Rate`, `Process Rate`, `Input Rows`, `Batch Duration` and `Operation Duration`

### Why are the changes needed?

It helps users better monitor Structured Streaming queries.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- newly added and existing UTs

- manual test

Closes #26201 from uncleGen/SPARK-29543.

Lead-authored-by: uncleGen <hustyugm@gmail.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Genmao Yu <hustyugm@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

Remove ```numTrees``` in GBT in 3.0.0.

### Why are the changes needed?

Currently, GBT has

```
/**
 * Number of trees in ensemble
 */
@Since("2.0.0")
val getNumTrees: Int = trees.length
```

and

```
/** Number of trees in ensemble */
val numTrees: Int = trees.length
```

I think we should remove one of them. We deprecated it in 2.4.5 via https://github.com/apache/spark/pull/27352.

### Does this PR introduce any user-facing change?

Yes, remove ```numTrees``` in GBT in 3.0.0

### How was this patch tested?

existing tests

Closes #27330 from huaxingao/spark-numTrees.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Upgrade the version of Genjavadoc from 0.14 to 0.15.

### Why are the changes needed?

To enable building with Scala 2.13.1.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

I confirmed there is no dependency error related to genjavadoc by manual build.

Also, I generated javadoc by `LANG=C build/sbt -Pkinesis-asl -Pyarn -Pkubernetes -Phive-thriftserver unidoc` for the code both with and without this change and ran `diff -r` on `target/javadoc`.

Closes #27255 from sarutak/upgrade-genjavadoc.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is the second PR for the Stage Level Scheduling. This is adding in the necessary executor side changes:

1) executors to know what ResourceProfile they should be using

2) handle parsing the resource profile settings - these are not in the global configs

3) then reporting back to the driver what resource profile it was started with.

This PR adds all the piping for YARN to pass the information all the way to executors, but it just uses the default ResourceProfile (which is the global application-level configs).

At a high level these changes include:

1) adding a new --resourceProfileId option to the CoarseGrainedExecutorBackend

2) Add the ResourceProfile settings to new internal confs that get passed into the Executor

3) Executor changes that use the resource profile id passed in to read the corresponding ResourceProfile confs and then parse those requests and discover resources as necessary

4) Executor registers with the Driver using the resource profile id so that the ExecutorMonitor can track how many executors with each profile are running

5) YARN side changes to show that passing the resource profile id and confs actually works. Just uses the DefaultResourceProfile for now.

I also removed a check from the CoarseGrainedExecutorBackend that used to check to make sure there were task requirements before parsing any custom resource executor requests. With the resource profiles this becomes much more expensive because we would then have to pass the task requests to each executor and the check was just a short cut and not really needed. It was much cleaner just to remove it.

Note that there were some changes to `ResourceProfile`, `ExecutorResourceRequests`, and `TaskResourceRequests` in this PR as well, because I discovered some issues with things not being immutable. The API now looks like:

```scala
val rpBuilder = new ResourceProfileBuilder()
val ereq = new ExecutorResourceRequests()
val treq = new TaskResourceRequests()

ereq.cores(2).memory("6g").memoryOverhead("2g").pysparkMemory("2g").resource("gpu", 2, "/home/tgraves/getGpus")
treq.cpus(2).resource("gpu", 2)

val resourceProfile = rpBuilder.require(ereq).require(treq).build
```

This makes it so that ResourceProfile is immutable and Spark can use it directly without worrying about the user changing it.
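The builder-plus-immutable-result idea can be illustrated independently of Spark. The Python sketch below uses hypothetical classes (`ProfileBuilder`, `Profile`) that are not part of the Spark API: the builder stays mutable, while `build` returns a frozen snapshot that later builder changes cannot affect.

```python
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class Profile:
    """Read-only snapshot of resource requirements (hypothetical class)."""
    executor_resources: MappingProxyType
    task_resources: MappingProxyType


class ProfileBuilder:
    """Mutable builder that accumulates requirements (hypothetical class)."""

    def __init__(self):
        self._executor = {}
        self._task = {}

    def require_executor(self, name, amount):
        self._executor[name] = amount
        return self

    def require_task(self, name, amount):
        self._task[name] = amount
        return self

    def build(self):
        # Copy the dicts so later builder mutations do not leak into the
        # profile, then wrap them in read-only views.
        return Profile(MappingProxyType(dict(self._executor)),
                       MappingProxyType(dict(self._task)))


builder = ProfileBuilder().require_executor("cores", 2).require_executor("gpu", 2)
builder.require_task("cpus", 2).require_task("gpu", 2)
profile = builder.build()
builder.require_executor("cores", 8)  # does not affect the built profile
```

Code that receives `profile` can rely on its contents staying fixed, which is the property the PR description asks of `ResourceProfile`.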

### Why are the changes needed?

These changes are needed for the executors to report which ResourceProfile they are using, so that ultimately the dynamic allocation manager can use that information to know how many executors with a given profile are running and how many more it needs to request. They are also needed to get the resource profile confs to the executor so that it can run the appropriate discovery script if needed.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit tests and manually on YARN.

Closes #26682 from tgravescs/SPARK-29306.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Make Regressors extend abstract class Regressor:

```AFTSurvivalRegression extends Estimator => extends Regressor```

```DecisionTreeRegressor extends Predictor => extends Regressor```

```FMRegressor extends Predictor => extends Regressor```

```GBTRegressor extends Predictor => extends Regressor```

```RandomForestRegressor extends Predictor => extends Regressor```

We will not make ```IsotonicRegression``` extend ```Regressor``` because it is tricky to handle both DoubleType and VectorType.

### Why are the changes needed?

Make class hierarchy consistent for all Regressors

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing tests

Closes #27168 from huaxingao/spark-30377.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Make ```MultilayerPerceptronClassificationModel``` extend ```MultilayerPerceptronParams```

### Why are the changes needed?

Make ```MultilayerPerceptronClassificationModel``` extend ```MultilayerPerceptronParams``` to expose the training params, so user can see these params when calling ```extractParamMap```

### Does this PR introduce any user-facing change?

Yes. The ```MultilayerPerceptronParams``` such as ```seed```, ```maxIter``` ... are available in ```MultilayerPerceptronClassificationModel``` now

### How was this patch tested?

Manually tested ```MultilayerPerceptronClassificationModel.extractParamMap()``` to verify all the new params are there.

Closes #26838 from huaxingao/spark-30144.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

1. Add new foreach-like methods: foreach/foreachNonZero

2. Add iterators: iterator/activeIterator/nonZeroIterator

### Why are the changes needed?

See the [ticket](https://issues.apache.org/jira/browse/SPARK-30329) for details.

foreach/foreachNonZero: for both convenience and performance (SparseVector.foreach should be faster than the current traversal method).

iterator/activeIterator/nonZeroIterator: add the three iterators so that in the future we can add or change some implementations based on them on both the ml and mllib sides, to avoid vector conversions.
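To make the difference between the three iterators concrete, here is a toy Python sketch. It is not the Spark implementation: `SparseVec` and its method names are hypothetical, and it assumes the usual sparse-vector reading in which "active" means explicitly stored (possibly zero) entries.

```python
class SparseVec:
    """Toy sparse vector: `indices` are stored positions, `values` their entries."""

    def __init__(self, size, indices, values):
        self.size = size
        self.indices = list(indices)
        self.values = list(values)

    def iterator(self):
        """Yield (index, value) for every position, filling gaps with 0.0."""
        stored = dict(zip(self.indices, self.values))
        for i in range(self.size):
            yield i, stored.get(i, 0.0)

    def active_iterator(self):
        """Yield only explicitly stored entries, including stored zeros."""
        return zip(self.indices, self.values)

    def non_zero_iterator(self):
        """Yield stored entries whose value is not zero."""
        return ((i, v) for i, v in self.active_iterator() if v != 0.0)

    def foreach_non_zero(self, f):
        """Apply f(index, value) to each non-zero entry."""
        for i, v in self.non_zero_iterator():
            f(i, v)


vec = SparseVec(5, [1, 3, 4], [2.0, 0.0, 7.0])
products = []
vec.foreach_non_zero(lambda i, v: products.append(i * v))
```

In this sketch only `iterator` touches all five positions; the other two visit at most the three stored entries, which is where a sparse traversal saves work.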

### Does this PR introduce any user-facing change?

Yes, new methods are added

### How was this patch tested?

Added test suites.

Closes #26982 from zhengruifeng/vector_iter.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

This reverts commit 709387d660.

See https://issues.apache.org/jira/browse/SPARK-27300?focusedCommentId=16990048&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16990048 and previous mailing list discussions.

### What changes were proposed in this pull request?

Revert the addition of skeleton graph API modules for Spark 3.0.

### Why are the changes needed?

It does not appear that content will be added to the module for Spark 3, so I propose avoiding committing to the modules, which are no-ops now, in the upcoming major 3.0 release.

### Does this PR introduce any user-facing change?

No, the modules were not released.

### How was this patch tested?

Existing tests, but mostly N/A.

Closes #26928 from srowen/Revert27300.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR proposes to exclude the Hive domain from Unidoc checking. We don't publish it as part of the Spark documentation (see also https://github.com/apache/spark/blob/master/docs/_plugins/copy_api_dirs.rb#L30), and most of it is a copy of the Hive Thrift server so that we can officially use the Hive 2.3 release.

It does not make much sense to check documentation generation for another domain that we do not publish in the documentation.

### Why are the changes needed?

To avoid unnecessary computation.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

By Jenkins:

```

========================================================================

Building Spark

========================================================================

[info] Building Spark using SBT with these arguments: -Phadoop-2.7 -Phive-2.3 -Phive -Pmesos -Pkubernetes -Phive-thriftserver -Phadoop-cloud -Pkinesis-asl -Pspark-ganglia-lgpl -Pyarn test:package streaming-kinesis-asl-assembly/assembly

...

========================================================================

Building Unidoc API Documentation

========================================================================

[info] Building Spark unidoc using SBT with these arguments: -Phadoop-2.7 -Phive-2.3 -Phive -Pmesos -Pkubernetes -Phive-thriftserver -Phadoop-cloud -Pkinesis-asl -Pspark-ganglia-lgpl -Pyarn unidoc

...

[info] Main Java API documentation successful.

...

[info] Main Scala API documentation successful.

```

Closes #26800 from HyukjinKwon/do-not-merge.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Observable metrics are named arbitrary aggregate functions that can be defined on a query (Dataframe). As soon as the execution of a Dataframe reaches a completion point (e.g. finishes batch query or reaches streaming epoch) a named event is emitted that contains the metrics for the data processed since the last completion point.

A user can observe these metrics by attaching a listener to the Spark session. Which listener to attach depends on the execution mode:

- Batch: `QueryExecutionListener`. This will be called when the query completes. A user can access the metrics by using the `QueryExecution.observedMetrics` map.

- (Micro-batch) Streaming: `StreamingQueryListener`. This will be called when the streaming query completes an epoch. A user can access the metrics by using the `StreamingQueryProgress.observedMetrics` map. Please note that we currently do not support continuous execution streaming.
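The mechanism can be pictured with a small pure-Python sketch. This is a conceptual illustration only, not the Spark API: `ObservedData`, the metric names, and the listener signature are all hypothetical. Named aggregates are updated as rows flow through, and one named event is handed to the listeners when the data reaches its completion point.

```python
class ObservedData:
    """Wrap an iterable and compute named aggregates as rows pass through."""

    def __init__(self, rows, name, aggregates, listeners):
        self.rows = rows
        self.name = name
        self.aggregates = aggregates  # metric name -> (initial, update function)
        self.listeners = listeners

    def __iter__(self):
        state = {k: init for k, (init, _) in self.aggregates.items()}
        for row in self.rows:
            for k, (_, update) in self.aggregates.items():
                state[k] = update(state[k], row)
            yield row
        # Completion point: emit one named event carrying the metrics.
        for listener in self.listeners:
            listener(self.name, dict(state))


events = []
observed = ObservedData(
    rows=[3, 8, 5],
    name="my_metrics",
    aggregates={
        "row_count": (0, lambda acc, row: acc + 1),
        "max_value": (float("-inf"), lambda acc, row: max(acc, row)),
    },
    listeners=[lambda name, metrics: events.append((name, metrics))],
)
result = [row * 2 for row in observed]  # the query itself is unaffected
```

The consumer of the data sees the same rows as before; the metrics arrive separately through the listener once iteration finishes.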

### Why are the changes needed?

This enables observable metrics.

### Does this PR introduce any user-facing change?

Yes. It adds the `observe` method to `Dataset`.

### How was this patch tested?

- Added unit tests for the `CollectMetrics` logical node to the `AnalysisSuite`.

- Added unit tests for `StreamingProgress` JSON serialization to the `StreamingQueryStatusAndProgressSuite`.

- Added integration tests for streaming to the `StreamingQueryListenerSuite`.

- Added integration tests for batch to the `DataFrameCallbackSuite`.

Closes #26127 from hvanhovell/SPARK-29348.

Authored-by: herman <herman@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR tries to fix flakiness in `HiveThriftServer2ListenerSuite` by using a dedicated JVM (after we switched to Hive 2.3 by default in the PR builders). As in 4a73bed318, there is no explicit evidence for this fix.

See https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/114653/testReport/org.apache.spark.sql.hive.thriftserver.ui/HiveThriftServer2ListenerSuite/_It_is_not_a_test_it_is_a_sbt_testing_SuiteSelector_/

```

sbt.ForkMain$ForkError: sbt.ForkMain$ForkError: java.lang.LinkageError: loader constraint violation: loader (instance of net/bytebuddy/dynamic/loading/MultipleParentClassLoader) previously initiated loading for a different type with name "org/apache/hive/service/ServiceStateChangeListener"

at org.mockito.codegen.HiveThriftServer2$MockitoMock$1974707245.<clinit>(Unknown Source)

at sun.reflect.GeneratedSerializationConstructorAccessor164.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.objenesis.instantiator.sun.SunReflectionFactoryInstantiator.newInstance(SunReflectionFactoryInstantiator.java:48)

at org.objenesis.ObjenesisBase.newInstance(ObjenesisBase.java:73)

at org.mockito.internal.creation.instance.ObjenesisInstantiator.newInstance(ObjenesisInstantiator.java:19)

at org.mockito.internal.creation.bytebuddy.SubclassByteBuddyMockMaker.createMock(SubclassByteBuddyMockMaker.java:47)

at org.mockito.internal.creation.bytebuddy.ByteBuddyMockMaker.createMock(ByteBuddyMockMaker.java:25)

at org.mockito.internal.util.MockUtil.createMock(MockUtil.java:35)

at org.mockito.internal.MockitoCore.mock(MockitoCore.java:62)

at org.mockito.Mockito.mock(Mockito.java:1908)

at org.mockito.Mockito.mock(Mockito.java:1880)

at org.apache.spark.sql.hive.thriftserver.ui.HiveThriftServer2ListenerSuite.createAppStatusStore(HiveThriftServer2ListenerSuite.scala:156)

at org.apache.spark.sql.hive.thriftserver.ui.HiveThriftServer2ListenerSuite.$anonfun$new$3(HiveThriftServer2ListenerSuite.scala:47)

at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85)

at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83)

at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104)

at org.scalatest.Transformer.apply(Transformer.scala:22)

at org.scalatest.Transformer.apply(Transformer.scala:20)

```

### Why are the changes needed?

To make test cases more robust.

### Does this PR introduce any user-facing change?

No (dev only).

### How was this patch tested?

Jenkins build.

Closes #26720 from shahidki31/mock.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Currently, the Apache Spark PR Builder uses `hive-1.2` for `hadoop-2.7` and `hive-2.3` for `hadoop-3.2`. This PR aims to support

- `[test-hive1.2]` in PR builder

- `[test-hive2.3]` in PR builder to be consistent and independent of the default profile

- After this PR, all PR builders will use Hive 2.3 by default (because Spark uses Hive 2.3 by default as of c98e5eb339)

- Use default profile in AppVeyor build.

Note that this was reverted due to an unexpected test failure in `ThriftServerPageSuite`, which was investigated in https://github.com/apache/spark/pull/26706 . This PR fixes it by letting the suite use its own forked JVM. There is no explicit evidence for this fix; it was just my speculation, but thankfully it did fix the failure.

### Why are the changes needed?

This new tag allows us more flexibility.

### Does this PR introduce any user-facing change?

No. (This is a dev-only change.)

### How was this patch tested?

Check the Jenkins triggers in this PR.

Default:

```

========================================================================

Building Spark

========================================================================

[info] Building Spark using SBT with these arguments: -Phadoop-2.7 -Phive-2.3 -Phive-thriftserver -Pmesos -Pspark-ganglia-lgpl -Phadoop-cloud -Phive -Pkubernetes -Pkinesis-asl -Pyarn test:package streaming-kinesis-asl-assembly/assembly

```

`[test-hive1.2][test-hadoop3.2]`:

```

========================================================================

Building Spark

========================================================================

[info] Building Spark using SBT with these arguments: -Phadoop-3.2 -Phive-1.2 -Phadoop-cloud -Pyarn -Pspark-ganglia-lgpl -Phive -Phive-thriftserver -Pmesos -Pkubernetes -Pkinesis-asl test:package streaming-kinesis-asl-assembly/assembly

```

`[test-maven][test-hive-2.3]`:

```

========================================================================

Building Spark

========================================================================

[info] Building Spark using Maven with these arguments: -Phadoop-2.7 -Phive-2.3 -Pspark-ganglia-lgpl -Pyarn -Phive -Phadoop-cloud -Pkinesis-asl -Pmesos -Pkubernetes -Phive-thriftserver clean package -DskipTests

```

Closes #26710 from HyukjinKwon/SPARK-29991.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Support `gaussian` as a `modelType`.

### Why are the changes needed?

The current model types do not support continuous data.
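For background, a Gaussian naive Bayes model handles continuous features by fitting a per-class mean and variance for each feature and scoring with the normal log-density. The pure-Python sketch below illustrates that idea under stated assumptions; it is not Spark's implementation, and the function names, the variance epsilon, and the toy data are all made up for illustration.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(rows, labels):
    """Return {label: (log_prior, means, variances)} from continuous features."""
    by_label = defaultdict(list)
    for row, label in zip(rows, labels):
        by_label[label].append(row)
    model = {}
    n = len(rows)
    for label, members in by_label.items():
        dims = len(members[0])
        means = [sum(r[d] for r in members) / len(members) for d in range(dims)]
        # A small epsilon keeps every variance strictly positive.
        variances = [
            sum((r[d] - means[d]) ** 2 for r in members) / len(members) + 1e-9
            for d in range(dims)
        ]
        model[label] = (math.log(len(members) / n), means, variances)
    return model


def predict(model, row):
    """Pick the label with the highest log prior plus Gaussian log-likelihood."""
    def score(params):
        log_prior, means, variances = params
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var)
            for x, mu, var in zip(row, means, variances)
        )
    return max(model, key=lambda label: score(model[label]))


rows = [[1.0, 2.1], [0.9, 1.9], [1.1, 2.0], [5.0, 8.1], [5.2, 7.9], [4.8, 8.0]]
labels = [0, 0, 0, 1, 1, 1]
model = fit_gaussian_nb(rows, labels)
```

Calling `predict(model, [1.0, 2.0])` on this toy data selects the class whose fitted Gaussians best explain the point, without any discretization of the features.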

### Does this PR introduce any user-facing change?

Yes, it adds a new `modelType` option.

### How was this patch tested?

Existing test suites and newly added ones.

Closes #26413 from zhengruifeng/gnb.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

This PR aims to add `io.netty.tryReflectionSetAccessible=true` to the testing configuration for JDK11 because this is an officially documented requirement of Apache Arrow.

Apache Arrow community documented this requirement at `0.15.0` ([ARROW-6206](https://github.com/apache/arrow/pull/5078)).

> #### For java 9 or later, should set "-Dio.netty.tryReflectionSetAccessible=true".

> This fixes `java.lang.UnsupportedOperationException: sun.misc.Unsafe or java.nio.DirectByteBuffer.<init>(long, int) not available`, thrown by netty.

### Why are the changes needed?

After ARROW-3191, the Arrow Java library requires the property `io.netty.tryReflectionSetAccessible` to be set to true for JDK >= 9. After https://github.com/apache/spark/pull/26133, the JDK11 Jenkins jobs seem to fail.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-3.2-jdk-11/676/

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-3.2-jdk-11/677/

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-3.2-jdk-11/678/

```scala

Previous exception in task:

sun.misc.Unsafe or java.nio.DirectByteBuffer.<init>(long, int) not available

io.netty.util.internal.PlatformDependent.directBuffer(PlatformDependent.java:473)

io.netty.buffer.NettyArrowBuf.getDirectBuffer(NettyArrowBuf.java:243)

io.netty.buffer.NettyArrowBuf.nioBuffer(NettyArrowBuf.java:233)

io.netty.buffer.ArrowBuf.nioBuffer(ArrowBuf.java:245)

org.apache.arrow.vector.ipc.message.ArrowRecordBatch.computeBodyLength(ArrowRecordBatch.java:222)

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with JDK11.

Closes #26552 from dongjoon-hyun/SPARK-ARROW-JDK11.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

SPARK-29397 added new interfaces for creating driver and executor plugins. These were added in a new, more isolated package that does not pollute the main o.a.s package.

The old interface is now redundant. Since it's a DeveloperApi and we're about to have a new major release, let's remove it instead of carrying more baggage forward.

Closes #26390 from vanzin/SPARK-29399.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>