### What changes were proposed in this pull request?

- Share a static ImmutableBitSet for `treePatternBits` in all object instances of AttributeReference.

- Share three static ImmutableBitSets for `treePatternBits` in three kinds of Literals.

- Add an ImmutableBitSet as a subclass of BitSet.
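The sharing idea in the list above can be sketched without Spark on the classpath. This is a minimal illustration, not the code from the PR: it builds on `java.util.BitSet` rather than Spark's own `BitSet`, and the object name `ImmutableBitSetSketch`, the bit index `3`, and the simplified `AttributeReference` are all assumptions made for the example.

```scala
import java.util.BitSet

object ImmutableBitSetSketch {
  // Read-only bit set: populated once in the constructor, then single-bit
  // mutation is rejected. Only set(Int) and clear(Int) are overridden here;
  // a complete version would also cover the range and bulk mutators.
  final class ImmutableBitSet(bitsToSet: Int*) extends BitSet {
    // Use the parent's implementation so the overrides below are bypassed.
    bitsToSet.foreach(i => super.set(i))

    override def set(bitIndex: Int): Unit =
      throw new UnsupportedOperationException("ImmutableBitSet is read-only")

    override def clear(bitIndex: Int): Unit =
      throw new UnsupportedOperationException("ImmutableBitSet is read-only")
  }

  // One shared instance for every leaf of this kind, instead of one per node.
  // The bit index 3 is a made-up pattern id.
  val AttributeReferenceBits: ImmutableBitSet = new ImmutableBitSet(3)

  final case class AttributeReference(name: String) {
    def treePatternBits: BitSet = AttributeReferenceBits
  }
}
```

Because the shared instance rejects mutation, handing the same object to every node is safe even if a caller tries to modify the bits it receives.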

### Why are the changes needed?

Reduce the additional memory usage caused by `treePatternBits`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32157 from sigmod/leaf.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Small UI update to highlight the number of tasks in progress in a stage/job instead of highlighting the whole in-progress stage/job. This was the behavior before Spark 3.1 and the Bootstrap 4 upgrade.

### Why are the changes needed?

To add back functionality lost between 3.0 and 3.1. This provides a great visual cue of how much of a stage/job is currently being run.

### Does this PR introduce _any_ user-facing change?

Small UI change.

Before:

After (and pre Spark 3.1):

### How was this patch tested?

Updated existing UT.

Closes #32214 from Kimahriman/progress-bar-started.

Authored-by: Adam Binford <adamq43@gmail.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

To prevent potential NullPointerExceptions, this PR changes the `LiveStage` constructor to take `info` as a constructor parameter and adds a null check in `AppStatusListener.activeStages`.

### Why are the changes needed?

`AppStatusListener.getOrCreateStage` would create a `LiveStage` object with the `info` field set to null and only afterwards set it to a specific `StageInfo` object. This can lead to a race condition when `liveStages` is read in between those two steps, which could then cause a NullPointerException in, for instance, `AppStatusListener.activeStages`.
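The shape of the fix can be sketched as follows. This is a simplified model under stated assumptions, not Spark's code: `StageInfo` and `LiveStage` are reduced to the one field that matters, a `TrieMap` keyed by stage id stands in for the listener's map, and the object name `LiveStageSketch` is hypothetical.

```scala
import scala.collection.concurrent.TrieMap

object LiveStageSketch {
  final case class StageInfo(stageId: Int, name: String)

  // `info` must be supplied at construction time, so a freshly created
  // LiveStage is never observable with a null `info`.
  final class LiveStage(var info: StageInfo)

  val liveStages: TrieMap[Int, LiveStage] = TrieMap.empty[Int, LiveStage]

  def getOrCreateStage(info: StageInfo): LiveStage =
    liveStages.getOrElseUpdate(info.stageId, new LiveStage(info))

  // Defensive null check on the reader side, mirroring the second half of the fix.
  def activeStages: Seq[StageInfo] =
    liveStages.values.toSeq.flatMap(stage => Option(stage.info))
}
```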

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Regular CI/CD tests

Closes #32233 from sander-goos/SPARK-35136-livestage.

Authored-by: Sander Goos <sander.goos@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The auto-generated RDD name in the Storage tab should be truncated to a single line if it is too long.

### Why are the changes needed?

To make the UI more readable.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

This is just a simple CSS modification; the manual test works well, as shown below:

Before the change:

After the change:

The title needs just one line:

Closes #32191 from kyoty/storage-rdd-titile-display-improve.

Authored-by: kyoty <echohlne@gmail.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

Make the attemptId in the History Server log easier to read.

### Why are the changes needed?

An `Option` variable in the Spark History Server log should be displayed as its actual value instead of `Some(XX)`.
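A small sketch of the difference, using a made-up log message (the message text and the object name `AttemptIdLogSketch` are assumptions for illustration, not the History Server's actual wording):

```scala
object AttemptIdLogSketch {
  // Interpolating the Option directly leaks the wrapper into the log line.
  def before(appId: String, attemptId: Option[String]): String =
    s"Finished parsing $appId/$attemptId"

  // Unwrap first; rendering an absent attempt id as an empty string is a
  // choice made for this sketch.
  def after(appId: String, attemptId: Option[String]): String =
    s"Finished parsing $appId/${attemptId.getOrElse("")}"
}
```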

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

manual test

Closes #32189 from kyoty/history-server-print-option-variable.

Authored-by: kyoty <echohlne@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Use Hadoop `FileSystem` instead of `FileInputStream`.

### Why are the changes needed?

Make `spark.scheduler.allocation.file` support remote files. When using Spark as a server (e.g. SparkThriftServer), it is hard for users to specify a local path for the scheduler pool file.

### Does this PR introduce _any_ user-facing change?

Yes, a minor feature.

### How was this patch tested?

Pass `core/src/test/scala/org/apache/spark/scheduler/PoolSuite.scala` and manual test.

After adding the config `spark.scheduler.allocation.file=hdfs:///tmp/fairscheduler.xml`, the configured pool is introduced.

Closes #32184 from ulysses-you/SPARK-35083.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

When counting the number of started fetch requests, we should exclude the deferred requests.

### Why are the changes needed?

Fix the wrong number in the log.

### Does this PR introduce _any_ user-facing change?

Yes, users see the correct number of started requests in logs.

### How was this patch tested?

Manually tested.

Closes #32180 from Ngone51/count-deferred-request.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: wuyi <yi.wu@databricks.com>

Signed-off-by: attilapiros <piros.attila.zsolt@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to replace Hadoop's `Path` with `Utils.resolveURI` to simplify how the URI is obtained in `SparkContext`.

### Why are the changes needed?

Keep the code simple.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32164 from sarutak/followup-SPARK-34225.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the current code, if we run Spark SQL with

```
./bin/spark-sql --verbose
```

the option is not passed through to `SparkSQLCliDriver`, so the `SessionState` never calls `setIsVerbose`.

However, the CLI options help shows

```
CLI options:
-v,--verbose Verbose mode (echo executed SQL to the
console)
```

This is inconsistent. This PR fixes the issue.

### Why are the changes needed?

Fix bug

### Does this PR introduce _any_ user-facing change?

When a user passes `-v` when running Spark SQL, the executed SQL is echoed to the console.

### How was this patch tested?

Added UT

Closes #32163 from AngersZhuuuu/SPARK-35086.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

Introduce a new test construct:

```
withHttpServer() { baseURL =>
  ...
}
```

which starts and stops a Jetty server to serve files via HTTP.

Moreover this PR uses this new construct in the test `Run SparkRemoteFileTest using a remote data file`.
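The construct follows the loan pattern: start a server, lend its base URL to the test body, and always stop the server afterwards. A minimal sketch of that pattern is shown below. Note the assumptions: it uses the JDK's built-in `com.sun.net.httpserver.HttpServer` instead of Jetty so that it runs without extra dependencies, it serves one fixed string for every path, and the `content` parameter, the `fetch` helper, and the object name are hypothetical.

```scala
import java.net.InetSocketAddress
import java.nio.charset.StandardCharsets
import com.sun.net.httpserver.{HttpExchange, HttpHandler, HttpServer}
import scala.io.Source

object HttpServerFixtureSketch {
  // Starts a server on a free local port, runs `body` with the base URL,
  // and stops the server even if `body` throws.
  def withHttpServer[T](content: String)(body: String => T): T = {
    val server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0)
    server.createContext("/", new HttpHandler {
      override def handle(exchange: HttpExchange): Unit = {
        val bytes = content.getBytes(StandardCharsets.UTF_8)
        exchange.sendResponseHeaders(200, bytes.length.toLong)
        val out = exchange.getResponseBody
        try out.write(bytes) finally out.close()
      }
    })
    server.start()
    try body(s"http://127.0.0.1:${server.getAddress.getPort}")
    finally server.stop(0)
  }

  // Small helper for the usage example: download a URL as a string.
  def fetch(url: String): String = {
    val source = Source.fromURL(url, "UTF-8")
    try source.mkString finally source.close()
  }
}
```

A test would then read its data from `s"$baseURL/some-file"` instead of a GitHub URL, so it depends only on files committed next to the test code.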

### Why are the changes needed?

Before this PR, GitHub URLs were used, such as "https://raw.githubusercontent.com/apache/spark/master/data/mllib/pagerank_data.txt".

This connects two Spark versions in an unhealthy way: it ties the "master" branch, which is a moving target, to the committed test code, which does not move (it might even be released).

As a result, a test running for an earlier version of Spark expects something (filename, content, path) from a later release and, what is worse, when the moving version changes, the earlier test breaks.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit test.

Closes #31935 from attilapiros/SPARK-34789.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Remove unused MapOutputTracker in BlockStoreShuffleReader

### Why are the changes needed?

Remove unused MapOutputTracker in BlockStoreShuffleReader

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #32148 from AngersZhuuuu/SPARK-35049.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>

### What changes were proposed in this pull request?

The main change of this PR is to extract a `private` method named `tryOrFetchFailedException` to eliminate duplicate code in `BufferReleasingInputStream`.

The duplicated code follows this pattern:

```
try {
  block
} catch {
  case e: IOException if detectCorruption =>
    IOUtils.closeQuietly(this)
    iterator.throwFetchFailedException(blockId, mapIndex, address, e)
}
```
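One way such a helper can look is sketched below. This is a self-contained stand-in, not the PR's code: `GuardedStream`, the locally defined `FetchFailedException`, and the `read`/`skip` signatures taking a function are assumptions made so the example runs without Spark.

```scala
import java.io.{Closeable, IOException}

object FetchFailureWrapperSketch {
  final class FetchFailedException(cause: Throwable)
    extends RuntimeException("fetch failed", cause)

  final class GuardedStream(detectCorruption: Boolean) extends Closeable {
    var closed = false
    override def close(): Unit = { closed = true }

    // The shared helper: each operation passes its body as a by-name block,
    // so the try/catch is written exactly once.
    private def tryOrFetchFailedException[T](block: => T): T =
      try block
      catch {
        case e: IOException if detectCorruption =>
          try close() catch { case _: IOException => () } // close quietly
          throw new FetchFailedException(e)
      }

    def read(op: () => Int): Int = tryOrFetchFailedException(op())
    def skip(op: () => Long): Long = tryOrFetchFailedException(op())
  }
}
```

The by-name parameter keeps call sites as short as the original `block`, while the guard condition and cleanup live in a single place.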

### Why are the changes needed?

Eliminate duplicate code.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Passes Jenkins or GitHub Actions.

Closes #32130 from LuciferYang/SPARK-35029.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR contains:

- TreeNode, QueryPlan, AnalysisHelper changes to allow the transform function family to stop earlier without traversing the entire tree;

- Example changes in a few rules to support such pruning, e.g., ReorderJoin and OptimizeIn.
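The pruning idea can be illustrated on a toy expression tree. The sketch below is an assumption-laden model rather than the PR's implementation: the `Expr` hierarchy, the pattern ids `LITERAL`, `ATTRIBUTE`, and `ADD`, and the method name `transformWithPruning` as used here are all defined locally for the example. Each node caches the union of the pattern bits in its subtree, and the transform skips any subtree whose bits show that the rule cannot match.

```scala
import java.util.BitSet

object TreePruningSketch {
  // Made-up pattern ids for this example.
  val LITERAL = 0
  val ATTRIBUTE = 1
  val ADD = 2

  sealed trait Expr {
    def children: Seq[Expr]
    def ownPattern: Int
    def withChildren(newChildren: Seq[Expr]): Expr

    // Bits of this node OR-ed with the bits of all descendants, computed once.
    lazy val treePatternBits: BitSet = {
      val bits = new BitSet()
      bits.set(ownPattern)
      children.foreach(c => bits.or(c.treePatternBits))
      bits
    }

    def containsPattern(pattern: Int): Boolean = treePatternBits.get(pattern)

    // Top-down transform that returns early when `cond` rules out the subtree.
    def transformWithPruning(cond: Expr => Boolean)(rule: PartialFunction[Expr, Expr]): Expr =
      if (!cond(this)) this
      else {
        val afterRule = if (rule.isDefinedAt(this)) rule(this) else this
        afterRule.withChildren(afterRule.children.map(_.transformWithPruning(cond)(rule)))
      }
  }

  final case class Lit(value: Int) extends Expr {
    def children: Seq[Expr] = Nil
    def ownPattern: Int = LITERAL
    def withChildren(newChildren: Seq[Expr]): Expr = this
  }

  final case class Attr(name: String) extends Expr {
    def children: Seq[Expr] = Nil
    def ownPattern: Int = ATTRIBUTE
    def withChildren(newChildren: Seq[Expr]): Expr = this
  }

  final case class Add(left: Expr, right: Expr) extends Expr {
    def children: Seq[Expr] = Seq(left, right)
    def ownPattern: Int = ADD
    def withChildren(newChildren: Seq[Expr]): Expr = Add(newChildren(0), newChildren(1))
  }

  // A rule that only cares about Add nodes prunes every subtree without one.
  def foldConstants(e: Expr): Expr =
    e.transformWithPruning(_.containsPattern(ADD)) {
      case Add(Lit(a), Lit(b)) => Lit(a + b)
    }
}
```

For `Add(Attr("a"), Add(Lit(1), Lit(2)))`, the `Attr("a")` leaf is returned untouched because its bits contain no `ADD`, while the inner `Add` is folded to `Lit(3)`.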

Here is a [design doc](https://docs.google.com/document/d/1SEUhkbo8X-0cYAJFYFDQhxUnKJBz4lLn3u4xR2qfWqk) that elaborates the ideas and benchmark numbers.

### Why are the changes needed?

It's a framework-level change for reducing the query compilation time.

In particular, if we update existing rules and transform call sites as per the examples in this PR, the analysis time and query optimization time can be reduced as described in this [doc](https://docs.google.com/document/d/1SEUhkbo8X-0cYAJFYFDQhxUnKJBz4lLn3u4xR2qfWqk).

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

It is tested by existing tests.

Closes #32060 from sigmod/bits.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

IntelliJ IDEA flags line 425 in `MasterSuite` as an unused expression:

bfba7fadd2/core/src/test/scala/org/apache/spark/deploy/master/MasterSuite.scala (L421-L426)

If we merge lines 424 and 425 into one as:

```
System.getProperty("spark.ui.proxyBase") should startWith (s"$reverseProxyUrl/proxy/worker-")
```

this assertion will fail:

```
- master/worker web ui available behind front-end reverseProxy *** FAILED ***
The code passed to eventually never returned normally. Attempted 45 times over 5.091914027 seconds. Last failure message: "http://proxyhost:8080/path/to/spark" did not start with substring "http://proxyhost:8080/path/to/spark/proxy/worker-". (MasterSuite.scala:405)
```

`System.getProperty("spark.ui.proxyBase")` should be `reverseProxyUrl` because `Master#onStart` and `Worker#handleRegisterResponse` have not changed it.

So the main purpose of this PR is to fix the condition of this assertion.

### Why are the changes needed?

Bug fix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

- Passes Jenkins or GitHub Actions

- Manual test:

1. merge lines 424 and 425 in `MasterSuite` into one to eliminate the unused expression:

```
System.getProperty("spark.ui.proxyBase") should startWith (s"$reverseProxyUrl/proxy/worker-")
```

2. execute `mvn clean test -pl core -Dtest=none -DwildcardSuites=org.apache.spark.deploy.master.MasterSuite`

**Before**

```
- master/worker web ui available behind front-end reverseProxy *** FAILED ***
The code passed to eventually never returned normally. Attempted 45 times over 5.091914027 seconds. Last failure message: "http://proxyhost:8080/path/to/spark" did not start with substring "http://proxyhost:8080/path/to/spark/proxy/worker-". (MasterSuite.scala:405)
Run completed in 1 minute, 14 seconds.
Total number of tests run: 32
Suites: completed 2, aborted 0
Tests: succeeded 31, failed 1, canceled 0, ignored 0, pending 0
*** 1 TEST FAILED ***
```

**After**

```
Run completed in 1 minute, 11 seconds.
Total number of tests run: 32
Suites: completed 2, aborted 0
Tests: succeeded 32, failed 0, canceled 0, ignored 0, pending 0
All tests passed.
```

Closes #32105 from LuciferYang/SPARK-35004.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Close the SparkContext after the Main method has finished, to allow a SparkApplication on K8s to complete.

### Why are the changes needed?

If I don't call `sparkContext.stop()` explicitly, the Spark driver process doesn't terminate even after its Main method has completed. This behaviour differs from Spark on YARN, where stopping the SparkContext manually is not required. It looks like the problem is the use of non-daemon threads, which prevent the driver JVM process from terminating.

So I have inserted code that closes sparkContext automatically.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manually on the production AWS EKS environment in my company.

Closes #32081 from kotlovs/close-spark-context-on-exit.

Authored-by: skotlov <skotlov@joom.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR prevents re-registering the BlockManager when an Executor is shutting down. It is achieved by checking `executorShutdown` before calling `env.blockManager.reregister()`.
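The guard can be sketched as below. Only the `executorShutdown` flag and the idea of checking it before re-registering come from the description; the `ExecutorStub` class, the `onHeartbeatResponse` method, and the counter that stands in for `env.blockManager.reregister()` are hypothetical.

```scala
import java.util.concurrent.atomic.AtomicBoolean

object ReregisterGuardSketch {
  final class ExecutorStub {
    val executorShutdown = new AtomicBoolean(false)
    var reregisterCount = 0

    def stop(): Unit = executorShutdown.set(true)

    // Invoked when a heartbeat response asks the executor to re-register.
    def onHeartbeatResponse(reregisterBlockManager: Boolean): Unit =
      if (reregisterBlockManager && !executorShutdown.get()) {
        reregisterCount += 1 // stands in for env.blockManager.reregister()
      }
  }
}
```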

### Why are the changes needed?

This change is required because Spark reports executors as active even after they are removed.

I was testing Dynamic Allocation on K8s with about 300 executors. While doing so, when the executors were torn down due to `spark.dynamicAllocation.executorIdleTimeout`, I noticed all the executor pods being removed from K8s, however, under the "Executors" tab in SparkUI, I could see some executors listed as alive. [spark.sparkContext.statusTracker.getExecutorInfos.length](65da9287bc/core/src/main/scala/org/apache/spark/SparkStatusTracker.scala (L105)) also returned a value greater than 1.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a new test.

## Logs

Following are the logs of the executor (ID 303) which re-registers `BlockManager`:

```
21/04/02 21:33:28 INFO CoarseGrainedExecutorBackend: Got assigned task 1076
21/04/02 21:33:28 INFO Executor: Running task 4.0 in stage 3.0 (TID 1076)
21/04/02 21:33:28 INFO MapOutputTrackerWorker: Updating epoch to 302 and clearing cache
21/04/02 21:33:28 INFO TorrentBroadcast: Started reading broadcast variable 3
21/04/02 21:33:28 INFO TransportClientFactory: Successfully created connection to /100.100.195.227:33703 after 76 ms (62 ms spent in bootstraps)
21/04/02 21:33:28 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 2.4 KB, free 168.0 MB)
21/04/02 21:33:28 INFO TorrentBroadcast: Reading broadcast variable 3 took 168 ms
21/04/02 21:33:28 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 3.9 KB, free 168.0 MB)
21/04/02 21:33:29 INFO MapOutputTrackerWorker: Don't have map outputs for shuffle 1, fetching them
21/04/02 21:33:29 INFO MapOutputTrackerWorker: Doing the fetch; tracker endpoint = NettyRpcEndpointRef(spark://MapOutputTrackerda-lite-test-4-7a57e478947d206d-driver-svc.dex-app-n5ttnbmg.svc:7078)
21/04/02 21:33:29 INFO MapOutputTrackerWorker: Got the output locations
21/04/02 21:33:29 INFO ShuffleBlockFetcherIterator: Getting 2 non-empty blocks including 1 local blocks and 1 remote blocks
21/04/02 21:33:30 INFO TransportClientFactory: Successfully created connection to /100.100.80.103:40971 after 660 ms (528 ms spent in bootstraps)
21/04/02 21:33:30 INFO ShuffleBlockFetcherIterator: Started 1 remote fetches in 1042 ms
21/04/02 21:33:31 INFO Executor: Finished task 4.0 in stage 3.0 (TID 1076). 1276 bytes result sent to driver
.
.
.
21/04/02 21:34:16 INFO CoarseGrainedExecutorBackend: Driver commanded a shutdown
21/04/02 21:34:16 INFO Executor: Told to re-register on heartbeat
21/04/02 21:34:16 INFO BlockManager: BlockManager BlockManagerId(303, 100.100.122.34, 41265, None) re-registering with master
21/04/02 21:34:16 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(303, 100.100.122.34, 41265, None)
21/04/02 21:34:16 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(303, 100.100.122.34, 41265, None)
21/04/02 21:34:16 INFO BlockManager: Reporting 0 blocks to the master.
21/04/02 21:34:16 INFO MemoryStore: MemoryStore cleared
21/04/02 21:34:16 INFO BlockManager: BlockManager stopped
21/04/02 21:34:16 INFO FileDataSink: Closing sink with output file = /tmp/safari-events/.des_analysis/safari-events/hdp_spark_monitoring_random-container-037caf27-6c77-433f-820f-03cd9c7d9b6e-spark-8a492407d60b401bbf4309a14ea02ca2_events.tsv
21/04/02 21:34:16 INFO HonestProfilerBasedThreadSnapshotProvider: Stopping agent
21/04/02 21:34:16 INFO HonestProfilerHandler: Stopping honest profiler agent
21/04/02 21:34:17 INFO ShutdownHookManager: Shutdown hook called
21/04/02 21:34:17 INFO ShutdownHookManager: Deleting directory /var/data/spark-d886588c-2a7e-491d-bbcb-4f58b3e31001/spark-4aa337a0-60c0-45da-9562-8c50eaff3cea
```

Closes #32043 from sumeetgajjar/SPARK-34949.

Authored-by: Sumeet Gajjar <sumeetgajjar93@gmail.com>

Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>

### What changes were proposed in this pull request?

Synchronise access to the `registerSource` and `removeSource` methods since the underlying `ArrayBuffer` is not thread safe.

### Why are the changes needed?

Unexpected behaviours are possible due to the lack of thread safety. For example, we got an `ArrayIndexOutOfBoundsException` while adding a new source.
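A minimal sketch of the synchronisation, assuming the buffer itself is used as the lock (the description does not say which lock the PR uses). `SourceRegistry` and its `String` sources are hypothetical stand-ins for the real metrics classes.

```scala
import scala.collection.mutable.ArrayBuffer

object SourceRegistrySketch {
  final class SourceRegistry {
    private val sources = new ArrayBuffer[String]

    // Every access to the non-thread-safe buffer goes through the same lock.
    def registerSource(name: String): Unit = sources.synchronized { sources += name }
    def removeSource(name: String): Unit = sources.synchronized { sources -= name }
    def size: Int = sources.synchronized { sources.size }
  }
}
```

With the lock in place, concurrent registrations from several threads can no longer interleave inside the buffer's resize logic.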

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Closes #32024 from BOOTMGR/SPARK-34934.

Lead-authored-by: Harsh Panchal <BOOTMGR@users.noreply.github.com>

Co-authored-by: BOOTMGR <panchal.harsh18@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch catches the `IOException` that may be thrown when map statuses cannot be deserialized (e.g., because the broadcasted value has been destroyed). Once the `IOException` is caught, a `MetadataFetchFailedException` is thrown to let Spark handle it.

### Why are the changes needed?

One customer encountered an application error. From the log, it is caused by accessing a non-existent broadcasted value. The broadcasted value is the map statuses. E.g.,

```
[info] Cause: java.io.IOException: org.apache.spark.SparkException: Failed to get broadcast_0_piece0 of broadcast_0
[info] at org.apache.spark.util.Utils$.tryOrIOException(Utils.scala:1410)
[info] at org.apache.spark.broadcast.TorrentBroadcast.readBroadcastBlock(TorrentBroadcast.scala:226)
[info] at org.apache.spark.broadcast.TorrentBroadcast.getValue(TorrentBroadcast.scala:103)
[info] at org.apache.spark.broadcast.Broadcast.value(Broadcast.scala:70)
[info] at org.apache.spark.MapOutputTracker$.$anonfun$deserializeMapStatuses$3(MapOutputTracker.scala:967)
[info] at org.apache.spark.internal.Logging.logInfo(Logging.scala:57)
[info] at org.apache.spark.internal.Logging.logInfo$(Logging.scala:56)
[info] at org.apache.spark.MapOutputTracker$.logInfo(MapOutputTracker.scala:887)
[info] at org.apache.spark.MapOutputTracker$.deserializeMapStatuses(MapOutputTracker.scala:967)
```

There is a race condition. After map statuses are broadcasted, the executors obtain the serialized broadcasted map statuses. If any fetch failure happens afterwards, the Spark scheduler invalidates the cached map statuses and destroys the broadcasted value of the map statuses. Any executor that then tries to deserialize the serialized broadcasted map statuses and access the broadcasted value gets an `IOException`. Currently we don't catch it in `MapOutputTrackerWorker`, so the above exception fails the application.

Normally we should throw a fetch failure exception in such a case, and the Spark scheduler will handle it.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Closes #32033 from viirya/fix-broadcast-master.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

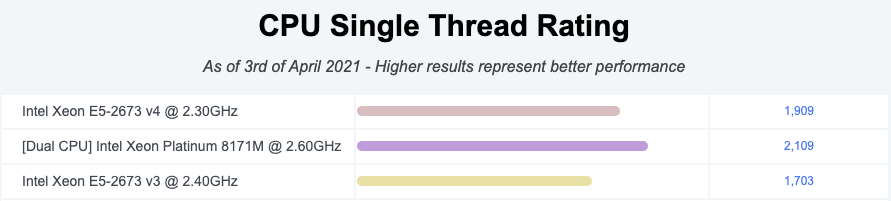

https://github.com/apache/spark/pull/32015 added a way to run benchmarks much more easily in the same GitHub Actions build. This PR updates the benchmark results using that workflow.

**NOTE**: based on my observations, GitHub Actions appears to use four types of CPU:

- Intel(R) Xeon(R) Platinum 8171M CPU 2.60GHz

- Intel(R) Xeon(R) CPU E5-2673 v4 2.30GHz

- Intel(R) Xeon(R) CPU E5-2673 v3 2.40GHz

- Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz

Based on my quick research, they seem to perform roughly similarly:

I couldn't find enough information about Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz, but the performance seems roughly similar given the numbers.

So it shouldn't be a big deal, especially given that this way is much easier, encourages contributors to run more benchmarks, and guarantees the same number of cores and the same memory with the same software.

### Why are the changes needed?

To establish a baseline for the benchmarks.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

It was generated from:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)

- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

Closes #32044 from HyukjinKwon/SPARK-34950.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to add a workflow that allows developers to run benchmarks and download the results files. After this PR, developers can run benchmarks in GitHub Actions in their fork.

### Why are the changes needed?

1. Very easy to use.

2. We can use (almost) the same environment to run the benchmarks. Based on my few experiments and observations, the CPU, cores, and memory are the same.

3. Does not burden the ASF's resources at GitHub Actions.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested in https://github.com/HyukjinKwon/spark/pull/31.

The entire set of benchmarks is being run as below:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)

- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

### How do developers use it in their fork?

1. **Go to Actions in your fork, and click "Run benchmarks"**

2. **Run the benchmarks with JDK 8 or 11 with benchmark classes to run. Glob pattern is supported just like `testOnly` in SBT**

3. **After finishing the jobs, the benchmark results are available on the top in the underlying workflow:**

4. **After downloading it, unzip and untar at Spark git root directory:**

```bash
cd .../spark
mv ~/Downloads/benchmark-results-8.zip .
unzip benchmark-results-8.zip
tar -xvf benchmark-results-8.tar
```

5. **Check the results:**

```bash
git status
```

```
...
modified: core/benchmarks/MapStatusesSerDeserBenchmark-results.txt
```

Closes #32015 from HyukjinKwon/SPARK-34821-pr.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Allow ExecutorMetricsPoller to keep stage entries in stageTCMP until a heartbeat occurs even if the entries have task count = 0.

### Why are the changes needed?

This is an improvement.

The current implementation of ExecutorMetricsPoller keeps a map, stageTCMP of (stageId, stageAttemptId) to (count of running tasks, executor metric peaks). The entry for the stage is removed on task completion if the task count decreases to 0. In the case of an executor with a single core, this leads to unnecessary removal and insertion of entries for a given stage.
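The changed bookkeeping can be sketched as follows. This is a single-threaded simplification under stated assumptions: the `Poller` class, its method names, and the plain mutable map are hypothetical, and the metric peaks are left out so that only the task counts remain.

```scala
import scala.collection.mutable

object StageTcmpSketch {
  type StageKey = (Int, Int) // (stageId, stageAttemptId)

  final class Poller {
    // stage -> count of running tasks (metric peaks omitted in this sketch)
    val stageTCMP: mutable.Map[StageKey, Long] = mutable.Map.empty[StageKey, Long]

    def onTaskStart(key: StageKey): Unit = {
      stageTCMP(key) = stageTCMP.getOrElse(key, 0L) + 1L
    }

    // Only decrement on completion; an entry whose count reaches 0 stays put.
    def onTaskCompletion(key: StageKey): Unit = {
      stageTCMP.get(key).foreach(count => stageTCMP(key) = count - 1L)
    }

    // Entries that are still idle are dropped when the heartbeat runs.
    def onHeartbeat(): Unit = {
      val idle = stageTCMP.collect { case (key, 0L) => key }.toList
      idle.foreach(key => stageTCMP.remove(key))
    }
  }
}
```

On a single-core executor, back-to-back tasks of the same stage now reuse the existing entry instead of removing and re-inserting it between tasks.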

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

A new unit test is added.

Closes #31871 from baohe-zhang/SPARK-34779.

Authored-by: Baohe Zhang <baohe.zhang@verizonmedia.com>

Signed-off-by: “attilapiros” <piros.attila.zsolt@gmail.com>

### What changes were proposed in this pull request?

Add more flexible parameters for the stage endpoint `/application/{app-id}/stages`. It can be:

`/application/{app-id}/stages?details=[true|false]&status=[ACTIVE|COMPLETE|FAILED|PENDING|SKIPPED]&withSummaries=[true|false]&quantiles=[comma separated quantiles string]&taskStatus=[RUNNING|SUCCESS|FAILED|PENDING]`

where

- `details=true` shows the detailed task information within each stage. The default value is `details=false`.

- `status` selects the stages with the specified status. When `status` is not specified, a list of all stages is generated.

- `withSummaries=true` shows both the task summary information and the executor summary information in percentile distribution. The default value is `withSummaries=false`.

- `quantiles` supports user-defined quantiles. The default quantiles are `0.0,0.25,0.5,0.75,1.0`.

- `taskStatus` shows only those tasks with the specified status within their corresponding stages. This parameter takes effect only when `details=true` (i.e. it is ignored when `details=false`).
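As an illustration of how a client might assemble such a request, here is a small sketch. The helper `stagesUrl` and the object name are hypothetical and not part of Spark; the path and parameter names are taken from the description above, and parameters left unset are simply omitted so that the server-side defaults apply.

```scala
object StagesQuerySketch {
  // Builds the relative URL for the stages endpoint from optional parameters.
  def stagesUrl(
      appId: String,
      details: Option[Boolean] = None,
      status: Option[String] = None,
      withSummaries: Option[Boolean] = None,
      quantiles: Option[String] = None,
      taskStatus: Option[String] = None): String = {
    val params = Seq(
      "details" -> details.map(_.toString),
      "status" -> status,
      "withSummaries" -> withSummaries.map(_.toString),
      "quantiles" -> quantiles,
      "taskStatus" -> taskStatus
    ).collect { case (key, Some(value)) => s"$key=$value" }
    val query = if (params.isEmpty) "" else params.mkString("?", "&", "")
    s"/application/$appId/stages$query"
  }
}
```

For example, `stagesUrl("app-1", details = Some(true), taskStatus = Some("FAILED"))` asks for task details restricted to failed tasks.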

### Why are the changes needed?

A more flexible RESTful API.

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

UT

Closes #31204 from AngersZhuuuu/SPARK-26399-NEW.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to add a script that detects and runs all benchmarks.

### Why are the changes needed?

To run the benchmarks easily. This is actually for SPARK-34821.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested with the command below after building Spark:

```bash
SPARK_GENERATE_BENCHMARK_FILES=1 bin/spark-submit --class \
  org.apache.spark.benchmark.Benchmarks --jars \
  "`find . -name "*3.2.0-SNAPSHOT-tests.jar" | paste -sd ',' -`" \
  ./core/target/scala-2.12/spark-core_2.12-3.2.0-SNAPSHOT-tests.jar
```

This is ongoing work. I will double-check while working on SPARK-34821 and updating the results.

Closes #32005 from HyukjinKwon/SPARK-34907.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add a new config, `spark.shuffle.service.name`, which allows for Spark applications to look for a YARN shuffle service which is defined at a name other than the default `spark_shuffle`.

Add a new config, `spark.yarn.shuffle.service.metrics.namespace`, which allows for configuring the namespace used when emitting metrics from the shuffle service into the NodeManager's `metrics2` system.

Add a new mechanism by which to override shuffle service configurations independently of the configurations in the NodeManager. When a resource `spark-shuffle-site.xml` is present on the classpath of the shuffle service, the configs present within it will be used to override the configs coming from `yarn-site.xml` (via the NodeManager).

### Why are the changes needed?

There are two use cases which can benefit from these changes.

One use case is to run multiple instances of the shuffle service side-by-side in the same NodeManager. This can be helpful, for example, when running a YARN cluster with a mixed workload of applications running multiple Spark versions, since a given version of the shuffle service is not always compatible with other versions of Spark (e.g. see SPARK-27780). With this PR, it is possible to run two shuffle services like `spark_shuffle` and `spark_shuffle_3.2.0`, one of which is "legacy" and one of which is for new applications. This is possible because YARN versions since 2.9.0 support the ability to run shuffle services within an isolated classloader (see YARN-4577), meaning multiple Spark versions can coexist.

Besides this, the separation of shuffle service configs into `spark-shuffle-site.xml` can be useful for administrators who want to change and/or deploy Spark shuffle service configurations independently of the configurations for the NodeManager (e.g., perhaps they are owned by two different teams).

### Does this PR introduce _any_ user-facing change?

Yes. There are two new configurations related to the external shuffle service, and a new mechanism which can optionally be used to configure the shuffle service. `docs/running-on-yarn.md` has been updated to provide user instructions; please see this guide for more details.

### How was this patch tested?

In addition to the new unit tests added, I have deployed this to a live YARN cluster and successfully deployed two Spark shuffle services simultaneously, one running a modified version of Spark 2.3.0 (which supports some of the newer shuffle protocols) and one running Spark 3.1.1. Spark applications of both versions are able to communicate with their respective shuffle services without issue.

Closes #31936 from xkrogen/xkrogen-SPARK-34828-shufflecompat-config-from-classpath.

Authored-by: Erik Krogen <xkrogen@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Some `spark-submit` commands used to run benchmarks in the user's guide are wrong; benchmarks cannot be run successfully with them.

So the major change of this PR is to correct these commands. For example, to run a benchmark that inherits from `SqlBasedBenchmark`, we must specify `--jars <spark core test jar>,<spark catalyst test jar>` because a `SqlBasedBenchmark`-based benchmark extends `BenchmarkBase` (defined in the Spark core test jar) and `SQLHelper` (defined in the Spark catalyst test jar).

Another change of this PR is to remove the `scalatest Assertions` dependency of the benchmarks, because the `scalatest-*.jar` files are not in the distribution package and would be troublesome to use.

### Why are the changes needed?

Make sure benchmarks can be run with the `spark-submit` commands described in the guide.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Use the corrected `spark-submit` commands to run benchmarks successfully.

Closes #31995 from LuciferYang/fix-benchmark-guide.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In ProcfsMetricsGetter.scala, propagate the IOException from addProcfsMetricsFromOneProcess to computeAllMetrics when a child pid's proc stat file is unavailable. As a result, the for-loop in computeAllMetrics() can terminate earlier and return an all-0 procfs metric.

### Why are the changes needed?

When a child pid's stat file is missing while the subsequent child pids' stat files exist, ProcfsMetricsGetter.computeAllMetrics() returns partial metrics (the sum of a subset of child pids), which can be misleading and is undesired per the existing code comments in https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/executor/ProcfsMetricsGetter.scala#L214.

Also, a side effect of this bug is that it can lead to verbose warning logs if many pids' stat files are missing. Terminating early makes the warning logs more concise.
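The intended behaviour can be sketched as follows. This is a simplified model, not the real `ProcfsMetricsGetter`: the two-field `ProcfsMetrics` case class, the `readStat` function parameter (standing in for reading a pid's stat file), and the object name are assumptions made for the example.

```scala
import java.io.IOException

object ProcfsAggregationSketch {
  final case class ProcfsMetrics(vmem: Long, rss: Long)
  val Zero: ProcfsMetrics = ProcfsMetrics(0L, 0L)

  // `readStat` throws IOException when the stat file of a pid is unavailable.
  // The exception propagates out of the fold, so the loop stops at the first
  // missing file and an all-zero result is returned instead of a partial sum.
  def computeAllMetrics(pids: Seq[Int], readStat: Int => ProcfsMetrics): ProcfsMetrics =
    try {
      pids.foldLeft(Zero) { (acc, pid) =>
        val m = readStat(pid)
        ProcfsMetrics(acc.vmem + m.vmem, acc.rss + m.rss)
      }
    } catch {
      case _: IOException => Zero
    }
}
```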

The unit test can also explain the bug well.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

A unit test is added.

Closes #31945 from baohe-zhang/SPARK-34845.

Authored-by: Baohe Zhang <baohe.zhang@verizonmedia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

When we want to get stage's detail info with task information, it will return all tasks, the content is huge and always we just want to know some failed tasks/running tasks with whole stage info to judge is a task has some problem. This pr support

user to use

```

/application/[appid]/stages/[stage-id]?details=true&taskStatus=xxx

/application/[appid]/stages/[stage-id]/[stage-attempted-id]?details=true&taskStatus=xxx

```

to filter task details by task status.
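
As a rough illustration, a client could assemble these request paths as follows. The path layout is copied from the patterns above; the application id, stage id, attempt id, and the status value `FAILED` are placeholder assumptions, and the helper name is hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode


def stage_details_path(app_id: str, stage_id: int, task_status: str,
                       stage_attempt_id: Optional[int] = None) -> str:
    """Build the stage-details path with the taskStatus filter applied."""
    path = f"/application/{app_id}/stages/{stage_id}"
    if stage_attempt_id is not None:
        path += f"/{stage_attempt_id}"
    # details=true asks for task details; taskStatus narrows which tasks come back.
    query = urlencode({"details": "true", "taskStatus": task_status})
    return f"{path}?{query}"


all_attempts = stage_details_path("app-123", 2, "FAILED")
one_attempt = stage_details_path("app-123", 2, "FAILED", stage_attempt_id=0)
```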

### Why are the changes needed?

A more flexible RESTful API.

### Does this PR introduce _any_ user-facing change?

Users can use

```

/application/[appid]/stages/[stage-id]?details=true&taskStatus=xxx

/application/[appid]/stages/[stage-id]/[stage-attempted-id]?details=true&taskStatus=xxx

```

to filter task details by task status.

### How was this patch tested?

Added

Closes #31165 from AngersZhuuuu/SPARK-34092.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Fix the issue reported in https://github.com/apache/spark/pull/31948#issuecomment-808647121

### Why are the changes needed?

Fix the failing UT.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UT

Closes #31977 from AngersZhuuuu/SPARK-34848-followup.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The task duration distribution is also important for judging whether a stage's tasks are skewed.

### Why are the changes needed?

Add important information to TaskMetricsDistribution.

### Does this PR introduce _any_ user-facing change?

Users can see the duration distribution in TaskMetricsDistribution.

### How was this patch tested?

Existing UT

Closes #31948 from AngersZhuuuu/SPARK-34848.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

For a specific stage, it is useful to show the task metrics in percentile distribution. This information can help users know whether or not there is a skew/bottleneck among tasks in a given stage. We list an example in taskMetricsDistributions.json

Similarly, it is useful to show the executor metrics in percentile distribution for a specific stage. This information can show whether or not there is a skewed load on some executors. We list an example in executorMetricsDistributions.json

We define the `withSummaries` and `quantiles` query parameters in the REST API for a specific stage as:

applications/<application_id>/<application_attempt>/stages/<stage_id>/<stage_attempt>?withSummaries=[true|false]&quantiles=0.05,0.25,0.5,0.75,0.95

1. withSummaries: defaults to false; defines whether to show the current stage's taskMetricsDistribution and executorMetricsDistribution.

2. quantiles: defaults to `0.0,0.25,0.5,0.75,1.0`; only takes effect when `withSummaries=true`. It defines the quantiles used when calculating the metrics distributions.

When withSummaries=true, both task metrics in percentile distribution and executor metrics in percentile distribution are included in the REST API output. The default value of withSummaries is false, i.e. no metrics percentile distribution will be included in the REST API output.
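
To make the `quantiles` parameter concrete, here is a small sketch of how a list of quantiles maps task durations to a distribution. It uses a simple index-based lookup chosen for illustration, which is an assumption and not necessarily how Spark computes the distributions; the duration values are made up.

```python
from typing import List, Sequence


def quantile_values(sorted_values: Sequence[float],
                    quantiles: Sequence[float]) -> List[float]:
    """For each quantile q, pick the element at index floor(q * n), clamped to n - 1."""
    n = len(sorted_values)
    if n == 0:
        return []
    return [sorted_values[min(int(q * n), n - 1)] for q in quantiles]


# Hypothetical task durations in milliseconds, with one straggler.
durations = sorted([120, 95, 101, 99, 2400, 110, 98, 105])
default_quantiles = [0.0, 0.25, 0.5, 0.75, 1.0]
summary = quantile_values(durations, default_quantiles)
```

With these inputs the last entry is far larger than the median, which is the kind of skew signal the distribution is meant to expose.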

### Why are the changes needed?

For a specific stage, it is useful to show the task metrics in percentile distribution. This information can help users know whether or not there is a skew/bottleneck among tasks in a given stage. We list an example in taskMetricsDistributions.json

### Does this PR introduce _any_ user-facing change?

Users can use the RESTful API below to get the task metrics distribution and executor metrics distribution for an individual stage

```

applications/<application_id>/<application_attempt>/stages/<stage_id>/<stage_attempt>?withSummaries=[true|false]

```

### How was this patch tested?

Added UT

Closes #31611 from AngersZhuuuu/SPARK-34488.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch adds a config `spark.yarn.kerberos.renewal.excludeHadoopFileSystems` which lists the filesystems to be excluded from delegation token renewal at YARN.

### Why are the changes needed?

MapReduce jobs can instruct YARN to skip renewal of tokens obtained from certain hosts by specifying the hosts with configuration mapreduce.job.hdfs-servers.token-renewal.exclude=<host1>,<host2>,..,<hostN>.

But Spark seems to lack a similar option, so job submission fails if YARN fails to renew the DelegationToken for any of the remote HDFS clusters. DT renewal can fail for many reasons, for example when the remote HDFS does not trust the Kerberos identity of YARN. We have a customer facing this issue.

### Does this PR introduce _any_ user-facing change?

No, if the config is not set. Yes, as users can use this config to instruct YARN not to renew delegation token from certain filesystems.

### How was this patch tested?

It is hard to write a unit test for this. We verified that it works with the customer using this fix in their production environment.

Closes #31761 from viirya/SPARK-34295.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

1) Modify `GenericAvroSerializer` to support serialization of any `GenericContainer`

2) Register `KryoSerializer`s for `GenericData.{Array, EnumSymbol, Fixed}` using the modified `GenericAvroSerializer`

### Why are the changes needed?

Without this change, Kryo throws NPEs when trying to serialize `GenericData.{Array, EnumSymbol, Fixed}`. More details in SPARK-34477 Jira

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added unit tests for testing roundtrip serialization and deserialization of `GenericData.{Array, EnumSymbol, Fixed}` using `GenericAvroSerializer` directly and also indirectly through `KryoSerializer`

Closes #31597 from shardulm94/avro-array-serializer.

Authored-by: Shardul Mahadik <smahadik@linkedin.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Move the registration of `ExecutionListenerBus` (with both `ListenerBus` and `ContextCleaner`) into `ExecutionListenerBus` itself.

Also includes a minor change that moves `registerSparkListenerForCleanup` to a better place.

### Why are the changes needed?

Code improvement.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass existing tests.

Closes #31919 from Ngone51/SPARK-34087-followup.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch proposes to disable fetching shuffle blocks in batch when IO encryption is enabled. Adaptive Query Execution fetches contiguous shuffle blocks for the same map task in batch to reduce IO and improve performance. However, we found that batch fetching is incompatible with IO encryption.

### Why are the changes needed?

Before this patch, setting `spark.io.encryption.enabled` to true and then running queries whose partitions were coalesced by AQE could produce the following error message:

```

14:05:52.638 WARN org.apache.spark.scheduler.TaskSetManager: Lost task 1.0 in stage 2.0 (TID 3) (11.240.37.88 executor driver): FetchFailed(BlockManagerId(driver, 11.240.37.88, 63574, None), shuffleId=0, mapIndex=0, mapId=0, reduceId=2, message=

org.apache.spark.shuffle.FetchFailedException: Stream is corrupted

at org.apache.spark.storage.ShuffleBlockFetcherIterator.throwFetchFailedException(ShuffleBlockFetcherIterator.scala:772)

at org.apache.spark.storage.BufferReleasingInputStream.read(ShuffleBlockFetcherIterator.scala:845)

at java.io.BufferedInputStream.fill(BufferedInputStream.java:246)

at java.io.BufferedInputStream.read(BufferedInputStream.java:265)

at java.io.DataInputStream.readInt(DataInputStream.java:387)

at org.apache.spark.sql.execution.UnsafeRowSerializerInstance$$anon$2$$anon$3.readSize(UnsafeRowSerializer.scala:113)

at org.apache.spark.sql.execution.UnsafeRowSerializerInstance$$anon$2$$anon$3.next(UnsafeRowSerializer.scala:129)

at org.apache.spark.sql.execution.UnsafeRowSerializerInstance$$anon$2$$anon$3.next(UnsafeRowSerializer.scala:110)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:494)

at scala.collection.Iterator$$anon$10.next(Iterator.scala:459)

at org.apache.spark.util.CompletionIterator.next(CompletionIterator.scala:29)

at org.apache.spark.InterruptibleIterator.next(InterruptibleIterator.scala:40)

at scala.collection.Iterator$$anon$10.next(Iterator.scala:459)

at org.apache.spark.sql.execution.SparkPlan.$anonfun$getByteArrayRdd$1(SparkPlan.scala:345)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2(RDD.scala:898)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2$adapted(RDD.scala:898)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:498)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1437)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:501)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.io.IOException: Stream is corrupted

at net.jpountz.lz4.LZ4BlockInputStream.refill(LZ4BlockInputStream.java:200)

at net.jpountz.lz4.LZ4BlockInputStream.refill(LZ4BlockInputStream.java:226)

at net.jpountz.lz4.LZ4BlockInputStream.read(LZ4BlockInputStream.java:157)

at org.apache.spark.storage.BufferReleasingInputStream.read(ShuffleBlockFetcherIterator.scala:841)

... 25 more

)

```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New tests.

Closes #31898 from hezuojiao/fetch_shuffle_in_batch.

Authored-by: hezuojiao <hezuojiao@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Remove the unused variable 'secMgr' in SparkSubmit.scala and DriverWrapper.scala.

In https://issues.apache.org/jira/browse/SPARK-33925, the last usage of SecurityManager in Utils.fetchFile was removed, so we don't need the variable anymore.

### Why are the changes needed?

For better code readability.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually compiled. GitHub Actions and Jenkins builds should test it out as well.

Closes #31928 from Peng-Lei/rm_secMgr.

Authored-by: PengLei <18066542445@189.cn>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR fixes the `HybridRowQueue` to respect the configured memory mode.

Besides, this PR also refactored the constructor of `MemoryConsumer` to accept the memory mode explicitly.

### Why are the changes needed?

`HybridRowQueue` supports both onHeap and offHeap manipulation. But it inherited the wrong `MemoryConsumer` constructor, which hard-coded the memory mode to `onHeap`.

### Does this PR introduce _any_ user-facing change?

No. (Possibly yes in some cases: jobs that could not complete before may complete successfully after the fix, because `HybridRowQueue` can now spill in offHeap mode.)

### How was this patch tested?

Updated the existing test to make it test both offHeap and onHeap modes.

Closes #31152 from Ngone51/fix-MemoryConsumer-memorymode.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This reverts commit 86ea520320.

### Why are the changes needed?

The test added in the change was flaky.

Closes #31918 from bozhang2820/revert-spark-34757.

Authored-by: Bo Zhang <bo.zhang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR fixes an issue where `addFile` and `addJar` encode the path again even when the string passed is already in URI form.

For example, the following operation throws an exception even though the file exists.

```

sc.addFile("file:/foo/test%20file.txt")

```

Another case is the `--files` and `--jars` options when we submit an application.

```

bin/spark-shell --files "/foo/test file.txt"

```

The path above is transformed to URI form [here](ecf4811764/core/src/main/scala/org/apache/spark/deploy/SparkSubmitArguments.scala (L400)) and passed to `addFile` so the same issue happens.
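
The double-encoding effect can be reproduced outside Spark. The sketch below uses Python's `urllib.parse` purely as an illustration; `resolve_path` is a hypothetical helper showing one way to avoid re-encoding, not the change made in this PR.

```python
from urllib.parse import quote, unquote, urlsplit

raw_path = "/foo/test file.txt"
encoded_once = quote(raw_path)       # the space becomes %20
encoded_twice = quote(encoded_once)  # the % itself becomes %25


def resolve_path(path_or_uri: str) -> str:
    """Encode plain paths, but leave strings that already carry a URI scheme alone."""
    if urlsplit(path_or_uri).scheme:
        return path_or_uri
    return "file:" + quote(path_or_uri)


from_uri = resolve_path("file:/foo/test%20file.txt")
from_plain = resolve_path("/foo/test file.txt")
```

Decoding `encoded_twice` once gives `/foo/test%20file.txt`, a name that does not exist on disk, which matches the failure described above.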

### Why are the changes needed?

This is a bug.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New test.

Closes #31718 from sarutak/fix-uri-encode-double.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

This PR aims to enable `spark.hadoopRDD.ignoreEmptySplits` by default for Apache Spark 3.2.0.

### Why are the changes needed?

Although this is a safe improvement, this hasn't been enabled by default to avoid the explicit behavior change. This PR aims to switch the default explicitly in Apache Spark 3.2.0.

### Does this PR introduce _any_ user-facing change?

Yes, the behavior change is documented.

### How was this patch tested?

Pass the existing CIs.

Closes #31909 from dongjoon-hyun/SPARK-34809.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Just as we redact secrets and tokens, this PR aims to redact access keys.

### Why are the changes needed?

Access keys are also worth hiding.

### Does this PR introduce _any_ user-facing change?

This will hide this information from SparkUI (`Spark Properties` and `Hadoop Properties` and logs).

### How was this patch tested?

Pass the newly updated UT.

Closes #31912 from dongjoon-hyun/SPARK-34811.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Avoid an unnecessary shuffle where possible.

### Why are the changes needed?

To avoid an unnecessary shuffle.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added test suites; also covered by existing test suites.

Closes #31480 from zhengruifeng/repartitionAndSortWithinPartitions_opt_II.

Authored-by: Ruifeng Zheng <ruifengz@foxmail.com>

Signed-off-by: yi.wu <yi.wu@databricks.com>

### What changes were proposed in this pull request?

This change is to ignore cache for SNAPSHOT dependencies in spark-submit.

### Why are the changes needed?

When spark-submit is executed with --packages, it will not download the dependency jars when they are available in cache (e.g. ivy cache), even when the dependencies are SNAPSHOT.

This might block developers who work on external modules in Spark (e.g. spark-avro), since they need to remove the cache manually every time they update the code during development (which generates SNAPSHOT jars). Without knowing this, they could be left wondering why their code changes are not reflected in spark-submit executions.
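
The intended policy can be summarized in a short sketch: coordinates whose version ends in `-SNAPSHOT` are always re-resolved, while everything else may come from the cache. The function name and the example coordinates are illustrative assumptions; the real resolution is done through Ivy inside spark-submit.

```python
from typing import Iterable, List, Tuple


def split_by_cache_policy(coordinates: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split group:artifact:version coordinates into (cacheable, always_resolve)."""
    cacheable: List[str] = []
    always_resolve: List[str] = []
    for coordinate in coordinates:
        version = coordinate.rsplit(":", 1)[-1]
        if version.endswith("-SNAPSHOT"):
            # SNAPSHOT artifacts can change without a version bump, so skip the cache.
            always_resolve.append(coordinate)
        else:
            cacheable.append(coordinate)
    return cacheable, always_resolve


packages = [
    "org.apache.spark:spark-avro_2.12:3.2.0-SNAPSHOT",
    "org.apache.avro:avro:1.10.2",
]
cacheable, always_resolve = split_by_cache_policy(packages)
```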

### Does this PR introduce _any_ user-facing change?

Yes. With this change, developers/users who run spark-submit with SNAPSHOT dependencies do not need to remove the cache every time the SNAPSHOT dependencies are updated.

### How was this patch tested?

Added a unit test.

Closes #31849 from bozhang2820/spark-submit-cache-ignore.

Authored-by: Bo Zhang <bo.zhang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Change DAGScheduler to pass a clone of the Properties object, rather than the original object, to the SparkListenerJobStart event.

### Why are the changes needed?

DAGScheduler might modify the Properties object (e.g., in addPySparkConfigsToProperties) after firing off the SparkListenerJobStart event. Since the handler for that event (onJobStart in EventLoggingListener) will iterate over the elements of the Properties object, this sometimes results in a ConcurrentModificationException.

This can be demonstrated using these steps:

```

$ bin/spark-shell --conf spark.ui.showConsoleProgress=false \

--conf spark.executor.cores=1 --driver-memory 4g --conf \

"spark.ui.showConsoleProgress=false" \

--conf spark.eventLog.enabled=true \

--conf spark.eventLog.dir=/tmp/spark-events

...

scala> (0 to 500).foreach { i =>

| val df = spark.range(0, 20000).toDF("a")

| df.filter("a > 12").count

| }

21/03/12 18:16:44 ERROR AsyncEventQueue: Listener EventLoggingListener threw an exception

java.util.ConcurrentModificationException

at java.util.Hashtable$Enumerator.next(Hashtable.java:1387)

```

I've not actually seen a ConcurrentModificationException in onStageSubmitted, only in onJobStart. However, they both iterate over the Properties object, so for safety's sake I pass a clone to SparkListenerStageSubmitted as well.
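
The hazard and the fix can be mimicked with a plain Python dict, which also refuses to be resized while it is being iterated. This is an analogy only: the property keys are made up, and the mutation happens inside the loop to make the interleaving deterministic rather than relying on a second thread.

```python
from typing import Dict


def listener_iterates(iterated: Dict[str, str], mutated: Dict[str, str]) -> str:
    """Iterate over `iterated` while another party adds a key to `mutated`."""
    try:
        for _ in iterated:
            # Simulates the scheduler touching the properties mid-iteration.
            mutated["late.key"] = "added during iteration"
        return "ok"
    except RuntimeError:
        # Python's counterpart of ConcurrentModificationException.
        return "concurrent modification"


shared = {"job.group": "g1", "job.description": "count"}
result_shared = listener_iterates(shared, shared)

original = {"job.group": "g1", "job.description": "count"}
# Handing the listener a copy isolates it from later changes to the original.
result_clone = listener_iterates(dict(original), original)
```

Passing the same object to both sides fails, while passing a copy to the listener succeeds, which is the reasoning behind cloning the Properties object before posting the event.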

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

By repeatedly running the reproduction steps from above.

Closes #31826 from bersprockets/elconcurrent.

Authored-by: Bruce Robbins <bersprockets@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes an alternative way to fix the memory leak of `ExecutionListenerBus`, which would automatically clean them up.

Basically, the idea is to add `registerSparkListenerForCleanup` to `ContextCleaner`, so we can remove the `ExecutionListenerBus` from `LiveListenerBus` when the `SparkSession` is GC'ed.

On the other hand, to make the `SparkSession` GC-able, we need to get rid of the reference to `SparkSession` in `ExecutionListenerBus`. Therefore, we introduced the `sessionUUID`, which is a unique identifier for SparkSession, to replace the `SparkSession` object.

Note that the proposal does not take effect when `spark.cleaner.referenceTracking=false`, since it depends on `ContextCleaner`.
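
The cleanup idea, a listener that holds only an identifier plus a callback that fires when the owning session is collected, can be sketched with Python's `weakref.finalize`. All class names here are hypothetical stand-ins for `SparkSession`, `ExecutionListenerBus`, and `LiveListenerBus`, and the mechanism is an analogy to `ContextCleaner`, not its implementation.

```python
import gc
import uuid
import weakref


class ListenerBus:
    """Stand-in for the long-lived bus that outlives individual sessions."""

    def __init__(self) -> None:
        self.listeners = []


class ExecutionListener:
    """Holds only the session's UUID, never the session object itself."""

    def __init__(self, session_uuid: str) -> None:
        self.session_uuid = session_uuid


class Session:
    def __init__(self, bus: ListenerBus) -> None:
        self.session_uuid = str(uuid.uuid4())
        listener = ExecutionListener(self.session_uuid)
        bus.listeners.append(listener)
        # Neither the callback nor its argument references the session,
        # so the session stays collectable and the listener is removed afterwards.
        weakref.finalize(self, bus.listeners.remove, listener)


bus = ListenerBus()
session = Session(bus)
count_while_alive = len(bus.listeners)

del session
gc.collect()
count_after_gc = len(bus.listeners)
```

If the listener kept a strong reference to the session, the bus would keep the session alive and the finalizer would never run, which is the leak the `sessionUUID` change avoids.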

### Why are the changes needed?

Fix the memory leak caused by `ExecutionListenerBus` mentioned in SPARK-34087.

### Does this PR introduce _any_ user-facing change?

Yes, it saves memory for users.

### How was this patch tested?

Added unit test.

Closes #31839 from Ngone51/fix-mem-leak-of-ExecutionListenerBus.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The main changes of this PR are as follows:

- Use `org.junit.Assert.assertThrows(String, Class, ThrowingRunnable)` method instead of `ExpectedException.none()`

- Use `org.hamcrest.MatcherAssert.assertThat()` method instead of `org.junit.Assert.assertThat(T, org.hamcrest.Matcher<? super T>)`

### Why are the changes needed?

Clean up deprecated API usage

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass Jenkins or GitHub Actions.

Closes #31815 from LuciferYang/SPARK-34722.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR upgrades Py4J from 0.10.9.1 to 0.10.9.2, which contains some bug fixes and improvements.

* expose shell parameter in Popen inside launch_gateway. ([bartdag/py4j220efc3](220efc3716))

* fixed Flake8 errors ([bartdag/py4j6c6ee9a](6c6ee9aedc))

### Why are the changes needed?

To leverage fixes from the upstream in Py4J.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Jenkins build and GitHub Actions will test it out.

Closes #31796 from wankunde/py4j.

Authored-by: wankunde <wankunde@163.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR aims to upgrade ZSTD-JNI to 1.4.9-1.

### Why are the changes needed?

ZStandard 1.4.9 and its corresponding JNI bring the following bug fixes and improvements.

- https://github.com/facebook/zstd/releases/tag/v1.4.9

One notable improvement in ZStandard 1.4.9 is `2x faster Long Distance Mode`, but we are not using it yet.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs with the existing tests and there is no regression in ZStandardBenchmark.

Closes #31784 from dongjoon-hyun/ZSTD-149.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fixed an issue where data could not be cleaned up when `unregisterShuffle` is called.

### Why are the changes needed?

When we use the old shuffle fetch protocol, we use partitionId as the mapId in the ShuffleBlockId construction, but the mapId cached in `taskIdMapsForShuffle` when we call `getWriter[K, V]` is `context.taskAttemptId()`.

As a result, data cannot be cleaned up on unregisterShuffle: we remove a shuffle's data using the mapIds stored in `taskIdMapsForShuffle`, and those are `context.taskAttemptId()` values instead of partitionIds.
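
A toy model makes the mismatch visible. The file naming, the example ids, and the `fixed` switch are illustrative assumptions; the sketch only shows why tracking a different id from the one used to name the data leaves files behind, and it is not the actual shuffle manager code.

```python
from typing import Set


def block_file_name(shuffle_id: int, map_id: int) -> str:
    """Illustrative naming scheme for a map output file."""
    return f"shuffle_{shuffle_id}_{map_id}_0.data"


def leftover_files(use_old_fetch_protocol: bool, fixed: bool) -> Set[str]:
    """Write two map outputs, unregister the shuffle, and return what remains."""
    shuffle_id = 0
    files: Set[str] = set()
    task_id_maps_for_shuffle = {}

    # (partition_id, task_attempt_id) pairs for two map tasks.
    for partition_id, task_attempt_id in [(0, 17), (1, 18)]:
        file_map_id = partition_id if use_old_fetch_protocol else task_attempt_id
        # Buggy behavior: always remember the task attempt id.
        tracked_map_id = file_map_id if fixed else task_attempt_id
        files.add(block_file_name(shuffle_id, file_map_id))
        task_id_maps_for_shuffle.setdefault(shuffle_id, set()).add(tracked_map_id)

    # unregisterShuffle: delete the files for every tracked map id.
    for map_id in task_id_maps_for_shuffle.pop(shuffle_id, set()):
        files.discard(block_file_name(shuffle_id, map_id))
    return files


leaked = leftover_files(use_old_fetch_protocol=True, fixed=False)
cleaned = leftover_files(use_old_fetch_protocol=True, fixed=True)
new_protocol = leftover_files(use_old_fetch_protocol=False, fixed=False)
```

Under the old protocol the buggy variant tries to delete files named after ids 17 and 18, while the files on disk are named after partitions 0 and 1, so both survive.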

### Does this PR introduce _any_ user-facing change?

Yes.

### How was this patch tested?

Added a new test.

Closes #31664 from yikf/master.

Authored-by: yikf <13468507104@163.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Pyrolite 4.21 introduced and enabled value comparison by default (`valueCompare=true`) during object memoization and serialization: https://github.com/irmen/Pyrolite/blob/pyrolite-4.21/java/src/main/java/net/razorvine/pickle/Pickler.java#L112-L122

This change has an undesired effect when we serialize a row (actually `GenericRowWithSchema`) to be passed to Python: https://github.com/apache/spark/blob/branch-3.0/sql/core/src/main/scala/org/apache/spark/sql/execution/python/EvaluatePython.scala#L60. A simple example is that

```

new GenericRowWithSchema(Array(1.0, 1.0), StructType(Seq(StructField("_1", DoubleType), StructField("_2", DoubleType))))

```

and

```

new GenericRowWithSchema(Array(1, 1), StructType(Seq(StructField("_1", IntegerType), StructField("_2", IntegerType))))

```

are currently considered equal, so the second instance is replaced with the short code of the first one during serialization.
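
The same effect can be shown with a toy serializer in Python, where `(1.0, 1.0) == (1, 1)` also holds. The `serialize` function below is a simplified stand-in for a memoizing pickler, written for illustration only; it is not Pyrolite's implementation.

```python
from typing import Any, List, Tuple


def serialize(objects: List[Any], value_compare: bool) -> List[Tuple[str, Any]]:
    """Emit ("obj", value) the first time and ("ref", index) for memo hits."""
    memo = {}
    out = []
    for obj in objects:
        # Value comparison keys the memo by equality; otherwise by object identity.
        key = obj if value_compare else id(obj)
        if key in memo:
            out.append(("ref", memo[key]))
        else:
            memo[key] = len(memo)
            out.append(("obj", obj))
    return out


c1 = (1.0, 1.0)  # plays the role of the DoubleType row
c2 = (1, 1)      # plays the role of the IntegerType row

by_value = serialize([c1, c2], value_compare=True)
by_identity = serialize([c1, c2], value_compare=False)
```

With value comparison, the second row is emitted as a reference to the first, so the reader reconstructs doubles where integers were expected. With identity-based memoization, both rows are written out with their own types.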

### Why are the changes needed?

The above can cause nasty issues like the one in https://issues.apache.org/jira/browse/SPARK-34545 description:

```

>>> from pyspark.sql.functions import udf

>>> from pyspark.sql.types import *

>>>

>>> def udf1(data_type):

...     def u1(e):

...         return e[0]

...     return udf(u1, data_type)

>>>

>>> df = spark.createDataFrame([((1.0, 1.0), (1, 1))], ['c1', 'c2'])

>>>

>>> df = df.withColumn("c3", udf1(DoubleType())("c1"))

>>> df = df.withColumn("c4", udf1(IntegerType())("c2"))

>>>

>>> df.select("c3").show()

+---+

| c3|

+---+

|1.0|

+---+

>>> df.select("c4").show()

+---+

| c4|

+---+

|  1|

+---+

>>> df.select("c3", "c4").show()

+---+----+

| c3|  c4|

+---+----+

|1.0|null|

+---+----+

```

This is because, during serialization from the JVM to Python, `GenericRowWithSchema(1.0, 1.0)` (`c1`) is memoized first, and when `GenericRowWithSchema(1, 1)` (`c2`) comes next, it is replaced with a short code referring to `c1` (instead of `c2` being serialized out) because they are `equal()`. The Python function then runs, but the return type of `c4` is expected to be `IntegerType`, and if a different type (`DoubleType`) comes back from Python, it is discarded: https://github.com/apache/spark/blob/branch-3.0/sql/core/src/main/scala/org/apache/spark/sql/execution/python/EvaluatePython.scala#L108-L113

After this PR:

```

>>> df.select("c3", "c4").show()

+---+---+

| c3| c4|

+---+---+

|1.0|  1|

+---+---+

```

### Does this PR introduce _any_ user-facing change?

Yes, fixes a correctness issue.

### How was this patch tested?

Added new UT + manual tests.

Closes #31682 from peter-toth/SPARK-34545-fix-row-comparison.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR aims to use `ZstdInputStreamNoFinalizer` and `ZstdOutputStreamNoFinalizer` classes and upgrade ZSTD JNI to 1.4.8-7.

### Why are the changes needed?

`1.4.8-7` makes `NoFinalizer` classes public again. This improves the performance.

- 57d53a09d2

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #31762 from dongjoon-hyun/SPARK-ZSTD-NOFINALIZER.

Lead-authored-by: Dongjoon Hyun <dhyun@apple.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Exclude non-jar dependencies of the ivy/maven packages we want to resolve as our current dependency resolution code assumes artifacts to be jars. 17601e014c/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala (L1215) and 17601e014c/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala (L318)

### Why are the changes needed?

Some maven artifacts define non-jar dependencies. One such example is `hive-exec`'s dependency on the `pom` of `apache-curator` https://repo1.maven.org/maven2/org/apache/hive/hive-exec/2.3.8/hive-exec-2.3.8.pom

Today trying to depend on such an artifact using `--packages` will print an error but continue without including the non-jar dependency. Doing the same using `spark.sql("ADD JAR ivy://org.apache.hive:hive-exec:2.3.8?exclude=org.pentaho:pentaho-aggdesigner-algorithm")` will cause a failure. Detailed stacktraces can be found in SPARK-34624.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added unit test. Retried the same example in `spark-shell` which produced the stacktrace in the JIRA.

Closes #31741 from shardulm94/add-jar-filter-poms.

Authored-by: Shardul Mahadik <smahadik@linkedin.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch proposes to mark indeterminate RDDs in the Web UI.

### Why are the changes needed?

It is somewhat hard to track which part of a graph of RDDs is indeterminate. In some cases we may need to track indeterminate RDDs, for example when an indeterminate map stage fails and Spark is unable to fall back to some parent stages. Developers are usually unable to easily identify the indeterminate part of a complicated RDD computation. If the Web UI can mark indeterminate RDDs the way it marks cached RDDs, it would be useful for tracking them.

### Does this PR introduce _any_ user-facing change?

Yes, there is a UI change for users.

### How was this patch tested?

Manual check with Web UI locally. Updated existing unit tests.

<img width="544" alt="Screen Shot 2021-03-02 at 12 38 02 AM" src="https://user-images.githubusercontent.com/68855/109621580-020bce80-7af0-11eb-834f-46b0f89d47c0.png">

<img width="390" alt="Screen Shot 2021-03-05 at 9 27 14 AM" src="https://user-images.githubusercontent.com/68855/110151181-04db1d80-7d95-11eb-8b3a-7235f7fe9eac.png">

Closes #31707 from viirya/SPARK-34592.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Add trailing slashes in URLs of Spark UI pages.

### Why are the changes needed?

When a user accesses a URL without a trailing slash, Spark UI always responds with a 302 redirect to a URL with a trailing slash.

Adding a trailing slash to URLs in UI pages reduces such unnecessary redirects.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manual test. It's a very simple change.

Closes #31753 from gengliangwang/reduceRedirect.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR intends to fix a bug in `objects.NewInstance` that occurs when a user runs Spark on JDK8u and the given `cls` in `NewInstance` is a deeply nested inner class, e.g.:

```

object OuterLevelWithVeryVeryVeryLongClassName1 {

object OuterLevelWithVeryVeryVeryLongClassName2 {

object OuterLevelWithVeryVeryVeryLongClassName3 {

object OuterLevelWithVeryVeryVeryLongClassName4 {

object OuterLevelWithVeryVeryVeryLongClassName5 {

object OuterLevelWithVeryVeryVeryLongClassName6 {

object OuterLevelWithVeryVeryVeryLongClassName7 {

object OuterLevelWithVeryVeryVeryLongClassName8 {

object OuterLevelWithVeryVeryVeryLongClassName9 {

object OuterLevelWithVeryVeryVeryLongClassName10 {

object OuterLevelWithVeryVeryVeryLongClassName11 {

object OuterLevelWithVeryVeryVeryLongClassName12 {

object OuterLevelWithVeryVeryVeryLongClassName13 {

object OuterLevelWithVeryVeryVeryLongClassName14 {

object OuterLevelWithVeryVeryVeryLongClassName15 {

object OuterLevelWithVeryVeryVeryLongClassName16 {

object OuterLevelWithVeryVeryVeryLongClassName17 {

object OuterLevelWithVeryVeryVeryLongClassName18 {

object OuterLevelWithVeryVeryVeryLongClassName19 {

object OuterLevelWithVeryVeryVeryLongClassName20 {

case class MalformedNameExample2(x: Int)

}}}}}}}}}}}}}}}}}}}}

```

The root cause that Kris (rednaxelafx) investigated is as follows (Kudos to Kris);

The reason why the test case above is so convoluted lies in the way Scala generates the class name for nested classes. In general, Scala generates a class name for a nested class by inserting a dollar sign ( `$` ) between each level of class nesting. The problem is that this format can concatenate into a very long string that goes beyond certain limits, so Scala changes the class name format beyond a certain length threshold.

For the example above, we can see that the first two levels of class nesting have class names that look like this:

```

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassName1$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassName1$OuterLevelWithVeryVeryVeryLongClassName2$

```

If we leave out the fact that Scala uses a dollar-sign ( `$` ) suffix for the class name of the companion object, `OuterLevelWithVeryVeryVeryLongClassName1`'s full name is a prefix (substring) of `OuterLevelWithVeryVeryVeryLongClassName2`.

But if we keep going deeper into the levels of nesting, you'll find names that look like:

```

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$2a1321b953c615695d7442b2adb1$$$$ryVeryLongClassName8$OuterLevelWithVeryVeryVeryLongClassName9$OuterLevelWithVeryVeryVeryLongClassName10$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$2a1321b953c615695d7442b2adb1$$$$ryVeryLongClassName8$OuterLevelWithVeryVeryVeryLongClassName9$OuterLevelWithVeryVeryVeryLongClassName10$OuterLevelWithVeryVeryVeryLongClassName11$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$85f068777e7ecf112afcbe997d461b$$$$VeryLongClassName11$OuterLevelWithVeryVeryVeryLongClassName12$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$85f068777e7ecf112afcbe997d461b$$$$VeryLongClassName11$OuterLevelWithVeryVeryVeryLongClassName12$OuterLevelWithVeryVeryVeryLongClassName13$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$85f068777e7ecf112afcbe997d461b$$$$VeryLongClassName11$OuterLevelWithVeryVeryVeryLongClassName12$OuterLevelWithVeryVeryVeryLongClassName13$OuterLevelWithVeryVeryVeryLongClassName14$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$5f7ad51804cb1be53938ea804699fa$$$$VeryLongClassName14$OuterLevelWithVeryVeryVeryLongClassName15$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$5f7ad51804cb1be53938ea804699fa$$$$VeryLongClassName14$OuterLevelWithVeryVeryVeryLongClassName15$OuterLevelWithVeryVeryVeryLongClassName16$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$5f7ad51804cb1be53938ea804699fa$$$$VeryLongClassName14$OuterLevelWithVeryVeryVeryLongClassName15$OuterLevelWithVeryVeryVeryLongClassName16$OuterLevelWithVeryVeryVeryLongClassName17$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$69b54f16b1965a31e88968df1a58d8$$$$VeryLongClassName17$OuterLevelWithVeryVeryVeryLongClassName18$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$69b54f16b1965a31e88968df1a58d8$$$$VeryLongClassName17$OuterLevelWithVeryVeryVeryLongClassName18$OuterLevelWithVeryVeryVeryLongClassName19$

org.apache.spark.sql.catalyst.encoders.ExpressionEncoderSuite$OuterLevelWithVeryVeryVeryLongClassNam$$$$69b54f16b1965a31e88968df1a58d8$$$$VeryLongClassName17$OuterLevelWithVeryVeryVeryLongClassName18$OuterLevelWithVeryVeryVeryLongClassName19$OuterLevelWithVeryVeryVeryLongClassName20$

```

with a hash code in the middle and various levels of nesting omitted.

The `java.lang.Class.isMemberClass` method is implemented in JDK8u as:

http://hg.openjdk.java.net/jdk8u/jdk8u/jdk/file/tip/src/share/classes/java/lang/Class.java#l1425

```
/**
 * Returns {@code true} if and only if the underlying class
 * is a member class.
 *
 * @return {@code true} if and only if this class is a member class.
 * @since 1.5
 */
public boolean isMemberClass() {
    return getSimpleBinaryName() != null && !isLocalOrAnonymousClass();
}

/**
 * Returns the "simple binary name" of the underlying class, i.e.,
 * the binary name without the leading enclosing class name.
 * Returns {@code null} if the underlying class is a top level
 * class.
 */
private String getSimpleBinaryName() {
    Class<?> enclosingClass = getEnclosingClass();
    if (enclosingClass == null) // top level class
        return null;
    // Otherwise, strip the enclosing class' name
    try {
        return getName().substring(enclosingClass.getName().length());
    } catch (IndexOutOfBoundsException ex) {
        throw new InternalError("Malformed class name", ex);
    }
}
```

and the problematic code is `getName().substring(enclosingClass.getName().length())` -- if a class's enclosing class's full name is *longer* than the nested class's full name, this logic would end up going out of bounds.
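
The failure mode can be reproduced without deeply nested classes by applying the same `substring` call to plain strings. This is only a minimal sketch: the class `SimpleBinaryNameDemo`, the helper `simpleBinaryName`, and the sample names are hypothetical stand-ins, not JDK or Spark code.

```java
public class SimpleBinaryNameDemo {
    // Mirrors the JDK 8 expression getName().substring(enclosingClass.getName().length()).
    // Both names are passed as plain strings here; the real method reads them from Class objects.
    static String simpleBinaryName(String enclosingName, String nestedName) {
        return nestedName.substring(enclosingName.length());
    }

    public static void main(String[] args) {
        // Usual case: the enclosing name is a prefix of the nested name.
        System.out.println(simpleBinaryName("pkg.Outer", "pkg.Outer$Inner"));

        // Hypothetical shortened name: the nested name is shorter than the enclosing one,
        // so the start index lies past the end of the string.
        try {
            simpleBinaryName("pkg.OuterWithAVeryLongName$Middle", "pkg.Outer$$$$abc$$$$Inner");
        } catch (IndexOutOfBoundsException ex) {
            // JDK 8 wraps this exception in InternalError("Malformed class name").
            System.out.println("out of bounds: " + ex.getMessage());
        }
    }
}
```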

The bug has been fixed in JDK9 by https://bugs.java.com/bugdatabase/view_bug.do?bug_id=8057919 , but still exists in the latest JDK8u release. So from the Spark side we'd need to do something to avoid hitting this problem.

### Why are the changes needed?

Bugfix on jdk8u.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added tests.

Closes #31733 from maropu/SPARK-34607.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Sort the "duration" column in the standalone-mode master UI by numeric duration, so that the column is ordered correctly.
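
To illustrate why sorting on the rendered text goes wrong, the sketch below compares a lexicographic sort of formatted durations with a sort on the underlying milliseconds. The `Row` class, its fields, and the sample values are hypothetical; the actual fix lives in the master UI page and is not reproduced here.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class DurationSortDemo {
    // Hypothetical table row: the text shown in the UI plus the raw duration in milliseconds.
    static final class Row {
        final String label;
        final long millis;
        Row(String label, long millis) { this.label = label; this.millis = millis; }
    }

    // Sorts by the displayed text, which compares characters rather than magnitudes.
    static List<String> sortByLabel(List<Row> rows) {
        List<Row> copy = new ArrayList<>(rows);
        copy.sort(Comparator.comparing((Row r) -> r.label));
        return labels(copy);
    }

    // Sorts by the numeric duration.
    static List<String> sortByMillis(List<Row> rows) {
        List<Row> copy = new ArrayList<>(rows);
        copy.sort(Comparator.comparingLong((Row r) -> r.millis));
        return labels(copy);
    }

    private static List<String> labels(List<Row> rows) {
        List<String> out = new ArrayList<>();
        for (Row r : rows) out.add(r.label);
        return out;
    }

    public static void main(String[] args) {
        List<Row> rows = new ArrayList<>();
        rows.add(new Row("2 s", 2_000L));
        rows.add(new Row("10 s", 10_000L));
        rows.add(new Row("1.5 min", 90_000L));
        System.out.println(sortByLabel(rows));   // text order
        System.out.println(sortByMillis(rows));  // numeric order
    }
}
```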

Before changes:

After changes:

### Why are the changes needed?

Fix a UI bug to make the sorting consistent across different pages.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Ran several apps with different durations and verified the duration column on the master page can be sorted correctly.

Closes #31743 from baohe-zhang/SPARK-32924.

Authored-by: Baohe Zhang <baohe.zhang@verizonmedia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

SPARK-33084 added the ability to use ivy coordinates with `SparkContext.addJar`. PR #29966 claims to mimic Hive behavior, although I found a few cases where it doesn't:

1) The default value of the `transitive` parameter is false, both when the parameter is not specified in the coordinate and when its value is invalid. In Hive, transitive is [true if not specified](cb2ac3dcc6/ql/src/java/org/apache/hadoop/hive/ql/util/DependencyResolver.java (L169)) in the coordinate and [false for invalid values](cb2ac3dcc6/ql/src/java/org/apache/hadoop/hive/ql/util/DependencyResolver.java (L124)). Regardless of Hive, I think a default of true for the transitive parameter also matches [ivy's own defaults](https://ant.apache.org/ivy/history/2.5.0/ivyfile/dependency.html#_attributes).

2) The value of the `transitive` parameter is treated as case-sensitive [based on the understanding](https://github.com/apache/spark/pull/29966#discussion_r547752259) that Hive behavior is case-sensitive. However, this is not correct: Hive [treats the parameter value case-insensitively](cb2ac3dcc6/ql/src/java/org/apache/hadoop/hive/ql/util/DependencyResolver.java (L122)).

I propose that we be compatible with Hive for these behaviors.

### Why are the changes needed?

To make `ADD JAR` with ivy coordinates compatible with Hive's transitive behavior.

### Does this PR introduce _any_ user-facing change?

The user-facing changes here are within master, as the feature introduced in SPARK-33084 has not been released yet:

1. Previously an ivy coordinate without `transitive` parameter specified did not resolve transitive dependency, now it does.

2. Previously a `transitive` parameter value was treated case-sensitively, e.g. `transitive=TRUE` would be treated as false because it did not exactly match `true`. Now it is treated case-insensitively.
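
The resulting rules for `transitive` can be summarized in a small sketch. The helper `parseTransitive` and the use of a plain `Map` for the coordinate's query parameters are assumptions made for illustration; this is not Spark's or Hive's actual parsing code.

```java
import java.util.HashMap;
import java.util.Map;

public class TransitiveParamDemo {
    // Hypothetical helper: params holds the query parameters of an ivy coordinate.
    static boolean parseTransitive(Map<String, String> params) {
        String value = params.get("transitive");
        if (value == null) {
            // Not specified: resolve transitive dependencies by default.
            return true;
        }
        // "true" in any letter case is accepted; every other value counts as false.
        return value.equalsIgnoreCase("true");
    }

    public static void main(String[] args) {
        Map<String, String> params = new HashMap<>();
        System.out.println(parseTransitive(params));   // parameter absent

        params.put("transitive", "TRUE");
        System.out.println(parseTransitive(params));   // upper-case value

        params.put("transitive", "foo");
        System.out.println(parseTransitive(params));   // invalid value
    }
}
```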

### How was this patch tested?

Modified existing unit tests to test the new behavior.

Added a new unit test to cover usage of `exclude` with unspecified `transitive`.

Closes #31623 from shardulm94/spark-34506.

Authored-by: Shardul Mahadik <smahadik@linkedin.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This is kind of a followup of https://github.com/apache/spark/pull/24033 and https://github.com/apache/spark/pull/30945.

Many of the references in `SecurityManager` were introduced in SPARK-1189, and the related usages were later removed in https://github.com/apache/spark/pull/24033 and https://github.com/apache/spark/pull/30945. This PR proposes to remove the leftover references.

### Why are the changes needed?

To improve code readability.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually compiled. GitHub Actions and Jenkins builds should test it as well.

Closes #31636 from HyukjinKwon/SPARK-34520.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The endpoints of Prometheus metrics are properly marked and documented as experimental (SPARK-31674). The class `PrometheusServlet` itself is not part of an API, so this PR proposes to remove the experimental marking from it.

### Why are the changes needed?

To avoid marking a non-API as an API.

### Does this PR introduce _any_ user-facing change?

No, the class is already `private[spark]`.

### How was this patch tested?

Existing tests should cover.

Closes #31640 from HyukjinKwon/SPARK-34531.

Lead-authored-by: HyukjinKwon <gurwls223@apache.org>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Use `ask` instead of `send` to sync `ExecutorStateChanged` between the Worker and the Master, retrying (up to 5 times) on failure until the message is successfully handled by the Master. The Worker exits if the message cannot be sent after 5 retries.
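
The retry policy can be sketched independently of Spark's RPC layer. In the sketch below, `sendWithRetry`, the `BooleanSupplier` standing in for one `ask` round trip, and the boolean return value are all hypothetical; the sketch also assumes five attempts in total, which is only one reading of "up to 5 times". The real Worker exits the process rather than returning `false`.

```java
import java.util.function.BooleanSupplier;

public class AskRetryDemo {
    // Assumed attempt limit, taken from the "up to 5 times" in the description.
    static final int MAX_ATTEMPTS = 5;

    // Returns true once an attempt is acknowledged, false after all attempts have failed.
    static boolean sendWithRetry(BooleanSupplier askOnce, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                if (askOnce.getAsBoolean()) {
                    return true;  // the receiver handled the message
                }
            } catch (RuntimeException e) {
                // Treat an exception (for example a transient network error) as a failed attempt.
            }
        }
        return false;  // the caller decides what to do next; the Worker described above exits
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated sender that fails twice before it succeeds.
        boolean ok = sendWithRetry(() -> ++calls[0] >= 3, MAX_ATTEMPTS);
        System.out.println(ok + " after " + calls[0] + " attempts");
    }
}
```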

### Why are the changes needed?

If the Worker fails to send `ExecutorStateChanged` to the Master due to an unexpected error, e.g., a temporary network error, the Master can't remove the finished executor normally and thinks the executor is still alive. In the worst case, if the executor is the only executor for the application, the application can hang.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass existing tests.

Closes #31348 from Ngone51/periodically-trigger-master-schedule.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Apache Spark 3.0 introduced `spark.eventLog.compression.codec` configuration.

For Apache Spark 3.2, this PR aims to set `zstd` as the default value for `spark.eventLog.compression.codec` configuration.

This only affects creating a new log file.

### Why are the changes needed?

The main purpose of event logs is archiving. Many logs are generated and occupy the storage, but most of them are never accessed by users.

**1. Save storage resources (and money)**

In general, ZSTD-compressed logs are much smaller than LZ4-compressed ones.

For example, for a TPCDS (Scale 200) log, ZSTD generates log files about 3 times smaller than LZ4.

| CODEC | SIZE (bytes) |
|-------|-------------:|
| LZ4   | 184001434    |
| ZSTD  | 64522396     |

The plain (uncompressed) file is 17.6 times bigger than the ZSTD file.

```

-rw-r--r-- 1 dongjoon staff 1135464691 Feb 21 22:31 spark-a1843ead29834f46b1125a03eca32679

-rw-r--r-- 1 dongjoon staff 64522396 Feb 21 22:31 spark-a1843ead29834f46b1125a03eca32679.zstd

```
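
The ratios quoted above follow directly from the listed byte counts. The short sketch below recomputes them; the class and method names are hypothetical and exist only for this check.

```java
public class CompressionRatioDemo {
    // Size ratio between two files, as a floating-point number.
    static double ratio(long larger, long smaller) {
        return (double) larger / smaller;
    }

    public static void main(String[] args) {
        long plain = 1135464691L;  // uncompressed event log, from the listing above
        long lz4 = 184001434L;     // LZ4 size from the table
        long zstd = 64522396L;     // ZSTD size from the table

        System.out.printf("LZ4 / ZSTD   = %.2f%n", ratio(lz4, zstd));
        System.out.printf("plain / ZSTD = %.2f%n", ratio(plain, zstd));
    }
}
```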

**2. Better Usability**

We cannot decompress Spark-generated LZ4 event log files via the CLI, while we can for ZSTD event log files. Spark's LZ4 event log files are inconvenient for users who want to decompress and access them.

```

$ lz4 -d spark-d3deba027bd34435ba849e14fc2c42ef.lz4

Decoding file spark-d3deba027bd34435ba849e14fc2c42ef

Error 44 : Unrecognized header : file cannot be decoded

```

```

$ zstd -d spark-a1843ead29834f46b1125a03eca32679.zstd

spark-a1843ead29834f46b1125a03eca32679.zstd: 1135464691 bytes

```

**3. Speed**

The following results are collected by running [lzbench](https://github.com/inikep/lzbench) on the above Spark event log. Note that

- This is not a direct comparison of Spark's compression/decompression codecs.

- `lzbench` is an in-memory benchmark, so it doesn't show the benefit of the reduced network traffic due to the smaller size of ZSTD output.

Here,