## What changes were proposed in this pull request?

When using older Spark releases, a use case generated a huge code-gen file that hit the limitation `Constant pool has grown past JVM limit of 0xFFFF`. In this situation, the application should fail immediately. However, the diagnostics message sent to the RM was too large, so the ApplicationMaster hung and the RM's ZKStateStore crashed. In Spark 2.3 and later this code-gen limitation has been removed, but other uncaught exceptions with oversized error messages may still cause the same problem.

This PR aims to cut down the diagnostics message size.
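A minimal sketch of the idea, in Python rather than the Scala the patch touches: cap the message before it is handed to the resource manager. The limit `MAX_DIAGNOSTICS_CHARS` and the helper `truncate_diagnostics` are hypothetical names; this description does not state the actual cap or where it is applied.

```python
# Hypothetical cap; the real limit used by the patch is not given here.
MAX_DIAGNOSTICS_CHARS = 64 * 1024


def truncate_diagnostics(message, limit=MAX_DIAGNOSTICS_CHARS):
    """Return message unchanged if it fits, else a prefix plus a marker."""
    if len(message) <= limit:
        return message
    marker = "... [truncated]"
    # Leave room for the marker so the result never exceeds the limit.
    keep = max(limit - len(marker), 0)
    return (message[:keep] + marker)[:limit]


report = truncate_diagnostics("x" * 1000, limit=100)
```

Whether to keep the head or the tail of an oversized message is a design choice; the sketch keeps the head only because it is the simplest variant.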

## How was this patch tested?

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22180 from yaooqinn/SPARK-25174.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When there are missing offsets, Kafka v2 source may return duplicated records when `failOnDataLoss=false` because it doesn't skip missing offsets.

This PR fixes the issue and also adds regression tests for all Kafka readers.
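The duplication can be illustrated with a small simulation. This is a sketch under stated assumptions, not the Kafka source code: `fetch` is a hypothetical stand-in for a consumer that returns the next available record at or after the requested offset, and the `skip_missing` flag switches between the buggy and the fixed way of advancing.

```python
import bisect


def fetch(log_offsets, requested):
    """Hypothetical poll: smallest available offset >= requested, or None."""
    i = bisect.bisect_left(log_offsets, requested)
    return log_offsets[i] if i < len(log_offsets) else None


def read_range(log_offsets, start, end, skip_missing):
    """Read offsets in [start, end) from a sorted list of available offsets."""
    out = []
    next_offset = start
    while next_offset < end:
        got = fetch(log_offsets, next_offset)
        if got is None or got >= end:
            break
        out.append(got)
        # Buggy variant: advance by one from the requested offset, so a gap
        # returns the same record again. Fixed variant: advance past the
        # record that was actually returned.
        next_offset = got + 1 if skip_missing else next_offset + 1
    return out


available = [0, 1, 4, 5]  # offsets 2 and 3 are missing
duplicated = read_range(available, 0, 6, skip_missing=False)
deduplicated = read_range(available, 0, 6, skip_missing=True)
```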

## How was this patch tested?

New tests.

Closes #22207 from zsxwing/SPARK-25214.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

When the memory configured through `yarn.nodemanager.resource.memory-mb` or `yarn.scheduler.maximum-allocation-mb` is insufficient, Spark always reports an error asking the user to adjust `yarn.scheduler.maximum-allocation-mb`, even though the message shows the memory value of the `yarn.nodemanager.resource.memory-mb` parameter. Because this error message is misleading, this PR changes it to match the executor memory validation message.

Definition of **yarn.nodemanager.resource.memory-mb:**

Amount of physical memory, in MB, that can be allocated for containers. It is the amount of memory YARN can use on this node, so this property should be lower than the total memory of that machine.

**yarn.scheduler.maximum-allocation-mb:**

It defines the maximum memory allocation available for a container, in MB. This means the RM can only allocate memory to containers in increments of `yarn.scheduler.minimum-allocation-mb`, may not exceed `yarn.scheduler.maximum-allocation-mb`, and the maximum should not be more than the total memory allocated to the node.
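The arithmetic described above can be sketched as follows. This is an illustration only: `normalize_request_mb` is a hypothetical helper, the rounding rule is taken from the definition above rather than from the YARN source, and the wording of the error is illustrative rather than the message Spark emits.

```python
def normalize_request_mb(requested_mb, min_alloc_mb, max_alloc_mb):
    """Round a request up to a multiple of the minimum, capped by the maximum."""
    if requested_mb > max_alloc_mb:
        # Illustrative wording: point the user at both settings.
        raise ValueError(
            "Required memory (%d MB) is above the max threshold (%d MB); "
            "check 'yarn.scheduler.maximum-allocation-mb' and/or "
            "'yarn.nodemanager.resource.memory-mb'" % (requested_mb, max_alloc_mb)
        )
    increments = max(-(-requested_mb // min_alloc_mb), 1)  # ceiling division
    return min(increments * min_alloc_mb, max_alloc_mb)


granted = normalize_request_mb(1500, min_alloc_mb=1024, max_alloc_mb=8192)
```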

## How was this patch tested?

Manually tested in an HDFS/YARN cluster.

Closes #22199 from sujith71955/maste_am_log.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR changes the generated code to refer to a `StructType` created in the Scala code instead of constructing the `StructType` in the generated Java code.

The original code has two issues that this change addresses:

1. It uses field names such as `key.name` in the generated code, which this PR avoids.

1. It does not support complicated schemas (e.g. nested DataTypes).

At first, [the JIRA entry](https://issues.apache.org/jira/browse/SPARK-25178) proposed to change the generated field name of the keySchema / valueSchema to a dummy name in `RowBasedHashMapGenerator` and `VectorizedHashMapGenerator.scala`. This proposal can address issue 1.

Ueshin suggested an approach that refers to a `StructType` created in the Scala code using `ctx.addReferenceObj()`. This approach addresses both issues 1 and 2, so this PR adopts it.
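The reference-object idea can be shown with a Python analogy. It is only an analogy: `add_reference_obj` below is a hypothetical helper that mimics the general pattern (store the object, return source text that retrieves it), not Spark's `ctx.addReferenceObj()` implementation.

```python
def add_reference_obj(references, obj):
    """Store obj and return source text that retrieves it at run time."""
    references.append(obj)
    return "references[%d]" % (len(references) - 1)


# A schema with an awkward field name and a nested type.
key_schema = [("key.name", "string"), ("payload", [("nested", "int")])]

references = []
accessor = add_reference_obj(references, key_schema)

# The generated code never spells out the schema, so neither the field
# names nor the nesting have to be expressible as generated source.
generated_src = "def get_key_schema(references):\n    return %s\n" % accessor
namespace = {}
exec(generated_src, namespace)
resolved = namespace["get_key_schema"](references)

# For comparison: the field name could not be used as an identifier.
name_is_identifier = "key.name".isidentifier()
```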

## How was this patch tested?

Existing UTs

Closes #22187 from kiszk/SPARK-25178.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Update to janino 3.0.9 to address Java 8 + Scala 2.12 incompatibility. The error manifests as test failures like this in `ExpressionEncoderSuite`:

```

- encode/decode for seq of string: List(abc, xyz) *** FAILED ***

java.lang.RuntimeException: Error while encoding: org.codehaus.janino.InternalCompilerException: failed to compile: org.codehaus.janino.InternalCompilerException: Compiling "GeneratedClass": Two non-abstract methods "public int scala.collection.TraversableOnce.size()" have the same parameter types, declaring type and return type

```

It comes up pretty immediately in any generated code that references Scala collections, and virtually always concerning the `size()` method.

## How was this patch tested?

Existing tests

Closes #22203 from srowen/SPARK-25029.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

(Link to Jira: https://issues.apache.org/jira/browse/SPARK-4502)

_N.B. This is a restart of PR #16578 which includes a subset of that code. Relevant review comments from that PR should be considered incorporated by reference. Please avoid duplication in review by reviewing that PR first. The summary below is an edited copy of the summary of the previous PR._

## What changes were proposed in this pull request?

One of the hallmarks of a column-oriented data storage format is the ability to read data from a subset of columns, efficiently skipping reads from other columns. Spark has long had support for pruning unneeded top-level schema fields from the scan of a parquet file. For example, consider a table, `contacts`, backed by parquet with the following Spark SQL schema:

```

root

|-- name: struct

| |-- first: string

| |-- last: string

|-- address: string

```

Parquet stores this table's data in three physical columns: `name.first`, `name.last` and `address`. To answer the query

```SQL

select address from contacts

```

Spark will read only from the `address` column of parquet data. However, to answer the query

```SQL

select name.first from contacts

```

Spark will read `name.first` and `name.last` from parquet.

This PR modifies Spark SQL to support finer-grained schema pruning. With this patch, Spark reads only the `name.first` column to answer the previous query.
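As a rough illustration of what pruning a nested schema means, the sketch below models the `contacts` schema as a nested Python dict and keeps only the requested field paths. The helpers `prune_schema` and `leaf_columns` are hypothetical and unrelated to the optimizer rule in this patch.

```python
import copy


def prune_schema(schema, required_paths):
    """Keep only the dotted field paths in required_paths."""
    pruned = {}
    for path in required_paths:
        src, dst = schema, pruned
        parts = path.split(".")
        for i, part in enumerate(parts):
            field = src[part]
            if i == len(parts) - 1 or not isinstance(field, dict):
                # End of the requested path: keep this field as a whole.
                dst[part] = copy.deepcopy(field)
                break
            dst = dst.setdefault(part, {})
            src = field
    return pruned


def leaf_columns(schema, prefix=""):
    """List the physical (leaf) columns of a nested schema."""
    cols = []
    for name, typ in schema.items():
        if isinstance(typ, dict):
            cols.extend(leaf_columns(typ, prefix + name + "."))
        else:
            cols.append(prefix + name)
    return cols


contacts = {"name": {"first": "string", "last": "string"}, "address": "string"}
pruned = prune_schema(contacts, ["name.first"])
columns_read = leaf_columns(pruned)
```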

### Implementation

There are two main components of this patch. First, there is a `ParquetSchemaPruning` optimizer rule for gathering the required schema fields of a `PhysicalOperation` over a parquet file, constructing a new schema based on those required fields and rewriting the plan in terms of that pruned schema. The pruned schema fields are pushed down to the parquet requested read schema. `ParquetSchemaPruning` uses a new `ProjectionOverSchema` extractor for rewriting a catalyst expression in terms of a pruned schema.

Second, the `ParquetRowConverter` has been patched to ensure the ordinals of the parquet columns read are correct for the pruned schema. `ParquetReadSupport` has been patched to address a compatibility mismatch between Spark's built in vectorized reader and the parquet-mr library's reader.

### Limitation

Among the complex Spark SQL data types, this patch supports parquet column pruning of nested sequences of struct fields only.

## How was this patch tested?

Care has been taken to ensure correctness and prevent regressions. A more advanced version of this patch incorporating optimizations for rewriting queries involving aggregations and joins has been running on a production Spark cluster at VideoAmp for several years. In that time, one bug was found and fixed early on, and we added a regression test for that bug.

We forward-ported this patch to Spark master in June 2016 and have been running this patch against Spark 2.x branches on ad-hoc clusters since then.

Closes #21320 from mallman/spark-4502-parquet_column_pruning-foundation.

Lead-authored-by: Michael Allman <msa@allman.ms>

Co-authored-by: Adam Jacques <adam@technowizardry.net>

Co-authored-by: Michael Allman <michael@videoamp.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Dataset.apply calls dataset.deserializer (to provide an early error) which ends up calling the full Analyzer on the deserializer. This can take tens of milliseconds, depending on how big the plan is.

Since `Dataset.apply` is called for many Dataset operations, such as `Dataset.where`, this can be a significant overhead for short queries.

According to a comment in the PR that introduced this check, we can at least remove this check for DataFrames: https://github.com/apache/spark/pull/20402#discussion_r164338267

## How was this patch tested?

Existing tests + manual benchmark

Author: Bogdan Raducanu <bogdan@databricks.com>

Closes #22201 from bogdanrdc/deserializer-fix.

## What changes were proposed in this pull request?

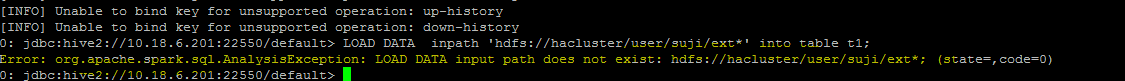

**Problem statement**

The `LOAD DATA` command does not work when the HDFS file path contains wildcard characters such as `*`.

For example:

"load data inpath 'hdfs://hacluster/user/ext*' into table t1"

throws an `AnalysisException` when the query is executed.

**Analysis -**

Currently, the `fs.exists()` API used for path validation in the load command cannot resolve paths that contain a wildcard pattern. To fix this, this PR uses the `globStatus()` API, which can resolve paths with the wildcards HDFS supports, such as `*` and `?` (in line with Hive's wildcard support).

**Improvement identified as part of this issue -**

Currently the system does not support wildcard characters in a folder-level path on the local file system. This PR handles this scenario: the same `globStatus` API unifies the validation logic for local and non-local file systems, which keeps the load command's behavior consistent between HDFS and local file paths.

With this improvement, users can use wildcard characters in a folder-level path on the local file system in the load command, in line with Hive's behavior. In older versions, wildcards could be used only in the file part of a local path; using them in a folder path produced an error saying this was not supported.

For example: `load data local inpath '/localfilesystem/folder*' into table t1`
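The difference between an existence check and glob resolution can be reproduced with the Python standard library. This is an analogy on a temporary local directory, not the Hadoop `FileSystem` API: `os.path.exists` plays the role of `fs.exists()` and `glob.glob` the role of `globStatus()`. The directory and file names are borrowed from the test results below.

```python
import glob
import os
import tempfile

root = tempfile.mkdtemp()
for folder in ("sujith1", "sujith2"):
    os.makedirs(os.path.join(root, folder))
    for name in ("typeddata60.txt", "typeddata61.txt"):
        open(os.path.join(root, folder, name), "w").close()

pattern = os.path.join(root, "suji*", "*")

# An existence check treats '*' as a literal character and finds nothing.
exists_literal = os.path.exists(pattern)

# Glob resolution expands the wildcard at folder level and at file level.
matches = sorted(glob.glob(pattern))

# A pattern that matches nothing resolves to an empty result, which is the
# case a loader has to report as "input path does not exist".
no_matches = glob.glob(os.path.join(root, "sujiinvalid*", "*"))
```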

## How was this patch tested?

a) Manually tested by executing test cases on an HDFS YARN cluster. The results are attached in the section below.

b) Existing test cases verify the impact and functionality for local file path scenarios.

c) A test case has been added to verify the functionality when a wildcard is used in a folder-level path on the local file system.

## Test Results

Note: all IP addresses were replaced with localhost for security reasons.

HDFS path details

```

vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith1

Found 2 items

-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith1/typeddata60.txt

-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith1/typeddata61.txt

vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith2

Found 2 items

-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith2/typeddata60.txt

-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith2/typeddata61.txt

```

Positive scenario: specifying the complete file path to establish the record count

```

0: jdbc:hive2://localhost:22550/default> create table wild_spark (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.217 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata60.txt' into table wild_spark;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (4.236 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata61.txt' into table wild_spark;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.602 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from wild_spark;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

1 row selected (18.529 seconds)

0: jdbc:hive2://localhost:22550/default>

```

With wild card character in file path

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.409 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/type*' into table spark_withWildChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.502 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

```

with ? wild card scenario

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_DiffChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.489 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata60.txt' into table spark_withWildChar_DiffChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.152 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata61.txt' into table spark_withWildChar_DiffChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.644 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_DiffChar;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

1 row selected (16.078 seconds)

```

with folder level wild card scenario

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_folderlevel (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.489 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/suji*/*' into table spark_withWildChar_folderlevel;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.152 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_folderlevel;

+-----------+--+

| count(1) |

+-----------+--+

| 242 |

+-----------+--+

1 row selected (16.078 seconds)

```

Negative scenario invalid path

```

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujiinvalid*/*' into table spark_withWildChar_folder;

Error: org.apache.spark.sql.AnalysisException: LOAD DATA input path does not exist: /user/data/sujiinvalid*/*; (state=,code=0)

0: jdbc:hive2://localhost:22550/default>

```

Hive Test results- file level

```

0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_files (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;

No rows affected (0.723 seconds)

0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/sujith1/type*' into table hive_withWildChar_files;

INFO : Loading data to table default.hive_withwildchar_files from hdfs://hacluster/user/sujith1/type*

No rows affected (0.682 seconds)

0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_files;

+------+--+

| _c0 |

+------+--+

| 121 |

+------+--+

1 row selected (50.832 seconds)

```

Hive Test results- folder level

```

0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_folder (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;

No rows affected (0.459 seconds)

0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/suji*/*' into table hive_withWildChar_folder;

INFO : Loading data to table default.hive_withwildchar_folder from hdfs://hacluster/user/data/suji*/*

No rows affected (0.76 seconds)

0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_folder;

+------+--+

| _c0 |

+------+--+

| 242 |

+------+--+

1 row selected (46.483 seconds)

```

Closes #20611 from sujith71955/master_wldcardsupport.

Lead-authored-by: s71955 <sujithchacko.2010@gmail.com>

Co-authored-by: sujith71955 <sujithchacko.2010@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In feature.py, `VectorSizeHint`'s `setSize` and `getSize` don't return a value. This PR adds the missing `return` statements.
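The effect of a missing `return` is easy to reproduce. The class below is a hypothetical stand-in, not the pyspark `VectorSizeHint`; it only shows why a setter that returns nothing breaks call chaining and why a getter needs to return the stored value.

```python
class SizeHint(object):
    """Hypothetical stand-in for a Params-style transformer."""

    def __init__(self):
        self._size = None

    def set_size_buggy(self, value):
        self._size = value  # no return: the caller receives None

    def set_size(self, value):
        self._size = value
        return self  # returning self allows chained calls

    def get_size(self):
        return self._size


buggy_result = SizeHint().set_size_buggy(3)
chained_size = SizeHint().set_size(3).get_size()
```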

## How was this patch tested?

I tested the changes locally.

Closes #22136 from huaxingao/spark-25124.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Joseph K. Bradley <joseph@databricks.com>

## What changes were proposed in this pull request?

Fix a race in the rate source tests. We need a better way of testing restart behavior.

## How was this patch tested?

unit test

Closes #22191 from jose-torres/racetest.

Authored-by: Jose Torres <torres.joseph.f+github@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

Casting to `DecimalType` does not always need to force the result to be nullable.

If the target decimal type is wider than the original type, or the cast only truncates or loses precision, the cast value won't be `null`.
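One way to reason about this is in terms of integer digits (precision minus scale). The function below is a sketch of that reasoning, not a copy of the rule implemented in Spark: it assumes half-up rounding when the scale shrinks, in which case one spare integer digit is needed to absorb a carry such as 999.99 becoming 1000.0.

```python
from decimal import Decimal, ROUND_HALF_UP


def cast_may_produce_null(from_precision, from_scale, to_precision, to_scale):
    """True if decimal(p1, s1) -> decimal(p2, s2) can overflow (yield null)."""
    from_int = from_precision - from_scale
    to_int = to_precision - to_scale
    if to_int >= from_int and to_scale >= from_scale:
        return False  # target is at least as wide on both sides
    # Scale shrinks (or integer digits shrink): rounding may carry into a new
    # integer digit, so the target needs strictly more integer digits.
    return to_int <= from_int


# The carry that motivates the strict comparison.
carried = Decimal("999.99").quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)
```

Under this reasoning, `decimal(5,2)` to `decimal(5,1)` is safe (four integer digits are available), while `decimal(5,2)` to `decimal(4,1)` is not.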

## How was this patch tested?

Added and modified tests.

Closes #22200 from ueshin/issues/SPARK-25208/cast_nullable_decimal.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

[SPARK-25126](https://issues.apache.org/jira/browse/SPARK-25126) reports that loading a large number of ORC files consumes a lot of memory in both 2.0 and 2.3. The issue is caused by creating a Reader for every ORC file in order to infer the schema.

In `OrcFileOperator.readSchema`, a Reader is created for every file although only the first valid one is used. This uses a significant amount of memory when `paths` contains a lot of files. In 2.3, a different code path (`OrcUtils.readSchema`) is used for inferring the schema of ORC files. This commit changes both functions to create the Reader lazily.
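The eager-versus-lazy difference can be sketched with a Python generator. Everything here is hypothetical: `open_reader` stands in for creating an ORC Reader, and files whose name starts with `empty` are assumed to have no usable schema.

```python
def open_reader(path, log):
    """Hypothetical reader factory; records every reader it creates."""
    log.append(path)
    if path.startswith("empty"):
        return None  # assumed: no usable schema in this file
    return {"path": path, "schema": "struct<id:int>"}


def read_schema_eager(paths, log):
    readers = [open_reader(p, log) for p in paths]  # one reader per file
    return next((r["schema"] for r in readers if r), None)


def read_schema_lazy(paths, log):
    readers = (open_reader(p, log) for p in paths)  # nothing opened yet
    # next() pulls readers one at a time and stops at the first valid one.
    return next((r["schema"] for r in readers if r), None)


paths = ["empty-0.orc", "part-1.orc", "part-2.orc", "part-3.orc"]
eager_log, lazy_log = [], []
eager_schema = read_schema_eager(paths, eager_log)
lazy_schema = read_schema_lazy(paths, lazy_log)
```

Both variants return the same schema; only the number of readers created differs.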

## How was this patch tested?

Pass the Jenkins with a newly added test case by dongjoon-hyun

Closes #22157 from raofu/SPARK-25126.

Lead-authored-by: Rao Fu <rao@coupang.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Co-authored-by: Rao Fu <raofu04@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/21838, the classes `AvroDataToCatalyst` and `CatalystDataToAvro` were put in package `org.apache.spark.sql`.

They should be moved to package `org.apache.spark.sql.avro`.

This PR also optimizes imports in the Avro module.

## How was this patch tested?

Unit test

Closes #22196 from gengliangwang/avro_revise_package_name.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

VectorizedParquetRecordReader::initializeInternal rebuilds the column list and path list once for each column. Therefore, it indirectly iterates 2\*colCount\*colCount times for each parquet file.

This inefficiency impacts jobs that read parquet-backed tables with many columns and many files. Jobs that read tables with few columns or few files are not impacted.

This PR changes initializeInternal so that it builds each list only once.
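The cost difference can be made concrete by counting list elements built. This is a schematic model, not the `VectorizedParquetRecordReader` code: `init_rebuilding` rebuilds both lists inside the per-column loop, and `init_once` hoists them out of the loop.

```python
def init_rebuilding(column_names):
    """Rebuild both lists for every column; returns elements built."""
    built = 0
    for i in range(len(column_names)):
        columns = list(column_names)
        paths = [(name,) for name in column_names]
        built += len(columns) + len(paths)
        _ = (columns[i], paths[i])  # per-column work uses one entry of each
    return built


def init_once(column_names):
    """Build each list once, then do the same per-column work."""
    columns = list(column_names)
    paths = [(name,) for name in column_names]
    built = len(columns) + len(paths)
    for i in range(len(column_names)):
        _ = (columns[i], paths[i])
    return built


names = ["c%d" % i for i in range(600)]
quadratic = init_rebuilding(names)  # 2 * colCount * colCount
linear = init_once(names)           # 2 * colCount
```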

I ran benchmarks on my laptop with 1 worker thread, running this query:

<pre>

sql("select * from parquet_backed_table where id1 = 1").collect

</pre>

There is roughly one matching row for every 425 rows, and the matching rows are sprinkled pretty evenly throughout the table (that is, every page for column <code>id1</code> has at least one matching row).

6000 columns, 1 million rows, 67 32M files:

master | branch | improvement
-------|---------|-----------
10.87 min | 6.09 min | 44%

6000 columns, 1 million rows, 23 98M files:

master | branch | improvement
-------|---------|-----------
7.39 min | 5.80 min | 21%

600 columns, 10 million rows, 67 32M files:

master | branch | improvement
-------|---------|-----------
1.95 min | 1.96 min | -0.5%

60 columns, 100 million rows, 67 32M files:

master | branch | improvement
-------|---------|-----------
0.55 min | 0.55 min | 0%

## How was this patch tested?

- sql unit tests

- pyspark-sql tests

Closes #22188 from bersprockets/SPARK-25164.

Authored-by: Bruce Robbins <bersprockets@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to show RDD/relation names in RDD/Hive table scan nodes.

With this change, these names show up in the web UI and in explain results.

For example:

```

scala> sql("CREATE TABLE t(c1 int) USING hive")

scala> sql("INSERT INTO t VALUES(1)")

scala> spark.table("t").explain()

== Physical Plan ==

Scan hive default.t [c1#8], HiveTableRelation `default`.`t`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [c1#8]

^^^^^^^^^^^

```

<img width="212" alt="spark-pr-hive" src="https://user-images.githubusercontent.com/692303/44501013-51264c80-a6c6-11e8-94f8-0704aee83bb6.png">

Closes #20226

## How was this patch tested?

Added tests in `DataFrameSuite`, `DatasetSuite`, and `HiveExplainSuite`

Closes #22153 from maropu/pr20226.

Lead-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Co-authored-by: Tejas Patil <tejasp@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up to #22031, which added the `zip_with` function. It fixes an example.

## How was this patch tested?

Existing tests.

Closes #22194 from ueshin/issues/SPARK-23932/fix_examples.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

A few SQL tests for R were failing in my development environment. This PR attempts to address some of them. The reasons for the failures are listed below.

- The catalog API tests assume that catalog artifacts named "foo" do not exist. Names such as foo and bar are common, and I use them frequently. I have changed the name to a string that I hope is less likely to collide.

- One test assumes that there is only one database in the system. I had more than one, which caused the test to fail. I have changed that check.

- One more test, which compares two timestamp values, fails. I am debugging this now and may send a fix as a follow-up.

## How was this patch tested?

It's a test fix.

Closes #22161 from dilipbiswal/r-sql-test-fix1.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

They depend on internal Expression APIs. Let's see how far we can get without it.

## How was this patch tested?

Just some code removal. There are no existing tests as far as I can tell, so it's easy to remove.

Closes #22185 from rxin/SPARK-25127.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

After https://github.com/apache/spark/pull/21495 the welcome message is printed first, and then the Scala prompt is shown before the Spark UI info is printed.

Although it's a minor issue, visually it doesn't look as nice as the existing behavior. This PR intends to fix it by duplicating the Scala `process` code to arrange the printing order. However, one variable is private, so reflection has to be used, which is not desirable.

We can use this PR to brainstorm how to handle it properly and how Scala can change its APIs to fit our needs.

## How was this patch tested?

Existing test

Closes #21749 from dbtsai/repl-followup.

Authored-by: DB Tsai <d_tsai@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

`ExternalAppendOnlyMapSuiteCheck` test is flaky.

We use a `SparkListener` to collect spill metrics of completed stages. `withListener` runs the code that does the spill. The spill status was checked after that code finished, but still inside `withListener`. At that point, possibly not all events posted to the listener bus had been processed.

We should check spill status after all events are processed.
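The ordering requirement can be sketched with a queue and a consumer thread. This is an analogy, not Spark's listener bus: `events.join()` stands in for whatever mechanism waits until the bus has drained, and the stage ids are made up.

```python
import queue
import threading

events = queue.Queue()
spilled_stages = []  # what the "listener" has recorded so far


def listener_loop():
    while True:
        event = events.get()
        if event is None:  # sentinel: stop the listener thread
            events.task_done()
            break
        spilled_stages.append(event)
        events.task_done()  # mark the event as fully processed


listener = threading.Thread(target=listener_loop, daemon=True)
listener.start()

for stage_id in range(5):
    events.put(stage_id)

# Checking spilled_stages right here would race with the listener thread.
events.join()  # block until every posted event has been processed
observed = list(spilled_stages)

events.put(None)
listener.join()
```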

## How was this patch tested?

Locally ran unit tests.

Closes #22181 from viirya/SPARK-25163.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

The race condition that caused test failure is between 2 threads.

- The MicroBatchExecution thread, which processes inputs to produce answers and then generates progress events.

- The test thread, which generates some input data, checks the answer, and then verifies that the query generated a progress event.

The synchronization structure between these threads is as follows:

1. The MicroBatchExecution thread, in every batch, does the following in order.

   a. Processes the batch input to generate the answer.

   b. Signals `awaitProgressLockCondition` to wake up threads waiting for progress using `awaitOffset`.

   c. Generates the progress event.

2. The test execution thread does the following.

   a. Calls `awaitOffset` to wait for progress, which waits on `awaitProgressLockCondition`.

   b. As soon as `awaitProgressLockCondition` is signaled, it moves on in the test to check the answer.

   c. Finally, it verifies the last generated progress event.

What can happen is the following sequence of events: 2a -> 1a -> 1b -> 2b -> 2c -> 1c.

In other words, the progress event may be generated after the test tries to verify it.

The solution has two steps.

1. Signal the waiting thread after the progress event has been generated, that is, after `finishTrigger()`.

2. Increase the timeout of `awaitProgressLockCondition.await(100 ms)` to a large value.

The latter ensures that the test thread keeps waiting on `awaitProgressLockCondition` until the MicroBatchExecution thread explicitly signals it. With the existing small timeout of 100 ms, the following sequence can occur.

- MicroBatchExecution thread updates committed offsets

- Test thread waiting on `awaitProgressLockCondition` accidentally times out after 100 ms, finds that the committed offsets have been updated, therefore returns from `awaitOffset` and moves on to the progress event tests.

- MicroBatchExecution thread then generates progress event and signals. But the test thread has already attempted to verify the event and failed.

By increasing the timeout to a large value (e.g., `streamingTimeoutMs = 60 seconds`, similar to `awaitInitialization`), this type of race condition is also avoided.
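The two-step fix can be sketched with a condition variable. This is a simplified model and not the streaming engine's code: the names `run_batch` and `await_offset` are hypothetical, and the sketch holds the lock across both the offset commit and the progress event, which is stricter than the original description requires.

```python
import threading

progress_cond = threading.Condition(threading.Lock())
state = {"committed": -1, "progress_events": []}


def run_batch(batch_id):
    with progress_cond:
        state["committed"] = batch_id              # process batch, commit
        state["progress_events"].append(batch_id)  # generate progress event
        progress_cond.notify_all()                 # signal only after both


def await_offset(batch_id, timeout_s=60.0):
    """Wait with a generous timeout until the batch has been committed."""
    with progress_cond:
        return progress_cond.wait_for(
            lambda: state["committed"] >= batch_id, timeout=timeout_s
        )


worker = threading.Thread(target=run_batch, args=(0,))
worker.start()
reached = await_offset(0)
with progress_cond:
    events_seen = list(state["progress_events"])
worker.join()
```

Because the signal is sent after the progress event exists, a woken waiter always finds the event it is about to verify.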

## How was this patch tested?

Ran locally many times.

Closes #22182 from tdas/SPARK-25184.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

Limit Thread Pool size in BlockManager Master and Slave endpoints.

Currently, BlockManagerMasterEndpoint and BlockManagerSlaveEndpoint both have thread pools with nearly unbounded (Integer.MAX_VALUE) numbers of threads. In certain cases, this can lead to driver OOM errors. This change limits the thread pools to 100 threads; this should not break any existing behavior because any tasks beyond that number will get queued.
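The queuing behavior of a bounded pool can be demonstrated with Python's `concurrent.futures`. This is an analogy, not the Scala thread pool used by the endpoints; the cap of 4 and the task body are arbitrary values chosen for the demonstration.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_THREADS = 4  # arbitrary cap for the demo; the patch uses 100
stats = {"active": 0, "peak": 0}
guard = threading.Lock()


def task(i):
    with guard:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
    time.sleep(0.01)  # simulate some work
    with guard:
        stats["active"] -= 1
    return i * i


# Twenty tasks are submitted, but at most MAX_THREADS run at once; the
# rest wait in the executor's queue and still complete.
with ThreadPoolExecutor(max_workers=MAX_THREADS) as pool:
    results = list(pool.map(task, range(20)))
```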

## How was this patch tested?

Manual testing

Closes #22176 from mukulmurthy/25181-threads.

Authored-by: Mukul Murthy <mukul.murthy@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

Include `PandasUDFType` in the names exported by `from pyspark.sql.functions import *`.
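The mechanism can be reproduced without pyspark, assuming the module controls its star-import surface through `__all__`. The module names `fake_functions_before` and `fake_functions_after` and the helpers below are hypothetical; the attribute value is a placeholder.

```python
import sys
import types


def make_module(name, export_udf_type):
    """Register a throwaway module whose __all__ may omit PandasUDFType."""
    mod = types.ModuleType(name)
    mod.pandas_udf = lambda f, *args: f
    mod.PandasUDFType = type("PandasUDFType", (), {"GROUPED_MAP": "grouped_map"})
    mod.__all__ = ["pandas_udf"] + (["PandasUDFType"] if export_udf_type else [])
    sys.modules[name] = mod
    return mod


def star_import(name):
    """Run 'from <name> import *' in a fresh namespace and return it."""
    namespace = {}
    exec("from %s import *" % name, namespace)
    return namespace


make_module("fake_functions_before", export_udf_type=False)
make_module("fake_functions_after", export_udf_type=True)
before = star_import("fake_functions_before")
after = star_import("fake_functions_after")
```

A name that exists in the module but is missing from `__all__` is skipped by the star import, which is what produces the `NameError` in the shell.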

## How was this patch tested?

Run the test case from the pyspark shell from the jira [spark-25105](https://jira.apache.org/jira/browse/SPARK-25105?jql=project%20%3D%20SPARK%20AND%20component%20in%20(ML%2C%20PySpark%2C%20SQL%2C%20%22Structured%20Streaming%22))

I manually tested in the pyspark shell:

before:

```
>>> from pyspark.sql.functions import *
>>> foo = pandas_udf(lambda x: x, 'v int', PandasUDFType.GROUPED_MAP)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
NameError: name 'PandasUDFType' is not defined
>>>
```

after:

```
>>> from pyspark.sql.functions import *
>>> foo = pandas_udf(lambda x: x, 'v int', PandasUDFType.GROUPED_MAP)
>>>
```

Closes #22100 from kevinyu98/spark-25105.

Authored-by: Kevin Yu <qyu@us.ibm.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Fix issues arising from the fact that the builtins `file`, `long`, `raw_input()`, `unicode`, `xrange()`, etc. were all removed in Python 3. **Undefined names** have the potential to raise [NameError](https://docs.python.org/3/library/exceptions.html#NameError) at runtime.
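One common way to keep such code working on both major versions is to alias the removed names when running on Python 3. This is a sketch of that general pattern, not necessarily the exact change made in each file touched by this PR.

```python
import sys

if sys.version_info[0] >= 3:
    # These builtins no longer exist in Python 3; bind the closest
    # equivalents so that code written for Python 2 keeps working.
    long = int
    unicode = str
    xrange = range
    raw_input = input

intlike = (int, long)
is_intlike = isinstance(5, intlike)
squares = [i * i for i in xrange(4)]
is_text = isinstance(unicode("abc"), str)
```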

## How was this patch tested?

* `$ python2 -m flake8 . --count --select=E9,F82 --show-source --statistics`

* `$ python3 -m flake8 . --count --select=E9,F82 --show-source --statistics`

holdenk

flake8 testing of https://github.com/apache/spark on Python 3.6.3

`$ python3 -m flake8 . --count --select=E901,E999,F821,F822,F823 --show-source --statistics`

```

./dev/merge_spark_pr.py:98:14: F821 undefined name 'raw_input'

result = raw_input("\n%s (y/n): " % prompt)

^

./dev/merge_spark_pr.py:136:22: F821 undefined name 'raw_input'

primary_author = raw_input(

^

./dev/merge_spark_pr.py:186:16: F821 undefined name 'raw_input'

pick_ref = raw_input("Enter a branch name [%s]: " % default_branch)

^

./dev/merge_spark_pr.py:233:15: F821 undefined name 'raw_input'

jira_id = raw_input("Enter a JIRA id [%s]: " % default_jira_id)

^

./dev/merge_spark_pr.py:278:20: F821 undefined name 'raw_input'

fix_versions = raw_input("Enter comma-separated fix version(s) [%s]: " % default_fix_versions)

^

./dev/merge_spark_pr.py:317:28: F821 undefined name 'raw_input'

raw_assignee = raw_input(

^

./dev/merge_spark_pr.py:430:14: F821 undefined name 'raw_input'

pr_num = raw_input("Which pull request would you like to merge? (e.g. 34): ")

^

./dev/merge_spark_pr.py:442:18: F821 undefined name 'raw_input'

result = raw_input("Would you like to use the modified title? (y/n): ")

^

./dev/merge_spark_pr.py:493:11: F821 undefined name 'raw_input'

while raw_input("\n%s (y/n): " % pick_prompt).lower() == "y":

^

./dev/create-release/releaseutils.py:58:16: F821 undefined name 'raw_input'

response = raw_input("%s [y/n]: " % msg)

^

./dev/create-release/releaseutils.py:152:38: F821 undefined name 'unicode'

author = unidecode.unidecode(unicode(author, "UTF-8")).strip()

^

./python/setup.py:37:11: F821 undefined name '__version__'

VERSION = __version__

^

./python/pyspark/cloudpickle.py:275:18: F821 undefined name 'buffer'

dispatch[buffer] = save_buffer

^

./python/pyspark/cloudpickle.py:807:18: F821 undefined name 'file'

dispatch[file] = save_file

^

./python/pyspark/sql/conf.py:61:61: F821 undefined name 'unicode'

if not isinstance(obj, str) and not isinstance(obj, unicode):

^

./python/pyspark/sql/streaming.py:25:21: F821 undefined name 'long'

intlike = (int, long)

^

./python/pyspark/streaming/dstream.py:405:35: F821 undefined name 'long'

return self._sc._jvm.Time(long(timestamp * 1000))

^

./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:21:10: F821 undefined name 'xrange'

for i in xrange(50):

^

./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:22:14: F821 undefined name 'xrange'

for j in xrange(5):

^

./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:23:18: F821 undefined name 'xrange'

for k in xrange(20022):

^

20 F821 undefined name 'raw_input'

20

```
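The undefined names reported above are Python 2 builtins that no longer exist in Python 3. One common way to resolve such reports is a small compatibility shim. The sketch below only illustrates that general technique and is not necessarily how this PR changes each file; the helper `to_millis` is a hypothetical example modelled on the `dstream.py` line in the report.

```python
import sys

# Python 2 builtins that flake8 reports as undefined under Python 3.
# On Python 3, alias each one to its closest equivalent.
if sys.version_info[0] >= 3:
    raw_input = input   # raw_input() was renamed to input()
    unicode = str       # str is already a Unicode type
    long = int          # int has arbitrary precision
    xrange = range      # range is already lazy


def to_millis(timestamp):
    """Convert seconds to whole milliseconds (hypothetical helper)."""
    return long(timestamp * 1000)
```

With the aliases in place, code such as `for i in xrange(50)` or `isinstance(obj, unicode)` resolves on both major Python versions.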

Closes#20838 from cclauss/fix-undefined-names.

Authored-by: cclauss <cclauss@bluewin.ch>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Improve the data source v2 API according to the [design doc](https://docs.google.com/document/d/1DDXCTCrup4bKWByTalkXWgavcPdvur8a4eEu8x1BzPM/edit?usp=sharing)

Summary of the changes:

1. rename `ReadSupport` -> `DataSourceReader` -> `InputPartition` -> `InputPartitionReader` to `BatchReadSupportProvider` -> `BatchReadSupport` -> `InputPartition`/`PartitionReaderFactory` -> `PartitionReader`. Similar renaming also happens at streaming and write APIs.

2. create `ScanConfig` to store query-specific information like the operator pushdown result, streaming offsets, etc. This makes batch and streaming `ReadSupport` (previously named `DataSourceReader`) immutable. All other methods take `ScanConfig` as input, which implies that applying operator pushdown and getting streaming offsets happen before everything else (getting input partitions, reporting statistics, etc.).

3. separate `InputPartition` into `InputPartition` and `PartitionReaderFactory`. This is a natural separation: data splitting and reading are orthogonal and we should not mix them in one interface. This also makes the naming consistent between the read and write APIs: `PartitionReaderFactory` vs `DataWriterFactory`.

4. separate the batch and streaming interfaces. Sometimes it's painful to force the streaming interface to extend the batch interface, as we may need to override some batch methods to return false, or even leak the streaming concept into the batch API (e.g. `DataWriterFactory#createWriter(partitionId, taskId, epochId)`).
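The separation described in items 2 and 3 can be sketched in a few lines. This is a loose Python model, not the actual Java interfaces: the class names follow the PR description, while the method names, fields, and the toy data are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass(frozen=True)
class ScanConfig:
    """Query-specific state, e.g. the result of operator pushdown."""
    required_columns: Tuple[str, ...]


@dataclass(frozen=True)
class InputPartition:
    """Describes one split of the data; it does not know how to read it."""
    start: int
    end: int


class PartitionReaderFactory:
    """Creates readers for partitions, separate from the splitting logic."""

    def __init__(self, config: ScanConfig):
        self._config = config

    def create_reader(self, partition: InputPartition) -> Iterator[dict]:
        # Toy data source: rows are generated from the partition range.
        for i in range(partition.start, partition.end):
            row = {"id": i, "square": i * i}
            yield {c: row[c] for c in self._config.required_columns}


class BatchReadSupport:
    """Holds no per-query state; every method takes the ScanConfig as input."""

    def plan_input_partitions(self, config: ScanConfig) -> List[InputPartition]:
        return [InputPartition(0, 3), InputPartition(3, 5)]

    def create_reader_factory(self, config: ScanConfig) -> PartitionReaderFactory:
        return PartitionReaderFactory(config)


def scan(source: BatchReadSupport, config: ScanConfig) -> List[dict]:
    """Plan the partitions, then read each one through the factory."""
    factory = source.create_reader_factory(config)
    rows = []
    for partition in source.plan_input_partitions(config):
        rows.extend(factory.create_reader(partition))
    return rows
```

Because the read support object keeps no query state, the same instance can serve scans with different `ScanConfig` values.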

Some follow-ups we should do after this PR (tracked by https://issues.apache.org/jira/browse/SPARK-25186 ):

1. Revisit the life cycle of `ReadSupport` instances. Currently I keep it the same as the previous `DataSourceReader`, i.e. the life cycle is bound to the batch/stream query. This fits streaming very well but may not be perfect for batch sources. We can also consider letting `ReadSupport.newScanConfigBuilder` take `DataSourceOptions` as a parameter, if we decide to change the life cycle.

2. Add `WriteConfig`. This is similar to `ScanConfig` and makes the write API more flexible. But it's only needed when we add the `replaceWhere` support, and it needs to change the streaming execution engine for this new concept, which I think is better to be done in another PR.

3. Refine the documentation. This PR adds/changes a lot of documentation and it's very likely that some people will have better ideas.

4. Figure out the life cycle of `CustomMetrics`. It looks to me that it should be bound to a `ScanConfig`, but we need to change `ProgressReporter` to get the `ScanConfig`. Better to be done in another PR.

5. Better operator pushdown API. This PR keeps the pushdown API as it was, i.e. using the `SupportsPushdownXYZ` traits. We can design a better API using the builder pattern, but this is a complicated design and deserves an individual JIRA ticket and design doc.

6. Improve the continuous streaming engine to only create a new `ScanConfig` when re-configuring.

7. Remove `SupportsPushdownCatalystFilter`. This is actually not a must-have for file source, we can change the hive partition pruning to use the public `Filter`.

## How was this patch tested?

existing tests.

Closes#22009 from cloud-fan/redesign.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The PR moves the compilation of the regexp for code formatting outside the method which is called for each code block when splitting expressions, in order to avoid recompiling the regexp every time.
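The change follows a general pattern: build the compiled pattern once and reuse it on every call. A minimal sketch of that structure is below; the pattern and the function name are hypothetical stand-ins, not the regexp or method used in Spark.

```python
import re

# Compiled once at import time and reused by every call.
# The pattern is a hypothetical stand-in for the code-formatting regexp.
_BLANK_LINES = re.compile(r"\n\s*\n")


def collapse_blank_lines(code_block):
    """Collapse runs of blank lines using the precompiled pattern."""
    return _BLANK_LINES.sub("\n", code_block)
```

The function body no longer mentions the pattern source at all, so calling it once per code block does not repeat the compilation step.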

Credit should be given to Izek Greenfield.

## How was this patch tested?

existing UTs

Closes#22135 from mgaido91/SPARK-25093.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This fixes a perf regression caused by https://github.com/apache/spark/pull/21376 .

We should not use `RDD#toLocalIterator`, which triggers one Spark job per RDD partition. This is very bad for RDDs with a lot of small partitions.

To fix it, this PR introduces a way to access SQLConf in the scheduler event loop thread, so that we don't need to use `RDD#toLocalIterator` anymore in `JsonInferSchema`.

## How was this patch tested?

a new test

Closes#22152 from cloud-fan/conf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR fixes bugs when expr codegen fails: we need to catch `java.util.concurrent.ExecutionException` instead of `InternalCompilerException` and `CompileException`. This handling is the same as the one in `WholeStageCodegenExec`: 60af2501e1/sql/core/src/main/scala/org/apache/spark/sql/execution/WholeStageCodegenExec.scala (L585)

## How was this patch tested?

Added tests in `CodeGeneratorWithInterpretedFallbackSuite`

Closes#22154 from maropu/SPARK-25140.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Two back-to-back PRs implicitly conflicted because one PR removed an existing import that the other PR needed. This did not cause an explicit merge conflict because the import already existed but was unused.

https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Compile/job/spark-master-compile-maven-hadoop-2.7/8226/consoleFull

```

[info] Compiling 342 Scala sources and 97 Java sources to /home/jenkins/workspace/spark-master-compile-maven-hadoop-2.7/sql/core/target/scala-2.11/classes...

[warn] /home/jenkins/workspace/spark-master-compile-maven-hadoop-2.7/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetFileFormat.scala:128: value ENABLE_JOB_SUMMARY in object ParquetOutputFormat is deprecated: see corresponding Javadoc for more information.

[warn] && conf.get(ParquetOutputFormat.ENABLE_JOB_SUMMARY) == null) {

[warn] ^

[error] /home/jenkins/workspace/spark-master-compile-maven-hadoop-2.7/sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/statefulOperators.scala:95: value asJava is not a member of scala.collection.immutable.Map[String,Long]

[error] new java.util.HashMap(customMetrics.mapValues(long2Long).asJava)

[error] ^

[warn] one warning found

[error] one error found

[error] Compile failed at Aug 21, 2018 4:04:35 PM [12.827s]

```

## How was this patch tested?

It compiles!

Closes#22175 from tdas/fix-build.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

Add the methods `barrier()` and `getTaskInfos()` to the Python TaskContext. These two methods are only allowed for barrier tasks.

## How was this patch tested?

Add new tests in `tests.py`

Closes#22085 from jiangxb1987/python.barrier.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

This patch exposes the estimation of size of cache (loadedMaps) in HDFSBackedStateStoreProvider as a custom metric of StateStore.

The rationale for the patch is that state backed by HDFSBackedStateStoreProvider consumes more memory than the number reported in query status, because multiple versions of the state are cached. The memory footprint can be much larger than query status reports when the state store receives a lot of updates: shallow-copying a map adds only a small amount of memory for the map entries and references, and row objects are still shared across versions. However, if there are many updates between batches, fewer row objects are shared and more row objects exist in memory, consuming much more memory than expected.

Although HDFSBackedStateStore refers to loadedMaps in HDFSBackedStateStoreProvider directly, only one `StateStoreWriter` refers to a given StateStoreProvider, so the value is not exposed, and therefore not aggregated, multiple times. The current state metrics are safe to aggregate for the same reason.
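The effect of caching several shallow-copied versions can be illustrated with plain dictionaries. This is a toy model under stated assumptions: `loaded_maps` only mimics the idea of a version-to-map cache, and rows are represented as small lists rather than real row objects.

```python
def distinct_row_count(versions):
    """Count the distinct row objects referenced by all cached versions."""
    return len({id(row) for state in versions.values() for row in state.values()})


# Version 1 of the state: key -> row object.
v1 = {"a": [1], "b": [2], "c": [3]}

# Version 2 is a shallow copy: a new map, but the same row objects.
v2 = dict(v1)
v2["a"] = [10]  # only one key is updated in this batch

# Version 3 updates every key, so no row is shared with version 2.
v3 = {key: [row[0] + 1] for key, row in v2.items()}

loaded_maps = {1: v1, 2: v2, 3: v3}
```

The latest version holds three rows, but the cache as a whole keeps seven distinct row objects alive, which is the gap between the reported state size and the real footprint that the new metric is meant to surface.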

## How was this patch tested?

Tested manually. Below is a screenshot of the UI page reflecting the patch:

<img width="601" alt="screen shot 2018-06-05 at 10 16 16 pm" src="https://user-images.githubusercontent.com/1317309/40978481-b46ad324-690e-11e8-9b0f-e80528612a62.png">

Please refer to "estimated size of states cache in provider total" as well as "count of versions in state cache in provider".

Closes#21469 from HeartSaVioR/SPARK-24441.

Authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

In https://issues.apache.org/jira/browse/SPARK-24924, the data source provider com.databricks.spark.avro is mapped to the new package org.apache.spark.sql.avro.

As per the discussion in the [Jira](https://issues.apache.org/jira/browse/SPARK-24924) and PR #22119, we should make the mapping configurable.

This PR also improves the error message when the Avro/Kafka data source is not found.
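A configurable mapping of this kind boils down to a guarded lookup. The sketch below models only that logic; the function name and the `replace_legacy_avro` flag are hypothetical and do not correspond to a specific Spark configuration key.

```python
# Hypothetical names throughout; this only models the lookup logic.
LEGACY_AVRO = "com.databricks.spark.avro"
BUILT_IN_AVRO = "org.apache.spark.sql.avro"


def resolve_provider(name, replace_legacy_avro=True):
    """Return the provider to load for a user-supplied data source name."""
    if replace_legacy_avro and name == LEGACY_AVRO:
        return BUILT_IN_AVRO
    return name
```

With the flag turned off, the legacy name is passed through unchanged, so a user who still depends on the external package can keep loading it.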

## How was this patch tested?

Unit test

Closes#22133 from gengliangwang/configurable_avro_mapping.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This patch proposes a new flag option for stateful aggregation: remove redundant key data from value.

With the new option enabled, the query runs the same way as it does today but uses less memory for state, with the saving depending on the key/value fields of the state operator.

Please refer to the link below for detailed perf test results:

https://issues.apache.org/jira/browse/SPARK-24763?focusedCommentId=16536539&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16536539

Since state written with the option enabled is not compatible with state written with it disabled, the option is disabled by default (to ensure backward compatibility), and OffsetSeqMetadata prevents modifying the option after a query has been executed.
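The idea of dropping key columns from the stored value can be sketched with dictionaries. This is a simplified model: the column names, `KEY_COLUMNS`, and both helper functions are assumptions for illustration, not the state format used by Spark.

```python
# Hypothetical grouping key columns for an aggregation.
KEY_COLUMNS = ("user", "window")


def to_state_value(row, drop_key_columns):
    """Build the value stored in state for one aggregated row."""
    if drop_key_columns:
        # The key already carries these columns, so do not store them twice.
        return {c: v for c, v in row.items() if c not in KEY_COLUMNS}
    return dict(row)


def from_state(key, value):
    """Rebuild the full row by joining the key back onto the stored value."""
    return {**value, **dict(zip(KEY_COLUMNS, key))}
```

In this model the stored value shrinks from three fields to one when the option is on, and the full row is still recoverable from the key.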

## How was this patch tested?

Modified unit tests to cover the option both disabled and enabled.

Also ran manual tests to see whether the proposed patch improves state memory usage.

Closes#21733 from HeartSaVioR/SPARK-24763.

Authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

runParallelPersonalizedPageRank in graphx checks that `sources` are <= Int.MaxValue.toLong, but this is not actually required. This check seems to have been added because we use sparse vectors in the implementation and sparse vectors cannot be indexed by values > MAX_INT. However we do not ever index the sparse vector by the source vertexIds so this isn't an issue. I've added a test with large vertexIds to confirm this works as expected.

## How was this patch tested?

Unit tests.

Closes#22139 from MrBago/remove-veretexId-check-pppr.

Authored-by: Bago Amirbekian <bago@databricks.com>

Signed-off-by: Joseph K. Bradley <joseph@databricks.com>

When replicating large cached RDD blocks, it can be helpful to replicate them as a stream, to avoid using large amounts of memory during the transfer. This also allows blocks larger than 2GB to be replicated.
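The memory argument can be illustrated with a chunked copy. This is a generic sketch of streaming a payload in fixed-size pieces, using in-memory buffers as stand-ins for the source block and the remote replica; it does not reflect Spark's block transfer code.

```python
import io


def replicate_as_stream(source, sink, chunk_size=64 * 1024):
    """Copy a block in fixed-size chunks; returns the number of bytes copied."""
    copied = 0
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        sink.write(chunk)
        copied += len(chunk)
    return copied


# Stand-ins for a cached block and its replica.
block = io.BytesIO(b"x" * 200_000)
replica = io.BytesIO()
copied = replicate_as_stream(block, replica, chunk_size=4096)
```

Only one chunk is held by the copy loop at a time, so the working memory is bounded by `chunk_size` rather than by the size of the block.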

Added unit tests in DistributedSuite. Also ran tests on a cluster for blocks > 2GB.

Closes#21451 from squito/clean_replication.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fix a few broken links and typos, and, nit, use HTTPS more consistently esp. on scripts and Apache links

## How was this patch tested?

Doc build

Closes#22172 from srowen/DocTypo.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Add .crc files to .gitignore so that .crc files from state checkpoints, which can end up in test resources, are not added to the git repo.

This is based on comments in #21733, https://github.com/apache/spark/pull/21733#issuecomment-414578244.

## How was this patch tested?

Add `.1.delta.crc` and `.2.delta.crc` in `<spark root>/sql/core/src/test/resources`, and confirm that git doesn't offer the files for staging.

Closes#22170 from HeartSaVioR/add-crc-files-to-gitignore.

Authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Fix several bugs in failure handling of barrier execution mode:

* Mark TaskSet for a barrier stage as zombie when a task attempt fails;

* Multiple barrier task failures from a single barrier stage should not trigger multiple stage retries;

* Barrier task failure from a previous failed stage attempt should not trigger stage retry;

* Fail the job when a task from a barrier ResultStage fails;

* RDD.isBarrier() should not rely on `ShuffleDependency`s.

## How was this patch tested?

Added corresponding test cases in `DAGSchedulerSuite` and `TaskSchedulerImplSuite`.

Closes#22158 from jiangxb1987/failure.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/22079#discussion_r209705612 It is possible for two objects to be unequal and yet we consider them as equal with this code, if the long values are separated by Int.MaxValue.

This PR fixes the issue.
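The class of bug can be reproduced by narrowing a 64-bit difference to 32 bits. The exact arithmetic of the original comparator is not shown in this description, so the narrowing step below is an assumption; in this sketch the collision occurs when the two values differ by 2^32. Python integers do not overflow, so `to_int32` simulates the narrowing explicitly.

```python
def to_int32(value):
    """Keep only the low 32 bits, interpreted as a signed integer."""
    value &= 0xFFFFFFFF
    return value - 0x100000000 if value >= 0x80000000 else value


def compare_by_narrowed_difference(left, right):
    """Buggy pattern: the 64-bit difference is narrowed to 32 bits."""
    return to_int32(left - right)


def compare_by_ordering(left, right):
    """Safe pattern: derive -1, 0 or 1 from the ordering itself."""
    return (left > right) - (left < right)
```

For `left = 1 << 32` and `right = 0`, the narrowed difference is 0, so the buggy comparator reports equality, while the ordering-based comparator returns 1.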

## How was this patch tested?

Add new test cases in `RecordBinaryComparatorSuite`.

Closes#22101 from jiangxb1987/fix-rbc.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Spark SQL returns NULL for a column whose Hive metastore schema and Parquet schema are in different letter cases, regardless of whether spark.sql.caseSensitive is set to true or false. This PR aims to add case-insensitive field resolution for ParquetFileFormat.

* Do case-insensitive resolution only if Spark is in case-insensitive mode.

* Field resolution should fail if there is ambiguity, i.e. more than one field is matched.
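The two rules above can be sketched as a small lookup function. The function name, its arguments, and the use of `ValueError` are assumptions for illustration; this is not the code in ParquetFileFormat.

```python
def resolve_field(name, parquet_fields, case_sensitive):
    """Return the Parquet field matching `name`, or None if there is none."""
    if case_sensitive:
        return name if name in parquet_fields else None
    # Case-insensitive mode: compare lowercased names.
    matches = [f for f in parquet_fields if f.lower() == name.lower()]
    if len(matches) > 1:
        # More than one candidate: fail instead of picking one arbitrarily.
        raise ValueError("Ambiguous field %r, matches: %s" % (name, matches))
    return matches[0] if matches else None
```

For example, resolving `id` against `["ID", "name"]` finds `ID` only in case-insensitive mode, and resolving it against `["ID", "id"]` in that mode raises an error.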

## How was this patch tested?

Unit tests added.

Closes#22148 from seancxmao/SPARK-25132-Parquet.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When column pruning is turned on, the CSV header check should only cover the fields in the requiredSchema, not the dataSchema, because column pruning means only the requiredSchema is read.

## How was this patch tested?

Added 2 unit tests where column pruning is turned on/off and CSV headers are checked against the schema.

Closes#22123 from koertkuipers/feat-csv-column-pruning-and-check-header.

Authored-by: Koert Kuipers <koert@tresata.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

[SPARK-25144](https://issues.apache.org/jira/browse/SPARK-25144) reports memory leaks on Apache Spark 2.0.2 ~ 2.3.2-RC5. The bug is already fixed via #21738 as a part of SPARK-21743. This PR only adds a test case to prevent any future regression.

```scala

scala> case class Foo(bar: Option[String])

scala> val ds = List(Foo(Some("bar"))).toDS

scala> val result = ds.flatMap(_.bar).distinct

scala> result.rdd.isEmpty

18/08/19 23:01:54 WARN Executor: Managed memory leak detected; size = 8650752 bytes, TID = 125

res0: Boolean = false

```

## How was this patch tested?

Passes Jenkins with the newly added test case.

Closes#22155 from dongjoon-hyun/SPARK-25144-2.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

When j is 0, log(j+1) is 0, which leads to a division by zero.
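The problem is easy to reproduce numerically. The sketch below assumes the expression is a positional discount of the form rel_j / log(j + 1) with zero-based j, as in discounted cumulative gain, and assumes that shifting the discount to log(j + 2) is the intended correction; neither detail is stated in this description.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain with zero-based positions j."""
    # log(j + 2) equals log(2) > 0 at j = 0, so the first term is well defined.
    return sum(rel / math.log(j + 2) for j, rel in enumerate(relevances))
```

With the original discount, the first term would be `rel / math.log(1)`, and `math.log(1)` is exactly `0.0`.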

Closes#22090 from yueguoguo/patch-1.

Authored-by: Zhang Le <yueguoguo@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In the PR for supporting logical timestamp types, https://github.com/apache/spark/pull/21935, a SQL configuration spark.sql.avro.outputTimestampType was added so that users can specify the output timestamp precision they want.

With PR https://github.com/apache/spark/pull/21847, the output file can be written with user-specified types.

So there is no need for such a trivial configuration. Otherwise, to be consistent, we would need to add a configuration for every Catalyst type that can be converted into different Avro types.

This PR also adds a test case for a user-specified output schema with different timestamp types.

## How was this patch tested?

Unit test

Closes#22151 from gengliangwang/removeOutputTimestampType.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

We should also check that the `HigherOrderFunction.bind` method passes the expected parameters.

This PR modifies the tests for higher-order functions to check the `bind` method.

## How was this patch tested?

Modified tests.

Closes#22131 from ueshin/issues/SPARK-25141/bind_test.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to skip invoking the CSV/JSON parser for each line when the required schema is empty. The added benchmarks for `count()` show a performance improvement of up to **3.5 times**.

Before:

```
Count a dataset with 10 columns:      Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)
--------------------------------------------------------------------------------------
JSON count()                                7676 / 7715          1.3         767.6
CSV count()                                 3309 / 3363          3.0         330.9
```

After:

```
Count a dataset with 10 columns:      Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)
--------------------------------------------------------------------------------------
JSON count()                                2104 / 2156          4.8         210.4
CSV count()                                 2332 / 2386          4.3         233.2
```
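The optimization can be sketched as a scan that bypasses the parser when no column is required. This is a simplified model with hypothetical function names, using line-delimited JSON as input; it is not the Spark reader code.

```python
import json


def scan(lines, required_columns):
    """Yield one row per input line, parsing only when columns are required."""
    for line in lines:
        if not required_columns:
            # count() needs no field values, so skip the parser entirely.
            yield {}
        else:
            record = json.loads(line)
            yield {c: record.get(c) for c in required_columns}


def count(lines):
    """Count rows with an empty required schema, so nothing is parsed."""
    return sum(1 for _ in scan(lines, required_columns=()))
```

The row count is the same with or without parsing, since each input line still produces exactly one row; only the per-line parsing cost is removed.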

## How was this patch tested?

It was tested by `CSVSuite` and `JSONSuite` as well as by the added benchmarks.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Author: Maxim Gekk <max.gekk@gmail.com>

Closes#21909 from MaxGekk/empty-schema-optimization.

## What changes were proposed in this pull request?

This builds on top of SPARK-24748 to report 'offset lag' as a custom metric for the Kafka structured streaming source.

This lag is the difference between the latest offsets in Kafka at the time the metric is reported (just after a micro-batch completes) and the latest offsets Spark has processed. It can be 0 (or close to 0) if Spark keeps up with the rate at which messages are ingested into Kafka topics in steady state. It measures how far the Spark source has fallen behind (per partition) and can aid in tuning the application.
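The definition amounts to a per-partition subtraction. A minimal sketch is below, assuming both offset maps are keyed by the same partition identifiers; the function name and the string keys are hypothetical and do not reflect the metric's actual representation in Spark.

```python
def offset_lag(latest_offsets, processed_offsets):
    """Per-partition lag: latest offset in Kafka minus latest processed offset."""
    return {
        partition: latest - processed_offsets[partition]
        for partition, latest in latest_offsets.items()
    }
```

For example, with latest offsets `{"topic-0": 120, "topic-1": 80}` and processed offsets `{"topic-0": 100, "topic-1": 80}`, the first partition is 20 offsets behind and the second has fully caught up.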

## How was this patch tested?

Existing and new unit tests

Closes#21819 from arunmahadevan/SPARK-24863.

Authored-by: Arun Mahadevan <arunm@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>