### What changes were proposed in this pull request?

The changed [unit test](https://github.com/apache/spark/blob/master/sql/core/src/test/scala/org/apache/spark/sql/streaming/StreamingJoinSuite.scala#L566) was introduced in https://github.com/apache/spark/pull/21587 to fix the planner side of stream-stream join. Ideally, checking the query result should catch the bug, but it is better to also add a plan check, which makes the purpose of the unit test clearer and catches future bugs from planner changes.

### Why are the changes needed?

Improve unit test.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Changed test itself.

Closes #32836 from c21/ss-test.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Handle type coercion when resolving V2 functions. In particular:

- prior to evaluating function arguments, insert a cast whenever the argument type doesn't match the expected input type.

- use `BoundFunction.inputTypes()` to look up the magic method for scalar functions

### Why are the changes needed?

For V2 functions, the actual argument types do not necessarily match the declared input types, and Spark should handle type coercion whenever it is needed.

### Does this PR introduce _any_ user-facing change?

Yes. Now V2 function resolution should be able to handle type coercion properly.

### How was this patch tested?

Added a few new tests.

Closes #32764 from sunchao/SPARK-35390.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR enhances `RepartitionByExpression` so that AQE can coalesce its partitions efficiently. This is typically used to merge small files.

The basic logic is: Spark first tries to coalesce partitions; if they cannot be coalesced, it uses the local shuffle reader to read the data, which avoids exchanging the data over the network.
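
The coalescing half of that logic can be pictured with a minimal sketch. It assumes a greedy merge of adjacent partitions toward a target size; the class name `CoalesceSketch`, the method `coalesce`, and the `targetBytes` parameter are hypothetical, and this is not Spark's actual implementation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration only: not Spark's AQE code.
final class CoalesceSketch {
    /**
     * Greedily merges adjacent partitions. A new group starts whenever adding
     * the next partition would push the current group past targetBytes.
     * Each result element is a half-open range {startInclusive, endExclusive}.
     */
    static List<int[]> coalesce(long[] partitionBytes, long targetBytes) {
        List<int[]> ranges = new ArrayList<>();
        int start = 0;
        long current = 0L;
        for (int i = 0; i < partitionBytes.length; i++) {
            if (i > start && current + partitionBytes[i] > targetBytes) {
                ranges.add(new int[] {start, i});
                start = i;
                current = 0L;
            }
            current += partitionBytes[i];
        }
        if (start < partitionBytes.length) {
            ranges.add(new int[] {start, partitionBytes.length});
        }
        return ranges;
    }
}
```

With sizes `{10, 20, 30, 100, 5, 5}` and a target of 64, the sketch groups the first three partitions, leaves the oversized one alone, and merges the last two.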

Usage:

```sql

SELECT /*+ REPARTITION */ * FROM t

```

```scala

df.repartition()

```

For example, the PR illustrated two cases (images not preserved): coalescing small output files, and the local shuffle reader.

### Why are the changes needed?

Coalesce partitions efficiently.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #32781 from wangyum/SPARK-35650.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Write tests for timestamp without time zone in UDF as input parameters and results.

### Why are the changes needed?

It follows https://github.com/apache/spark/pull/31779 to improve test coverage.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test

Closes #32840 from gengliangwang/tswtzUDF.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

In this PR, I propose to extend the Spark SQL API to accept `java.time.LocalDateTime` as an external type of the recently added Catalyst type `TimestampWithoutTZ`. The Java class `java.time.LocalDateTime` has semantics similar to the ANSI SQL timestamp without time zone type, and it is the most suitable external type for `TimestampWithoutTZType`. In more detail:

* Added `TimestampWithoutTZConverter`, which converts `java.time.LocalDateTime` instances to/from the internal representation of the Catalyst type `TimestampWithoutTZType` (a `Long`). The `TimestampWithoutTZConverter` object uses new methods of `DateTimeUtils`:

  * `localDateTimeToMicros()` converts the input date-time to the total length in microseconds.

  * `microsToLocalDateTime()` obtains a `java.time.LocalDateTime` from a microsecond count.

* Support new type `TimestampWithoutTZType` in RowEncoder via the methods createDeserializerForLocalDateTime() and createSerializerForLocalDateTime().

* Extended the Literal API to construct literals from `java.time.LocalDateTime` instances.
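
As a rough illustration of the two conversions named above, here is a sketch written against `java.time` only. It assumes the microsecond count is measured from `1970-01-01T00:00:00` with no time zone adjustment; the class name `LocalDateTimeMicros` is hypothetical and the bodies are not copied from `DateTimeUtils`.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneOffset;

// Hypothetical stand-in for the conversions described above.
final class LocalDateTimeMicros {
    private static final long MICROS_PER_SECOND = 1_000_000L;
    private static final long NANOS_PER_MICRO = 1_000L;

    /** Microseconds between 1970-01-01T00:00:00 and the given local date-time. */
    static long localDateTimeToMicros(LocalDateTime ldt) {
        // UTC is used purely as a fixed reference; no zone conversion happens.
        Instant instant = ldt.toInstant(ZoneOffset.UTC);
        long micros = Math.multiplyExact(instant.getEpochSecond(), MICROS_PER_SECOND);
        return Math.addExact(micros, instant.getNano() / NANOS_PER_MICRO);
    }

    /** Inverse of localDateTimeToMicros, valid for negative values as well. */
    static LocalDateTime microsToLocalDateTime(long micros) {
        long seconds = Math.floorDiv(micros, MICROS_PER_SECOND);
        long nanos = Math.floorMod(micros, MICROS_PER_SECOND) * NANOS_PER_MICRO;
        return LocalDateTime.ofEpochSecond(seconds, (int) nanos, ZoneOffset.UTC);
    }
}
```

`floorDiv` and `floorMod` keep the round trip correct for values before the epoch, where plain `/` and `%` would round toward zero.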

### Why are the changes needed?

To allow users to parallelize collections of `java.time.LocalDateTime` values and construct timestamp without time zone columns, and also to collect such columns back to the driver side.

### Does this PR introduce _any_ user-facing change?

The PR extends existing functionality. So, users can parallelize instances of the java.time.LocalDateTime class and collect them back.

```

scala> val ds = Seq(java.time.LocalDateTime.parse("1970-01-01T00:00:00")).toDS

ds: org.apache.spark.sql.Dataset[java.time.LocalDateTime] = [value: timestampwithouttz]

scala> ds.collect()

res0: Array[java.time.LocalDateTime] = Array(1970-01-01T00:00)

```

### How was this patch tested?

New unit tests

Closes #32814 from gengliangwang/LocalDateTime.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

Move `assume` into methods at `PythonUDFSuite`.

### Why are the changes needed?

When we run the Spark tests with the following command:

`./build/mvn -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn -Pkubernetes clean test`

we get this exception:

```

PythonUDFSuite:

org.apache.spark.sql.execution.python.PythonUDFSuite *** ABORTED ***

java.lang.RuntimeException: Unable to load a Suite class that was discovered in the runpath: org.apache.spark.sql.execution.python.PythonUDFSuite

at org.scalatest.tools.DiscoverySuite$.getSuiteInstance(DiscoverySuite.scala:81)

at org.scalatest.tools.DiscoverySuite.$anonfun$nestedSuites$1(DiscoverySuite.scala:38)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

at scala.collection.Iterator.foreach(Iterator.scala:941)

at scala.collection.Iterator.foreach$(Iterator.scala:941)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1429)

at scala.collection.IterableLike.foreach(IterableLike.scala:74)

at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

at scala.collection.AbstractIterable.foreach(Iterable.scala:56)

at scala.collection.TraversableLike.map(TraversableLike.scala:238)

```

The test environment has no PySpark module, so loading the suite fails.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

manual

Closes #32833 from ulysses-you/SPARK-35687.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The implementation for the save operation of RocksDBFileManager.

### Why are the changes needed?

Save all the files in the given local checkpoint directory as a committed version in DFS.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New UT added.

Closes #32582 from xuanyuanking/SPARK-35436.

Authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

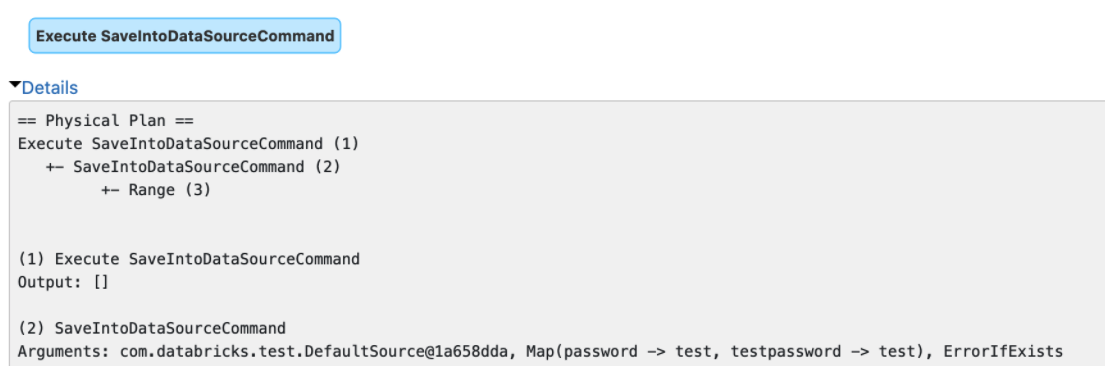

### What changes were proposed in this pull request?

Currently, Spark eagerly executes commands on the caller side of `QueryExecution`, which is a bit hacky as `QueryExecution` is not aware of it and leads to confusion.

For example, if you run `sql("show tables").collect()`, you will see two queries with identical query plans in the web UI.

The first query is triggered at `Dataset.logicalPlan`, which eagerly executes the command.

The second query is triggered at `Dataset.collect`, which is the normal query execution.

From the web UI, it's hard to tell that these two queries are caused by eager command execution.

This PR proposes to move the eager command execution into `QueryExecution`, and to turn the command plan into `CommandResult` to indicate that the command has already been executed. Now `sql("show tables").collect()` still triggers two queries, but the query plans are no longer identical: in the second query, the command plan is replaced by `CommandResult`.

In addition to the UI improvements, this PR also has other benefits:

1. It simplifies the code, as the caller side no longer needs to worry about eager command execution; `QueryExecution` takes care of it.

2. It helps https://github.com/apache/spark/pull/32442 , where there can be more plan nodes above commands, and we need to replace commands with something like local relation that produces unsafe rows.

### Why are the changes needed?

Explained above.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

existing tests

Closes #32513 from beliefer/SPARK-35378.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: beliefer <beliefer@163.com>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Refactor LocalDateTimeUDT as YearUDT in UserDefinedTypeSuite

### Why are the changes needed?

As we are going to support java.time.LocalDateTime as an external type of TimestampWithoutTZ type https://github.com/apache/spark/pull/32814, registering java.time.LocalDateTime as UDT will cause test failures: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/139469/testReport/

This PR is to unblock https://github.com/apache/spark/pull/32814.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #32824 from gengliangwang/UDTFollowUp.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

This PR fixes an issue where neither the key nor the value of the state schema can accept JSON longer than 65535 bytes.

To solve the problem explained below, the JSON representation of the schema is divided into chunks whose maximum length is 65535 bytes, and each chunk is written by `DataOutputStream.writeUTF`.

As the solution changes the format of the schema, the version also changes from `1` to `2`, but the old version of the schema is still accepted to ensure backward compatibility.

### Why are the changes needed?

In the current implementation, writing state schema fails if the length of schema exceeds 65535 bytes and `UTFDataFormatException` is thrown.

It's due to the limitation of `DataOutputStream.writeUTF`.

`writeUTF` first writes a length field that is 2 bytes wide, meaning the maximum content length is limited to `2^16-1`=`65535` bytes.

https://docs.oracle.com/javase/8/docs/api/java/io/DataOutputStream.html#writeUTF-java.lang.String-
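
The chunking idea can be sketched as follows. This is an illustration under assumptions, not the on-disk format used by the PR: the class `ChunkedUtf`, the leading chunk count, and the conservative chunk size are all hypothetical choices. Because `writeUTF` limits the encoded byte length and a Java `char` can take up to 3 bytes in modified UTF-8, the sketch caps each chunk at `65535 / 3` characters so that any input is safe.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical illustration of writing a long string as several writeUTF chunks.
final class ChunkedUtf {
    // Assumption: a char needs at most 3 bytes in modified UTF-8,
    // so this many chars always fit within the 65535-byte limit.
    static final int MAX_CHARS_PER_CHUNK = 65535 / 3;

    static byte[] write(String s) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            int chunks = (s.length() + MAX_CHARS_PER_CHUNK - 1) / MAX_CHARS_PER_CHUNK;
            out.writeInt(chunks); // the reader needs to know how many chunks follow
            for (int i = 0; i < s.length(); i += MAX_CHARS_PER_CHUNK) {
                int end = Math.min(s.length(), i + MAX_CHARS_PER_CHUNK);
                out.writeUTF(s.substring(i, end));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static String read(byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            int chunks = in.readInt();
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < chunks; i++) {
                sb.append(in.readUTF());
            }
            return sb.toString();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A single `writeUTF` call on a 200000-character string would throw `UTFDataFormatException`, while the chunked form round-trips it.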

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New tests.

Closes #32788 from sarutak/fix-UTFDataFormatException.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

This patch removes the usage of putting null into StateStore.

### Why are the changes needed?

According to the doc of the `get` method in the `StateStore` API, it returns a non-null row if the key exists, so we should avoid writing null to `StateStore`. Otherwise, you cannot tell whether a returned null row means that the key doesn't exist or that the value is actually null. And given the defined behavior of `get`, it is quite easy to cause an NPE when a caller believes the key exists and therefore does not expect a null.
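
The ambiguity can be shown with a toy key-value store. The class `NullValueAmbiguity` is hypothetical and unrelated to the real `StateStore` interface, and rejecting nulls in `put` is only one possible way to enforce the contract, not necessarily what this patch does.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical toy store whose get() follows the contract in the text:
// a null result means "no such key".
final class NullValueAmbiguity {
    private final Map<String, String> rows = new HashMap<>();

    void put(String key, String value) {
        // Storing null would make get() ambiguous, so it is refused here.
        if (value == null) {
            throw new IllegalArgumentException("null values are not allowed; remove the key instead");
        }
        rows.put(key, value);
    }

    void remove(String key) {
        rows.remove(key);
    }

    /** Returns the stored value, or null only when the key is absent. */
    String get(String key) {
        return rows.get(key);
    }
}
```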

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added test.

Closes #32796 from viirya/fix-ss-joinstatemanager.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

It's a long-standing bug that we forgot to resolve `UnresolvedAlias` in `CollectMetrics`. The bug was hard to trigger before 3.2, as people most likely won't create `UnresolvedAlias` when calling `Dataset.observe`. However, things have changed after https://github.com/apache/spark/pull/30974

This PR proposes to handle `CollectMetrics` in the rule `ResolveAliases`.

### Why are the changes needed?

bug fix

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

updated test

Closes #32803 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Optimizes the retrieval of approximate quantiles for an array of percentiles.

* Adds an overload for QuantileSummaries.query that accepts an array of percentiles and optimizes the computation to do a single pass over the sketch and avoid redundant computation.

* Modifies the ApproximatePercentiles operator to call into the new method.
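
To show why one pass is enough for many percentiles, here is a simplified sketch. It assumes an exact summary of sorted values with counts rather than the approximate sketch that `QuantileSummaries` maintains, and the class `MultiQuantile` and its `query` signature are hypothetical.

```java
import java.util.Arrays;
import java.util.Comparator;

// Hypothetical illustration: answers many percentiles in one walk over a sorted summary.
final class MultiQuantile {
    /**
     * values must be sorted ascending; counts[i] is how many items values[i] represents.
     * Results are returned in the same order as the requested percentiles.
     */
    static double[] query(double[] values, long[] counts, double[] percentiles) {
        if (values.length == 0 || values.length != counts.length) {
            throw new IllegalArgumentException("values and counts must be non-empty and equally long");
        }
        long total = 0L;
        for (long c : counts) {
            total += c;
        }
        // Visit the percentiles in ascending order so the cursor only moves forward.
        Integer[] order = new Integer[percentiles.length];
        for (int i = 0; i < order.length; i++) {
            order[i] = i;
        }
        Arrays.sort(order, Comparator.comparingDouble(i -> percentiles[i]));

        double[] result = new double[percentiles.length];
        int pos = 0;
        long cumulative = counts[0];
        for (int idx : order) {
            long targetRank = Math.max(1L, (long) Math.ceil(percentiles[idx] * total));
            while (cumulative < targetRank && pos < values.length - 1) {
                pos++;
                cumulative += counts[pos];
            }
            result[idx] = values[pos];
        }
        return result;
    }
}
```

Querying each percentile separately would restart the walk from the beginning every time; sorting the requests first lets all of them share a single cursor.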

All formatting changes are the result of running ./dev/scalafmt

### Why are the changes needed?

The existing implementation does repeated calls per input percentile resulting in redundant computation.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added unit tests for the new method.

Closes #32700 from alkispoly-db/spark_35558_approx_quants_array.

Authored-by: Alkis Polyzotis <alkis.polyzotis@databricks.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Override `getJDBCType` method in `MySQLDialect` so that `FloatType` is mapped to `FLOAT` instead of `REAL`

### Why are the changes needed?

MySQL treats `REAL` as a synonym to `DOUBLE` by default (see https://dev.mysql.com/doc/refman/8.0/en/numeric-types.html). Therefore, when creating a table with a column of `REAL` type, it will be created as `DOUBLE`. However, currently, `MySQLDialect` does not provide an implementation for `getJDBCType`, and will thus ultimately fall back to `JdbcUtils.getCommonJDBCType`, which maps `FloatType` to `REAL`. This change is needed so that we can properly map the `FloatType` to `FLOAT` for MySQL.

### Does this PR introduce _any_ user-facing change?

Prior to this PR, when writing a dataframe with a `FloatType` column to a MySQL table, it will create a `DOUBLE` column. After the PR, it will create a `FLOAT` column.

### How was this patch tested?

Added a test case in `JDBCSuite` that verifies the mapping.

Closes #32605 from mariosmeim-db/SPARK-35446.

Authored-by: Marios Meimaris <marios.meimaris@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

A followup for 345d35ed1a. In this PR we support CURRENT_USER without trailing parentheses in default mode. In ANSI mode, CURRENT_USER can only be used without trailing parentheses, because it is a reserved keyword that cannot be used as a function name.

### Why are the changes needed?

1. make it the same as current_date/current_timestamp

2. better ANSI compliance

### Does this PR introduce _any_ user-facing change?

no, just a followup

### How was this patch tested?

new tests

Closes #32770 from yaooqinn/SPARK-21957-F.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR is to fix the `UnsupportedOperationException` described in [PR#32705](https://github.com/apache/spark/pull/32705).

When AQE and DPP are turned on at the same time, the `BroadcastExchange` included in the DPP filter is not added through the `EnsureRequirement` rule. Therefore, when AQE optimizes the DPP filter, there is no way to add `BroadcastExchange` through the `EnsureRequirement` rule in the `reOptimize` method, which eventually leads to the loss of `BroadcastExchange` in the final physical plan. This PR adds a `BroadcastExchange` node in the `reOptimize` method if the current plan is a DPP filter.

### Why are the changes needed?

bug fix

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

adding new ut

Closes #32741 from JkSelf/fixDPP+AQEbug.

Authored-by: Ke Jia <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add a database-exists check in `SessionCatalog`.

### Why are the changes needed?

Currently, executing `drop database test` when the database does not exist throws an unfriendly error message:

```

Error in query: org.apache.hadoop.hive.metastore.api.NoSuchObjectException: test

org.apache.spark.sql.AnalysisException: org.apache.hadoop.hive.metastore.api.NoSuchObjectException: test

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:112)

at org.apache.spark.sql.hive.HiveExternalCatalog.dropDatabase(HiveExternalCatalog.scala:200)

at org.apache.spark.sql.catalyst.catalog.ExternalCatalogWithListener.dropDatabase(ExternalCatalogWithListener.scala:53)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.dropDatabase(SessionCatalog.scala:273)

at org.apache.spark.sql.execution.command.DropDatabaseCommand.run(ddl.scala:111)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:75)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:73)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:84)

at org.apache.spark.sql.Dataset.$anonfun$logicalPlan$1(Dataset.scala:228)

at org.apache.spark.sql.Dataset.$anonfun$withAction$1(Dataset.scala:3707)

```

### Does this PR introduce _any_ user-facing change?

Yes, a cleaner error message.

### How was this patch tested?

Add test.

Closes #32768 from ulysses-you/SPARK-35629.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

Currently, we do not have a suitable definition of the `user` concept in Spark. We only have `sparkUser`, which applies application-wide, but we do not support identifying or retrieving the user information from a session in STS or from a runtime query execution.

`current_user()` is very popular and supported by plenty of other modern or old school databases, and also ANSI compliant.

This PR adds `current_user()` as a SQL function and defines it without ambiguity in the following two cases:

1. For a normal single-threaded Spark application, clearly the `sparkUser` is always equivalent to `current_user()` .

2. For a multi-threaded Spark application, e.g. the Spark thrift server, we use a `ThreadLocal` variable to store the client-side user (after authentication) before running the query, and retrieve it in the parser.
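
The two cases above can be sketched with a plain `ThreadLocal`. The class `CurrentUserHolder` and its methods are hypothetical names for illustration; the sketch only shows the pattern of setting the user around a query and falling back to an application-wide user otherwise.

```java
import java.util.function.Supplier;

// Hypothetical illustration of a per-thread "current user" with a fallback.
final class CurrentUserHolder {
    private static final ThreadLocal<String> USER = new ThreadLocal<>();

    /** Runs body with the given user bound to the calling thread, then restores the previous state. */
    static <T> T runAs(String user, Supplier<T> body) {
        String previous = USER.get();
        USER.set(user);
        try {
            return body.get();
        } finally {
            if (previous == null) {
                USER.remove();
            } else {
                USER.set(previous);
            }
        }
    }

    /** Returns the thread-bound user if one is set, else the application-wide fallback. */
    static String currentUser(String applicationUser) {
        String user = USER.get();
        return user != null ? user : applicationUser;
    }
}
```

Restoring the previous value in `finally` matters when threads are pooled, since a leftover value would leak one client's identity into the next query on that thread.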

### Why are the changes needed?

`current_user()` is very popular and supported by plenty of other modern or old school databases, and also ANSI compliant.

### Does this PR introduce _any_ user-facing change?

yes, added `current_user()` as a SQL function

### How was this patch tested?

new tests in thrift server and sql/catalyst

Closes #32718 from yaooqinn/SPARK-21957.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add rule `ConvertToLocalRelation` into AQE Optimizer.

### Why are the changes needed?

Support propagating an empty local relation through project and filter, as in the following SQL case:

```

Aggregate

Project

Join

ShuffleStage

ShuffleStage

```

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Add test.

Closes #32724 from ulysses-you/SPARK-35585.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The condition check for FULL OUTER sort merge join (https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/joins/SortMergeJoinExec.scala#L1368 ) makes an unnecessary trip when `leftIndex == leftMatches.size` or `rightIndex == rightMatches.size`. This does not affect correctness (`scanNextInBuffered()` returns false anyway), but we can avoid it in the first place.

### Why are the changes needed?

Better readability for developers and avoid unnecessary execution.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit tests, such as `OuterJoinSuite.scala`.

Closes #32736 from c21/join-bug.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

A test case of AdaptiveQueryExecSuite becomes flaky since there are too many debug logs in RootLogger:

https://github.com/Yikun/spark/runs/2715222392?check_suite_focus=true

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/139125/testReport/

To fix it, I suggest supporting multiple loggers in the testing method `withLogAppender`, so that the LogAppender gets clean target log outputs.

### Why are the changes needed?

Fix a flaky test case.

Also, reduce unnecessary memory cost in tests.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test

Closes #32725 from gengliangwang/fixFlakyLogAppender.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

Move `Set` command related test cases from `SQLQuerySuite` to a new test suite `SetCommandSuite`. There are 7 test cases in total.

### Why are the changes needed?

Code refactoring. `SQLQuerySuite` is becoming big.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit tests

Closes #32732 from gengliangwang/setsuite.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to support special datetime values introduced by #25708 and by #25716 only in typed literals, and don't recognize them in parsing strings to dates/timestamps. The following string values are supported only in typed timestamp literals:

- `epoch [zoneId]` - `1970-01-01 00:00:00+00 (Unix system time zero)`

- `today [zoneId]` - midnight today.

- `yesterday [zoneId]` - midnight yesterday

- `tomorrow [zoneId]` - midnight tomorrow

- `now` - current query start time.

For example:

```sql

spark-sql> SELECT timestamp 'tomorrow';

2019-09-07 00:00:00

```

Similarly, the following special date values are supported only in typed date literals:

- `epoch [zoneId]` - `1970-01-01`

- `today [zoneId]` - the current date in the time zone specified by `spark.sql.session.timeZone`.

- `yesterday [zoneId]` - the current date -1

- `tomorrow [zoneId]` - the current date + 1

- `now` - the date of running the current query. It has the same notion as `today`.

For example:

```sql

spark-sql> SELECT date 'tomorrow' - date 'yesterday';

2

```

### Why are the changes needed?

In the current implementation, Spark supports the special date/timestamp values in any input strings cast to dates/timestamps, which leads to the following problems:

- If executors have different system time, the result is inconsistent, and random. Column values depend on where the conversions were performed.

- The special values play the role of distributed non-deterministic functions though users might think of the values as constants.
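
One way to picture the fix is to resolve a special value once against a single fixed reference date, so every row sees the same constant. The sketch below assumes that model; the class `SpecialDates` and its `resolve` method are hypothetical, and the optional `[zoneId]` suffix is deliberately not handled.

```java
import java.time.LocalDate;
import java.util.Locale;
import java.util.Optional;

// Hypothetical illustration: special date strings resolved against one reference date.
final class SpecialDates {
    /** Returns the date for a special value, or empty if the text is not a special value. */
    static Optional<LocalDate> resolve(String text, LocalDate today) {
        switch (text.trim().toLowerCase(Locale.ROOT)) {
            case "epoch":
                return Optional.of(LocalDate.of(1970, 1, 1));
            case "now": // same notion as "today" for dates
            case "today":
                return Optional.of(today);
            case "yesterday":
                return Optional.of(today.minusDays(1));
            case "tomorrow":
                return Optional.of(today.plusDays(1));
            default:
                return Optional.empty();
        }
    }
}
```

Because `today` is passed in rather than read from the system clock inside the function, the result does not depend on which machine performs the conversion.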

### Does this PR introduce _any_ user-facing change?

Yes, but the probability of users being affected should be small.

### How was this patch tested?

By running existing test suites:

```

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z interval.sql"

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z date.sql"

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z timestamp.sql"

$ build/sbt "test:testOnly *DateTimeUtilsSuite"

```

Closes #32714 from MaxGekk/remove-datetime-special-values.

Lead-authored-by: Max Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Currently, the results of the following SQL queries are not redacted:

```

SET [KEY];

SET;

```

For example:

```

scala> spark.sql("set javax.jdo.option.ConnectionPassword=123456").show()

+--------------------+------+

| key| value|

+--------------------+------+

|javax.jdo.option....|123456|

+--------------------+------+

scala> spark.sql("set javax.jdo.option.ConnectionPassword").show()

+--------------------+------+

| key| value|

+--------------------+------+

|javax.jdo.option....|123456|

+--------------------+------+

scala> spark.sql("set").show()

+--------------------+--------------------+

| key| value|

+--------------------+--------------------+

|javax.jdo.option....| 123456|

```

We should hide the sensitive information and redact the query output.
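
A minimal sketch of key-based redaction is shown below. The regular expression passed by the caller and the placeholder text are assumptions chosen for illustration, and the class `RedactSketch` is hypothetical; Spark's own redaction settings are not reproduced here.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical illustration: hide values whose key looks sensitive.
final class RedactSketch {
    // Assumed placeholder text for this sketch.
    static final String PLACEHOLDER = "*********(redacted)";

    /** Returns a copy of conf where values of keys matching sensitiveKey are replaced. */
    static Map<String, String> redact(Pattern sensitiveKey, Map<String, String> conf) {
        Map<String, String> out = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : conf.entrySet()) {
            boolean sensitive = sensitiveKey.matcher(e.getKey()).find();
            out.put(e.getKey(), sensitive ? PLACEHOLDER : e.getValue());
        }
        return out;
    }
}
```

With a pattern such as `(?i)secret|password`, the `javax.jdo.option.ConnectionPassword` entry from the example above would show the placeholder instead of `123456`.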

### Why are the changes needed?

Security.

### Does this PR introduce _any_ user-facing change?

Yes, the sensitive information in the output of `SET` commands is redacted.

### How was this patch tested?

Unit test

Closes #32712 from gengliangwang/redactSet.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a minor change to update how `StateStoreRestoreExec` computes its number of output rows. Previously we only counted input rows; the optionally restored rows were not counted.

### Why are the changes needed?

Currently the number of output rows of `StateStoreRestoreExec` only counts each input row, but it actually outputs input rows + optional restored rows. We should provide the correct number of output rows.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #32703 from viirya/fix-outputrows.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR refactors `SubqueryExpression` class. It removes the children field from SubqueryExpression's constructor and adds `outerAttrs` and `joinCond`.

### Why are the changes needed?

Currently, the children field of a subquery expression is used to store both collected outer references in the subquery plan and join conditions after correlated predicates are pulled up.

For example:

`SELECT (SELECT max(c1) FROM t1 WHERE t1.c1 = t2.c1) FROM t2`

During the analysis phase, outer references in the subquery are stored in the children field: `scalar-subquery [t2.c1]`, but after the optimizer rule `PullupCorrelatedPredicates`, the children field will be used to store the join conditions, which contain both the inner and the outer references: `scalar-subquery [t1.c1 = t2.c1]`. This is why the references of `SubqueryExpression` exclude the inner plan's output:

29ed1a2de4/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/subquery.scala (L68-L69)

This can be confusing and error-prone. The references for a subquery expression should always be defined as outer attribute references.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32687 from allisonwang-db/refactor-subquery-expr.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

After SPARK-29291 and SPARK-33352, there are still some compilation warnings about `procedure syntax is deprecated` as follows:

```

[WARNING] [Warn] /spark/core/src/main/scala/org/apache/spark/MapOutputTracker.scala:723: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `registerMergeResult`'s return type

[WARNING] [Warn] /spark/core/src/main/scala/org/apache/spark/MapOutputTracker.scala:748: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `unregisterMergeResult`'s return type

[WARNING] [Warn] /spark/core/src/test/scala/org/apache/spark/util/collection/ExternalAppendOnlyMapSuite.scala:223: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `testSimpleSpillingForAllCodecs`'s return type

[WARNING] [Warn] /spark/mllib-local/src/test/scala/org/apache/spark/ml/linalg/BLASBenchmark.scala:53: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `runBLASBenchmark`'s return type

[WARNING] [Warn] /spark/sql/core/src/main/scala/org/apache/spark/sql/execution/command/DataWritingCommand.scala:110: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `assertEmptyRootPath`'s return type

[WARNING] [Warn] /spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/SQLQuerySuite.scala:602: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `executeCTASWithNonEmptyLocation`'s return type

```

So the main change of this PR is to clean up these compilation warnings.

### Why are the changes needed?

Eliminate compilation warnings in Scala 2.13; this change should be compatible with Scala 2.12.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass the Jenkins or GitHub Action

Closes #32669 from LuciferYang/re-clean-procedure-syntax.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Added the following TreePattern enums:

- EXCHANGE

- IN_SUBQUERY_EXEC

- UPDATE_FIELDS

Migrated `transformAllExpressions` call sites to use `transformAllExpressionsWithPruning`

### Why are the changes needed?

Reduce the number of tree traversals and hence improve the query compilation latency.
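
The saving comes from skipping subtrees that cannot contain the pattern a rule is looking for. The sketch below is a simplified model under assumptions: each node caches the set of pattern kinds found in its subtree, and the names `PruningSketch`, `Kind`, `Node`, and `visitWithPruning` are hypothetical rather than Catalyst's actual classes.

```java
import java.util.Arrays;
import java.util.EnumSet;
import java.util.List;

// Hypothetical illustration of pattern-based pruning during tree traversal.
final class PruningSketch {
    enum Kind { EXCHANGE, IN_SUBQUERY_EXEC, UPDATE_FIELDS, OTHER }

    static final class Node {
        final Kind self;
        final List<Node> children;
        // Every kind that occurs in this node or anywhere below it.
        final EnumSet<Kind> subtreeKinds;

        Node(Kind self, Node... children) {
            this.self = self;
            this.children = Arrays.asList(children);
            this.subtreeKinds = EnumSet.of(self);
            for (Node child : children) {
                this.subtreeKinds.addAll(child.subtreeKinds);
            }
        }
    }

    /** Collects nodes of the wanted kind and returns how many nodes were actually visited. */
    static int visitWithPruning(Node node, Kind wanted, List<Node> matches) {
        if (!node.subtreeKinds.contains(wanted)) {
            return 0; // nothing to find below this node, so skip the whole subtree
        }
        int visited = 1;
        if (node.self == wanted) {
            matches.add(node);
        }
        for (Node child : node.children) {
            visited += visitWithPruning(child, wanted, matches);
        }
        return visited;
    }
}
```

In a six-node tree where only one leaf is `UPDATE_FIELDS`, the traversal touches three nodes instead of six, because the sibling subtree is rejected at its root.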

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Perf diff:

Rule name | Total Time (baseline) | Total Time (experiment) | experiment/baseline
--- | --- | --- | ---
OptimizeUpdateFields | 54646396 | 27444424 | 0.5
ReplaceUpdateFieldsExpression | 24694303 | 2087517 | 0.08

Closes #32643 from sigmod/all_expressions.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

I just noticed that `AdaptiveQueryExecSuite.SPARK-34091: Batch shuffle fetch in AQE partition coalescing` takes more than 10 minutes to finish, which is unacceptable.

This PR sets the shuffle partitions to 10 in that test, so that the test can finish within 5 seconds.

### Why are the changes needed?

speed up the test

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

N/A

Closes #32695 from cloud-fan/test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add a new method `isMaterialized` in `QueryStageExec`.

### Why are the changes needed?

Currently, we use `resultOption().get.isDefined` to check if a query stage has materialized. The code is not readable at a glance. It's better to use a new method like `isMaterialized` to define it.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass CI.

Closes #32689 from ulysses-you/SPARK-35552.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

Various small code simplification/cleanup for OptimizeSkewedJoin

### Why are the changes needed?

code refactor

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing tests

Closes #32685 from cloud-fan/skew-join.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Initial implementation of RocksDBCheckpointMetadata. It persists the metadata for RocksDBFileManager.

### Why are the changes needed?

The RocksDBCheckpointMetadata persists the metadata for each committed batch in JSON format. The object contains all RocksDB file names and the number of total keys.

The metadata binds closely with the directory structure of RocksDBFileManager, as described in the design doc - [Directory Structure and Format for Files stored in DFS](https://docs.google.com/document/d/10wVGaUorgPt4iVe4phunAcjU924fa3-_Kf29-2nxH6Y/edit#heading=h.zgvw85ijoz2).

### Does this PR introduce _any_ user-facing change?

No. Internal implementation only.

### How was this patch tested?

New UT added.

Closes #32272 from xuanyuanking/SPARK-35172.

Lead-authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Co-authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

Spark conv function is from MySQL and it's better to follow the MySQL behavior. MySQL returns the max unsigned long if the input string is too big, and Spark should follow it.

However, Spark behaves differently in two cases:

- MySQL allows leading spaces but Spark does not.

- If the input string is way too long, Spark fails with `ArrayIndexOutOfBoundsException`.

This patch makes `conv` follow the MySQL behavior in those two cases:

- `conv` allows leading spaces.

- `conv` returns the max unsigned long when the input string is way too long.
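The two cases can be illustrated with a small stand-alone sketch. It is not Spark's implementation: `ConvSketch` is a hypothetical helper that uses `BigInt` for simplicity, ignores negative numbers, and assumes that parsing stops at the first character that is not a valid digit in the source base.

```scala
object ConvSketch {
  // 2^64 - 1, the largest unsigned 64-bit value.
  private val MaxUnsignedLong: BigInt = (BigInt(1) << 64) - 1

  def conv(input: String, fromBase: Int, toBase: Int): String = {
    // Case 1: leading spaces are skipped instead of rejected.
    val trimmed = input.dropWhile(_ == ' ')
    // Assumption: only the leading run of valid digits is parsed.
    val digits = trimmed.takeWhile(c => Character.digit(c, fromBase) >= 0)
    val value = digits.foldLeft(BigInt(0)) { (acc, c) =>
      acc * fromBase + Character.digit(c, fromBase)
    }
    // Case 2: values that do not fit are clamped to the max unsigned long.
    value.min(MaxUnsignedLong).toString(toBase).toUpperCase
  }
}
```

With this sketch, `ConvSketch.conv("  15", 10, 16)` yields `F`, and a 100-digit decimal input yields `FFFFFFFFFFFFFFFF` instead of failing.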

### Why are the changes needed?

To make the `conv` function match the behavior of MySQL, which is (almost) the only reference for this function in another DBMS.

### Does this PR introduce _any_ user-facing change?

Yes, as pointed out above

### How was this patch tested?

Add test

Closes #32684 from dgd-contributor/SPARK-33428.

Authored-by: dgd-contributor <dgd_contributor@viettel.com.vn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add a new data source V2 API: `LocalScan`. It is a special Scan that will happen on Driver locally instead of Executors.

### Why are the changes needed?

The new API improves the flexibility of the DSV2 API. It allows developers to implement connectors for data sources of small data sizes.

For example, we can build a data source for Spark History applications on top of the Spark History Server RESTful API. The result set is small, so fetching all the results from the Spark driver is good enough. Making it a data source allows us to run SQL queries with filters or table joins against it.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test

Closes #32678 from gengliangwang/LocalScan.

Lead-authored-by: Gengliang Wang <ltnwgl@gmail.com>

Co-authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup from https://github.com/apache/spark/pull/32547#discussion_r639916474. For LEFT ANTI join, we do not need to depend on the `loaded` variable, because in `codegenAnti` we load `streamedAfter` no more than once (i.e. assign column values from the streamed row which are not used in the join condition).

### Why are the changes needed?

Avoid unnecessary processing in code-gen (though it's just `boolean $loaded = false;`, and `if (!$loaded) { $loaded = true; }`).

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit tests in `ExistenceJoinSuite`.

Closes #32681 from c21/join-followup.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The main code change is:

* Change rule `DemoteBroadcastHashJoin` to `DynamicJoinSelection` and add shuffle hash join selection code.

* Specify a join strategy hint `SHUFFLE_HASH` if AQE thinks a join can be converted to SHJ.

* Skip `preferSortMerge` config check in AQE side if a join can be converted to SHJ.

### Why are the changes needed?

Use AQE runtime statistics to decide whether we can use shuffled hash join instead of sort merge join. Currently, the formula for shuffled hash join selection does not work because the number of shuffle partitions is dynamic.

Add a new config `spark.sql.adaptive.shuffledHashJoinLocalMapThreshold` to decide whether a join can be converted to shuffled hash join safely.
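As a rough illustration of the kind of runtime check such a threshold enables, here is a minimal sketch. It assumes (this is not stated in the description) that the decision compares each shuffle partition's size against the threshold; `ShuffledHashJoinSketch` and `canBuildLocalHashMap` are hypothetical names, not the rule's actual code.

```scala
object ShuffledHashJoinSketch {
  // Hypothetical helper: a side is considered safe for a local hash map
  // only if every one of its shuffle partitions fits under the threshold.
  def canBuildLocalHashMap(
      partitionSizesInBytes: Seq[Long],
      thresholdInBytes: Long): Boolean = {
    partitionSizesInBytes.forall(_ <= thresholdInBytes)
  }
}
```

Checking per-partition sizes rather than the total size matters because the number of partitions is only known at runtime.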

### Does this PR introduce _any_ user-facing change?

Yes, add a new config.

### How was this patch tested?

Add test.

Closes #32550 from ulysses-you/SPARK-35282-2.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add a metric that records how many tasks fall back to sort-based aggregation for hash aggregation. This will help developers and users debug and optimize queries. Object hash aggregation already has a similar metric.

### Why are the changes needed?

Help developers and users to debug and optimize query with hash aggregation.

### Does this PR introduce _any_ user-facing change?

Yes, the added metrics will show up in Spark web UI.

Example:

<img width="604" alt="Screen Shot 2021-05-26 at 12 17 08 AM" src="https://user-images.githubusercontent.com/4629931/119618437-bf3c5880-bdb7-11eb-89bb-5b88db78639f.png">

### How was this patch tested?

Changed unit test in `SQLMetricsSuite.scala`.

Closes #32671 from c21/agg-metrics.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to upgrade json4s from 3.7.0-M5 to 3.7.0-M11

Note: json4s version greater than 3.7.0-M11 is not binary compatible with Spark third party jars

### Why are the changes needed?

Multiple defect fixes and improvements like

- https://github.com/json4s/json4s/issues/750

- https://github.com/json4s/json4s/issues/554

- https://github.com/json4s/json4s/issues/715

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Ran with the existing UTs

Closes #32636 from vinodkc/br_build_upgrade_json4s.

Authored-by: Vinod KC <vinod.kc.in@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Add the function type, such as "scala_udf", "python_udf", "java_udf", "hive", "built-in" to the `ExpressionInfo` for UDF.

### Why are the changes needed?

Make the `ExpressionInfo` of UDF more meaningful

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing and newly added UT

Closes #32587 from linhongliu-db/udf-language.

Authored-by: Linhong Liu <linhong.liu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR improves the interaction between partition coalescing and skew handling by moving the skew join rule ahead of the partition coalescing rule and making corresponding changes to the two rules:

1. Simplify `OptimizeSkewedJoin` as it doesn't need to handle `CustomShuffleReaderExec` anymore.

2. Update `CoalesceShufflePartitions` to support coalescing non-skewed partitions.

### Why are the changes needed?

It's a bit hard to reason about skew join if partitions have been coalesced. A skewed partition needs to be much larger than other partitions and we need to look at the raw sizes before coalescing.

It also makes `OptimizeSkewedJoin` more robust, as we don't need to worry about a skewed partition being coalesced with a small partition and breaking skew join handling.

It also helps with https://github.com/apache/spark/pull/31653 , which needs to move `OptimizeSkewedJoin` to an earlier phase and run before `CoalesceShufflePartitions`.
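To illustrate why raw sizes matter, here is a minimal sketch of a skew check on un-coalesced partition sizes. It assumes a "larger than a factor times the median, and larger than an absolute threshold" rule; `SkewSketch`, `factor` and `thresholdBytes` are hypothetical names used only for illustration, and the input is assumed to be non-empty.

```scala
object SkewSketch {
  // Median of the raw (pre-coalescing) partition sizes; input must be non-empty.
  def median(sizes: Seq[Long]): Long = {
    val sorted = sizes.sorted
    sorted(sorted.length / 2)
  }

  // Indices of partitions that are much larger than the others.
  def skewedPartitions(sizes: Seq[Long], factor: Int, thresholdBytes: Long): Seq[Int] = {
    val med = median(sizes)
    sizes.zipWithIndex.collect {
      case (size, index) if size > med * factor && size > thresholdBytes => index
    }
  }
}
```

If small partitions were merged first, the median would grow and a genuinely skewed partition could stop standing out, which is the reasoning behind running skew handling before coalescing.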

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

new UT and existing tests

Closes #32594 from cloud-fan/shuffle.

Lead-authored-by: Wenchen Fan <wenchen@databricks.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

* Remove `EliminateUnnecessaryJoin` and use `AQEPropagateEmptyRelation` instead.

* Eliminate join, aggregate, limit, repartition, sort, and generate nodes where doing so is beneficial.

### Why are the changes needed?

Make the optimization done by `EliminateUnnecessaryJoin` available in more cases.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Add test.

Closes #32602 from ulysses-you/SPARK-35455.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes an issue where `RemoveRedundantProjects` removes a `ProjectExec` that is needed for generating `UnsafeRow`.

In `DataSourceV2Strategy`, `ProjectExec` will be inserted to ensure internal rows are `UnsafeRow`.

```
private def withProjectAndFilter(
    project: Seq[NamedExpression],
    filters: Seq[Expression],
    scan: LeafExecNode,
    needsUnsafeConversion: Boolean): SparkPlan = {
  val filterCondition = filters.reduceLeftOption(And)
  val withFilter = filterCondition.map(FilterExec(_, scan)).getOrElse(scan)

  if (withFilter.output != project || needsUnsafeConversion) {
    ProjectExec(project, withFilter)
  } else {
    withFilter
  }
}

...

case PhysicalOperation(project, filters, relation: DataSourceV2ScanRelation) =>
  // projection and filters were already pushed down in the optimizer.
  // this uses PhysicalOperation to get the projection and ensure that if the batch scan does
  // not support columnar, a projection is added to convert the rows to UnsafeRow.
  val batchExec = BatchScanExec(relation.output, relation.scan)
  withProjectAndFilter(project, filters, batchExec, !batchExec.supportsColumnar) :: Nil
```

So, the hierarchy of the partial tree should be like `ProjectExec(FilterExec(BatchScan))`.

But `RemoveRedundantProjects` doesn't consider this type of hierarchy, leading to a `ClassCastException`.

A concrete example that reproduces this issue has been reported:

```
import scala.collection.JavaConverters._

import org.apache.iceberg.{PartitionSpec, TableProperties}
import org.apache.iceberg.hadoop.HadoopTables
import org.apache.iceberg.spark.SparkSchemaUtil

import org.apache.spark.sql.{DataFrame, QueryTest, SparkSession}
import org.apache.spark.sql.internal.SQLConf

class RemoveRedundantProjectsTest extends QueryTest {
  override val spark: SparkSession = SparkSession
    .builder()
    .master("local[4]")
    .config("spark.driver.bindAddress", "127.0.0.1")
    .appName(suiteName)
    .getOrCreate()

  test("RemoveRedundantProjects removes non-redundant projects") {
    withSQLConf(
      SQLConf.AUTO_BROADCASTJOIN_THRESHOLD.key -> "-1",
      SQLConf.WHOLESTAGE_CODEGEN_ENABLED.key -> "false",
      SQLConf.REMOVE_REDUNDANT_PROJECTS_ENABLED.key -> "true") {
      withTempDir { dir =>
        val path = dir.getCanonicalPath
        val data = spark.range(3).toDF
        val table = new HadoopTables().create(
          SparkSchemaUtil.convert(data.schema),
          PartitionSpec.unpartitioned(),
          Map(TableProperties.WRITE_NEW_DATA_LOCATION -> path).asJava,
          path)
        data.write.format("iceberg").mode("overwrite").save(path)
        table.refresh()

        val df = spark.read.format("iceberg").load(path)
        val dfX = df.as("x")
        val dfY = df.as("y")
        val join = dfX.filter(dfX("id") > 0).join(dfY, "id")
        join.explain("extended")
        assert(join.count() == 2)
      }
    }
  }
}
```

```
[info] - RemoveRedundantProjects removes non-redundant projects *** FAILED ***
[info] org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 1.0 failed 1 times, most recent failure: Lost task 0.0 in stage 1.0 (TID 4) (xeroxms100.northamerica.corp.microsoft.com executor driver): java.lang.ClassCastException: org.apache.spark.sql.catalyst.expressions.GenericInternalRow cannot be cast to org.apache.spark.sql.catalyst.expressions.UnsafeRow
[info] at org.apache.spark.sql.execution.UnsafeExternalRowSorter.sort(UnsafeExternalRowSorter.java:226)
[info] at org.apache.spark.sql.execution.SortExec.$anonfun$doExecute$1(SortExec.scala:119)
```

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New test.

Closes #32606 from sarutak/fix-project-removal-issue.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Use `SpecificInternalRow` instead of `GenericInternalRow` to avoid boxing / unboxing cost.

### Why are the changes needed?

Since it doesn't know the input row schema, `GenericInternalRow` potentially needs to box input arguments. It's better to use `SpecificInternalRow` instead, since we know the input data types.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32647 from sunchao/specific-input-row.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR fixes a bug with subexpression elimination for CaseWhen statements. https://github.com/apache/spark/pull/30245 added support for creating subexpressions that are present in all branches of conditional statements. However, for a statement to be in "all branches" of a CaseWhen statement, it must also be in the elseValue.

### Why are the changes needed?

Fix a bug where a subexpression can be created and run for branches of a conditional that don't pass. This can cause issues especially with a UDF in a branch that gets executed assuming the condition is true.

### Does this PR introduce _any_ user-facing change?

Yes, fixes a potential bug where a UDF could be eagerly executed even though it might expect to have already passed some form of validation. For example:

```
val col = when($"id" < 0, myUdf($"id"))
spark.range(1).select(when(col > 0, col)).show()
```

`myUdf($"id")` is considered a subexpression and eagerly evaluated, because it is pulled out as a common expression from both executions of the when clause, but if `id >= 0` it should never actually be run.
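The "all branches" rule can be sketched with plain sets. This is a simplified model rather than the optimizer's code: each branch value is represented by the set of subexpressions it contains (as strings), and `CaseWhenSketch` is a hypothetical name.

```scala
object CaseWhenSketch {
  // Returns the subexpressions that are guaranteed to be evaluated no matter
  // which branch is taken.
  def commonToAllBranches(
      branchValues: Seq[Set[String]],
      elseValue: Option[Set[String]]): Set[String] = elseValue match {
    // Without an else value, a row may match no condition at all, so no
    // branch value is guaranteed to run and nothing is common to "all".
    case None => Set.empty[String]
    // With an else value, intersect it with every branch value.
    case Some(elseExprs) => branchValues.foldLeft(elseExprs)(_ intersect _)
  }
}
```

In the example above, the inner `when` has a single branch and no else value, so under this rule `myUdf($"id")` is not treated as common to all branches.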

### How was this patch tested?

Updated existing test with new case.

Closes #32595 from Kimahriman/bug-case-subexpr-elimination.

Authored-by: Adam Binford <adamq43@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

This patch fixes a bug when dealing with common expressions in conditional expressions such as `CaseWhen` during subexpression elimination.

For example, previously we looked for common expressions among the conditions of `CaseWhen`, but their children expressions were counted as well. We should not count these children expressions as common expressions.

### Why are the changes needed?

If the redundant children expressions are counted as common expressions too, they will be redundantly evaluated and miss the subexpression elimination opportunity.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added tests.

Closes #32559 from viirya/SPARK-35410.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR proposes to avoid wrapping if-else to the constant literals for `percentage` and `accuracy` in `percentile_approx`. They are expected to be literals (or foldable expressions).

Pivot works as a two-phase aggregation, and it works by changing the input to `null` for non-matched values (pivot column and value).

Note that pivot supports an optimized version without such logic with changing input to `null` for some types (non-nested types basically). So the issue fixed by this PR is only for complex types.

```scala
val df = Seq(
  ("a", -1.0), ("a", 5.5), ("a", 2.5), ("b", 3.0), ("b", 5.2)).toDF("type", "value")
  .groupBy().pivot("type", Seq("a", "b")).agg(
    percentile_approx(col("value"), array(lit(0.5)), lit(10000)))
df.show()
```

**Before:**

```
org.apache.spark.sql.AnalysisException: cannot resolve 'percentile_approx((IF((type <=> CAST('a' AS STRING)), value, CAST(NULL AS DOUBLE))), (IF((type <=> CAST('a' AS STRING)), array(0.5D), NULL)), (IF((type <=> CAST('a' AS STRING)), 10000, CAST(NULL AS INT))))' due to data type mismatch: The accuracy or percentage provided must be a constant literal;
'Aggregate [percentile_approx(if ((type#7 <=> cast(a as string))) value#8 else cast(null as double), if ((type#7 <=> cast(a as string))) array(0.5) else cast(null as array<double>), if ((type#7 <=> cast(a as string))) 10000 else cast(null as int), 0, 0) AS a#16, percentile_approx(if ((type#7 <=> cast(b as string))) value#8 else cast(null as double), if ((type#7 <=> cast(b as string))) array(0.5) else cast(null as array<double>), if ((type#7 <=> cast(b as string))) 10000 else cast(null as int), 0, 0) AS b#18]
+- Project [_1#2 AS type#7, _2#3 AS value#8]
   +- LocalRelation [_1#2, _2#3]
```

**After:**

```
+-----+-----+
|    a|    b|
+-----+-----+
|[2.5]|[3.0]|
+-----+-----+
```

### Why are the changes needed?

To make percentile_approx work with pivot as expected

### Does this PR introduce _any_ user-facing change?

Yes. It threw an exception but now it returns a correct result as shown above.

### How was this patch tested?

Manually tested and unit test was added.

Closes #32619 from HyukjinKwon/SPARK-35480.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/32411, to fix a mistake and use `sparkSession.sessionState.newHadoopConf` which includes SQL configs instead of `sparkSession.sparkContext.hadoopConfiguration` .

### Why are the changes needed?

fix mistake

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing tests

Closes #32618 from cloud-fan/follow1.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Kent Yao <yao@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/32622 to fix a test case.

### Why are the changes needed?

Fix a wrong test case name and fix the test case to cause the expected error correctly.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #32623 from dongjoon-hyun/SPARK-34558.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/31671

https://github.com/apache/spark/pull/31671 qualifies the warehouse path at the beginning, which may fail Spark startup if something goes wrong, for example when the underlying FileSystem can't be initialized.

This PR falls back to the old behavior and leaves the warehouse path unqualified if qualifying fails.

### Why are the changes needed?

Fix a regression. It's important to be always able to start Spark app (e.g. spark-shell), so that we can debug.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

a new test case

Closes #32622 from cloud-fan/follow2.

Lead-authored-by: Wenchen Fan <wenchen@databricks.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Change the type of `DATASET_ID_TAG` from `Long` to `HashSet[Long]` to allow the logical plan to match multiple datasets.

### Why are the changes needed?

During the transformation from one Dataset to another Dataset, the DATASET_ID_TAG of logical plan won't change if the plan itself doesn't change:

b5241c97b1/sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala (L234-L237)

However, dataset id always changes even if the logical plan doesn't change:

b5241c97b1/sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala (L207-L208)

And this can lead to the mismatch between dataset's id and col's __dataset_id. E.g.,

```scala
test("SPARK-28344: fail ambiguous self join - Dataset.colRegex as column ref") {
  // The test can fail if we change it to:
  // val df1 = spark.range(3).toDF()
  // val df2 = df1.filter($"id" > 0).toDF()
  val df1 = spark.range(3)
  val df2 = df1.filter($"id" > 0)

  withSQLConf(
    SQLConf.FAIL_AMBIGUOUS_SELF_JOIN_ENABLED.key -> "true",
    SQLConf.CROSS_JOINS_ENABLED.key -> "true") {
    assertAmbiguousSelfJoin(df1.join(df2, df1.colRegex("id") > df2.colRegex("id")))
  }
}
```
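The effect of widening the tag from a single id to a set can be sketched without Spark. This is a simplified model under assumptions: `PlanSketch` is a hypothetical stand-in for a logical plan carrying the tag, and the helper names are illustrative only.

```scala
import scala.collection.mutable

object DatasetIdTagSketch {
  // Hypothetical stand-in for a logical plan that carries the dataset-id tag.
  final class PlanSketch {
    val datasetIds: mutable.HashSet[Long] = mutable.HashSet.empty[Long]
  }

  // Every Dataset built on the same unchanged plan adds its own id to the set.
  def tag(plan: PlanSketch, datasetId: Long): Unit = {
    plan.datasetIds += datasetId
    ()
  }

  // A column reference matches the plan if its dataset id is any of the tagged ids.
  def refersTo(plan: PlanSketch, columnDatasetId: Long): Boolean =
    plan.datasetIds.contains(columnDatasetId)
}
```

With a single `Long`, only one of the Datasets sharing the plan could match; with a set, a column created from any of them still resolves to the plan.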

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added unit tests.

Closes #32616 from Ngone51/fix-ambiguous-join.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add tests to check the `EXCEPTION` rebase mode explicitly in the datasources:

- Parquet: `DATE` type and `TIMESTAMP`: `INT96`, `TIMESTAMP_MICROS`, `TIMESTAMP_MILLIS`

- Avro: `DATE` type and `TIMESTAMP`: `timestamp-millis` and `timestamp-micros`.

### Why are the changes needed?

1. To improve test coverage

2. The `EXCEPTION` rebase mode should be checked independently from the default settings.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the affected test suites:

```
$ build/sbt "test:testOnly *AvroV2Suite"
$ build/sbt "test:testOnly *ParquetRebaseDatetimeV1Suite"
```

Closes #32574 from MaxGekk/test-rebase-exception.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR groups exception messages in `sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst`.

### Why are the changes needed?

It will greatly help with the standardization of error messages and their maintenance.

### Does this PR introduce _any_ user-facing change?

No. Error messages remain unchanged.

### How was this patch tested?

No new tests - pass all original tests to make sure it doesn't break any existing behavior.

Closes #32478 from beliefer/SPARK-35063.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to format strings correctly for `PushedFilters`. For example, `explain()` for the query below prints `v in (array('a'))` as `PushedFilters: [In(v, [WrappedArray(a)])]`:

```
scala> sql("create table t (v array<string>) using parquet")
scala> sql("select * from t where v in (array('a'), null)").explain()
== Physical Plan ==
*(1) Filter v#4 IN ([a],null)
+- FileScan parquet default.t[v#4] Batched: false, DataFilters: [v#4 IN ([a],null)], Format: Parquet, Location: InMemoryFileIndex[file:/Users/maropu/Repositories/spark/spark-3.1.1-bin-hadoop2.7/spark-warehouse/t], PartitionFilters: [], PushedFilters: [In(v, [WrappedArray(a),null])], ReadSchema: struct<v:array<string>>
```

This PR makes `explain()` print it as `PushedFilters: [In(v, [[a]])]`:

```
scala> sql("select * from t where v in (array('a'), null)").explain()
== Physical Plan ==
*(1) Filter v#4 IN ([a],null)
+- FileScan parquet default.t[v#4] Batched: false, DataFilters: [v#4 IN ([a],null)], Format: Parquet, Location: InMemoryFileIndex[file:/Users/maropu/Repositories/spark/spark-3.1.1-bin-hadoop2.7/spark-warehouse/t], PartitionFilters: [], PushedFilters: [In(v, [[a],null])], ReadSchema: struct<v:array<string>>
```

NOTE: This PR includes a fix for a bug introduced by #32577 (see the cloud-fan comment: https://github.com/apache/spark/pull/32577/files#r636108150).
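The formatting change for nested values can be sketched as a small recursive renderer. This is an illustration of the idea only, not the code in this PR; `FilterValueSketch.render` is a hypothetical helper.

```scala
object FilterValueSketch {
  // Renders nested sequences as [a,b,...] instead of relying on the
  // collection's own toString (which exposes names such as WrappedArray).
  def render(value: Any): String = value match {
    case null => "null"
    case seq: scala.collection.Seq[_] => seq.map(render).mkString("[", ",", "]")
    case other => other.toString
  }
}
```

For instance, `FilterValueSketch.render(Seq(Seq("a"), null))` produces `[[a],null]`, which matches the value list shown in the second plan above.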

### Why are the changes needed?

To improve explain strings.

### Does this PR introduce _any_ user-facing change?

Yes, this PR improves the explain strings for pushed-down filters.

### How was this patch tested?

Added tests in `SQLQueryTestSuite`.

Closes #32615 from maropu/ExplainPartitionFilters.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Print the invalid value in the config validation error message for `checkValue`, just like `checkValues` does.

### Why are the changes needed?

Invalid configuration values can come from many places. This PR helps different kinds of users and developers identify which config and which value an error is related to.
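A minimal sketch of a validator that reports the offending value is shown below. The helper `ConfigCheckSketch.checkValue`, its signature, and the exact message wording are assumptions made for illustration; they are not taken from Spark's config builder.

```scala
object ConfigCheckSketch {
  // Hypothetical validator: returns the value if valid, otherwise throws an
  // error that names both the config key and the rejected value.
  def checkValue[T](key: String, value: T, isValid: T => Boolean, errorMsg: String): T = {
    if (!isValid(value)) {
      throw new IllegalArgumentException(s"'$value' in $key is invalid. $errorMsg")
    }
    value
  }
}
```

Including the value in the message lets a reader tell at a glance whether the problem is a typo, a wrong unit, or a value coming from an unexpected source.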

### Does this PR introduce _any_ user-facing change?

yes, but only error msg

### How was this patch tested?

yes, modified tests

Closes #32600 from yaooqinn/SPARK-35456.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

CTAS with location clause acts as an insert overwrite. This can cause problems when there are subdirectories within a location directory.

This causes some users to accidentally wipe out directories with very important data. We should not allow CTAS with location to a non-empty directory.

### Why are the changes needed?

Hive already handled this scenario: HIVE-11319

Steps to reproduce:

```scala
sql("""create external table `demo_CTAS`( `comment` string) PARTITIONED BY (`col1` string, `col2` string) STORED AS parquet location '/tmp/u1/demo_CTAS'""")
sql("""INSERT OVERWRITE TABLE demo_CTAS partition (col1='1',col2='1') VALUES ('abc')""")
sql("select* from demo_CTAS").show
sql("""create table ctas1 location '/tmp/u2/ctas1' as select * from demo_CTAS""")
sql("select* from ctas1").show
sql("""create table ctas2 location '/tmp/u2' as select * from demo_CTAS""")
```

Before the fix: Both create table operations will succeed. But values in table ctas1 will be replaced by ctas2 accidentally.

After the fix: `create table ctas2...` will throw `AnalysisException`:

```

org.apache.spark.sql.AnalysisException: CREATE-TABLE-AS-SELECT cannot create table with location to a non-empty directory /tmp/u2 . To allow overwriting the existing non-empty directory, set 'spark.sql.legacy.allowNonEmptyLocationInCTAS' to true.

```

### Does this PR introduce _any_ user-facing change?

Yes, if the location directory is not empty, CTAS with location will throw AnalysisException

```

sql("""create table ctas2 location '/tmp/u2' as select * from demo_CTAS""")

```

```

org.apache.spark.sql.AnalysisException: CREATE-TABLE-AS-SELECT cannot create table with location to a non-empty directory /tmp/u2 . To allow overwriting the existing non-empty directory, set 'spark.sql.legacy.allowNonEmptyLocationInCTAS' to true.

```

`CREATE TABLE AS SELECT` with a non-empty `LOCATION` will throw `AnalysisException`. To restore the behavior before Spark 3.2, set `spark.sql.legacy.allowNonEmptyLocationInCTAS` to `true`; the default value is `false`.

Updated SQL migration guide.

### How was this patch tested?

Test case added in SQLQuerySuite.scala

Closes #32411 from vinodkc/br_fixCTAS_nonempty_dir.

Authored-by: Vinod KC <vinod.kc.in@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

As the title says: fix two places where the documentation for the window operator contains errors.

### Why are the changes needed?

Help people read code for window operator more easily in the future.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32585 from c21/minor-doc.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

AQE has an optimization where it attempts to reuse compatible exchanges but it does not take into account whether the exchanges are columnar or not, resulting in incorrect reuse under some circumstances.

This PR simply changes the key used to look up cached stages. It now uses the canonicalized form of the new query stage (potentially created by a plugin) rather than the canonicalized form of the original exchange.

### Why are the changes needed?

When using the [RAPIDS Accelerator for Apache Spark](https://github.com/NVIDIA/spark-rapids) we sometimes see a new query stage correctly create a row-based exchange and then Spark replaces it with a cached columnar exchange, which is not compatible, and this causes queries to fail.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

The patch has been tested with the query that highlighted this issue. I looked at writing unit tests for this but it would involve implementing a mock columnar exchange in the tests so would be quite a bit of work. If anyone has ideas on other ways to test this I am happy to hear them.

Closes #32195 from andygrove/SPARK-35093.

Authored-by: Andy Grove <andygrove73@gmail.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR adds `sentences`, a string function, which is present as of `2.0.0` but missing in `functions.{scala,py}`.

### Why are the changes needed?

This function can be only used from SQL for now.

It's good if we can use this function from Scala/Python code as well as SQL.

### Does this PR introduce _any_ user-facing change?

Yes. Users can use this function from Scala and Python.

### How was this patch tested?

New test.

Closes #32566 from sarutak/sentences-function.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

1. In HadoopMapReduceCommitProtocol, create the parent directory before renaming custom partition path staging files.

2. In InMemoryCatalog and HiveExternalCatalog, create the new partition directory before renaming the old partition path.

3. Check the return value of FileSystem#rename; if it is false, throw an exception to avoid silent data loss caused by a rename failure.

4. Change DebugFilesystem#rename to match HDFS's behavior (return false without renaming when the dst parent directory does not exist).

### Why are the changes needed?

Depending on the FileSystem#rename implementation, when the destination directory does not exist, the file system may

1. return false without renaming the file or throwing an exception (e.g. HDFS), or

2. create the destination directory, rename the files, and return true (e.g. LocalFileSystem)

In the first case above, renames in HadoopMapReduceCommitProtocol for custom partition paths will fail silently if the destination partition path does not exist. Failed renames can happen when

1. dynamicPartitionOverwrite == true and the custom partition path directories are deleted by the job before the rename; or

2. the custom partition path directories do not exist before the job; or

3. something else goes wrong when the file system handles `rename`

The renames in InMemoryCatalog and HiveExternalCatalog for partition renaming have a similar issue.
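The "create the parent, then check the return value" pattern can be sketched with the JDK's `java.io.File`, whose `renameTo` also reports failure by returning `false` rather than throwing. This is a stand-in for illustration only; it does not use Hadoop's `FileSystem` API, and `SafeRenameSketch` is a hypothetical name.

```scala
import java.io.{File, IOException}

object SafeRenameSketch {
  def renameOrThrow(src: File, dst: File): Unit = {
    // Create the destination's parent directory first, so the rename does not
    // depend on how the underlying file system treats a missing parent.
    val parent = dst.getParentFile
    if (parent != null && !parent.exists() && !parent.mkdirs()) {
      throw new IOException(s"Failed to create parent directory $parent")
    }
    // A false return value means nothing was renamed; surface it as an error
    // instead of silently continuing.
    if (!src.renameTo(dst)) {
      throw new IOException(s"Failed to rename $src to $dst")
    }
  }
}
```

Turning the boolean into an exception is what prevents a failed rename from being mistaken for a successful commit.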

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Modified DebugFilesystem#rename, and added new unit tests.

Without the fix in src code, five InsertSuite tests and one AlterTableRenamePartitionSuite test failed:

InsertSuite.SPARK-20236: dynamic partition overwrite with custom partition path (existing test with modified FS)

```

== Results ==

!== Correct Answer - 1 == == Spark Answer - 0 ==

struct<> struct<>

![2,1,1]

```

InsertSuite.SPARK-35106: insert overwrite with custom partition path

```

== Results ==

!== Correct Answer - 1 == == Spark Answer - 0 ==

struct<> struct<>

![2,1,1]

```

InsertSuite.SPARK-35106: dynamic partition overwrite with custom partition path

```
== Results ==
!== Correct Answer - 2 ==   == Spark Answer - 1 ==
!struct<>                   struct<i:int,part1:int,part2:int>
 [1,1,1]                    [1,1,1]
![1,1,2]
```

InsertSuite.SPARK-35106: Throw exception when rename custom partition paths returns false

```
Expected exception org.apache.spark.SparkException to be thrown, but no exception was thrown
```

InsertSuite.SPARK-35106: Throw exception when rename dynamic partition paths returns false

```
Expected exception org.apache.spark.SparkException to be thrown, but no exception was thrown
```

AlterTableRenamePartitionSuite.ALTER TABLE .. RENAME PARTITION V1: multi part partition (existing test with modified FS)

```
== Results ==
!== Correct Answer - 1 ==   == Spark Answer - 0 ==
 struct<>                   struct<>
![3,123,3]
```

Closes #32530 from YuzhouSun/SPARK-35106.

Authored-by: Yuzhou Sun <yuzhosun@amazon.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes an issue where streaming queries with V2Relation can have a redundant `ProjectExec` in their physical plans.

You can easily reproduce this issue with the following code.

```
import org.apache.spark.sql.streaming.Trigger

val query = spark.
  readStream.
  format("rate").
  option("rowsPerSecond", 1000).
  option("rampUpTime", "10s").
  load().
  selectExpr("timestamp", "100", "value").
  writeStream.
  format("console").
  trigger(Trigger.ProcessingTime("5 seconds")).
  // trigger(Trigger.Continuous("5 seconds")). // You can reproduce with continuous processing too.
  outputMode("append").
  start()
```

The plan tree is here.

### Why are the changes needed?

For better performance.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I ran the same code as above and inspected the resulting plan tree.

Closes #32570 from sarutak/fix-redundant-projectexec.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

To pass the TPCDS-related plan stability tests in Scala 2.13, this PR proposes the two fixes below:

- (1) Sorts elements in the predicate `InSet` and the source filter `In` for printing their nodes.

- (2) Formats nested collection elements (`Seq`, `Array`, and `Set`) recursively in `TreeNode.argString`.

As for (1), it seems that Scala 2.12 and 2.13 print `Set` elements in a different order, so we need to sort them explicitly. As for (2), the `Seq` implementation differs between Scala 2.12 and 2.13, so we need to format nested `Seq` elements recursively to hide the name of the implementation class (see the example below):

```
(74) Expand [codegen id : 20]
Input [5]: [sales#41, RETURNS#42, profit#43, channel#44, id#45]
-Arguments: [ArrayBuffer(sales#41, returns#42, ... <-- scala-2.12
+Arguments: [Vector(sales#41, returns#42, ... <-- scala-2.13
+Arguments: [[(sales#41, returns#42, ... <-- the proposed fix to hide the name of its implementation
```
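The two ideas above (sort set elements before printing, and format nested collections recursively so the implementation class name never leaks) can be sketched in plain Scala. This is an illustrative sketch only, not the actual `TreeNode.argString` code; `ArgFormat.format` and the bracket styles are assumptions made for the example.

```scala
object ArgFormat {
  /**
   * Hypothetical formatter: renders nested collections recursively without
   * exposing names such as ArrayBuffer or Vector.
   */
  def format(arg: Any): String = arg match {
    // Set iteration order is unspecified, so sort the rendered elements.
    // Note that this is a lexicographic sort on strings ("10" sorts before "2").
    case s: scala.collection.Set[_] =>
      s.toSeq.map(format).sorted.mkString("{", ", ", "}")
    case a: Array[_] =>
      a.toSeq.map(format).mkString("[", ", ", "]")
    // scala.collection.Seq matches both mutable and immutable sequences
    // on Scala 2.12 and 2.13.
    case s: scala.collection.Seq[_] =>
      s.map(format).mkString("[", ", ", "]")
    case other => String.valueOf(other)
  }
}
```

Because the output depends only on the elements, an `ArrayBuffer` and a `Vector` holding the same values render identically, which is the property a plan stability test needs across Scala versions.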

### Why are the changes needed?

To pass the tests in Scala v2.13.

### Does this PR introduce _any_ user-facing change?

Yes, this fix changes query explain strings.

### How was this patch tested?

Manually checked.

Closes #32577 from maropu/FixTPCDSTestIssueInScala213.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When creating `Invoke` and `StaticInvoke` for `ScalarFunction`'s magic method, set `propagateNull` to false.

### Why are the changes needed?

When `propagateNull` is true (which is the default value), `Invoke` and `StaticInvoke` return null if any of the arguments is null. For scalar functions this is incorrect, as null handling should be left to the function implementation.
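To illustrate why the flag matters, here is a minimal sketch in plain Scala. It does not use Spark's `Invoke` or `StaticInvoke`; `NullPropagation.invoke` is a hypothetical model of the behavior described above, and `strLenOrZero` is a made-up function that chooses to map null to 0.

```scala
object NullPropagation {
  // Hypothetical scalar function with its own null handling: null counts as length 0.
  def strLenOrZero(s: String): Int = if (s == null) 0 else s.length

  /**
   * Simplified model of an invocation wrapper. With propagateNull = true the
   * wrapper short-circuits on a null argument and the function never runs;
   * None stands in for a SQL NULL result.
   */
  def invoke(f: String => Int, arg: String, propagateNull: Boolean): Option[Int] =
    if (propagateNull && arg == null) None else Some(f(arg))
}
```

In this model, `invoke(strLenOrZero, null, propagateNull = true)` yields `None` even though the function would have returned 0, while `propagateNull = false` lets the function's own logic decide.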

### Does this PR introduce _any_ user-facing change?

Yes. Null arguments are now handled properly by the magic method.

### How was this patch tested?

Added new tests.

Closes #32553 from sunchao/SPARK-35389.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

To pass `subquery/scalar-subquery/scalar-subquery-select.sql` (`SQLQueryTestSuite`) in Scala v2.13, this PR proposes to change the aggregate expr of a test query in the file from `collect_set(...)` to `sort_array(collect_set(...))` because `collect_set` depends on the `mutable.HashSet` implementation and elements in the set are printed in a different order in Scala v2.12/v2.13.
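The underlying idea is that a hash set's iteration order is an implementation detail, so a test that prints the set should sort it first. A small plain-Scala sketch of that idea follows; `DeterministicSet.collectSorted` is a hypothetical name and is not how `collect_set` or `sort_array` is implemented.

```scala
import scala.collection.mutable

object DeterministicSet {
  /**
   * Collects distinct values into a mutable.HashSet, then sorts them so the
   * result does not depend on the hash set's iteration order.
   */
  def collectSorted(values: Seq[Int]): Seq[Int] = {
    val set = mutable.HashSet.empty[Int]
    values.foreach(set += _)
    set.toSeq.sorted
  }
}
```

Sorting after collection gives the same output regardless of which Scala version's `HashSet` produced the intermediate set.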

### Why are the changes needed?

To pass the test in Scala v2.13.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually checked.

Closes #32578 from maropu/FixSQLTestIssueInScala213.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix test failures under Scala 2.13 by making the test `ScalarFunction` `StrLenMagic` public.

### Why are the changes needed?

A few tests fail under Scala 2.13 with an error message like the following:

```
[info] Cause: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 35, Column 121: failed to compile: org.codehaus.commons.compiler.CompileException: File 'generated.java', Line 35, Column 121: No applicable constructor/method found for actual parameters "org.apache.spark.unsafe.types.UTF8String"; candidates are: "public int org.apache.spark.sql.connector.DataSourceV2FunctionSuite$StrLenMagic$.invoke(org.apache.spark.unsafe.types.UTF8String)"
[info]   at org.apache.spark.sql.errors.QueryExecutionErrors$.compilerError(QueryExecutionErrors.scala:387)
[info]   at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$.org$apache$spark$sql$catalyst$expressions$codegen$CodeGenerator$$doCompile(CodeGenerator.scala:1415)
[info]   at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:1501)
```

This seems to be caused by `StrLenMagic` being declared `private`. After removing the `private` keyword, the tests pass.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

```
$ dev/change-scala-version.sh 2.13
$ build/sbt "sql/testOnly *.DataSourceV2FunctionSuite" -Pscala-2.13
```

Closes #32575 from sunchao/SPARK-34981-follow-up.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR fixes the following bug:

```
set spark.sql.legacy.charVarcharAsString=true;
create table chartb01(a char(3));
insert into chartb01 select 'aaaaa';
```

Here we expect the data in table chartb01 to be 'aaa', but the insert fails.

### Why are the changes needed?

Improve backward compatibility. Without the fix, the insert fails with a length check error:

```
spark-sql>
         > create table tchar01(col char(2)) using parquet;
Time taken: 0.767 seconds
spark-sql>
         > insert into tchar01 select 'aaa';
ERROR | Executor task launch worker for task 0.0 in stage 0.0 (TID 0) | Aborting task | org.apache.spark.util.Utils.logError(Logging.scala:94)
java.lang.RuntimeException: Exceeds char/varchar type length limitation: 2
        at org.apache.spark.sql.catalyst.util.CharVarcharCodegenUtils.trimTrailingSpaces(CharVarcharCodegenUtils.java:31)
        at org.apache.spark.sql.catalyst.util.CharVarcharCodegenUtils.charTypeWriteSideCheck(CharVarcharCodegenUtils.java:44)
        at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.project_doConsume_0$(Unknown Source)
        at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown Source)
        at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)
        at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:755)
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$executeTask$1(FileFormatWriter.scala:279)
        at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1500)
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:288)
        at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$write$15(FileFormatWriter.scala:212)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
        at org.apache.spark.scheduler.Task.run(Task.scala:131)
        at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:497)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1466)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:500)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
```

### Does this PR introduce _any_ user-facing change?

No (the legacy config is false by default).

### How was this patch tested?

Added unit tests.

Closes #32501 from fhygh/master.

Authored-by: fhygh <283452027@qq.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Spark doesn't support aggregate functions that mix outer and local references. This PR applies this check earlier so that such queries fail with a clear error message instead of a confusing one, and simplifies the related code in `SubExprUtils.getOuterReferences`. This PR also refines the error message a bit.
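As a rough illustration of the check, consider an aggregate inside a subquery whose argument refers to both a column of the outer query and a column of the subquery's own relation. The sketch below models that rule in plain Scala; `OuterRef`, `LocalRef`, `checkAggregate`, and the error text are hypothetical and do not reflect the actual code in `SubExprUtils` or Spark's real error message.

```scala
object OuterRefCheck {
  sealed trait Ref { def name: String }
  // Hypothetical model: a column resolved against the outer query.
  final case class OuterRef(name: String) extends Ref
  // Hypothetical model: a column resolved against the subquery's own input.
  final case class LocalRef(name: String) extends Ref

  /**
   * Rejects an aggregate function whose arguments mix outer and local
   * references; aggregates using only one kind are accepted.
   */
  def checkAggregate(refs: Seq[Ref]): Either[String, Unit] = {
    val outer = refs.collect { case r: OuterRef => r.name }
    val local = refs.collect { case r: LocalRef => r.name }
    if (outer.nonEmpty && local.nonEmpty) {
      Left(s"Aggregate function mixes outer references (${outer.mkString(", ")}) " +
        s"with local references (${local.mkString(", ")}), which is not supported")
    } else {
      Right(())
    }
  }
}
```

Running such a check early means the failure names the offending references directly, rather than surfacing later as an unrelated resolution error.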

### Why are the changes needed?

Better error message.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Updated tests.

Closes #32503 from cloud-fan/try.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>