### What changes were proposed in this pull request?

This PR renames `spark.sql.pandas.udf.buffer.size` to `spark.sql.execution.pandas.udf.buffer.size` to be more consistent with other pandas configuration prefixes, given:

- `spark.sql.execution.pandas.arrowSafeTypeConversion`

- `spark.sql.execution.pandas.respectSessionTimeZone`

- `spark.sql.legacy.execution.pandas.groupedMap.assignColumnsByName`

- other configurations like `spark.sql.execution.arrow.*`.

### Why are the changes needed?

To make configuration names consistent.

### Does this PR introduce any user-facing change?

No, because this configuration has not been released yet.

### How was this patch tested?

Existing tests should cover this.

Closes #27450 from HyukjinKwon/SPARK-27870-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Prevent unnecessary copies of data during conversion from Arrow to Pandas.

### Why are the changes needed?

During conversion of pyarrow data to pandas, columns are checked for timestamp types and then modified to correct for the local timezone. If the data contains no timestamp types, unnecessary copies of the data can be made. This is most prevalent when checking the columns of a pandas DataFrame, where each series is assigned back to the DataFrame regardless of whether it had timestamps. See https://www.mail-archive.com/devarrow.apache.org/msg17008.html and ARROW-7596 for discussion.
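
The idea can be sketched in plain Python, with lists standing in for pandas Series. The names `localize_timestamps` and `_to_local` are hypothetical and this is not the PySpark implementation; it only illustrates assigning a column back when it actually holds timestamps, so other columns keep their original object.

```python
from datetime import datetime, timedelta, timezone


def _to_local(values, tz):
    # Hypothetical helper: convert timezone-aware datetimes to naive local time.
    return [v.astimezone(tz).replace(tzinfo=None) for v in values]


def localize_timestamps(columns, tz):
    """Convert timestamp columns in place; leave every other column untouched."""
    for name, values in list(columns.items()):
        # Only columns that hold datetimes are rebuilt and assigned back.
        if values and all(isinstance(v, datetime) for v in values):
            columns[name] = _to_local(values, tz)
    return columns


ids = [1, 2, 3]
ts = [datetime(2020, 1, 1, 12, 0, tzinfo=timezone.utc)]
result = localize_timestamps({"id": ids, "ts": ts}, timezone(timedelta(hours=9)))
```

Here `result["id"]` is the very same list object as `ids`, which is the "no unnecessary copy" property the change is after.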

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests

Closes #27358 from BryanCutler/pyspark-pandas-timestamp-copy-fix-SPARK-30640.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

This reverts commit 1d20d13149.

Closes #27351 from gatorsmile/revertSPARK25496.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Minor documentation fix

### Why are the changes needed?

### Does this PR introduce any user-facing change?

### How was this patch tested?

Manually; consider adding tests?

Closes #27295 from deepyaman/patch-2.

Authored-by: Deepyaman Datta <deepyaman.datta@utexas.edu>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds:

- `pyspark.sql.functions.overlay` function to PySpark

- `overlay` function to SparkR

### Why are the changes needed?

Feature parity. At the moment R and Python users can access this function only using SQL or `expr` / `selectExpr`.
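
For reference, the behaviour of SQL `overlay` that these wrappers expose can be modelled in plain Python. This is only a sketch, assuming 1-based positions and a default length equal to the length of the replacement; `overlay_model` is a hypothetical name and not part of PySpark or SparkR.

```python
def overlay_model(src, replace, pos, length=-1):
    """Model of `overlay(src PLACING replace FROM pos [FOR length])` on Python strings."""
    if length < 0:
        # Assumed default: overwrite as many characters as the replacement has.
        length = len(replace)
    start = pos - 1  # SQL positions are 1-based
    return src[:start] + replace + src[start + length:]


replaced = overlay_model("Spark SQL", "CORE", 7)
inserted = overlay_model("Spark SQL", "ANSI ", 7, 0)
```

With `length=0` nothing is overwritten, so the replacement is inserted at `pos`.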

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New unit tests.

Closes #27325 from zero323/SPARK-30607.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to redesign pandas UDFs as described in [the proposal](https://docs.google.com/document/d/1-kV0FS_LF2zvaRh_GhkV32Uqksm_Sq8SvnBBmRyxm30/edit?usp=sharing).

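```python
from pyspark.sql.functions import pandas_udf
import pandas as pd

# The UDF kind is inferred from the type hints (Series -> Series).
@pandas_udf("long")
def plug_one(s: pd.Series) -> pd.Series:
    return s + 1

# Assumes an active SparkSession named `spark`.
spark.range(10).select(plug_one("id")).show()
```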

```
+------------+
|plug_one(id)|
+------------+
|           1|
|           2|
|           3|
|           4|
|           5|
|           6|
|           7|
|           8|
|           9|
|          10|
+------------+
```

Note that this PR also addresses one of the future improvements described [here](https://docs.google.com/document/d/1-kV0FS_LF2zvaRh_GhkV32Uqksm_Sq8SvnBBmRyxm30/edit#heading=h.h3ncjpk6ujqu), "A couple of less-intuitive pandas UDF types" (by zero323).

In short,

- Adds a new, experimental way to define pandas UDFs with type hints, as an alternative to the existing one.

```python
@pandas_udf(schema='...')
def func(c1: Series, c2: Series) -> DataFrame:
    pass
```

- Replaces and/or adds an alias for the three UDF types below, and makes them separate standalone APIs, so that `pandas_udf` is now consistent with regular `udf`s and other expressions.

`df.mapInPandas(udf)` -replace-> `df.mapInPandas(f, schema)`

`df.groupby.apply(udf)` -alias-> `df.groupby.applyInPandas(f, schema)`

`df.groupby.cogroup.apply(udf)` -replace-> `df.groupby.cogroup.applyInPandas(f, schema)`

*`df.groupby.apply` was added in 2.3, while the others were added in master only.

- No deprecation for the existing ways for now.

```python
@pandas_udf(schema='...', functionType=PandasUDFType.SCALAR)
def func(c1, c2):
    pass
```

If users are happy with this, I plan to deprecate the existing way and declare that using type hints is no longer experimental.

One design goal of this PR was to avoid touching the internals (since the old ways are not deprecated for now) and to support type hints with minimal changes, only at the interface.

- Once we deprecate or remove the old ways, I think the internals will need another refactoring. At the very least, we should rename the internal pandas evaluation types.

- If users find that the experimental type hints aren't helpful, we can simply revert the changes at the interface level.

### Why are the changes needed?

In order to address old design issues. Please see [the proposal](https://docs.google.com/document/d/1-kV0FS_LF2zvaRh_GhkV32Uqksm_Sq8SvnBBmRyxm30/edit?usp=sharing).

### Does this PR introduce any user-facing change?

For behaviour changes, No.

It adds new ways to use pandas UDFs by using type hints. See below.

**SCALAR**:

```python
@pandas_udf(schema='...')
def func(c1: Series, c2: DataFrame) -> Series:
    pass  # DataFrame represents a struct column
```

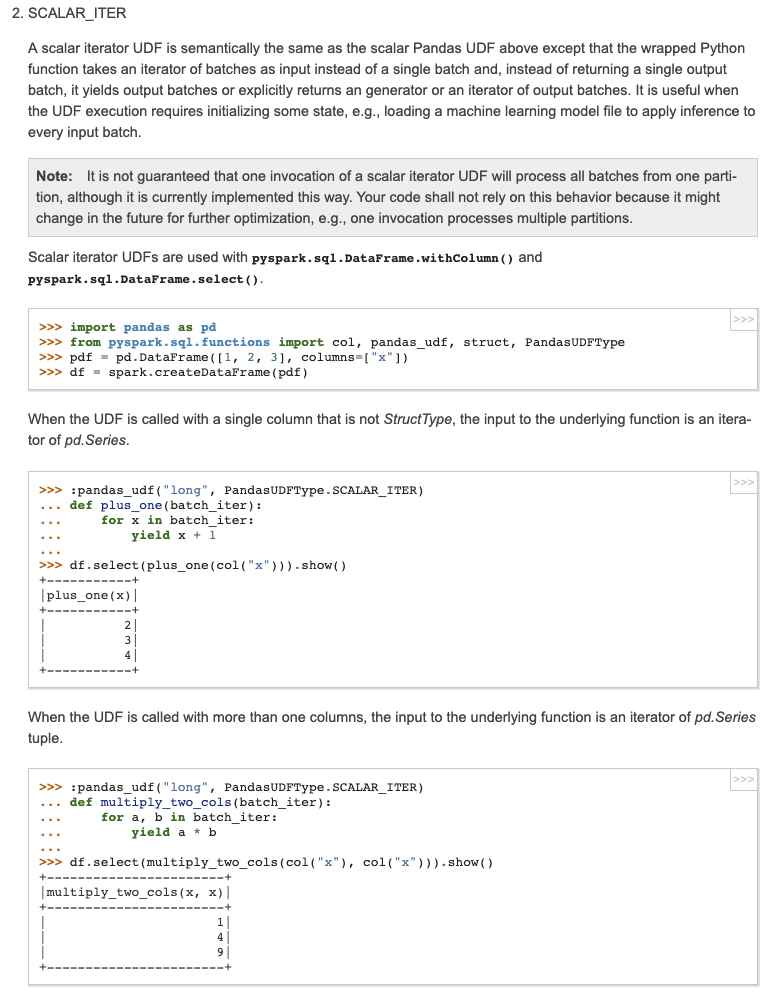

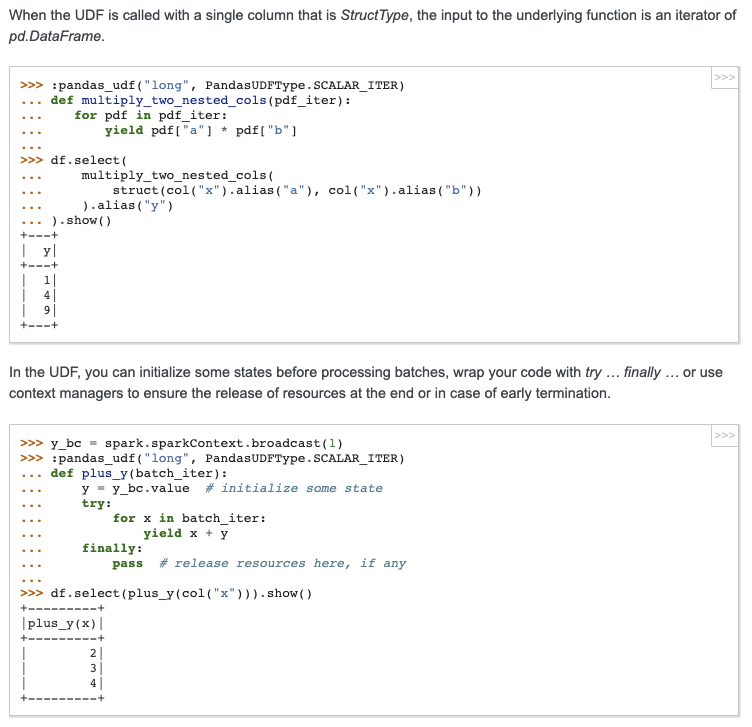

**SCALAR_ITER**:

```python
@pandas_udf(schema='...')
def func(iter: Iterator[Tuple[Series, DataFrame, ...]]) -> Iterator[Series]:
    pass  # Same as SCALAR but wrapped by Iterator
```

**GROUPED_AGG**:

```python
@pandas_udf(schema='...')
def func(c1: Series, c2: DataFrame) -> int:
    pass  # DataFrame represents a struct column
```
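
How the three signatures above can be told apart from type hints alone can be sketched with the standard `typing` module. This is an illustrative sketch, not the PySpark implementation: `Series` and `DataFrame` are hypothetical stand-ins for the pandas classes, and `infer_udf_kind` is a hypothetical helper name.

```python
import collections.abc
import typing
from typing import Iterator


class Series:
    """Hypothetical stand-in for pandas.Series."""


class DataFrame:
    """Hypothetical stand-in for pandas.DataFrame."""


def infer_udf_kind(func):
    """Guess the pandas UDF kind from a function's type hints."""
    hints = typing.get_type_hints(func)
    return_type = hints.pop("return")
    param_types = list(hints.values())

    def is_pandas(tp):
        return tp in (Series, DataFrame)

    def is_iterator(tp):
        return typing.get_origin(tp) is collections.abc.Iterator

    if param_types and all(is_pandas(tp) for tp in param_types):
        # pandas objects in, pandas object out: element-wise UDF;
        # any other return type is treated as an aggregation here.
        return "SCALAR" if is_pandas(return_type) else "GROUPED_AGG"
    if len(param_types) == 1 and is_iterator(param_types[0]) and is_iterator(return_type):
        return "SCALAR_ITER"
    raise ValueError("unsupported type hints: %r" % (hints,))


def plus_one(s: Series) -> Series:
    return s


def total(s: Series) -> int:
    return 0


def plus_one_iter(batches: Iterator[Series]) -> Iterator[Series]:
    return batches
```

Calling `infer_udf_kind` on the three example functions distinguishes them without any `functionType` argument, which is the point of the type-hint based interface.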

**GROUPED_MAP**:

This was added in Spark 2.3 as of SPARK-20396. As described above, it keeps the existing behaviour. Additionally, we now have a new alias `groupby.applyInPandas` for `groupby.apply`. See the example below:

```python
def func(pdf):
    return pdf

df.groupby("...").applyInPandas(func, schema=df.schema)
```

**MAP_ITER**: this is not a pandas UDF anymore

This was added in Spark 3.0 as of SPARK-28198; and this PR replaces the usages. See the example below:

```python
def func(iter):
    for df in iter:
        yield df

df.mapInPandas(func, df.schema)
```

**COGROUPED_MAP**: this is not a pandas UDF anymore

This was added in Spark 3.0 as of SPARK-27463; and this PR replaces the usages. See the example below:

```python
def asof_join(left, right):
    return pd.merge_asof(left, right, on="...", by="...")

df1.groupby("...").cogroup(df2.groupby("...")).applyInPandas(asof_join, schema="...")
```

### How was this patch tested?

Unit tests were added and run against Python 2.7, 3.6 and 3.7.

Closes #27165 from HyukjinKwon/revisit-pandas.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to disallow negative `scale` of `Decimal` in Spark. It brings two behavior changes:

1) For literals like `1.23E4BD` or `1.23E4` (with `spark.sql.legacy.exponentLiteralAsDecimal.enabled`=true, see [SPARK-29956](https://issues.apache.org/jira/browse/SPARK-29956)), we set `(precision, scale)` to (5, 0) rather than (3, -2);

2) A negative-`scale` check is added inside the decimal methods that allow setting `scale` explicitly. If the check fails, an `AnalysisException` is thrown.

Users can still set `spark.sql.legacy.allowNegativeScaleOfDecimal.enabled` to restore the previous behavior.
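
The difference between (3, -2) and (5, 0) for a literal like `1.23E4` can be reproduced with Python's `decimal` module. This is only a sketch of the arithmetic for such literals, not Spark's code; `literal_precision_scale` and its `allow_negative_scale` flag are hypothetical names.

```python
from decimal import Decimal


def literal_precision_scale(literal, allow_negative_scale=False):
    """Return (precision, scale) for a decimal literal such as "1.23E4"."""
    _, digits, exponent = Decimal(literal).as_tuple()
    precision, scale = len(digits), -exponent
    if scale < 0 and not allow_negative_scale:
        # Pad with trailing zeros instead of keeping a negative scale:
        # 1.23E4 is treated as 12300, i.e. (5, 0) rather than (3, -2).
        precision, scale = precision - scale, 0
    return precision, scale


new_behaviour = literal_precision_scale("1.23E4")
legacy_behaviour = literal_precision_scale("1.23E4", allow_negative_scale=True)
```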

### Why are the changes needed?

According to the SQL standard,

> 4.4.2 Characteristics of numbers
>
> An exact numeric type has a precision P and a scale S. P is a positive integer that determines the number of significant digits in a particular radix R, where R is either 2 or 10. S is a non-negative integer.

the scale of Decimal should always be non-negative. Other mainstream databases, like Presto and PostgreSQL, also don't allow negative scale.

Presto:

```

presto:default> create table t (i decimal(2, -1));

Query 20191213_081238_00017_i448h failed: line 1:30: mismatched input '-'. Expecting: <integer>, <type>

create table t (i decimal(2, -1))

```

PostgreSQL:

```

postgres=# create table t(i decimal(2, -1));

ERROR: NUMERIC scale -1 must be between 0 and precision 2

LINE 1: create table t(i decimal(2, -1));

^

```

And, actually, Spark itself already doesn't allow creating a table with negative-scale decimal types using SQL:

```

scala> spark.sql("create table t(i decimal(2, -1))");

org.apache.spark.sql.catalyst.parser.ParseException:

no viable alternative at input 'create table t(i decimal(2, -'(line 1, pos 28)

== SQL ==

create table t(i decimal(2, -1))

----------------------------^^^

at org.apache.spark.sql.catalyst.parser.ParseException.withCommand(ParseDriver.scala:263)

at org.apache.spark.sql.catalyst.parser.AbstractSqlParser.parse(ParseDriver.scala:130)

at org.apache.spark.sql.execution.SparkSqlParser.parse(SparkSqlParser.scala:48)

at org.apache.spark.sql.catalyst.parser.AbstractSqlParser.parsePlan(ParseDriver.scala:76)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:605)

at org.apache.spark.sql.catalyst.QueryPlanningTracker.measurePhase(QueryPlanningTracker.scala:111)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:605)

... 35 elided

```

However, it is still possible to create such a table or `DataFrame` using the Spark SQL programming API:

```
scala> val tb =
  CatalogTable(
    TableIdentifier("test", None),
    CatalogTableType.MANAGED,
    CatalogStorageFormat.empty,
    StructType(StructField("i", DecimalType(2, -1)) :: Nil))
```

```

scala> spark.sql("SELECT 1.23E4BD")

res2: org.apache.spark.sql.DataFrame = [1.23E+4: decimal(3,-2)]

```

These two different behaviors could confuse users.

On the other hand, even if a user creates such a table or `DataFrame` with a negative-scale decimal type, the data can't be written out in formats like `parquet` or `orc`, because these formats have their own check for negative scale and fail on it.

```

scala> spark.sql("SELECT 1.23E4BD").write.saveAsTable("parquet")

19/12/13 17:37:04 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)

java.lang.IllegalArgumentException: Invalid DECIMAL scale: -2

at org.apache.parquet.Preconditions.checkArgument(Preconditions.java:53)

at org.apache.parquet.schema.Types$BasePrimitiveBuilder.decimalMetadata(Types.java:495)

at org.apache.parquet.schema.Types$BasePrimitiveBuilder.build(Types.java:403)

at org.apache.parquet.schema.Types$BasePrimitiveBuilder.build(Types.java:309)

at org.apache.parquet.schema.Types$Builder.named(Types.java:290)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.convertField(ParquetSchemaConverter.scala:428)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.convertField(ParquetSchemaConverter.scala:334)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.$anonfun$convert$2(ParquetSchemaConverter.scala:326)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

at scala.collection.Iterator.foreach(Iterator.scala:941)

at scala.collection.Iterator.foreach$(Iterator.scala:941)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1429)

at scala.collection.IterableLike.foreach(IterableLike.scala:74)

at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

at org.apache.spark.sql.types.StructType.foreach(StructType.scala:99)

at scala.collection.TraversableLike.map(TraversableLike.scala:238)

at scala.collection.TraversableLike.map$(TraversableLike.scala:231)

at org.apache.spark.sql.types.StructType.map(StructType.scala:99)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.convert(ParquetSchemaConverter.scala:326)

at org.apache.spark.sql.execution.datasources.parquet.ParquetWriteSupport.init(ParquetWriteSupport.scala:97)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:388)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:349)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:150)

at org.apache.spark.sql.execution.datasources.SingleDirectoryDataWriter.newOutputWriter(FileFormatDataWriter.scala:124)

at org.apache.spark.sql.execution.datasources.SingleDirectoryDataWriter.<init>(FileFormatDataWriter.scala:109)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:264)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$write$15(FileFormatWriter.scala:205)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:441)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:444)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

```

So, I think it would be better to disallow negative scale entirely and make the behaviors above consistent.

### Does this PR introduce any user-facing change?

Yes, if `spark.sql.legacy.allowNegativeScaleOfDecimal.enabled=false`, users can no longer create a Decimal value with a negative scale.

### How was this patch tested?

Added new tests in `ExpressionParserSuite` and `DecimalSuite`;

Updated `SQLQueryTestSuite`.

Closes #26881 from Ngone51/nonnegative-scale.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

https://github.com/apache/spark/pull/26809 added the `Dataset.tail` API. It would be good to have it in the PySpark API as well.

### Why are the changes needed?

To support consistent APIs.

### Does this PR introduce any user-facing change?

No. It adds a new API.

### How was this patch tested?

Manually tested and doctest was added.

Closes #27251 from HyukjinKwon/SPARK-30539.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Another followup of 4398dfa709

I missed two more tests that were added:

```
======================================================================
ERROR [0.133s]: test_to_pandas_from_mixed_dataframe (pyspark.sql.tests.test_dataframe.DataFrameTests)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/home/jenkins/python/pyspark/sql/tests/test_dataframe.py", line 617, in test_to_pandas_from_mixed_dataframe
    self.assertTrue(np.all(pdf_with_only_nulls.dtypes == pdf_with_some_nulls.dtypes))
AssertionError: False is not true

======================================================================
ERROR [0.061s]: test_to_pandas_from_null_dataframe (pyspark.sql.tests.test_dataframe.DataFrameTests)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/home/jenkins/python/pyspark/sql/tests/test_dataframe.py", line 588, in test_to_pandas_from_null_dataframe
    self.assertEqual(types[0], np.float64)
AssertionError: dtype('O') != <class 'numpy.float64'>
----------------------------------------------------------------------
```

### Why are the changes needed?

To make the test independent of default values of configuration.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually tested and Jenkins should test.

Closes #27250 from HyukjinKwon/SPARK-29188-followup2.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to explicitly disable Arrow execution for the test of toPandas empty types. If `spark.sql.execution.arrow.pyspark.enabled` is enabled by default, this test alone fails as below:

```
======================================================================
ERROR [0.205s]: test_to_pandas_from_empty_dataframe (pyspark.sql.tests.test_dataframe.DataFrameTests)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/.../pyspark/sql/tests/test_dataframe.py", line 568, in test_to_pandas_from_empty_dataframe
    self.assertTrue(np.all(dtypes_when_empty_df == dtypes_when_nonempty_df))
AssertionError: False is not true
----------------------------------------------------------------------
```

It is best to explicitly disable Arrow execution for a test that only works when it is disabled.

### Why are the changes needed?

To make the test independent of default values of configuration.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually tested and Jenkins should test.

Closes #27247 from HyukjinKwon/SPARK-29188-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to remove the SQL config `spark.sql.execution.pandas.respectSessionTimeZone` which has been deprecated since Spark 2.3.

### Why are the changes needed?

To improve code maintainability.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

By running Python tests: https://spark.apache.org/docs/latest/building-spark.html#pyspark-tests-with-maven-or-sbt

Closes #27218 from MaxGekk/remove-respectSessionTimeZone.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/27109. It should match the parameter lists in `createDataFrame`.

### Why are the changes needed?

To pass the parameters that are supposed to be passed.

### Does this PR introduce any user-facing change?

No (it's only in master)

### How was this patch tested?

Manually tested, and existing tests should cover this.

Closes #27225 from HyukjinKwon/SPARK-30434-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Fix all the tests that fail when AQE is enabled.

### Why are the changes needed?

Run more tests with AQE to catch bugs, and make it easier to enable AQE by default in the future.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests

Closes #26813 from JkSelf/enableAQEDefault.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Remove the sorting of PySpark SQL Row fields, which were previously sorted alphabetically by name, for Python versions 3.6 and above. Field order now matches the order in which the fields are entered. Rows are used like tuples and are applied to a schema by position. For Python versions < 3.6, the order of kwargs is not guaranteed, so fields are still sorted automatically as in previous versions of Spark.

### Why are the changes needed?

This caused inconsistent behavior in that local Rows could be applied to a schema by matching names, but once serialized the Row could only be used by position and the fields were possibly in a different order.

### Does this PR introduce any user-facing change?

Yes, Row fields are no longer sorted alphabetically but are kept in the order entered. For Python < 3.6, `kwargs` cannot guarantee the order as entered, so `Row`s are automatically sorted.

An environment variable "PYSPARK_ROW_FIELD_SORTING_ENABLED" can be set that will override construction of `Row` to maintain compatibility with Spark 2.x.
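
The effect of the switch can be sketched without Spark. `row_from_kwargs` is a hypothetical helper, not PySpark's `Row`, and the way the environment variable is parsed here (the string `true`, case-insensitive) is an assumption made for illustration.

```python
import os


def row_from_kwargs(**fields):
    """Return (names, values) in the order a Row built from kwargs might use."""
    # Assumption: the variable is treated as enabled only when it equals "true".
    sort_enabled = (
        os.environ.get("PYSPARK_ROW_FIELD_SORTING_ENABLED", "false").lower() == "true"
    )
    # On Python 3.6+, kwargs keep the order in which they were passed.
    names = sorted(fields) if sort_enabled else list(fields)
    return names, tuple(fields[name] for name in names)
```

With the variable unset, `row_from_kwargs(b=1, a=2)` keeps `b` before `a`; with it set to `true`, the fields come back sorted by name, as in Spark 2.x.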

### How was this patch tested?

Existing tests are run with PYSPARK_ROW_FIELD_SORTING_ENABLED=true and added new test with unsorted fields for Python 3.6+

Closes #26496 from BryanCutler/pyspark-remove-Row-sorting-SPARK-29748.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

### What changes were proposed in this pull request?

This PR adds a note that we're not adding "pandas compatible" aliases anymore.

### Why are the changes needed?

We added "pandas compatible" aliases as of https://github.com/apache/spark/pull/5544 and https://github.com/apache/spark/pull/6066 . There are too many differences and I don't think it makes sense to add such aliases anymore at this moment.

I was even considering deprecating them, but decided to take a more conservative approach by just documenting it.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests should cover this.

Closes #27142 from HyukjinKwon/SPARK-30464.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to move pandas-related functionality into a `pandas` package. Namely:

```bash
pyspark/sql/pandas
├── __init__.py
├── conversion.py  # Conversion between pandas <> PySpark DataFrames
├── functions.py   # pandas_udf
├── group_ops.py   # Grouped UDF / Cogrouped UDF + groupby.apply, groupby.cogroup.apply
├── map_ops.py     # Map Iter UDF + mapInPandas
├── serializers.py # pandas <> PyArrow serializers
├── types.py       # Type utils between pandas <> PyArrow
└── utils.py       # Version requirement checks
```

In order to locate `groupby.apply`, `groupby.cogroup.apply`, `mapInPandas`, `toPandas`, and `createDataFrame(pdf)` separately under the `pandas` sub-package, I had to use a mix-in approach, which the Scala side often uses via `trait` and which pandas itself also uses (see `IndexOpsMixin` as an example) to group related functionality. You can think of it as being like Scala's self-typed trait. See the structure below:

```python
class PandasMapOpsMixin(object):
    def mapInPandas(self, func, schema):
        ...
        return ...

    # other Pandas <> PySpark APIs
```

```python
class DataFrame(PandasMapOpsMixin):
    # other DataFrame APIs equivalent to Scala side.
    ...
```
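
A toy version of the mix-in structure shows how a method living in a separate class can still rely on state owned by the host class. All names here (`MapOpsMixin`, `ToyFrame`, `map_batches`, `_batches`) are hypothetical and only illustrate the pattern, not the actual PySpark classes.

```python
class MapOpsMixin:
    """Hypothetical mixin: relies on the host class providing `_batches`."""

    def map_batches(self, func):
        # `self` is expected to be a ToyFrame, similar to a self-typed trait.
        return ToyFrame([func(batch) for batch in self._batches])


class ToyFrame(MapOpsMixin):
    """Hypothetical host class holding its data as a list of batches."""

    def __init__(self, batches):
        self._batches = list(batches)

    def collect(self):
        return [row for batch in self._batches for row in batch]


frame = ToyFrame([[1, 2], [3]])
doubled = frame.map_batches(lambda batch: [x * 2 for x in batch])
```

The mixin can live in its own module while `ToyFrame` keeps only the core API, which mirrors how the pandas-specific methods are separated from `DataFrame`.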

Yes, this is a big PR, but the changes are mostly just code being moved around, except for `createDataFrame`, whose methods I had to split.

### Why are the changes needed?

pandas functionality is scattered here and there, and I myself get lost about where things are. Also, making a change that applies to all pandas-related features is almost impossible right now.

Also, after this change, `DataFrame` and `SparkSession` become more consistent with Scala side since pandas is specific to Python, and this change separates pandas-specific APIs away from `DataFrame` or `SparkSession`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests should cover this. Also, I manually built the PySpark API documentation and checked it.

Closes #27109 from HyukjinKwon/pandas-refactoring.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds a note to the SQL function docs as well that `first` and `last` can be non-deterministic.

This is already documented in `functions.scala`.

### Why are the changes needed?

Some people read only the SQL docs.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Jenkins will test.

Closes #27099 from HyukjinKwon/SPARK-30335.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds a note that UserDefinedFunction's constructor is private.

### Why are the changes needed?

To match with Scala side. Scala side does not have it at all.

### Does this PR introduce any user-facing change?

Doc-only change, but it explicitly declares that UserDefinedFunction's constructor is private.

### How was this patch tested?

Jenkins

Closes #27101 from HyukjinKwon/SPARK-30430.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up of https://github.com/apache/spark/pull/26780

In https://github.com/apache/spark/pull/26780, a new Avro data source option `actualSchema` is introduced for setting the original Avro schema in function `from_avro`, while the expected schema is supposed to be set in the parameter `jsonFormatSchema` of `from_avro`.

However, there is another Avro data source option, `avroSchema`. It is used for setting the expected schema in reading and writing.

This PR uses the `avroSchema` option for reading Avro data with an evolved schema and removes the new option `actualSchema`.

### Why are the changes needed?

Unify and simplify the Avro data source options.

### Does this PR introduce any user-facing change?

Yes.

To deserialize Avro data with an evolved schema, before changes:

```

from_avro('col, expectedSchema, ("actualSchema" -> actualSchema))

```

After changes:

```

from_avro('col, actualSchema, ("avroSchema" -> expectedSchema))

```

The second parameter is always the actual Avro schema after changes.

### How was this patch tested?

Updated the existing tests in https://github.com/apache/spark/pull/26780

Closes #27045 from gengliangwang/renameAvroOption.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds and exposes the options `recursiveFileLookup` and `pathGlobFilter` in file sources, and `mergeSchema` in ORC, in the documentation.

- `recursiveFileLookup` at file sources: https://github.com/apache/spark/pull/24830 ([SPARK-27627](https://issues.apache.org/jira/browse/SPARK-27627))

- `pathGlobFilter` at file sources: https://github.com/apache/spark/pull/24518 ([SPARK-27990](https://issues.apache.org/jira/browse/SPARK-27990))

- `mergeSchema` at ORC: https://github.com/apache/spark/pull/24043 ([SPARK-11412](https://issues.apache.org/jira/browse/SPARK-11412))

**Note that** `timeZone` option was not moved from `DataFrameReader.options` as I assume it will likely affect other datasources as well once DSv2 is complete.

### Why are the changes needed?

To document available options in sources properly.

### Does this PR introduce any user-facing change?

In PySpark, `pathGlobFilter` can be set via `DataFrameReader.(text|orc|parquet|json|csv)` and `DataStreamReader.(text|orc|parquet|json|csv)`.

### How was this patch tested?

Manually built the doc and checked the output. Option setting in PySpark is rather a logical change. I manually tested one only:

```bash

$ ls -al tmp

...

-rw-r--r-- 1 hyukjin.kwon staff 3 Dec 20 12:19 aa

-rw-r--r-- 1 hyukjin.kwon staff 3 Dec 20 12:19 ab

-rw-r--r-- 1 hyukjin.kwon staff 3 Dec 20 12:19 ac

-rw-r--r-- 1 hyukjin.kwon staff 3 Dec 20 12:19 cc

```

```python

>>> spark.read.text("tmp", pathGlobFilter="*c").show()

```

```
+-----+
|value|
+-----+
|   ac|
|   cc|
+-----+
```
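
The filtering seen in this manual test can be approximated with Python's `fnmatch` module. This is only an analogy for the glob pattern `*c` applied to file names; Spark's own matching may differ in details, and `filter_paths` is a hypothetical helper.

```python
from fnmatch import fnmatchcase


def filter_paths(names, pattern):
    """Keep only the file names that match the glob pattern (case-sensitive)."""
    return [name for name in names if fnmatchcase(name, pattern)]


files = ["aa", "ab", "ac", "cc"]
matched = filter_paths(files, "*c")
```

`matched` contains the two file names ending in `c`, the same files whose contents appear in the output above.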

Closes #26958 from HyukjinKwon/doc-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to fix documentation for slide function. Fixed the spacing issue and added some parameter related info.

### Why are the changes needed?

Documentation improvement

### Does this PR introduce any user-facing change?

No (doc-only change).

### How was this patch tested?

Manually tested by documentation build.

Closes #26896 from bboutkov/pyspark_doc_fix.

Authored-by: Boris Boutkov <boris.boutkov@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR mainly targets:

1. Expose only `explain(mode: String)` on the Scala side

2. Clean up related code

- Hide `ExplainMode` under the private `execution` package. There is no particular reason other than that `ExplainUtils` exists there.

- Use the `case object` + `trait` pattern in `ExplainMode`, following `ParseMode`.

- Move `Dataset.toExplainString` to `QueryExecution.explainString`, following `QueryExecution.simpleString`, and deduplicate the code in `ExplainCommand`.

- Use `ExplainMode` in `ExplainCommand` too.

- Add `explainString` to `PythonSQLUtils` to avoid unexpected PySpark test failures when refactoring the Scala side.

### Why are the changes needed?

To minimise exposed APIs, deduplicate, and clean up.

### Does this PR introduce any user-facing change?

`Dataset.explain(mode: ExplainMode)` will be removed (which only exists in master).

### How was this patch tested?

Manually tested, and existing tests should cover this.

Closes #26898 from HyukjinKwon/SPARK-30200-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR is a followup of #26861 to address minor comments from viirya.

### Why are the changes needed?

For better error messages.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually tested.

Closes #26886 from maropu/SPARK-30231-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR intends to support the explain modes implemented in #26829 for PySpark.

### Why are the changes needed?

For better debugging info in PySpark DataFrames.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UTs.

Closes #26861 from maropu/ExplainModeInPython.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

An empty Spark DataFrame converted to a Pandas DataFrame wouldn't have the right column types. Several type mappings were missing.

### Why are the changes needed?

Empty Spark DataFrames can be used to write unit tests, and verified by converting them to Pandas first. But this can fail when the column types are wrong.

### Does this PR introduce any user-facing change?

Yes; the error reported in the JIRA issue should not happen anymore.

### How was this patch tested?

Through unit tests in `pyspark.sql.tests.test_dataframe.DataFrameTests#test_to_pandas_from_empty_dataframe`

Closes #26747 from dlindelof/SPARK-29188.

Authored-by: David <dlindelof@expediagroup.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

Follow up of https://github.com/apache/spark/pull/24405

### What changes were proposed in this pull request?

The current implementation of _from_avro_ and _AvroDataToCatalyst_ doesn't allow doing schema evolution since it requires the deserialization of an Avro record with the exact same schema with which it was serialized.

The proposed change is to add a new option `actualSchema` to allow passing the schema used to serialize the records. This allows using a different compatible schema for reading by passing both schemas to _GenericDatumReader_. If no writer's schema is provided, nothing changes from before.

### Why are the changes needed?

Consider the following example.

```
// schema ID: 1
val schema1 = """
{
  "type": "record",
  "name": "MySchema",
  "fields": [
    {"name": "col1", "type": "int"},
    {"name": "col2", "type": "string"}
  ]
}
"""

// schema ID: 2
val schema2 = """
{
  "type": "record",
  "name": "MySchema",
  "fields": [
    {"name": "col1", "type": "int"},
    {"name": "col2", "type": "string"},
    {"name": "col3", "type": "string", "default": ""}
  ]
}
"""
```

The two schemas are compatible - i.e. you can use `schema2` to deserialize events serialized with `schema1`, in which case there will be the field `col3` with the default value.

Now imagine that you have two dataframes (read from batch or streaming), one with Avro events from schema1 and the other with events from schema2. **We want to combine them into one dataframe** for storing or further processing.

With the current `from_avro` function we can only decode each of them with the corresponding schema:

```
scala> val df1 = ... // Avro events created with schema1
df1: org.apache.spark.sql.DataFrame = [eventBytes: binary]

scala> val decodedDf1 = df1.select(from_avro('eventBytes, schema1) as "decoded")
decodedDf1: org.apache.spark.sql.DataFrame = [decoded: struct<col1: int, col2: string>]

scala> val df2 = ... // Avro events created with schema2
df2: org.apache.spark.sql.DataFrame = [eventBytes: binary]

scala> val decodedDf2 = df2.select(from_avro('eventBytes, schema2) as "decoded")
decodedDf2: org.apache.spark.sql.DataFrame = [decoded: struct<col1: int, col2: string, col3: string>]
```

but then `decodedDf1` and `decodedDf2` have different Spark schemas and we can't union them. Instead, with the proposed change we can decode `df1` in the following way:

```
scala> import scala.collection.JavaConverters._

scala> val decodedDf1 = df1.select(from_avro(data = 'eventBytes, jsonFormatSchema = schema2, options = Map("actualSchema" -> schema1).asJava) as "decoded")
decodedDf1: org.apache.spark.sql.DataFrame = [decoded: struct<col1: int, col2: string, col3: string>]
```

so that both dataframes have the same schemas and can be merged.
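The resolution rule behind this can be sketched in plain Python. This is only a toy illustration of reading a record written with `schema1` through `schema2`; it is not how Avro's `GenericDatumReader` is implemented, and `resolve_record` is a hypothetical helper name.

```python
import json

# Writer schema (schema1) and reader schema (schema2) from the example above.
WRITER_SCHEMA = json.loads("""
{"type": "record", "name": "MySchema", "fields": [
  {"name": "col1", "type": "int"},
  {"name": "col2", "type": "string"}
]}
""")
READER_SCHEMA = json.loads("""
{"type": "record", "name": "MySchema", "fields": [
  {"name": "col1", "type": "int"},
  {"name": "col2", "type": "string"},
  {"name": "col3", "type": "string", "default": ""}
]}
""")


def resolve_record(record, writer_schema, reader_schema):
    """Hypothetical helper: project a record written with writer_schema onto reader_schema."""
    written = {field["name"] for field in writer_schema["fields"]}
    resolved = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in written:
            # The field was serialized, so take its value as-is.
            resolved[name] = record[name]
        elif "default" in field:
            # The field is new in the reader schema, so fall back to its default.
            resolved[name] = field["default"]
        else:
            raise ValueError("no value or default for field %r" % name)
    return resolved


old_event = {"col1": 1, "col2": "a"}
print(resolve_record(old_event, WRITER_SCHEMA, READER_SCHEMA))
```

Every record resolved this way has the three fields of `schema2`, which is why the decoded dataframes end up with the same Spark schema.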

### Does this PR introduce any user-facing change?

This PR allows users to pass a new configuration but it doesn't affect current code.

### How was this patch tested?

A new unit test was added.

Closes #26780 from Fokko/SPARK-27506.

Lead-authored-by: Fokko Driesprong <fokko@apache.org>

Co-authored-by: Gianluca Amori <gianluca.amori@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

This PR is a follow-up to #24043 and cousin of #26730. It exposes the `mergeSchema` option directly in the ORC APIs.

### Why are the changes needed?

So the Python API matches the Scala API.

### Does this PR introduce any user-facing change?

Yes, it adds a new option directly in the ORC reader method signatures.

### How was this patch tested?

I tested this manually as follows:

```

>>> spark.range(3).write.orc('test-orc')

>>> spark.range(3).withColumnRenamed('id', 'name').write.orc('test-orc/nested')

>>> spark.read.orc('test-orc', recursiveFileLookup=True, mergeSchema=True)

DataFrame[id: bigint, name: bigint]

>>> spark.read.orc('test-orc', recursiveFileLookup=True, mergeSchema=False)

DataFrame[id: bigint]

>>> spark.conf.set('spark.sql.orc.mergeSchema', True)

>>> spark.read.orc('test-orc', recursiveFileLookup=True)

DataFrame[id: bigint, name: bigint]

>>> spark.read.orc('test-orc', recursiveFileLookup=True, mergeSchema=False)

DataFrame[id: bigint]

```

Closes #26755 from nchammas/SPARK-30113-ORC-mergeSchema.

Authored-by: Nicholas Chammas <nicholas.chammas@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This change properly documents the `mergeSchema` option directly in the Python APIs for reading Parquet data.

### Why are the changes needed?

The docstring for `DataFrameReader.parquet()` mentions `mergeSchema` but doesn't show it in the API. It seems like a simple oversight.

Before this PR, you'd have to do this to use `mergeSchema`:

```python

spark.read.option('mergeSchema', True).parquet('test-parquet').show()

```

After this PR, you can use the option as (I believe) it was intended to be used:

```python

spark.read.parquet('test-parquet', mergeSchema=True).show()

```

### Does this PR introduce any user-facing change?

Yes, this PR changes the signatures of `DataFrameReader.parquet()` and `DataStreamReader.parquet()` to match their docstrings.

### How was this patch tested?

Testing the `mergeSchema` option directly seems to be left to the Scala side of the codebase. I tested my change manually to confirm the API works.

I also confirmed that setting `spark.sql.parquet.mergeSchema` at the session does not get overridden by leaving `mergeSchema` at its default when calling `parquet()`:

```
>>> spark.conf.set('spark.sql.parquet.mergeSchema', True)
>>> spark.range(3).write.parquet('test-parquet/id')
>>> spark.range(3).withColumnRenamed('id', 'name').write.parquet('test-parquet/name')
>>> spark.read.option('recursiveFileLookup', True).parquet('test-parquet').show()
+----+----+
|  id|name|
+----+----+
|null|   1|
|null|   2|
|null|   0|
|   1|null|
|   2|null|
|   0|null|
+----+----+
>>> spark.read.option('recursiveFileLookup', True).parquet('test-parquet', mergeSchema=False).show()
+----+
|  id|
+----+
|null|
|null|
|null|
|   1|
|   2|
|   0|
+----+
```

Closes #26730 from nchammas/parquet-merge-schema.

Authored-by: Nicholas Chammas <nicholas.chammas@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

As a follow-up to #24830, this PR adds the `recursiveFileLookup` option to the Python DataFrameReader API.

### Why are the changes needed?

This PR maintains Python feature parity with Scala.

### Does this PR introduce any user-facing change?

Yes.

Before this PR, you'd only be able to use this option as follows:

```python

spark.read.option("recursiveFileLookup", True).text("test-data").show()

```

With this PR, you can reference the option from within the format-specific method:

```python

spark.read.text("test-data", recursiveFileLookup=True).show()

```

This option now also shows up in the Python API docs.

### How was this patch tested?

I tested this manually by creating the following directories with dummy data:

```
test-data
├── 1.txt
└── nested
    └── 2.txt
test-parquet
├── nested
│   ├── _SUCCESS
│   ├── part-00000-...-.parquet
├── _SUCCESS
├── part-00000-...-.parquet
```

I then ran the following tests and confirmed the output looked good:

```python

spark.read.parquet("test-parquet", recursiveFileLookup=True).show()

spark.read.text("test-data", recursiveFileLookup=True).show()

spark.read.csv("test-data", recursiveFileLookup=True).show()

```

`python/pyspark/sql/tests/test_readwriter.py` seems pretty sparse. I'm happy to add my tests there, though it seems we have been deferring testing like this to the Scala side of things.

Closes #26718 from nchammas/SPARK-27990-recursiveFileLookup-python.

Authored-by: Nicholas Chammas <nicholas.chammas@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Upgrade Apache Arrow to version 0.15.1. This includes Java artifacts and increases the minimum required version of PyArrow also.

Version 0.12.0 to 0.15.1 includes the following selected fixes/improvements relevant to Spark users:

* ARROW-6898 - [Java] Fix potential memory leak in ArrowWriter and several test classes

* ARROW-6874 - [Python] Memory leak in Table.to_pandas() when conversion to object dtype

* ARROW-5579 - [Java] shade flatbuffer dependency

* ARROW-5843 - [Java] Improve the readability and performance of BitVectorHelper#getNullCount

* ARROW-5881 - [Java] Provide functionalities to efficiently determine if a validity buffer has completely 1 bits/0 bits

* ARROW-5893 - [C++] Remove arrow::Column class from C++ library

* ARROW-5970 - [Java] Provide pointer to Arrow buffer

* ARROW-6070 - [Java] Avoid creating new schema before IPC sending

* ARROW-6279 - [Python] Add Table.slice method or allow slices in \_\_getitem\_\_

* ARROW-6313 - [Format] Tracking for ensuring flatbuffer serialized values are aligned in stream/files.

* ARROW-6557 - [Python] Always return pandas.Series from Array/ChunkedArray.to_pandas, propagate field names to Series from RecordBatch, Table

* ARROW-2015 - [Java] Use Java Time and Date APIs instead of JodaTime

* ARROW-1261 - [Java] Add container type for Map logical type

* ARROW-1207 - [C++] Implement Map logical type

Changelog can be seen at https://arrow.apache.org/release/0.15.0.html

### Why are the changes needed?

Upgrade to get bug fixes, improvements, and maintain compatibility with future versions of PyArrow.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests, manually tested with Python 3.7, 3.8

Closes #26133 from BryanCutler/arrow-upgrade-015-SPARK-29376.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

On the worker we express lambda functions as strings and then eval them to create a "mapper" function. This makes the code hard to read and limits the number of arguments a UDF can support to 255 for Python <= 3.6.

This PR rewrites the mapper functions as nested functions instead of "lambda strings" and allows passing in more than 255 args.
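A minimal sketch of the idea, not the actual worker code: the mapper is built as a closure that unpacks its inputs at call time, so no source string with hundreds of explicit arguments is ever compiled. The names `make_mapper` and `total` are hypothetical.

```python
def make_mapper(func, arg_offsets):
    """Hypothetical sketch: build a mapper as a closure instead of eval-ing a lambda string."""
    def mapper(row):
        # Select the UDF's inputs by position and unpack them in a single call.
        return func(*[row[offset] for offset in arg_offsets])
    return mapper


def total(*cols):
    # Stand-in for a user-defined function that consumes many columns.
    return sum(cols)


row = list(range(300))
mapper = make_mapper(total, list(range(300)))
print(mapper(row))  # sum of 0..299 = 44850
```

Unpacking with `*` is not subject to the 255-argument limit, which only applied to arguments written out explicitly in source code.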

### Why are the changes needed?

The jira ticket associated with this issue describes how MLflow uses udfs to consume columns as features. This pattern isn't unique and a limit of 255 features is quite low.

### Does this PR introduce any user-facing change?

Users can now pass more than 255 cols to a udf function.

### How was this patch tested?

Added a unit test for passing in > 255 args to udf.

Closes #26442 from MrBago/replace-lambdas-on-worker.

Authored-by: Bago Amirbekian <bago@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to infer bytes as binary types in Python 3. See https://github.com/apache/spark/pull/25749 for discussions. I have also checked that Arrow considers `bytes` as binary type, and PySpark UDF can also accept `bytes` as a binary type.

Since `bytes` is not a `str` anymore in Python 3, it is unambiguous to treat it as `BinaryType` in Python 3.
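The inference rule can be illustrated with a simplified, hypothetical stand-in for per-value type inference in Python 3. The function name and the returned type names are assumptions made for this sketch and do not reproduce the PySpark implementation.

```python
def infer_type_name(value):
    """Hypothetical, simplified stand-in for schema inference on a single value."""
    # bool must be checked before int because bool is a subclass of int.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    # In Python 3, bytes is distinct from str, so it can map to a binary type.
    if isinstance(value, (bytes, bytearray)):
        return "binary"
    if isinstance(value, str):
        return "string"
    raise TypeError("not supported type: %s" % type(value))


print(infer_type_name(b"abc"))
print(infer_type_name("abc"))
```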

### Why are the changes needed?

To respect Python 3's `bytes` type and support Python's primitive types.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

```python
>>> spark.createDataFrame([[b"abc"]])
Traceback (most recent call last):
  File "/.../spark/python/pyspark/sql/types.py", line 1036, in _infer_type
    return _infer_schema(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1062, in _infer_schema
    raise TypeError("Can not infer schema for type: %s" % type(row))
TypeError: Can not infer schema for type: <class 'bytes'>

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/session.py", line 787, in createDataFrame
    rdd, schema = self._createFromLocal(map(prepare, data), schema)
  File "/.../spark/python/pyspark/sql/session.py", line 445, in _createFromLocal
    struct = self._inferSchemaFromList(data, names=schema)
  File "/.../spark/python/pyspark/sql/session.py", line 377, in _inferSchemaFromList
    schema = reduce(_merge_type, (_infer_schema(row, names) for row in data))
  File "/.../spark/python/pyspark/sql/session.py", line 377, in <genexpr>
    schema = reduce(_merge_type, (_infer_schema(row, names) for row in data))
  File "/.../spark/python/pyspark/sql/types.py", line 1064, in _infer_schema
    fields = [StructField(k, _infer_type(v), True) for k, v in items]
  File "/.../spark/python/pyspark/sql/types.py", line 1064, in <listcomp>
    fields = [StructField(k, _infer_type(v), True) for k, v in items]
  File "/.../spark/python/pyspark/sql/types.py", line 1038, in _infer_type
    raise TypeError("not supported type: %s" % type(obj))
TypeError: not supported type: <class 'bytes'>
```

**After:**

```python
>>> spark.createDataFrame([[b"abc"]])
DataFrame[_1: binary]
```

### How was this patch tested?

A unit test was added, and the change was also tested manually.

Closes #26432 from HyukjinKwon/SPARK-29798.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

### What changes were proposed in this pull request?

I propose that we change the example code in the documentation to call the proper function.

For example, under the `foreachBatch` function, the example code was calling the `foreach()` function by mistake.

### Why are the changes needed?

It is a typo, and it could confuse some people.

### Does this PR introduce any user-facing change?

No, there is no "meaningful" code being changed; only the documentation is updated.

### How was this patch tested?

I made the change on a fork and it still worked

Closes #26299 from mstill3/patch-1.

Authored-by: Matt Stillwell <18670089+mstill3@users.noreply.github.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR changes the behavior of `Column.getItem` to call `Column.getItem` on Scala side instead of `Column.apply`.

### Why are the changes needed?

The current behavior is not consistent with that of Scala.

In PySpark:

```Python
from pyspark.sql.functions import col, create_map, lit

df = spark.range(2)
map_col = create_map(lit(0), lit(100), lit(1), lit(200))
df.withColumn("mapped", map_col.getItem(col('id'))).show()
# +---+------+
# | id|mapped|
# +---+------+
# |  0|   100|
# |  1|   200|
# +---+------+
```

In Scala:

```Scala
val df = spark.range(2)
val map_col = map(lit(0), lit(100), lit(1), lit(200))
// The following getItem results in the following exception, which is the right behavior:
// java.lang.RuntimeException: Unsupported literal type class org.apache.spark.sql.Column id
//   at org.apache.spark.sql.catalyst.expressions.Literal$.apply(literals.scala:78)
//   at org.apache.spark.sql.Column.getItem(Column.scala:856)
//   ... 49 elided
df.withColumn("mapped", map_col.getItem(col("id"))).show
```

### Does this PR introduce any user-facing change?

Yes. If the user wants to pass a `Column` object to `getItem`, they now need to use the indexing operator to achieve the previous behavior.

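```Python
from pyspark.sql.functions import col, create_map, lit

df = spark.range(2)
map_col = create_map(lit(0), lit(100), lit(1), lit(200))
# Use the indexing operator instead of getItem when the key is a Column.
df.withColumn("mapped", map_col[col('id')]).show()
# +---+------+
# | id|mapped|
# +---+------+
# |  0|   100|
# |  1|   200|
# +---+------+
```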

### How was this patch tested?

Existing tests.

Closes #26351 from imback82/spark-29664.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This PR adds some extra documentation for the new Cogrouped map Pandas udfs. Specifically:

- Updated the usage guide for the new `COGROUPED_MAP` Pandas udfs added in https://github.com/apache/spark/pull/24981

- Updated the docstring for pandas_udf to include the COGROUPED_MAP type as suggested by HyukjinKwon in https://github.com/apache/spark/pull/25939

Closes #26110 from d80tb7/SPARK-29126-cogroup-udf-usage-guide.

Authored-by: Chris Martin <chris@cmartinit.co.uk>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to allow `array_contains` to take column instances.

### Why are the changes needed?

For consistent support in the Scala and Python APIs. Scala allows column instances in `array_contains`.

Scala:

```scala

import org.apache.spark.sql.functions._

val df = Seq(Array("a", "b", "c"), Array.empty[String]).toDF("data")

df.select(array_contains($"data", lit("a"))).show()

```

Python:

```python

from pyspark.sql.functions import array_contains, lit

df = spark.createDataFrame([(["a", "b", "c"],), ([],)], ['data'])

df.select(array_contains(df.data, lit("a"))).show()

```

However, the PySpark side does not allow this.

### Does this PR introduce any user-facing change?

Yes.

```python

from pyspark.sql.functions import array_contains, lit

df = spark.createDataFrame([(["a", "b", "c"],), ([],)], ['data'])

df.select(array_contains(df.data, lit("a"))).show()

```

**Before:**

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/functions.py", line 1950, in array_contains
    return Column(sc._jvm.functions.array_contains(_to_java_column(col), value))
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1277, in __call__
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1241, in _build_args
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1228, in _get_args
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_collections.py", line 500, in convert
  File "/.../spark/python/pyspark/sql/column.py", line 344, in __iter__
    raise TypeError("Column is not iterable")
TypeError: Column is not iterable
```

**After:**

```
+-----------------------+
|array_contains(data, a)|
+-----------------------+
|                   true|
|                  false|
+-----------------------+
```

### How was this patch tested?

Manually tested and added a doctest.

Closes #26288 from HyukjinKwon/SPARK-29627.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add a description for `ignoreNullFields`, which was committed in #26098, to DataFrameWriter and readwriter.py.

Enable users to use `ignoreNullFields` in PySpark.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Ran unit tests.

Closes #26227 from stczwd/json-generator-doc.

Authored-by: stczwd <qcsd2011@163.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

Updating univocity-parsers version to 2.8.3, which adds support for multiple character delimiters

Moving univocity-parsers version to spark-parent pom dependencyManagement section

Adding new utility method to build multi-char delimiter string, which delegates to existing one

Adding tests for multiple character delimited CSV

### What changes were proposed in this pull request?

Adds support for parsing CSV data using multiple-character delimiters. Existing logic for converting the input delimiter string to characters was kept and invoked in a loop. Project dependencies were updated to remove redundant declaration of `univocity-parsers` version, and also to change that version to the latest.
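As a rough illustration of converting a delimiter string piece by piece in a loop, here is a pure-Python sketch. It is not the Spark or Univocity code: `to_delimiter_str`, `split_line`, and the set of escape sequences handled are assumptions made for the example.

```python
# Two-character escape sequences a user might type in an option string (assumed set).
ESCAPES = {"\\t": "\t", "\\r": "\r", "\\n": "\n", "\\\\": "\\"}


def to_delimiter_str(raw):
    """Hypothetical helper: convert a raw delimiter option into the actual delimiter."""
    if not raw:
        raise ValueError("Delimiter cannot be empty")
    out = []
    i = 0
    while i < len(raw):
        pair = raw[i:i + 2]
        if pair in ESCAPES:
            # Convert an escape such as backslash + t into a real tab character.
            out.append(ESCAPES[pair])
            i += 2
        else:
            out.append(raw[i])
            i += 1
    return "".join(out)


def split_line(line, raw_delimiter):
    """Split one line of delimited text on a delimiter of any length."""
    return line.split(to_delimiter_str(raw_delimiter))


print(split_line("a||b||c", "||"))         # ['a', 'b', 'c']
print(split_line("1\t|2\t|3", "\\t|"))     # ['1', '2', '3']
```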

### Why are the changes needed?

It is quite common for people to have delimited data, where the delimiter is not a single character, but rather a sequence of characters. Currently, it is difficult to handle such data in Spark (typically needs pre-processing).

### Does this PR introduce any user-facing change?

Yes. Specifying the "delimiter" option for the DataFrame read, and providing more than one character, will no longer result in an exception. Instead, it will be converted as before and passed to the underlying library (Univocity), which has accepted multiple character delimiters since 2.8.0.

### How was this patch tested?

The `CSVSuite` tests were confirmed passing (including new methods), and `sbt` tests for `sql` were executed.

Closes #26027 from jeff303/SPARK-24540.

Authored-by: Jeff Evans <jeffrey.wayne.evans@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Added tests for grouped map pandas_udf using a window.

### Why are the changes needed?

Current tests for grouped map do not use a window, and this had previously caused an error due to the window range being a struct column, which was not yet supported.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New tests added.

Closes #26063 from BryanCutler/pyspark-pandas_udf-group-with-window-tests-SPARK-29402.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

Follow up from https://github.com/apache/spark/pull/24981 incorporating some comments from HyukjinKwon.

Specifically:

- Adding `CoGroupedData` to `pyspark/sql/__init__.py __all__` so that documentation is generated.

- Added pydoc, including example, for the use case whereby the user supplies a cogrouping function including a key.

- Added the boilerplate for doctests to cogroup.py. Note that cogroup.py only contains the apply() function which has doctests disabled as per the other Pandas Udfs.

- Restricted the newly exposed RelationalGroupedDataset constructor parameters to access only by the sql package.

- Some minor formatting tweaks.

This was tested by running the appropriate unit tests. I'm unsure how to check that my change will cause the documentation to be generated correctly, but if someone can describe how to do this I'd be happy to check.

Closes #25939 from d80tb7/SPARK-27463-fixes.

Authored-by: Chris Martin <chris@cmartinit.co.uk>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR makes `element_at` in PySpark able to take PySpark `Column` instances.

### Why are the changes needed?

To match the Scala side. This seems to have been intended but did not work correctly because of a bug.

### Does this PR introduce any user-facing change?

Yes. See below:

```python

from pyspark.sql import functions as F

x = spark.createDataFrame([([1,2,3],1),([4,5,6],2),([7,8,9],3)],['list','num'])

x.withColumn('aa',F.element_at('list',x.num.cast('int'))).show()

```

Before:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/functions.py", line 2059, in element_at
    return Column(sc._jvm.functions.element_at(_to_java_column(col), extraction))
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1277, in __call__
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1241, in _build_args
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1228, in _get_args
  File "/.../forked/spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_collections.py", line 500, in convert
  File "/.../spark/python/pyspark/sql/column.py", line 344, in __iter__
    raise TypeError("Column is not iterable")
TypeError: Column is not iterable
```

After:

```
+---------+---+---+
|     list|num| aa|
+---------+---+---+
|[1, 2, 3]|  1|  1|
|[4, 5, 6]|  2|  5|
|[7, 8, 9]|  3|  9|
+---------+---+---+
```

### How was this patch tested?

Manually tested against literal, Python native types, and PySpark column.

Closes #25950 from HyukjinKwon/SPARK-29240.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Added "array indices start at 1" to the documentation of the `slice` function in the R, Scala, and Python components to make its usage clear.

### Why are the changes needed?

`slice` throws an exception if the start value is 0, whereas array indices start at 0 in most other contexts.
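The 1-based convention can be sketched in plain Python. This is a simplified model written for illustration, not Spark's implementation; the helper name `slice_one_based` and the handling of out-of-range starts are assumptions.

```python
def slice_one_based(values, start, length):
    """Hypothetical model of a slice whose indices start at 1 (or count from the end if negative)."""
    if start == 0:
        raise ValueError("start must not be 0; array indices start at 1")
    if length < 0:
        raise ValueError("length must be greater than or equal to 0")
    # Translate the 1-based (or negative) start into a 0-based Python index.
    begin = start - 1 if start > 0 else len(values) + start
    if begin < 0 or begin >= len(values):
        return []
    return values[begin:begin + length]


print(slice_one_based([1, 2, 3, 4], 2, 2))   # [2, 3]
print(slice_one_based([1, 2, 3, 4], -2, 2))  # [3, 4]
```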

### Does this PR introduce any user-facing change?

Yes, more information is provided to the user.

### How was this patch tested?

No tests added, only doc change.

Closes #25704 from sheepstop/master.

Authored-by: sheepstop <yangting617@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR allows a non-ASCII string as an exception message in Python 2 by explicitly encoding/decoding it when it is a `str` in Python 2.

### Why are the changes needed?

Previously, PySpark would hang when a `UnicodeDecodeError` occurred, and the real exception could not be passed to the JVM side.

See the reproducer as below:

```python
from pyspark.sql import SparkSession

def f():
    raise Exception("中")

spark = SparkSession.builder.master('local').getOrCreate()
spark.sparkContext.parallelize([1]).map(lambda x: f()).count()
```

### Does this PR introduce any user-facing change?

Users should no longer observe hanging in similar cases.

### How was this patch tested?

Added a new test and checked manually.

This PR is based on #18324; credit should also go to dataknocker.

To make lint-python happy for Python 3, it also includes a follow-up fix for #25814.

Closes #25847 from advancedxy/python_exception_19926_and_21045.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR allows Python `toLocalIterator` to prefetch the next partition while the current partition is being collected. The PR also adds a demo micro-benchmark in the examples directory; we may or may not wish to keep it.

### Why are the changes needed?

In https://issues.apache.org/jira/browse/SPARK-23961 / 5e79ae3b40 we changed PySpark to only pull one partition at a time. This is memory efficient, but if partitions take time to compute this can mean we're spending more time blocking.
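The trade-off can be sketched without Spark. In this minimal, hypothetical example, `iterate_partitions` and `compute_partition` are illustrative names (not PySpark APIs), and a single background thread stands in for the job that computes the next partition.

```python
from concurrent.futures import ThreadPoolExecutor


def iterate_partitions(compute_partition, num_partitions, prefetch=False):
    """Hypothetical sketch: yield elements partition by partition, optionally prefetching."""
    if not prefetch:
        # One partition at a time: memory efficient, but the caller blocks on each one.
        for i in range(num_partitions):
            yield from compute_partition(i)
        return
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(compute_partition, 0) if num_partitions > 0 else None
        for i in range(num_partitions):
            current = pending.result()
            # Start computing the next partition before the caller consumes this one.
            pending = pool.submit(compute_partition, i + 1) if i + 1 < num_partitions else None
            yield from current


def compute_partition(i):
    # Stand-in for an expensive per-partition computation.
    return [i * 10 + j for j in range(3)]


print(list(iterate_partitions(compute_partition, 3, prefetch=True)))
```

With prefetching, at most two partitions are held at once, so the extra memory cost is bounded while the wait between partitions can shrink.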

### Does this PR introduce any user-facing change?

A new param is added to toLocalIterator

### How was this patch tested?

A new unit test inside `test_rdd.py` checks the time at which the elements are evaluated. Another test, which checks that the results remain the same, is added to `test_dataframe.py`.

I also ran a micro benchmark in the examples directory `prefetch.py` which shows an improvement of ~40% in this specific use case.

> 19/08/16 17:11:36 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
>
> Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
>
> Setting default log level to "WARN".
>
> To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
>
> Running timers:
>
> [Stage 32:> (0 + 1) / 1]
>
> Results:
>
> Prefetch time:
>
> 100.228110831
>
> Regular time:
>
> 188.341721614

Closes #25515 from holdenk/SPARK-27659-allow-pyspark-tolocalitr-to-prefetch.

Authored-by: Holden Karau <hkarau@apple.com>

Signed-off-by: Holden Karau <hkarau@apple.com>

### What changes were proposed in this pull request?

The str of `CapturedException` is now returned by str(self.desc) rather than repr(self.desc), which is more user-friendly. It also handles unicode under Python 2 specially.
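The difference between the two renderings can be seen in a small Python 3 sketch. `DescribedError` is a hypothetical stand-in class written for this example, not the PySpark class itself.

```python
class DescribedError(Exception):
    """Hypothetical stand-in for an exception that carries a JVM-side description."""

    def __init__(self, desc):
        super().__init__(desc)
        self.desc = desc

    def __str__(self):
        # str() keeps newlines as real line breaks; repr() would add quotes and escape them.
        return str(self.desc)


err = DescribedError("cannot resolve 'col'\n+- OneRowRelation")
print(str(err))        # multi-line, human-readable message
print(repr(err.desc))  # single line with quotes and an escaped newline
```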

### Why are the changes needed?

This is an improvement that makes exceptions more human-readable on the Python side.

### Does this PR introduce any user-facing change?

Before this PR, selecting `中文字段` throws an exception like the one below:

```
Traceback (most recent call last):
  File "/Users/advancedxy/code_workspace/github/spark/python/pyspark/sql/tests/test_utils.py", line 34, in test_capture_user_friendly_exception
    raise e
AnalysisException: u"cannot resolve '`\u4e2d\u6587\u5b57\u6bb5`' given input columns: []; line 1 pos 7;\n'Project ['\u4e2d\u6587\u5b57\u6bb5]\n+- OneRowRelation\n"
```

After this PR:

```
Traceback (most recent call last):
  File "/Users/advancedxy/code_workspace/github/spark/python/pyspark/sql/tests/test_utils.py", line 34, in test_capture_user_friendly_exception
    raise e
AnalysisException: cannot resolve '`中文字段`' given input columns: []; line 1 pos 7;
'Project ['中文字段]
+- OneRowRelation
```

### How was this patch tested?

Added a new test to verify that unicode is correctly converted, and manually checked the thrown exceptions.

Credit for this PR should go to uncleGen; it is based on https://github.com/apache/spark/pull/17267

Closes #25814 from advancedxy/python_exception_19926_and_21045.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Reorganize the packages of the DS v2 interfaces/classes:

1. `org.apache.spark.sql.connector.catalog`: contains `TableCatalog`, `Table`, and other related interfaces/classes

2. `org.apache.spark.sql.connector.expression`: contains `Expression`, `Transform`, and other related interfaces/classes

3. `org.apache.spark.sql.connector.read`: contains `ScanBuilder`, `Scan`, and other related interfaces/classes

4. `org.apache.spark.sql.connector.write`: contains `WriteBuilder`, `BatchWrite`, and other related interfaces/classes

### Why are the changes needed?

Data Source V2 has evolved a lot. It's a bit odd that `Expression` is in `org.apache.spark.sql.catalog.v2` while `Table` is in `org.apache.spark.sql.sources.v2`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #25700 from cloud-fan/package.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to allow `bytes` as an acceptable type for binary type for `createDataFrame`.

### Why are the changes needed?

`bytes` is a standard type for binary data in Python. This should be respected on the PySpark side.

### Does this PR introduce any user-facing change?

Yes, _when the specified type is binary_, we will allow `bytes` as a binary type. Previously this was not allowed in either Python 2 or Python 3, as shown below:

```python

spark.createDataFrame([[b"abcd"]], "col binary")

```

in Python 3:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/session.py", line 787, in createDataFrame
    rdd, schema = self._createFromLocal(map(prepare, data), schema)
  File "/.../spark/python/pyspark/sql/session.py", line 442, in _createFromLocal
    data = list(data)
  File "/.../spark/python/pyspark/sql/session.py", line 769, in prepare
    verify_func(obj)
  File "/.../forked/spark/python/pyspark/sql/types.py", line 1403, in verify
    verify_value(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1384, in verify_struct
    verifier(v)
  File "/.../spark/python/pyspark/sql/types.py", line 1403, in verify
    verify_value(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1397, in verify_default
    verify_acceptable_types(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1282, in verify_acceptable_types
    % (dataType, obj, type(obj))))
TypeError: field col: BinaryType can not accept object b'abcd' in type <class 'bytes'>
```

in Python 2:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/session.py", line 787, in createDataFrame
    rdd, schema = self._createFromLocal(map(prepare, data), schema)
  File "/.../spark/python/pyspark/sql/session.py", line 442, in _createFromLocal
    data = list(data)
  File "/.../spark/python/pyspark/sql/session.py", line 769, in prepare
    verify_func(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1403, in verify
    verify_value(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1384, in verify_struct
    verifier(v)
  File "/.../spark/python/pyspark/sql/types.py", line 1403, in verify
    verify_value(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1397, in verify_default
    verify_acceptable_types(obj)
  File "/.../spark/python/pyspark/sql/types.py", line 1282, in verify_acceptable_types
    % (dataType, obj, type(obj))))
TypeError: field col: BinaryType can not accept object 'abcd' in type <type 'str'>
```

So, it won't break anything.

### How was this patch tested?

Unit tests were added, and the change was also tested manually as shown below.

```bash

./run-tests --python-executables=python2,python3 --testnames "pyspark.sql.tests.test_serde"

```

Closes #25749 from HyukjinKwon/SPARK-29041.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Remove SQLContext.createExternalTable and Catalog.createExternalTable, deprecated in favor of createTable since 2.2.0, plus tests of deprecated methods

- Remove HiveContext, deprecated in 2.0.0, in favor of `SparkSession.builder.enableHiveSupport`

- Remove deprecated KinesisUtils.createStream methods, plus tests of deprecated methods, deprecated in 2.2.0

- Remove deprecated MLlib (not Spark ML) linear method support, mostly utility constructors and 'train' methods, and associated docs. This includes methods in LinearRegression, LogisticRegression, Lasso, RidgeRegression. These have been deprecated since 2.0.0

- Remove deprecated Pyspark MLlib linear method support, including LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD

- Remove 'runs' argument in KMeans.train() method, which has been a no-op since 2.0.0

- Remove deprecated ChiSqSelector isSorted protected method

- Remove deprecated 'yarn-cluster' and 'yarn-client' master argument in favor of 'yarn' and deploy mode 'cluster', etc
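For the last item above, the sketch below maps the removed master strings onto their replacements. `migrate_master` and `to_submit_args` are hypothetical helpers written for illustration, not part of Spark; they only rewrite the two strings named above and pass any other master through unchanged.

```python
# Hypothetical migration helper (not a Spark API): translate the removed
# 'yarn-cluster' / 'yarn-client' master strings into the supported form.
LEGACY_YARN_MASTERS = {
    "yarn-cluster": ("yarn", "cluster"),
    "yarn-client": ("yarn", "client"),
}


def migrate_master(master, deploy_mode=None):
    """Return a (master, deploy_mode) pair that avoids the removed aliases."""
    if master in LEGACY_YARN_MASTERS:
        new_master, implied_mode = LEGACY_YARN_MASTERS[master]
        # An explicit deploy mode that contradicts the alias is ambiguous.
        if deploy_mode is not None and deploy_mode != implied_mode:
            raise ValueError(
                "master %r implies deploy mode %r, got %r"
                % (master, implied_mode, deploy_mode))
        return new_master, implied_mode
    # Any other master (local[*], spark://..., yarn) passes through unchanged.
    return master, deploy_mode


def to_submit_args(master, deploy_mode=None):
    """Build the --master / --deploy-mode arguments for spark-submit."""
    master, deploy_mode = migrate_master(master, deploy_mode)
    args = ["--master", master]
    if deploy_mode is not None:
        args += ["--deploy-mode", deploy_mode]
    return args
```

With this sketch, `to_submit_args("yarn-cluster")` yields the arguments for master `yarn` with deploy mode `cluster`.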

Notes:

- I was not able to remove deprecated DataFrameReader.json(RDD) in favor of DataFrameReader.json(Dataset); the former was deprecated in 2.2.0, but, it is still needed to support Pyspark's .json() method, which can't use a Dataset.

- Looks like SQLContext.createExternalTable was not actually deprecated in Pyspark, but, almost certainly was meant to be? Catalog.createExternalTable was.

- I afterwards noted that the toDegrees, toRadians functions were almost removed fully in SPARK-25908, but Felix suggested keeping just the R version as they hadn't been technically deprecated. I'd like to revisit that. Do we really want the inconsistency? I'm not against reverting it again, but then that implies leaving SQLContext.createExternalTable just in Pyspark too, which seems weird.

- I *kept* LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD, RidgeRegressionWithSGD in Pyspark, though deprecated, as it is hard to remove them (still used by StreamingLogisticRegressionWithSGD?) and they are not fully removed in Scala. Maybe should not have been deprecated.

### Why are the changes needed?

Deprecated items are easiest to remove in a major release, so we should do so as much as possible for Spark 3. This does not target items deprecated 'recently' as of Spark 2.3, which is still 18 months old.

### Does this PR introduce any user-facing change?

Yes, in that deprecated items are removed from some public APIs.

### How was this patch tested?

Existing tests.

Closes #25684 from srowen/SPARK-28980.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

`DataFrameReader.jdbc()` accepts a partition column that is of numeric, date or timestamp type, according to the implementation in `JDBCRelation.scala`. Update the scaladoc accordingly, so that it also matches the documentation in `sql-data-sources-jdbc.md`.

### Why are the changes needed?

The scaladoc is incorrect.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #25687 from srowen/SPARK-28977.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The Experimental and Evolving annotations are both (like Unstable) used to express that an API may change. However, there are many things in the code that have been marked that way since even Spark 1.x. Per the dev thread, anything introduced at or before Spark 2.3.0 is pretty much 'stable' in that it would not change without a deprecation cycle. Therefore I'd like to remove most of these annotations, and remove the `:: Experimental ::` scaladoc tag too. The same applies to Python and R.

The changes below can be summarized as:

- Generally, anything introduced at or before Spark 2.3.0 is no longer marked as Evolving or Experimental

- Obviously experimental items like DSv2, Barrier mode, ExperimentalMethods are untouched

- I _did_ unmark a few MLlib classes introduced in 2.4, as I am quite confident they're not going to change (e.g. KolmogorovSmirnovTest, PowerIterationClustering)

It's a big change to review, so I'd suggest scanning the list of _files_ changed to see if any area seems like it should remain partly experimental and examine those.

### Why are the changes needed?

Many of these annotations are incorrect; the APIs are de facto stable. Leaving them also makes legitimate usages of the annotations less meaningful.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25558 from srowen/SPARK-28855.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to match the test with branch-2.4. See https://github.com/apache/spark/pull/25593#discussion_r318109047

It seems that using `SparkSession.builder` with Spark conf may affect other tests.

### Why are the changes needed?

To match branch-2.4 and to make backporting easier.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Test was fixed.

Closes #25603 from HyukjinKwon/SPARK-28881-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Make the default value of `spark.sql.crossJoin.enabled` true.

### Why are the changes needed?

For an implicit cross join, we can set up a watchdog to cancel the query if it runs for a long time.

When `spark.sql.crossJoin.enabled` is false, `CheckCartesianProducts` is applied at the logical plan stage, so it may raise mismatched errors that confuse end users:

* it's done in logical phase, so we may fail queries that can be executed via broadcast join, which is very fast.

* if we move the check to the physical phase, then a query may succeed at the beginning, and begin to fail when the table size gets larger (other people insert data into the table). This can be quite confusing.

* the CROSS JOIN syntax doesn't work well if join reorder happens.

* some non-equi-joins generate a plan that uses a cartesian product, but `CheckCartesianProducts` does not detect it and raises no error.

To address this in a simpler way, we can turn off this cross-join error by default.

For reference, here are some cases that raise a mismatched error:

Providing:

```

spark.range(2).createOrReplaceTempView("sm1") // can be broadcast

spark.range(50000000).createOrReplaceTempView("bg1") // cannot be broadcast

spark.range(60000000).createOrReplaceTempView("bg2") // cannot be broadcast

```

1) Some joins could be converted to a broadcast nested loop join, but `CheckCartesianProducts` raises an error, e.g.

```

select sm1.id, bg1.id from bg1 join sm1 where sm1.id < bg1.id

```

2) Some joins are executed as a CartesianJoin, but `CheckCartesianProducts` does NOT raise an error, e.g.

```

select bg1.id, bg2.id from bg1 join bg2 where bg1.id < bg2.id

```
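To see why a non-equi join such as `sm1.id < bg1.id` behaves like a cartesian product, the plain-Python sketch below evaluates the join as a nested loop. It is only an analogy for the cases above, not Spark's implementation; `nested_loop_join`, its comparison counter, and the tiny input sizes are made up for illustration.

```python
from itertools import product


def nested_loop_join(left, right, predicate):
    """Join two lists by testing the predicate on every (left, right) pair.

    Returns the matching pairs and the number of comparisons performed,
    which is always len(left) * len(right) in this sketch.
    """
    matches = []
    comparisons = 0
    for l_row, r_row in product(left, right):
        comparisons += 1
        if predicate(l_row, r_row):
            matches.append((l_row, r_row))
    return matches, comparisons


# Small stand-ins for the sm1 and bg1 views; the sizes are illustrative only.
sm1 = list(range(2))
bg1 = list(range(5))

# Mirrors: select sm1.id, bg1.id from bg1 join sm1 where sm1.id < bg1.id
rows, comparisons = nested_loop_join(bg1, sm1, lambda b, s: s < b)
```

In this sketch the number of comparisons is the product of the input sizes regardless of how many rows match, which is why the size of the smaller side matters so much for cases 1) and 2).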

### Does this PR introduce any user-facing change?

### How was this patch tested?

Closes #25520 from WeichenXu123/SPARK-28621.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to add a test case for:

```bash

./bin/pyspark --conf spark.driver.maxResultSize=1m

```

```python

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.range(10000000).toPandas()

```

```

Empty DataFrame

Columns: [id]

Index: []

```

which can result in partial results (see https://github.com/apache/spark/pull/25593#issuecomment-525153808). This regression was found between Spark 2.3 and Spark 2.4, and accidentally fixed.
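A regression check for this could assert that `toPandas()` either fails loudly or returns every row, but never a silently truncated frame. The helper below is a hedged sketch of that idea, not the test added by this PR; `check_to_pandas_complete` is a hypothetical name, and it only assumes that `spark` exposes `range(n)` and that the result supports `toPandas()` and `len()`.

```python
def check_to_pandas_complete(spark, num_rows):
    """Return 'failed' if toPandas() raised, 'complete' if all rows arrived.

    Raises AssertionError on a partial (silently truncated) result, which is
    the behaviour a regression test would need to catch.
    """
    try:
        pdf = spark.range(num_rows).toPandas()
    except Exception:
        # Exceeding spark.driver.maxResultSize should surface as an error.
        return "failed"
    assert len(pdf) == num_rows, (
        "partial result: expected %d rows, got %d" % (num_rows, len(pdf)))
    return "complete"
```

Run against a session started with a small `spark.driver.maxResultSize`, the empty DataFrame shown above would trip the assertion instead of passing unnoticed.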

### Why are the changes needed?

To prevent the same regression in the future.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Test was added.

Closes #25594 from HyukjinKwon/SPARK-28881.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The parameter docstring of the function `format_string` was changed from _col_, _d_ to _format_, _cols_, which is what the actual function declaration states.

### Why are the changes needed?

The parameters stated in the documentation were inaccurate.

### Does this PR introduce any user-facing change?

Yes.

**BEFORE**

**AFTER**

### How was this patch tested?

N/A: documentation only

Closes #25506 from darrentirto/SPARK-28777.

Authored-by: darrentirto <darrentirto@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

A GROUPED_AGG pandas Python UDF does not work without a GROUP BY clause, as in `select udf(id) from table`.