### What changes were proposed in this pull request?

Add examples and parameter description for these Scala functions:

* transform

* exists

* forall

* aggregate

* zip_with

* transform_keys

* transform_values

* map_filter

* map_zip_with

### Why are the changes needed?

Better documentation for UX.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes#27449 from Ngone51/doc-funcs.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Use `CommandUtils.calculateTotalLocationSize` for `AnalyzePartitionCommand` in order to calculate location sizes in parallel.

### Why are the changes needed?

For better performance.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes#27471 from Ngone51/dev_calculate_in_parallel.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When I running the `window_part2.sql` tests find it lack insert sql. Therefore, the output is empty.

I checked the postgresql and reference https://github.com/postgres/postgres/blob/master/src/test/regress/sql/window.sql

Although `window_part1.sql` and `window_part3.sql` exists the insert sql, I think should also add it into `window_part2.sql`.

Because only one case reference the table `empsalary` and it throws `AnalysisException`.

```

-- !query

select last(salary) over(order by salary range between 1000 preceding and 1000 following),

lag(salary) over(order by salary range between 1000 preceding and 1000 following),

salary from empsalary

-- !query schema

struct<>

-- !query output

org.apache.spark.sql.AnalysisException

Window Frame specifiedwindowframe(RangeFrame, -1000, 1000) must match the required frame specifiedwindowframe(RowFrame, -1, -1);

```

So we should do four work:

1. comment out the only one case and create a new ticket.

2. Add `INSERT INTO empsalary`.

Note: window_part4.sql not use the table `empsalary`.

### Why are the changes needed?

Supplementary test data.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New test case

Closes#27439 from beliefer/add-insert-to-window.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR fixes the issue where queries with qualified columns like `SELECT t.a FROM t` would fail to resolve for v2 tables.

This PR would allow qualified column names in query as following:

```SQL

SELECT testcat.ns1.ns2.tbl.foo FROM testcat.ns1.ns2.tbl

SELECT ns1.ns2.tbl.foo FROM testcat.ns1.ns2.tbl

SELECT ns2.tbl.foo FROM testcat.ns1.ns2.tbl

SELECT tbl.foo FROM testcat.ns1.ns2.tbl

```

### Why are the changes needed?

This is a bug because you cannot qualify column names in queries.

### Does this PR introduce any user-facing change?

Yes, now users can qualify column names for v2 tables.

### How was this patch tested?

Added new tests.

Closes#27391 from imback82/qualified_col.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

update `DataFrameAggregateSuite` to make it pass with AQE

### Why are the changes needed?

We don't need to turn off AQE in `DataFrameAggregateSuite`

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

run `DataFrameAggregateSuite` locally with AQE on.

Closes#27451 from cloud-fan/aqe-test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Follow up work for SPARK-29864, reference the config `spark.sql.legacy.fromDayTimeString.enabled` in error message.

### Why are the changes needed?

For better usability.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes#27464 from xuanyuanking/SPARK-29864-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

When spark.sql.ansi.enabled is true, for the statement:

```

select cast(cast(2147483648 as Float) as Integer) //result is 2147483647

```

Its result is 2147483647 and does not throw `ArithmeticException`.

The root cause is that, the below code does not work for some corner cases.

94fc0e3235/sql/catalyst/src/main/scala/org/apache/spark/sql/types/numerics.scala (L129-L141)

For example:

In this PR, I fix it by comparing Math.floor(x) with Int.MaxValue directly.

### Why are the changes needed?

Result corrupt.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added Unit test.

Closes#27151 from turboFei/SPARK-26218-follow-up-int-overflow.

Authored-by: turbofei <fwang12@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to partially revert the commit 51a6ba0181, and provide a legacy parser based on `FastDateFormat` which is compatible to `SimpleDateFormat`.

To enable the legacy parser, set `spark.sql.legacy.timeParser.enabled` to `true`.

### Why are the changes needed?

To allow users to restore old behavior in parsing timestamps/dates using `SimpleDateFormat` patterns. The main reason for restoring is `DateTimeFormatter`'s patterns are not fully compatible to `SimpleDateFormat` patterns, see https://issues.apache.org/jira/browse/SPARK-30668

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

- Added new test to `DateFunctionsSuite`

- Restored additional test cases in `JsonInferSchemaSuite`.

Closes#27441 from MaxGekk/support-simpledateformat.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a follow-up for #22787. In #22787 we disallowed empty strings for json parser except for string and binary types. This follow-up adds a legacy config for restoring previous behavior of allowing empty string.

### Why are the changes needed?

Adding a legacy config to make migration easy for Spark users.

### Does this PR introduce any user-facing change?

Yes. If set this legacy config to true, the users can restore previous behavior prior to Spark 3.0.0.

### How was this patch tested?

Unit test.

Closes#27456 from viirya/SPARK-25040-followup.

Lead-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR is to fix a potential bug in AQE where an `ExecSubqueryExpression` could be mistakenly replaced with another `ExecSubqueryExpression` with the same `ListQuery` but a different `child` expression.

This is because a ListQuery's id can only identify the ListQuery itself, not the parent expression `InSubquery`, but right now the `subqueryMap` in `InsertAdaptiveSparkPlan` uses the `ListQuery`'s id as key and the corresponding `InSubqueryExec` for the `ListQuery`'s parent expression as value. So the fix uses the corresponding `SubqueryExec` for the `ListQuery` itself as the map's value.

### Why are the changes needed?

This logical bug could potentially cause a wrong query plan, which could throw an exception related to unresolved columns.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Passed existing UTs.

Closes#27446 from maryannxue/spark-30717.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Put the configs below needed by Structured Streaming UI into StaticSQLConf:

- spark.sql.streaming.ui.enabled

- spark.sql.streaming.ui.retainedProgressUpdates

- spark.sql.streaming.ui.retainedQueries

### Why are the changes needed?

Make all SS UI configs consistent with other similar configs in usage and naming.

### Does this PR introduce any user-facing change?

Yes, add new static config `spark.sql.streaming.ui.retainedProgressUpdates`.

### How was this patch tested?

Existing UT.

Closes#27425 from xuanyuanking/SPARK-29543-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

Adds NoSuchDatabaseException and NoSuchNamespaceException to the `isView` method for SessionCatalog.

### Why are the changes needed?

This method prevents specialized resolutions from kicking in within Analysis when using V2 Catalogs if the identifier is a specialized identifier.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added test to DataSourceV2SessionCatalogSuite

Closes#27423 from brkyvz/isViewF.

Authored-by: Burak Yavuz <brkyvz@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch adds a DDL command `SHOW CREATE TABLE AS SERDE`. It is used to generate Hive DDL for a Hive table.

For original `SHOW CREATE TABLE`, it now shows Spark DDL always. If given a Hive table, it tries to generate Spark DDL.

For Hive serde to data source conversion, this uses the existing mapping inside `HiveSerDe`. If can't find a mapping there, throws an analysis exception on unsupported serde configuration.

It is arguably that some Hive fileformat + row serde might be mapped to Spark data source, e.g., CSV. It is not included in this PR. To be conservative, it may not be supported.

For Hive serde properties, for now this doesn't save it to Spark DDL because it may not useful to keep Hive serde properties in Spark table.

## How was this patch tested?

Added test.

Closes#24938 from viirya/SPARK-27946.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

This PR adds `SparkSession.executeCommand` API for external datasource to execute a random command like

```

val df = spark.executeCommand("xxxCommand", "xxxSource", "xxxOptions")

```

Note that the command doesn't execute in Spark, but inside an external execution engine depending on data source. And it will be eagerly executed after `executeCommand` called and the returned `DataFrame` will contain the output of the command(if any).

### Why are the changes needed?

This can be useful when user wants to execute some commands out of Spark. For example, executing custom DDL/DML command for JDBC, creating index for ElasticSearch, creating cores for Solr and so on(as HyukjinKwon suggested).

Previously, user needs to use an option to achieve the goal, e.g. `spark.read.format("xxxSource").option("command", "xxxCommand").load()`, which is kind of cumbersome. With this change, it can be more convenient for user to achieve the same goal.

### Does this PR introduce any user-facing change?

Yes, new API from `SparkSession` and a new interface `ExternalCommandRunnableProvider`.

### How was this patch tested?

Added a new test suite.

Closes#27199 from Ngone51/dev-executeCommand.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Co-authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

- Replace `new Integer(0)` by a serializable instance in RDD.scala

- Use `.valueOf()` instead of constructors of `java.lang.Integer` and `java.lang.Double` because constructors has been deprecated, see https://docs.oracle.com/javase/9/docs/api/java/lang/Integer.html

### Why are the changes needed?

This fixes the following warnings:

1. RDD.scala:240: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

2. MutableProjectionSuite.scala:63: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

3. UDFSuite.scala:446: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

4. UDFSuite.scala:451: constructor Double in class Double is deprecated: see corresponding Javadoc for more information.

5. HiveUserDefinedTypeSuite.scala:71: constructor Double in class Double is deprecated: see corresponding Javadoc for more information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- By RDDSuite, MutableProjectionSuite, UDFSuite and HiveUserDefinedTypeSuite

Closes#27399 from MaxGekk/eliminate-warning-part4.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Added description for metrics shown in the WholeStageCodegen-node in DAG viz.

This is before the change is applied.

And following is after change.

For this change, I also modify the layout of DAG viz.

Actually, I noticed it's not enough to just added the description.

Following is without changing the layout.

### Why are the changes needed?

Users can't understand what those metrics mean.

### Does this PR introduce any user-facing change?

Yes. The layout is a little bit changed.

### How was this patch tested?

I confirm the result of DAG viz with following 3 operations.

`sc.parallelize(1 to 10).toDF.sort("value").filter("value > 1").selectExpr("value * 2").show`

`sc.parallelize(1 to 10).toDF.sort("value").filter("value > 1").selectExpr("value * 2").write.format("json").mode("overwrite").save("/tmp/test_output")`

`sc.parallelize(1 to 10).toDF.write.format("json").mode("append").save("/tmp/test_output")`

Closes#27405 from sarutak/sql-dag-metrics.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR reflects review comments on post-hoc review among PRs for SPARK-29779 (#27085), SPARK-30479 (#27164). The list of review comments this PR addresses are below:

* https://github.com/apache/spark/pull/27085#discussion_r373304218

* https://github.com/apache/spark/pull/27164#discussion_r373300793

* https://github.com/apache/spark/pull/27164#discussion_r373301193

* https://github.com/apache/spark/pull/27164#discussion_r373301351

I also applied review comments to the CORE module (BasicEventFilterBuilder.scala) as well, as the review comments for SQL/core module (SQLEventFilterBuilder.scala) can be applied there as well.

### Why are the changes needed?

There're post-hoc reviews on PRs for such issues, like links in above section.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes#27414 from HeartSaVioR/SPARK-28869-SPARK-29779-SPARK-30479-FOLLOWUP-posthoc-reviews.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

This PR solves two bugs related to streaming limits

**Bug 1 (SPARK-30658)**: Limit before a streaming aggregate (i.e. `df.limit(5).groupBy().count()`) in complete mode was not being planned as a stateful streaming limit. The planner rule planned a logical limit with a stateful streaming limit plan only if the query is in append mode. As a result, instead of allowing max 5 rows across batches, the planned streaming query was allowing 5 rows in every batch thus producing incorrect results.

**Solution**: Change the planner rule to plan the logical limit with a streaming limit plan even when the query is in complete mode if the logical limit has no stateful operator before it.

**Bug 2 (SPARK-30657)**: `LocalLimitExec` does not consume the iterator of the child plan. So if there is a limit after a stateful operator like streaming dedup in append mode (e.g. `df.dropDuplicates().limit(5)`), the state changes of streaming duplicate may not be committed (most stateful ops commit state changes only after the generated iterator is fully consumed).

**Solution**: Change the planner rule to always use a new `StreamingLocalLimitExec` which always fully consumes the iterator. This is the safest thing to do. However, this will introduce a performance regression as consuming the iterator is extra work. To minimize this performance impact, add an additional post-planner optimization rule to replace `StreamingLocalLimitExec` with `LocalLimitExec` when there is no stateful operator before the limit that could be affected by it.

No

Updated incorrect unit tests and added new ones

Closes#27373 from tdas/SPARK-30657.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

All credit to Ngone51, Closes#27293.

### What changes were proposed in this pull request?

This PR improves `AlterTableAddPartitionCommand` by:

1. adds an internal config for partitions batch size to avoid hard code

2. reuse `InMemoryFileIndex.bulkListLeafFiles` to perform parallel file listing to improve code reuse

### Why are the changes needed?

Improve code quality.

### Does this PR introduce any user-facing change?

Yes. We renamed `spark.sql.statistics.parallelFileListingInStatsComputation.enabled` to `spark.sql.parallelFileListingInCommands.enabled` as a side effect of this change.

### How was this patch tested?

Pass Jenkins.

Closes#27413 from xuanyuanking/SPARK-29938.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Adds an Analyzer rule to normalize the column names used in V2 AlterTable table changes. We need to handle all ColumnChange operations. We add an extra match statement for future proofing new changes that may be added. This prevents downstream consumers (e.g. catalogs) to deal about case sensitivity or check that columns exist, etc.

We also fix the behavior for ALTER TABLE CHANGE COLUMN (Hive style syntax) for adding comments to complex data types. Currently, the data type needs to be provided as part of the Hive style syntax. This assumes that the data type as changed when it may have not and the user only wants to add a comment, which fails in CheckAnalysis.

### Why are the changes needed?

Currently we do not handle case sensitivity correctly for ALTER TABLE ALTER COLUMN operations.

### Does this PR introduce any user-facing change?

No, fixes a bug.

### How was this patch tested?

Introduced v2CommandsCaseSensitivitySuite and added a test around HiveStyle Change columns to PlanResolutionSuite

Closes#27350 from brkyvz/normalizeAlter.

Authored-by: Burak Yavuz <brkyvz@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR makes `SparkSession.emptyDataFrame` use an empty local relation instead of an empty RDD. This allows to optimizer to recognize this as an empty relation, and creates the opportunity to do some more aggressive optimizations.

### Why are the changes needed?

It allows us to optimize empty dataframes better.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added a test case to `DataFrameSuite`.

Closes#27400 from hvanhovell/SPARK-30671.

Authored-by: herman <herman@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

We propose to add a new interface `SupportsAdmissionControl` and `ReadLimit`. A ReadLimit defines how much data should be read in the next micro-batch. `SupportsAdmissionControl` specifies that a source can rate limit its ingest into the system. The source can tell the system what the user specified as a read limit, and the system can enforce this limit within each micro-batch or impose its own limit if the Trigger is Trigger.Once() for example.

We then use this interface in FileStreamSource, KafkaSource, and KafkaMicroBatchStream.

### Why are the changes needed?

Sources currently have no information around execution semantics such as whether the stream is being executed in Trigger.Once() mode. This interface will pass this information into the sources as part of planning. With a trigger like Trigger.Once(), the semantics are to process all the data available to the datasource in a single micro-batch. However, this semantic can be broken when data source options such as `maxOffsetsPerTrigger` (in the Kafka source) rate limit the amount of data read for that micro-batch without this interface.

### Does this PR introduce any user-facing change?

DataSource developers can extend this interface for their streaming sources to add admission control into their system and correctly support Trigger.Once().

### How was this patch tested?

Existing tests, as this API is mostly internal

Closes#27380 from brkyvz/rateLimit.

Lead-authored-by: Burak Yavuz <brkyvz@gmail.com>

Co-authored-by: Burak Yavuz <burak@databricks.com>

Signed-off-by: Burak Yavuz <brkyvz@gmail.com>

### What changes were proposed in this pull request?

Instead of having several overloads of `getTable` method in `TableProvider`, it's better to have 2 methods explicitly: `inferSchema` and `inferPartitioning`. With a single `getTable` method that takes everything: schema, partitioning and properties.

This PR also adds a `supportsExternalMetadata` method in `TableProvider`, to indicate if the source support external table metadata. If this flag is false:

1. spark.read.schema... is disallowed and fails

2. when we support creating v2 tables in session catalog, spark only keeps table properties in the catalog.

### Why are the changes needed?

API improvement.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

existing tests

Closes#26868 from cloud-fan/provider2.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Credit to uncleGen for discovering the problem and providing simple reproducer as UT. New UT in this patch is borrowed from #26156 and I'm retaining a commit from #26156 (except unnecessary part on this path) to properly give a credit.

This patch fixes the issue that partition ID could be mis-assigned when the query contains UNION and stream-stream join is placed on the right side. We assume the range of partition IDs as `(0 ~ number of shuffle partitions - 1)` for stateful operators, but when we use stream-stream join on the right side of UNION, the range of partition ID of task goes to `(number of partitions in left side, number of partitions in left side + number of shuffle partitions - 1)`, which `number of partitions in left side` can be changed in some cases (new UT points out the one of the cases).

The root reason of bug is that stream-stream join picks the partition ID from TaskContext, which wouldn't be same as partition ID from source if union is being used. Hopefully we can pick the right partition ID from source in StateStoreAwareZipPartitionsRDD - this patch leverages that partition ID.

### Why are the changes needed?

This patch will fix the broken of assumption of partition range on stateful operator, as well as fix the issue reported in JIRA issue SPARK-29438.

### Does this PR introduce any user-facing change?

Yes, if their query is using UNION and stream-stream join is placed on the right side. They may encounter the problem to read state from checkpoint and may need to discard checkpoint to continue.

### How was this patch tested?

Added UT which fails on current master branch, and passes with this patch.

Closes#26162 from HeartSaVioR/SPARK-29438.

Lead-authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Co-authored-by: uncleGen <hustyugm@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

### What changes were proposed in this pull request?

Update `ResolvedNamespace` to accept catalog as `CatalogPlugin` not `SupportsNamespaces`.

This is extracted from https://github.com/apache/spark/pull/27345

### Why are the changes needed?

not all commands that need to resolve namespaces require a namespace catalog. For example, `SHOW TABLE` is implemented by `TableCatalog.listTables`, and is nothing to do with `SupportsNamespace`.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

existing tests

Closes#27403 from cloud-fan/ns.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix following typos:

- tranformation -> transformation

- the boolean -> the Boolean

- signle -> single

### Why are the changes needed?

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Scala linter.

Closes#27382 from zero323/functions-typos.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

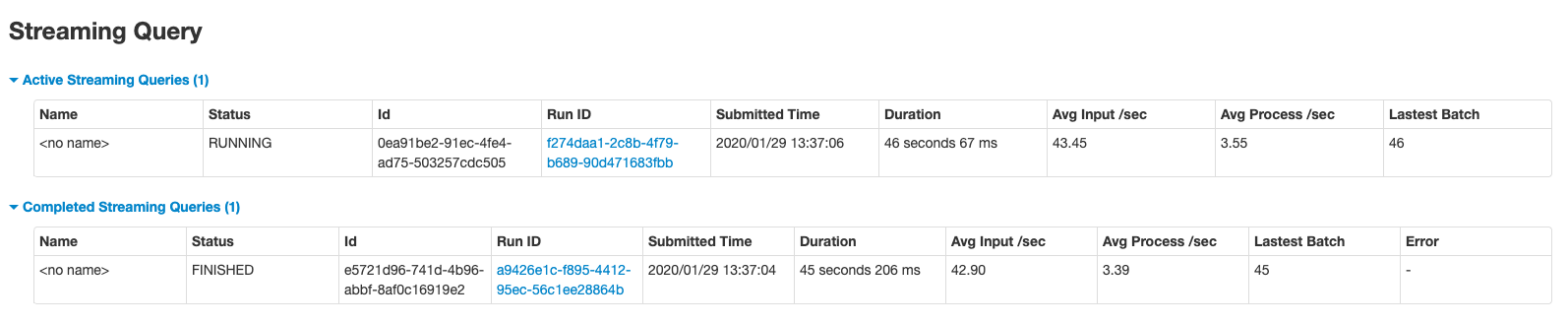

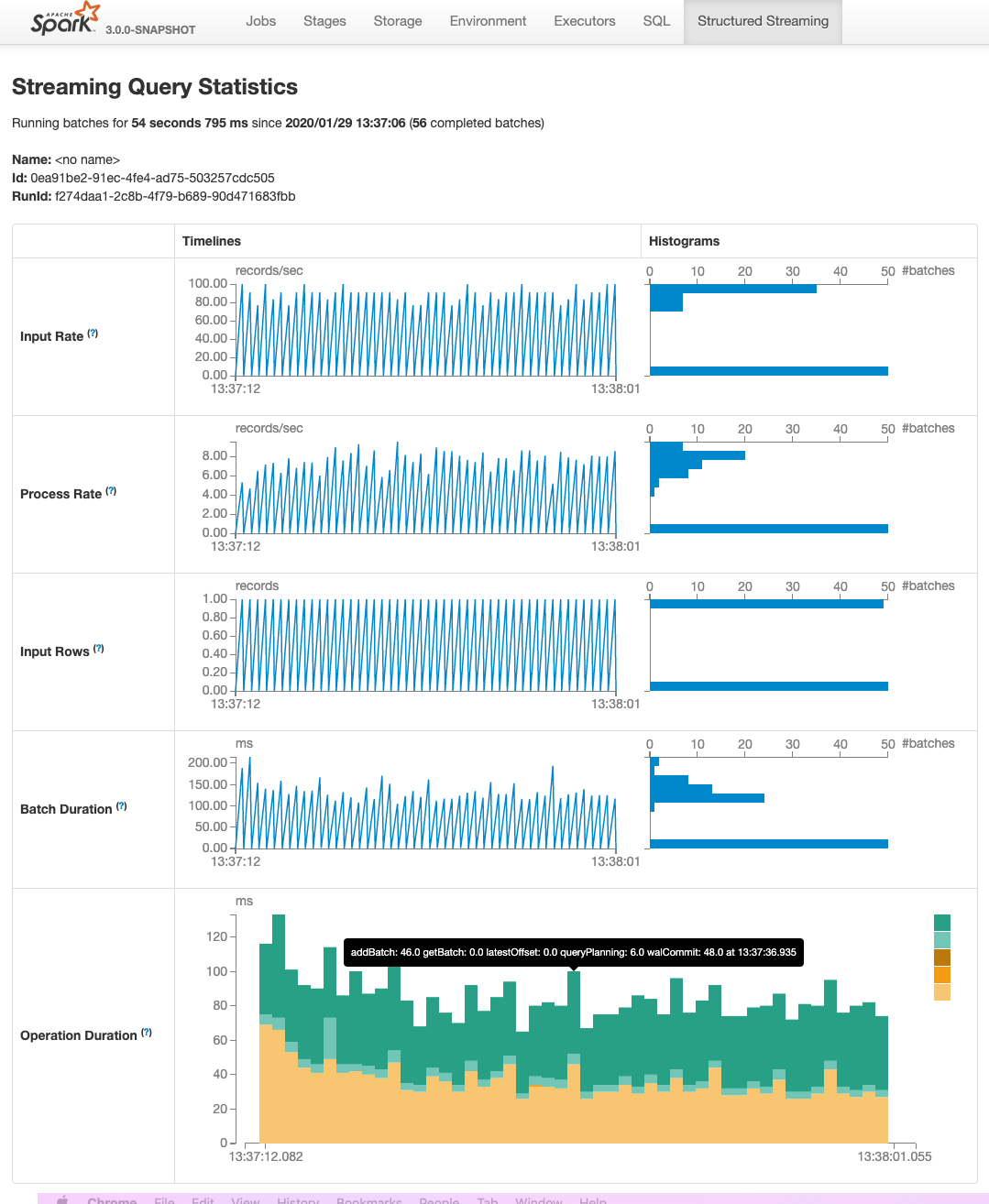

This PR adds two pages to Web UI for Structured Streaming:

- "/streamingquery": Streaming Query Page, providing some aggregate information for running/completed streaming queries.

- "/streamingquery/statistics": Streaming Query Statistics Page, providing detailed information for streaming query, including `Input Rate`, `Process Rate`, `Input Rows`, `Batch Duration` and `Operation Duration`

### Why are the changes needed?

It helps users to better monitor Structured Streaming query.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- new added and existing UTs

- manual test

Closes#26201 from uncleGen/SPARK-29543.

Lead-authored-by: uncleGen <hustyugm@gmail.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Genmao Yu <hustyugm@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

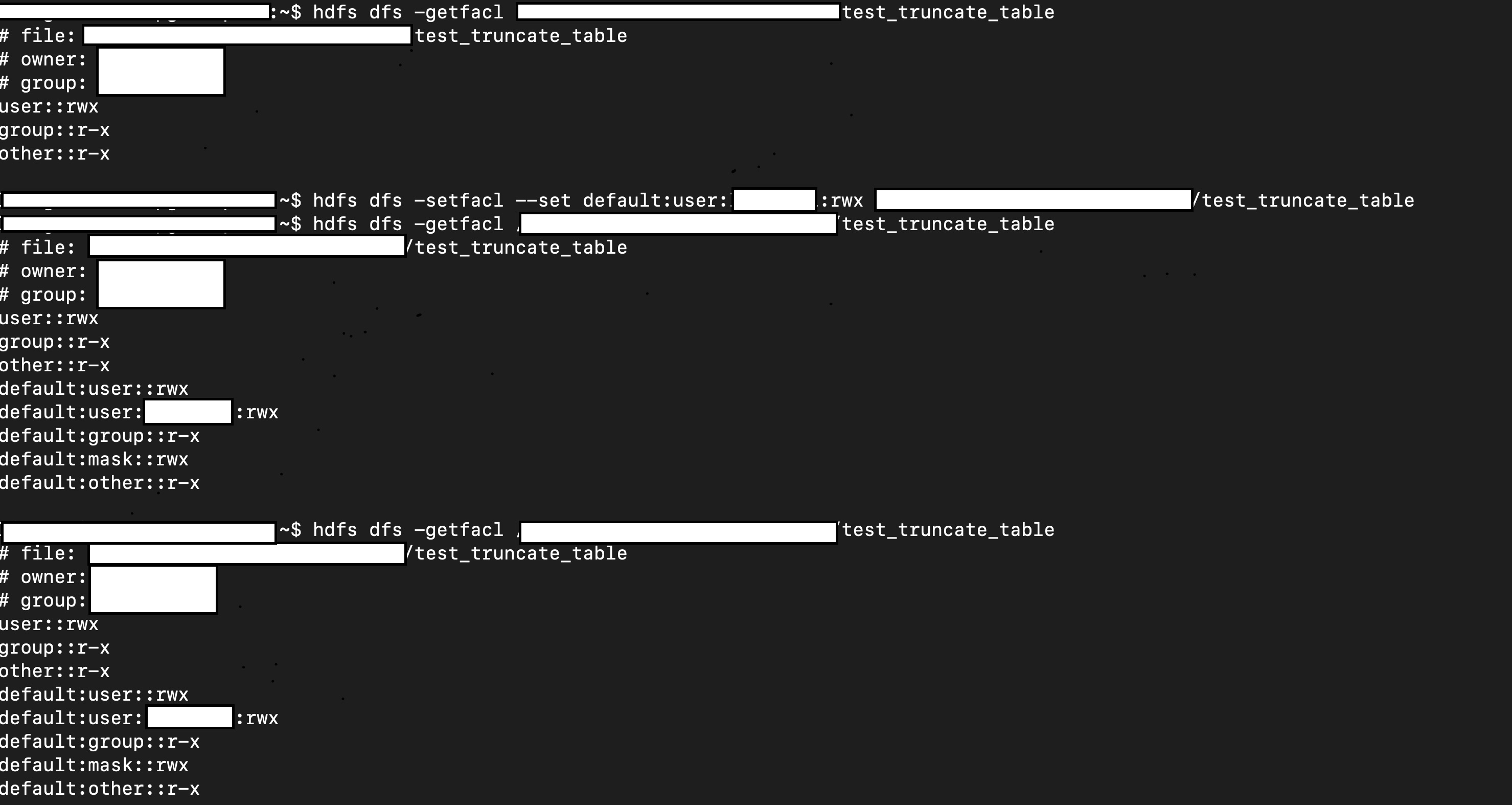

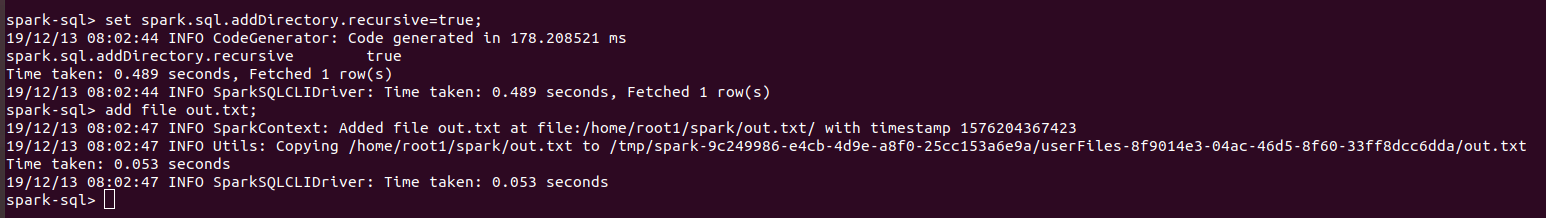

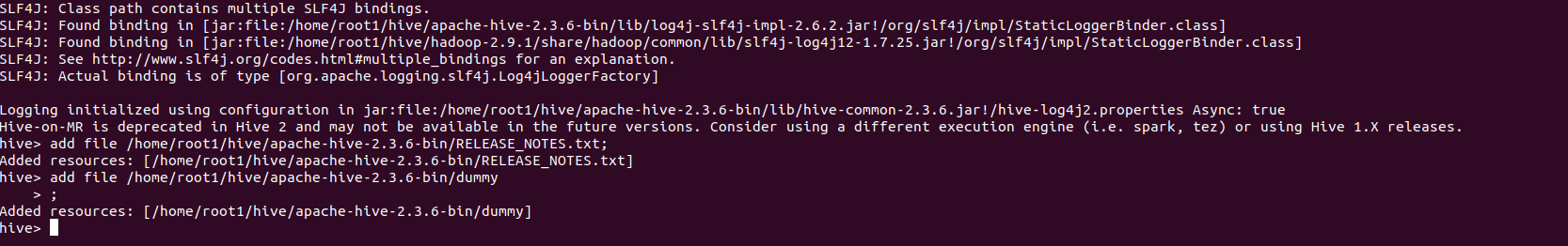

### What changes were proposed in this pull request?

This pr intends to rename `spark.sql.legacy.addDirectory.recursive` into `spark.sql.legacy.addDirectory.recursive.enabled`.

### Why are the changes needed?

For consistent option names.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes#27372 from maropu/SPARK-30234-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to transform the `Like` expression to `TernaryExpression`, and add third parameter `escape`. So, the `like` function will have feature parity with `LIKE ... ESCAPE` syntax supported by 187f3c1773.

### Why are the changes needed?

The `like` functions can be called with 2 or 3 parameters, and functionally equivalent to `LIKE` and `LIKE ... ESCAPE` SQL expressions.

### Does this PR introduce any user-facing change?

Yes, before `like` fails with the exception:

```sql

spark-sql> SELECT like('_Apache Spark_', '__%Spark__', '_');

Error in query: Invalid number of arguments for function like. Expected: 2; Found: 3; line 1 pos 7

```

After:

```sql

spark-sql> SELECT like('_Apache Spark_', '__%Spark__', '_');

true

```

### How was this patch tested?

- Add new example for the `like` function which is checked by `SQLQuerySuite`

- Run `RegexpExpressionsSuite` and `ExpressionParserSuite`.

Closes#27355 from MaxGekk/like-3-args.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch converts CR/LF into LF in 3 source files, which most files are only using LF. This patch also add rules to enforce EOL as LF for all java, scala, xml, py, R files.

### Why are the changes needed?

The majority of source code files are using LF and only three files are CR/LF. While using IDE would let us don't bother with the difference, it still has a chance to make unnecessary diff if the file is modified with the editor which doesn't handle it automatically.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

```

grep -IUrl --color "^M" . | grep "\.java\|\.scala\|\.xml\|\.py\|\.R" | grep -v "/target/" | grep -v "/build/" | grep -v "/dist/" | grep -v "dependency-reduced-pom.xml" | grep -v ".pyc"

```

(Please note you'll need to type CTRL+V -> CTRL+M in bash shell to get `^M` because it's representing CR/LF, not a combination of `^` and `M`.)

Before the patch, the result is:

```

./sql/core/src/main/java/org/apache/spark/sql/execution/columnar/ColumnDictionary.java

./sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/complexTypesSuite.scala

./sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/ComplexTypes.scala

```

and after the patch, the result is None.

And git shows WARNING message if EOL of any of source files in given types are modified to CR/LF, like below:

```

warning: CRLF will be replaced by LF in sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/Analyzer.scala.

The file will have its original line endings in your working directory.

```

Closes#27365 from HeartSaVioR/MINOR-remove-CRLF-in-source-codes.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add identifier and catalog information in DataSourceV2Relation so it would be possible to do richer checks in checkAnalysis step.

### Why are the changes needed?

In data source v2, table implementations are all customized so we may not be able to get the resolved identifier from tables them selves. Therefore we encode the table and catalog information in DSV2Relation so no external changes are needed to make sure this information is available.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit tests in the following suites:

CatalogManagerSuite.scala

CatalogV2UtilSuite.scala

SupportsCatalogOptionsSuite.scala

PlanResolutionSuite.scala

Closes#26957 from yuchenhuo/SPARK-30314.

Authored-by: Yuchen Huo <yuchen.huo@databricks.com>

Signed-off-by: Burak Yavuz <brkyvz@gmail.com>

### What changes were proposed in this pull request?

This PR is to remove query index from the golden files of SQLQueryTestSuite

### Why are the changes needed?

Because the SQLQueryTestSuite's golden files have the query index for each query, removal of any query statement [except the last one] will generate many unneeded difference. This will make code review harder. The number of changed lines is misleading.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes#27361 from gatorsmile/removeIndexNum.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

This reverts commit 1d20d13149.

Closes#27351 from gatorsmile/revertSPARK25496.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch proposes to prune unnecessary nested fields from Generate which has no Project on top of it.

### Why are the changes needed?

In Optimizer, we can prune nested columns from Project(projectList, Generate). However, unnecessary columns could still possibly be read in Generate, if no Project on top of it. We should prune it too.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit test.

Closes#26978 from viirya/SPARK-29721.

Lead-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Disable all the V2 file sources in Spark 3.0 by default.

### Why are the changes needed?

There are still some missing parts in the file source V2 framework:

1. It doesn't support reporting file scan metrics such as "numOutputRows"/"numFiles"/"fileSize" like `FileSourceScanExec`. This requires another patch in the data source V2 framework. Tracked by [SPARK-30362](https://issues.apache.org/jira/browse/SPARK-30362)

2. It doesn't support partition pruning with subqueries(including dynamic partition pruning) for now. Tracked by [SPARK-30628](https://issues.apache.org/jira/browse/SPARK-30628)

As we are going to code freeze on Jan 31st, this PR proposes to disable all the V2 file sources in Spark 3.0 by default.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests.

Closes#27348 from gengliangwang/disableFileSourceV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR is a follow-up PR https://github.com/apache/spark/pull/25666 for adding the description and example for the Scala function API `filter`.

### Why are the changes needed?

It is hard to tell which parameter is the index column.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes#27336 from gatorsmile/spark28962.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, in the following scenario, bucket join is not utilized:

```scala

val df = (0 until 20).map(i => (i, i)).toDF("i", "j").as("df")

df.write.format("parquet").bucketBy(8, "i").saveAsTable("t")

sql("CREATE VIEW v AS SELECT * FROM t")

sql("SELECT * FROM t a JOIN v b ON a.i = b.i").explain

```

```

== Physical Plan ==

*(4) SortMergeJoin [i#13], [i#15], Inner

:- *(1) Sort [i#13 ASC NULLS FIRST], false, 0

: +- *(1) Project [i#13, j#14]

: +- *(1) Filter isnotnull(i#13)

: +- *(1) ColumnarToRow

: +- FileScan parquet default.t[i#13,j#14] Batched: true, DataFilters: [isnotnull(i#13)], Format: Parquet, Location: InMemoryFileIndex[file:..., PartitionFilters: [], PushedFilters: [IsNotNull(i)], ReadSchema: struct<i:int,j:int>, SelectedBucketsCount: 8 out of 8

+- *(3) Sort [i#15 ASC NULLS FIRST], false, 0

+- Exchange hashpartitioning(i#15, 8), true, [id=#64] <----- Exchange node introduced

+- *(2) Project [i#13 AS i#15, j#14 AS j#16]

+- *(2) Filter isnotnull(i#13)

+- *(2) ColumnarToRow

+- FileScan parquet default.t[i#13,j#14] Batched: true, DataFilters: [isnotnull(i#13)], Format: Parquet, Location: InMemoryFileIndex[file:..., PartitionFilters: [], PushedFilters: [IsNotNull(i)], ReadSchema: struct<i:int,j:int>, SelectedBucketsCount: 8 out of 8

```

Notice that `Exchange` is present. This is because `Project` introduces aliases and `outputPartitioning` and `requiredChildDistribution` do not consider aliases while considering bucket join in `EnsureRequirements`. This PR addresses to allow this scenario.

### Why are the changes needed?

This allows bucket join to be utilized in the above example.

### Does this PR introduce any user-facing change?

Yes, now with the fix, the `explain` out is as follows:

```

== Physical Plan ==

*(3) SortMergeJoin [i#13], [i#15], Inner

:- *(1) Sort [i#13 ASC NULLS FIRST], false, 0

: +- *(1) Project [i#13, j#14]

: +- *(1) Filter isnotnull(i#13)

: +- *(1) ColumnarToRow

: +- FileScan parquet default.t[i#13,j#14] Batched: true, DataFilters: [isnotnull(i#13)], Format: Parquet, Location: InMemoryFileIndex[file:.., PartitionFilters: [], PushedFilters: [IsNotNull(i)], ReadSchema: struct<i:int,j:int>, SelectedBucketsCount: 8 out of 8

+- *(2) Sort [i#15 ASC NULLS FIRST], false, 0

+- *(2) Project [i#13 AS i#15, j#14 AS j#16]

+- *(2) Filter isnotnull(i#13)

+- *(2) ColumnarToRow

+- FileScan parquet default.t[i#13,j#14] Batched: true, DataFilters: [isnotnull(i#13)], Format: Parquet, Location: InMemoryFileIndex[file:.., PartitionFilters: [], PushedFilters: [IsNotNull(i)], ReadSchema: struct<i:int,j:int>, SelectedBucketsCount: 8 out of 8

```

Note that the `Exchange` is no longer present.

### How was this patch tested?

Closes#26943 from imback82/bucket_alias.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

In this PR, I propose to move the `RESERVED_PROPERTIES `s from `SupportsNamespaces` and `TableCatalog` to `CatalogV2Util`, which can keep `RESERVED_PROPERTIES ` safe for interval usages only.

### Why are the changes needed?

the `RESERVED_PROPERTIES` should not be changed by subclasses

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

existing uts

Closes#27318 from yaooqinn/SPARK-30603.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to remove sorting in the asserts of checking output of:

- expression examples,

- SQL tests in `SQLQueryTestSuite`.

### Why are the changes needed?

* Sorted `actual` and `expected` make assert output unusable. Instead of `"[true]" did not equal "[false]"`, it looks like `"[ertu]" did not equal "[aefls]"`.

* Output of expression examples should be always the same except nondeterministic expressions listed in the `ignoreSet` of the `check outputs of expression examples` test.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running `SQLQuerySuite` via `./build/sbt "sql/test:testOnly org.apache.spark.sql.SQLQuerySuite"`.

Closes#27324 from MaxGekk/remove-sorting-in-examples-tests.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR is to remove the conf

### Why are the changes needed?

This rule can be excluded using spark.sql.optimizer.excludedRules without an extra conf

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

N/A

Closes#27334 from gatorsmile/spark27871.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This reverts commit b5cb9abdd5.

### Why are the changes needed?

The merged commit (#27243) was too risky for several reasons:

1. It doesn't fix a bug

2. It makes the resolution of the table that's going to be altered a child. We had avoided this on purpose as having an arbitrary rule change the child of AlterTable seemed risky. This change alone is a big -1 for me for this change.

3. While the code may look cleaner, I think this approach makes certain things harder, e.g. differentiating between the Hive based Alter table CHANGE COLUMN and ALTER COLUMN syntax. Resolving and normalizing columns for ALTER COLUMN also becomes a bit harder, as we now have to check every single AlterTable command instead of just a single ALTER TABLE ALTER COLUMN statement

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests

This closes#27315Closes#27327 from brkyvz/revAlter.

Authored-by: Burak Yavuz <brkyvz@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Skip resolving the merge expressions if the target is a DSv2 table with ACCEPT_ANY_SCHEMA capability.

### Why are the changes needed?

Some DSv2 sources may want to customize the merge resolution logic. For example, a table that can accept any schema (TableCapability.ACCEPT_ANY_SCHEMA) may want to allow certain merge queries that are blocked (that is, throws AnalysisError) by the default resolution logic. So there should be a way to completely bypass the merge resolution logic in the Analyzer.

### Does this PR introduce any user-facing change?

No, since merge itself is an unreleased feature

### How was this patch tested?

added unit test to specifically test the skipping.

Closes#27326 from tdas/SPARK-30609.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

### What changes were proposed in this pull request?

In `org.apache.spark.sql.execution.SubqueryExec#relationFuture` make a copy of `org.apache.spark.SparkContext#localProperties` and pass it to the sub-execution thread in `org.apache.spark.sql.execution.SubqueryExec#executionContext`

### Why are the changes needed?

Local properties set via sparkContext are not available as TaskContext properties when executing jobs and threadpools have idle threads which are reused

Explanation:

When `SubqueryExec`, the relationFuture is evaluated via a separate thread. The threads inherit the `localProperties` from `sparkContext` as they are the child threads.

These threads are created in the `executionContext` (thread pools). Each Thread pool has a default keepAliveSeconds of 60 seconds for idle threads.

Scenarios where the thread pool has threads which are idle and reused for a subsequent new query, the thread local properties will not be inherited from spark context (thread properties are inherited only on thread creation) hence end up having old or no properties set. This will cause taskset properties to be missing when properties are transferred by child thread via `sparkContext.runJob/submitJob`

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added UT

Closes#27267 from ajithme/subquerylocalprop.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

When you save a Spark UI SQL query page to disk and then display the html file with your browser, the query plan will be rendered a second time. This change avoids rendering the plan visualization when it exists already.

This is master:

And with the fix:

### Why are the changes needed?

The duplicate query plan is unexpected and redundant.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually tested. Testing this in a reproducible way requires a running browser or HTML rendering engine that executes the JavaScript.

Closes#27238 from EnricoMi/branch-sql-ui-duplicate-plan.

Authored-by: Enrico Minack <github@enrico.minack.dev>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

In the PR, I propose to add additional constructor in the `Like` expression. The constructor can be used on applying the `like` function with 2 parameters.

### Why are the changes needed?

`FunctionRegistry` cannot find a constructor if the `like` function is applied to 2 parameters.

### Does this PR introduce any user-facing change?

Yes, before:

```sql

spark-sql> SELECT like('Spark', '_park');

Invalid arguments for function like; line 1 pos 7

org.apache.spark.sql.AnalysisException: Invalid arguments for function like; line 1 pos 7

at org.apache.spark.sql.catalyst.analysis.FunctionRegistry$.$anonfun$expression$7(FunctionRegistry.scala:618)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.catalyst.analysis.FunctionRegistry$.$anonfun$expression$4(FunctionRegistry.scala:602)

at org.apache.spark.sql.catalyst.analysis.SimpleFunctionRegistry.lookupFunction(FunctionRegistry.scala:121)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.lookupFunction(SessionCatalog.scala:1412)

```

After:

```sql

spark-sql> SELECT like('Spark', '_park');

true

```

### How was this patch tested?

By running `check outputs of expression examples` from `SQLQuerySuite`.

Closes#27323 from MaxGekk/fix-like-func.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In this PR, I'd propose to fully support interval for the CSV and JSON functions.

On one hand, CSV and JSON records consist of string values, in the cast logic, we can cast string from/to interval now, so we can make those functions support intervals easily.

Before this change we can only use this as a workaround.

```sql

SELECT cast(from_csv('1, 1 day', 'a INT, b string').b as interval)

struct<CAST(from_csv(1, 1 day).b AS INTERVAL):interval>

1 days

```

On the other hand, we ban reading or writing intervals from CSV and JSON files. To directly read and write with external json/csv storage, you still need explicit cast, e.g.

```scala

spark.read.schema("a string").json("a.json").selectExpr("cast(a as interval)").show

+------+

| a|

+------+

|1 days|

+------+

```

### Why are the changes needed?

for interval's future-proofing purpose

### Does this PR introduce any user-facing change?

yes, the `to_json`/`from_json` function can deal with intervals now. e.g.

for `from_json` there is no such use case because we do not support `a interval`

for `to_json`, we can use interval values now

#### before

```sql

SELECT to_json(map('a', interval 25 month 100 day 130 minute));

Error in query: cannot resolve 'to_json(map('a', INTERVAL '2 years 1 months 100 days 2 hours 10 minutes'))' due to data type mismatch: Unable to convert column a of type interval to JSON.; line 1 pos 7;

'Project [unresolvedalias(to_json(map(a, 2 years 1 months 100 days 2 hours 10 minutes), Some(Asia/Shanghai)), None)]

+- OneRowRelation

```

#### after

```sql

SELECT to_json(map('a', interval 25 month 100 day 130 minute))

{"a":"2 years 1 months 100 days 2 hours 10 minutes"}

```

### How was this patch tested?

add ut

Closes#27317 from yaooqinn/SPARK-30592.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

when resolving the `Assignment` of insert action in MERGE INTO, only resolve with the source table, to avoid ambiguous attribute failure if there is a same-name column in the target table.

### Why are the changes needed?

The insert action is used when NOT MATCHED, so it can't access the row from the target table anyway.

### Does this PR introduce any user-facing change?

on

### How was this patch tested?

new tests

Closes#27265 from cloud-fan/merge.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This pr removes the nonstandard `SET OWNER` syntax for namespaces and changes the owner reserved properties from `ownerName` and `ownerType` to `owner`.

### Why are the changes needed?

the `SET OWNER` syntax for namespaces is hive-specific and non-sql standard, we need a more future-proofing design before we implement user-facing changes for SQL security issues

### Does this PR introduce any user-facing change?

no, just revert an unpublic syntax

### How was this patch tested?

modified uts

Closes#27300 from yaooqinn/SPARK-30591.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add optimizer rule PruneHiveTablePartitions pruning hive table partitions based on filters on partition columns.

Doing so, the total size of pruned partitions may be small enough for broadcast join in JoinSelection strategy.

### Why are the changes needed?

In JoinSelection strategy, spark use the "plan.stats.sizeInBytes" to decide whether the plan is suitable for broadcast join.

Currently, "plan.stats.sizeInBytes" does not take "pruned partitions" into account, so it may miss some broadcast join and take sort-merge join instead, which will definitely impact join performance.

This PR aim at taking "pruned partitions" into account for hive table in "plan.stats.sizeInBytes" and then improve performance by using broadcast join if possible.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Added unit tests.

This is based on #25919, credits should go to lianhuiwang and advancedxy.

Closes#26805 from fuwhu/SPARK-15616.

Authored-by: fuwhu <bestwwg@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR propose to disallow negative `scale` of `Decimal` in Spark. And this PR brings two behavior changes:

1) for literals like `1.23E4BD` or `1.23E4`(with `spark.sql.legacy.exponentLiteralAsDecimal.enabled`=true, see [SPARK-29956](https://issues.apache.org/jira/browse/SPARK-29956)), we set its `(precision, scale)` to (5, 0) rather than (3, -2);

2) add negative `scale` check inside the decimal method if it exposes to set `scale` explicitly. If check fails, `AnalysisException` throws.

And user could still use `spark.sql.legacy.allowNegativeScaleOfDecimal.enabled` to restore the previous behavior.

### Why are the changes needed?

According to SQL standard,

> 4.4.2 Characteristics of numbers

An exact numeric type has a precision P and a scale S. P is a positive integer that determines the number of significant digits in a particular radix R, where R is either 2 or 10. S is a non-negative integer.

scale of Decimal should always be non-negative. And other mainstream databases, like Presto, PostgreSQL, also don't allow negative scale.

Presto:

```

presto:default> create table t (i decimal(2, -1));

Query 20191213_081238_00017_i448h failed: line 1:30: mismatched input '-'. Expecting: <integer>, <type>

create table t (i decimal(2, -1))

```

PostgrelSQL:

```

postgres=# create table t(i decimal(2, -1));

ERROR: NUMERIC scale -1 must be between 0 and precision 2

LINE 1: create table t(i decimal(2, -1));

^

```

And, actually, Spark itself already doesn't allow to create table with negative decimal types using SQL:

```

scala> spark.sql("create table t(i decimal(2, -1))");

org.apache.spark.sql.catalyst.parser.ParseException:

no viable alternative at input 'create table t(i decimal(2, -'(line 1, pos 28)

== SQL ==

create table t(i decimal(2, -1))

----------------------------^^^

at org.apache.spark.sql.catalyst.parser.ParseException.withCommand(ParseDriver.scala:263)

at org.apache.spark.sql.catalyst.parser.AbstractSqlParser.parse(ParseDriver.scala:130)

at org.apache.spark.sql.execution.SparkSqlParser.parse(SparkSqlParser.scala:48)

at org.apache.spark.sql.catalyst.parser.AbstractSqlParser.parsePlan(ParseDriver.scala:76)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:605)

at org.apache.spark.sql.catalyst.QueryPlanningTracker.measurePhase(QueryPlanningTracker.scala:111)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:605)

... 35 elided

```

However, it is still possible to create such table or `DatFrame` using Spark SQL programming API:

```

scala> val tb =

CatalogTable(

TableIdentifier("test", None),

CatalogTableType.MANAGED,

CatalogStorageFormat.empty,

StructType(StructField("i", DecimalType(2, -1) ) :: Nil))

```

```

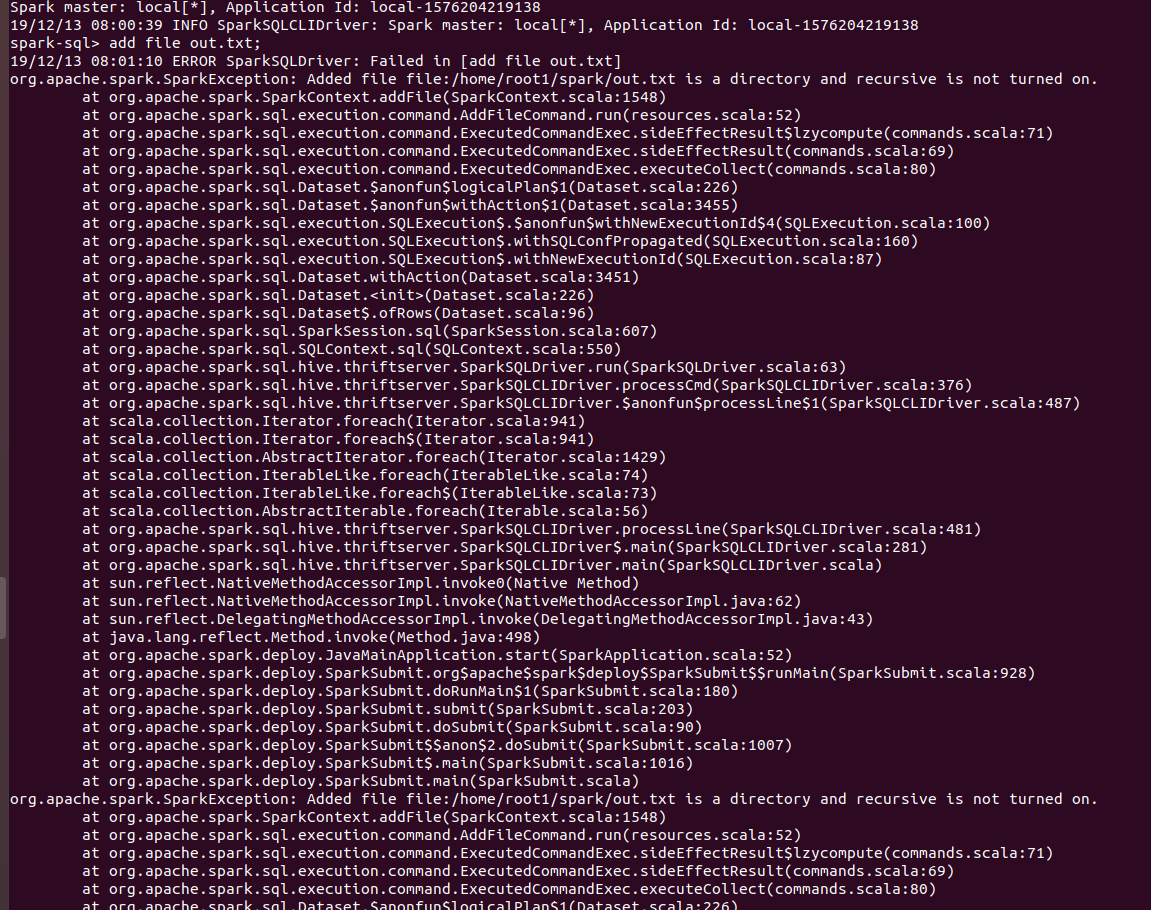

scala> spark.sql("SELECT 1.23E4BD")

res2: org.apache.spark.sql.DataFrame = [1.23E+4: decimal(3,-2)]

```

while, these two different behavior could make user confused.

On the other side, even if user creates such table or `DataFrame` with negative scale decimal type, it can't write data out if using format, like `parquet` or `orc`. Because these formats have their own check for negative scale and fail on it.

```

scala> spark.sql("SELECT 1.23E4BD").write.saveAsTable("parquet")

19/12/13 17:37:04 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)

java.lang.IllegalArgumentException: Invalid DECIMAL scale: -2

at org.apache.parquet.Preconditions.checkArgument(Preconditions.java:53)

at org.apache.parquet.schema.Types$BasePrimitiveBuilder.decimalMetadata(Types.java:495)

at org.apache.parquet.schema.Types$BasePrimitiveBuilder.build(Types.java:403)

at org.apache.parquet.schema.Types$BasePrimitiveBuilder.build(Types.java:309)

at org.apache.parquet.schema.Types$Builder.named(Types.java:290)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.convertField(ParquetSchemaConverter.scala:428)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.convertField(ParquetSchemaConverter.scala:334)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.$anonfun$convert$2(ParquetSchemaConverter.scala:326)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)

at scala.collection.Iterator.foreach(Iterator.scala:941)

at scala.collection.Iterator.foreach$(Iterator.scala:941)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1429)

at scala.collection.IterableLike.foreach(IterableLike.scala:74)

at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

at org.apache.spark.sql.types.StructType.foreach(StructType.scala:99)

at scala.collection.TraversableLike.map(TraversableLike.scala:238)

at scala.collection.TraversableLike.map$(TraversableLike.scala:231)

at org.apache.spark.sql.types.StructType.map(StructType.scala:99)

at org.apache.spark.sql.execution.datasources.parquet.SparkToParquetSchemaConverter.convert(ParquetSchemaConverter.scala:326)

at org.apache.spark.sql.execution.datasources.parquet.ParquetWriteSupport.init(ParquetWriteSupport.scala:97)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:388)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:349)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:150)

at org.apache.spark.sql.execution.datasources.SingleDirectoryDataWriter.newOutputWriter(FileFormatDataWriter.scala:124)

at org.apache.spark.sql.execution.datasources.SingleDirectoryDataWriter.<init>(FileFormatDataWriter.scala:109)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:264)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$write$15(FileFormatWriter.scala:205)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:441)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:444)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

```

So, I think it would be better to disallow negative scale totally and make behaviors above be consistent.

### Does this PR introduce any user-facing change?

Yes, if `spark.sql.legacy.allowNegativeScaleOfDecimal.enabled=false`, user couldn't create Decimal value with negative scale anymore.

### How was this patch tested?

Added new tests in `ExpressionParserSuite` and `DecimalSuite`;

Updated `SQLQueryTestSuite`.

Closes#26881 from Ngone51/nonnegative-scale.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This revert https://github.com/apache/spark/pull/26418, file a new ticket under https://issues.apache.org/jira/browse/SPARK-30546 for better tracking interval behavior

### Why are the changes needed?

Revert interval ISO/ANSI SQL Standard output since we decide not to follow ANSI and there is no round trip

### Does this PR introduce any user-facing change?

no, not released yet

### How was this patch tested?

existing uts

Closes#27304 from yaooqinn/SPARK-30593.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Follow up on [SPARK-30428](https://github.com/apache/spark/pull/27112) which added support for partition pruning in File source V2.

This PR implements the necessary changes in order to pass the `dataFilters` to the `listFiles`. This enables having `FileIndex` implementations which use the `dataFilters` for further pruning the file listing (see the discussion [here](https://github.com/apache/spark/pull/27112#discussion_r364757217)).

### Why are the changes needed?

Datasources such as `csv` and `json` do not implement the `SupportsPushDownFilters` trait. In order to support data skipping uniformly for all file based data sources, one can override the `listFiles` method in a `FileIndex` implementation, which consults external metadata and prunes the list of files.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Modifying the unit tests for v2 file sources to verify the `dataFilters` are passed

Closes#27157 from guykhazma/PushdataFiltersInFileListing.

Authored-by: Guy Khazma <guykhag@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to make `JsonSuite` and `CSVSuite` abstract classes, and add sub-classes that check JSON/CSV datasource v1 and v2.

### Why are the changes needed?

To improve test coverage and test JSON/CSV v1 which is still supported, and can be enabled by users.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running new test suites `JsonV1Suite` and `CSVv1Suite`.

Closes#27294 from MaxGekk/csv-json-v1-test-suites.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

After this commit d67b98ea01, we are able to create table or alter table with interval column types if the external catalog accepts which is varying the interval type's purpose for internal usage. With d67b98ea01 's original purpose it should only work from cast logic.

Instead of adding type checker for the interval type from commands to commands to work among catalogs, It much simpler to treat interval as an invalid data type but can be identified by cast only.

### Why are the changes needed?

enhance interval internal usage purpose.

### Does this PR introduce any user-facing change?

NO,

Additionally, this PR restores user behavior when using interval type to create/alter table schema, e.g. for hive catalog

for 2.4,

```java

Caused by: org.apache.spark.sql.catalyst.parser.ParseException:

DataType calendarinterval is not supported.(line 1, pos 0)

```

for master after d67b98ea01

```java

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.IllegalArgumentException: Error: type expected at the position 0 of 'interval' but 'interval' is found.

at org.apache.hadoop.hive.ql.metadata.Hive.createTable(Hive.java:862)

```

now with this pr, we restore the type checker in spark side.

### How was this patch tested?

add more ut

Closes#27277 from yaooqinn/SPARK-30568.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add `owner` property to v2 table, it is reversed by `TableCatalog`, indicates the table's owner.

### Why are the changes needed?

enhance ownership management of catalog API

### Does this PR introduce any user-facing change?

yes, add 1 reserved property - `owner` , and it is not allowed to use in OPTIONS/TBLPROPERTIES anymore, only if legacy on

### How was this patch tested?

add uts

Closes#27249 from yaooqinn/SPARK-30019.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to output additional msg from the tests where a log appender is added. The message is printed as a part of `IllegalStateException` in the case of reaching the limit of maximum number of logged events.

### Why are the changes needed?

If a log appender is not removed from the log4j appenders list. the caller message could help to investigate the problem and find the test which doesn't remove the log appender.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running the modified test suites `AvroSuite`, `CSVSuite`, `ResolveHintsSuite` and etc.

Closes#27296 from MaxGekk/assign-name-to-log-appender.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Explicitly set conf to let orc use DSv2 in `OrcFilterSuite` in both v1.2 and v2.3.

### Why are the changes needed?

Tests should not rely on default conf when they're going to test something intentionally, which can be fail when conf changes.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes#27285 from Ngone51/fix-orcfilter-test.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Use the new framework to resolve the ALTER TABLE commands.

This PR also refactors ALTER TABLE logical plans such that they extend a base class `AlterTable`. Each plan now implements `def changes: Seq[TableChange]` for any table change operations.

Additionally, `UnresolvedV2Relation` and its usage is completely removed.

### Why are the changes needed?

This is a part of effort to make the relation lookup behavior consistent: [SPARK-29900](https://issues.apache.org/jira/browse/SPARK-29900).

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Updated existing tests

Closes#27243 from imback82/v2commands_newframework.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR implements a tiny performance optimization for a `GenericArrayData` constructor, avoiding an unnecessary roundtrip through `WrappedArray` when the provided value is already an array of objects.

It also fixes a related performance problem in `ParquetRowConverter`.

### Why are the changes needed?

`GenericArrayData` has a `this(seqOrArray: Any)` constructor, which was originally added in #13138 for use in `RowEncoder` (where we may not know concrete types until runtime) but is also called (perhaps unintentionally) in a few other code paths.

In this constructor's existing implementation, a call to `new WrappedArray(Array[Object](""))` is dispatched to the `this(seqOrArray: Any)` constructor, where we then call `this(array.toSeq)`: this wraps the provided array into a `WrappedArray`, which is subsequently unwrapped in a `this(seq.toArray)` call. For an interactive example, see https://scastie.scala-lang.org/7jOHydbNTaGSU677FWA8nA

This PR changes the `this(seqOrArray: Any)` constructor so that it calls the primary `this(array: Array[Any])` constructor, allowing us to save a `.toSeq.toArray` call; this comes at the cost of one additional `case` in the `match` statement (but I believe this has a negligible performance impact relative to the other savings).

As code cleanup, I also reverted the JVM 1.7 workaround from #14271.

I also fixed a related performance problem in `ParquetRowConverter`: previously, this code called `ArrayBasedMapData.apply` which, in turn, called the `this(Any)` constructor for `GenericArrayData`: this PR's micro-benchmarks show that this is _significantly_ slower than calling the `this(Array[Any])` constructor (and I also observed time spent here during other Parquet scan benchmarking work). To fix this performance problem, I replaced the call to the `ArrayBasedMapData.apply` method with direct calls to the `ArrayBasedMapData` and `GenericArrayData` constructors.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

I tested this by running code in a debugger and by running microbenchmarks (which I've added to a new `GenericArrayDataBenchmark` in this PR):

- With JDK8 benchmarks: this PR's changes more than double the performance of calls to the `this(Any)` constructor. Even after improvements, however, calls to the `this(Array[Any])` constructor are still ~60x faster than calls to `this(Any)` when passing a non-primitive array (thereby motivating this patch's other change in `ParquetRowConverter`).

- With JDK11 benchmarks: the changes more-or-less completely eliminate the performance penalty associated with the `this(Any)` constructor.

Closes#27088 from JoshRosen/joshrosen/GenericArrayData-optimization.

Authored-by: Josh Rosen <rosenville@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to fix the bug reported in SPARK-30530. CSV datasource returns invalid records in the case when `parsedSchema` is shorter than number of tokens returned by UniVocity parser. In the case `UnivocityParser.convert()` always throws `BadRecordException` independently from the result of applying filters.

For the described case, I propose to save the exception in `badRecordException` and continue value conversion according to `parsedSchema`. If a bad record doesn't pass filters, `convert()` returns empty Seq otherwise throws `badRecordException`.

### Why are the changes needed?

It fixes the bug reported in the JIRA ticket.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added new test from the JIRA ticket.

Closes#27239 from MaxGekk/spark-30530-csv-filter-is-null.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

As we are not going to follow ANSI to implement year-month and day-time interval types, it is weird to compare the year-month part to the day-time part for our current implementation of interval type now.

Additionally, the current ordering logic comes from PostgreSQL where the implementation of the interval is messy. And we are not aiming PostgreSQL compliance at all.

THIS PR will revert https://github.com/apache/spark/pull/26681 and https://github.com/apache/spark/pull/26337

### Why are the changes needed?

make interval type more future-proofing

### Does this PR introduce any user-facing change?

there are new in 3.0, so no

### How was this patch tested?

existing uts shall work

Closes#27262 from yaooqinn/SPARK-30551.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Resolve the remaining comments in [PR#27226](https://github.com/apache/spark/pull/27226).

### Why are the changes needed?

Resolve the comments.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests.

Closes#27253 from JkSelf/followup-skewjoinoptimization2.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

https://github.com/apache/spark/pull/26809 added `Dataset.tail` API. It should be good to have it in PySpark API as well.

### Why are the changes needed?

To support consistent APIs.

### Does this PR introduce any user-facing change?

No. It adds a new API.

### How was this patch tested?

Manually tested and doctest was added.

Closes#27251 from HyukjinKwon/SPARK-30539.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

[SPARK-20568](https://issues.apache.org/jira/browse/SPARK-20568) added the possibility to clean up completed files in streaming query. Deleting/archiving uses the main thread which can slow down processing. In this PR I've created thread pool to handle file delete/archival. The number of threads can be configured with `spark.sql.streaming.fileSource.cleaner.numThreads`.

### Why are the changes needed?

Do file delete/archival in separate thread.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes#26502 from gaborgsomogyi/SPARK-29876.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

SPARK-29894 provides information on the Codegen Stage Id in WEBUI for SQL Plan graphs. Similarly, this proposes to add Codegen Stage Id in the DAG visualization for Stage execution. DAGs for Stage execution are available in the WEBUI under the Jobs and Stages tabs.

### Why are the changes needed?

This is proposed as an aid for drill-down analysis of complex SQL statement execution, as it is not always easy to match parts of the SQL Plan graph with the corresponding Stage DAG execution graph. Adding Codegen Stage Id for WholeStageCodegen operations makes this task easier.

### Does this PR introduce any user-facing change?

Stage DAG visualization in the WEBUI will show codegen stage id for WholeStageCodegen operations, as in the example snippet from the WEBUI, Jobs tab (the query used in the example is TPCDS 2.4 q14a):

### How was this patch tested?

Manually tested, see also example snippet.

Closes#26675 from LucaCanali/addCodegenStageIdtoStageGraph.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Use the new framework to resolve the SHOW TBLPROPERTIES command. This PR along with #27243 should update all the existing V2 commands with `UnresolvedV2Relation`.

### Why are the changes needed?

This is a part of effort to make the relation lookup behavior consistent: [SPARK-2990](https://issues.apache.org/jira/browse/SPARK-29900).

### Does this PR introduce any user-facing change?

Yes `SHOW TBLPROPERTIES temp_view` now fails with `AnalysisException` will be thrown with a message `temp_view is a temp view not table`. Previously, it was returning empty row.

### How was this patch tested?

Existing tests

Closes#26921 from imback82/consistnet_v2command.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add a `V1Scan` interface, so that data source v1 implementations can migrate to DS v2 much easier.

### Why are the changes needed?

It's a lot of work to migrate v1 sources to DS v2. The new API added here can allow v1 sources to go through v2 code paths without implementing all the Batch, Stream, PartitionReaderFactory, ... stuff.

We already have a v1 write fallback API after https://github.com/apache/spark/pull/25348

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

new test suite

Closes#26231 from cloud-fan/v1-read-fallback.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`OptimizeSkewedJoin `rule change the `outputPartitioning `after inserting `PartialShuffleReaderExec `or `SkewedPartitionReaderExec`. So it may need to introduce additional to ensure the right result. This PR disable `OptimizeSkewedJoin ` rule when introducing additional shuffle.

### Why are the changes needed?

bug fix

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Add new ut

Closes#27226 from JkSelf/followup-skewedoptimization.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

TableCatalog reserves some properties, e,g `provider`, `location` for internal usage. Some of them are static once create, some of them need specific syntax to modify. Instead of using `OPTIONS (k='v')` or TBLPROPERTIES (k='v'), if k is a reserved TableCatalog property, we should use its specific syntax to add/modify/delete it. e.g. `provider` is a reserved property, we should use the `USING` clause to specify it, and should not allow `ALTER TABLE ... UNSET TBLPROPERTIES('provider')` to delete it. Also, there are two paths for v1/v2 catalog tables to resolve these properties, e.g. the v1 session catalog tables will only use the `USING` clause to decide `provider` but v2 tables will also lookup OPTION/TBLPROPERTIES(although there is a bug prohibit it).

Additionally, 'path' is not reserved but holds special meaning for `LOCATION` and it is used in `CREATE/REPLACE TABLE`'s `OPTIONS` sub-clause. Now for the session catalog tables, the `path` is case-insensitive, but for the non-session catalog tables, it is case-sensitive, we should make it both case insensitive for disambiguation.

### Why are the changes needed?

prevent reserved properties from being modified unexpectedly

unify the property resolution for v1/v2.

fix some bugs.

### Does this PR introduce any user-facing change?

yes

1 . `location` and `provider` (case sensitive) cannot be used in `CREATE/REPLACE TABLE ... OPTIONS/TBLPROPETIES` and `ALTER TABLE ... SET TBLPROPERTIES (...)`, if legacy on, they will be ignored to let the command success without having side effects

3. Once `path` in `CREATE/REPLACE TABLE ... OPTIONS` is case insensitive for v1 but sensitive for v2, but now we change it case insensitive for both kinds of tables, then v2 tables will also fail if `LOCATION` and `OPTIONS('PaTh' ='abc')` are both specified or will pick `PaTh`'s value as table location if `LOCATION` is missing.

4. Now we will detect if there are two different case `path` keys or more in `CREATE/REPLACE TABLE ... OPTIONS`, once it is a kind of unexpected last-win policy for v1, and v2 is case sensitive.

### How was this patch tested?

add ut

Closes#27197 from yaooqinn/SPARK-30507.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?