## What changes were proposed in this pull request?

- Added new test for Java Bean encoder of the classes: `java.time.LocalDate` and `java.time.Instant`.

- Updated comment for `Encoders.bean`

- New Row getters: `getLocalDate` and `getInstant`

- Extended `inferDataType` to infer types for `java.time.LocalDate` -> `DateType` and `java.time.Instant` -> `TimestampType`.

## How was this patch tested?

By `JavaBeanDeserializationSuite`

Closes#24273 from MaxGekk/bean-instant-localdate.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, we raise an AnalysisError when we detect the presense of aggregate expressions in where clause. Here is the problem description from the JIRA.

Aggregate functions should not be allowed in WHERE clause. But Spark SQL throws an exception when generating codes. It is supposed to throw an exception during parsing or analyzing.

Here is an example:

```

val df = spark.sql("select * from t where sum(ta) > 0")

df.explain(true)

df.show()

```

Resulting exception:

```

Exception in thread "main" java.lang.UnsupportedOperationException: Cannot generate code for expression: sum(cast(input[0, int, false] as bigint))

at org.apache.spark.sql.catalyst.expressions.Unevaluable.doGenCode(Expression.scala:291)

at org.apache.spark.sql.catalyst.expressions.Unevaluable.doGenCode$(Expression.scala:290)

at org.apache.spark.sql.catalyst.expressions.aggregate.AggregateExpression.doGenCode(interfaces.scala:87)

at org.apache.spark.sql.catalyst.expressions.Expression.$anonfun$genCode$3(Expression.scala:138)

at scala.Option.getOrElse(Option.scala:138)

```

Checked the behaviour of other database and all of them return an exception:

**Postgress**

```

select * from foo where max(c1) > 0;

Error

ERROR: aggregate functions are not allowed in WHERE Position: 25

```

**DB2**

```

db2 => select * from foo where max(c1) > 0;

SQL0120N Invalid use of an aggregate function or OLAP function.

```

**Oracle**

```

select * from foo where max(c1) > 0;

ORA-00934: group function is not allowed here

```

**MySql**

```

select * from foo where max(c1) > 0;

Invalid use of group function

```

**Update**

This PR has been enhanced to report error when expressions such as Aggregate, Window, Generate are hosted by operators where they are invalid.

## How was this patch tested?

Added tests in AnalysisErrorSuite and group-by.sql

Closes#24209 from dilipbiswal/SPARK-27255.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to deprecate the `from_utc_timestamp()` and `to_utc_timestamp`, and disable them by default. The functions can be enabled back via the SQL config `spark.sql.legacy.utcTimestampFunc.enabled`. By default, any calls of the functions throw an analysis exception.

One of the reason for deprecation is functions violate semantic of `TimestampType` which is number of microseconds since epoch in UTC time zone. Shifting microseconds since epoch by time zone offset doesn't make sense because the result doesn't represent microseconds since epoch in UTC time zone any more, and cannot be considered as `TimestampType`.

## How was this patch tested?

The changes were tested by `DateExpressionsSuite` and `DateFunctionsSuite`.

Closes#24195 from MaxGekk/conv-utc-timestamp-deprecate.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/23285. This PR adds the notes into PySpark and SparkR documentation as well.

While I am here, I revised the doc a bit to make it sound a bit more neutral

## How was this patch tested?

Manually built the doc and verified.

Closes#24272 from HyukjinKwon/SPARK-26224.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Use Single Abstract Method syntax where possible (and minor related cleanup). Comments below. No logic should change here.

## How was this patch tested?

Existing tests.

Closes#24241 from srowen/SPARK-27323.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

We have seen many cases when users make several subsequent calls to `withColumn` on a Dataset. This leads now to the generation of a lot of `Project` nodes on the top of the plan, with serious problem which can lead also to `StackOverflowException`s.

The PR improves the doc of `withColumn`, in order to advise the user to avoid this pattern and do something different, ie. a single select with all the column he/she needs.

## How was this patch tested?

NA

Closes#23285 from mgaido91/SPARK-26224.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In the PR, I propose to deprecate the `from_utc_timestamp()` and `to_utc_timestamp`, and disable them by default. The functions can be enabled back via the SQL config `spark.sql.legacy.utcTimestampFunc.enabled`. By default, any calls of the functions throw an analysis exception.

One of the reason for deprecation is functions violate semantic of `TimestampType` which is number of microseconds since epoch in UTC time zone. Shifting microseconds since epoch by time zone offset doesn't make sense because the result doesn't represent microseconds since epoch in UTC time zone any more, and cannot be considered as `TimestampType`.

## How was this patch tested?

The changes were tested by `DateExpressionsSuite` and `DateFunctionsSuite`.

Closes#24195 from MaxGekk/conv-utc-timestamp-deprecate.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

If object serializer has map of map key/value, pruning nested field should work.

Previously object serializer pruner don't recursively prunes nested fields if it is deeply located in map key or value. This patch proposed to address it by slightly factoring the pruning logic.

## How was this patch tested?

Added tests.

Closes#24260 from viirya/SPARK-27329.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Added implicit encoders for the `java.time.LocalDate` and `java.time.Instant` classes. This allows creation of datasets from instances of the types.

## How was this patch tested?

Added new tests to `JavaDatasetSuite` and `DatasetSuite`.

Closes#24249 from MaxGekk/instant-localdate-encoders.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

When there is a `Union`, the reported output datatypes are the ones of the first plan and the nullability is updated according to all the plans. For complex types, though, the nullability of their elements is not updated using the types from the other plans. This means that the nullability of the inner elements is the one of the first plan. If this is not compatible with the one of other plans, errors can happen (as reported in the JIRA).

The PR proposes to update the nullability of the inner elements of complex datatypes according to most permissive value of all the plans.

## How was this patch tested?

added UT

Closes#23726 from mgaido91/SPARK-26812.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The current master doesn't support ANALYZE TABLE to collect tables stats for catalog views even if they are cached as follows;

```scala

scala> sql(s"CREATE VIEW v AS SELECT 1 c")

scala> sql(s"CACHE LAZY TABLE v")

scala> sql(s"ANALYZE TABLE v COMPUTE STATISTICS")

org.apache.spark.sql.AnalysisException: ANALYZE TABLE is not supported on views.;

...

```

Since SPARK-25196 has supported to an ANALYZE command to collect column statistics for cached catalog view, we could support table stats, too.

## How was this patch tested?

Added tests in `StatisticsCollectionSuite` and `InMemoryColumnarQuerySuite`.

Closes#24200 from maropu/SPARK-27266.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Added new benchmarks for:

1. JSON functions: `from_json`, `json_tuple` and `get_json_object`

2. Parsing `Dataset[String]` with JSON records

3. Comparing just splitting input text by lines with schema inferring, per-line parsing when encoding is set and not set.

Also existing benchmarks were refactored to use the `NoOp` datasource to eliminate overhead of triggers like `.filter((_: Row) => true).count()`.

## How was this patch tested?

By running `JSONBenchmark` locally.

Closes#24252 from MaxGekk/json-benchmark-func.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In the first PR for file source V2, there was a rule for falling back Orc V2 table to OrcFileFormat: https://github.com/apache/spark/pull/23383/files#diff-57e8244b6964e4f84345357a188421d5R34

As we are migrating more file sources to data source V2, we should make the rule more generic. This PR proposes to:

1. Rename the rule `FallbackOrcDataSourceV2 ` to `FallBackFileSourceV2`.The name is more generic. And we use "fall back" as verb, while "fallback" is noun.

2. Rename the method `fallBackFileFormat` in `FileDataSourceV2` to `fallbackFileFormat`. Here we should use "fallback" as noun.

3. Add new method `fallbackFileFormat` in `FileTable`. This is for falling back to V1 in rule `FallbackOrcDataSourceV2 `.

## How was this patch tested?

Existing Unit tests.

Closes#24251 from gengliangwang/fallbackV1Rule.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

In AggregationIterator's loop function, we access the expressions by `expressions(i)`, the type of `expressions` is `::`, a subtype of list.

```

while (i < expressionsLength) {

val func = expressions(i).aggregateFunction

```

This PR replacing index with iterator to access the expressions list, which make it simpler.

## How was this patch tested?

Existing tests.

Closes#24238 from eatoncys/array.

Authored-by: 10129659 <chen.yanshan@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This makes the `CurrentDate` expression and `current_date` function independent from time zone settings. New result is number of days since epoch in `UTC` time zone. Previously, Spark shifted the current date (in `UTC` time zone) according the session time zone which violets definition of `DateType` - number of days since epoch (which is an absolute point in time, midnight of Jan 1 1970 in UTC time).

The changes makes `CurrentDate` consistent to `CurrentTimestamp` which is independent from time zone too.

## How was this patch tested?

The changes were tested by existing test suites like `DateExpressionsSuite`.

Closes#24185 from MaxGekk/current-date.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

move

```scala

org.apache.spark.sql.execution.streaming.BaseStreamingSource

org.apache.spark.sql.execution.streaming.BaseStreamingSink

```

to java directory

## How was this patch tested?

Existing UT.

Closes#24222 from ConeyLiu/move-scala-to-java.

Authored-by: Xianyang Liu <xianyang.liu@intel.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/23130, all empty files are excluded from target file splits in `FileSourceScanExec`.

In File source V2, we should keep the same behavior.

This PR suggests to filter out empty files on listing files in `PartitioningAwareFileIndex` so that the upper level doesn't need to handle them.

## How was this patch tested?

Unit test

Closes#24227 from gengliangwang/ignoreEmptyFile.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

For now, `ReuseSubquery` in Spark compares two subqueries at `SubqueryExec` level, which invalidates the `ReuseSubquery` rule. This pull request fixes this, and add a configuration key for subquery reuse exclusively.

## How was this patch tested?

add a unit test.

Closes#24214 from adrian-wang/reuse.

Authored-by: Daoyuan Wang <me@daoyuan.wang>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

To make the blocking behaviour consistent, this pr made catalog table/view `uncacheQuery` non-blocking by default. If this pr merged, all the behaviours in spark are non-blocking by default.

## How was this patch tested?

Pass Jenkins.

Closes#24212 from maropu/SPARK-26771-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

In the original PR #24158, pruning nested field in complex map key was not supported, because some methods in schema pruning did't support it at that moment. This is a followup to add it.

## How was this patch tested?

Added tests.

Closes#24220 from viirya/SPARK-26847-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

Subquery Reuse and Exchange Reuse are not the same feature, if we don't want to reuse subqueries,and we just want to reuse exchanges,only one configuration that cannot be done.

This PR adds a new configuration `spark.sql.subquery.reuse` to control subqueryReuse.

## How was this patch tested?

N/A

Closes#23998 from 10110346/SUBQUERY_REUSE.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In data source V2, the method `PartitionReader.next()` has side effects. When the method is called, the current reader proceeds to the next record.

This might throw RuntimeException/IOException and File source V2 framework should handle these exceptions.

## How was this patch tested?

Unit test.

Closes#24225 from gengliangwang/corruptFile.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

To make https://github.com/apache/spark/pull/23788 easy to review. This PR moves `OrcColumnVector.java`, `OrcShimUtils.scala`, `OrcFilters.scala` and `OrcFilterSuite.scala` to `sql/core/v1.2.1` and copies it to `sql/core/v2.3.4`.

## How was this patch tested?

manual tests

```shell

diff -urNa sql/core/v1.2.1 sql/core/v2.3.4

```

Closes#24119 from wangyum/SPARK-27182.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

SPARK-26982 allows users to describe output of a query. However, it had a limitation of not supporting CTEs due to limitation of the grammar having a single rule to parse both select and inserts. After SPARK-27209, which splits select and insert parsing to two different rules, we can now support describing output of the CTEs easily.

## How was this patch tested?

Existing tests were modified.

Closes#24224 from dilipbiswal/describe_support_cte.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently, File source v2 allows each data source to specify the supported data types by implementing the method `supportsDataType` in `FileScan` and `FileWriteBuilder`.

However, in the read path, the validation checks all the data types in `readSchema`, which might contain partition columns. This is actually a regression. E.g. Text data source only supports String data type, while the partition columns can still contain Integer type since partition columns are processed by Spark.

This PR is to:

1. Refactor schema validation and check data schema only.

2. Filter the partition columns in data schema if user specified schema provided.

## How was this patch tested?

Unit test

Closes#24203 from gengliangwang/schemaValidation.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In the PR, I propose to use the SQL config `spark.sql.session.timeZone` in formatting `TIMESTAMP` literals, and make formatting `DATE` literals independent from time zone. The changes make parsing and formatting `TIMESTAMP`/`DATE` literals consistent, and independent from the default time zone of current JVM.

Also this PR ports `TIMESTAMP`/`DATE` literals formatting on Proleptic Gregorian Calendar via using `TimestampFormatter`/`DateFormatter`.

## How was this patch tested?

Added new tests to `LiteralExpressionSuite`

Closes#24181 from MaxGekk/timezone-aware-literals.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently in the grammar file the rule `query` is responsible to parse both select and insert statements. As a result, we need to have more semantic checks in the code to guard against in-valid insert constructs in a query. Couple of examples are in the `visitCreateView` and `visitAlterView` functions. One other issue is that, we don't catch the `invalid insert constructs` in all the places until checkAnalysis (the errors we raise can be confusing as well). Here are couple of examples :

```SQL

select * from (insert into bar values (2));

```

```

Error in query: unresolved operator 'Project [*];

'Project [*]

+- SubqueryAlias `__auto_generated_subquery_name`

+- InsertIntoHiveTable `default`.`bar`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, false, false, [c1]

+- Project [cast(col1#18 as int) AS c1#20]

+- LocalRelation [col1#18]

```

```SQL

select * from foo where c1 in (insert into bar values (2))

```

```

Error in query: cannot resolve '(default.foo.`c1` IN (listquery()))' due to data type mismatch:

The number of columns in the left hand side of an IN subquery does not match the

number of columns in the output of subquery.

#columns in left hand side: 1.

#columns in right hand side: 0.

Left side columns:

[default.foo.`c1`].

Right side columns:

[].;;

'Project [*]

+- 'Filter c1#6 IN (list#5 [])

: +- InsertIntoHiveTable `default`.`bar`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, false, false, [c1]

: +- Project [cast(col1#7 as int) AS c1#9]

: +- LocalRelation [col1#7]

+- SubqueryAlias `default`.`foo`

+- HiveTableRelation `default`.`foo`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [c1#6]

```

For both the cases above, we should reject the syntax at parser level.

In this PR, we create two top-level parser rules to parse `SELECT` and `INSERT` respectively.

I will create a small PR to allow CTEs in DESCRIBE QUERY after this PR is in.

## How was this patch tested?

Added tests to PlanParserSuite and removed the semantic check tests from SparkSqlParserSuites.

Closes#24150 from dilipbiswal/split-query-insert.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

As per https://docs.oracle.com/javase/8/docs/api/java/lang/ClassLoader.html

``Class loaders that support concurrent loading of classes are known as parallel capable class loaders and are required to register themselves at their class initialization time by invoking the ClassLoader.registerAsParallelCapable method. Note that the ClassLoader class is registered as parallel capable by default. However, its subclasses still need to register themselves if they are parallel capable. ``

i.e we can have finer class loading locks by registering classloaders as parallel capable. (Refer to deadlock due to macro lock https://issues.apache.org/jira/browse/SPARK-26961).

All the classloaders we have are wrapper of URLClassLoader which by itself is parallel capable.

But this cannot be achieved by scala code due to static registration Refer https://github.com/scala/bug/issues/11429

## How was this patch tested?

All Existing UT must pass

Closes#24126 from ajithme/driverlock.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In SPARK-26837, we prune nested fields from object serializers if they are unnecessary in the query execution. SPARK-26837 leaves the support of MapType as a TODO item. This proposes to support map type.

## How was this patch tested?

Added tests.

Closes#24158 from viirya/SPARK-26847.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

We need to add `map_keys` and `map_values` into `ProjectionOverSchema` to support those methods in nested schema pruning. This also adds end-to-end tests to SchemaPruningSuite.

## How was this patch tested?

Added tests.

Closes#24202 from viirya/SPARK-27268.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Currently, if we want to configure `spark.sql.files.maxPartitionBytes` to 256 megabytes, we must set `spark.sql.files.maxPartitionBytes=268435456`, which is very unfriendly to users.

And if we set it like this:`spark.sql.files.maxPartitionBytes=256M`, we will encounter this exception:

```

Exception in thread "main" java.lang.IllegalArgumentException:

spark.sql.files.maxPartitionBytes should be long, but was 256M

at org.apache.spark.internal.config.ConfigHelpers$.toNumber(ConfigBuilder.scala)

```

This PR use `bytesConf` to replace `longConf` or `intConf`, if the configuration is used to set the number of bytes.

Configuration change list:

`spark.files.maxPartitionBytes`

`spark.files.openCostInBytes`

`spark.shuffle.sort.initialBufferSize`

`spark.shuffle.spill.initialMemoryThreshold`

`spark.sql.autoBroadcastJoinThreshold`

`spark.sql.files.maxPartitionBytes`

`spark.sql.files.openCostInBytes`

`spark.sql.defaultSizeInBytes`

## How was this patch tested?

1.Existing unit tests

2.Manual testing

Closes#24187 from 10110346/bytesConf.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Remove Scala 2.11 support in build files and docs, and in various parts of code that accommodated 2.11. See some targeted comments below.

## How was this patch tested?

Existing tests.

Closes#23098 from srowen/SPARK-26132.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up of #24047 and it fixed wrong tests in `StatisticsCollectionSuite`.

## How was this patch tested?

Pass Jenkins.

Closes#24198 from maropu/SPARK-25196-FOLLOWUP-2.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

This fixes a typo in the SQL config value: DATETIME_JAVA8API_**EANBLED** -> DATETIME_JAVA8API_**ENABLED**.

## How was this patch tested?

This was tested by `RowEncoderSuite` and `LiteralExpressionSuite`.

Closes#24194 from MaxGekk/date-localdate-followup.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This is a follow-up of #24047; to follow the `CacheManager.cachedData` lock semantics, this pr wrapped the `statsOfPlanToCache` update with `synchronized`.

## How was this patch tested?

Pass Jenkins

Closes#24178 from maropu/SPARK-24047-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Migrate CSV to File Data Source V2.

## How was this patch tested?

Unit test

Closes#24005 from gengliangwang/CSVDataSourceV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

The hashSeed method allocates 64 bytes instead of 8. Other bytes are always zeros (thanks to default behavior of ByteBuffer). And they could be excluded from hash calculation because they don't differentiate inputs.

## How was this patch tested?

By running the existing tests - XORShiftRandomSuite

Closes#20793 from MaxGekk/hash-buff-size.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This moves parsing `CREATE TABLE ... USING` statements into catalyst. Catalyst produces logical plans with the parsed information and those plans are converted to v1 `DataSource` plans in `DataSourceAnalysis`.

This prepares for adding v2 create plans that should receive the information parsed from SQL without being translated to v1 plans first.

This also makes it possible to parse in catalyst instead of breaking the parser across the abstract `AstBuilder` in catalyst and `SparkSqlParser` in core.

For more information, see the [mailing list thread](https://lists.apache.org/thread.html/54f4e1929ceb9a2b0cac7cb058000feb8de5d6c667b2e0950804c613%3Cdev.spark.apache.org%3E).

## How was this patch tested?

This uses existing tests to catch regressions. This introduces no behavior changes.

Closes#24029 from rdblue/SPARK-27108-add-parsed-create-logical-plans.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch proposes ManifestFileCommitProtocol to clean up incomplete output files in task level if task aborts. Please note that this works as 'best-effort', not kind of guarantee, as we have in HadoopMapReduceCommitProtocol.

## How was this patch tested?

Added UT.

Closes#24154 from HeartSaVioR/SPARK-27210.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

Co-authored-by: Philip Stutz <philip.stutzgmail.com>

## What changes were proposed in this pull request?

This PR adds support for casting

* `ByteType`

* `ShortType`

* `IntegerType`

* `LongType`

to `BinaryType`.

## How was this patch tested?

We added unit tests for casting instances of the above types. For validation, we used Javas `DataOutputStream` to compare the resulting byte array with the result of `Cast`.

We state that the contribution is our original work and that we license the work to the project under the project’s open source license.

cloud-fan we'd appreciate a review if you find the time, thx

Closes#24107 from s1ck/cast_to_binary.

Authored-by: Martin Junghanns <martin.junghanns@neotechnology.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to avoid the `TimeZone` to `ZoneId` conversion in `DateTimeUtils.stringToTimestamp` by changing signature of the method, and require a parameter of `ZoneId` type. This will allow to avoid unnecessary conversion (`TimeZone` -> `String` -> `ZoneId`) per each row.

Also the PR avoids creation of `ZoneId` instances from `ZoneOffset` because `ZoneOffset` is a sub-class, and the conversion is unnecessary too.

## How was this patch tested?

It was tested by `DateTimeUtilsSuite` and `CastSuite`.

Closes#24155 from MaxGekk/stringtotimestamp-zoneid.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Refactored code in tests regarding the "withLogAppender()" pattern by creating a general helper method in SparkFunSuite.

## How was this patch tested?

Passed existing tests.

Closes#24172 from maryannxue/log-appender.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

- Support N-part identifier in SQL

- N-part identifier extractor in Analyzer

## How was this patch tested?

- A new unit test suite ResolveMultipartRelationSuite

- CatalogLoadingSuite

rblue cloud-fan mccheah

Closes#23848 from jzhuge/SPARK-26946.

Authored-by: John Zhuge <jzhuge@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This pr extended `ANALYZE` commands to analyze column stats for cached table.

In common use cases, users read catalog table data, join/aggregate them, and then cache the result for following reuse. Since we are only allowed to analyze column statistics in catalog tables via ANALYZE commands, the current optimization depends on non-existing or inaccurate column statistics of cached data. So, it would be great if we could analyze cached data as follows;

```scala

scala> def printColumnStats(tableName: String) = {

| spark.table(tableName).queryExecution.optimizedPlan.stats.attributeStats.foreach {

| case (k, v) => println(s"[$k]: $v")

| }

| }

scala> sql("SET spark.sql.cbo.enabled=true")

scala> sql("SET spark.sql.statistics.histogram.enabled=true")

scala> spark.range(1000).selectExpr("id % 33 AS c0", "rand() AS c1", "0 AS c2").write.saveAsTable("t")

scala> sql("ANALYZE TABLE t COMPUTE STATISTICS FOR COLUMNS c0, c1, c2")

scala> spark.table("t").groupBy("c0").agg(count("c1").as("v1"), sum("c2").as("v2")).createTempView("temp")

// Prints column statistics in catalog table `t`

scala> printColumnStats("t")

[c0#7073L]: ColumnStat(Some(33),Some(0),Some(32),Some(0),Some(8),Some(8),Some(Histogram(3.937007874015748,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;9f7c1c)),2)

[c1#7074]: ColumnStat(Some(944),Some(3.2108484832404915E-4),Some(0.997584797423909),Some(0),Some(8),Some(8),Some(Histogram(3.937007874015748,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;60a386b1)),2)

[c2#7075]: ColumnStat(Some(1),Some(0),Some(0),Some(0),Some(4),Some(4),Some(Histogram(3.937007874015748,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;5ffd29e8)),2)

// Prints column statistics on cached table `temp`

scala> sql("CACHE TABLE temp")

scala> printColumnStats("temp")

<No Column Statistics>

// Analyzes columns `v1` and `v2` on cached table `temp`

scala> sql("ANALYZE TABLE temp COMPUTE STATISTICS FOR COLUMNS v1, v2")

// Then, prints again

scala> printColumnStats("temp")

[v1#7084L]: ColumnStat(Some(2),Some(30),Some(31),Some(0),Some(8),Some(8),Some(Histogram(0.12992125984251968,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;49f7bb6f)),2)

[v2#7086L]: ColumnStat(Some(1),Some(0),Some(0),Some(0),Some(8),Some(8),Some(Histogram(0.12992125984251968,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;12701677)),2)

// Analyzes one left column and prints again

scala> sql("ANALYZE TABLE temp COMPUTE STATISTICS FOR COLUMNS c0")

scala> printColumnStats("temp")

[v1#7084L]: ColumnStat(Some(2),Some(30),Some(31),Some(0),Some(8),Some(8),Some(Histogram(0.12992125984251968,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;49f7bb6f)),2)

[v2#7086L]: ColumnStat(Some(1),Some(0),Some(0),Some(0),Some(8),Some(8),Some(Histogram(0.12992125984251968,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;12701677)),2)

[c0#7073L]: ColumnStat(Some(33),Some(0),Some(32),Some(0),Some(8),Some(8),Some(Histogram(0.12992125984251968,[Lorg.apache.spark.sql.catalyst.plans.logical.HistogramBin;1f5c1b81)),2)

```

## How was this patch tested?

Added tests in `CachedTableSuite` and `StatisticsCollectionSuite`.

Closes#24047 from maropu/SPARK-25196-4.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This change is a cleanup and consolidation of 3 areas related to Pandas UDFs:

1) `ArrowStreamPandasSerializer` now inherits from `ArrowStreamSerializer` and uses the base class `dump_stream`, `load_stream` to create Arrow reader/writer and send Arrow record batches. `ArrowStreamPandasSerializer` makes the conversions to/from Pandas and converts to Arrow record batch iterators. This change removed duplicated creation of Arrow readers/writers.

2) `createDataFrame` with Arrow now uses `ArrowStreamPandasSerializer` instead of doing its own conversions from Pandas to Arrow and sending record batches through `ArrowStreamSerializer`.

3) Grouped Map UDFs now reuse existing logic in `ArrowStreamPandasSerializer` to send Pandas DataFrame results as a `StructType` instead of separating each column from the DataFrame. This makes the code a little more consistent with the Python worker, but does require that the returned StructType column is flattened out in `FlatMapGroupsInPandasExec` in Scala.

## How was this patch tested?

Existing tests and ran tests with pyarrow 0.12.0

Closes#24095 from BryanCutler/arrow-refactor-cleanup-UDFs.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When passing in a user schema to create a DataFrame, there might be mismatched nullability between the user schema and the the actual data. All related public interfaces now perform catalyst conversion using the user provided schema, which catches such mismatches to avoid runtime errors later on. However, there're private methods which allow this conversion to be skipped, so we need to remove these private methods which may lead to confusion and potential issues.

## How was this patch tested?

Passed existing tests. No new tests were added since this PR removed the private interfaces that would potentially cause null problems and other interfaces are covered already by existing tests.

Closes#24162 from maryannxue/spark-27223.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Update comments in `InMemoryFileIndex.listLeafFiles` to keep according with code.

## How was this patch tested?

existing test cases

Closes#24146 from WangGuangxin/SPARK-27202.

Authored-by: wangguangxin.cn <wangguangxin.cn@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to use `ZoneId` instead of `TimeZone` in:

- the `apply` and `getFractionFormatter ` methods of the `TimestampFormatter` object,

- and in implementations of the `TimestampFormatter` trait like `FractionTimestampFormatter`.

The reason of the changes is to avoid unnecessary conversion from `TimeZone` to `ZoneId` because `ZoneId` is used in `TimestampFormatter` implementations internally, and the conversion is performed via `String` which is not for free. Also taking into account that `TimeZone` instances are converted from `String` in some cases, the worse case looks like `String` -> `TimeZone` -> `String` -> `ZoneId`. The PR eliminates the unneeded conversions.

## How was this patch tested?

It was tested by `DateExpressionsSuite`, `DateTimeUtilsSuite` and `TimestampFormatterSuite`.

Closes#24141 from MaxGekk/zone-id.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This introduces a new SQL function 'xxhash64' for getting a 64-bit hash of an arbitrary number of columns.

This is designed to exactly mimic the 32-bit `hash`, which uses

MurmurHash3. The name is designed to be more future-proof than the

'hash', by indicating the exact algorithm used, similar to md5 and the

sha hashes.

## How was this patch tested?

The tests for the existing `hash` function were duplicated to run with `xxhash64`.

Closes#24019 from huonw/hash64.

Authored-by: Huon Wilson <Huon.Wilson@data61.csiro.au>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

DecimalType Literal should not be casted to Long.

eg. For `df.filter("x < 3.14")`, assuming df (x in DecimalType) reads from a ORC table and uses the native ORC reader with predicate push down enabled, we will push down the `x < 3.14` predicate to the ORC reader via a SearchArgument.

OrcFilters will construct the SearchArgument, but not handle the DecimalType correctly.

The previous impl will construct `x < 3` from `x < 3.14`.

## How was this patch tested?

```

$ sbt

> sql/testOnly *OrcFilterSuite

> sql/testOnly *OrcQuerySuite -- -z "27160"

```

Closes#24092 from sadhen/spark27160.

Authored-by: Darcy Shen <sadhen@zoho.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

The reader schema is said to be evolved (or projected) when it changed after the data is written by writers. Apache Spark file-based data sources have a test coverage for that; e.g. [ReadSchemaSuite.scala](https://github.com/apache/spark/blob/master/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/ReadSchemaSuite.scala). This PR aims to add a test coverage for nested columns by adding and hiding nested columns.

## How was this patch tested?

Pass the Jenkins with newly added tests.

Closes#24139 from dongjoon-hyun/SPARK-27197.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Currently, users meet job abortions while creating a table using the Hive serde "STORED AS" with invalid column names. We had better prevent this by raising **AnalysisException** with a guide to use aliases instead like Paquet data source tables.

thus making compatible with error message shown while creating Parquet/ORC native table.

**BEFORE**

```scala

scala> sql("set spark.sql.hive.convertMetastoreParquet=false")

scala> sql("CREATE TABLE a STORED AS PARQUET AS SELECT 1 AS `COUNT(ID)`")

Caused by: java.lang.IllegalArgumentException: No enum constant parquet.schema.OriginalType.col1

```

**AFTER**

```scala

scala> sql("CREATE TABLE a STORED AS PARQUET AS SELECT 1 AS `COUNT(ID)`")

Please use alias to rename it.;eption: Attribute name "count(ID)" contains invalid character(s) among " ,;{}()\n\t=".

```

## How was this patch tested?

Pass the Jenkins with the newly added test case.

Closes#24075 from sujith71955/master_serde.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This pr is a follow-up of #24093 and includes fixes below;

- Lists up all the keywords of Spark only (that is, drops non-keywords there); I listed up all the keywords of ANSI SQL-2011 in the previous commit (SPARK-26215).

- Sorts the keywords in `SqlBase.g4` in a alphabetical order

## How was this patch tested?

Pass Jenkins.

Closes#24125 from maropu/SPARK-27161-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently, DataFrameReader/DataFrameReader supports setting Hadoop configurations via method `.option()`.

E.g, the following test case should be passed in both ORC V1 and V2

```

class TestFileFilter extends PathFilter {

override def accept(path: Path): Boolean = path.getParent.getName != "p=2"

}

withTempPath { dir =>

val path = dir.getCanonicalPath

val df = spark.range(2)

df.write.orc(path + "/p=1")

df.write.orc(path + "/p=2")

val extraOptions = Map(

"mapred.input.pathFilter.class" -> classOf[TestFileFilter].getName,

"mapreduce.input.pathFilter.class" -> classOf[TestFileFilter].getName

)

assert(spark.read.options(extraOptions).orc(path).count() === 2)

}

}

```

While Hadoop Configurations are case sensitive, the current data source V2 APIs are using `CaseInsensitiveStringMap` in the top level entry `TableProvider`.

To create Hadoop configurations correctly, I suggest

1. adding a new method `asCaseSensitiveMap` in `CaseInsensitiveStringMap`.

2. Make `CaseInsensitiveStringMap` read-only to ambiguous conversion in `asCaseSensitiveMap`

## How was this patch tested?

Unit test

Closes#24094 from gengliangwang/originalMap.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The reader schema is said to be evolved (or projected) when it changed after the data is written by writers. Apache Spark file-based data sources have a test coverage for that, [ReadSchemaSuite.scala](https://github.com/apache/spark/blob/master/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/ReadSchemaSuite.scala). This PR aims to add `AvroReadSchemaSuite` to ensure the minimal consistency among file-based data sources and prevent a future regression in Avro data source.

## How was this patch tested?

Pass the Jenkins with the newly added test suite.

Closes#24135 from dongjoon-hyun/SPARK-27195.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This adds a new method, `capabilities` to `v2.Table` that returns a set of `TableCapability`. Capabilities are used to fail queries during analysis checks, `V2WriteSupportCheck`, when the table does not support operations, like truncation.

## How was this patch tested?

Existing tests for regressions, added new analysis suite, `V2WriteSupportCheckSuite`, for new capability checks.

Closes#24012 from rdblue/SPARK-26811-add-capabilities.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

There was some mistake on test code: it has wrong assertion. The patch proposes fixing it, as well as fixing other stuff to make test really pass.

## How was this patch tested?

Fixed unit test.

Closes#24112 from HeartSaVioR/SPARK-22000-hotfix.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

This is a minor follow-up PR for SPARK-27096. The original PR reconciled the join types supported between dataset and sql interface. In case of R, we do the join type validation in the R side. In this PR we do the correct validation and adds tests in R to test all the join types along with the error condition. Along with this, i made the necessary doc correction.

## How was this patch tested?

Add R tests.

Closes#24087 from dilipbiswal/joinfix_followup.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

During the migration of CSV V2(https://github.com/apache/spark/pull/24005), I find that we can improve the file source v2 framework by:

1. check duplicated column names in both read and write

2. Not all the file sources support filter push down. So remove `SupportsPushDownFilters` from FileScanBuilder

3. The method `isSplitable` might require data source options. Add a new member `options` to FileScan.

4. Make `FileTable.schema` a lazy value instead of a method.

## How was this patch tested?

Unit test

Closes#24066 from gengliangwang/reviseFileSourceV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The data source option check_files_exist is introduced in In #23383 when the file source V2 framework is implemented. In the PR, FileIndex was created as a member of FileTable, so that we could implement partition pruning like 0f9fcab in the future. At that time `FileIndex`es will always be created for file writes, so we needed the option to decide whether to check file existence.

After https://github.com/apache/spark/pull/23774, the option is not needed anymore, since Dataframe writes won't create unnecessary FileIndex. This PR is to remove the option.

## How was this patch tested?

Unit test.

Closes#24069 from gengliangwang/removeOptionCheckFilesExist.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Further load increases in our production environment have shown that even the read locks can cause some contention, since they contain a mechanism that turns a read lock into an exclusive lock if a writer has been starved out. This PR reduces the potential for lock contention even further than https://github.com/apache/spark/pull/23833. Additionally, it uses more idiomatic scala than the previous implementation.

cloud-fan & gatorsmile This is a relatively minor improvement to the previous CacheManager changes. At this point, I think we finally are doing the minimum possible amount of locking.

## How was this patch tested?

Has been tested on a live system where the blocking was causing major issues and it is working well.

CacheManager has no explicit unit test but is used in many places internally as part of the SharedState.

Closes#24028 from DaveDeCaprio/read-locks-master.

Lead-authored-by: Dave DeCaprio <daved@alum.mit.edu>

Co-authored-by: David DeCaprio <daved@alum.mit.edu>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

We create many stores in the SQLAppStatusListenerSuite, but we need to the close store after test.

## How was this patch tested?

Existing tests

Closes#24079 from shahidki31/SPARK-27145.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In SPARK-27011, we introduced `IgnoreCahedData` to avoid plan node copys in `CacheManager`.

Since `ClearCacheCommand` has no argument, it also can extend `IgnoreCahedData`.

## How was this patch tested?

Pass Jenkins.

Closes#24081 from maropu/SPARK-27011-2.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This pr updated parsing rules in `SqlBase.g4` to support a SQL query below when ANSI mode enabled;

```

SELECT CAST('2017-08-04' AS DATE) + 1 days;

```

The current master cannot parse it though, other dbms-like systems support the syntax (e.g., hive and mysql). Also, the syntax is frequently used in the official TPC-DS queries.

This pr added new tokens as follows;

```

YEAR | YEARS | MONTH | MONTHS | WEEK | WEEKS | DAY | DAYS | HOUR | HOURS | MINUTE

MINUTES | SECOND | SECONDS | MILLISECOND | MILLISECONDS | MICROSECOND | MICROSECONDS

```

Then, it registered the keywords below as the ANSI reserved (this follows SQL-2011);

```

DAY | HOUR | MINUTE | MONTH | SECOND | YEAR

```

## How was this patch tested?

Added tests in `SQLQuerySuite`, `ExpressionParserSuite`, and `TableIdentifierParserSuite`.

Closes#20433 from maropu/SPARK-23264.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

This patch deduplicates the huge if statements regarding getting value between specialized getters.

## How was this patch tested?

Existing UT.

Closes#24016 from HeartSaVioR/MINOR-deduplicate-get-from-specialized-getters.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a follow up PR for #23943 in order to update the benchmark result with EC2 `r3.xlarge` instance.

## How was this patch tested?

N/A. (Manually compare the diff)

Closes#24078 from dongjoon-hyun/SPARK-27034.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

It's a little awkward to have 2 different classes(`CaseInsensitiveStringMap` and `DataSourceOptions`) to present the options in data source and catalog API.

This PR merges these 2 classes, while keeping the name `CaseInsensitiveStringMap`, which is more precise.

## How was this patch tested?

existing tests

Closes#24025 from cloud-fan/option.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The PR puts in a limit on the size of a debug string generated for a tree node. Helps to fix out of memory errors when large plans have huge debug strings. In addition to SPARK-26103, this should also address SPARK-23904 and SPARK-25380. AN alternative solution was proposed in #23076, but that solution doesn't address all the cases that can cause a large query. This limit is only on calls treeString that don't pass a Writer, which makes it play nicely with #22429, #23018 and #23039. Full plans can be written to files, but truncated plans will be used when strings are held in memory, such as for the UI.

- A new configuration parameter called spark.sql.debug.maxPlanLength was added to control the length of the plans.

- When plans are truncated, "..." is printed to indicate that it isn't a full plan

- A warning is printed out the first time a truncated plan is displayed. The warning explains what happened and how to adjust the limit.

## How was this patch tested?

Unit tests were created for the new SizeLimitedWriter. Also a unit test for TreeNode was created that checks that a long plan is correctly truncated.

Closes#23169 from DaveDeCaprio/text-plan-size.

Lead-authored-by: Dave DeCaprio <daved@alum.mit.edu>

Co-authored-by: David DeCaprio <daved@alum.mit.edu>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

According to the [design](https://docs.google.com/document/d/1vI26UEuDpVuOjWw4WPoH2T6y8WAekwtI7qoowhOFnI4/edit?usp=sharing), the life cycle of `StreamingWrite` should be the same as the read side `MicroBatch/ContinuousStream`, i.e. each run of the stream query, instead of each epoch.

This PR fixes it.

## How was this patch tested?

existing tests

Closes#23981 from cloud-fan/dsv2.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a followup to #23943. This proposes to rename ParquetSchemaPruning to SchemaPruning as ParquetSchemaPruning supports both Parquet and ORC v1 now.

## How was this patch tested?

Existing tests.

Closes#24077 from viirya/nested-schema-pruning-orc-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

We only supported nested schema pruning for Parquet previously. This proposes to support nested schema pruning for ORC too.

Note: This only covers ORC v1. For ORC v2, the necessary change is at the schema pruning rule. We should deal with ORC v2 as a TODO item, in order to reduce review burden.

## How was this patch tested?

Added tests.

Closes#23943 from viirya/nested-schema-pruning-orc.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

LEGACY_DRIVER_IDENTIFIER and its reference are removed.

corresponding references test are updated.

## How was this patch tested?

tested UT test cases

Closes#24026 from shivusondur/newjira2.

Authored-by: shivusondur <shivusondur@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Added test suite for AllExecutionsPage class. Checked the scenarios for SPARK-27019 and SPARK-27075.

## How was this patch tested?

Added UT, manually tested

Closes#24052 from shahidki31/SPARK-27125.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

When cache is enabled ( i.e once cache table command is executed), any following sql will trigger

CacheManager#lookupCachedData which will create a copy of the tree node, which inturn calls TreeNode#makeCopy. Here the problem is it will try to create a copy instance. But as ResetCommand is a case object this will fail

## How was this patch tested?

Added UT to reproduce the issue

Closes#23918 from ajithme/reset.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to have one base R runner.

In the high level,

Previously, it had `ArrowRRunner` and it inherited `RRunner`:

```

└── RRunner

└── ArrowRRunner

```

After this PR, now it has a `BaseRRunner`, and `ArrowRRunner` and `RRunner` inherit `BaseRRunner`:

```

└── BaseRRunner

├── ArrowRRunner

└── RRunner

```

This way is consistent with Python's.

In more details, see below:

```scala

class BaseRRunner[IN, OUT] {

def compute: Iterator[OUT] = {

...

newWriterThread(...).start()

...

newReaderIterator(...)

...

}

// Make a thread that writes data from JVM to R process

abstract protected def newWriterThread(..., iter: Iterator[IN], ...): WriterThread

// Make an iterator that reads data from the R process to JVM

abstract protected def newReaderIterator(...): ReaderIterator

abstract class WriterThread(..., iter: Iterator[IN], ...) extends Thread {

override def run(): Unit {

...

writeIteratorToStream(...)

...

}

// Actually writing logic to the socket stream.

abstract protected def writeIteratorToStream(dataOut: DataOutputStream): Unit

}

abstract class ReaderIterator extends Iterator[OUT] {

override def hasNext(): Boolean = {

...

read(...)

...

}

override def next(): OUT = {

...

hasNext()

...

}

// Actually reading logic from the socket stream.

abstract protected def read(...): OUT

}

}

```

```scala

case [Arrow]RRunner extends BaseRRunner {

override def newWriterThread(...) {

new WriterThread(...) {

override def writeIteratorToStream(...) {

...

}

}

}

override def newReaderIterator(...) {

new ReaderIterator(...) {

override def read(...) {

...

}

}

}

}

```

## How was this patch tested?

Manually tested and existing tests should cover.

Closes#23977 from HyukjinKwon/SPARK-26923.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

The docs describing RangeBetween & RowsBetween for pySpark & SparkR are not in sync with Spark description.

a. Edited PySpark and SparkR docs and made description same for both RangeBetween and RowsBetween

b. created executable examples in both pySpark and SparkR documentation

c. Locally tested the patch for scala Style checks and UT for checking no testcase failures

Closes#23946 from jagadesh-kiran/master.

Authored-by: Jagadesh Kiran <jagadesh.n@in.verizon.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently in the grammar file, we have the joinType rule defined as following :

```

joinType

: INNER?

....

....

| LEFT SEMI

| LEFT? ANTI

;

```

The keyword LEFT is optional for ANTI join even though its not optional for SEMI join. When

using data frame interface join type "anti" is not allowed. The allowed types are "left_anti" or

"leftanti" for anti joins. ~~In this PR, i am making the LEFT keyword mandatory for ANTI joins so

it aligns better with the LEFT SEMI join in the grammar file and also the join types allowed from dataframe api.~~

This PR makes LEFT optional for SEMI join in .g4 and add "semi" and "anti" join types from dataframe.

~~I have not opened any JIRA for this as probably we may need some discussion to see if

we are going to address this or not.~~

## How was this patch tested?

Modified the join type tests.

Closes#23982 from dilipbiswal/join_fix.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

To reuse some common logics for improving `Analyze` commands (See the description of `SPARK-25196` for details), this pr moved some functions from `AnalyzeColumnCommand` to `command/CommandUtils`. A follow-up pr will add code to extend `Analyze` commands for cached tables.

## How was this patch tested?

Existing tests.

Closes#22204 from maropu/SPARK-25196.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Before a Kafka consumer gets assigned with partitions, its offset will contain 0 partitions. However, runContinuous will still run and launch a Spark job having 0 partitions. In this case, there is a race that epoch may interrupt the query execution thread after `lastExecution.toRdd`, and either `epochEndpoint.askSync[Unit](StopContinuousExecutionWrites)` or the next `runContinuous` will get interrupted unintentionally.

To handle this case, this PR has the following changes:

- Clean up the resources in `queryExecutionThread.runUninterruptibly`. This may increase the waiting time of `stop` but should be minor because the operations here are very fast (just sending an RPC message in the same process and stopping a very simple thread).

- Clear the interrupted status at the end so that it won't impact the `runContinuous` call. We may clear the interrupted status set by `stop`, but it doesn't affect the query termination because `runActivatedStream` will check `state` and exit accordingly.

I also updated the clean up codes to make sure exceptions thrown from `epochEndpoint.askSync[Unit](StopContinuousExecutionWrites)` won't stop the clean up.

## How was this patch tested?

Jenkins

Closes#24034 from zsxwing/SPARK-27111.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

Spark SQL performs whole-stage code generation to speed up query execution. There are two steps to it:

- Java source code is generated from the physical query plan on the driver. A single version of the source code is generated from a query plan, and sent to all executors.

- It's compiled to bytecode on the driver to catch compilation errors before sending to executors, but currently only the generated source code gets sent to the executors. The bytecode compilation is for fail-fast only.

- Executors receive the generated source code and compile to bytecode, then the query runs like a hand-written Java program.

In this model, there's an implicit assumption about the driver and executors being run on similar platforms. Some code paths accidentally embedded platform-dependent object layout information into the generated code, such as:

```java

Platform.putLong(buffer, /* offset */ 24, /* value */ 1);

```

This code expects a field to be at offset +24 of the `buffer` object, and sets a value to that field.

But whole-stage code generation generally uses platform-dependent information from the driver. If the object layout is significantly different on the driver and executors, the generated code can be reading/writing to wrong offsets on the executors, causing all kinds of data corruption.

One code pattern that leads to such problem is the use of `Platform.XXX` constants in generated code, e.g. `Platform.BYTE_ARRAY_OFFSET`.

Bad:

```scala

val baseOffset = Platform.BYTE_ARRAY_OFFSET

// codegen template:

s"Platform.putLong($buffer, $baseOffset, $value);"

```

This will embed the value of `Platform.BYTE_ARRAY_OFFSET` on the driver into the generated code.

Good:

```scala

val baseOffset = "Platform.BYTE_ARRAY_OFFSET"

// codegen template:

s"Platform.putLong($buffer, $baseOffset, $value);"

```

This will generate the offset symbolically -- `Platform.putLong(buffer, Platform.BYTE_ARRAY_OFFSET, value)`, which will be able to pick up the correct value on the executors.

Caveat: these offset constants are declared as runtime-initialized `static final` in Java, so they're not compile-time constants from the Java language's perspective. It does lead to a slightly increased size of the generated code, but this is necessary for correctness.

NOTE: there can be other patterns that generate platform-dependent code on the driver which is invalid on the executors. e.g. if the endianness is different between the driver and the executors, and if some generated code makes strong assumption about endianness, it would also be problematic.

## How was this patch tested?

Added a new test suite `WholeStageCodegenSparkSubmitSuite`. This test suite needs to set the driver's extraJavaOptions to force the driver and executor use different Java object layouts, so it's run as an actual SparkSubmit job.

Authored-by: Kris Mok <kris.mokdatabricks.com>

Closes#24031 from gatorsmile/cherrypickSPARK-27097.

Lead-authored-by: Kris Mok <kris.mok@databricks.com>

Co-authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

This adds a v2 API for adding new catalog plugins to Spark.

* Catalog implementations extend `CatalogPlugin` and are loaded via reflection, similar to data sources

* `Catalogs` loads and initializes catalogs using configuration from a `SQLConf`

* `CaseInsensitiveStringMap` is used to pass configuration to `CatalogPlugin` via `initialize`

Catalogs are configured by adding config properties starting with `spark.sql.catalog.(name)`. The name property must specify a class that implements `CatalogPlugin`. Other properties under the namespace (`spark.sql.catalog.(name).(prop)`) are passed to the provider during initialization along with the catalog name.

This replaces #21306, which will be implemented in two multiple parts: the catalog plugin system (this commit) and specific catalog APIs, like `TableCatalog`.

## How was this patch tested?

Added test suites for `CaseInsensitiveStringMap` and for catalog loading.

Closes#23915 from rdblue/SPARK-24252-add-v2-catalog-plugins.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

[SPARK-24638](https://issues.apache.org/jira/browse/SPARK-24638) adds support for Parquet file `StartsWith` predicate push down.

`InMemoryTable` can also support this feature.

This is an example to explain how it works, Imagine that the `id` column stored as below:

Partition ID | lowerBound | upperBound

-- | -- | --

p1 | '1' | '9'

p2 | '10' | '19'

p3 | '20' | '29'

p4 | '30' | '39'

p5 | '40' | '49'

A filter ```df.filter($"id".startsWith("2"))``` or ```id like '2%'```

then we substr lowerBound and upperBound:

Partition ID | lowerBound.substr(0, Length("2")) | upperBound.substr(0, Length("2"))

-- | -- | --

p1 | '1' | '9'

p2 | '1' | '1'

p3 | '2' | '2'

p4 | '3' | '3'

p5 | '4' | '4'

We can see that we only need to read `p1` and `p3`.

## How was this patch tested?

unit tests and benchmark tests

benchmark test result:

```

================================================================================================

Pushdown benchmark for StringStartsWith

================================================================================================

Java HotSpot(TM) 64-Bit Server VM 1.8.0_191-b12 on Mac OS X 10.12.6

Intel(R) Core(TM) i7-7820HQ CPU 2.90GHz

StringStartsWith filter: (value like '10%'): Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

InMemoryTable Vectorized 12068 / 14198 1.3 767.3 1.0X

InMemoryTable Vectorized (Pushdown) 5457 / 8662 2.9 347.0 2.2X

Java HotSpot(TM) 64-Bit Server VM 1.8.0_191-b12 on Mac OS X 10.12.6

Intel(R) Core(TM) i7-7820HQ CPU 2.90GHz

StringStartsWith filter: (value like '1000%'): Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

InMemoryTable Vectorized 5246 / 5355 3.0 333.5 1.0X

InMemoryTable Vectorized (Pushdown) 2185 / 2346 7.2 138.9 2.4X

Java HotSpot(TM) 64-Bit Server VM 1.8.0_191-b12 on Mac OS X 10.12.6

Intel(R) Core(TM) i7-7820HQ CPU 2.90GHz

StringStartsWith filter: (value like '786432%'): Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

InMemoryTable Vectorized 5112 / 5312 3.1 325.0 1.0X

InMemoryTable Vectorized (Pushdown) 2292 / 2522 6.9 145.7 2.2X

```

Closes#23004 from wangyum/SPARK-26004.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Throw an exception if spark.sql.shuffle.partitions=0

This takes over https://github.com/apache/spark/pull/23835

## How was this patch tested?

Existing tests.

Closes#24008 from srowen/SPARK-24783.2.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: WindCanDie <491237260@qq.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This is follow-up PR which addresses review comment in PR for SPARK-27001:

https://github.com/apache/spark/pull/23908#discussion_r261511454

This patch proposes addressing primitive array type for serializer - instead of handling it to generic one, Spark now handles it efficiently as primitive array.

## How was this patch tested?

UT modified to include primitive array.

Closes#24015 from HeartSaVioR/SPARK-27001-FOLLOW-UP-java-primitive-array.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a very minor pr to include the usage example to generate output for single test in SQLQueryTestSuite. I tried to deduce it from the existing example and ran into a scenario

where sbt is simply looping to run the same test over and over again. Here is the example

of running a single test.

```

build/sbt "~sql/test-only *SQLQueryTestSuite -- -z inline-table.sql"

```

I tried to generate the output for a single test by prepending `SPARK_GENERATE_GOLDEN_FILES=1` like following

```

SPARK_GENERATE_GOLDEN_FILES=1 build/sbt "~sql/test-only *SQLQueryTestSuite -- -z describe.sql"

```

In this case i found that sbt is looping trying to run describe.sql over and over again as we are running the test in on continuous mode (because of `~` prefix ) where it detects a change in

the generated result file which in turn triggers a build and test. I have included an example where

we don't run it in continuous mode when generating the output. Hopefully it saves other developers some time.

## How was this patch tested?

Verified manually in my dev setup.

Closes#23995 from dilipbiswal/dkb_sqlquerytest_usage.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

While I am migrating other data sources, I find that we should abstract the logic that:

1. converting safe `InternalRow`s into `UnsafeRow`s

2. appending partition values to the end of the result row if existed

This PR proposes to support handling partition values in file source v2 abstraction by adding a util class `PartitionReaderWithPartitionValues`.

## How was this patch tested?

Existing unit tests

Closes#23987 from gengliangwang/SPARK-27049.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

I would like to refactor `limit.scala` slightly and introduce common trait `LimitExec` for `CollectLimitExec` and `BaseLimitExec` (`LocalLimitExec` and `GlobalLimitExec`). This will allow to distinguish those operators from others, and to get the `limit` value without casting to concrete class.

## How was this patch tested?

by existing test suites.

Closes#23976 from MaxGekk/limit-exec.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

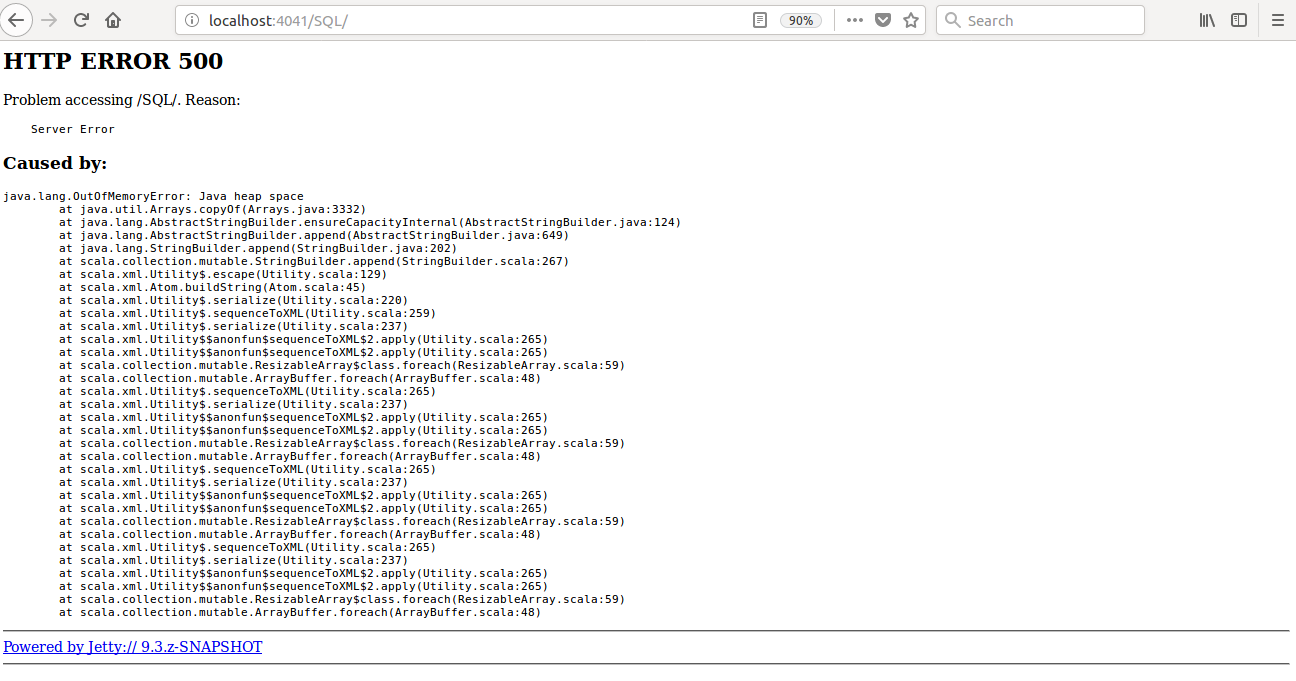

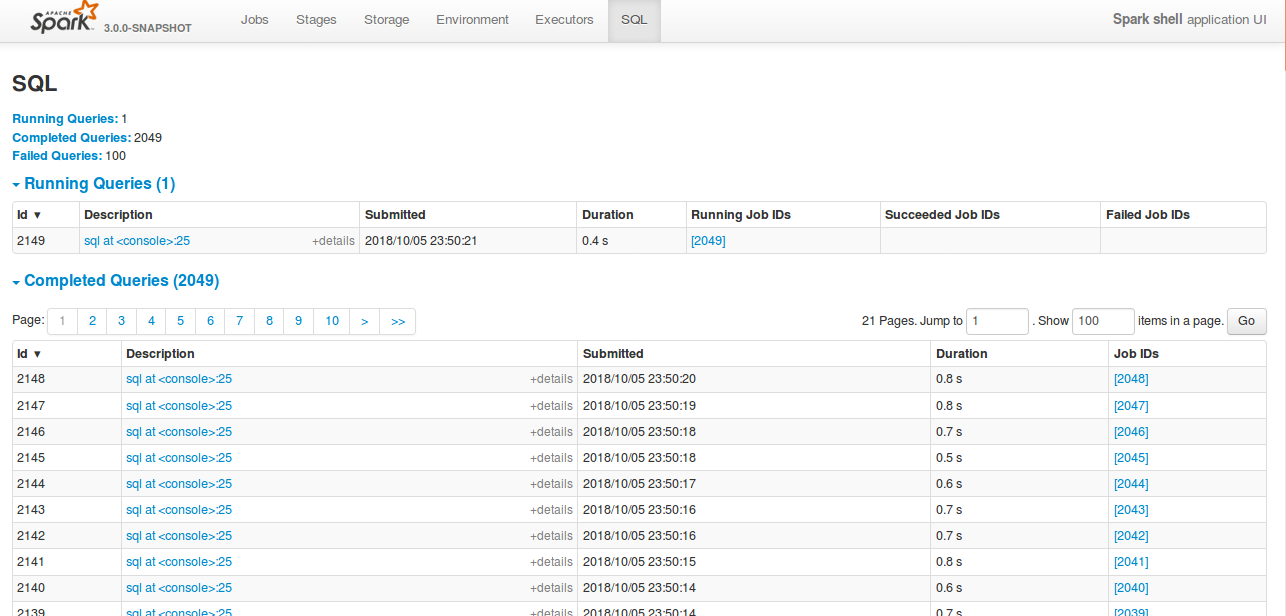

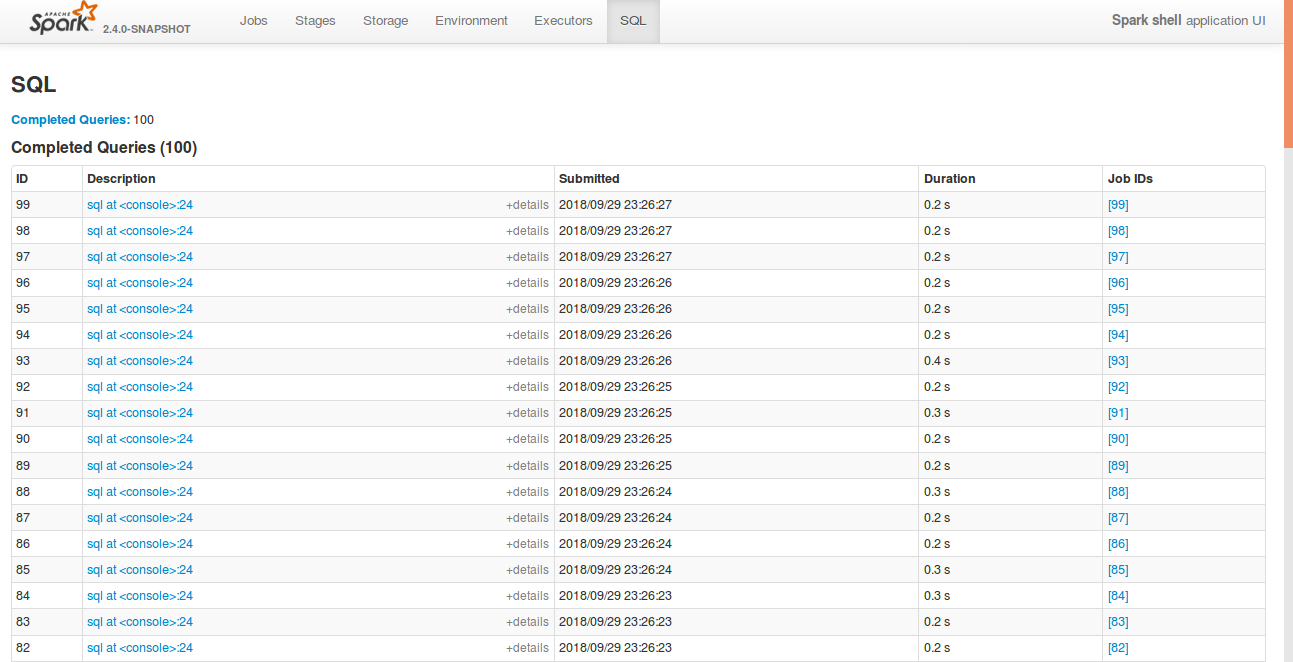

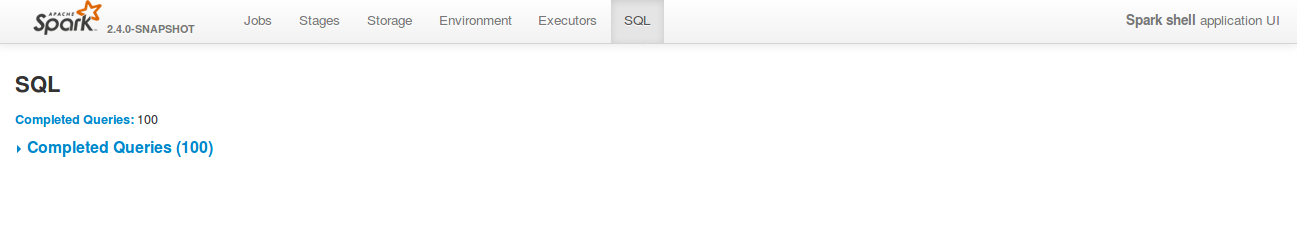

Currently, when the event reordering happens, especially onJobStart event come after onExecutionEnd event, SQL page in the UI displays weirdly.(for eg:test mentioned in JIRA and also this issue randomly occurs when the TPCDS query fails due to broadcast timeout etc.)

The reason is that, In the SQLAppstatusListener, we remove the liveExecutions entry once the execution ends. So, if a jobStart event come after that, then we create a new liveExecution entry corresponding to the execId. Eventually this will overwrite the kvstore and UI displays confusing entries.

## How was this patch tested?

Added UT, Also manually tested with the eventLog, provided in the jira, of the failed query.

Before fix:

After fix:

Closes#23939 from shahidki31/SPARK-27019.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Before dropping database refresh the tables of that database, so as to refresh all cached entries associated with those tables.

We follow the same when dropping a table.

## How was this patch tested?

UT is added

Closes#23905 from Udbhav30/SPARK-24669.

Authored-by: Udbhav30 <u.agrawal30@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Removed unnecessary conversion of microseconds in `DateTimeUtils.timestampToString` to `java.sql.Timestamp` which aims to output fraction of seconds by casting it to string. This was replaced by special `TimestampFormatter` which appends the fraction formatter to `DateTimeFormatterBuilder`: `appendFraction(ChronoField.NANO_OF_SECOND, 0, 9, true)`. The former one means trailing zeros in second's fraction should be truncated while formatting.

## How was this patch tested?

By existing test suites like `CastSuite`, `DateTimeUtilsSuite`, `JDBCSuite`, and by new test in `TimestampFormatterSuite`.

Closes#23936 from MaxGekk/timestamp-to-string.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

We have benchmark of nested schema pruning, but only for Parquet. This adds similar benchmark for ORC. This is used with nested schema pruning of ORC.

## How was this patch tested?

Added test.

Closes#23955 from viirya/orc-nested-schema-pruning-benchmark.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR optimizes `InSet` expressions for byte, short, integer, date types. It is a follow-up on PR #21442 from dbtsai.

`In` expressions are compiled into a sequence of if-else statements, which results in O\(n\) time complexity. `InSet` is an optimized version of `In`, which is supposed to improve the performance if all values are literals and the number of elements is big enough. However, `InSet` actually worsens the performance in many cases due to various reasons.

The main idea of this PR is to use Java `switch` statements to significantly improve the performance of `InSet` expressions for bytes, shorts, ints, dates. All `switch` statements are compiled into `tableswitch` and `lookupswitch` bytecode instructions. We will have O\(1\) time complexity if our case values are compact and `tableswitch` can be used. Otherwise, `lookupswitch` will give us O\(log n\).

Locally, I tried Spark `OpenHashSet` and primitive collections from `fastutils` in order to solve the boxing issue in `InSet`. Both options significantly decreased the memory consumption and `fastutils` improved the time compared to `HashSet` from Scala. However, the switch-based approach was still more than two times faster even on 500+ non-compact elements.

I also noticed that applying the switch-based approach on less than 10 elements gives a relatively minor improvement compared to the if-else approach. Therefore, I placed the switch-based logic into `InSet` and added a new config to track when it is applied. Even if we migrate to primitive collections at some point, the switch logic will be still faster unless the number of elements is really big. Another option is to have a separate `InSwitch` expression. However, this would mean we need to modify other places (e.g., `DataSourceStrategy`).

See [here](https://docs.oracle.com/javase/specs/jvms/se7/html/jvms-3.html#jvms-3.10) and [here](https://stackoverflow.com/questions/10287700/difference-between-jvms-lookupswitch-and-tableswitch) for more information.